Learn DevOps: The Complete Kubernetes Course (Part 1)

Github Repositories

- learn-devops-the-complete-kubernetes-course

- on-prem-or-cloud-agnostic-kubernetes

- kubernetes-course

- http-echo

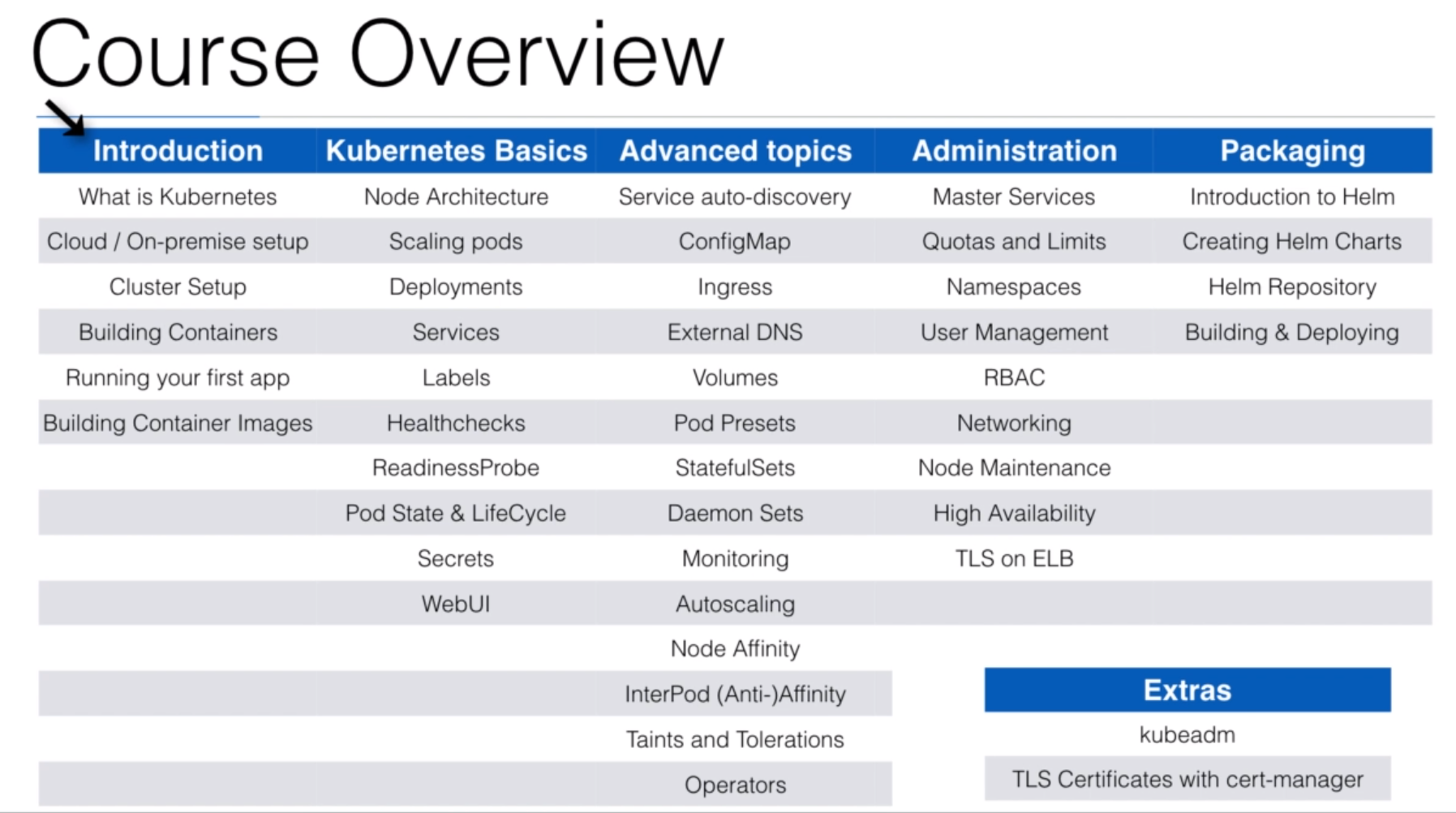

The Udemy course Learn DevOps: The Complete Kubernetes Course teaches how Kubernetes runs and manages your containerized applications, and how to build, deploy, use, and maintain Kubernetes.

Other parts:

- Learn DevOps: The Complete Kubernetes Course (Part 2)

- Learn DevOps: The Complete Kubernetes Course (Part 3)

- Learn DevOps: The Complete Kubernetes Course (Part 4)

Table of contents

- What I've learned

- Section: 0. Introduction

- Section: 1. Introduction to Kubernetes

- 4. Kubernetes Introduction

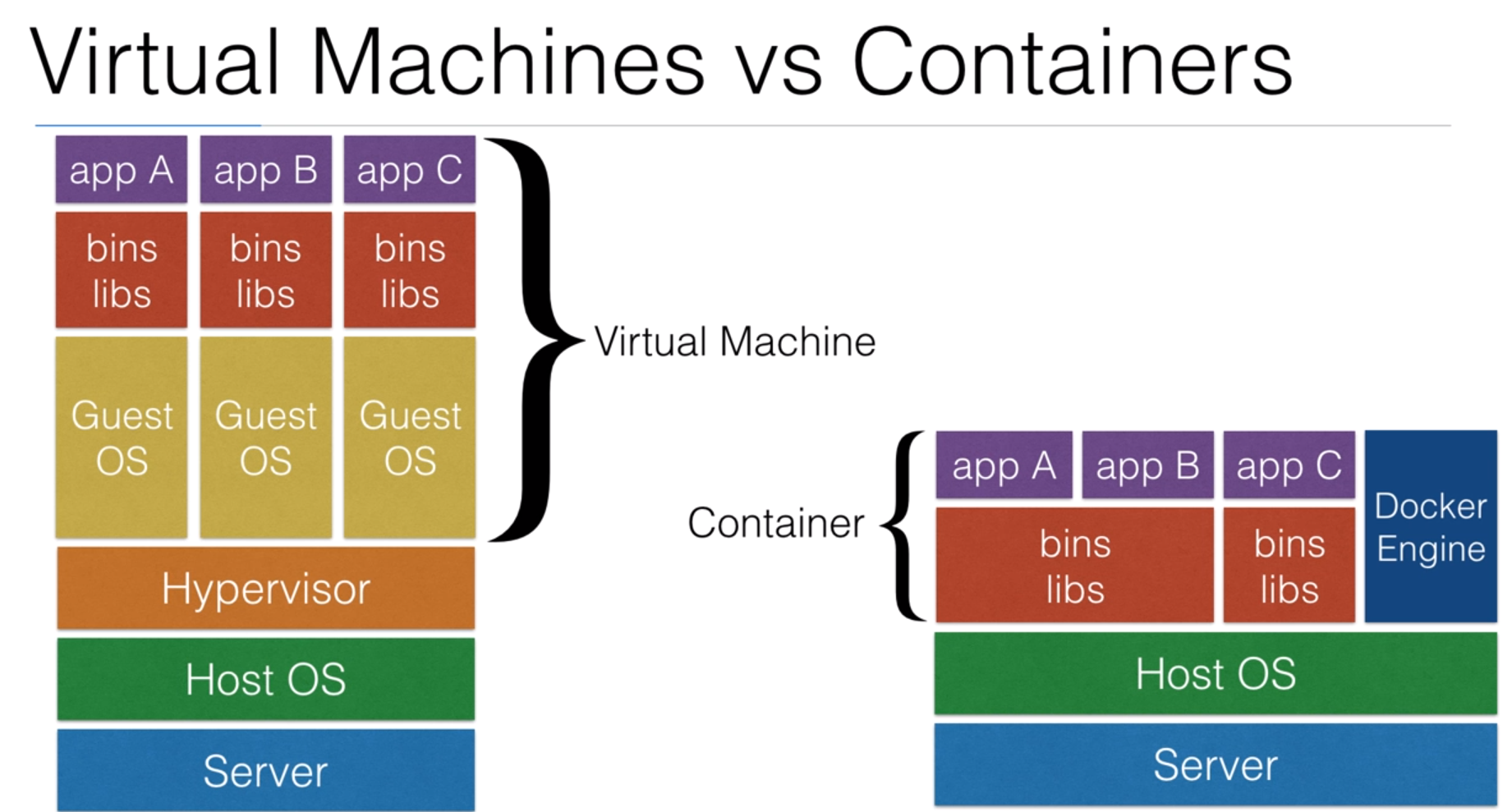

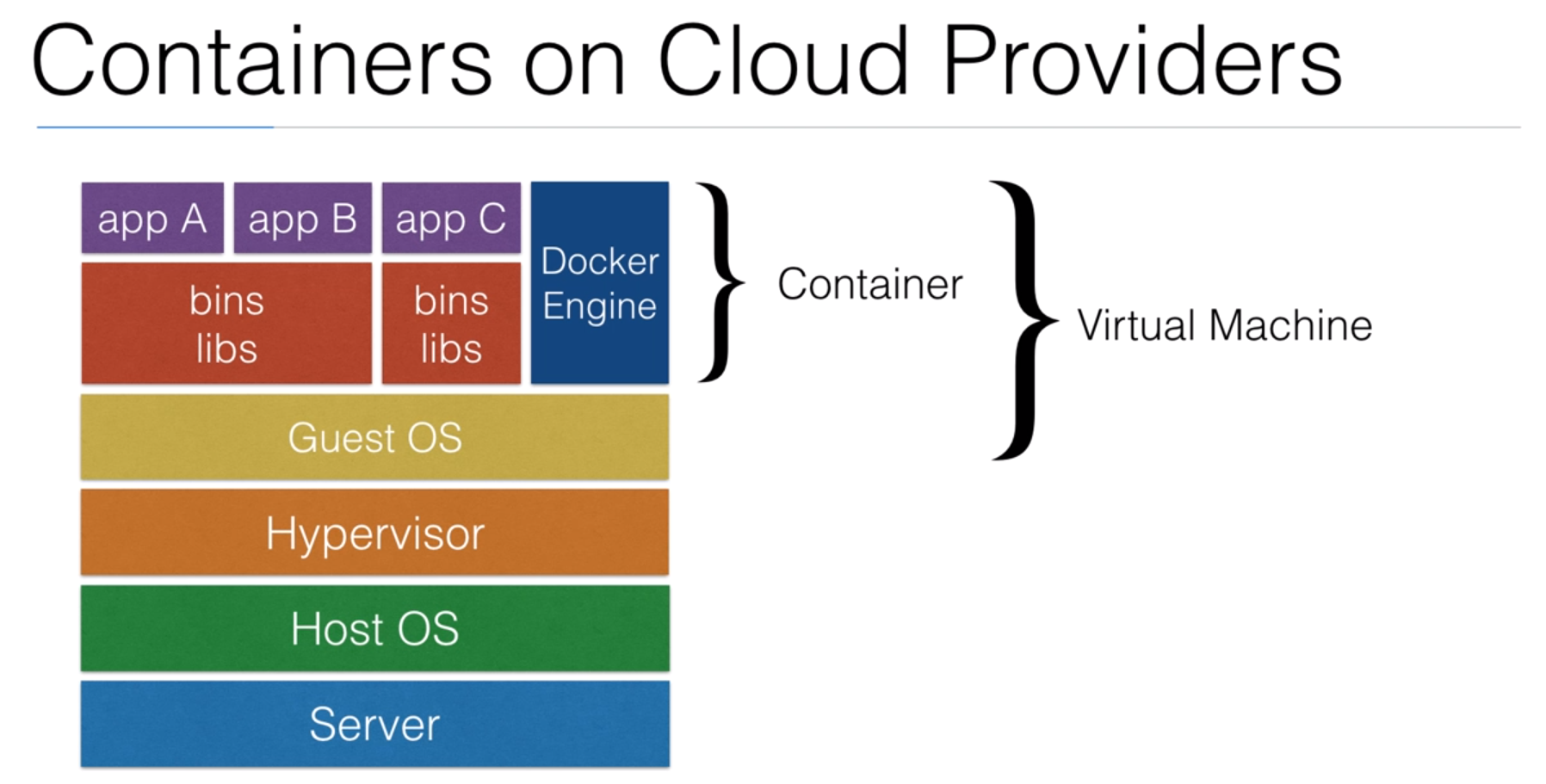

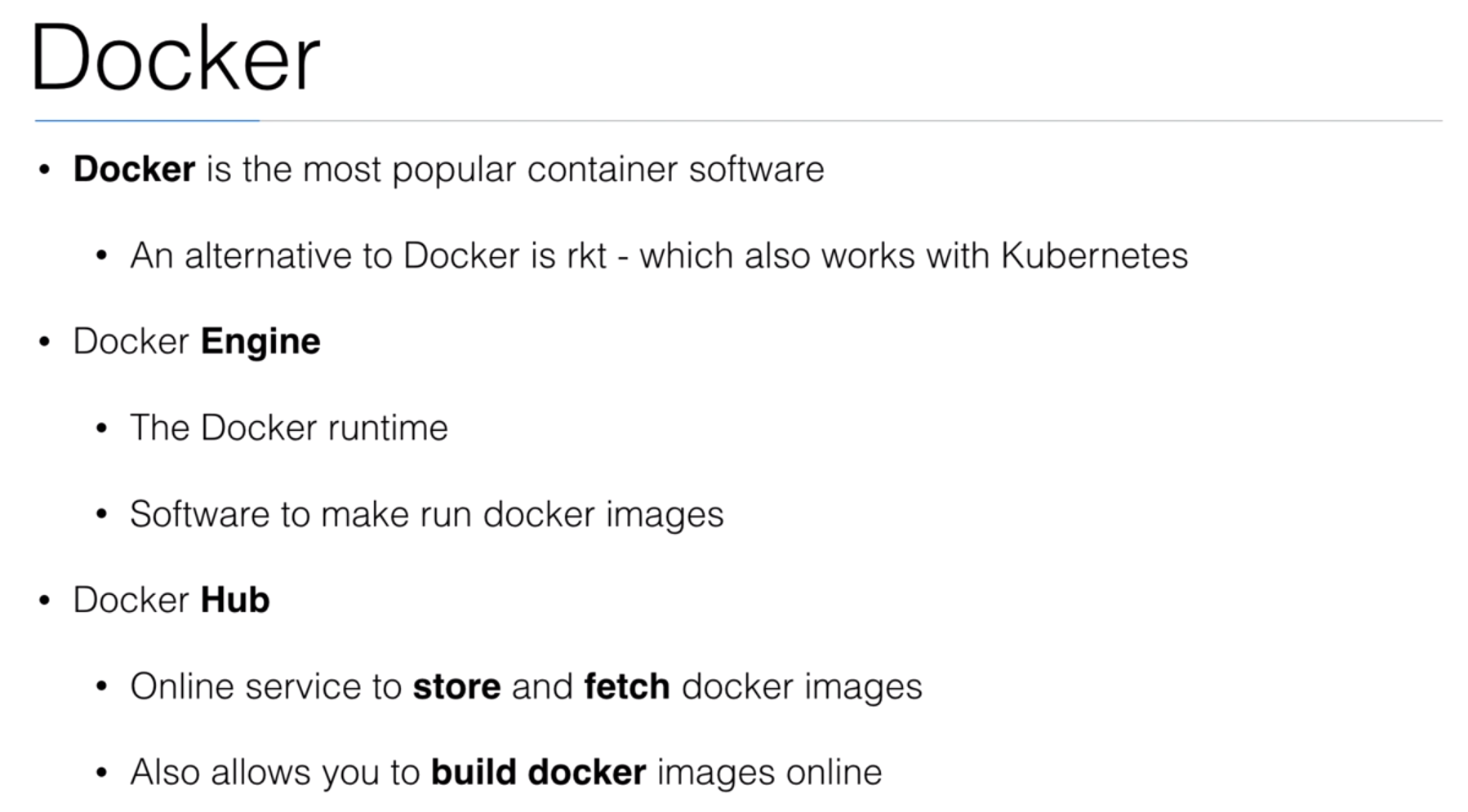

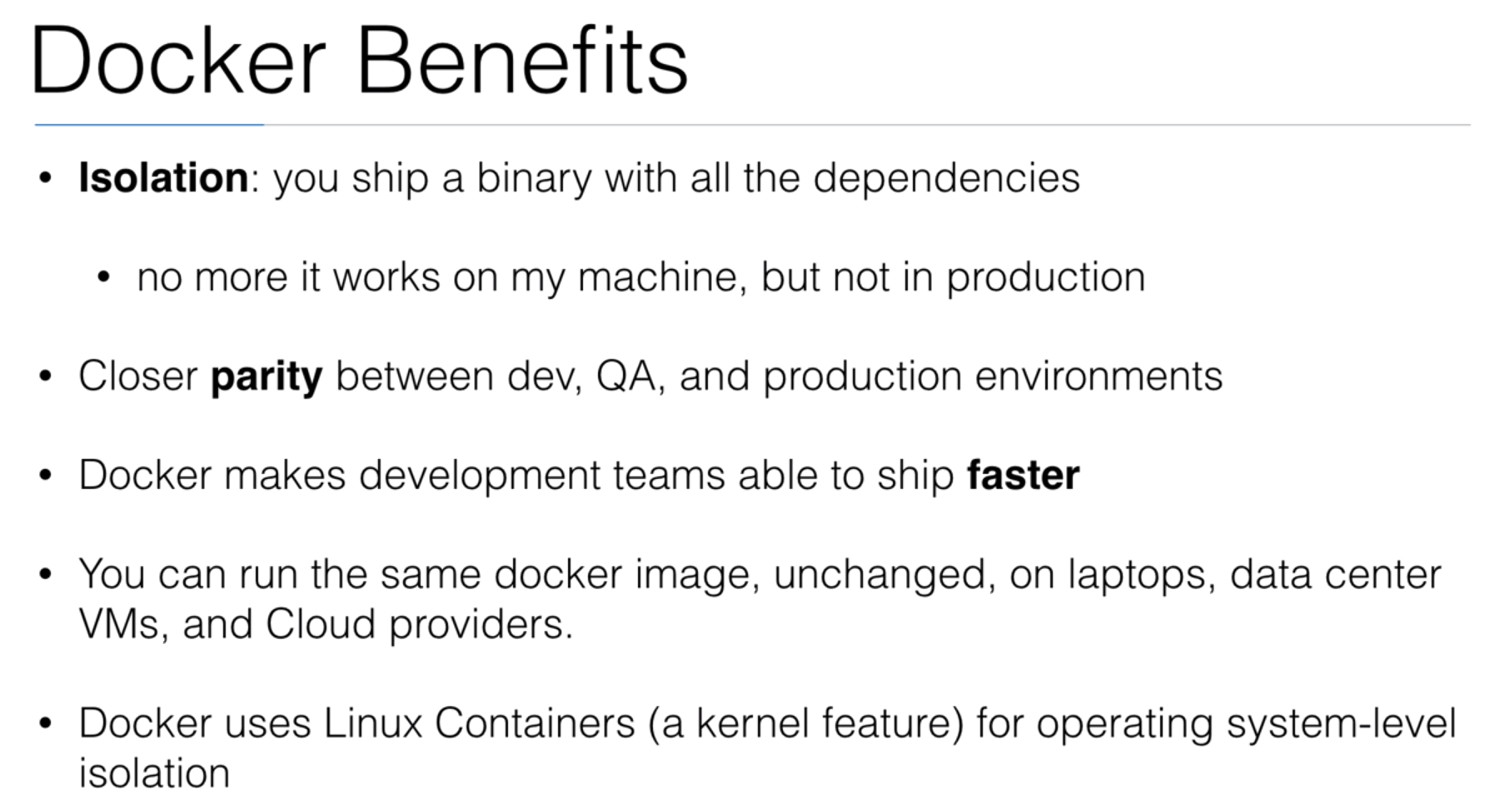

- 5. Containers Introduction

- 6. Kubernetes Setup

- 7. Local Setup with minikube

- 8. Demo: Minikube

- 9. Installing Kubernetes using the Docker Client

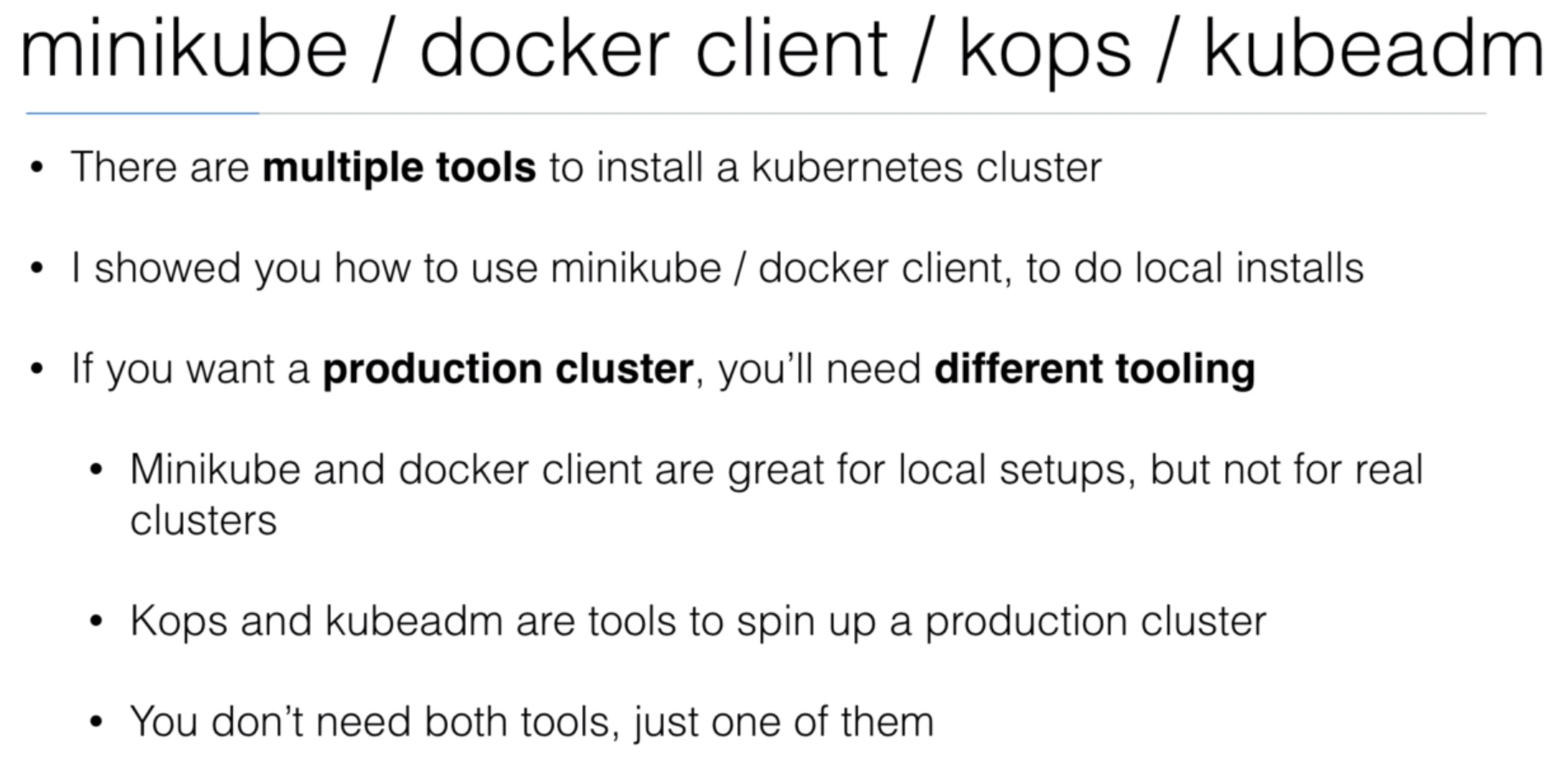

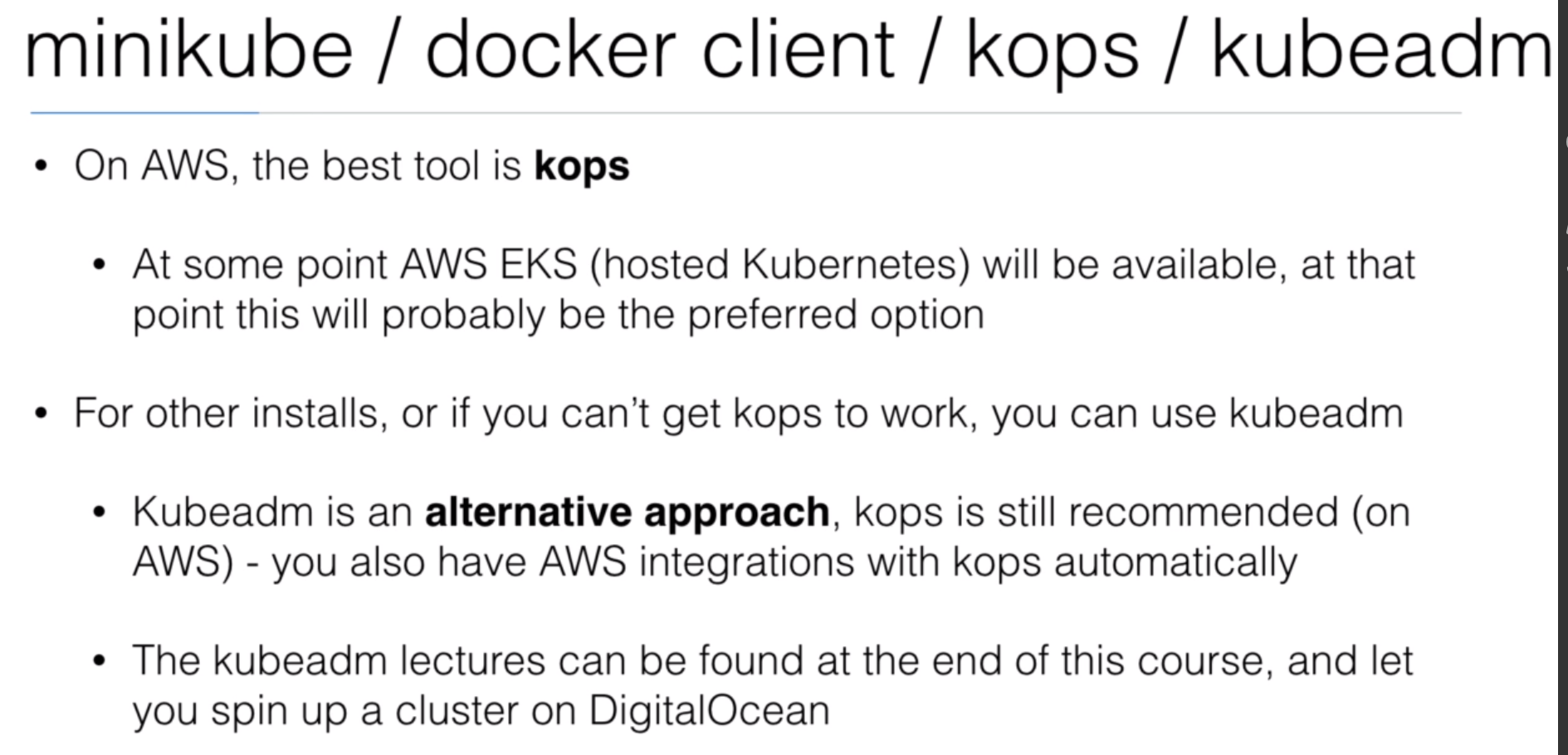

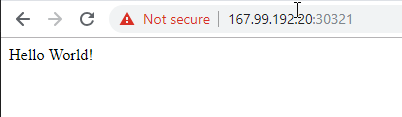

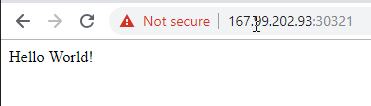

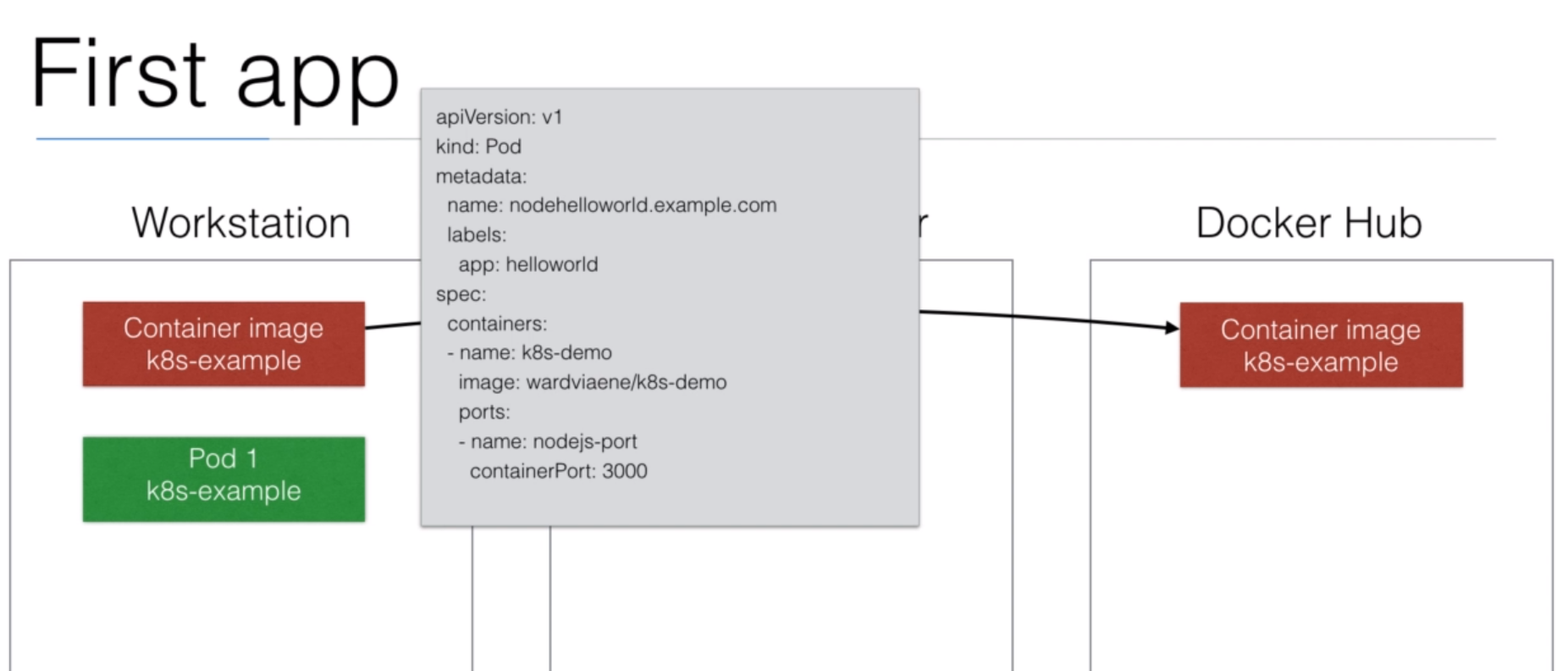

- 10. Minikube vs Docker Client vs Kops vs Kubeadm

- 11. Introduction to Kops

- 12. Demo: Preparing kops install

- 13. Demo: Preparing AWS for kops install

- 14. Demo: DNS Troubleshooting (Optional)

- 15. Demo: Cluster setup on AWS using kops

- 16. Building docker images

- 17. Demo: Building docker images

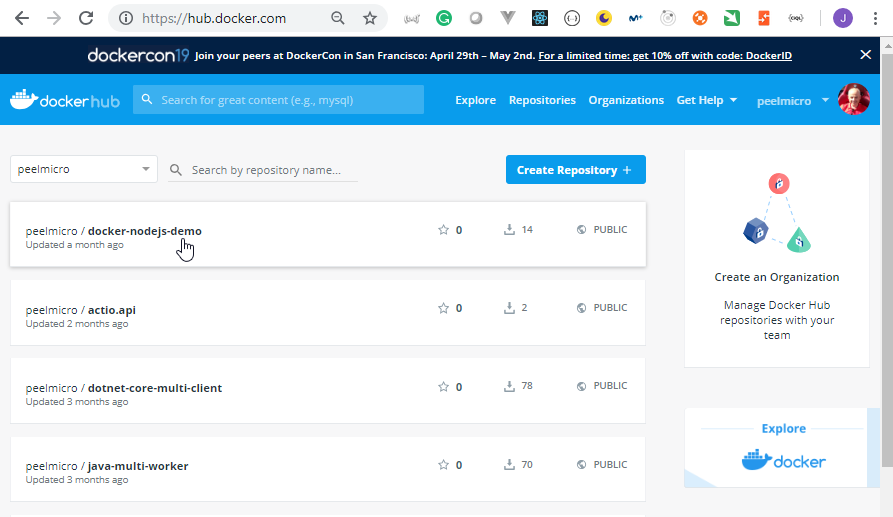

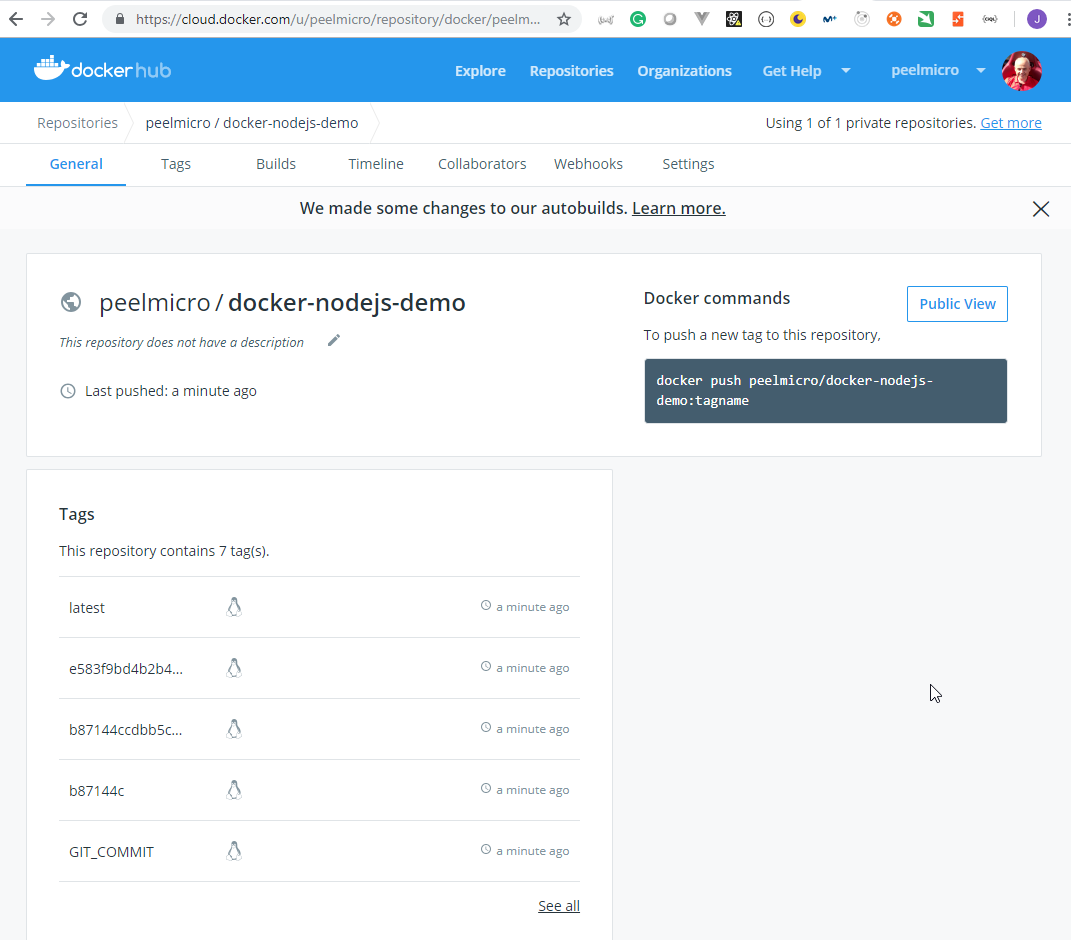

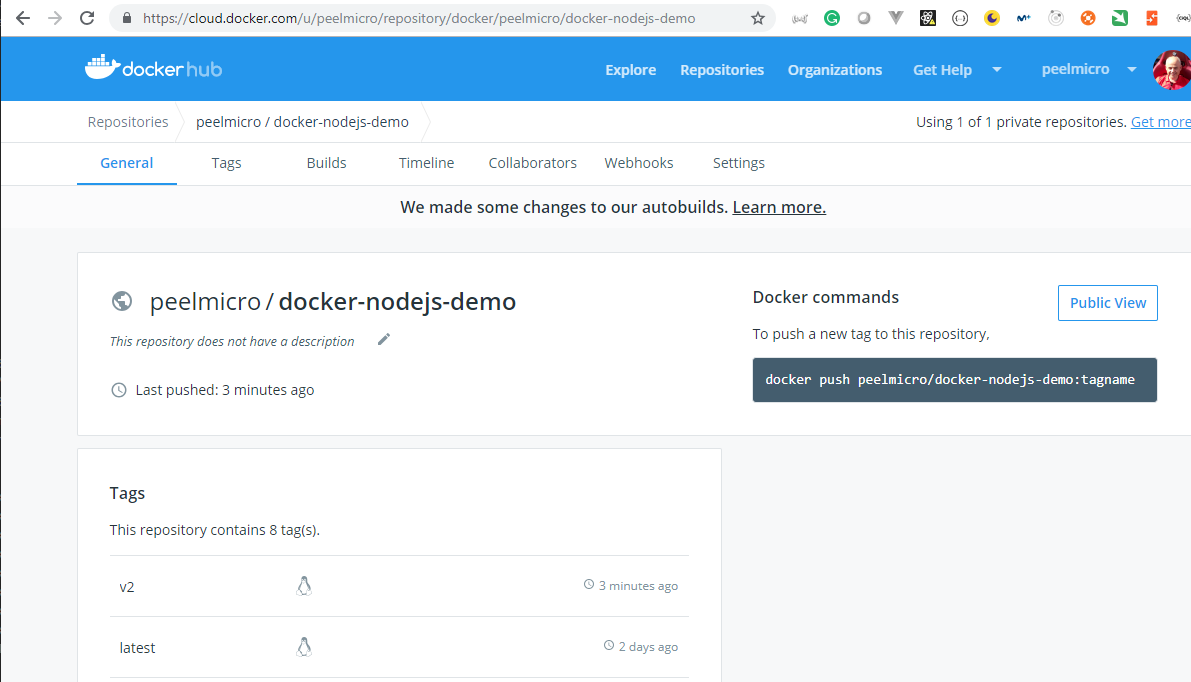

- 18. Docker Image Registry

- 19. Demo: Pushing Docker Image

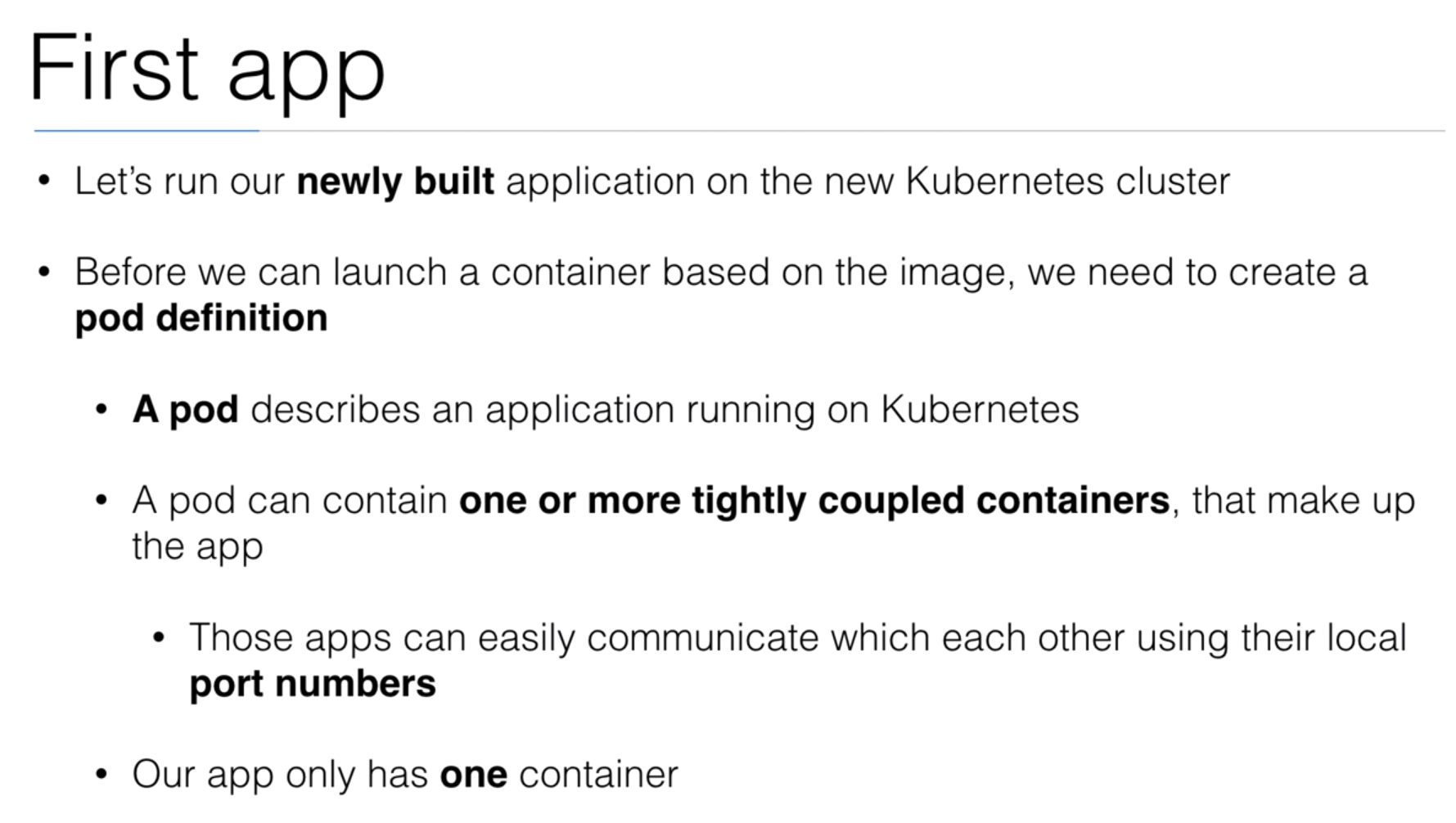

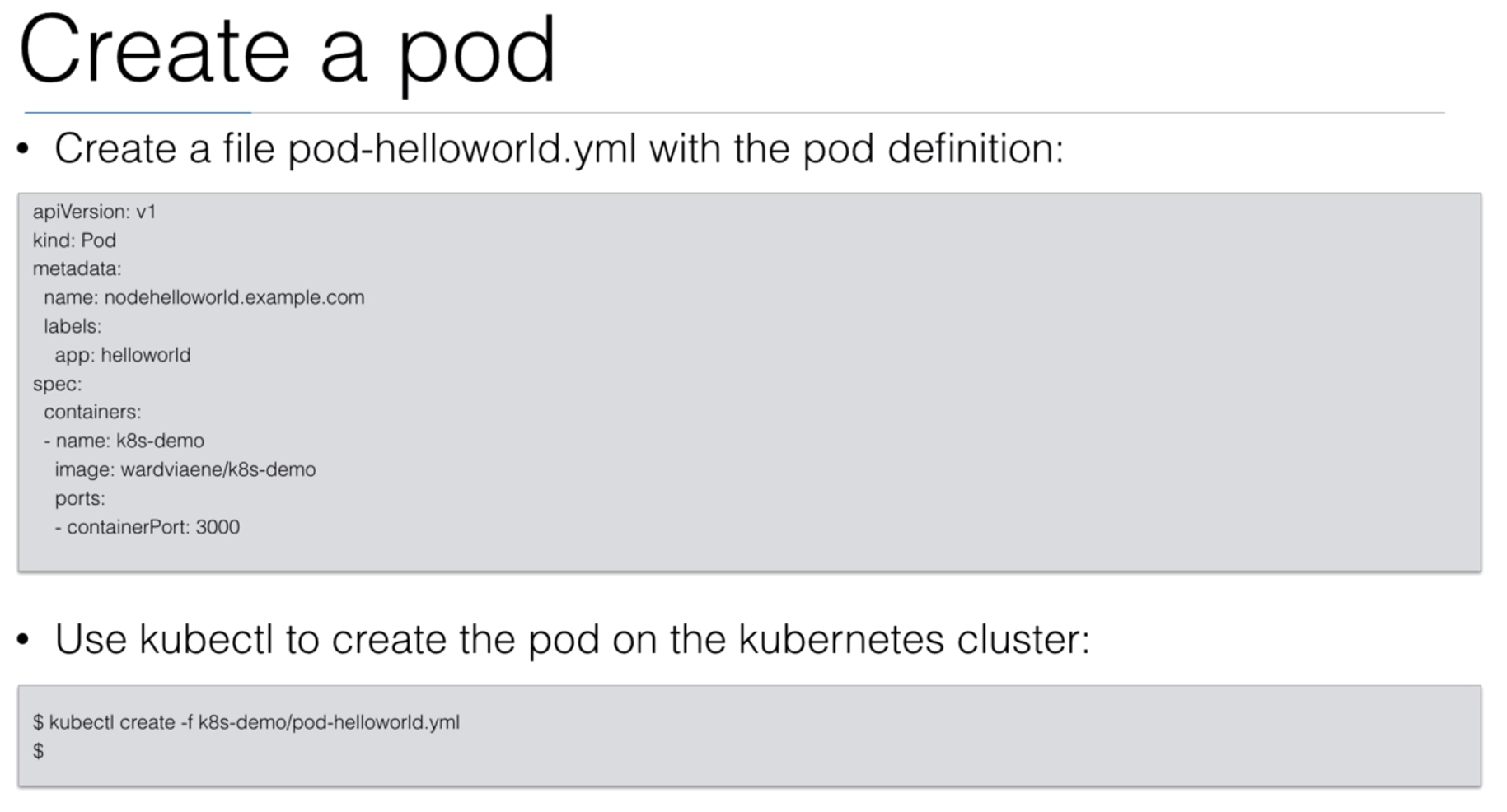

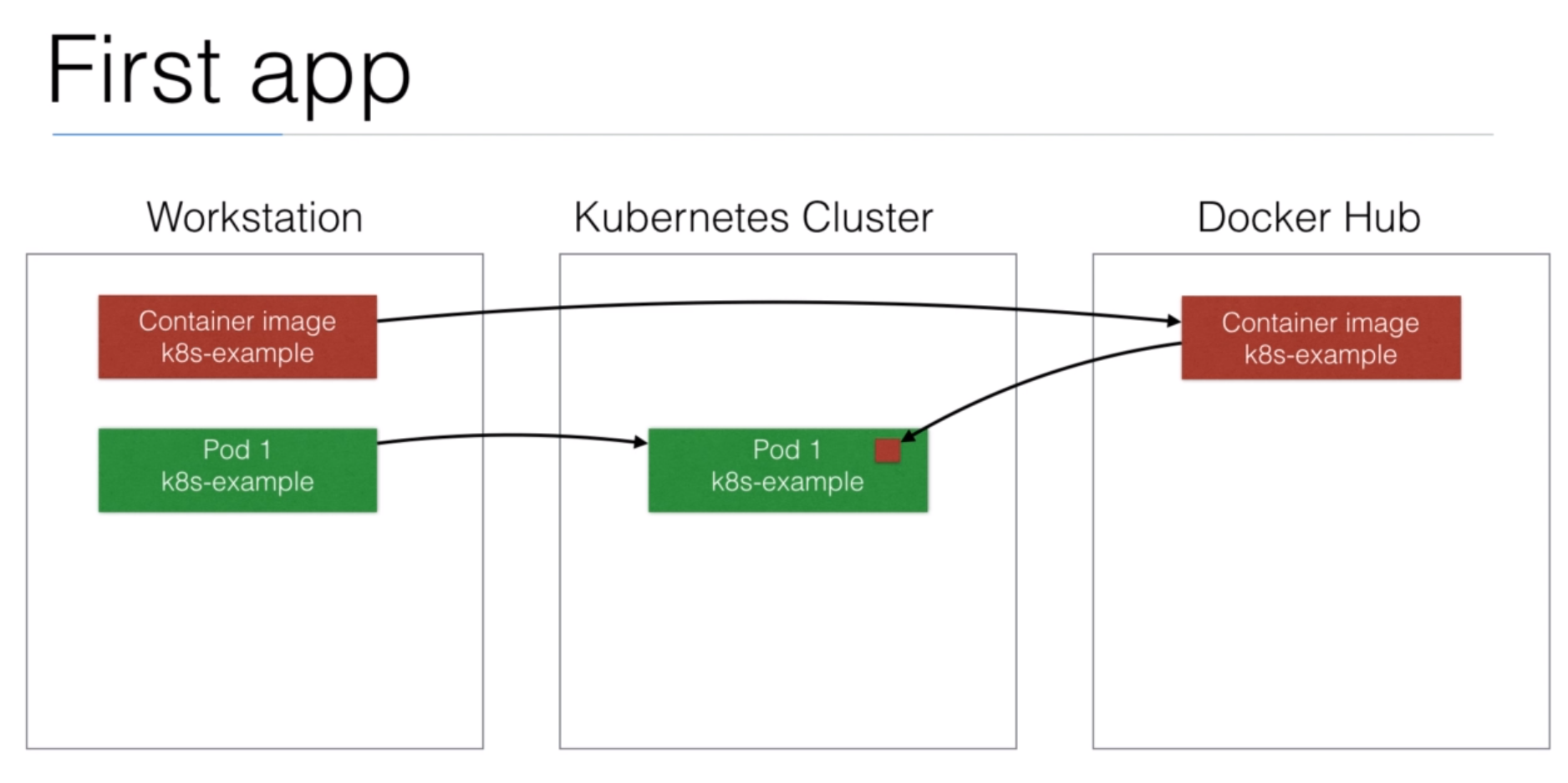

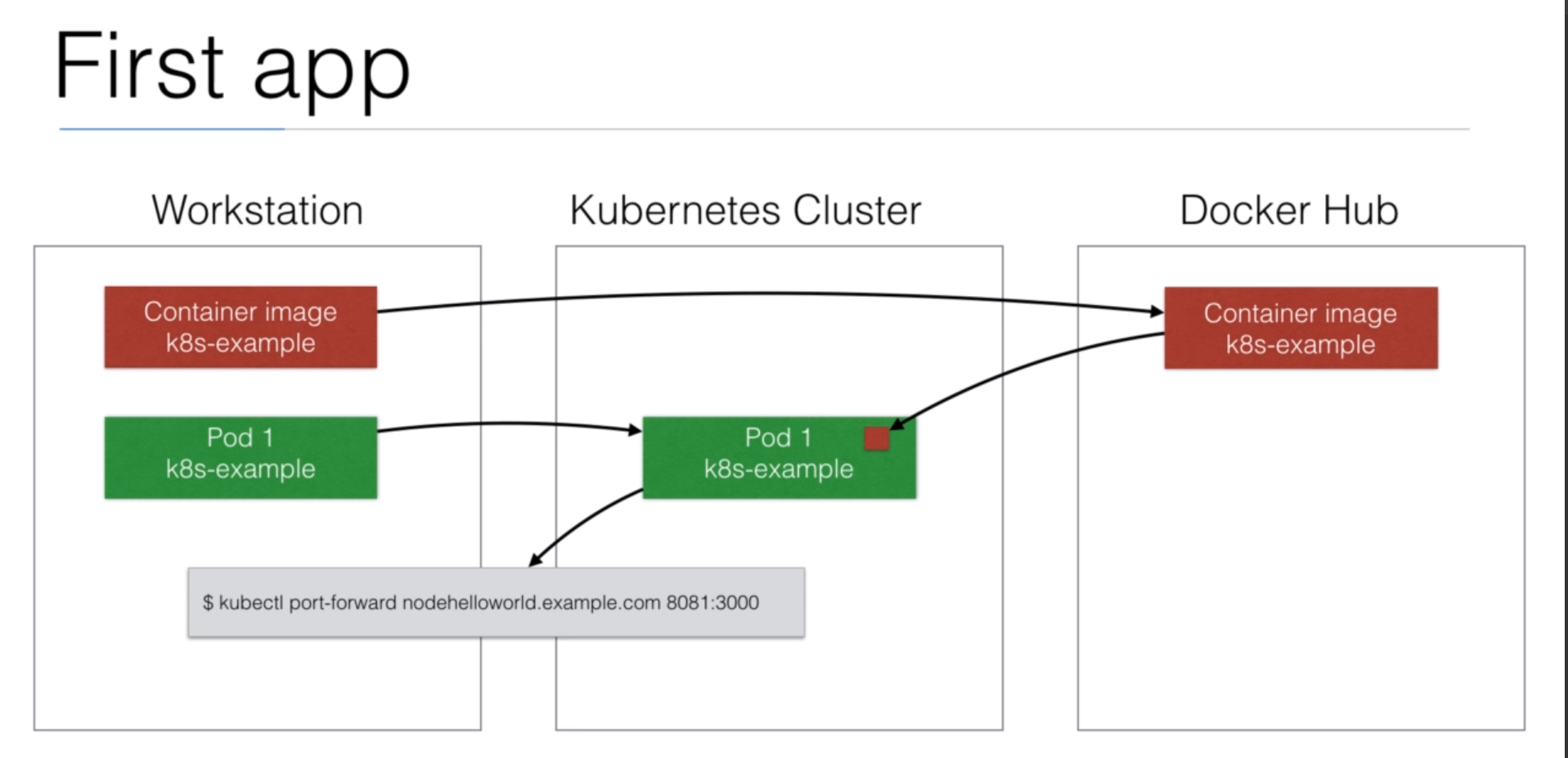

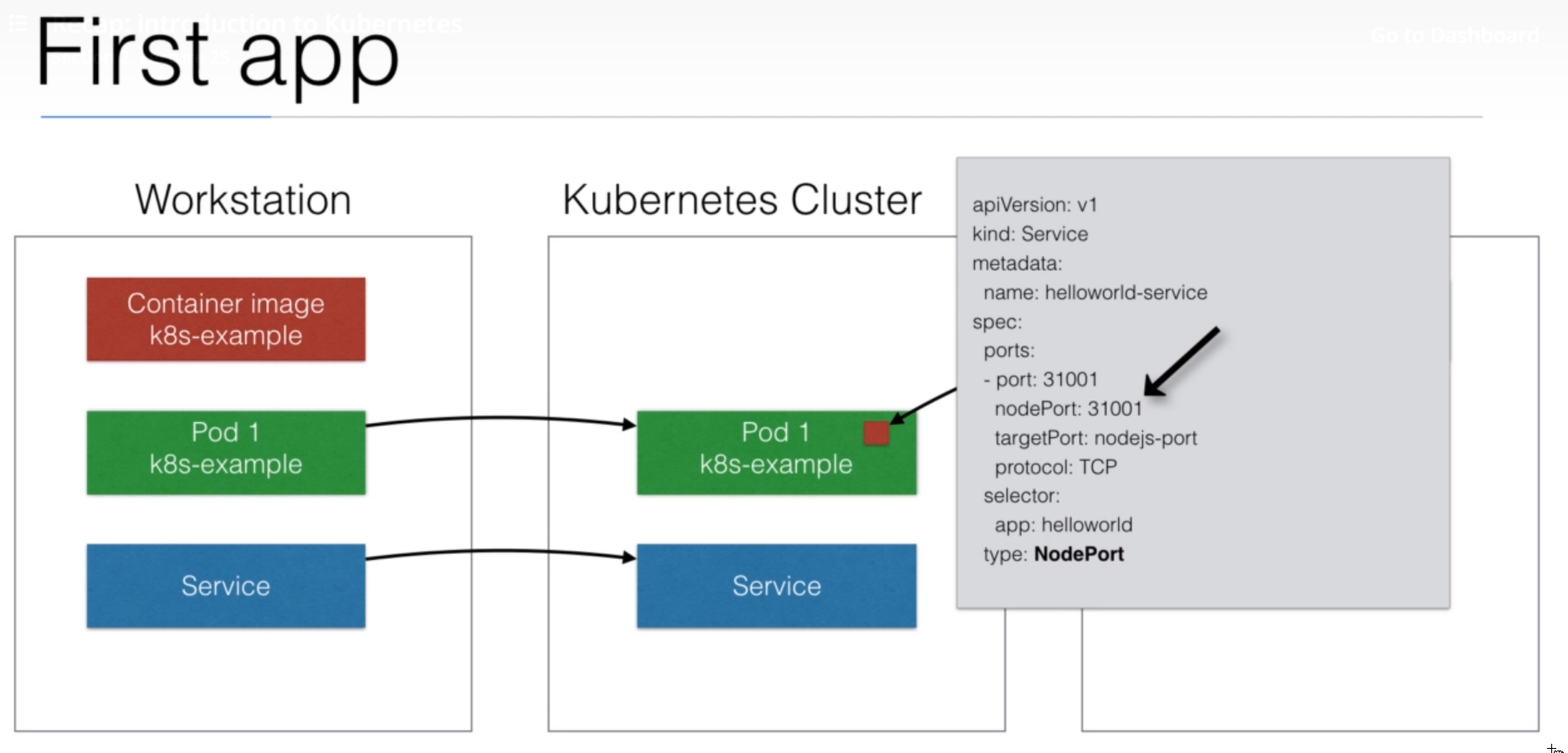

- 20. Running first app on Kubernetes

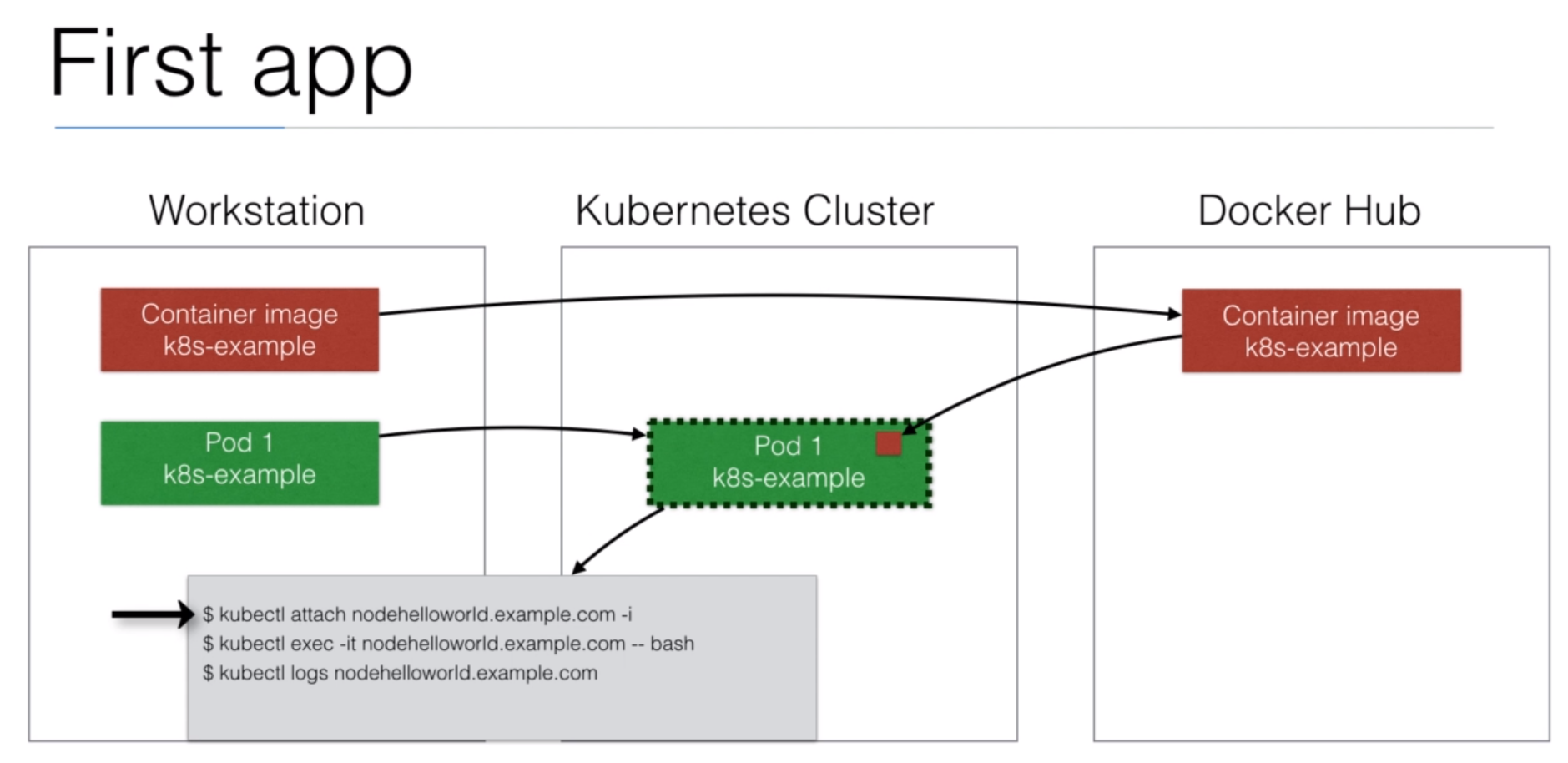

- 21. Demo: Running first app on Kubernetes

- 22. Demo: Useful commands

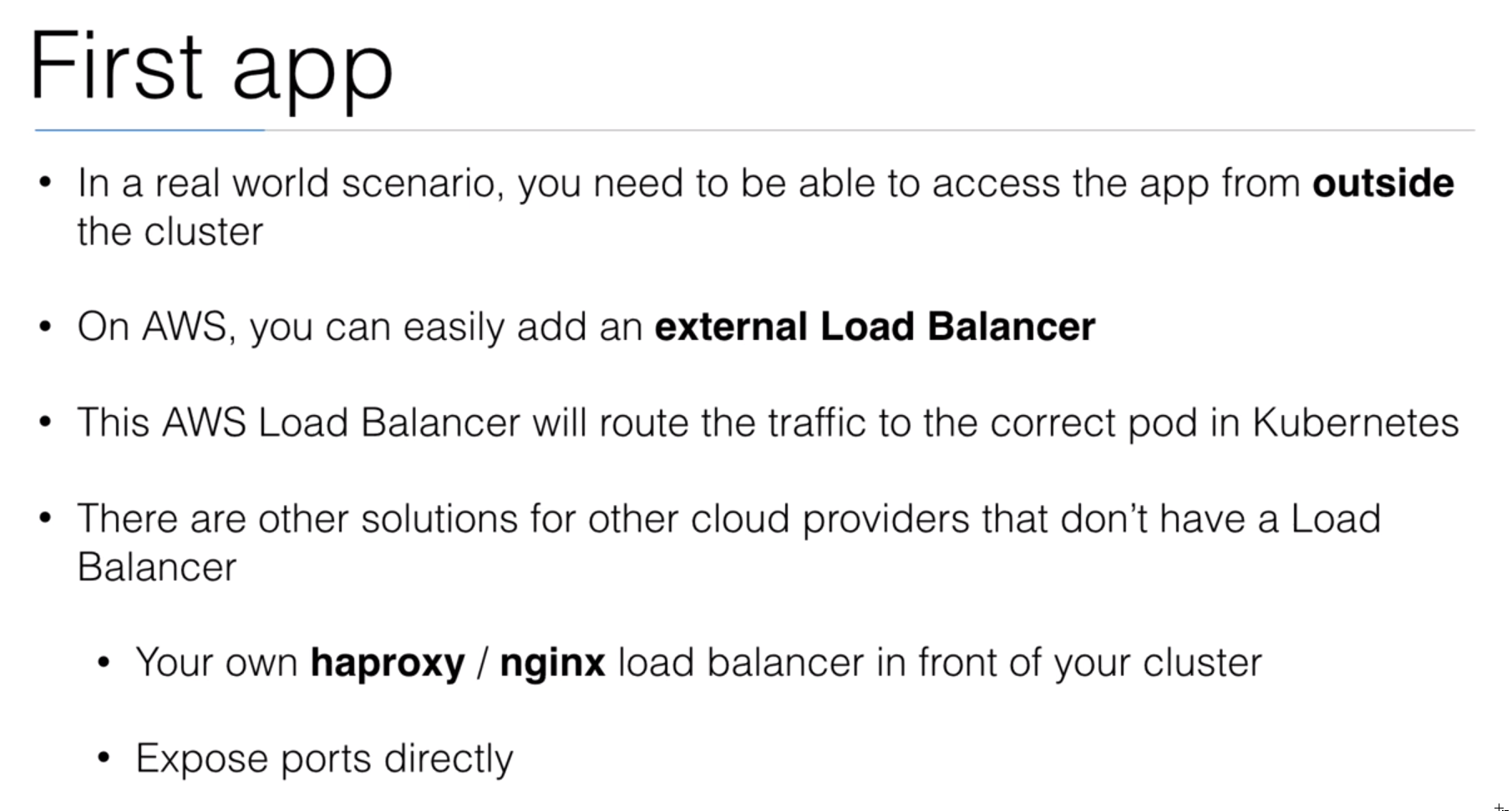

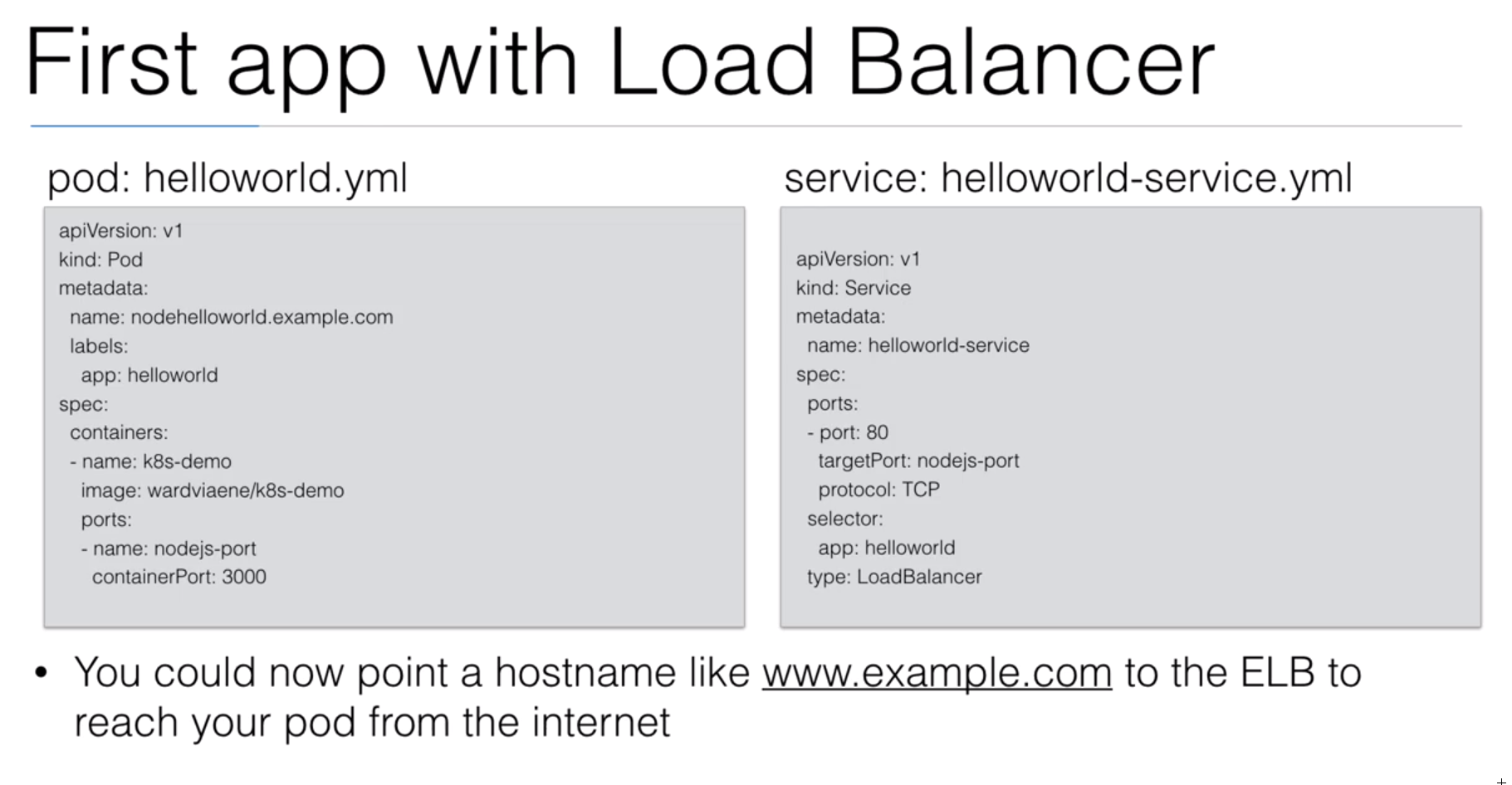

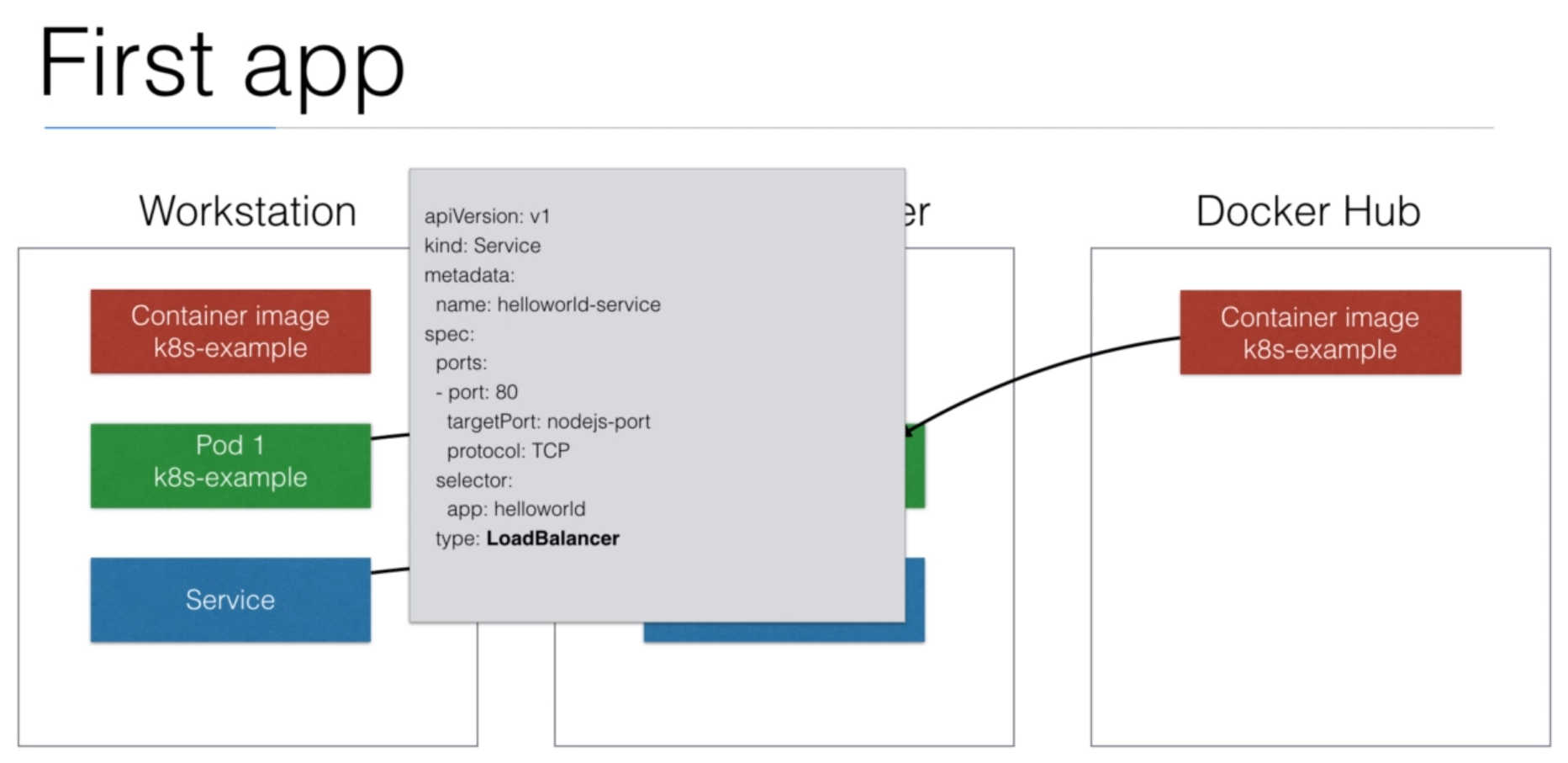

- 23. Service with LoadBalancer

- 24. Demo: Service with AWS ELB LoadBalancer

- 25. Recap: introduction to Kubernetes

- Section: 2. Kubernetes Basics

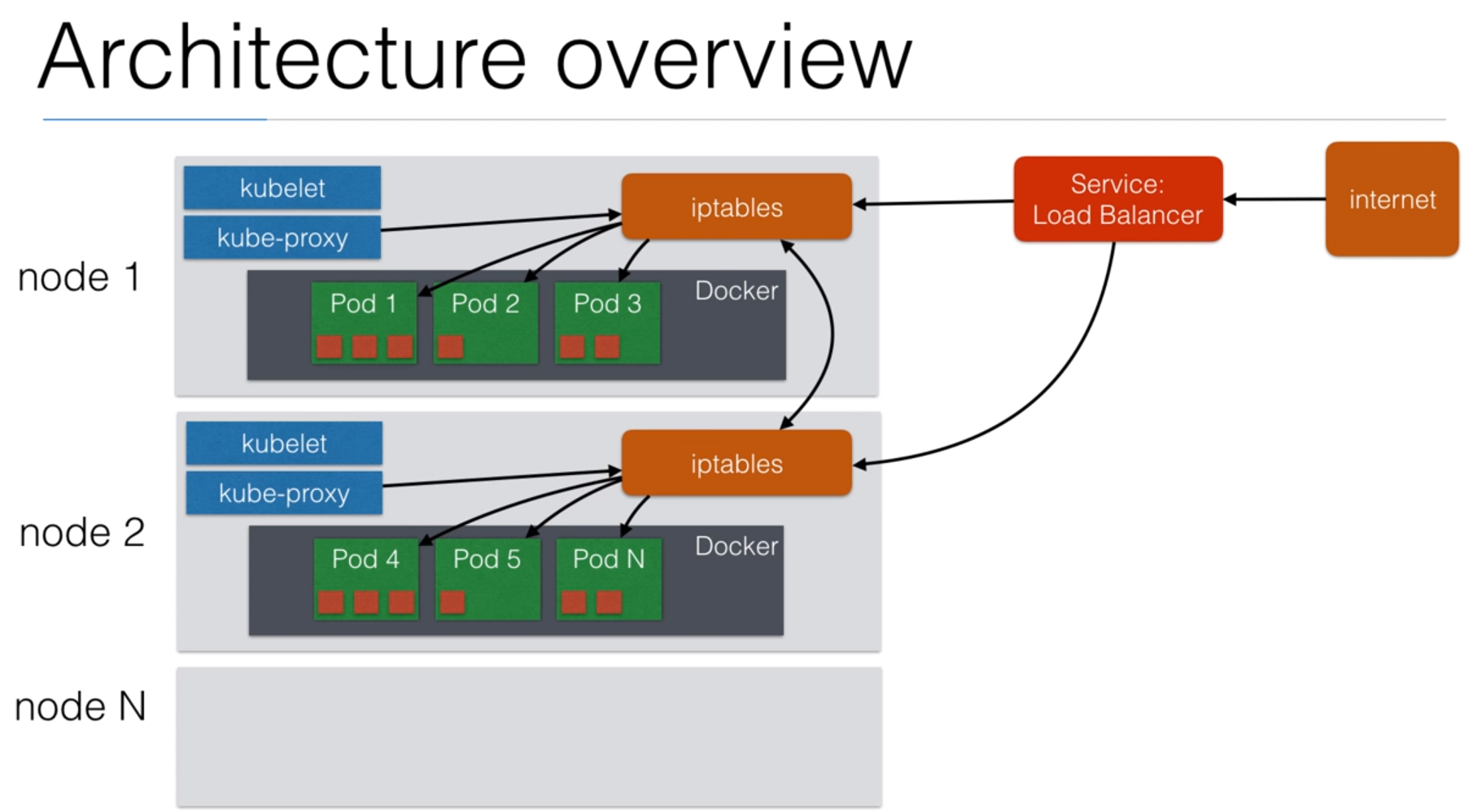

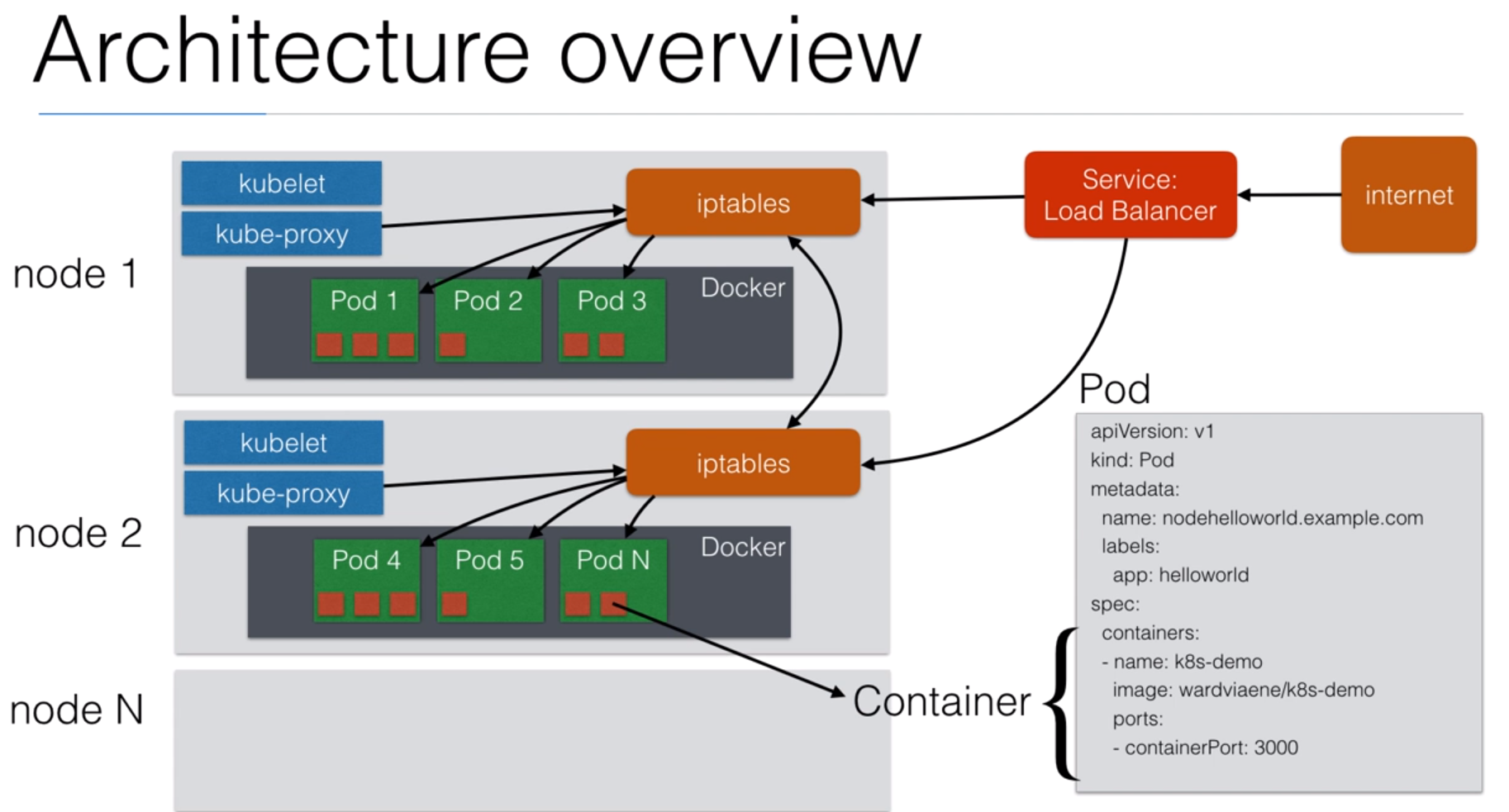

- 26. Node Architecture

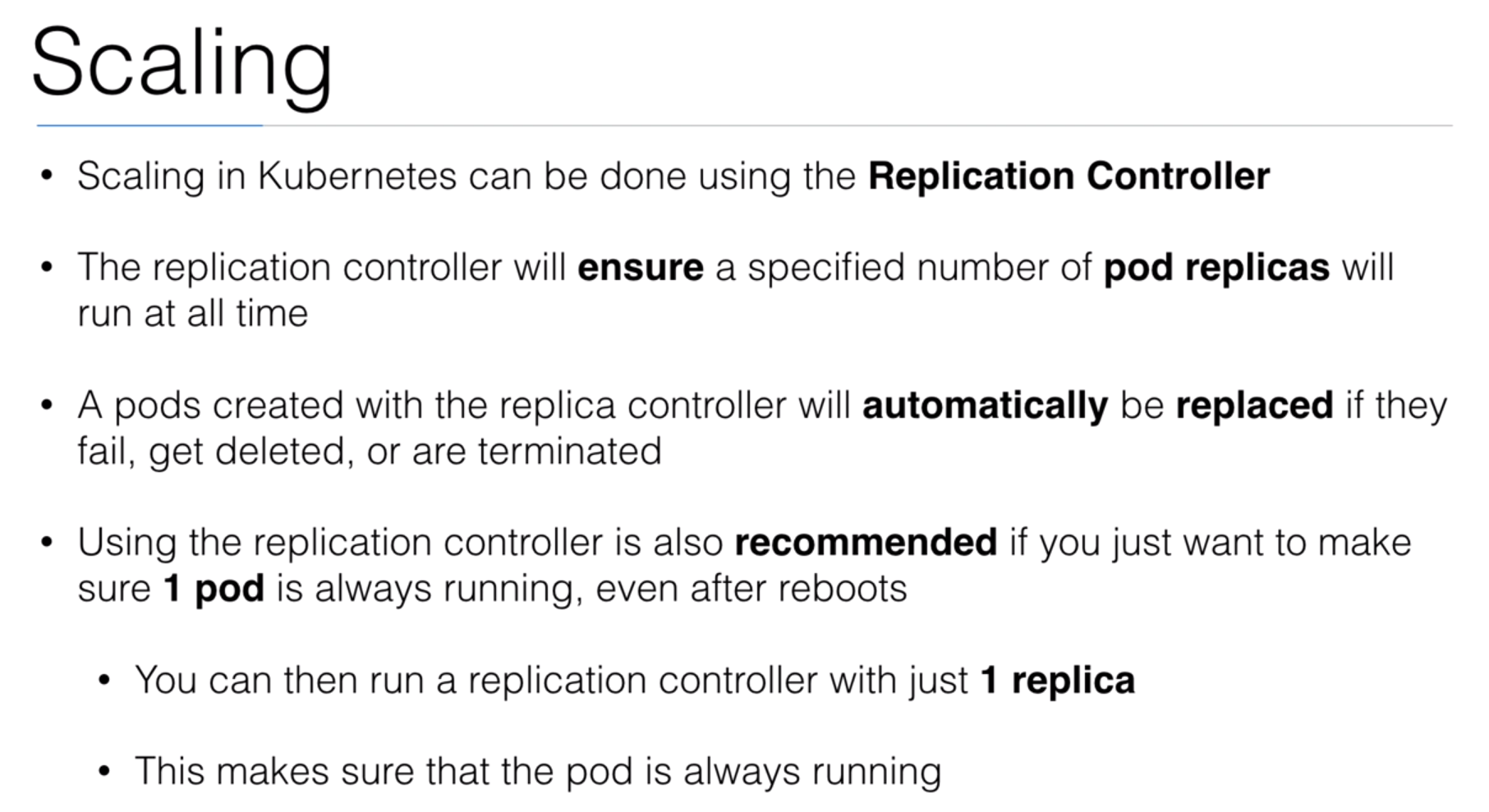

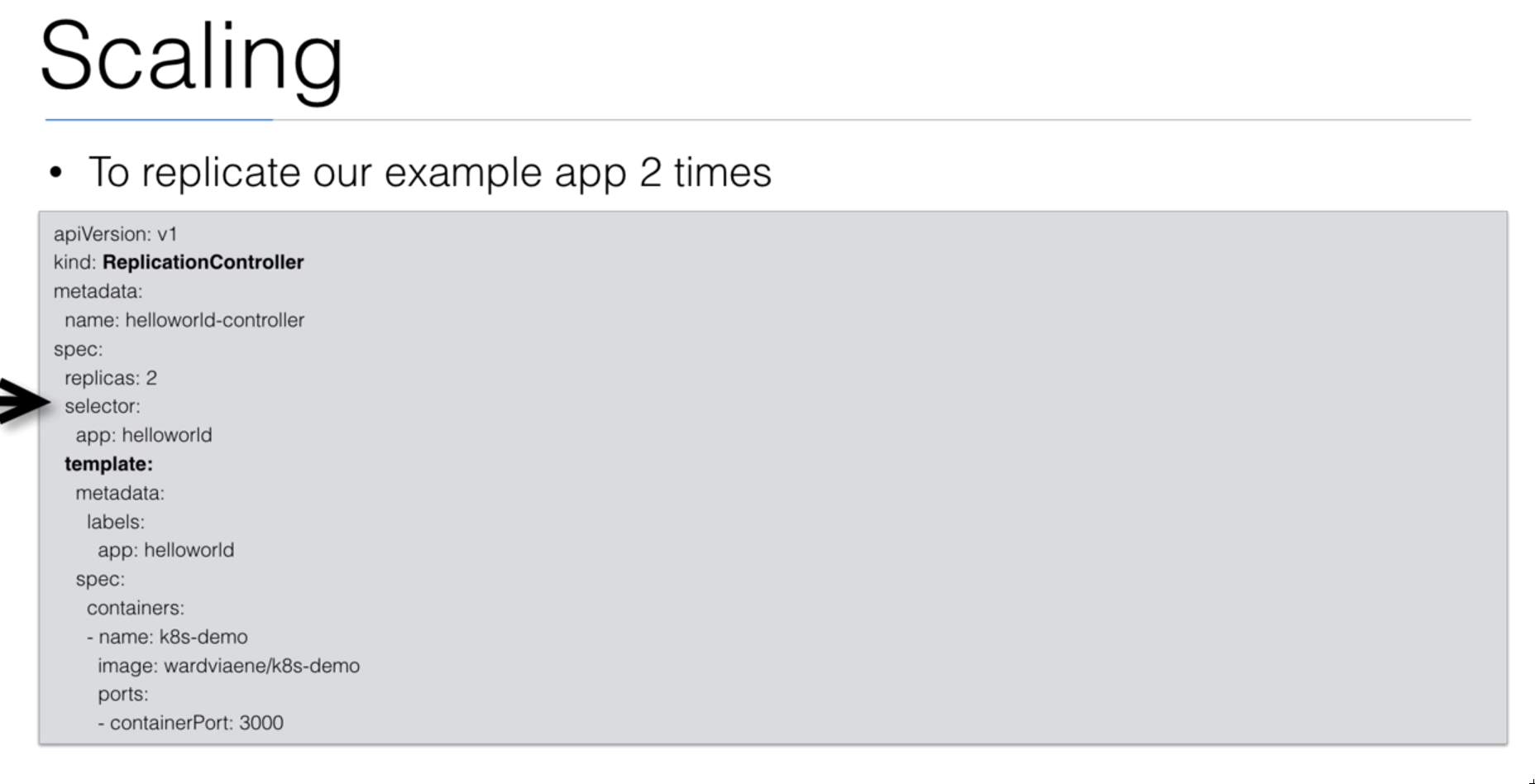

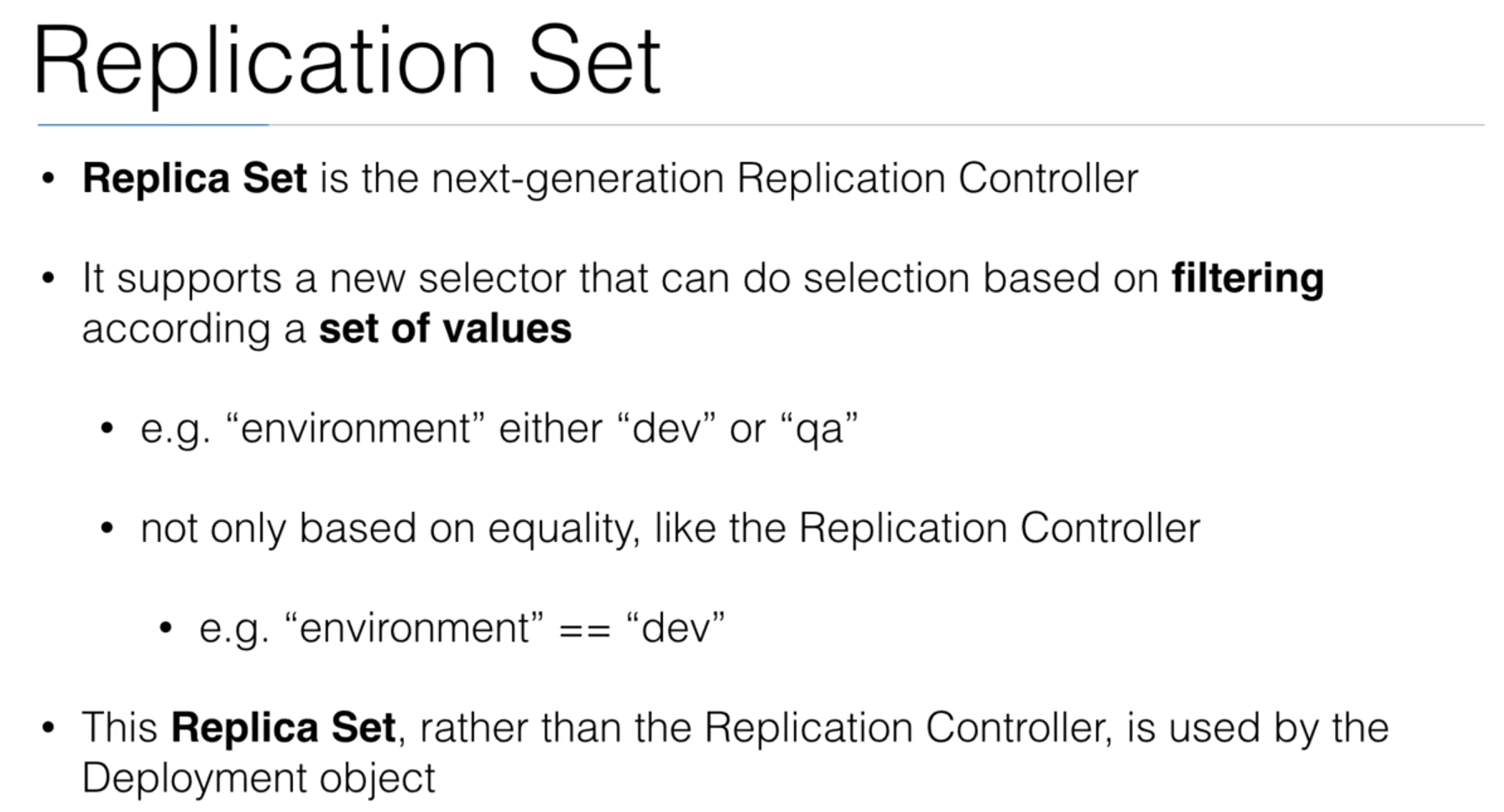

- 27. Replication Controller

- 28. Demo: Replication Controller

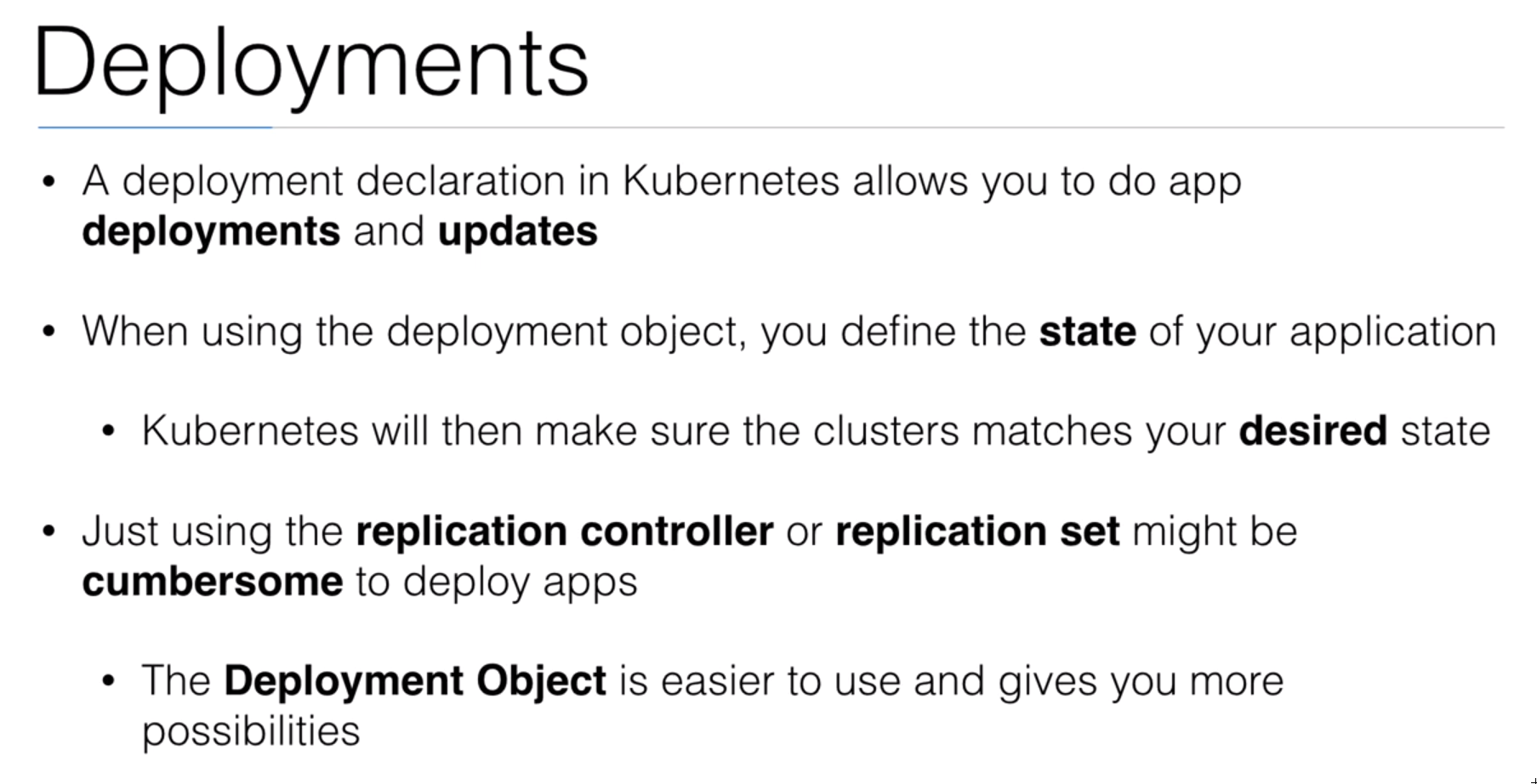

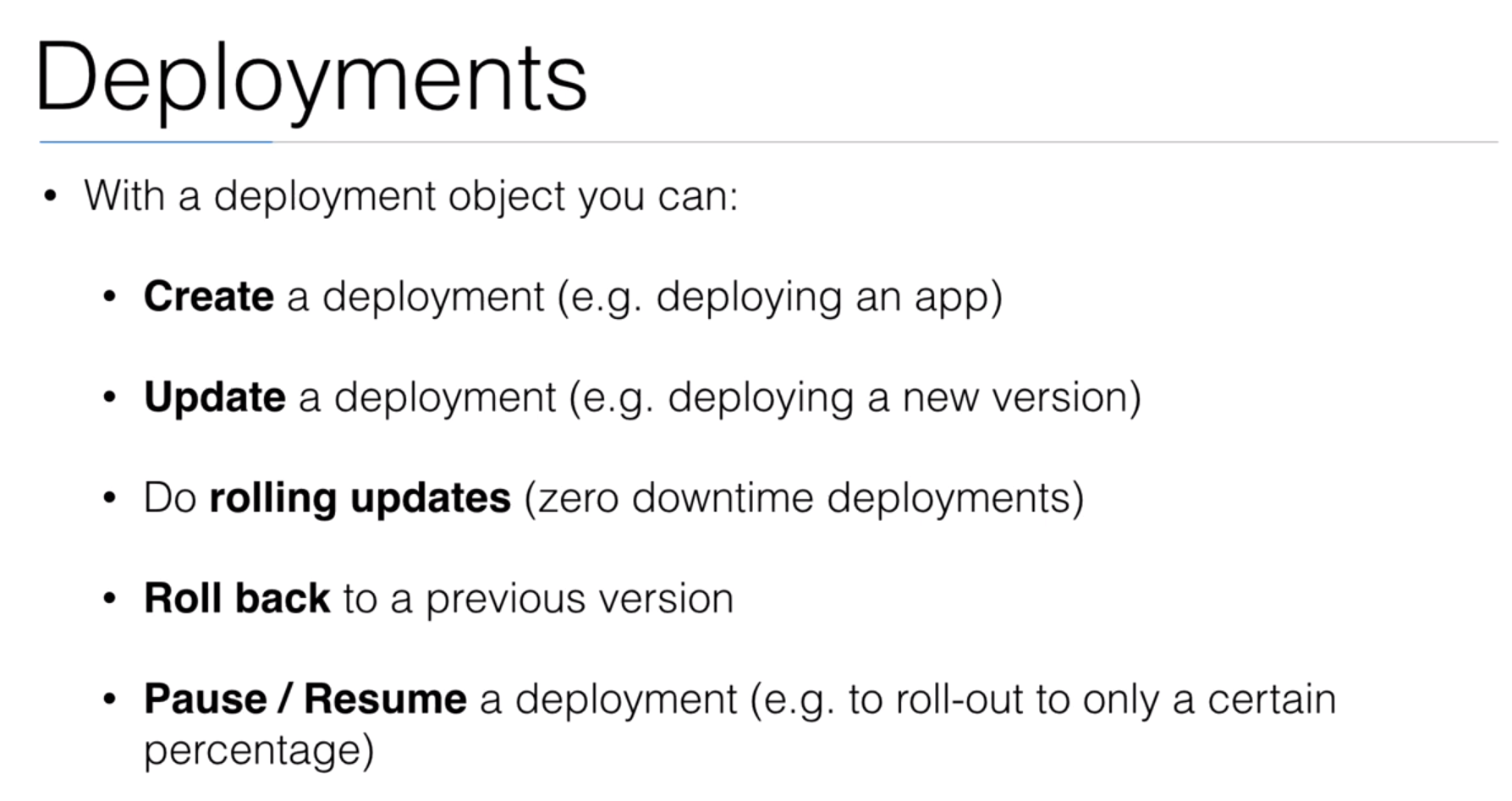

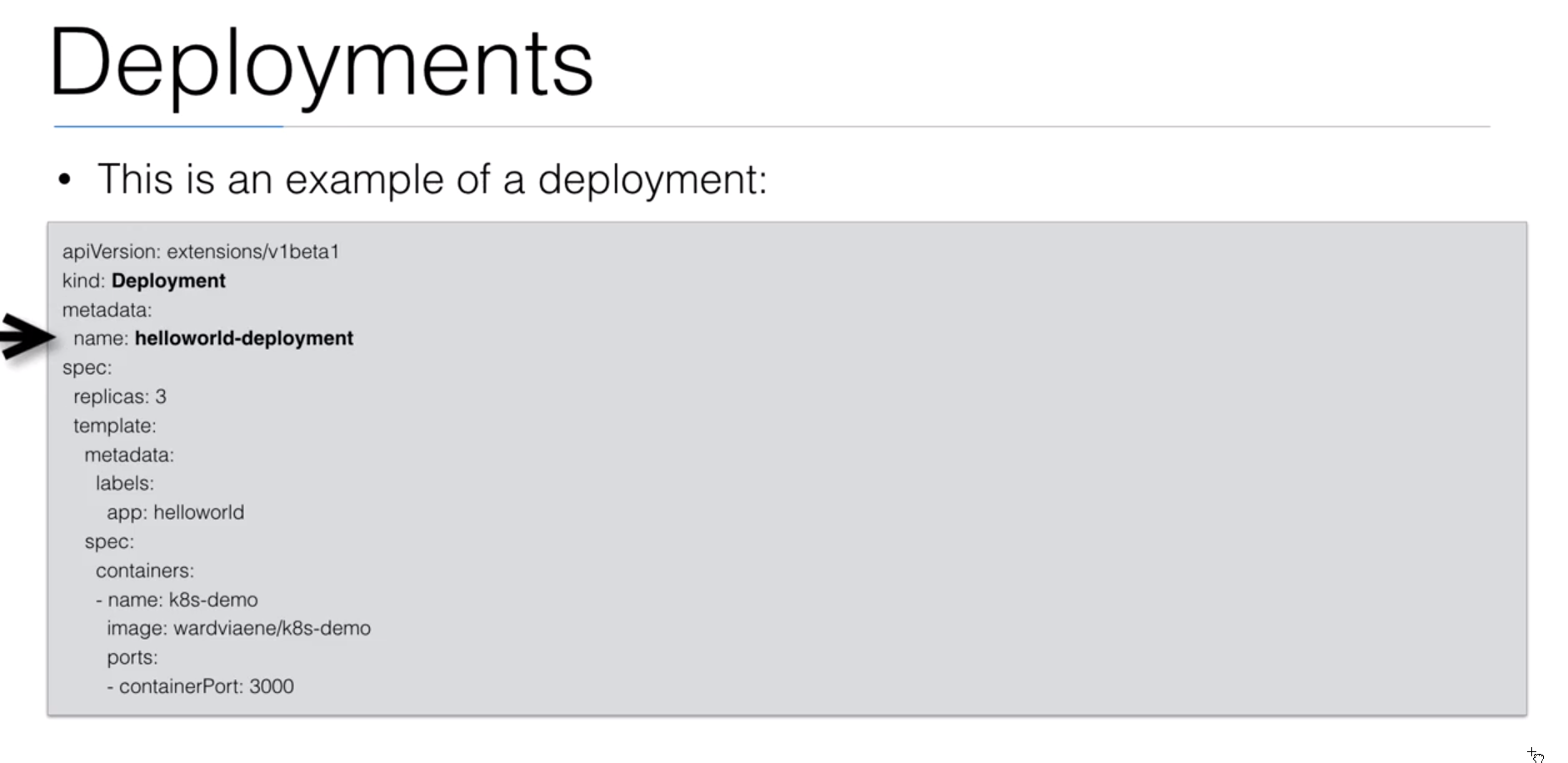

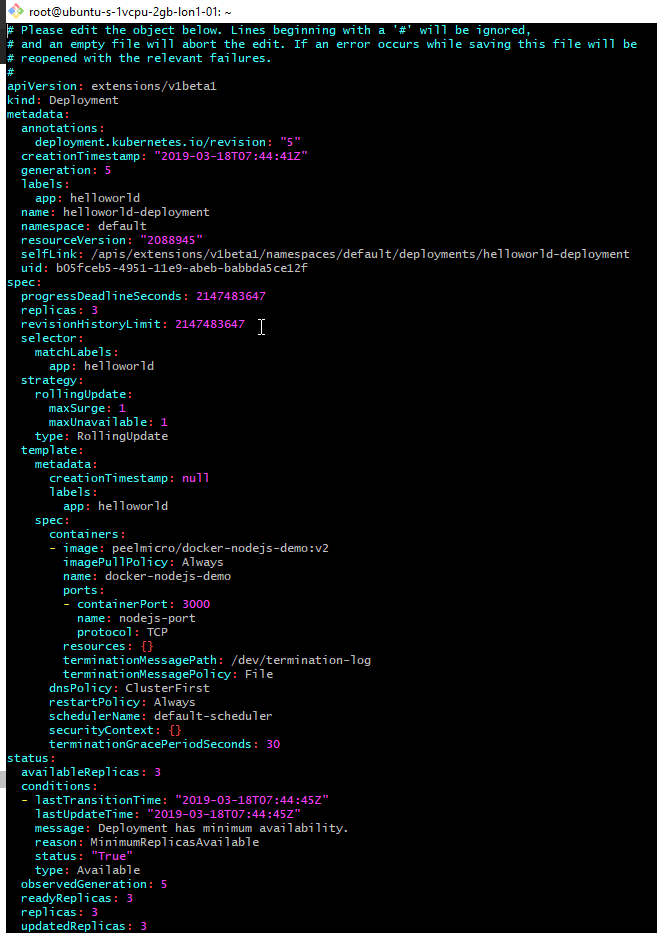

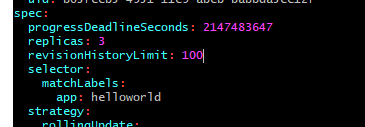

- 29. Deployments

- 30. Demo: Deployments

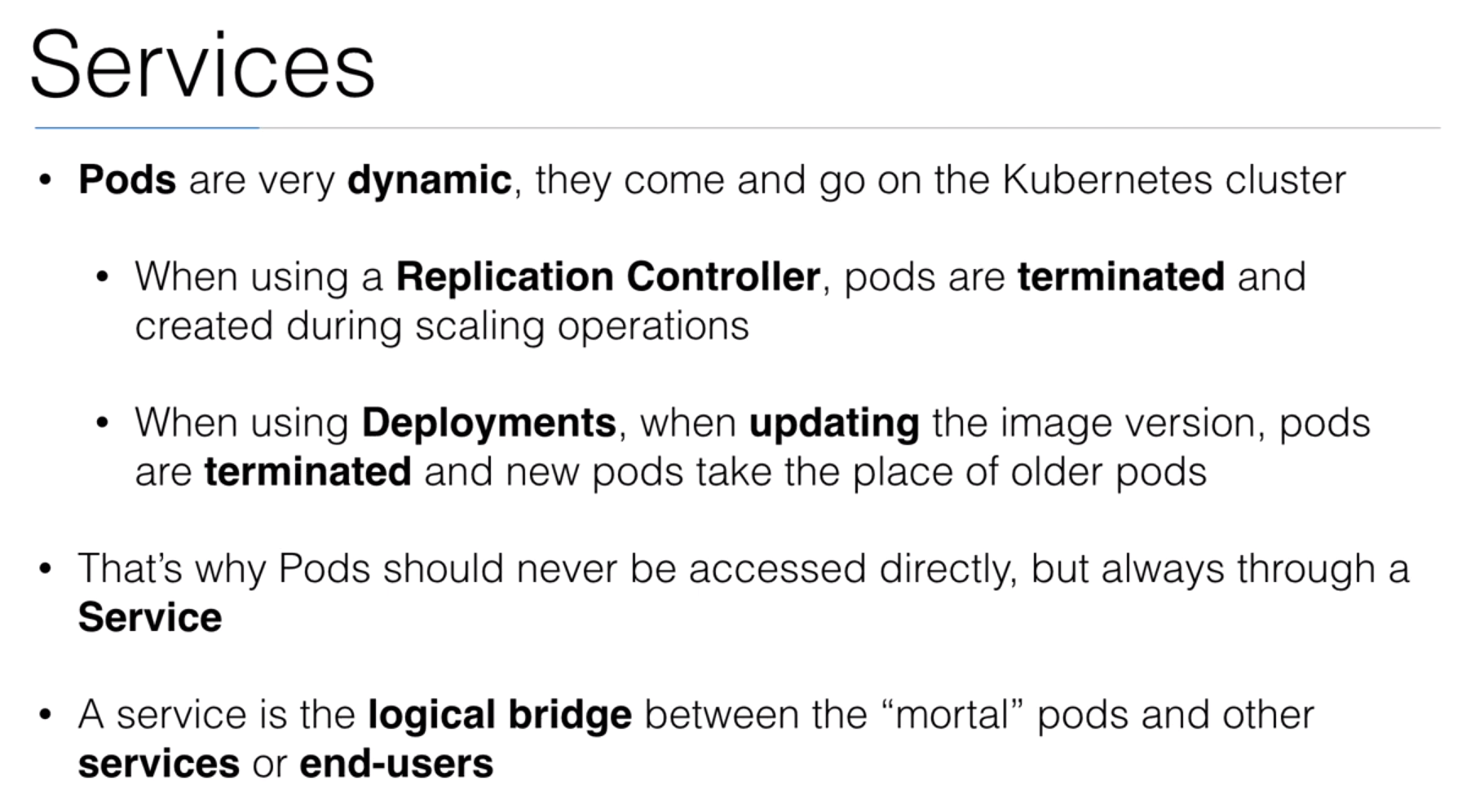

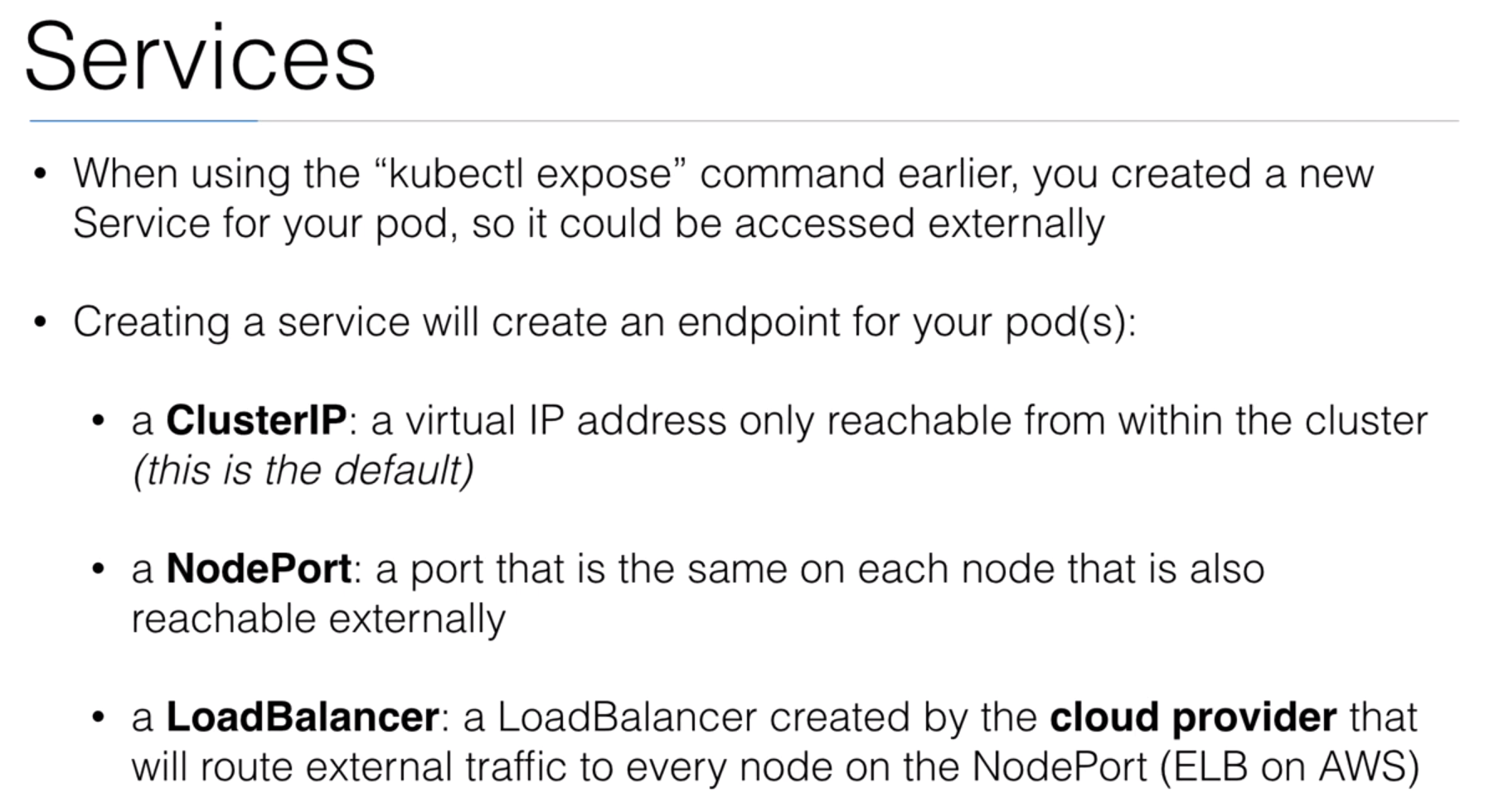

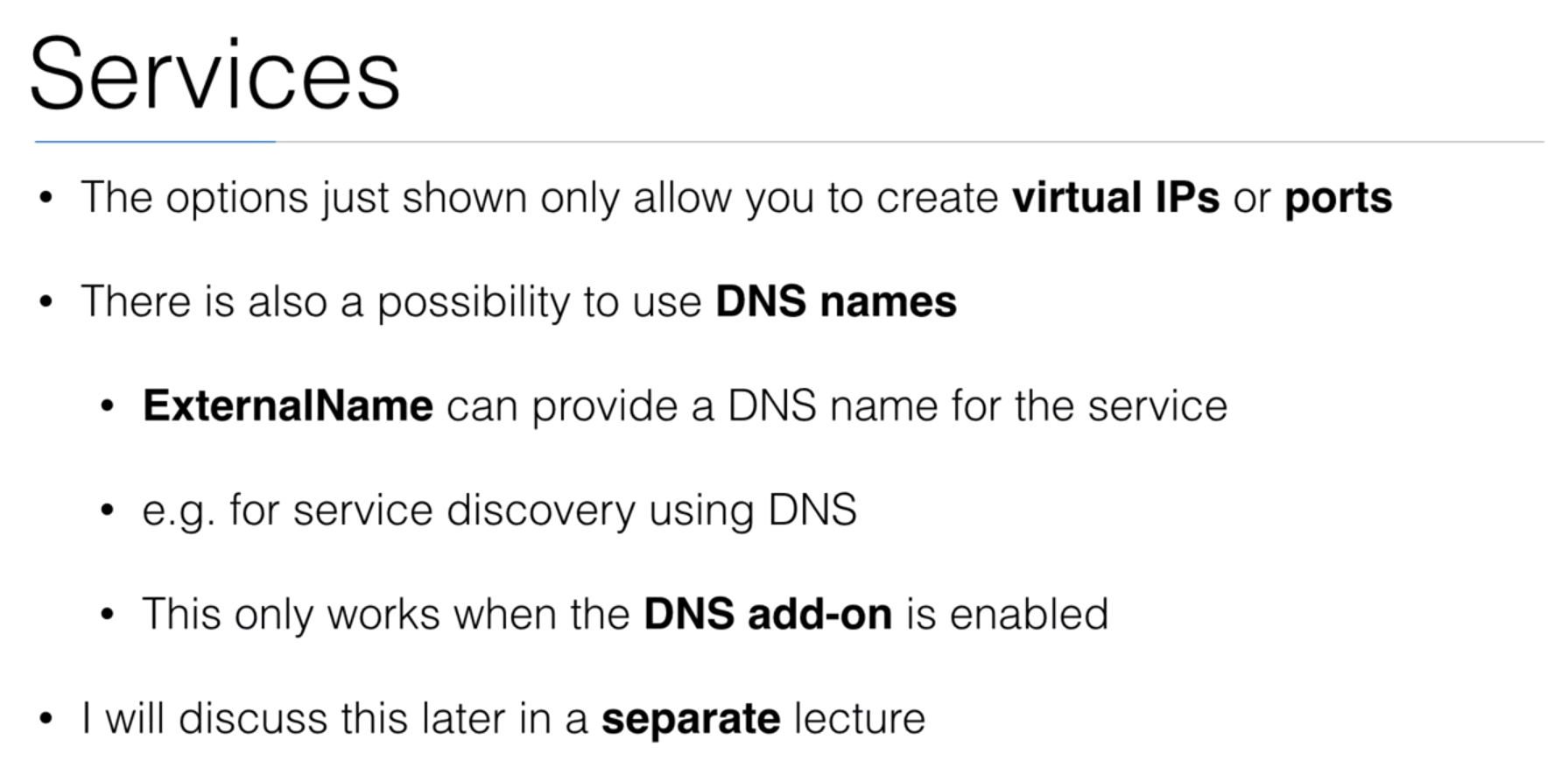

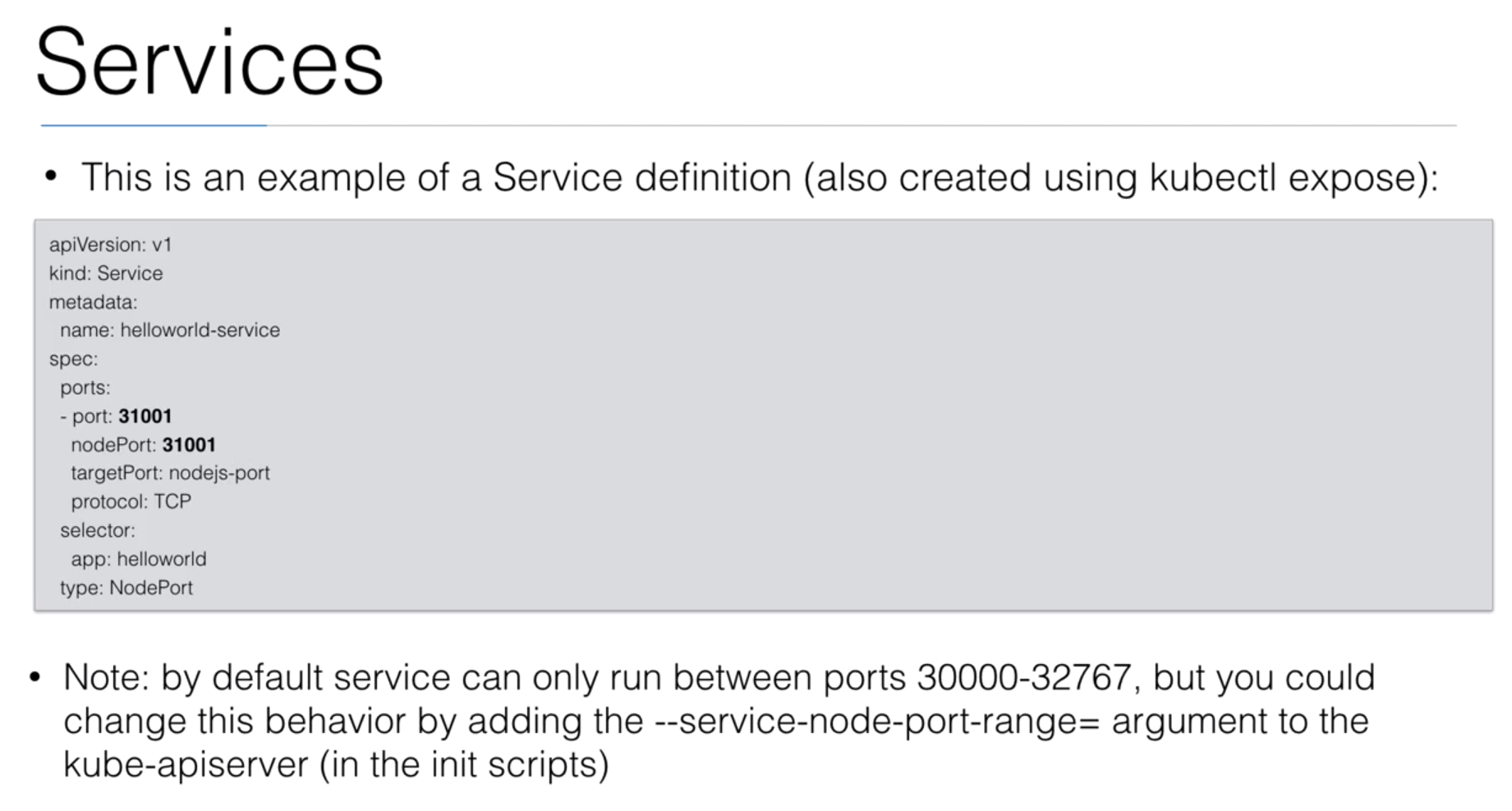

- 31. Services

- 32. Demo: Services

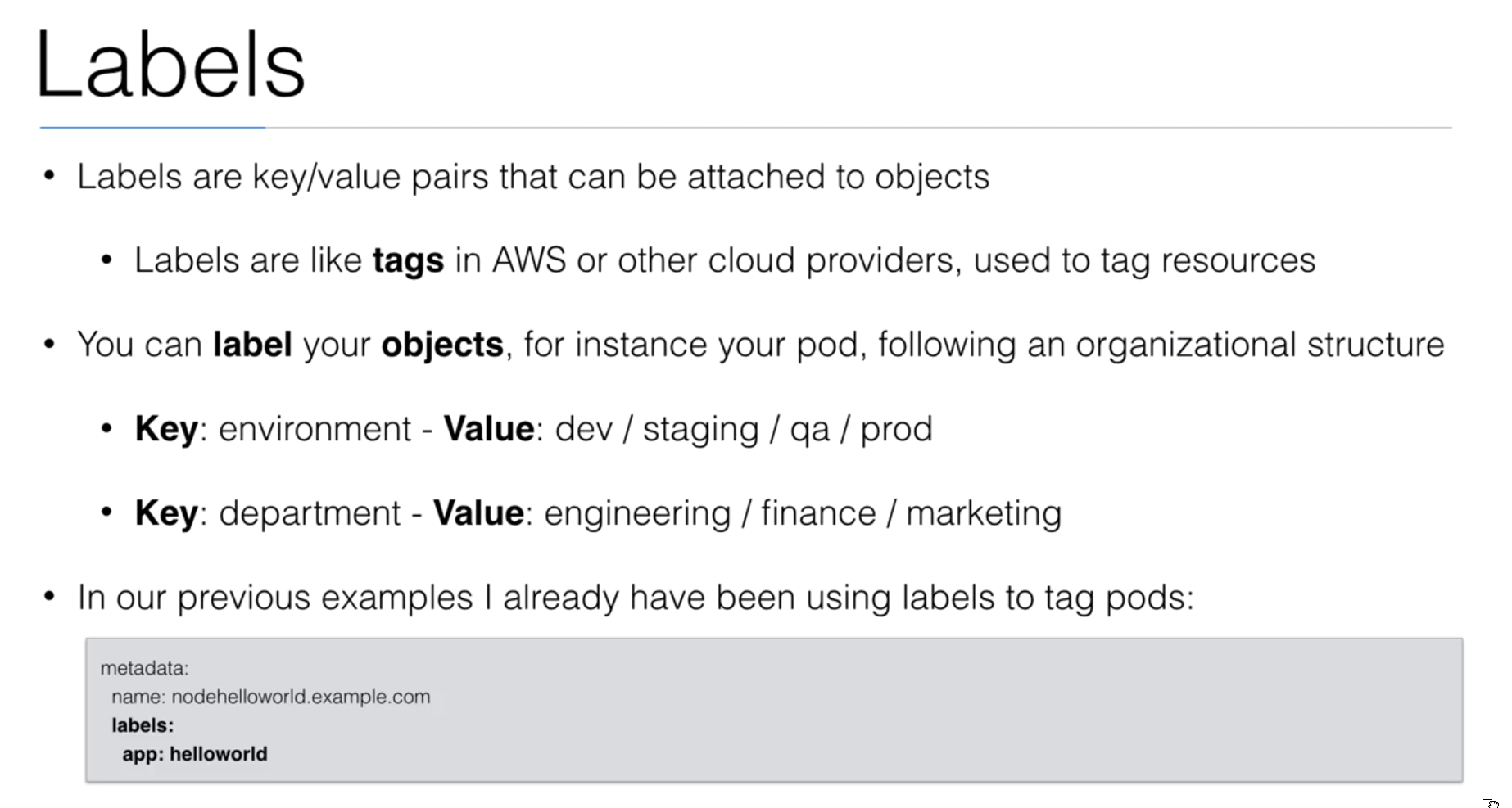

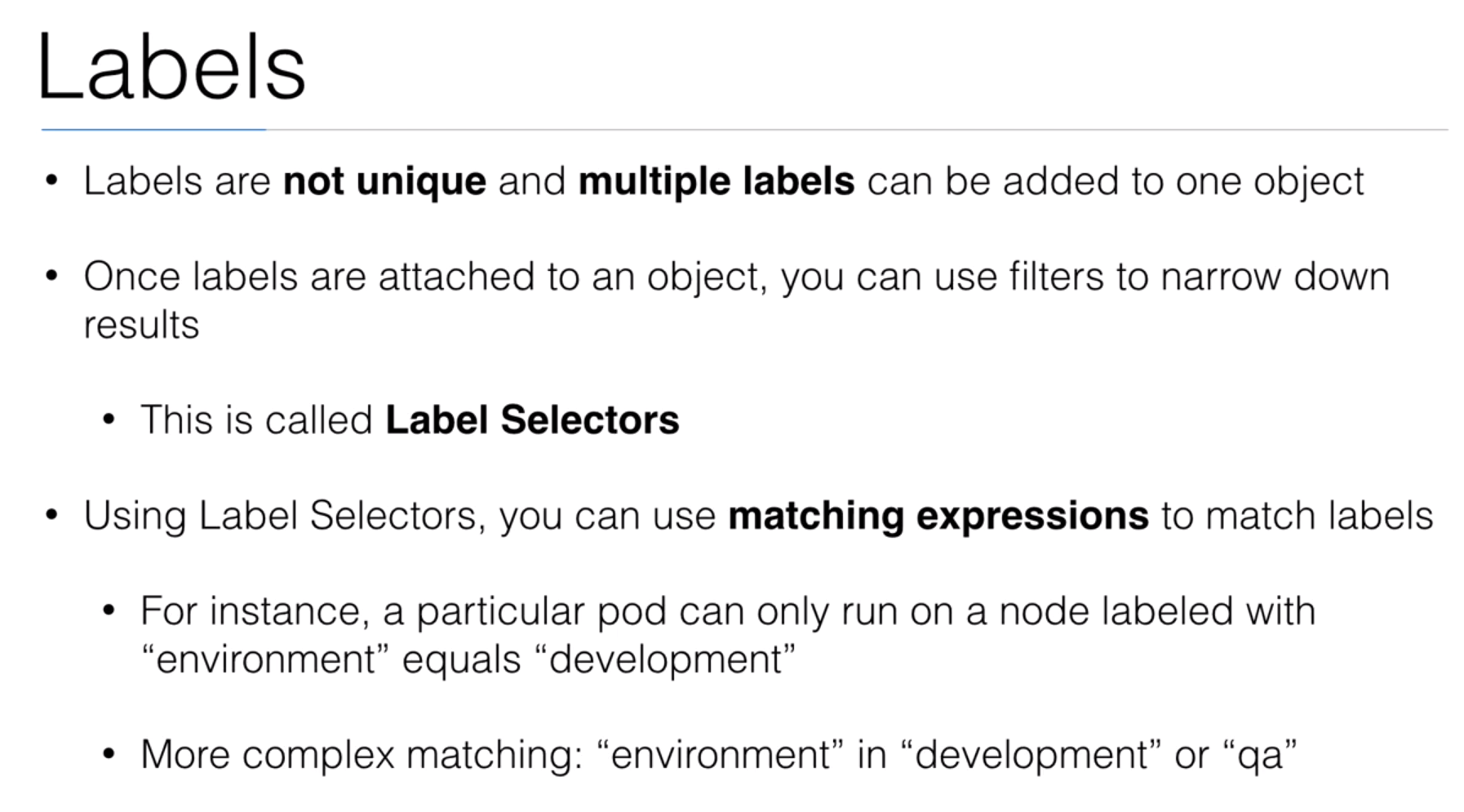

- 33. Labels

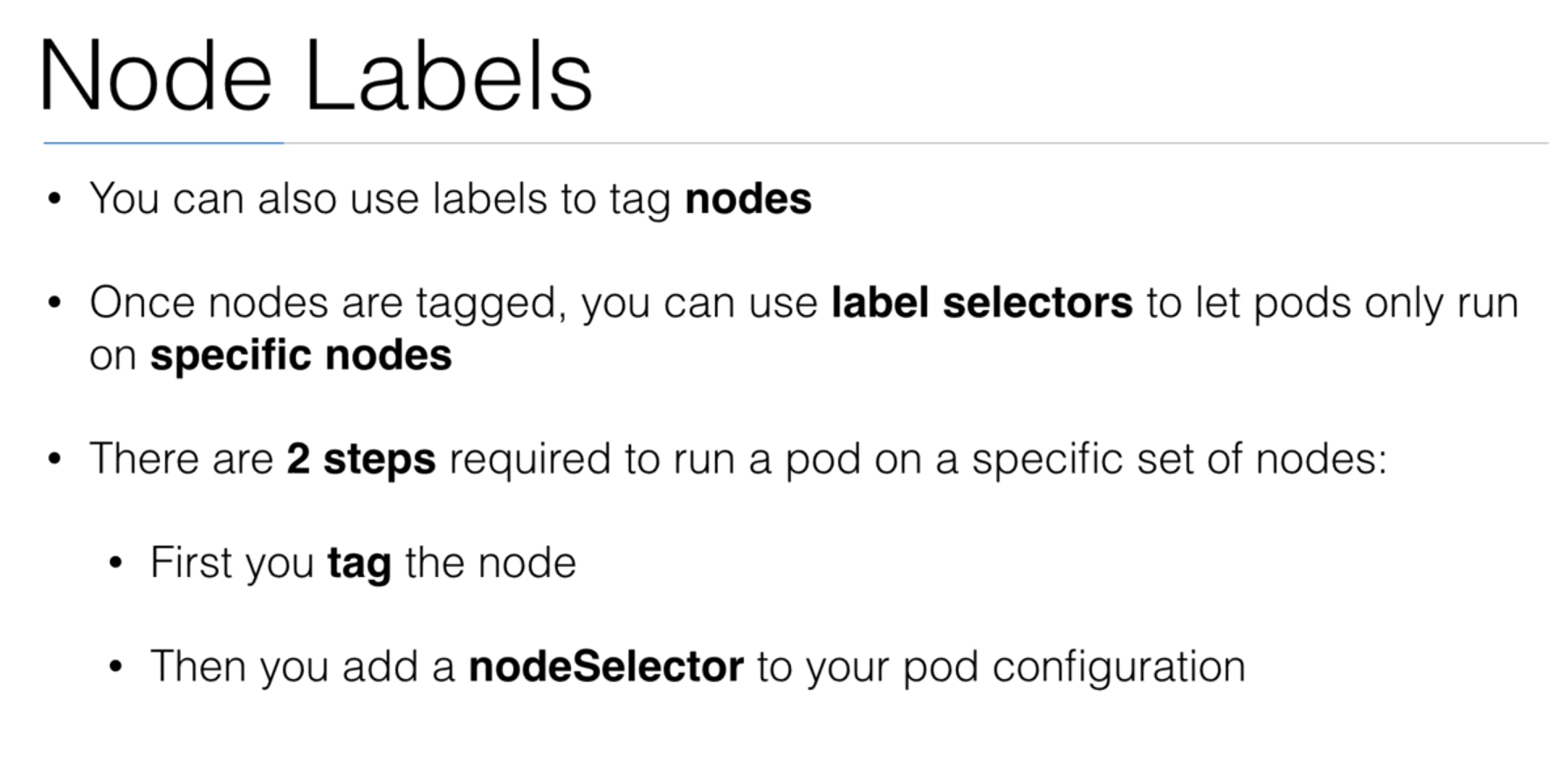

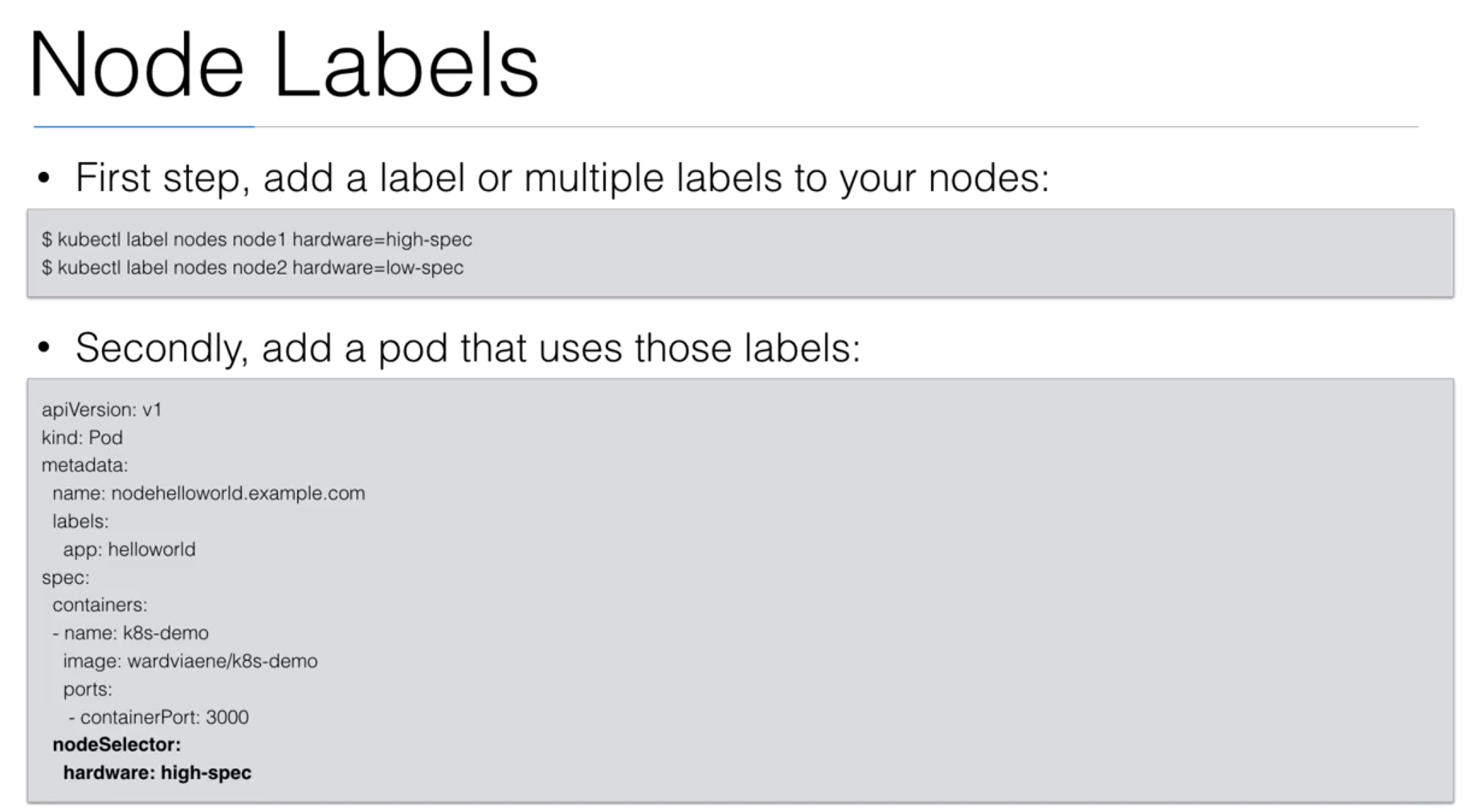

- 34. Demo: NodeSelector using Labels

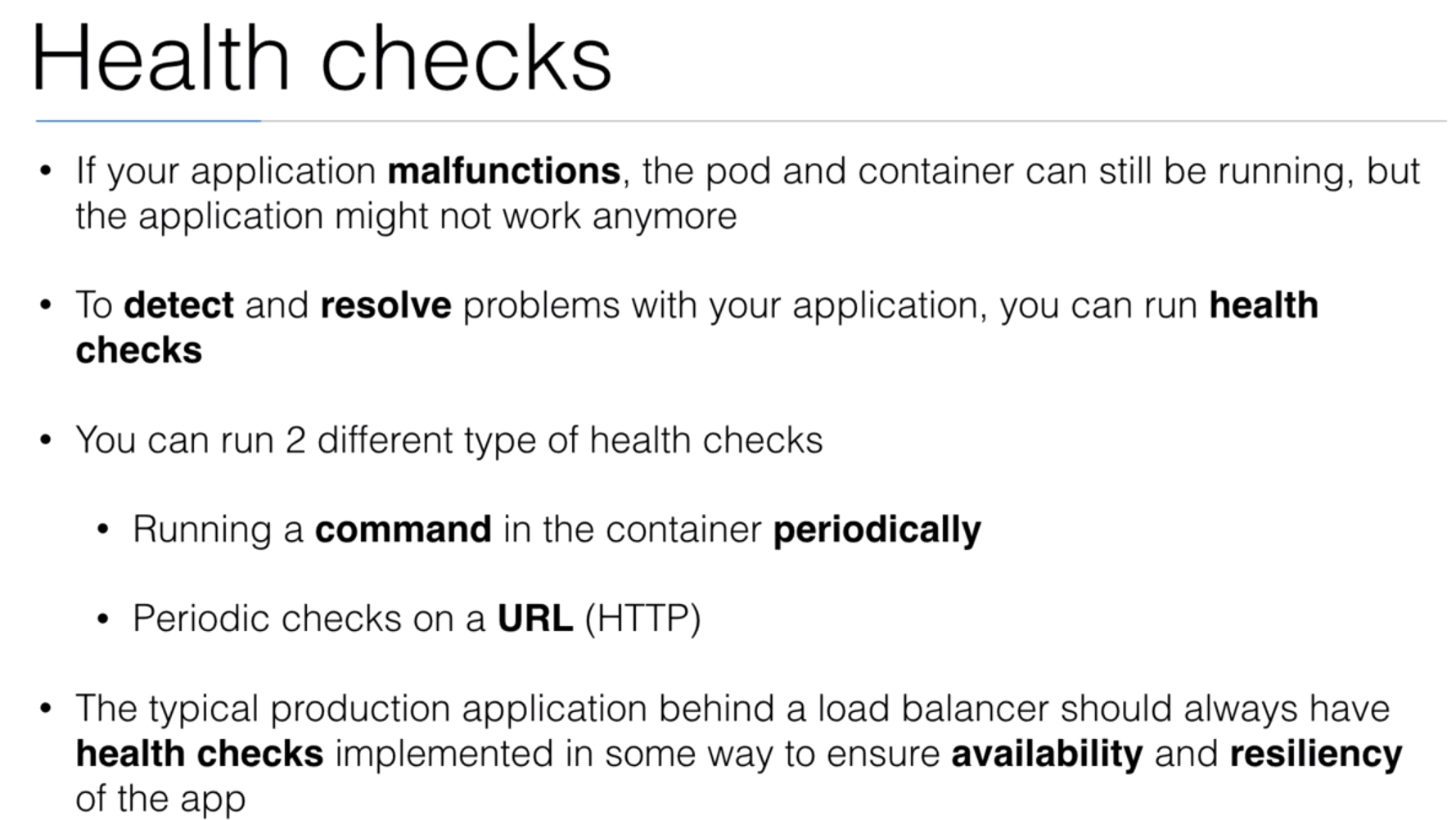

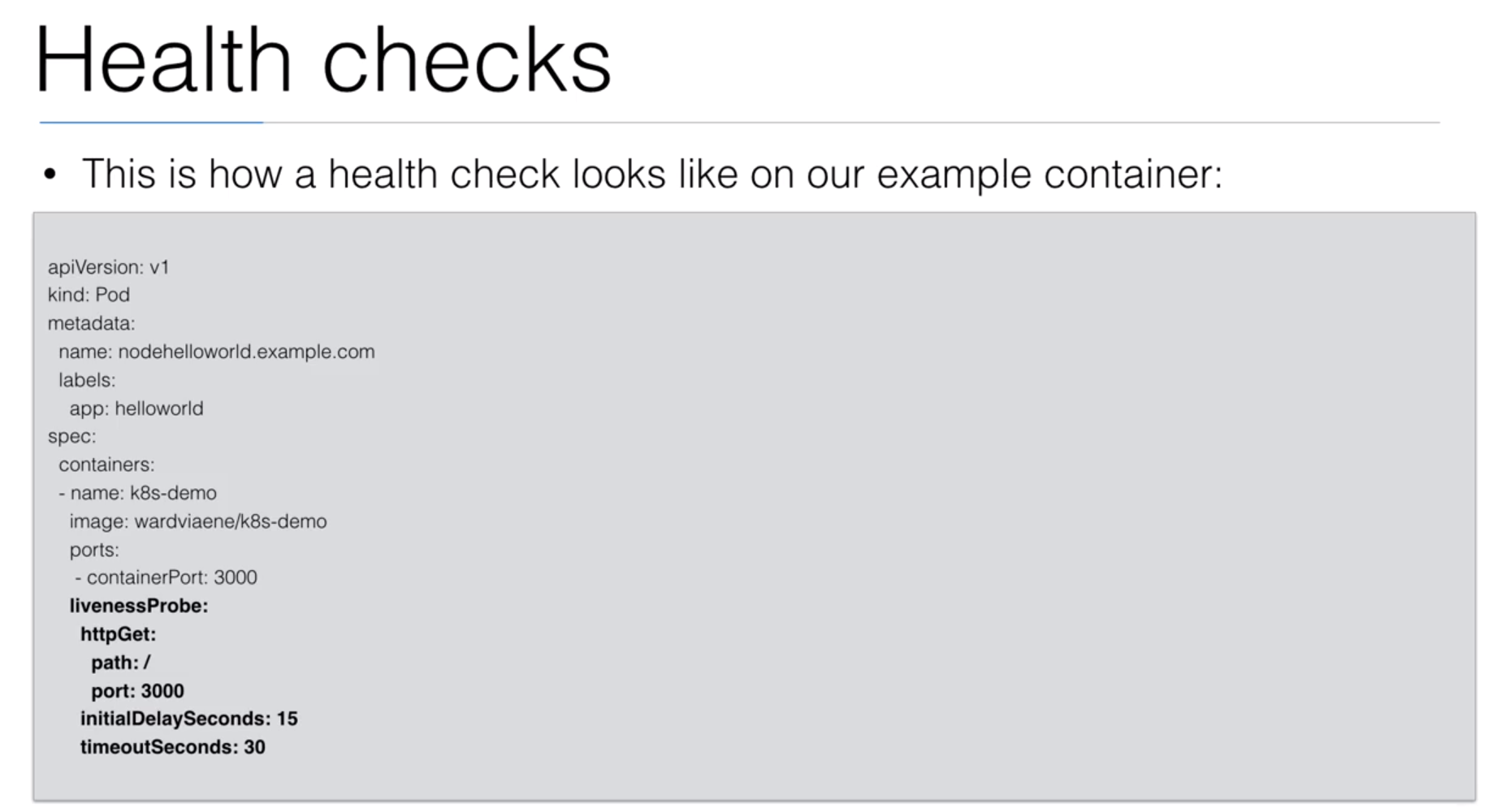

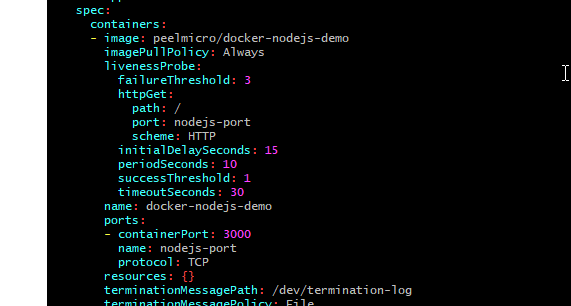

- 35. Healthchecks

- 36. Demo: Healthchecks

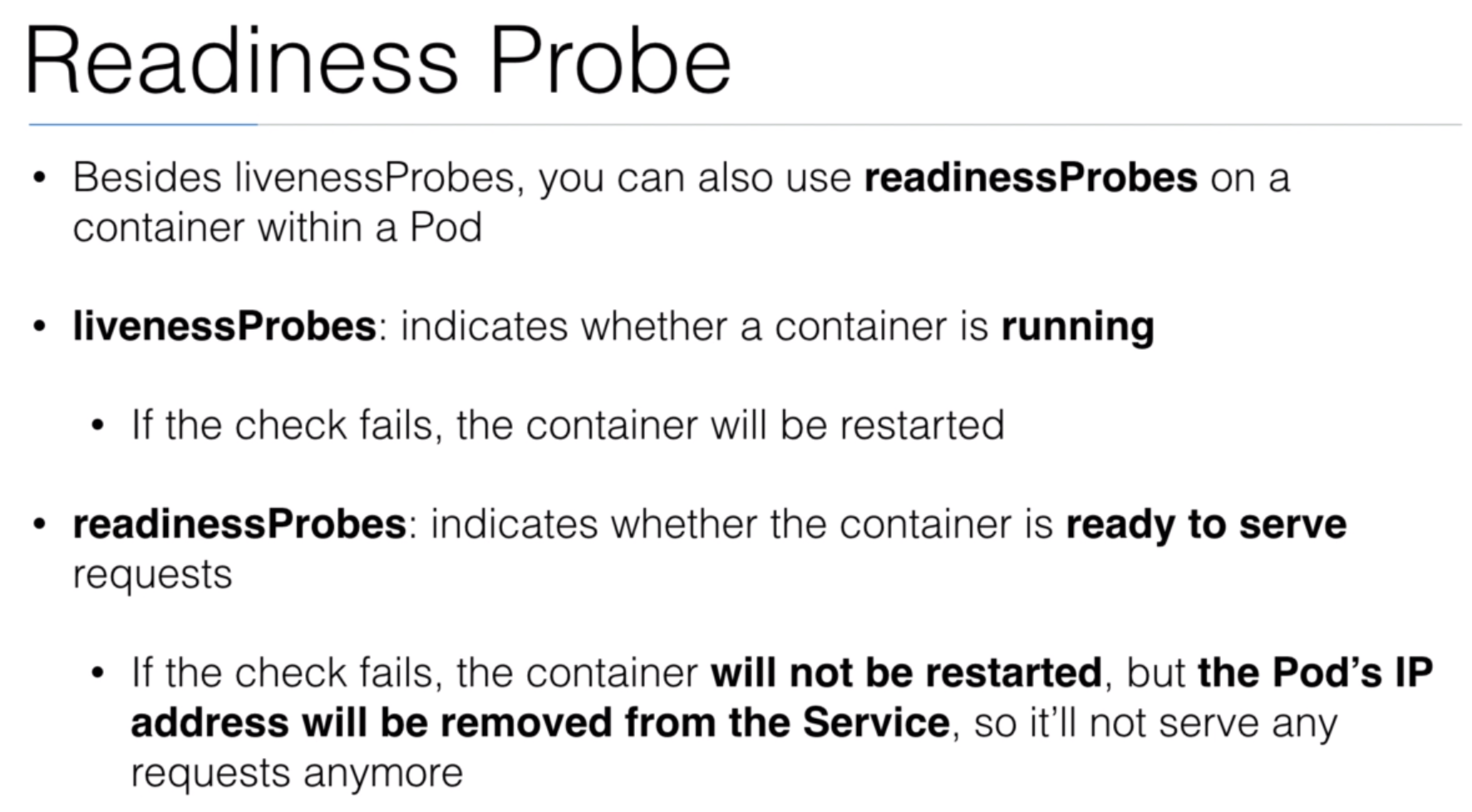

- 37. Readiness Probe

- 38. Demo: Liveness and Readiness probe

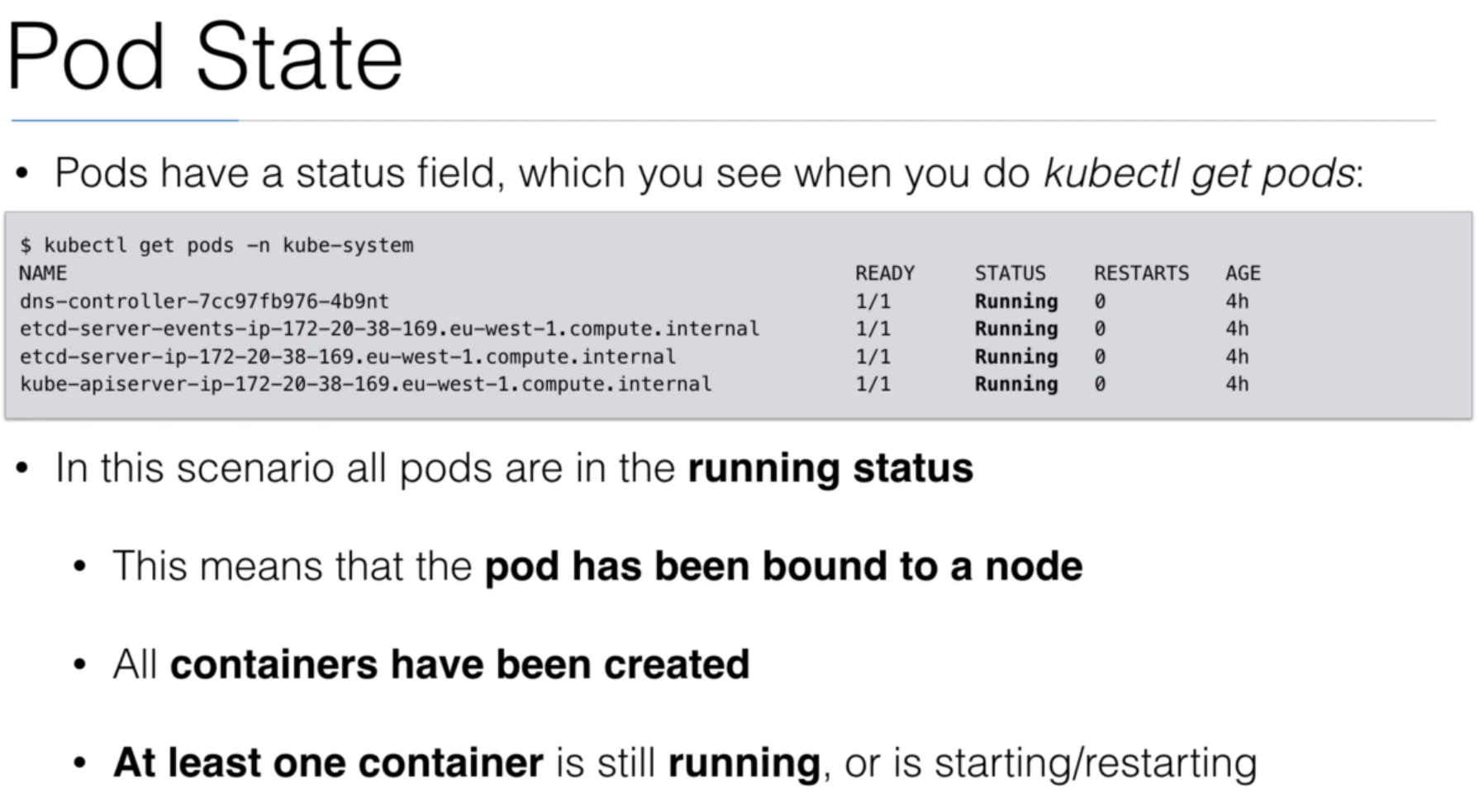

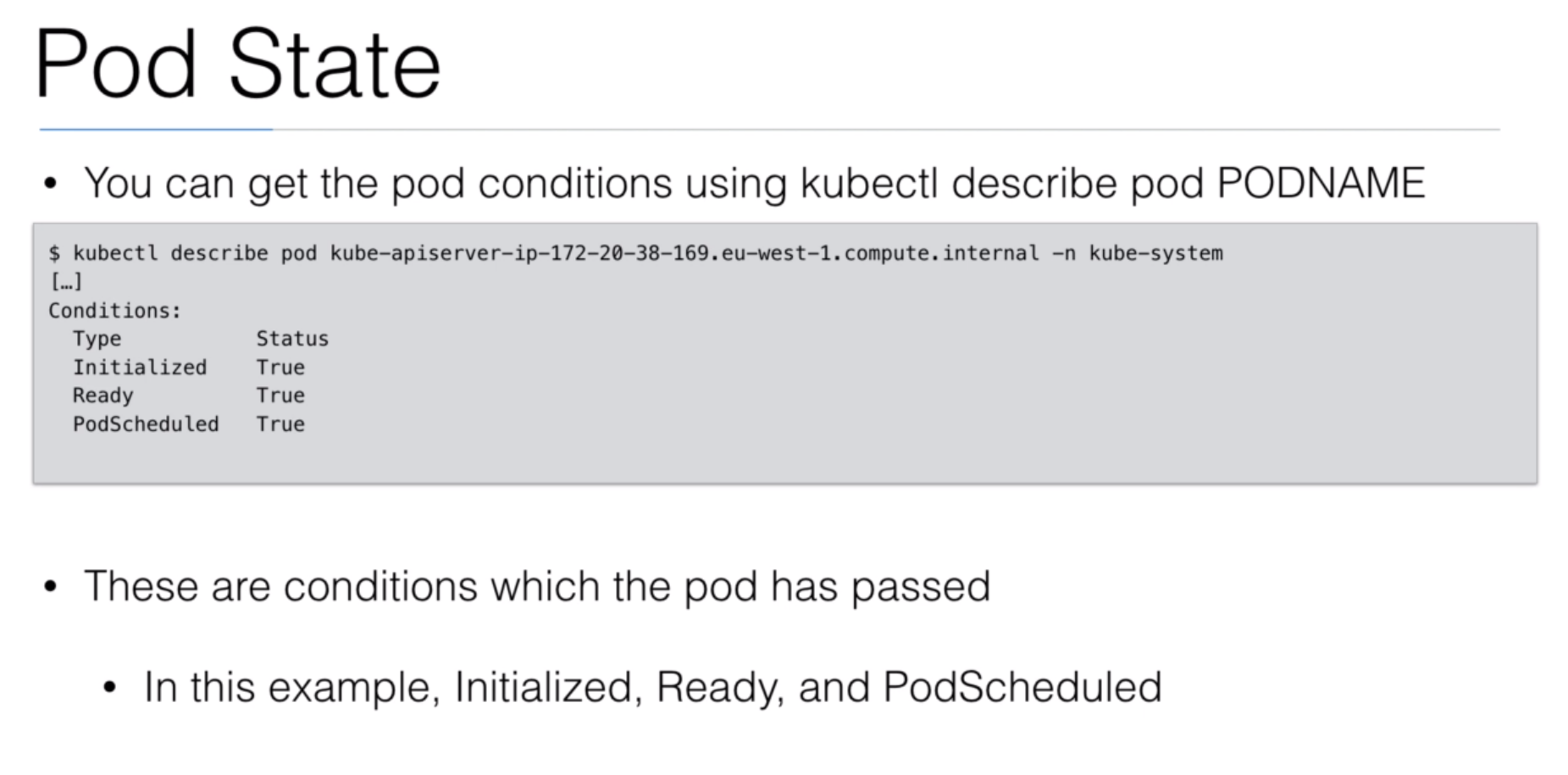

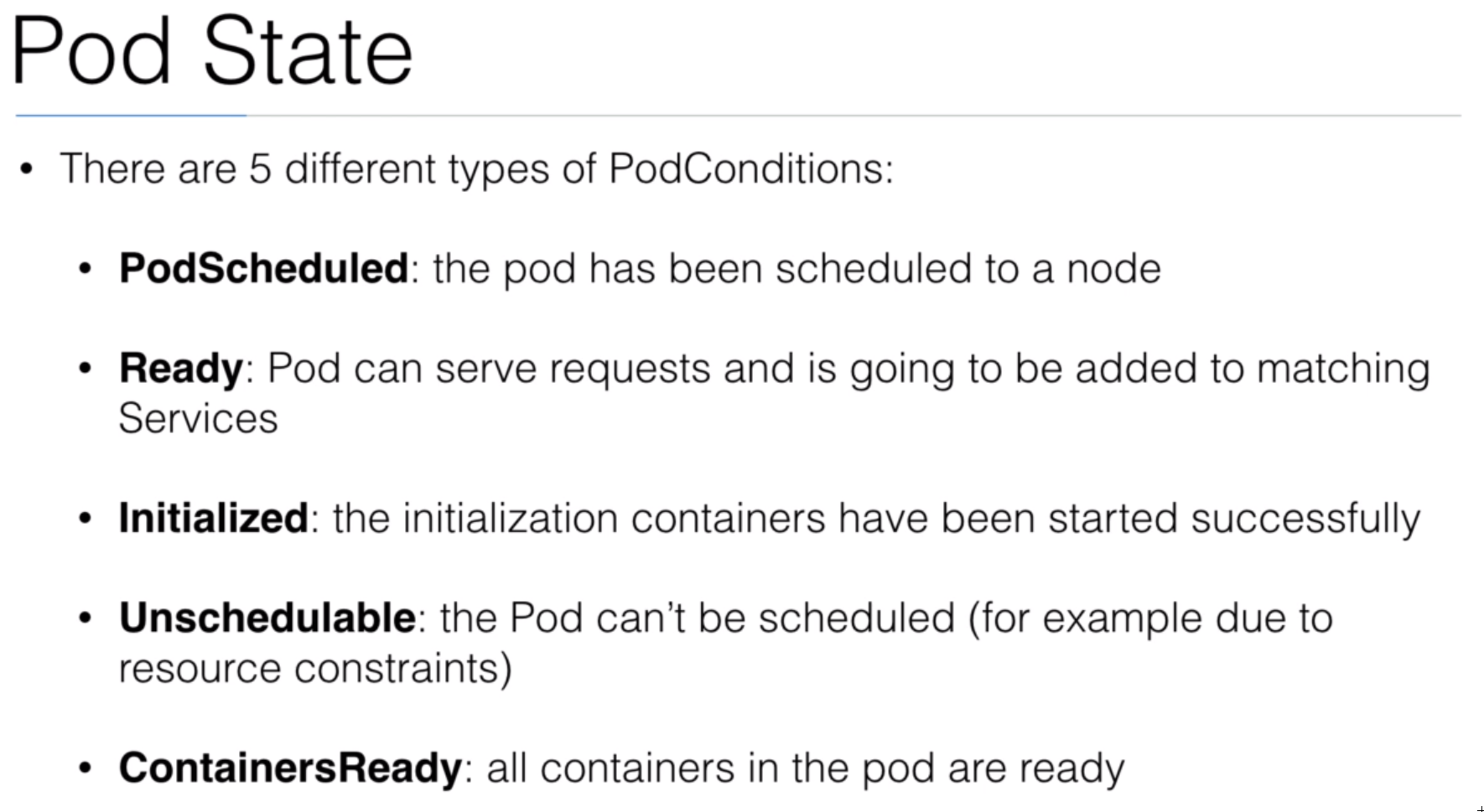

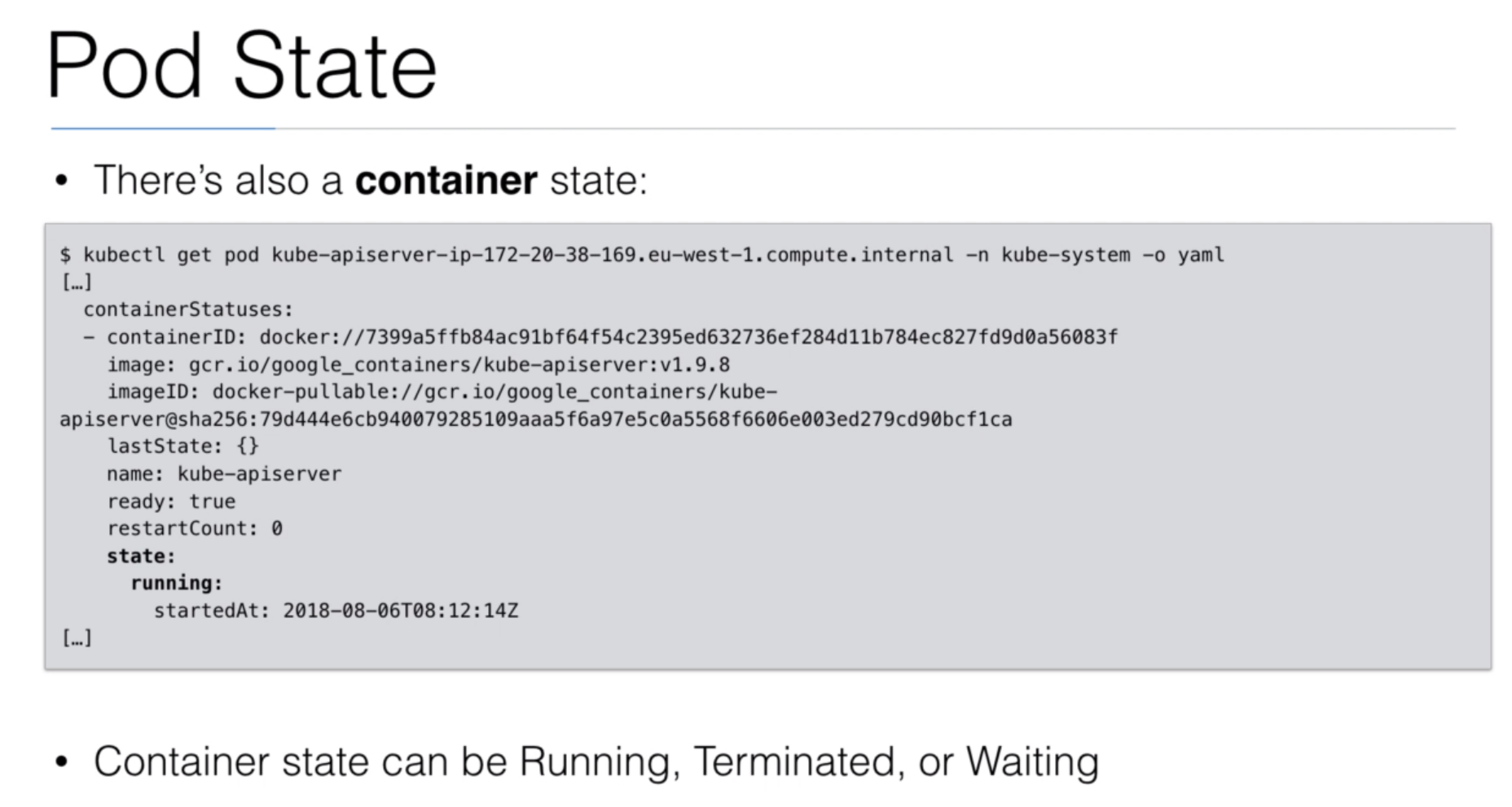

- 39. Pod State

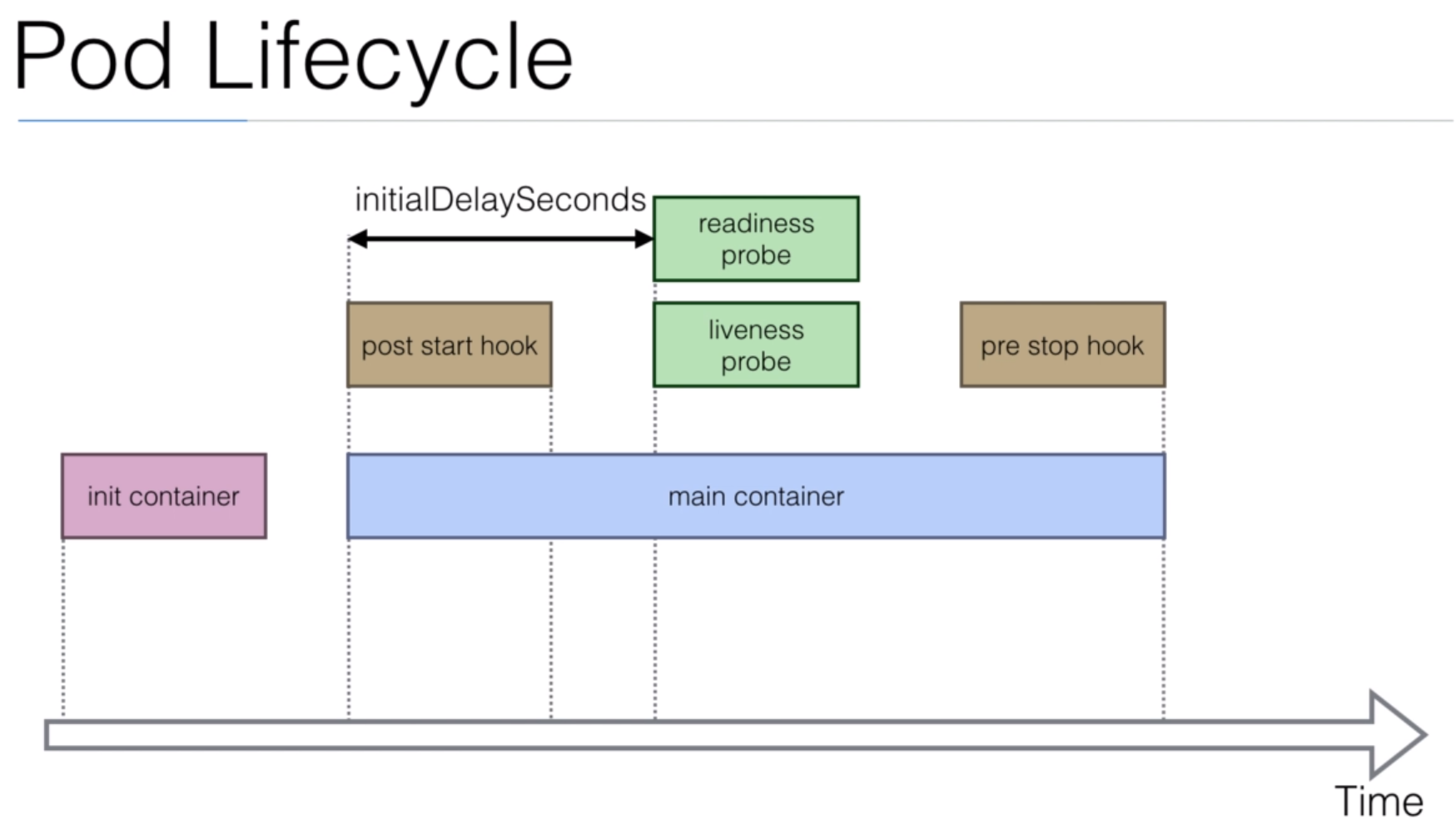

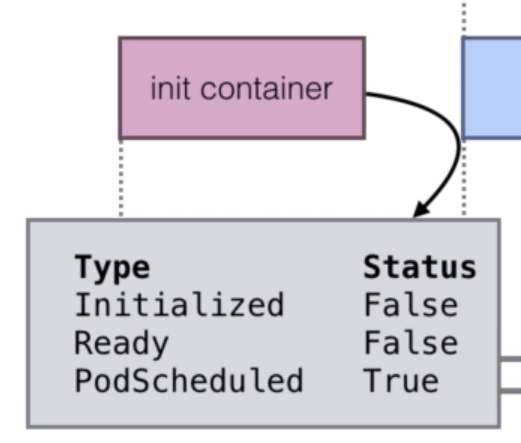

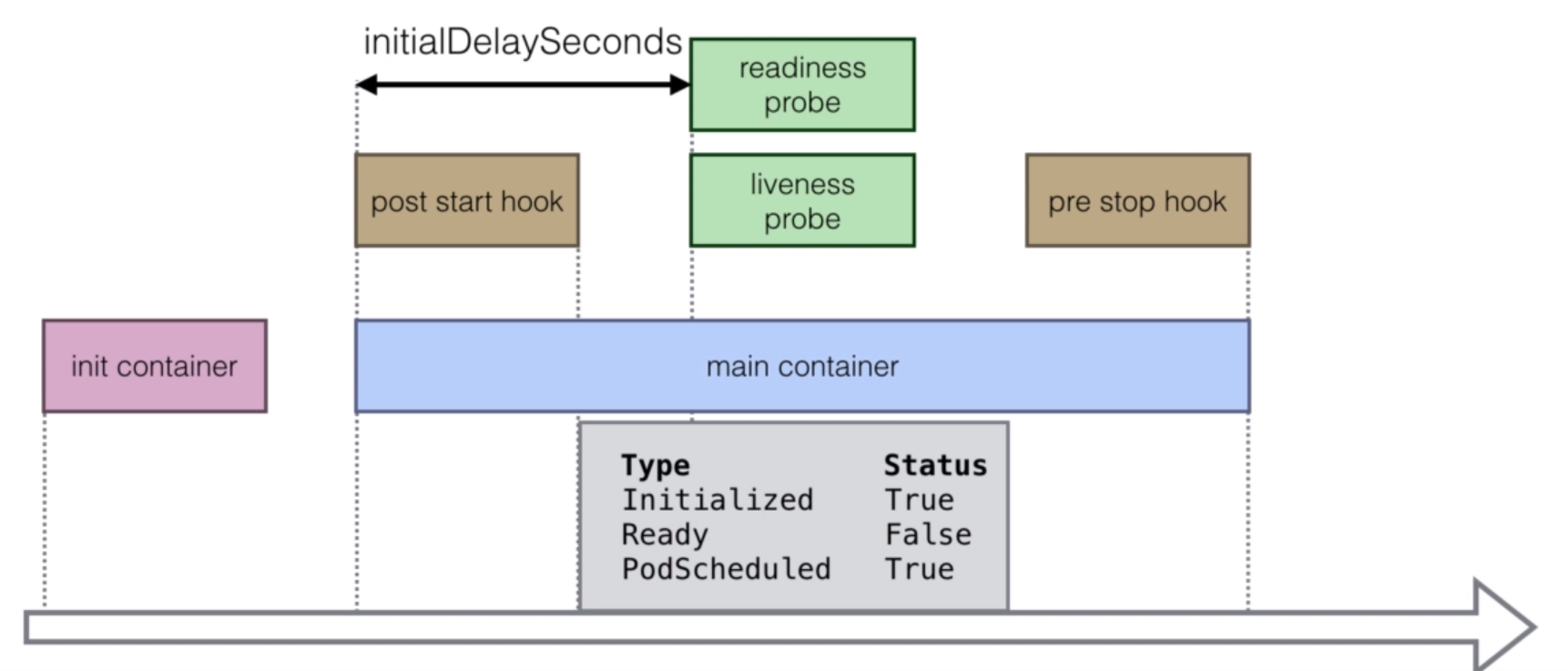

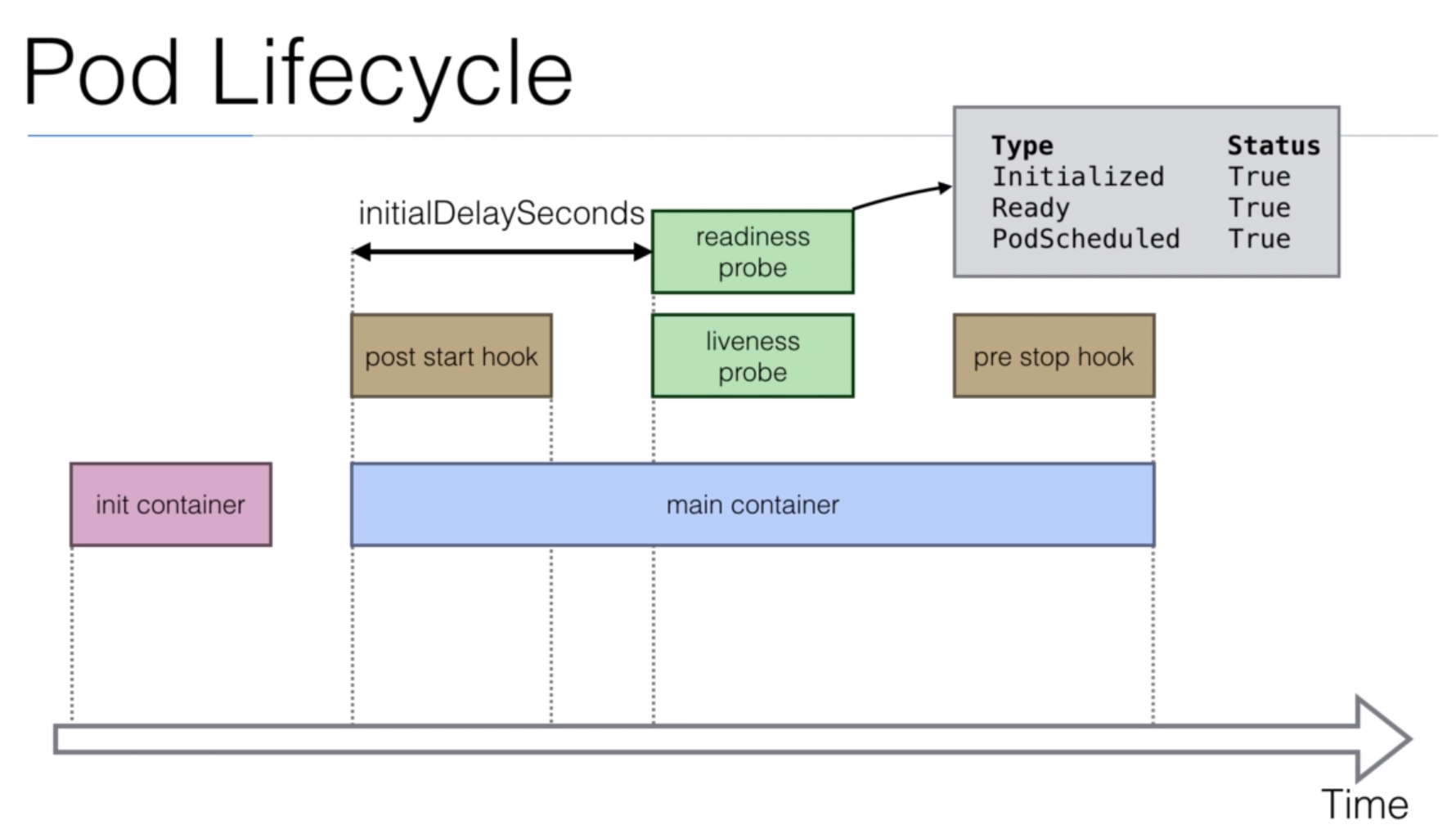

- 40. Pod Lifecycle

What I've learned

- Install and configure Kubernetes (on your laptop/desktop or production grade cluster on AWS)

- Use Docker Client (with Kubernetes), kubeadm, kops, or minikube to set up your cluster

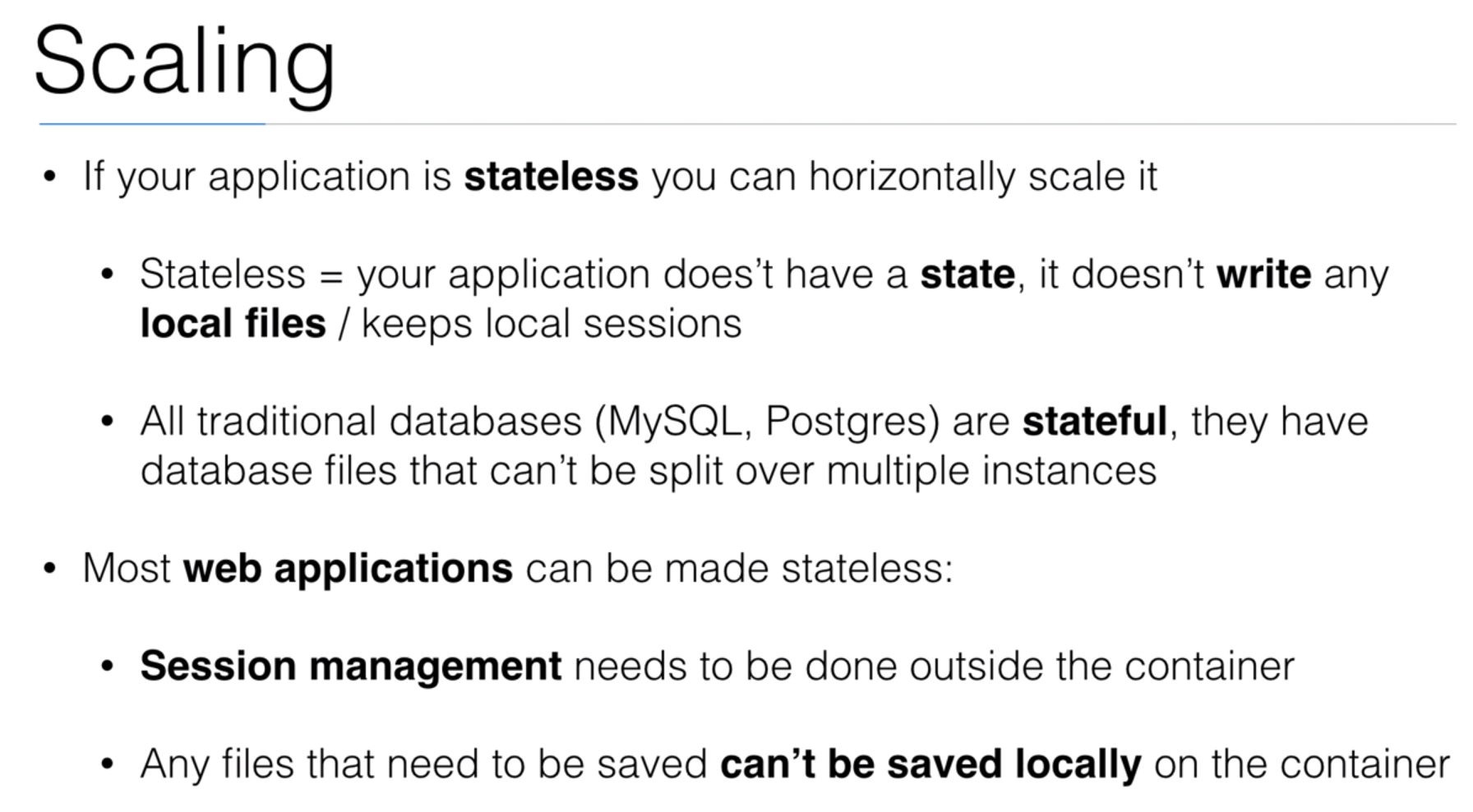

- Be able to run stateless and stateful applications on Kubernetes

- Use Healthchecks, Secrets, ConfigMaps, placement strategies using Node/Pod affinity / anti-affinity

- Use StatefulSets to deploy a Cassandra cluster on Kubernetes

- Add users, set quotas/limits, do node maintenance, set up monitoring

- Use Volumes to provide persistence to your containers

- Be able to scale your apps using metrics

- Package applications with Helm and write your own Helm charts for your applications

- Automatically build and deploy your own Helm Charts using Jenkins

- Install and use kubeless to run functions (Serverless) on Kubernetes

- Install and use Istio to deploy a service mesh on Kubernetes

- Deployment concepts in Kubernetes by using HELM and HELMFILE

Section: 0. Introduction

1. Course Introduction

2. Support and Course Materials

3. Procedure Document

Kubernetes Procedure Document Github repository [Read this first]

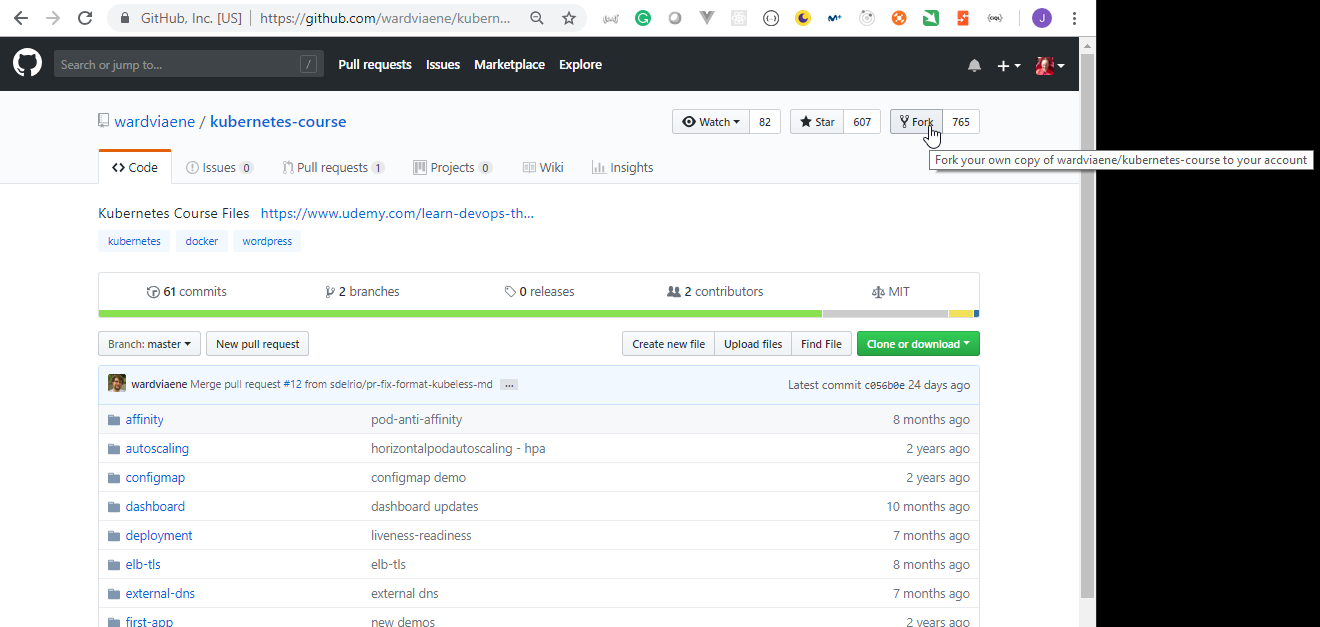

Download all the course material from: https://github.com/wardviaene/kubernetes-course

Kubernetes releases minor version updates of its distribution every 3 months

Rather than updating the scripts in the video lectures, the repository on GitHub is updated whenever a script needs changes

The changes are usually very minor because the API is very stable. Typically an API version such as v1betaX changes to v1betaX+1 or to v1 (stable)

All the scripts in the repository should work with the latest version of Kubernetes. If you have any issues, contact me through one of the channels listed below

Slides

The slides can be downloaded from: https://d3jb1lt6v0nddd.cloudfront.net/udemy/Learn+DevOps+-+Kubernetes.pdf

Questions?

Send me a message

Use Q&A

Join our facebook group: https://www.facebook.com/groups/840062592768074/

Download Kubectl

Linux: https://storage.googleapis.com/kubernetes-release/release/v1.11.0/bin/linux/amd64/kubectl

MacOS: https://storage.googleapis.com/kubernetes-release/release/v1.11.0/bin/darwin/amd64/kubectl

Windows: https://storage.googleapis.com/kubernetes-release/release/v1.11.0/bin/windows/amd64/kubectl.exe

Or use a packaged version for your OS: see https://kubernetes.io/docs/tasks/tools/install-kubectl/

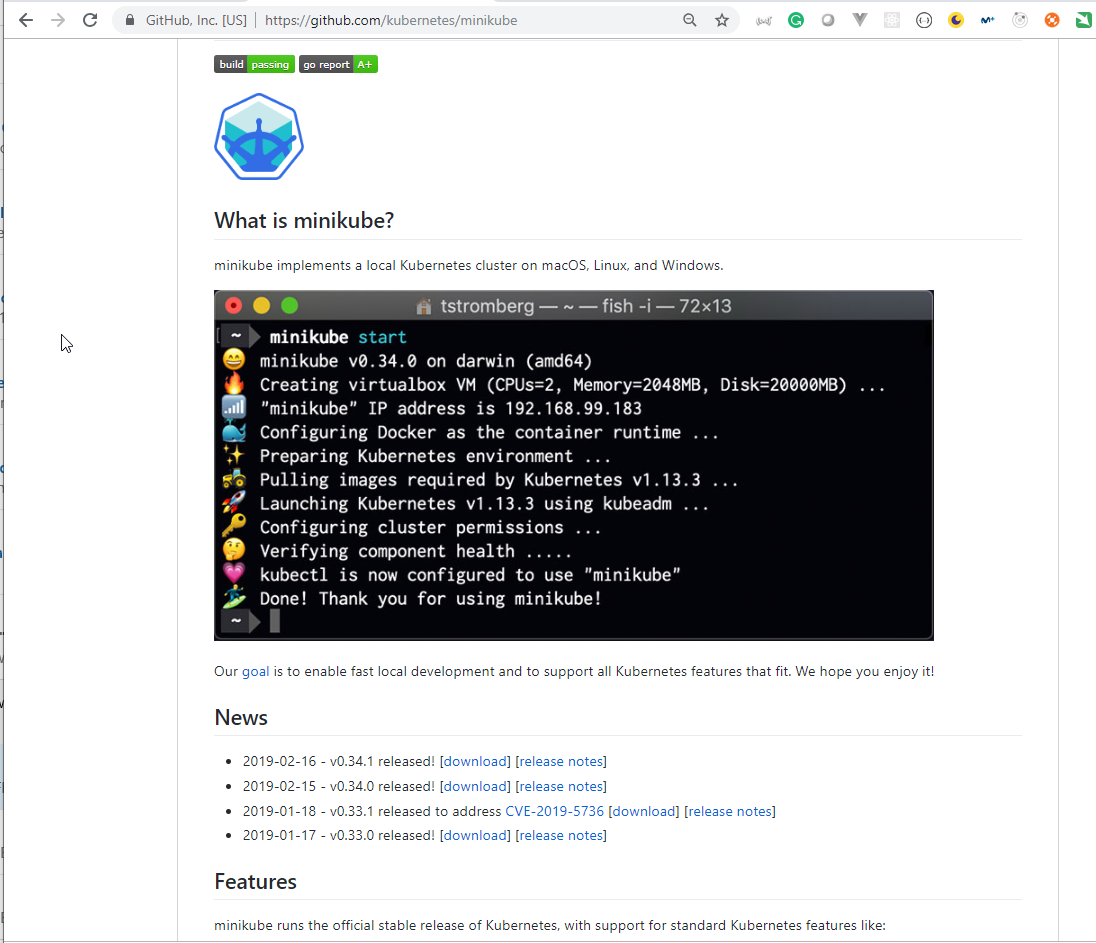

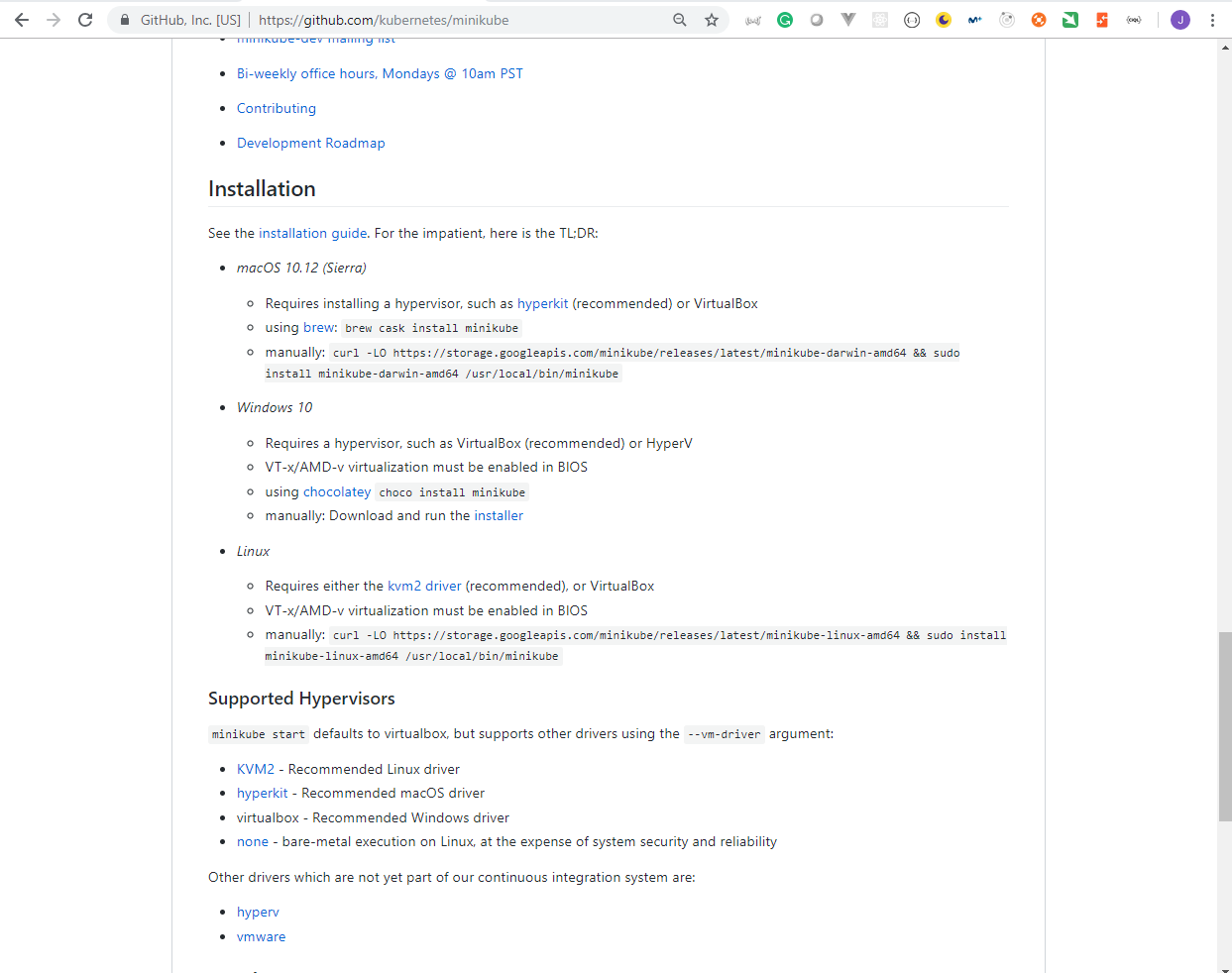

Minikube

Project URL: https://github.com/kubernetes/minikube

Latest Release and download instructions: https://github.com/kubernetes/minikube/releases

VirtualBox: http://www.virtualbox.org

Minikube on windows:

Download the latest minikube-version.exe

Rename the file to minikube.exe and put it in C:\minikube

Open a cmd (search for the app cmd or powershell)

Run: cd C:\minikube and enter minikube start

Test your cluster commands

Make sure your cluster is running; you can check with minikube status.

If your cluster is not running, enter minikube start first.

kubectl run hello-minikube --image=k8s.gcr.io/echoserver:1.4 --port=8080

kubectl expose deployment hello-minikube --type=NodePort

minikube service hello-minikube --url

Open a browser and go to that URL

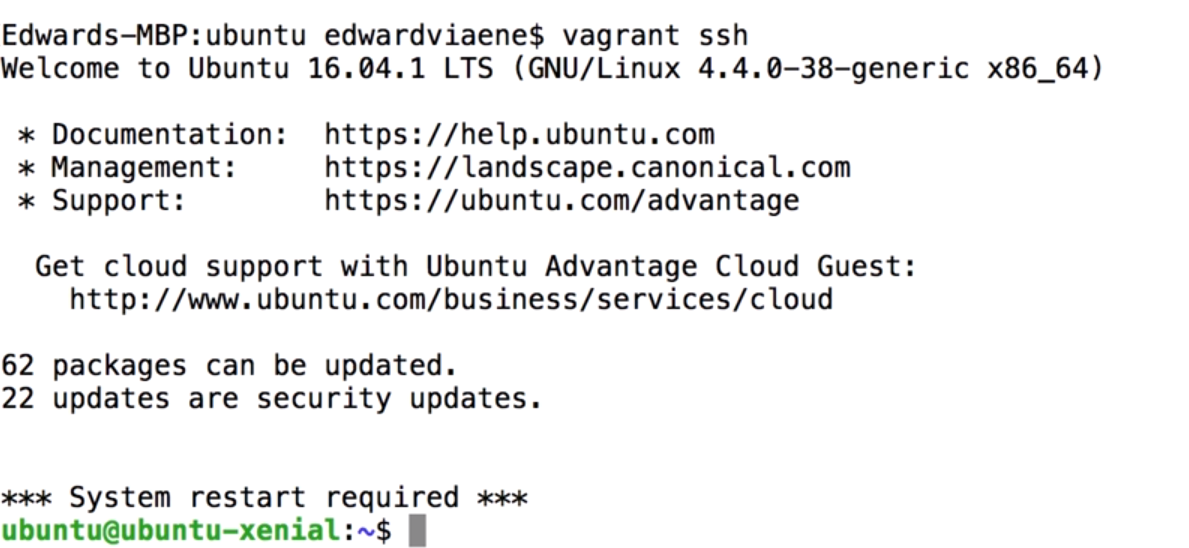

Kops Project URL

- https://github.com/kubernetes/kops

Free DNS Service

Sign up at http://freedns.afraid.org/

Choose subdomain hosting

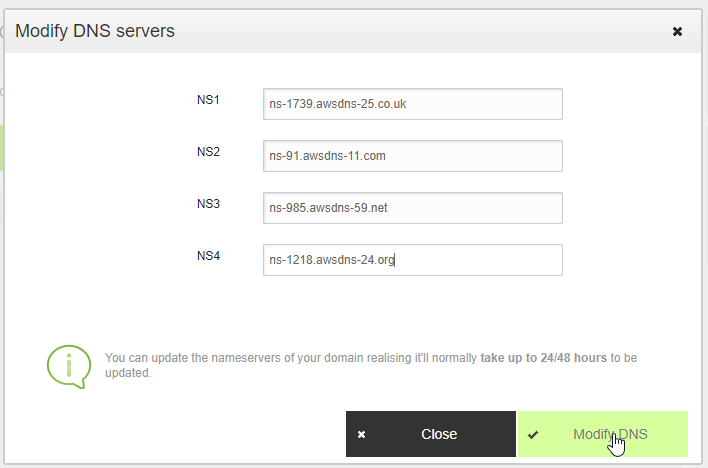

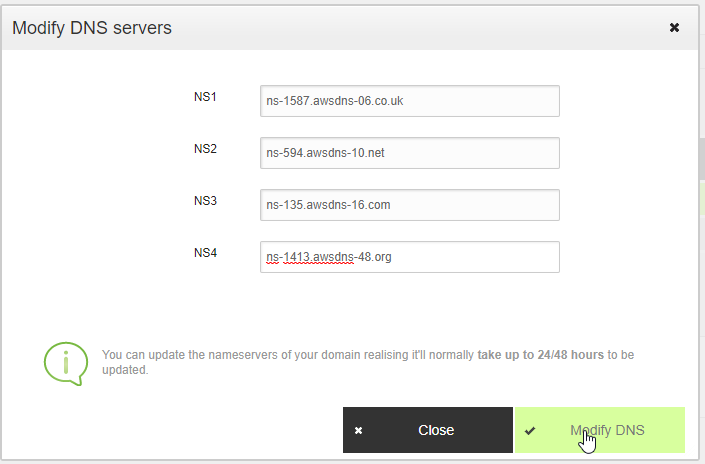

Enter the AWS nameservers given to you in Route 53 as nameservers for the subdomain

http://www.dot.tk/ provides a free .tk domain name that you can point to the AWS nameservers

Namecheap.com often has promotions for TLDs like .co for just a couple of bucks

Cluster Commands

kops create cluster --name=kubernetes.newtech.academy --state=s3://kops-state-b429b --zones=eu-west-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=kubernetes.newtech.academy

kops update cluster kubernetes.newtech.academy --yes --state=s3://kops-state-b429b

kops delete cluster --name kubernetes.newtech.academy --state=s3://kops-state-b429b

kops delete cluster --name kubernetes.newtech.academy --state=s3://kops-state-b429b --yes
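The commands above repeat the cluster name and the state bucket each time. A minimal sketch of the same workflow with both values held in variables follows. The variable names (CLUSTER_NAME, STATE, create_cmd and so on) are illustrative, not part of kops, and the commands are only printed here rather than executed:

```shell
# Illustrative variable names; the values are the ones used in the commands above.
CLUSTER_NAME='kubernetes.newtech.academy'
STATE='s3://kops-state-b429b'

# Build each command as a string so it can be reviewed before it is run.
create_cmd="kops create cluster --name=${CLUSTER_NAME} --state=${STATE} --zones=eu-west-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=${CLUSTER_NAME}"
update_cmd="kops update cluster ${CLUSTER_NAME} --yes --state=${STATE}"
delete_cmd="kops delete cluster --name ${CLUSTER_NAME} --state=${STATE} --yes"

# Dry run: print the commands instead of executing them.
printf '%s\n' "$create_cmd" "$update_cmd" "$delete_cmd"
```

According to the kops help text, the --state flag overrides the KOPS_STATE_STORE environment variable, so exporting that variable once is an alternative to passing --state on every call.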

Kubernetes from scratch

You can set up your cluster manually from scratch

If you’re planning to deploy on AWS / Google / Azure, use the tools that are fit for these platforms

If you have an unsupported cloud platform, and you still want Kubernetes, you can install it manually

CoreOS + Kubernetes: https://coreos.com/kubernetes/docs/latest/getting-started.html

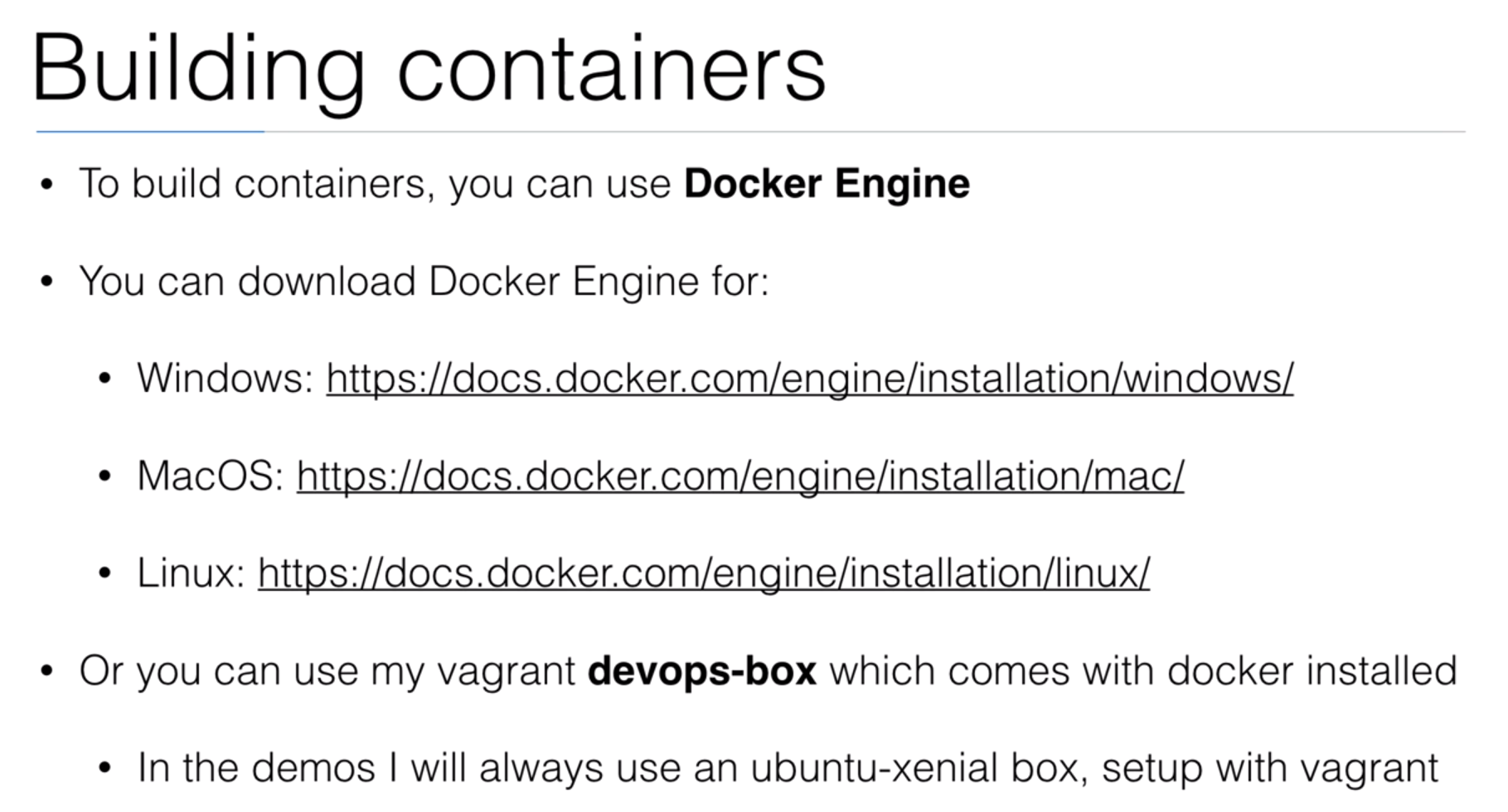

Docker

You can download Docker Engine for:

Windows: https://docs.docker.com/engine/installation/windows/

MacOS: https://docs.docker.com/engine/installation/mac/

Linux: https://docs.docker.com/engine/installation/linux/

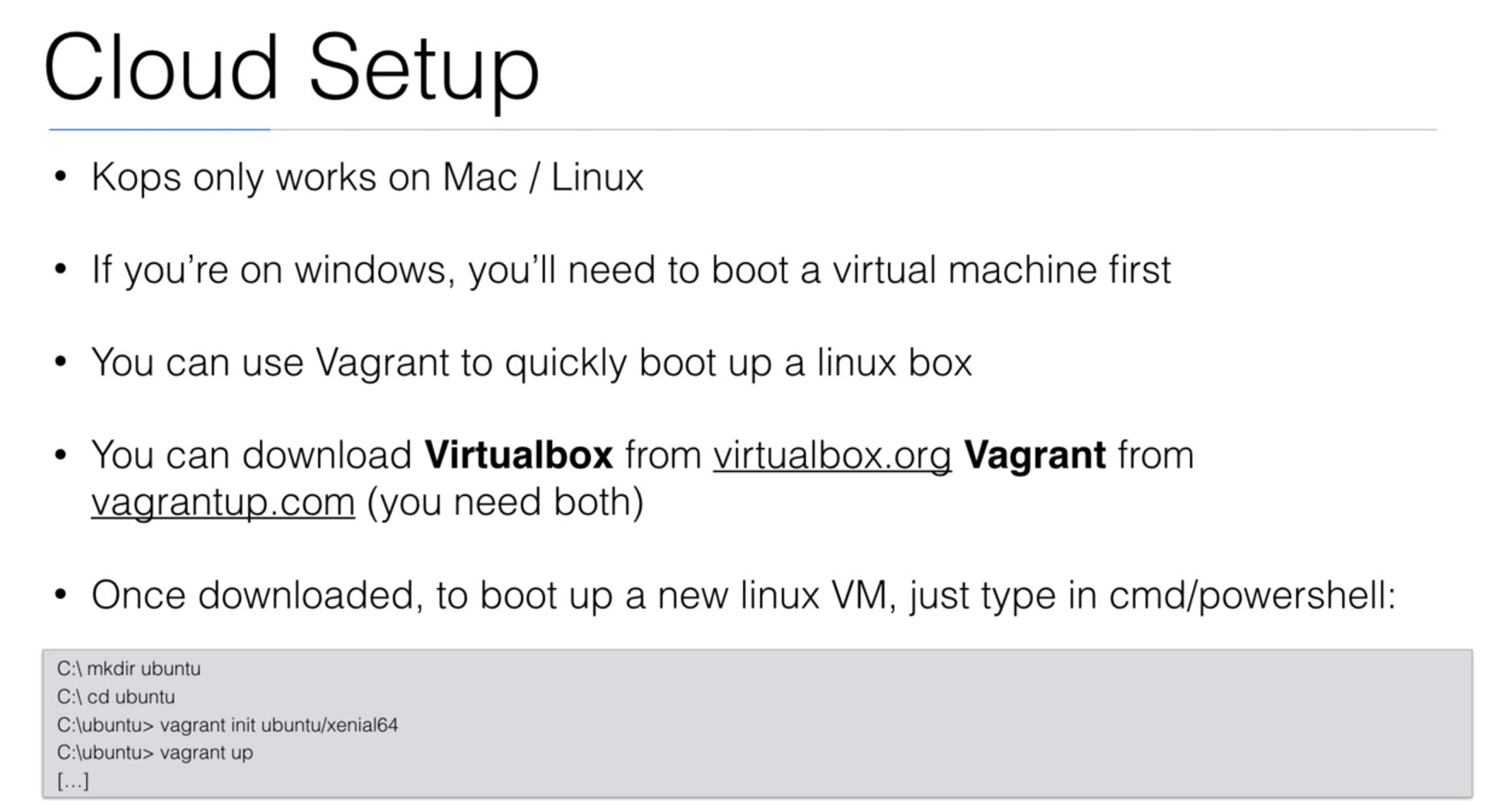

DevOps box

Virtualbox: http://www.virtualbox.org

Vagrant: http://www.vagrantup.com

DevOps box: https://github.com/wardviaene/devops-box

Launch commands (in terminal / cmd / powershell):

cd devops-box/

vagrant up

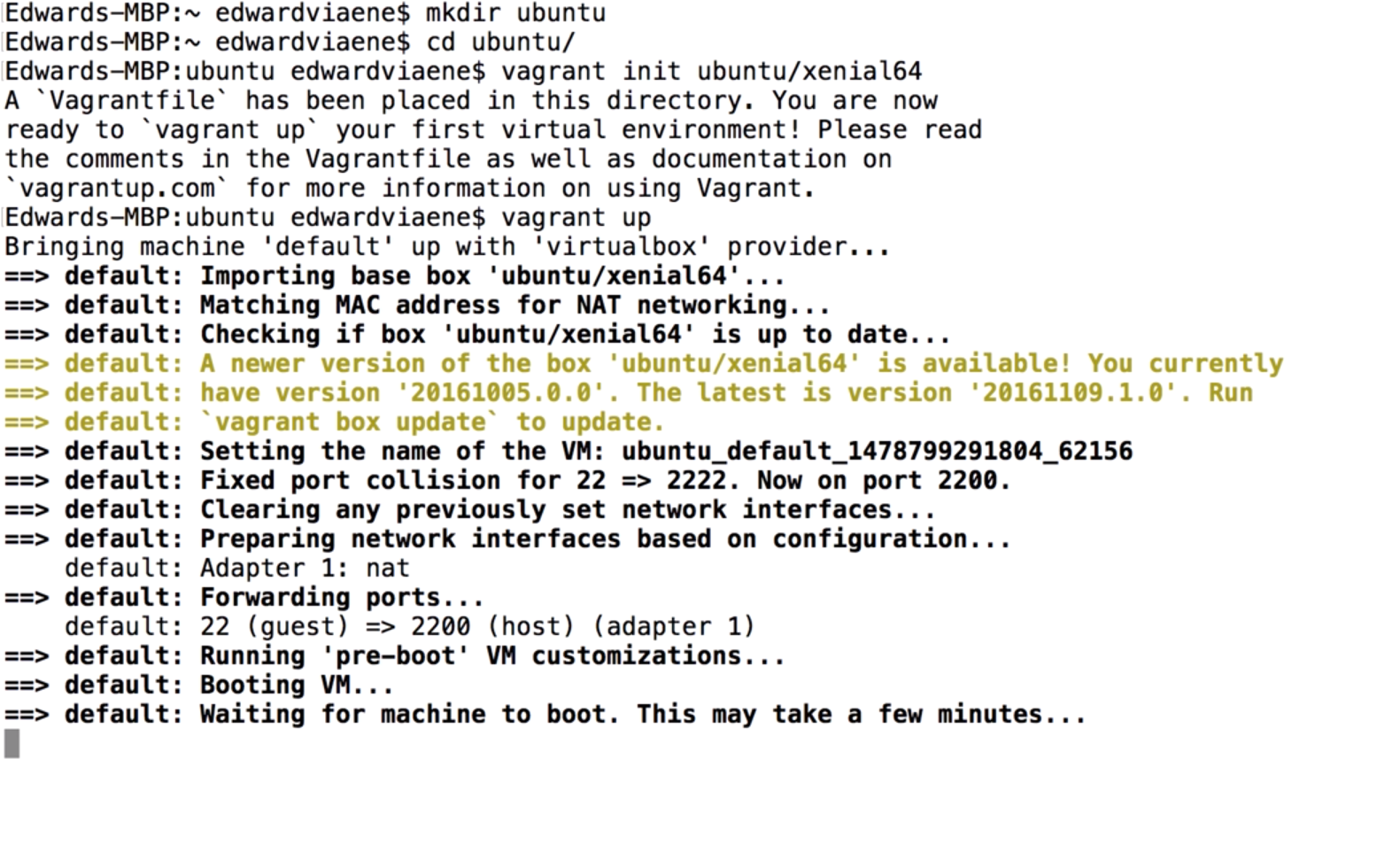

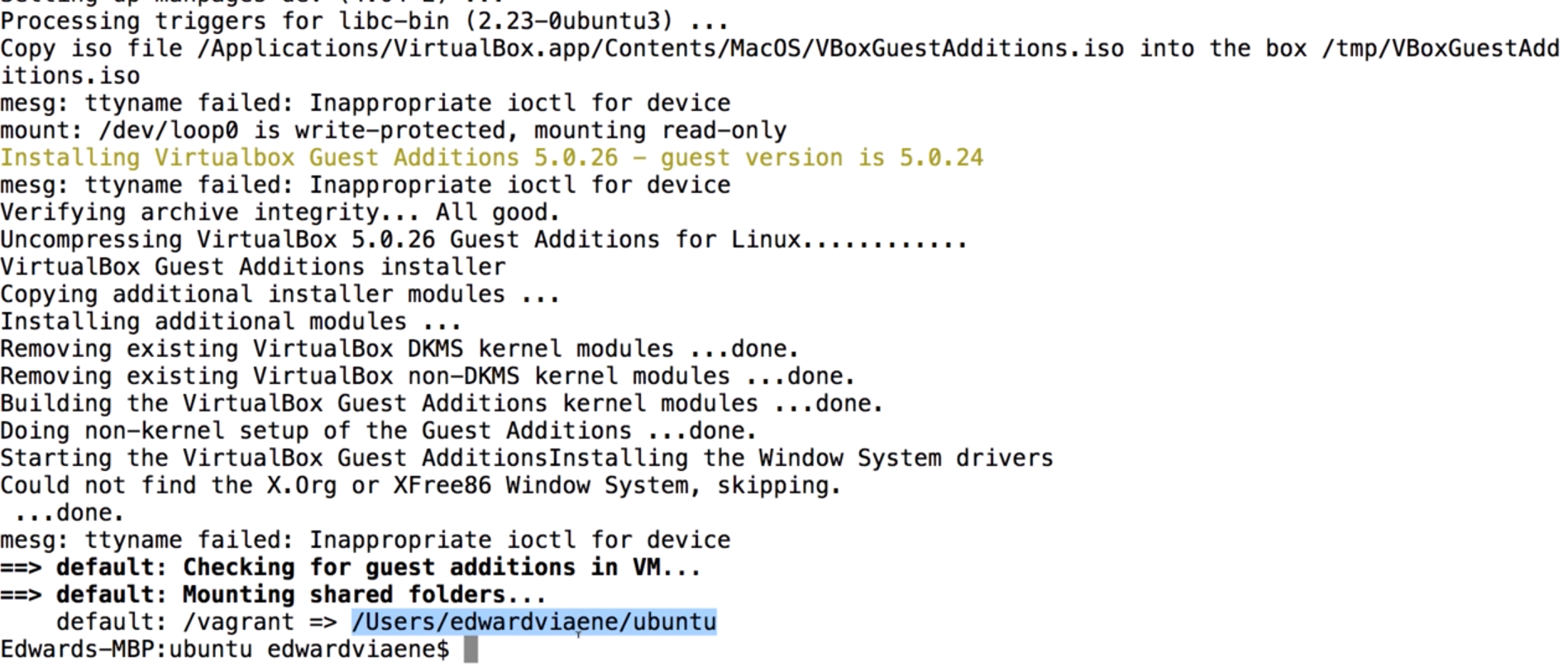

Launch commands for a plain ubuntu box:

mkdir ubuntu

cd ubuntu

vagrant init ubuntu/xenial64

vagrant up

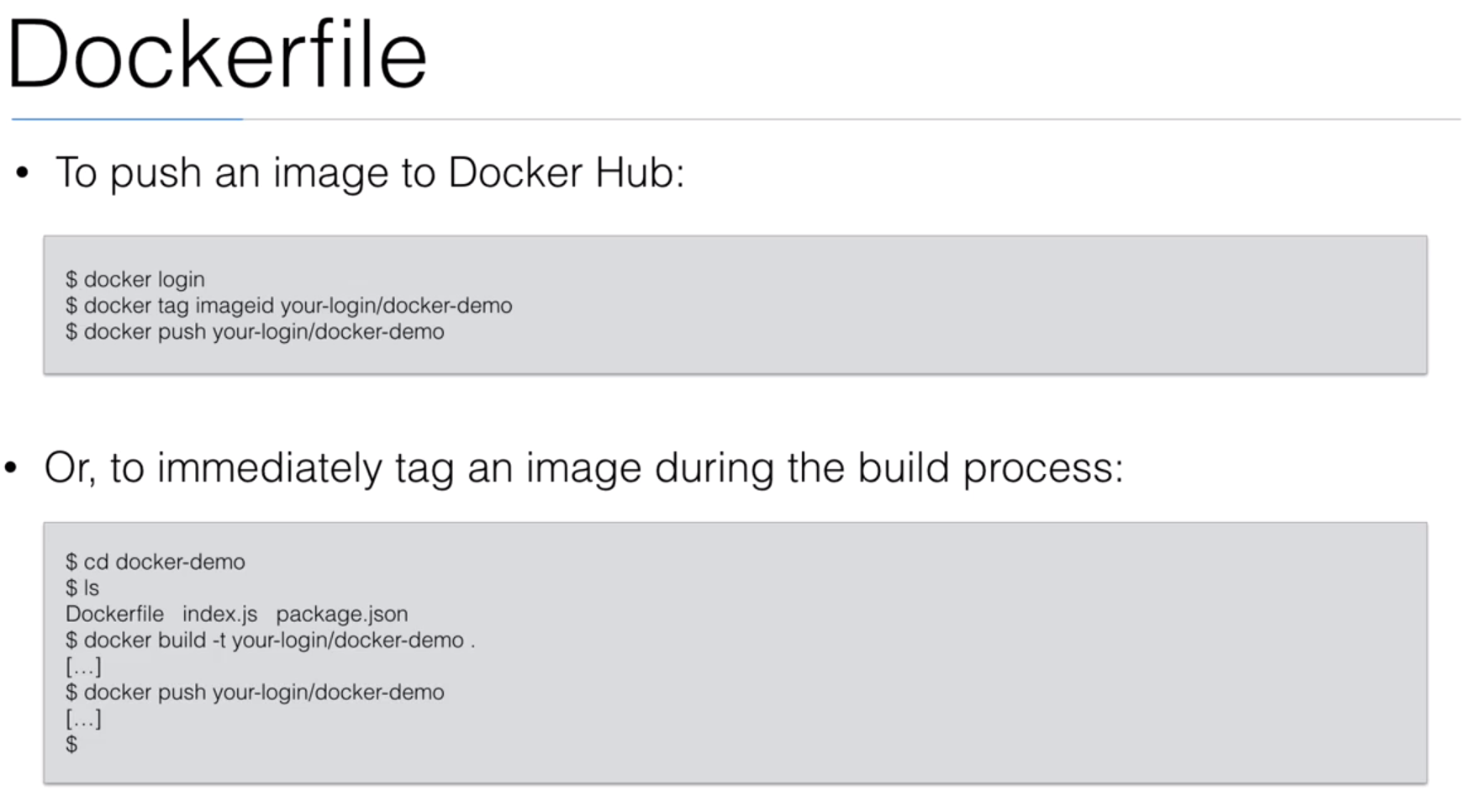

Cheatsheet: Docker commands

| description | command |

|---|---|

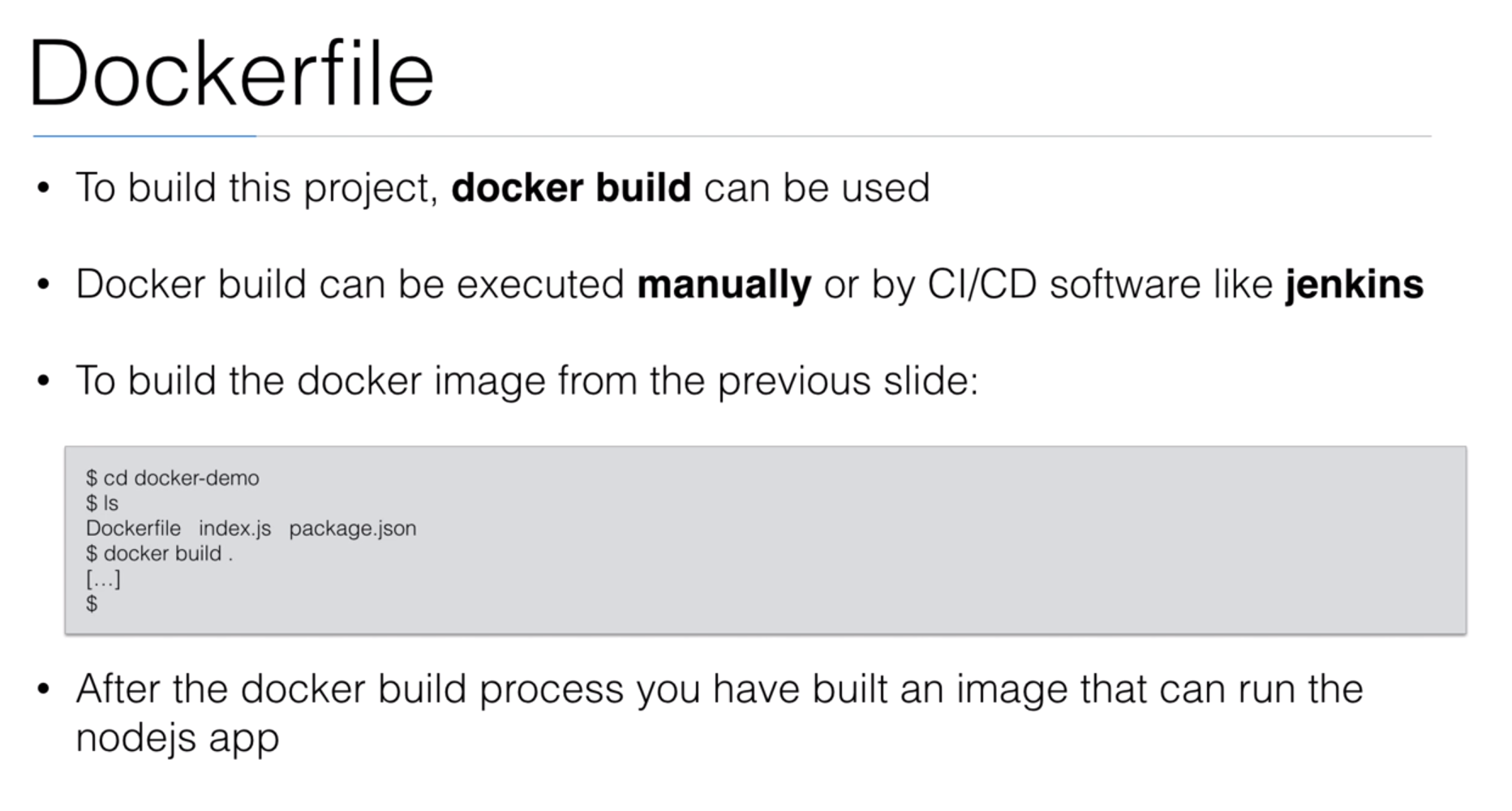

| Build image | docker build. |

| Build & Tag | docker build -t wardviaene/k8s-demo:latest . |

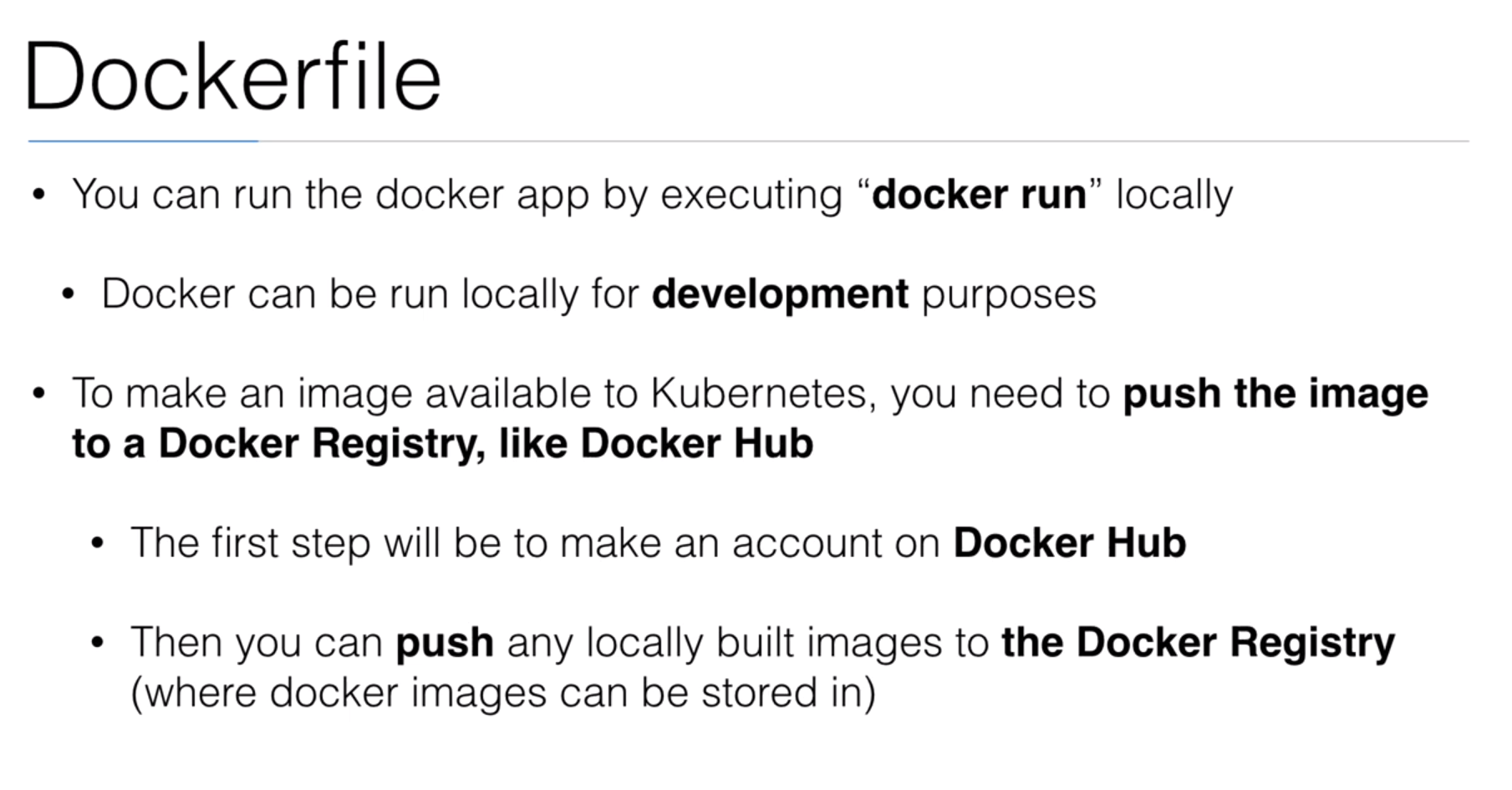

| Tag image | docker tag imageid wardviaene/k8s-demo |

| Push image | docker push wardviaene/k8s-demo |

| List images | docker images |

| List all containers | docker ps -a |

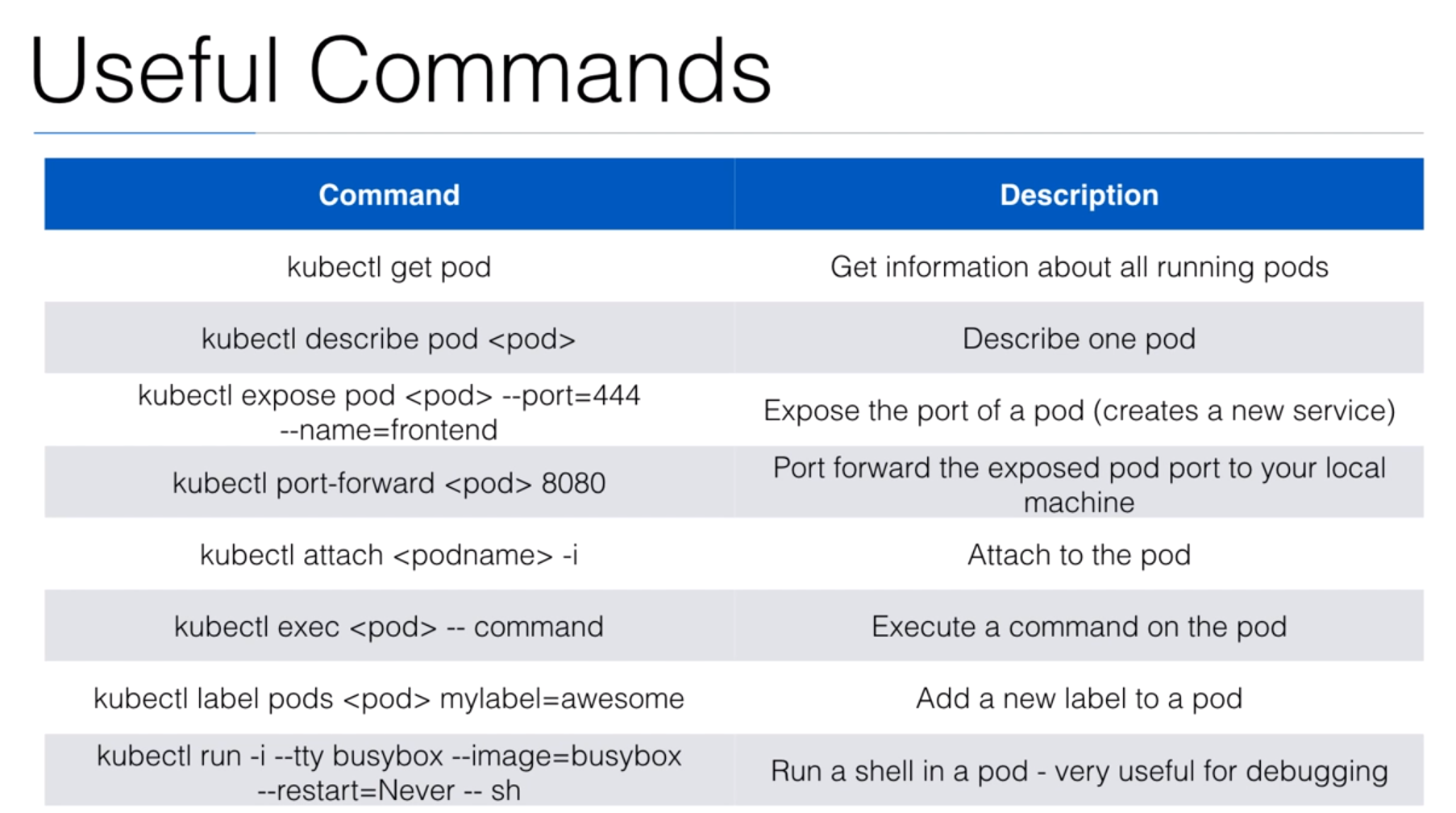

Cheatsheet: Kubernetes commands

| command | description |

|---|---|

| kubectl get pod | Get information about all running pods |

kubectl describe pod <pod> | Describe one pod |

kubectl expose pod <pod> --port=444 --name=frontend | Expose the port of a pod (creates a new service) |

kubectl port-forward <pod> 8080 | Port forward the exposed pod port to your local machine |

kubectl attach <podname> -i | Attach to the pod |

kubectl exec <pod> -- command | Execute a command on the pod |

kubectl label pods <pod> mylabel=awesome | Add a new label to a pod |

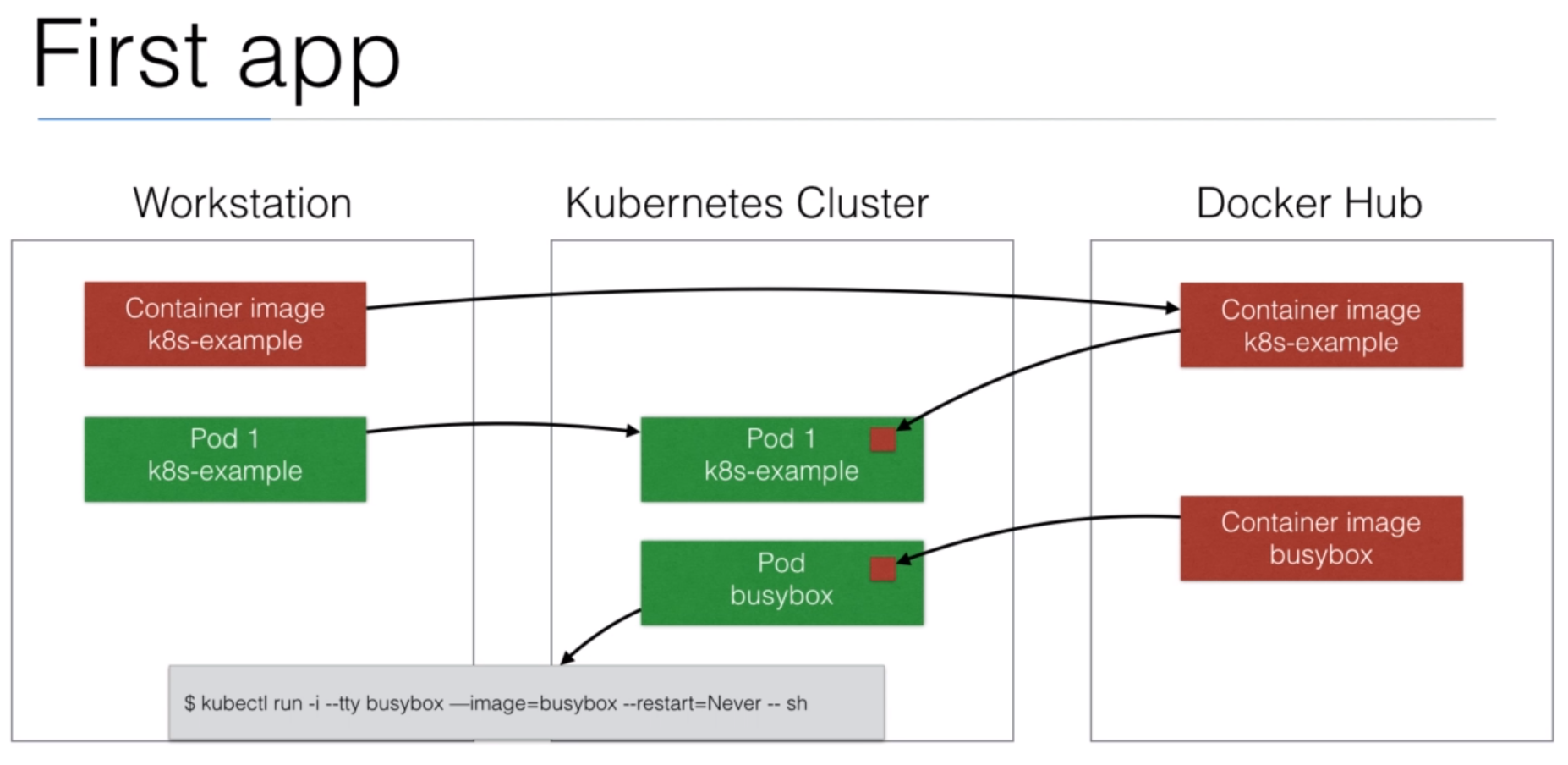

| kubectl run -i --tty busybox --image=busybox --restart=Never -- sh | Run a shell in a pod - very |

| kubectl get deployments | Get information on current deployments |

| kubectl get rs | Get information about the replica sets |

| kubectl get pods --show-labels | get pods, and also show labels attached to those pods |

| kubectl rollout status deployment/helloworld-deployment | Get deployment status |

| kubectl set image deployment/helloworld-deployment k8s-demo=k8s-demo:2 | Run k8s-demo with the image label version 2 |

| kubectl edit deployment/helloworld-deployment | Edit the deployment object |

| kubectl rollout status deployment/helloworld-deployment | Get the status of the rollout |

| kubectl rollout history deployment/helloworld-deployment | Get the rollout history |

| kubectl rollout undo deployment/helloworld-deployment | Rollback to previous version |

| kubectl rollout undo deployment/helloworld-deployment --to-revision=n | Rollback to any version version |

AWS Commands

- aws ec2 create-volume --size 10 --region us-east-1 --availability-zone us-east-1a --volume-type gp2

Certificates

| description | command |
|---|---|
| Creating a new key for a new user | openssl genrsa -out myuser.pem 2048 |
| Creating a certificate request | openssl req -new -key myuser.pem -out myuser-csr.pem -subj "/CN=myuser/O=myteam/" |
| Creating a certificate | openssl x509 -req -in myuser-csr.pem -CA /path/to/kubernetes/ca.crt -CAkey /path/to/kubernetes/ca.key -CAcreateserial -out myuser.crt -days 10000 |
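To try the three steps without touching a real cluster, the sketch below first creates a throwaway CA in a temporary directory and then signs the user certificate with it. The throwaway CA (ca.key, ca.crt, CN=demo-ca) is an assumption made for this example and stands in for /path/to/kubernetes/ca.crt and ca.key. The last two commands only check the result:

```shell
set -e

# Work in a scratch directory so no real cluster CA is involved.
workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical throwaway CA, standing in for the Kubernetes CA files.
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -key ca.key -subj "/CN=demo-ca" -days 1 -out ca.crt

# The three steps from the table: key, certificate request, certificate.
openssl genrsa -out myuser.pem 2048 2>/dev/null
openssl req -new -key myuser.pem -out myuser-csr.pem -subj "/CN=myuser/O=myteam/"
openssl x509 -req -in myuser-csr.pem -CA ca.crt -CAkey ca.key -CAcreateserial -out myuser.crt -days 10000 2>/dev/null

# Check that the certificate chains to the CA and show its subject.
verify_out=$(openssl verify -CAfile ca.crt myuser.crt)
subject=$(openssl x509 -in myuser.crt -noout -subject)
echo "$verify_out"
echo "$subject"
```

The subject carries the CN (myuser) and O (myteam) values passed with -subj; Kubernetes client-certificate authentication reads the user name from CN and the group from O.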

Abbreviations used

| Resource type | Abbreviated alias |
|---|---|
| configmaps | cm |
| customresourcedefinition | crd |
| daemonsets | ds |
| deployments | deploy |
| horizontalpodautoscalers | hpa |
| ingresses | ing |
| limitranges | limits |
| namespaces | ns |
| nodes | no |
| persistentvolumeclaims | pvc |
| persistentvolumes | pv |
| pods | po |
| replicasets | rs |
| replicationcontrollers | rc |
| resourcequotas | quota |
| serviceaccounts | sa |
| services | svc |

Section: 1. Introduction to Kubernetes

4. Kubernetes Introduction

5. Containers Introduction

6. Kubernetes Setup

7. Local Setup with minikube

8. Demo: Minikube

- We need to go to minikube

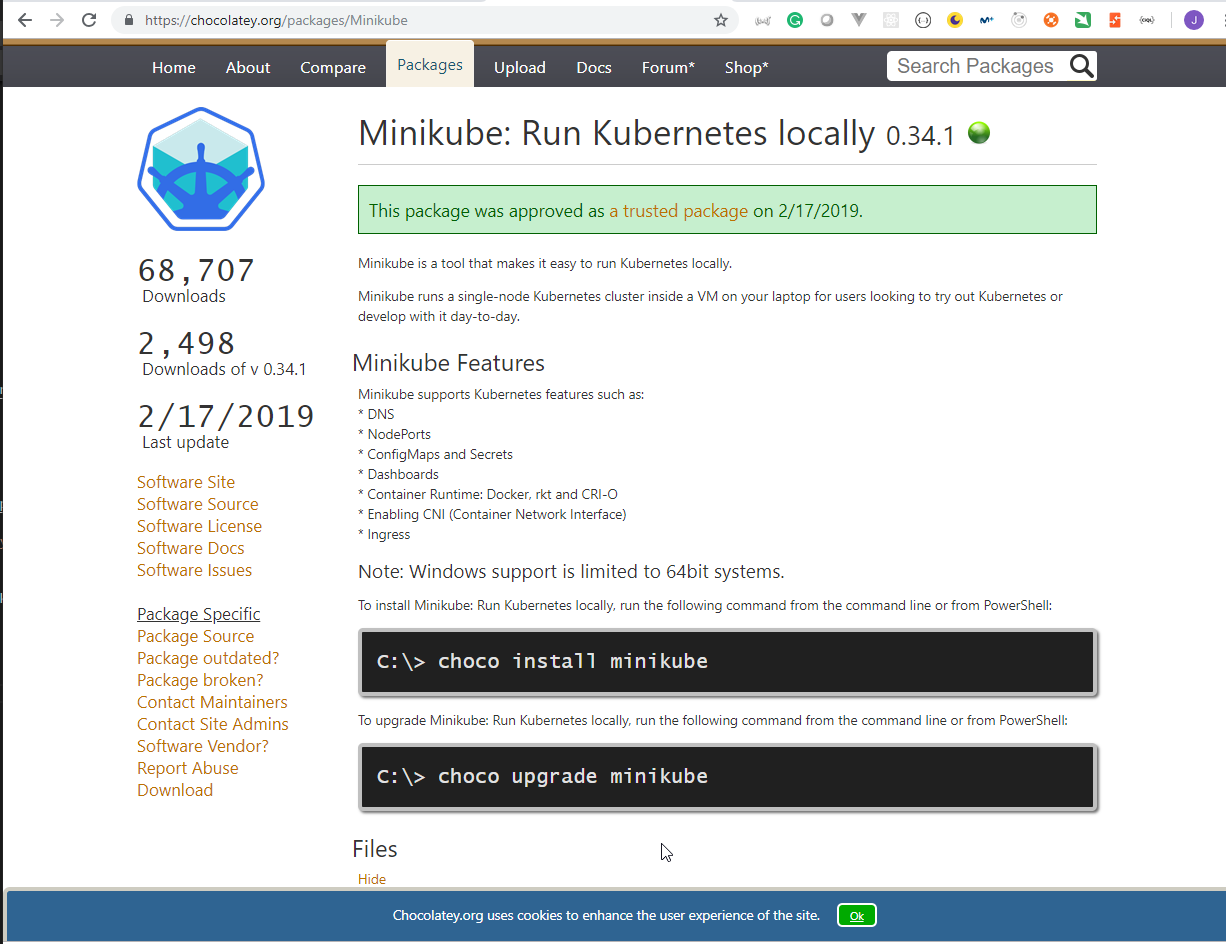

I'm going to install Minikube using Chocolatey

The package will be Minikube: Run Kubernetes locally

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>choco install minikube

Chocolatey v0.10.11

Installing the following packages:

minikube

By installing you accept licenses for the packages.

Progress: Downloading kubernetes-cli 1.13.3... 100%

Progress: Downloading Minikube 0.34.1... 100%

kubernetes-cli v1.13.3 [Approved]

kubernetes-cli package files install completed. Performing other installation steps.

The package kubernetes-cli wants to run 'chocolateyInstall.ps1'.

Note: If you don't run this script, the installation will fail.

Note: To confirm automatically next time, use '-y' or consider:

choco feature enable -n allowGlobalConfirmation

Do you want to run the script?([Y]es/[N]o/[P]rint): Y

Extracting 64-bit C:\ProgramData\chocolatey\lib\kubernetes-cli\tools\kubernetes-client-windows-amd64.tar.gz to C:\ProgramData\chocolatey\lib\kubernetes-cli\tools...

C:\ProgramData\chocolatey\lib\kubernetes-cli\tools

Extracting 64-bit C:\ProgramData\chocolatey\lib\kubernetes-cli\tools\kubernetes-client-windows-amd64.tar to C:\ProgramData\chocolatey\lib\kubernetes-cli\tools...

C:\ProgramData\chocolatey\lib\kubernetes-cli\tools

ShimGen has successfully created a shim for kubectl.exe

The install of kubernetes-cli was successful.

Software installed to 'C:\ProgramData\chocolatey\lib\kubernetes-cli\tools'

Minikube v0.34.1 [Approved]

minikube package files install completed. Performing other installation steps.

ShimGen has successfully created a shim for minikube.exe

The install of minikube was successful.

Software install location not explicitly set, could be in package or

default install location if installer.

Chocolatey installed 2/2 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>minikube

Minikube is a CLI tool that provisions and manages single-node Kubernetes clusters optimized for development workflows.

Usage:

minikube [command]

Available Commands:

addons Modify minikube's kubernetes addons

cache Add or delete an image from the local cache.

completion Outputs minikube shell completion for the given shell (bash or zsh)

config Modify minikube config

dashboard Access the kubernetes dashboard running within the minikube cluster

delete Deletes a local kubernetes cluster

docker-env Sets up docker env variables; similar to '$(docker-machine env)'

help Help about any command

ip Retrieves the IP address of the running cluster

logs Gets the logs of the running instance, used for debugging minikube, not user code

mount Mounts the specified directory into minikube

profile Profile sets the current minikube profile

service Gets the kubernetes URL(s) for the specified service in your local cluster

ssh Log into or run a command on a machine with SSH; similar to 'docker-machine ssh'

ssh-key Retrieve the ssh identity key path of the specified cluster

start Starts a local kubernetes cluster

status Gets the status of a local kubernetes cluster

stop Stops a running local kubernetes cluster

tunnel tunnel makes services of type LoadBalancer accessible on localhost

update-check Print current and latest version number

update-context Verify the IP address of the running cluster in kubeconfig.

version Print the version of minikube

Flags:

--alsologtostderr log to standard error as well as files

-b, --bootstrapper string The name of the cluster bootstrapper that will set up the kubernetes cluster. (default "kubeadm")

-h, --help help for minikube

--log_backtrace_at traceLocation when logging hits line file:N, emit a stack trace (default :0)

--log_dir string If non-empty, write log files in this directory

--logtostderr log to standard error instead of files

-p, --profile string The name of the minikube VM being used.

This can be modified to allow for multiple minikube instances to be run independently (default "minikube")

--stderrthreshold severity logs at or above this threshold go to stderr (default 2)

-v, --v Level log level for V logs

--vmodule moduleSpec comma-separated list of pattern=N settings for file-filtered logging

Use "minikube [command] --help" for more information about a command.

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>minikube start

o minikube v0.34.1 on windows (amd64)

> Creating virtualbox VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...

@ Downloading Minikube ISO ...

184.30 MB / 184.30 MB [============================================] 100.00% 0s

! Unable to start VM: create: precreate: VBoxManage not found. Make sure VirtualBox is installed and VBoxManage is in the path

* Sorry that minikube crashed. If this was unexpected, we would love to hear from you:

- https://github.com/kubernetes/minikube/issues/new

::: Warning
The previous error occurs because minikube defaults to the VirtualBox driver and VirtualBox is not installed. This machine uses Hyper-V instead, which Docker for Windows requires.
:::

- We need to follow these steps:

- Identify the physical network adapters (Ethernet and/or WiFi) using the Get-NetAdapter command from the PowerShell command line

Windows PowerShell

Copyright (C) Microsoft Corporation. All rights reserved.

PS C:\Windows\system32> Get-NetAdapter

Name InterfaceDescription ifIndex Status MacAddress LinkSpeed

---- -------------------- ------- ------ ---------- ---------

Citrix Virtual Adapter Microsoft KM-TEST Loopback Adapter 23 Up 02-00-4C-4F-4F-50 1.2 Gbps

WiFi Intel(R) Dual Band Wireless-AC 8260 21 Up E4-B3-18-1A-4B-4A 57.8 Mbps

Bluetooth Network Conn... Bluetooth Device (Personal Area Netw... 20 Disconnected E4-B3-18-1A-4B-4E 3 Mbps

vEthernet (DockerNAT) Hyper-V Virtual Ethernet Adapter #2 52 Up 00-15-5D-F7-32-04 10 Gbps

vEthernet (Default Swi... Hyper-V Virtual Ethernet Adapter 32 Up 3A-15-9D-D2-EE-71 10 Gbps

Npcap Loopback Adapter Npcap Loopback Adapter 13 Up 02-00-4C-4F-4F-50 1.2 Gbps

Ethernet 2 TAP Adapter OAS NDIS 6.0 11 Disconnected 00-FF-46-D0-98-F6 100 Mbps

Ethernet 3 Cisco AnyConnect Secure Mobility Cli... 5 Disabled 00-05-9A-3C-7A-00 862.4 Mbps

Ethernet Intel(R) Ethernet Connection I219-LM 3 Disconnected 84-7B-EB-38-FE-EB 0 bps

- Create an external virtual switch using the following command

New-VMSwitch -Name MyMinikubeCluster -AllowManagement $True -NetAdapterName WiFi

PS C:\Windows\system32> New-VMSwitch -Name MyMinikubeCluster -AllowManagement $True -NetAdapterName WiFi

Name SwitchType NetAdapterInterfaceDescription

---- ---------- ------------------------------

MyMinikubeCluster External Intel(R) Dual Band Wireless-AC 8260

- Execute the following command

minikube start --vm-driver=hyperv --hyperv-virtual-switch=MyMinikubeCluster

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>minikube start --vm-driver=hyperv --hyperv-virtual-switch=MyMinikubeCluster

o minikube v0.34.1 on windows (amd64)

> Creating hyperv VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...

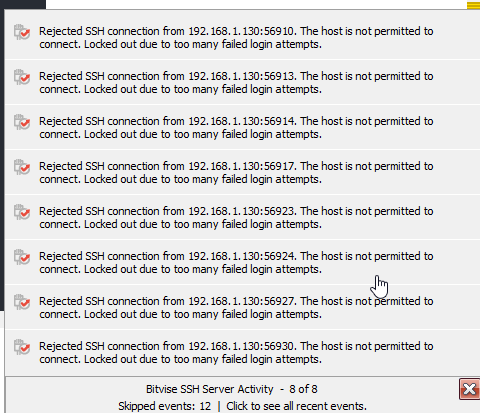

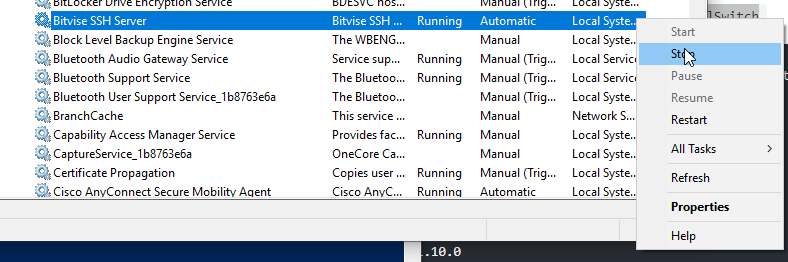

- If Bitvise SSH Server is running on Windows, it must be stopped.

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>minikube start --vm-driver=hyperv --hyperv-virtual-switch=MyMinikubeCluster

o minikube v0.34.1 on windows (amd64)

> Creating hyperv VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...

- "minikube" IP address is 192.168.0.112

- Configuring Docker as the container runtime ...

- Preparing Kubernetes environment ...

@ Downloading kubelet v1.13.3

@ Downloading kubeadm v1.13.3

! Failed to update cluster: downloading binaries: copy: wait: remote command exited without exit status or exit signal

* Sorry that minikube crashed. If this was unexpected, we would love to hear from you:

- https://github.com/kubernetes/minikube/issues/new

- Retry the command

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>minikube start --vm-driver=hyperv --hyperv-virtual-switch=MyMinikubeCluster

o minikube v0.34.1 on windows (amd64)

i Tip: Use 'minikube start -p <name>' to create a new cluster, or 'minikube delete' to delete this one.

: Re-using the currently running hyperv VM for "minikube" ...

: Waiting for SSH access ...

- "minikube" IP address is 192.168.1.145

- Configuring Docker as the container runtime ...

- Preparing Kubernetes environment ...

! Failed to update cluster: downloading binaries: copy: wait: remote command exited without exit status or exit signal

* Sorry that minikube crashed. If this was unexpected, we would love to hear from you:

- https://github.com/kubernetes/minikube/issues/new

- The minikube VM cannot be deleted from Hyper-V

C:\Work\Training\Pre\Docker\learn-devops-the-complete-kubernetes-course>minikube delete

- Powering off "minikube" via SSH ...

x Deleting "minikube" from hyperv ...

! Failed to delete cluster: exit status 1

* Sorry that minikube crashed. If this was unexpected, we would love to hear from you:

- https://github.com/kubernetes/minikube/issues/new

- After rebooting the computer, start minikube again

C:\Windows\system32>minikube start --vm-driver=hyperv --hyperv-virtual-switch=MyMinikubeCluster

o minikube v0.34.1 on windows (amd64)

i Tip: Use 'minikube start -p <name>' to create a new cluster, or 'minikube delete' to delete this one.

: Restarting existing hyperv VM for "minikube" ...

: Waiting for SSH access ...

- "minikube" IP address is 192.168.0.119

- Configuring Docker as the container runtime ...

- Preparing Kubernetes environment ...

- Pulling images required by Kubernetes v1.13.3 ...

: Relaunching Kubernetes v1.13.3 using kubeadm ...

: Waiting for kube-proxy to come back up ...

! Error restarting cluster: restarting kube-proxy: waiting for kube-proxy to be up for configmap update: timed out waiting for the condition

* Sorry that minikube crashed. If this was unexpected, we would love to hear from you:

- https://github.com/kubernetes/minikube/issues/new

- Delete minikube from Hyper-V

C:\Windows\system32>minikube delete

- Powering off "minikube" via SSH ...

x Deleting "minikube" from hyperv ...

- The "minikube" cluster has been deleted.

- Create it again

C:\Windows\system32>minikube start --vm-driver=hyperv --hyperv-virtual-switch=MyMinikubeCluster

o minikube v0.34.1 on windows (amd64)

> Creating hyperv VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...

- "minikube" IP address is 192.168.0.120

- Configuring Docker as the container runtime ...

- Preparing Kubernetes environment ...

- Pulling images required by Kubernetes v1.13.3 ...

- Launching Kubernetes v1.13.3 using kubeadm ...

- Configuring cluster permissions ...

- Verifying component health .....

+ kubectl is now configured to use "minikube"

= Done! Thank you for using minikube!

C:\Windows\system32>minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.0.108
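The status check can also be scripted. The following is a minimal sketch that parses the status text shown above and reports whether host, kubelet and apiserver are all Running. It assumes the output format of this minikube version (v0.34.1). The sample text is embedded so the snippet runs without a cluster, and the variable names are illustrative:

```shell
# Sample `minikube status` output, copied from the session above.
status_output='host: Running
kubelet: Running
apiserver: Running
kubectl: Correctly Configured: pointing to minikube-vm at 192.168.0.108'

# Split each line on ": " and list the components that are not Running.
not_running=$(printf '%s\n' "$status_output" |
  awk -F': ' '$1 ~ /^(host|kubelet|apiserver)$/ && $2 != "Running" { print $1 }')

if [ -z "$not_running" ]; then
  cluster_state='ready'
else
  cluster_state='not ready'
fi
echo "$cluster_state"
```

Against a live cluster, the embedded sample would be replaced by the output of minikube status.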

- Check that kubectl is working properly

C:\Windows\system32>kubectl

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

run-container Run a particular image on the cluster. This command is deprecated, use "run" instead

Basic Commands (Intermediate):

get Display one or many resources

explain Documentation of resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

rolling-update Perform a rolling update of the given ReplicationController

scale Set a new size for a Deployment, ReplicaSet, Replication Controller, or Job

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory/Storage) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

Advanced Commands:

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource using strategic merge patch

replace Replace a resource by filename or stdin

convert Convert config files between different API versions

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

help Help about any command

plugin Runs a command-line plugin

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

- Run the following command to see if it's working properly

C:\Windows\system32>kubectl run hello-minikube --image=grc.io/google_containers/echoserver:1.4 --port=8080

deployment.apps "hello-minikube" created

- Expose the deployment now

C:\Windows\system32>kubectl expose deployment hello-minikube --type=NodePort

service "hello-minikube" exposed

- Get the service URL with minikube

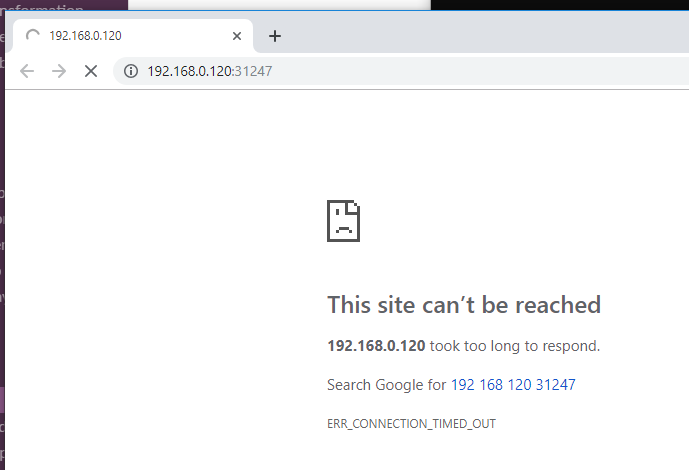

C:\Windows\system32>minikube service hello-minikube --url

http://192.168.0.120:31247

- It is not working

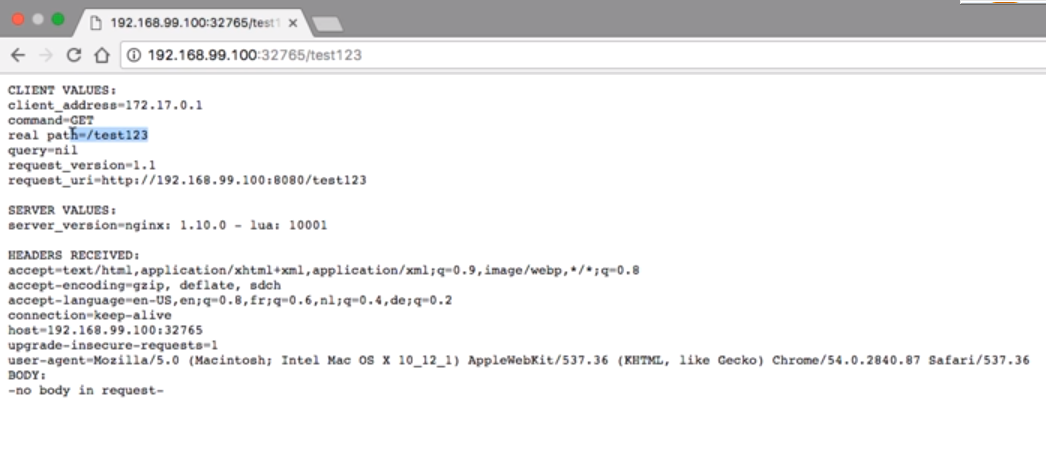

- It should be something like this:

C:\Windows\system32>minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.0.108

C:\Windows\system32>kubectl run hello-minikube --image=grc.io/google_containers/echoserver:1.4 --port=8080

Error from server (AlreadyExists): deployments.extensions "hello-minikube" already exists

C:\Windows\system32>kubectl expose deployment hello-minikube --type=NodePort

Error from server (AlreadyExists): services "hello-minikube" already exists

C:\Windows\system32>minikube service hello-minikube --url

http://192.168.0.108:31247

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-4ntdb 0/1 ImagePullBackOff 0 9h

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-4ntdb 0/1 ImagePullBackOff 0 9h

C:\Windows\system32>kubectl delete pod hello-minikube-6d4b4cd58f-4ntdb

pod "hello-minikube-6d4b4cd58f-4ntdb" deleted

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-tjpfw 0/1 ImagePullBackOff 0 3s

C:\Windows\system32>kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hello-minikube NodePort 10.104.203.11 <none> 8080:31247/TCP 9h

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11h

C:\Windows\system32>kubectl delete service hello-minikube

service "hello-minikube" deleted

C:\Windows\system32>kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11h

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-tjpfw 0/1 ImagePullBackOff 0 1m

C:\Windows\system32>kubectl delete pod hello-minikube-6d4b4cd58f-4ntdb

Error from server (NotFound): pods "hello-minikube-6d4b4cd58f-4ntdb" not found

C:\Windows\system32>kubectl delete pod hello-minikube-6d4b4cd58f-tjpfw

pod "hello-minikube-6d4b4cd58f-tjpfw" deleted

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-b99dq 0/1 ErrImagePull 0 3s

hello-minikube-6d4b4cd58f-tjpfw 0/1 Terminating 0 1m

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-b99dq 0/1 ImagePullBackOff 0 8s

C:\Windows\system32>kubectl get deployments

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hello-minikube 1 1 1 0 9h

C:\Windows\system32>kubectl delete deployment hello-minikube

deployment.extensions "hello-minikube" deleted

C:\Windows\system32>kubectl get deployments

No resources found.

C:\Windows\system32>kubectl get pods

No resources found.

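- The problem is related to the image: the registry host in the command is grc.io, which looks like a typo for gcr.io (the test commands earlier use k8s.gcr.io/echoserver:1.4), so the image cannot be pulled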

C:\Windows\system32>kubectl run hello-minikube --image=grc.io/google_containers/echoserver:1.4 --port=8080

deployment.apps "hello-minikube" created

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-6d4b4cd58f-2776d 0/1 ErrImagePull 0 4s
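Pods stuck on an image pull can be picked out of the pod listing by their STATUS column. The following is a minimal sketch that works on a copy of the output above rather than on a live cluster; the variable names are illustrative:

```shell
# Sample `kubectl get pods` output, copied from the session above.
pods_output='NAME READY STATUS RESTARTS AGE
hello-minikube-6d4b4cd58f-2776d 0/1 ErrImagePull 0 4s'

# Skip the header row, then print the name of every pod whose STATUS
# column (field 3) is one of the two image-pull error states.
failing=$(printf '%s\n' "$pods_output" |
  awk 'NR > 1 && ($3 == "ErrImagePull" || $3 == "ImagePullBackOff") { print $1 }')

echo "$failing"
```

On a real cluster the sample would be replaced by the output of kubectl get pods, and kubectl describe pod <pod> on each reported name shows the pull error in the pod's events.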

- Trying with the example copied from kubectl for Docker Users

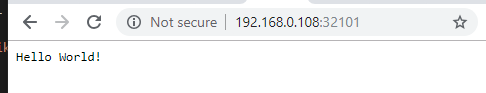

C:\Windows\system32>kubectl run hello-minikube --image=gcr.io/hello-minikube-zero-install/hello-node --port=8080

deployment.apps "hello-minikube" created

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-574f46546c-9s7sk 0/1 ContainerCreating 0 7s

C:\Windows\system32>kubectl get deployments

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hello-minikube 1 1 1 1 2m

C:\Windows\system32>kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-minikube-574f46546c-9s7sk 1/1 Running 0 2m

C:\Windows\system32>kubectl expose deployment hello-minikube --type=NodePort

service "hello-minikube" exposed

C:\Windows\system32>minikube service hello-minikube --url

http://192.168.0.108:32101
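The URL printed by minikube service is the node IP followed by the service's NodePort. The following is a small sketch of how the two parts fit together, using the PORT(S) value of the earlier hello-minikube service (8080:31247/TCP) and the IP from minikube status. The variable names are illustrative:

```shell
# Values copied from the earlier session output.
node_ip='192.168.0.108'
ports='8080:31247/TCP'   # PORT(S) column: <service port>:<node port>/<protocol>

node_port=${ports#*:}        # drop everything up to the first colon
node_port=${node_port%%/*}   # drop the protocol suffix

url="http://${node_ip}:${node_port}"
echo "$url"
```

The result matches the URL that minikube service hello-minikube --url returned for that service.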

- To stop minikube, use minikube stop.

C:\Windows\system32>minikube stop

: Stopping "minikube" in hyperv ...

: Stopping "minikube" in hyperv ...

- If it doesn't work we can use:

$ minikube ssh "sudo poweroff"

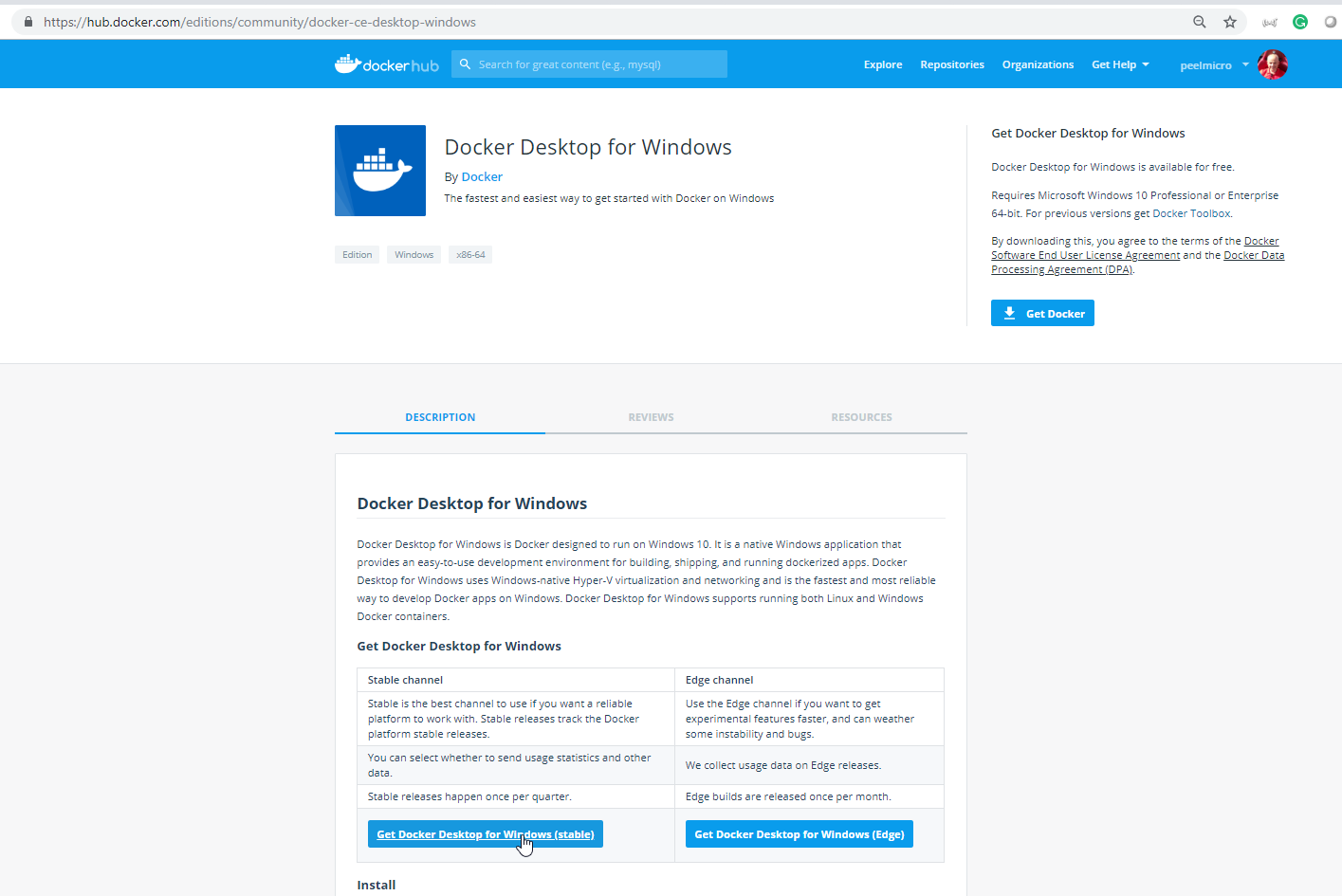

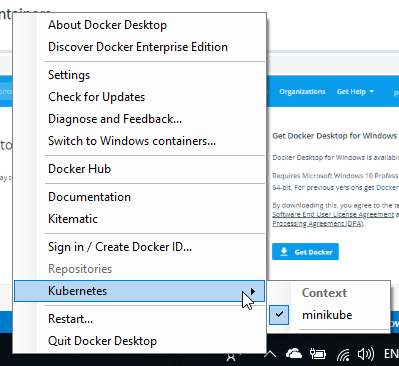

9. Installing Kubernetes using the Docker Client

- We can install the Windows Docker client from Install Docker Desktop for Windows

C:\Windows\system32>kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready master 12h v1.13.3

C:\Windows\system32>kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* minikube minikube minikube

10. Minikube vs Docker Client vs Kops vs Kubeadm

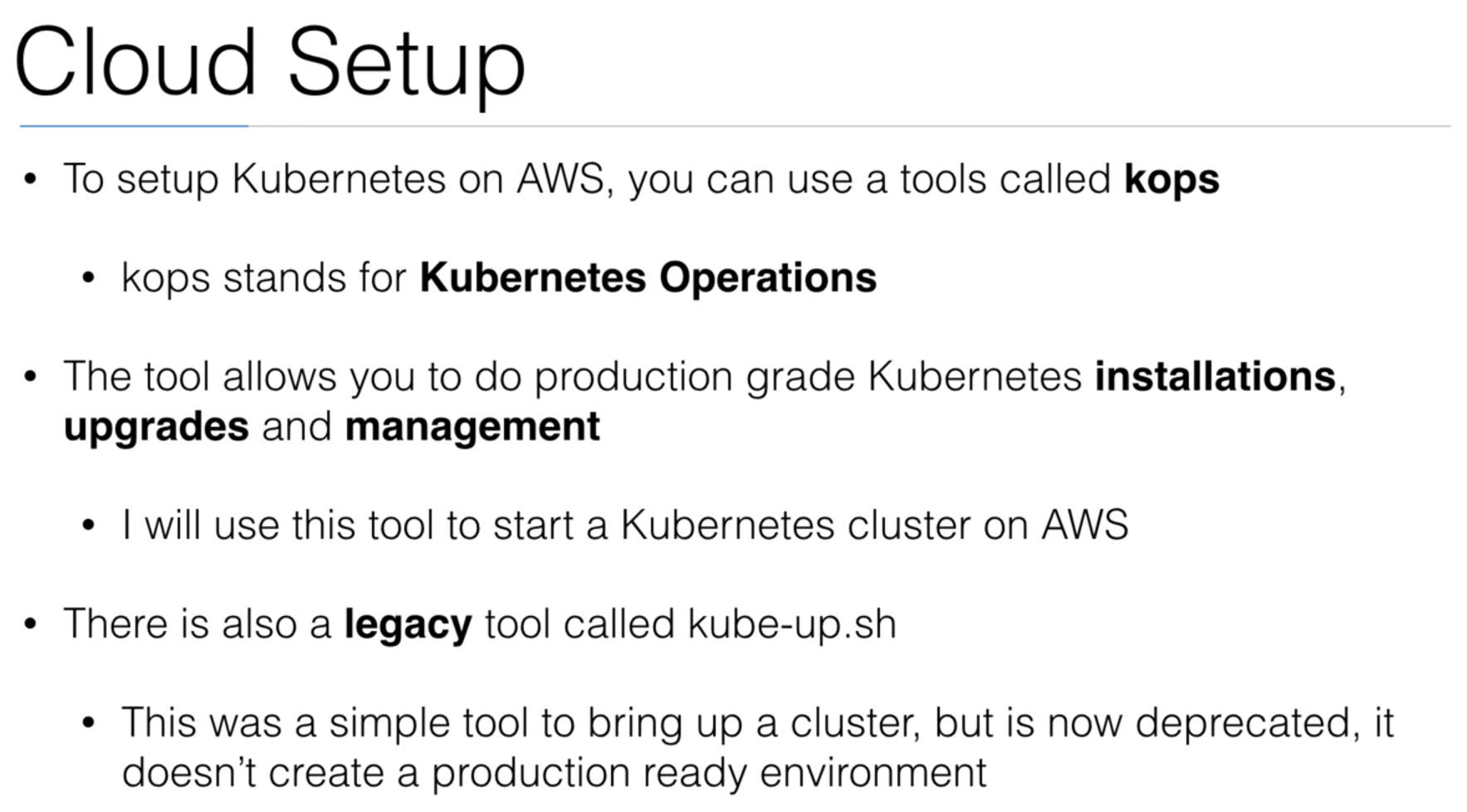

11. Introduction to Kops

12. Demo: Preparing kops install

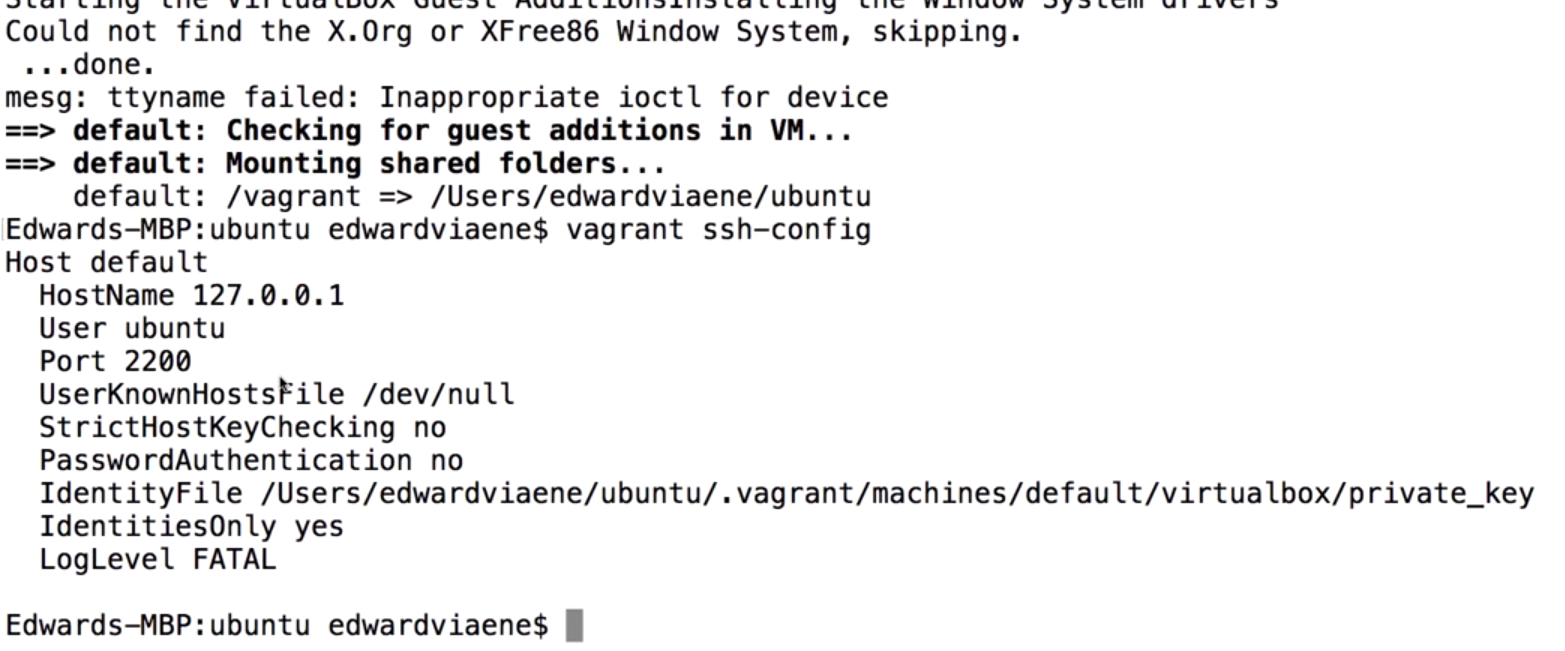

- kops can be installed only on Linux and Mac. Vagrant must be installed beforehand. Execute the vagrant init ubuntu/xenial64 command

- Execute vagrant ssh-config for Linux, which needs an IdentityFile

- On Mac we can execute vagrant ssh

Have a look at Section: 8. Installing Kubernetes using kubeadm. We are going to use the Digital Ocean server from now on.

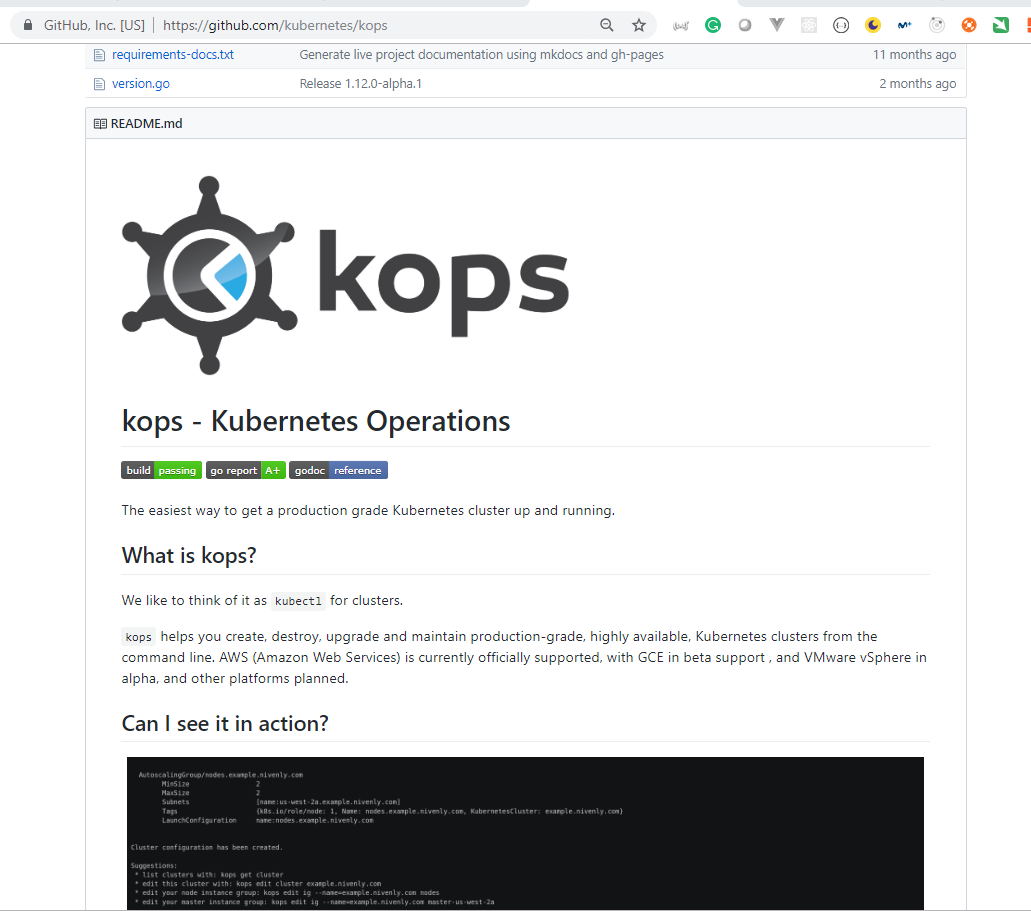

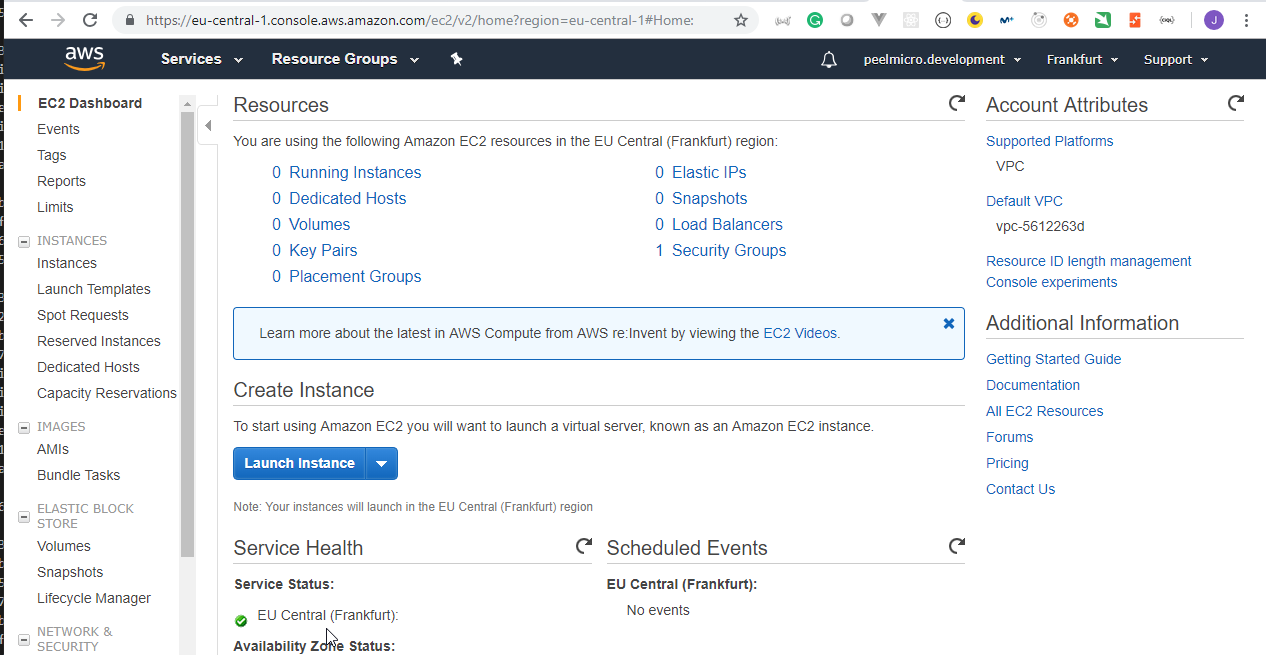

13. Demo: Preparing AWS for kops install

What is kops? We like to think of it as kubectl for clusters.

kops helps you create, destroy, upgrade and maintain production-grade, highly available Kubernetes clusters from the command line. AWS (Amazon Web Services) is currently officially supported, with GCE in beta support, VMware vSphere in alpha, and other platforms planned.

Installing kops is explained in the 9. Install kops chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

Ensure that kops is working properly.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops

kops is Kubernetes ops.

kops is the easiest way to get a production grade Kubernetes cluster up and running. We like to think of it as kubectl for clusters.

kops helps you create, destroy, upgrade and maintain production-grade, highly available, Kubernetes clusters from the command line. AWS (Amazon Web Services) is currently officially supported, with GCE and VMware vSphere in alpha support.

Usage:

kops [command]

Available Commands:

completion Output shell completion code for the given shell (bash or zsh).

create Create a resource by command line, filename or stdin.

delete Delete clusters,instancegroups, or secrets.

describe Describe a resource.

edit Edit clusters and other resources.

export Export configuration.

get Get one or many resources.

help Help about any command

import Import a cluster.

replace Replace cluster resources.

rolling-update Rolling update a cluster.

set Set fields on clusters and other resources.

toolbox Misc infrequently used commands.

update Update a cluster.

upgrade Upgrade a kubernetes cluster.

validate Validate a kops cluster.

version Print the kops version information.

Flags:

--alsologtostderr log to standard error as well as files

--config string yaml config file (default is $HOME/.kops.yaml)

-h, --help help for kops

--log_backtrace_at traceLocation when logging hits line file:N, emit a stack trace (default :0)

--log_dir string If non-empty, write log files in this directory

--logtostderr log to standard error instead of files (default false)

--name string Name of cluster. Overrides KOPS_CLUSTER_NAME environment variable

--state string Location of state storage (kops 'config' file). Overrides KOPS_STATE_STORE environment variable

--stderrthreshold severity logs at or above this threshold go to stderr (default 2)

-v, --v Level log level for V logs

--vmodule moduleSpec comma-separated list of pattern=N settings for file-filtered logging

Use "kops [command] --help" for more information about a command.

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

Installing `awscli` is explained in the 4. Install aws utility chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

Ensure `awscli` is working properly:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# aws --version

aws-cli/1.16.116 Python/3.6.7 Linux/4.15.0-46-generic botocore/1.12.106

Creating an IAM user and configuring `awscli` is explained in the 5. Configure aws with proper credentials chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

Ensure that `awscli` is configured correctly:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# ls -ahl ~/.aws/

total 16K

drwxr-xr-x 2 root root 4.0K Mar 4 19:46 .

drwx------ 17 root root 4.0K Mar 14 06:06 ..

-rw-r--r-- 1 root root 39 Mar 4 19:46 config

-rw-r--r-- 1 root root 112 Mar 4 19:45 credentials
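The directory listing above can be turned into a scripted check. The sketch below only verifies that both files exist and are not empty; `check_aws_files` is a hypothetical helper name, and the default directory is the one shown in the listing.

```shell
# Verify that the AWS CLI config and credentials files exist and are non-empty.
# The function name is illustrative; pass another directory to override ~/.aws.
check_aws_files() {
  local dir="${1:-$HOME/.aws}" status=0 f
  for f in config credentials; do
    if [ -s "$dir/$f" ]; then
      echo "ok: $dir/$f"
    else
      echo "missing or empty: $dir/$f" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage: check_aws_files && aws sts get-caller-identity
```

The files being present does not prove the keys are valid; `aws sts get-caller-identity` is the AWS CLI call that confirms which identity the credentials resolve to.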

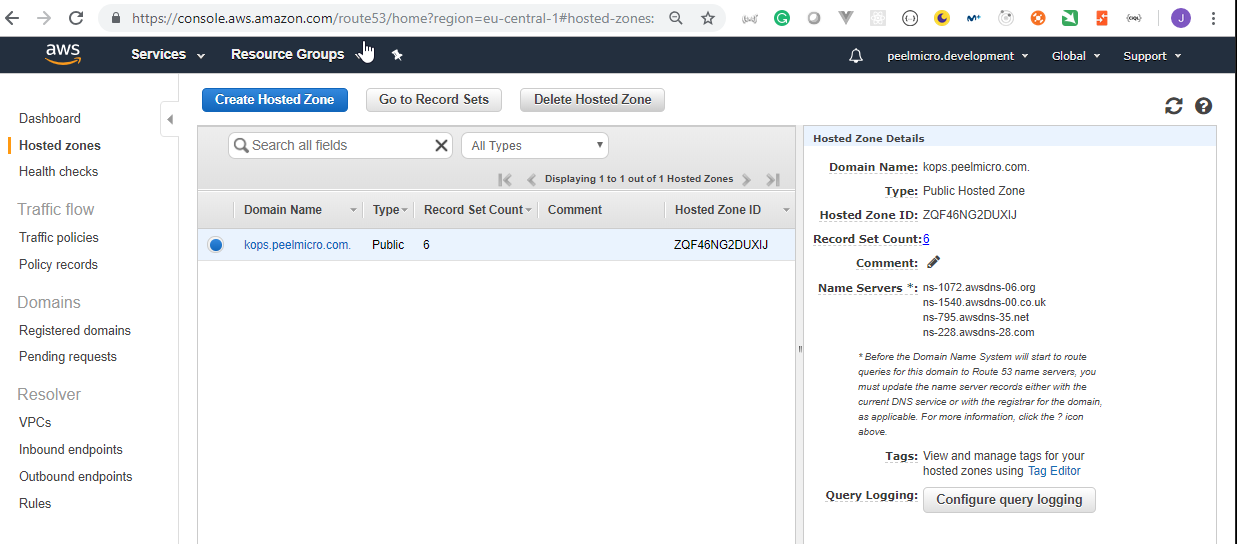

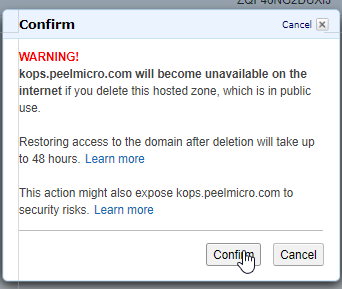

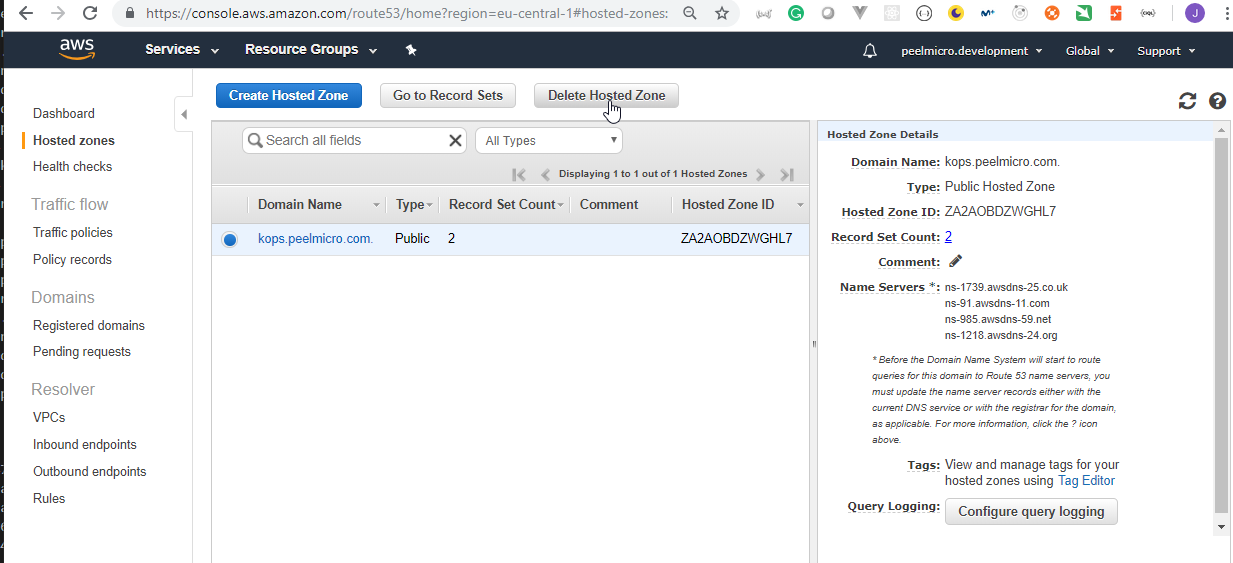

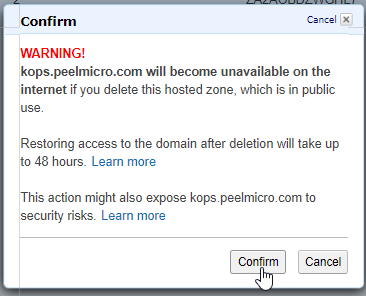

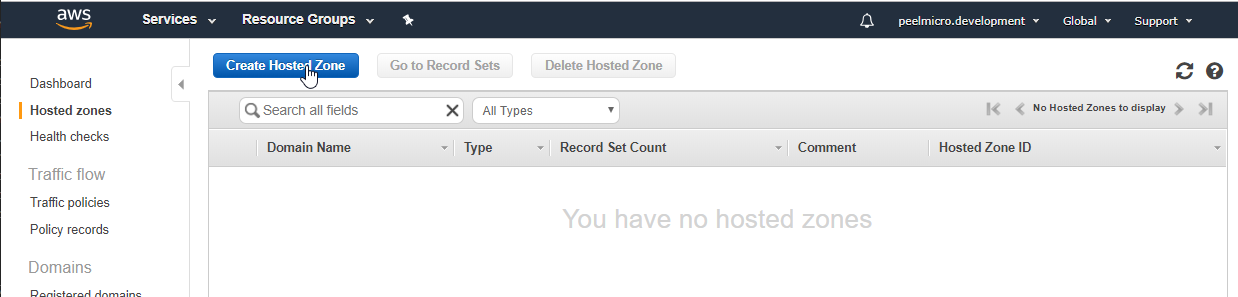

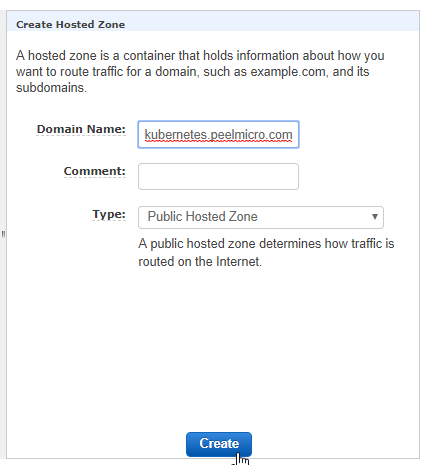

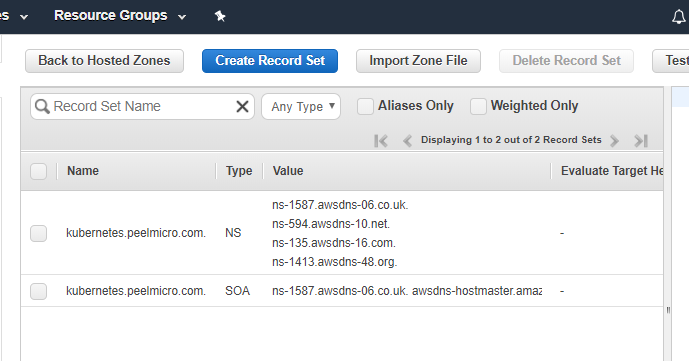

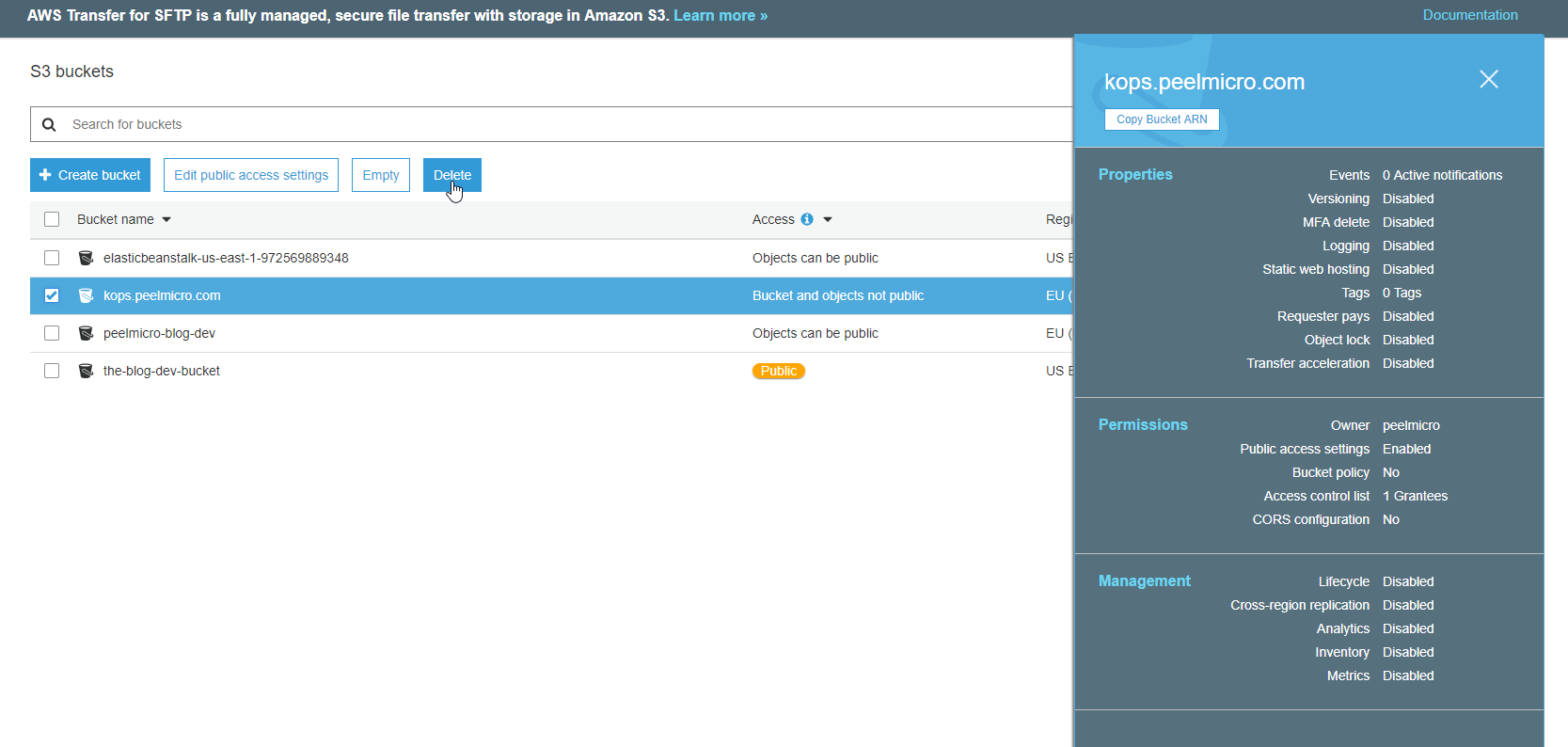

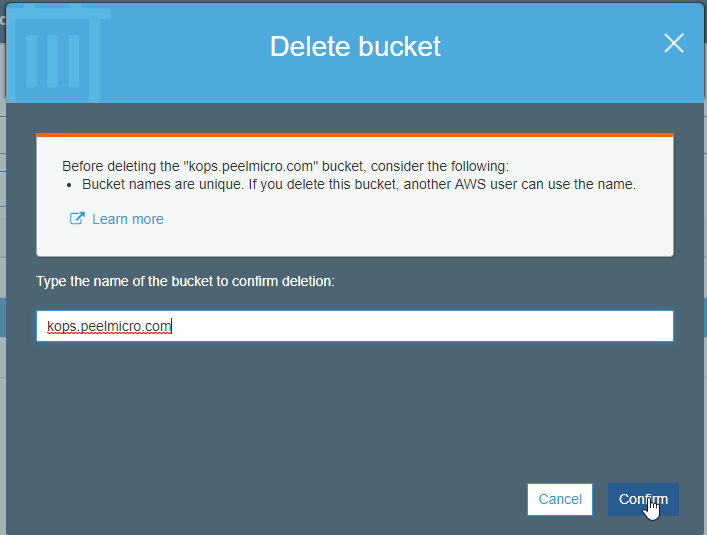

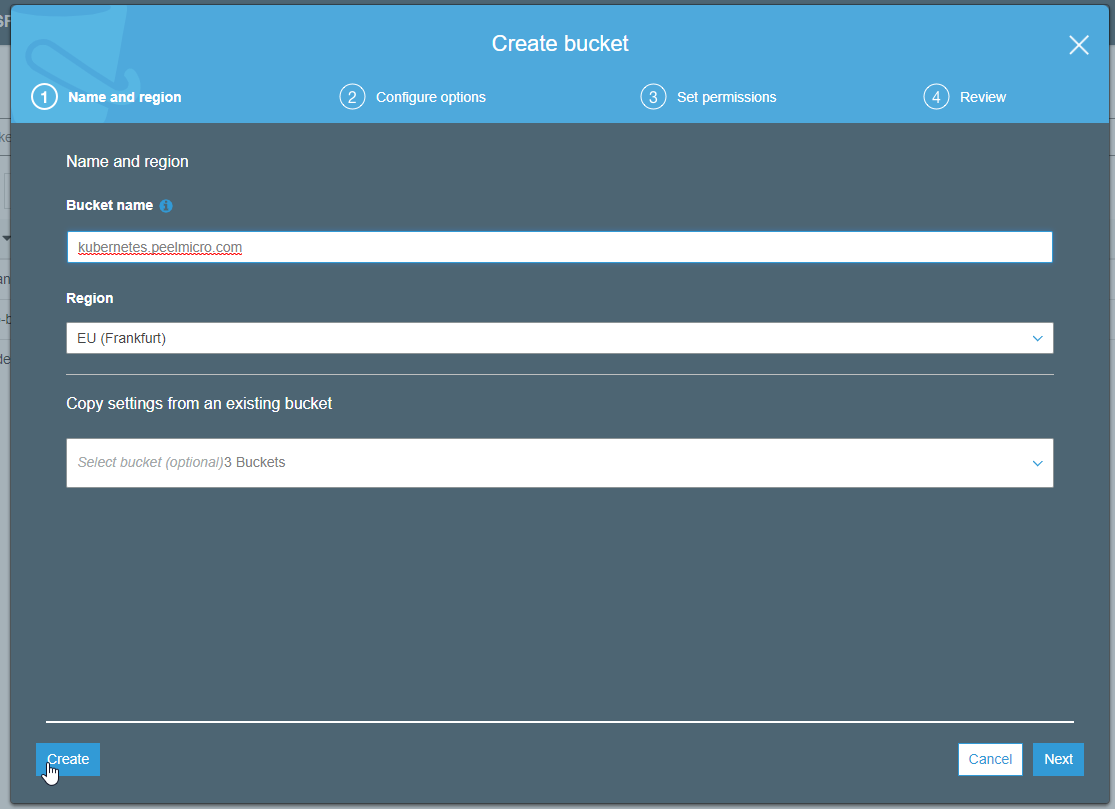

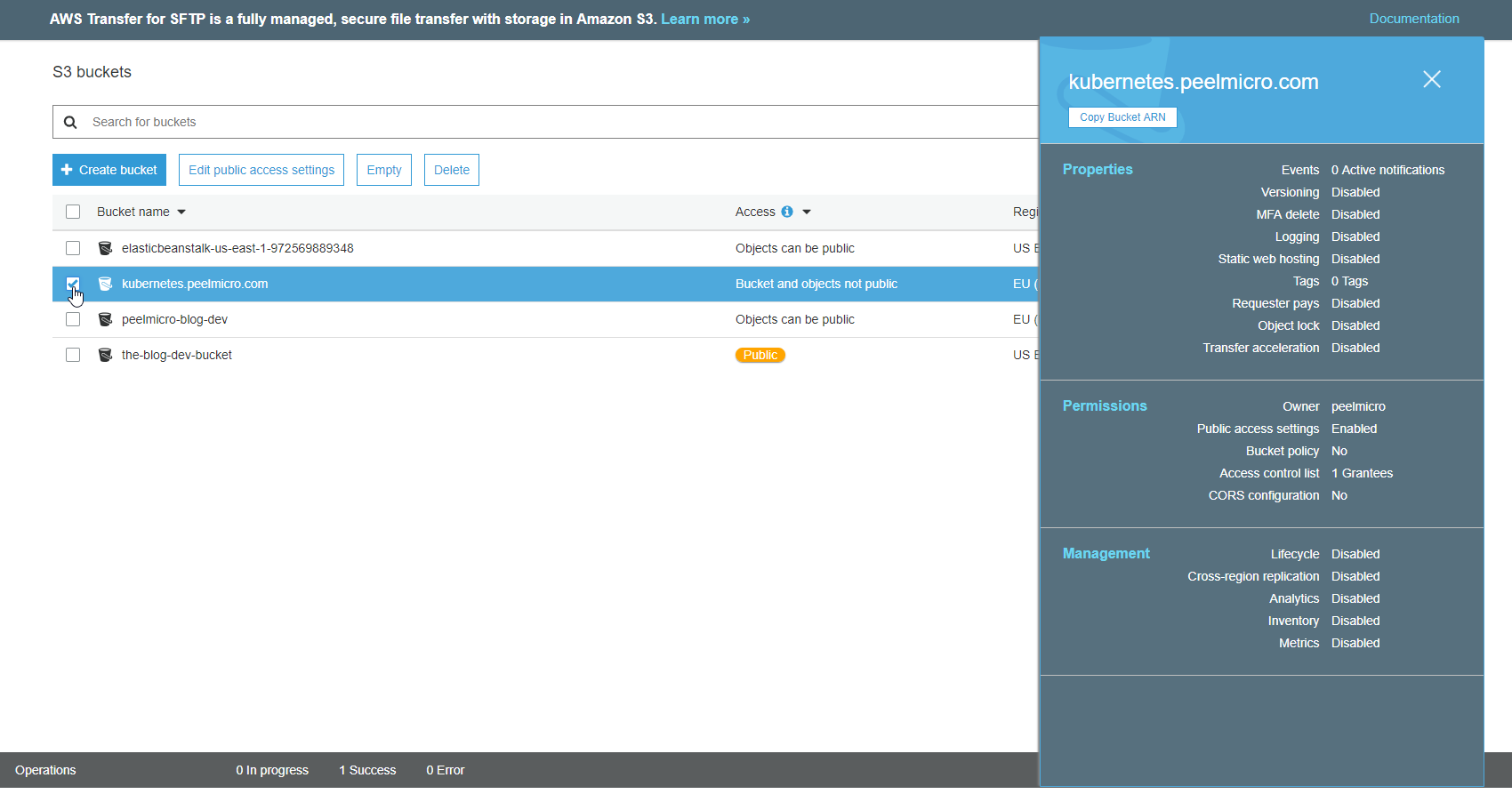

Creating the S3 bucket is explained in the 15. How to use kops and create Kubernetes cluster chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

Creating the Route53 DNS is explained in the 16. How to use kops and create Kubernetes cluster (Continue) - Why hosted zone chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.
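For readers who prefer the command line to the AWS console, the bucket and the hosted zone could be created roughly as follows. This is a sketch, not the method used in the course: `create_kops_prereqs` is a hypothetical helper, the region is an assumption, and enabling bucket versioning is an optional extra step added here.

```shell
# Create the kops state bucket and the Route53 hosted zone with the AWS CLI.
# Bucket, region and zone values passed in are assumptions for illustration.
create_kops_prereqs() {
  local bucket="$1" region="$2" zone="$3"
  # Buckets outside us-east-1 need an explicit LocationConstraint
  aws s3api create-bucket --bucket "$bucket" --region "$region" \
    --create-bucket-configuration "LocationConstraint=$region" || return 1
  # Versioning keeps earlier copies of the cluster state
  aws s3api put-bucket-versioning --bucket "$bucket" \
    --versioning-configuration Status=Enabled || return 1
  # caller-reference must be unique per request; a timestamp is enough here
  aws route53 create-hosted-zone --name "$zone" \
    --caller-reference "kops-$(date +%s)"
}

# Usage (values assumed from the names used in this section):
#   create_kops_prereqs kops.peelmicro.com eu-central-1 kops.peelmicro.com
```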

14. Demo: DNS Troubleshooting (Optional)

- Install `host` if it is not already installed

root@ubuntu-s-1vcpu-2gb-lon1-01:~# apt install bind9-host

Reading package lists... Done

Building dependency tree

Reading state information... Done

bind9-host is already the newest version (1:9.11.3+dfsg-1ubuntu1.5).

The following package was automatically installed and is no longer required:

grub-pc-bin

Use 'apt autoremove' to remove it.

0 upgraded, 0 newly installed, 0 to remove and 16 not upgraded.

- Execute the following command

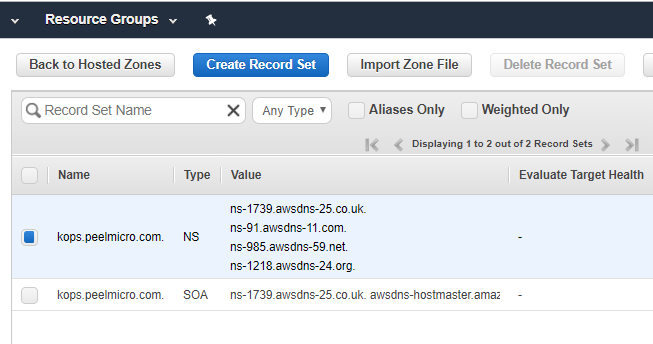

root@ubuntu-s-1vcpu-2gb-lon1-01:~# host -t NS kops.peelmicro.com

kops.peelmicro.com name server ns-1540.awsdns-00.co.uk.

kops.peelmicro.com name server ns-228.awsdns-28.com.

kops.peelmicro.com name server ns-795.awsdns-35.net.

kops.peelmicro.com name server ns-1072.awsdns-06.org.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# whois peelmicro.com

Command 'whois' not found, but can be installed with:

apt install whois

root@ubuntu-s-1vcpu-2gb-lon1-01:~# apt install whois

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following package was automatically installed and is no longer required:

grub-pc-bin

Use 'apt autoremove' to remove it.

The following NEW packages will be installed:

whois

0 upgraded, 1 newly installed, 0 to remove and 16 not upgraded.

Need to get 43.7 kB of archives.

After this operation, 262 kB of additional disk space will be used.

Get:1 http://mirrors.digitalocean.com/ubuntu bionic/main amd64 whois amd64 5.3.0 [43.7 kB]

Fetched 43.7 kB in 1s (83.3 kB/s)

Selecting previously unselected package whois.

(Reading database ... 97507 files and directories currently installed.)

Preparing to unpack .../archives/whois_5.3.0_amd64.deb ...

Unpacking whois (5.3.0) ...

Setting up whois (5.3.0) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...

root@ubuntu-s-1vcpu-2gb-lon1-01:~# whois peelmicro.com

Domain Name: PEELMICRO.COM

Registry Domain ID: 2337209531_DOMAIN_COM-VRSN

Registrar WHOIS Server: whois.acens.net

Registrar URL: http://https://www.acens.com

Updated Date: 2019-03-06T19:56:04Z

Creation Date: 2018-11-26T21:27:15Z

Registry Expiry Date: 2019-11-26T21:27:15Z

Registrar: Acens Technologies, S.L.U.

Registrar IANA ID: 140

Registrar Abuse Contact Email: abuse@acens.net

Registrar Abuse Contact Phone: 911418583

Domain Status: ok https://icann.org/epp#ok

Name Server: NS-1072.AWSDNS-06.ORG

Name Server: NS-1540.AWSDNS-00.CO.UK

Name Server: NS-228.AWSDNS-28.COM

Name Server: NS-795.AWSDNS-35.NET

DNSSEC: unsigned

URL of the ICANN Whois Inaccuracy Complaint Form: https://www.icann.org/wicf/

>>> Last update of whois database: 2019-03-14T17:48:51Z <<<

For more information on Whois status codes, please visit https://icann.org/epp

NOTICE: The expiration date displayed in this record is the date the

registrar's sponsorship of the domain name registration in the registry is

currently set to expire. This date does not necessarily reflect the expiration

date of the domain name registrant's agreement with the sponsoring

registrar. Users may consult the sponsoring registrar's Whois database to

view the registrar's reported date of expiration for this registration.

TERMS OF USE: You are not authorized to access or query our Whois

database through the use of electronic processes that are high-volume and

automated except as reasonably necessary to register domain names or

modify existing registrations; the Data in VeriSign Global Registry

Services' ("VeriSign") Whois database is provided by VeriSign for

information purposes only, and to assist persons in obtaining information

about or related to a domain name registration record. VeriSign does not

guarantee its accuracy. By submitting a Whois query, you agree to abide

by the following terms of use: You agree that you may use this Data only

for lawful purposes and that under no circumstances will you use this Data

to: (1) allow, enable, or otherwise support the transmission of mass

unsolicited, commercial advertising or solicitations via e-mail, telephone,

or facsimile; or (2) enable high volume, automated, electronic processes

that apply to VeriSign (or its computer systems). The compilation,

repackaging, dissemination or other use of this Data is expressly

prohibited without the prior written consent of VeriSign. You agree not to

use electronic processes that are automated and high-volume to access or

query the Whois database except as reasonably necessary to register

domain names or modify existing registrations. VeriSign reserves the right

to restrict your access to the Whois database in its sole discretion to ensure

operational stability. VeriSign may restrict or terminate your access to the

Whois database for failure to abide by these terms of use. VeriSign

reserves the right to modify these terms at any time.

The Registry database contains ONLY .COM, .NET, .EDU domains and

Registrars.

Domain Name: peelmicro.com

Registrar WHOIS Server: whois.acens.net

Registrar URL: http://www.acens.com/

Updated Date: 2019-03-06T20:38:28Z

Creation Date: 2018-11-26T22:27:15Z

Registrar Registration Expiration Date: 2019-11-26T22:27:15Z

Registrar: acens Technologies, S.L.U.

Registrar IANA ID: 140

Registrar Abuse Contact Email: abuse@acens.net

Registrar Abuse Contact Phone:+34.911418583

Domain Status: ok http://www.icann.org/epp#ok

Registrant Organization: Juan Pablo Perez Martin de la Fuente

Registrant State/Province: Madrid

Registrant Country: ES

Name Server: ns-228.awsdns-28.com

Name Server: ns-795.awsdns-35.net

Name Server: ns-1540.awsdns-00.co.uk

Name Server: ns-1072.awsdns-06.org

URL of the ICANN WHOIS Data Problem Reporting System: http://wdprs.internic.net/

>>> Last update of WHOIS database:2019-03-06T20:38:28Z<<<

For more information on Whois status codes, please visit

https://www.icann.org/resources/pages/epp-status-codes-2014-06-16-en

acens's WHOIS database is provided by acens Technologies for information

purposes only, proving information about or related to a domain name

registration record.

Acens makes this information available "as is," and does not guarantee

its accuracy.

By submitting a WHOIS query, you agree that you will use this data only for

lawful purposes and that, under no circumstances will you use this data to:

(1) allow, enable, or otherwise support the transmission of mass unsolicited,

commercial advertising or solicitations via direct mail, electronic mail, or

by telephone; or (2) enable high volume, automated, electronic processes that

apply to acens (or its systems). The compilation, repackaging,

dissemination or other use of this data is expressly prohibited without the

prior written consent of acens.

acens reserves the right to modify these terms at any time. By

submitting this query, you agree to abide by these terms.

NOTE: THE WHOIS DATABASE IS A CONTACT DATABASE ONLY. LACK OF A DOMAIN RECORD

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
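The two outputs above can be compared by eye: the name servers returned by `host -t NS` should be the same four that `whois` reports. A minimal sketch of that comparison, assuming the output formats shown above (`ns_from_host` and `ns_from_whois` are hypothetical helper names):

```shell
# Extract name servers from `host -t NS <zone>` output read on stdin.
ns_from_host() {
  awk '/ name server /{print tolower($NF)}' | sed 's/\.$//' | sort -u
}

# Extract name servers from `whois <domain>` output read on stdin.
ns_from_whois() {
  awk -F': *' 'tolower($1) ~ /^ *name server$/ {print tolower($2)}' \
    | sed 's/[[:space:]]*$//; s/\.$//' | sort -u
}

# Usage:
#   [ "$(host -t NS kops.peelmicro.com | ns_from_host)" = \
#     "$(whois peelmicro.com | ns_from_whois)" ] && echo "delegation matches"
```

Both helpers lowercase the names and drop the trailing dot, because `whois` prints the servers in upper case while `host` prints fully qualified lower-case names.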

15. Demo: Cluster setup on AWS using kops

Installing `kubectl` is explained in the 7. Install kubectl chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

Ensure `kubectl` is working properly:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Documentation of resources

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a Deployment, ReplicaSet, Replication Controller, or Job

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory/Storage) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

Advanced Commands:

diff Diff live version against would-be applied version

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource using strategic merge patch

replace Replace a resource by filename or stdin

wait Experimental: Wait for a specific condition on one or many resources.

convert Convert config files between different API versions

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins.

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

Generating the ssh keysis explained in the 16. How to use kops and create Kubernetes cluster (Continue) - Why hosted zone chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.Execute the following command to create the

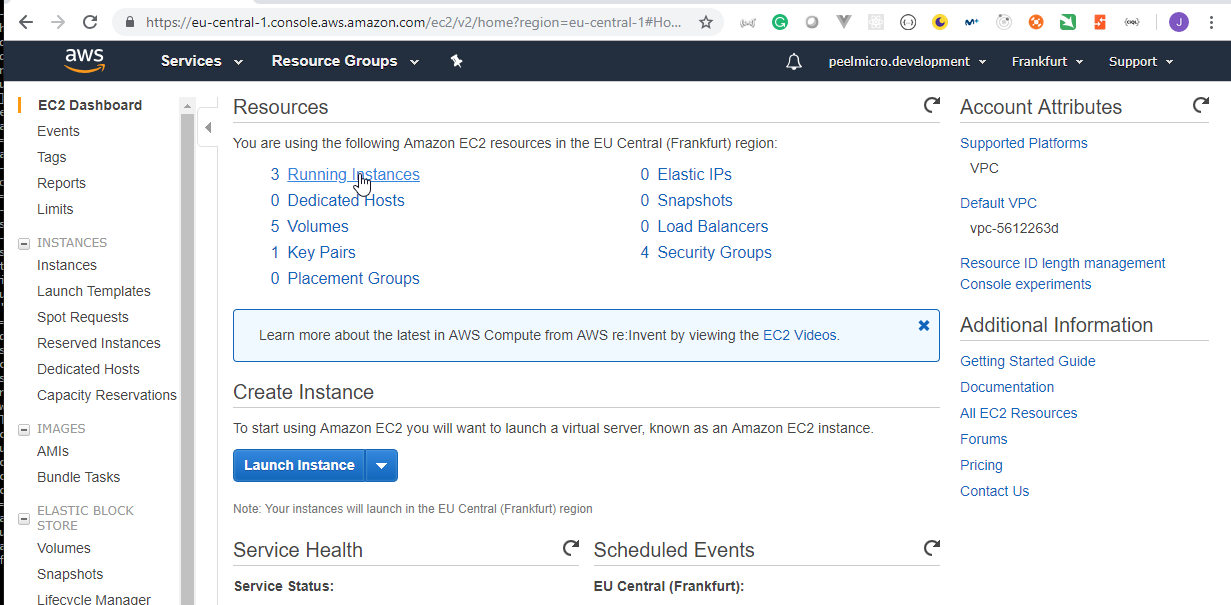

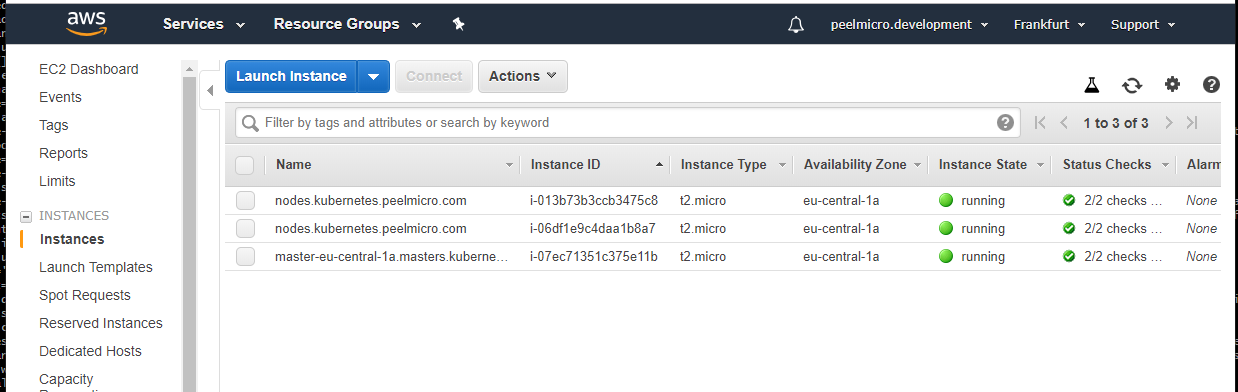

kubernetes.peelmicro.comcluster.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops create cluster --name=kubernetes.peelmicro.com --state=s3://kops.peelmicro.com --zones=eu-central-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=kops.peelmicro.com

I0314 19:34:10.887881 16716 create_cluster.go:480] Inferred --cloud=aws from zone "eu-central-1a"

I0314 19:34:11.015019 16716 subnets.go:184] Assigned CIDR 172.20.32.0/19 to subnet eu-central-1a

Previewing changes that will be made:

SSH public key must be specified when running with AWS (create with `kops create secret --name kubernetes.peelmicro.com sshpublickey admin -i ~/.ssh/id_rsa.pub`)

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops create cluster --name=kubernetes.peelmicro.com --state=s3://kops.peelmicro.com --zones=eu-central-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=kops.peelmicro.com --ssh-public-key=~/.ssh/udemy_devopsinuse.pub

I0314 19:34:38.567654 16726 create_cluster.go:1351] Using SSH public key: /root/.ssh/udemy_devopsinuse.pub

cluster "kubernetes.peelmicro.com" already exists; use 'kops update cluster' to apply changes
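In the transcript above, the first run stops because no SSH public key is found, and the second one reports that the cluster spec already exists in the state store. The overall flow can be sketched as a single helper. This is an illustration under assumptions: `kops_create_and_apply` is a hypothetical name, and `KOPS_STATE_STORE` (listed in the `kops` help output as the variable that `--state` overrides) is exported instead of repeating `--state` on every command.

```shell
# Create the cluster spec, apply it and validate the result.
# The function name is illustrative; sizes and zone mirror the commands above.
kops_create_and_apply() {
  local name="$1" zone="$2" pubkey="$3"
  if [ -z "${KOPS_STATE_STORE:-}" ]; then
    echo "KOPS_STATE_STORE is not set" >&2
    return 1
  fi
  # Step 1: write the cluster spec to the state store and preview the changes
  kops create cluster --name="$name" --zones=eu-central-1a \
    --node-count=2 --node-size=t2.micro --master-size=t2.micro \
    --dns-zone="$zone" --ssh-public-key="$pubkey" || return 1
  # Step 2: actually create the AWS resources
  kops update cluster "$name" --yes || return 1
  # Step 3: check the cluster (it may need a few minutes before this passes)
  kops validate cluster "$name"
}

# Usage:
#   export KOPS_STATE_STORE=s3://kops.peelmicro.com
#   kops_create_and_apply kubernetes.peelmicro.com kops.peelmicro.com ~/.ssh/id_rsa.pub
```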

- We can edit the cluster with the command:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops edit cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com

Edit cancelled, no changes made.

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: kops/v1alpha2
kind: Cluster
metadata:
  creationTimestamp: 2019-03-14T19:34:11Z
  name: kubernetes.peelmicro.com
spec:
  api:
    dns: {}
  authorization:
    rbac: {}
  channel: stable
  cloudProvider: aws
  configBase: s3://kops.peelmicro.com/kubernetes.peelmicro.com
  dnsZone: kops.peelmicro.com
  etcdClusters:
  - etcdMembers:
    - instanceGroup: master-eu-central-1a
      name: a
    name: main
  - etcdMembers:
    - instanceGroup: master-eu-central-1a
      name: a
    name: events
  iam:
    allowContainerRegistry: true
    legacy: false
  kubernetesApiAccess:
  - 0.0.0.0/0
  kubernetesVersion: 1.10.12
  masterInternalName: api.internal.kubernetes.peelmicro.com
  masterPublicName: api.kubernetes.peelmicro.com
  networkCIDR: 172.20.0.0/16
  networking:
    kubenet: {}
  nonMasqueradeCIDR: 100.64.0.0/10
  sshAccess:
  - 0.0.0.0/0
  subnets:
  - cidr: 172.20.32.0/19
    name: eu-central-1a
    type: Public
    zone: eu-central-1a
  topology:
    dns:
      type: Public
    masters: public
    nodes: public

- We can apply the changes by running:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops update cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com --yes

SSH public key must be specified when running with AWS (create with `kops create secret --name kubernetes.peelmicro.com sshpublickey admin -i ~/.ssh/id_rsa.pub`)

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops update cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com --yes --ssh-public-key=~/.ssh/udemy_devopsinuse.pub

--ssh-public-key on update is deprecated - please use `kops create secret --name kubernetes.peelmicro.com sshpublickey admin -i ~/.ssh/id_rsa.pub` instead

I0314 19:42:48.235944 16850 update_cluster.go:194] Using SSH public key: /root/.ssh/udemy_devopsinuse.pub

I0314 19:42:49.919270 16850 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0314 19:42:50.842222 16850 vfs_castore.go:735] Issuing new certificate: "ca"

I0314 19:42:51.046608 16850 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator-ca"

I0314 19:42:51.278184 16850 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0314 19:42:53.400536 16850 vfs_castore.go:735] Issuing new certificate: "kubelet-api"

I0314 19:42:53.478418 16850 vfs_castore.go:735] Issuing new certificate: "apiserver-proxy-client"

I0314 19:42:53.846653 16850 vfs_castore.go:735] Issuing new certificate: "kubecfg"

I0314 19:42:54.037072 16850 vfs_castore.go:735] Issuing new certificate: "kube-scheduler"

I0314 19:42:54.052120 16850 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator"

I0314 19:42:54.130151 16850 vfs_castore.go:735] Issuing new certificate: "kops"

I0314 19:42:54.168203 16850 vfs_castore.go:735] Issuing new certificate: "kube-proxy"

I0314 19:42:54.173589 16850 vfs_castore.go:735] Issuing new certificate: "kube-controller-manager"

I0314 19:42:54.215336 16850 vfs_castore.go:735] Issuing new certificate: "kubelet"

I0314 19:42:54.453859 16850 vfs_castore.go:735] Issuing new certificate: "master"

I0314 19:42:54.763595 16850 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0314 19:42:55.041665 16850 launchconfiguration.go:380] waiting for IAM instance profile "nodes.kubernetes.peelmicro.com" to be ready

I0314 19:42:55.049094 16850 launchconfiguration.go:380] waiting for IAM instance profile "masters.kubernetes.peelmicro.com" to be ready

I0314 19:43:05.438629 16850 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0314 19:43:06.005742 16850 executor.go:103] Tasks: 73 done / 73 total; 0 can run

I0314 19:43:06.005985 16850 dns.go:153] Pre-creating DNS records

W0314 19:43:06.544176 16850 apply_cluster.go:871] unable to pre-create DNS records - cluster startup may be slower: Error pre-creating DNS records: InvalidChangeBatch: [RRSet with DNS name etcd-a.internal.kubernetes.peelmicro.com. is not permitted in zone kops.peelmicro.com., RRSet with DNS name etcd-events-a.internal.kubernetes.peelmicro.com. is not permitted in zone kops.peelmicro.com., RRSet with DNS name api.kubernetes.peelmicro.com. is not permitted in zone kops.peelmicro.com., RRSet with DNS name api.internal.kubernetes.peelmicro.com. is not permitted in zone kops.peelmicro.com.]

status code: 400, request id: 637d2987-4691-11e9-b83a-f7a972cb8e1e

I0314 19:43:06.702979 16850 update_cluster.go:290] Exporting kubecfg for cluster

kops has set your kubectl context to kubernetes.peelmicro.com

Cluster is starting. It should be ready in a few minutes.

Suggestions:

* validate cluster: kops validate cluster

* list nodes: kubectl get nodes --show-labels

* ssh to the master: ssh -i ~/.ssh/id_rsa admin@api.kubernetes.peelmicro.com

* the admin user is specific to Debian. If not using Debian please use the appropriate user based on your OS.

* read about installing addons at: https://github.com/kubernetes/kops/blob/master/docs/addons.md.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get node

Unable to connect to the server: dial tcp: lookup api.kubernetes.peelmicro.com on 127.0.0.53:53: server misbehaving

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get node

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops validate cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com

Validating cluster kubernetes.peelmicro.com

unexpected error during validation: unable to resolve Kubernetes cluster API URL dns: lookup api.kubernetes.peelmicro.com on 127.0.0.53:53: server misbehaving

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops delete cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com

TYPE NAME ID

autoscaling-config master-eu-central-1a.masters.kubernetes.peelmicro.com-20190314194254 master-eu-central-1a.masters.kubernetes.peelmicro.com-20190314194254

autoscaling-config nodes.kubernetes.peelmicro.com-20190314194254 nodes.kubernetes.peelmicro.com-20190314194254

autoscaling-group master-eu-central-1a.masters.kubernetes.peelmicro.com master-eu-central-1a.masters.kubernetes.peelmicro.com

autoscaling-group nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

dhcp-options kubernetes.peelmicro.com dopt-0b3961207ed2a4293

iam-instance-profile masters.kubernetes.peelmicro.com masters.kubernetes.peelmicro.com

iam-instance-profile nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

iam-role masters.kubernetes.peelmicro.com masters.kubernetes.peelmicro.com

iam-role nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

instance master-eu-central-1a.masters.kubernetes.peelmicro.com i-05be1b77d432406a6

instance nodes.kubernetes.peelmicro.com i-0779d823131099d08

instance nodes.kubernetes.peelmicro.com i-0f7cc4411d2cb68df

internet-gateway kubernetes.peelmicro.com igw-08d2b2b3f9d3ceced

keypair kubernetes.kubernetes.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26 kubernetes.kubernetes.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26

route-table kubernetes.peelmicro.com rtb-097d41b0935bfc9bc

security-group masters.kubernetes.peelmicro.com sg-0e075cff6f3e32f9a

security-group nodes.kubernetes.peelmicro.com sg-0ef870833df6cb1d1

subnet eu-central-1a.kubernetes.peelmicro.com subnet-078a1178da1bf3bf9

volume a.etcd-events.kubernetes.peelmicro.com vol-0ca4d5f45f3bd2bb7

volume a.etcd-main.kubernetes.peelmicro.com vol-0f8e14474af8c38be

vpc kubernetes.peelmicro.com vpc-02860679234152f4d

Must specify --yes to delete cluster

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops delete cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com --yes

TYPE NAME ID

autoscaling-config master-eu-central-1a.masters.kubernetes.peelmicro.com-20190314194254 master-eu-central-1a.masters.kubernetes.peelmicro.com-20190314194254

autoscaling-config nodes.kubernetes.peelmicro.com-20190314194254 nodes.kubernetes.peelmicro.com-20190314194254

autoscaling-group master-eu-central-1a.masters.kubernetes.peelmicro.com master-eu-central-1a.masters.kubernetes.peelmicro.com

autoscaling-group nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

dhcp-options kubernetes.peelmicro.com dopt-0b3961207ed2a4293

iam-instance-profile masters.kubernetes.peelmicro.com masters.kubernetes.peelmicro.com

iam-instance-profile nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

iam-role masters.kubernetes.peelmicro.com masters.kubernetes.peelmicro.com

iam-role nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

instance master-eu-central-1a.masters.kubernetes.peelmicro.com i-05be1b77d432406a6

instance nodes.kubernetes.peelmicro.com i-0779d823131099d08

instance nodes.kubernetes.peelmicro.com i-0f7cc4411d2cb68df

internet-gateway kubernetes.peelmicro.com igw-08d2b2b3f9d3ceced

keypair kubernetes.kubernetes.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26 kubernetes.kubernetes.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26

route-table kubernetes.peelmicro.com rtb-097d41b0935bfc9bc

security-group masters.kubernetes.peelmicro.com sg-0e075cff6f3e32f9a

security-group nodes.kubernetes.peelmicro.com sg-0ef870833df6cb1d1

subnet eu-central-1a.kubernetes.peelmicro.com subnet-078a1178da1bf3bf9

volume a.etcd-events.kubernetes.peelmicro.com vol-0ca4d5f45f3bd2bb7

volume a.etcd-main.kubernetes.peelmicro.com vol-0f8e14474af8c38be

vpc kubernetes.peelmicro.com vpc-02860679234152f4d

autoscaling-group:master-eu-central-1a.masters.kubernetes.peelmicro.com ok

keypair:kubernetes.kubernetes.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26 ok

autoscaling-group:nodes.kubernetes.peelmicro.com ok

instance:i-05be1b77d432406a6 ok

instance:i-0f7cc4411d2cb68df ok

instance:i-0779d823131099d08 ok

internet-gateway:igw-08d2b2b3f9d3ceced still has dependencies, will retry

iam-instance-profile:masters.kubernetes.peelmicro.com ok

iam-instance-profile:nodes.kubernetes.peelmicro.com ok

iam-role:masters.kubernetes.peelmicro.com ok

iam-role:nodes.kubernetes.peelmicro.com ok

autoscaling-config:nodes.kubernetes.peelmicro.com-20190314194254 ok

autoscaling-config:master-eu-central-1a.masters.kubernetes.peelmicro.com-20190314194254 ok

subnet:subnet-078a1178da1bf3bf9 still has dependencies, will retry

volume:vol-0f8e14474af8c38be still has dependencies, will retry

volume:vol-0ca4d5f45f3bd2bb7 still has dependencies, will retry

security-group:sg-0ef870833df6cb1d1 still has dependencies, will retry

security-group:sg-0e075cff6f3e32f9a still has dependencies, will retry

Not all resources deleted; waiting before reattempting deletion

subnet:subnet-078a1178da1bf3bf9

volume:vol-0ca4d5f45f3bd2bb7

security-group:sg-0e075cff6f3e32f9a

security-group:sg-0ef870833df6cb1d1

internet-gateway:igw-08d2b2b3f9d3ceced

volume:vol-0f8e14474af8c38be

route-table:rtb-097d41b0935bfc9bc

vpc:vpc-02860679234152f4d

dhcp-options:dopt-0b3961207ed2a4293

volume:vol-0f8e14474af8c38be still has dependencies, will retry

volume:vol-0ca4d5f45f3bd2bb7 still has dependencies, will retry

subnet:subnet-078a1178da1bf3bf9 still has dependencies, will retry

internet-gateway:igw-08d2b2b3f9d3ceced still has dependencies, will retry

security-group:sg-0e075cff6f3e32f9a still has dependencies, will retry

security-group:sg-0ef870833df6cb1d1 still has dependencies, will retry

Not all resources deleted; waiting before reattempting deletion

dhcp-options:dopt-0b3961207ed2a4293

volume:vol-0ca4d5f45f3bd2bb7

subnet:subnet-078a1178da1bf3bf9

security-group:sg-0e075cff6f3e32f9a

vpc:vpc-02860679234152f4d

security-group:sg-0ef870833df6cb1d1

internet-gateway:igw-08d2b2b3f9d3ceced

volume:vol-0f8e14474af8c38be

route-table:rtb-097d41b0935bfc9bc

volume:vol-0f8e14474af8c38be still has dependencies, will retry

volume:vol-0ca4d5f45f3bd2bb7 still has dependencies, will retry

subnet:subnet-078a1178da1bf3bf9 still has dependencies, will retry

internet-gateway:igw-08d2b2b3f9d3ceced still has dependencies, will retry

security-group:sg-0e075cff6f3e32f9a still has dependencies, will retry

security-group:sg-0ef870833df6cb1d1 still has dependencies, will retry

Not all resources deleted; waiting before reattempting deletion

dhcp-options:dopt-0b3961207ed2a4293

subnet:subnet-078a1178da1bf3bf9

volume:vol-0ca4d5f45f3bd2bb7

security-group:sg-0e075cff6f3e32f9a

internet-gateway:igw-08d2b2b3f9d3ceced

volume:vol-0f8e14474af8c38be

route-table:rtb-097d41b0935bfc9bc

vpc:vpc-02860679234152f4d

security-group:sg-0ef870833df6cb1d1

subnet:subnet-078a1178da1bf3bf9 still has dependencies, will retry

volume:vol-0ca4d5f45f3bd2bb7 still has dependencies, will retry

internet-gateway:igw-08d2b2b3f9d3ceced still has dependencies, will retry

volume:vol-0f8e14474af8c38be still has dependencies, will retry

security-group:sg-0ef870833df6cb1d1 still has dependencies, will retry

security-group:sg-0e075cff6f3e32f9a still has dependencies, will retry

Not all resources deleted; waiting before reattempting deletion

volume:vol-0ca4d5f45f3bd2bb7

subnet:subnet-078a1178da1bf3bf9

security-group:sg-0e075cff6f3e32f9a

route-table:rtb-097d41b0935bfc9bc

vpc:vpc-02860679234152f4d

security-group:sg-0ef870833df6cb1d1

internet-gateway:igw-08d2b2b3f9d3ceced

volume:vol-0f8e14474af8c38be

dhcp-options:dopt-0b3961207ed2a4293

subnet:subnet-078a1178da1bf3bf9 still has dependencies, will retry

volume:vol-0f8e14474af8c38be still has dependencies, will retry

volume:vol-0ca4d5f45f3bd2bb7 still has dependencies, will retry

internet-gateway:igw-08d2b2b3f9d3ceced ok

security-group:sg-0ef870833df6cb1d1 still has dependencies, will retry

security-group:sg-0e075cff6f3e32f9a still has dependencies, will retry

Not all resources deleted; waiting before reattempting deletion

security-group:sg-0e075cff6f3e32f9a

vpc:vpc-02860679234152f4d

security-group:sg-0ef870833df6cb1d1

volume:vol-0f8e14474af8c38be

route-table:rtb-097d41b0935bfc9bc

dhcp-options:dopt-0b3961207ed2a4293

volume:vol-0ca4d5f45f3bd2bb7

subnet:subnet-078a1178da1bf3bf9

volume:vol-0f8e14474af8c38be ok

volume:vol-0ca4d5f45f3bd2bb7 ok

subnet:subnet-078a1178da1bf3bf9 ok

security-group:sg-0ef870833df6cb1d1 ok

security-group:sg-0e075cff6f3e32f9a ok

route-table:rtb-097d41b0935bfc9bc ok

vpc:vpc-02860679234152f4d ok

dhcp-options:dopt-0b3961207ed2a4293 ok

Deleted kubectl config for kubernetes.peelmicro.com

Deleted cluster: "kubernetes.peelmicro.com"

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
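The `InvalidChangeBatch` warning in the update output above reports that names such as `api.kubernetes.peelmicro.com` are not permitted in the zone `kops.peelmicro.com`: a hosted zone only accepts record names that equal the zone name or end with it. A minimal sketch of that check, with `name_in_zone` as a hypothetical helper, could be run before creating a cluster:

```shell
# Return 0 when a DNS name is the zone itself or a subdomain of it.
# The function name is illustrative.
name_in_zone() {
  local name zone
  # Lowercase both values and drop a trailing dot so FQDN forms compare equal
  name="$(printf '%s' "$1" | tr 'A-Z' 'a-z')"; name="${name%.}"
  zone="$(printf '%s' "$2" | tr 'A-Z' 'a-z')"; zone="${zone%.}"
  case "$name" in
    "$zone" | *."$zone") return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
#   name_in_zone api.kubernetes.peelmicro.com kops.peelmicro.com \
#     || echo "record name is outside the hosted zone"
```

With the values used in this section the check fails for `api.kubernetes.peelmicro.com` and succeeds for `api.kops.peelmicro.com`.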

- Create the standard `id_rsa` key pair.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# ssh-keygen -f .ssh/id_rsa

Generating public/private rsa key pair.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in .ssh/id_rsa.

Your public key has been saved in .ssh/id_rsa.pub.

The key fingerprint is:

SHA256:4XAQxQGcmEUodyCcUrCxcuG1PTUuTN3Eiqmk3B5Dbpc root@ubuntu-s-1vcpu-2gb-lon1-01

The key's randomart image is:

+---[RSA 2048]----+

|o++.oOBB==. |

|.=++==++o.o |

|+.oo..*o+. |

|.. o o*.. |

| . * . .S |

| o B E |

| o + |

| . |

| |

+----[SHA256]-----+

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

- Create the missing DNS records removed by mistake:

| Name | Type | Value |
|---|---|---|
| api.kops.peelmicro.com | A | 3.120.179.89 |
| api.internal.kops.peelmicro.com | A | 172.20.45.184 |
| etcd-a.internal.kops.peelmicro.com | A | 172.20.45.184 |
| etcd-events-a.internal.kops.peelmicro.com | A | 172.20.45.184 |
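Records like the ones in the table can also be created from the command line instead of the Route53 console. The sketch below only generates the change batch JSON that `aws route53 change-resource-record-sets` expects. `a_record_change` is a hypothetical helper, and the `UPSERT` action and the 300 second TTL are assumptions, not values taken from the course.

```shell
# Print a Route53 change batch that creates or updates one A record.
# The function name, the UPSERT action and the default TTL are illustrative.
a_record_change() {
  local name="$1" ip="$2" ttl="${3:-300}"
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$name",
        "Type": "A",
        "TTL": $ttl,
        "ResourceRecords": [{ "Value": "$ip" }]
      }
    }
  ]
}
EOF
}

# Usage (ZONE_ID is a placeholder for the hosted zone id):
#   a_record_change api.kops.peelmicro.com 3.120.179.89 > change.json
#   aws route53 change-resource-record-sets --hosted-zone-id "$ZONE_ID" \
#     --change-batch file://change.json
```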

- The following command shows what it is going to create, but it doesn't create anything yet.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops create cluster --name=kubernetes.peelmicro.com --state=s3://kops.peelmicro.com --zones=eu-central-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=kops.peelmicro.com

I0315 05:59:35.451940 31325 create_cluster.go:480] Inferred --cloud=aws from zone "eu-central-1a"

I0315 05:59:35.561569 31325 subnets.go:184] Assigned CIDR 172.20.32.0/19 to subnet eu-central-1a

I0315 05:59:35.966584 31325 create_cluster.go:1351] Using SSH public key: /root/.ssh/id_rsa.pub

Previewing changes that will be made:

I0315 05:59:37.701485 31325 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0315 05:59:38.118814 31325 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0315 05:59:38.312099 31325 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0315 05:59:38.470466 31325 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0315 05:59:38.509213 31325 executor.go:103] Tasks: 73 done / 73 total; 0 can run

Will create resources:

AutoscalingGroup/master-eu-central-1a.masters.kubernetes.peelmicro.com

MinSize 1

MaxSize 1

Subnets [name:eu-central-1a.kubernetes.peelmicro.com]

Tags {k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: master-eu-central-1a, k8s.io/role/master: 1, Name: master-eu-central-1a.masters.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

Granularity 1Minute

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

LaunchConfiguration name:master-eu-central-1a.masters.kubernetes.peelmicro.com

AutoscalingGroup/nodes.kubernetes.peelmicro.com

MinSize 2

MaxSize 2

Subnets [name:eu-central-1a.kubernetes.peelmicro.com]

Tags {k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: nodes, k8s.io/role/node: 1, Name: nodes.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

Granularity 1Minute

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

LaunchConfiguration name:nodes.kubernetes.peelmicro.com

DHCPOptions/kubernetes.peelmicro.com

DomainName eu-central-1.compute.internal

DomainNameServers AmazonProvidedDNS

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

EBSVolume/a.etcd-events.kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

VolumeType gp2

SizeGB 20

Encrypted false

Tags {KubernetesCluster: kubernetes.peelmicro.com, k8s.io/etcd/events: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: a.etcd-events.kubernetes.peelmicro.com}

EBSVolume/a.etcd-main.kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

VolumeType gp2

SizeGB 20

Encrypted false

Tags {k8s.io/etcd/main: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: a.etcd-main.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

IAMInstanceProfile/masters.kubernetes.peelmicro.com

Shared false

IAMInstanceProfile/nodes.kubernetes.peelmicro.com

Shared false

IAMInstanceProfileRole/masters.kubernetes.peelmicro.com

InstanceProfile name:masters.kubernetes.peelmicro.com id:masters.kubernetes.peelmicro.com

Role name:masters.kubernetes.peelmicro.com

IAMInstanceProfileRole/nodes.kubernetes.peelmicro.com

InstanceProfile name:nodes.kubernetes.peelmicro.com id:nodes.kubernetes.peelmicro.com

Role name:nodes.kubernetes.peelmicro.com

IAMRole/masters.kubernetes.peelmicro.com

ExportWithID masters

IAMRole/nodes.kubernetes.peelmicro.com

ExportWithID nodes

IAMRolePolicy/masters.kubernetes.peelmicro.com

Role name:masters.kubernetes.peelmicro.com

IAMRolePolicy/nodes.kubernetes.peelmicro.com

Role name:nodes.kubernetes.peelmicro.com

InternetGateway/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

Keypair/apiserver-aggregator

Signer name:apiserver-aggregator-ca id:cn=apiserver-aggregator-ca

Subject cn=aggregator

Type client

Format v1alpha2

Keypair/apiserver-aggregator-ca

Subject cn=apiserver-aggregator-ca

Type ca

Format v1alpha2

Keypair/apiserver-proxy-client

Signer name:ca id:cn=kubernetes

Subject cn=apiserver-proxy-client

Type client

Format v1alpha2

Keypair/ca

Subject cn=kubernetes

Type ca

Format v1alpha2

Keypair/kops

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kops

Type client

Format v1alpha2

Keypair/kube-controller-manager

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-controller-manager

Type client

Format v1alpha2

Keypair/kube-proxy

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-proxy

Type client

Format v1alpha2

Keypair/kube-scheduler

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-scheduler

Type client

Format v1alpha2

Keypair/kubecfg

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kubecfg

Type client

Format v1alpha2

Keypair/kubelet

Signer name:ca id:cn=kubernetes

Subject o=system:nodes,cn=kubelet

Type client

Format v1alpha2

Keypair/kubelet-api

Signer name:ca id:cn=kubernetes

Subject cn=kubelet-api

Type client

Format v1alpha2

Keypair/master

AlternateNames [100.64.0.1, 127.0.0.1, api.internal.kubernetes.peelmicro.com, api.kubernetes.peelmicro.com, kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local]

Signer name:ca id:cn=kubernetes

Subject cn=kubernetes-master

Type server

Format v1alpha2

LaunchConfiguration/master-eu-central-1a.masters.kubernetes.peelmicro.com

ImageID kope.io/k8s-1.10-debian-jessie-amd64-hvm-ebs-2018-08-17

InstanceType t2.micro

SSHKey name:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e id:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

SecurityGroups [name:masters.kubernetes.peelmicro.com]

AssociatePublicIP true

IAMInstanceProfile name:masters.kubernetes.peelmicro.com id:masters.kubernetes.peelmicro.com

RootVolumeSize 64

RootVolumeType gp2

SpotPrice

LaunchConfiguration/nodes.kubernetes.peelmicro.com

ImageID kope.io/k8s-1.10-debian-jessie-amd64-hvm-ebs-2018-08-17

InstanceType t2.micro

SSHKey name:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e id:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

SecurityGroups [name:nodes.kubernetes.peelmicro.com]

AssociatePublicIP true

IAMInstanceProfile name:nodes.kubernetes.peelmicro.com id:nodes.kubernetes.peelmicro.com

RootVolumeSize 128

RootVolumeType gp2

SpotPrice

ManagedFile/kubernetes.peelmicro.com-addons-bootstrap

Location addons/bootstrap-channel.yaml

ManagedFile/kubernetes.peelmicro.com-addons-core.addons.k8s.io

Location addons/core.addons.k8s.io/v1.4.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-dns-controller.addons.k8s.io-k8s-1.6

Location addons/dns-controller.addons.k8s.io/k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-dns-controller.addons.k8s.io-pre-k8s-1.6

Location addons/dns-controller.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-kube-dns.addons.k8s.io-k8s-1.6

Location addons/kube-dns.addons.k8s.io/k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-kube-dns.addons.k8s.io-pre-k8s-1.6

Location addons/kube-dns.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-limit-range.addons.k8s.io

Location addons/limit-range.addons.k8s.io/v1.5.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-rbac.addons.k8s.io-k8s-1.8

Location addons/rbac.addons.k8s.io/k8s-1.8.yaml

ManagedFile/kubernetes.peelmicro.com-addons-storage-aws.addons.k8s.io-v1.6.0

Location addons/storage-aws.addons.k8s.io/v1.6.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-storage-aws.addons.k8s.io-v1.7.0

Location addons/storage-aws.addons.k8s.io/v1.7.0.yaml

Route/0.0.0.0/0

RouteTable name:kubernetes.peelmicro.com

CIDR 0.0.0.0/0

InternetGateway name:kubernetes.peelmicro.com

RouteTable/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

Shared false

Tags {kubernetes.io/cluster/kubernetes.peelmicro.com: owned, kubernetes.io/kops/role: public, Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

RouteTableAssociation/eu-central-1a.kubernetes.peelmicro.com

RouteTable name:kubernetes.peelmicro.com

Subnet name:eu-central-1a.kubernetes.peelmicro.com

SSHKey/kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

KeyFingerprint 9a:fa:b7:ad:4e:62:1b:16:a4:6b:a5:8f:8f:86:59:f6

Secret/admin

Secret/kube

Secret/kube-proxy

Secret/kubelet

Secret/system:controller_manager

Secret/system:dns

Secret/system:logging

Secret/system:monitoring

Secret/system:scheduler

SecurityGroup/masters.kubernetes.peelmicro.com

Description Security group for masters

VPC name:kubernetes.peelmicro.com

RemoveExtraRules [port=22, port=443, port=2380, port=2381, port=4001, port=4002, port=4789, port=179]

Tags {KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: masters.kubernetes.peelmicro.com}

SecurityGroup/nodes.kubernetes.peelmicro.com

Description Security group for nodes

VPC name:kubernetes.peelmicro.com

RemoveExtraRules [port=22]

Tags {Name: nodes.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

SecurityGroupRule/all-master-to-master

SecurityGroup name:masters.kubernetes.peelmicro.com

SourceGroup name:masters.kubernetes.peelmicro.com

SecurityGroupRule/all-master-to-node

SecurityGroup name:nodes.kubernetes.peelmicro.com

SourceGroup name:masters.kubernetes.peelmicro.com

SecurityGroupRule/all-node-to-node

SecurityGroup name:nodes.kubernetes.peelmicro.com

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/https-external-to-master-0.0.0.0/0

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 443

ToPort 443

SecurityGroupRule/master-egress

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-egress

SecurityGroup name:nodes.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-to-master-tcp-1-2379

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 1

ToPort 2379

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-tcp-2382-4000

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 2382

ToPort 4000

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-tcp-4003-65535

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 4003

ToPort 65535

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-udp-1-65535

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol udp

FromPort 1

ToPort 65535

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/ssh-external-to-master-0.0.0.0/0

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

SecurityGroupRule/ssh-external-to-node-0.0.0.0/0

SecurityGroup name:nodes.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

Subnet/eu-central-1a.kubernetes.peelmicro.com

ShortName eu-central-1a

VPC name:kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

CIDR 172.20.32.0/19

Shared false

Tags {Name: eu-central-1a.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, kubernetes.io/role/elb: 1, SubnetType: Public}

VPC/kubernetes.peelmicro.com

CIDR 172.20.0.0/16

EnableDNSHostnames true

EnableDNSSupport true

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

VPCDHCPOptionsAssociation/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

DHCPOptions name:kubernetes.peelmicro.com

Must specify --yes to apply changes

Cluster configuration has been created.

Suggestions:

* list clusters with: kops get cluster

* edit this cluster with: kops edit cluster kubernetes.peelmicro.com

* edit your node instance group: kops edit ig --name=kubernetes.peelmicro.com nodes

* edit your master instance group: kops edit ig --name=kubernetes.peelmicro.com master-eu-central-1a

Finally configure your cluster with: kops update cluster kubernetes.peelmicro.com --yes

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
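The preview above is long, so it can help to condense it into a count per resource type before applying it. The following is a minimal sketch, not part of the course material: `summarize_preview` is a hypothetical helper name, and it assumes that every resource header in the preview has the form Type/name, as in the output above. The sample input is a short excerpt of that output.

```shell
#!/usr/bin/env bash
# Count the resources in a kops preview, grouped by resource type.
# Assumption: resource headers look like "Type/name"; property lines such as
# "MinSize 1" or "CIDR 0.0.0.0/0" have a space after the first word and are skipped.
summarize_preview() {
  awk -F/ '/^[A-Za-z]+\// { count[$1]++ }
           END { for (t in count) printf "%s %d\n", t, count[t] }' | LC_ALL=C sort
}

# A short excerpt of the preview above, used as sample input.
sample_preview='Will create resources:
AutoscalingGroup/master-eu-central-1a.masters.kubernetes.peelmicro.com
MinSize 1
AutoscalingGroup/nodes.kubernetes.peelmicro.com
MinSize 2
EBSVolume/a.etcd-events.kubernetes.peelmicro.com
EBSVolume/a.etcd-main.kubernetes.peelmicro.com
Route/0.0.0.0/0
CIDR 0.0.0.0/0
VPC/kubernetes.peelmicro.com'

printf '%s\n' "$sample_preview" | summarize_preview
```

To use it on a real preview, save the text of the preview to a file and pipe that file into the function.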

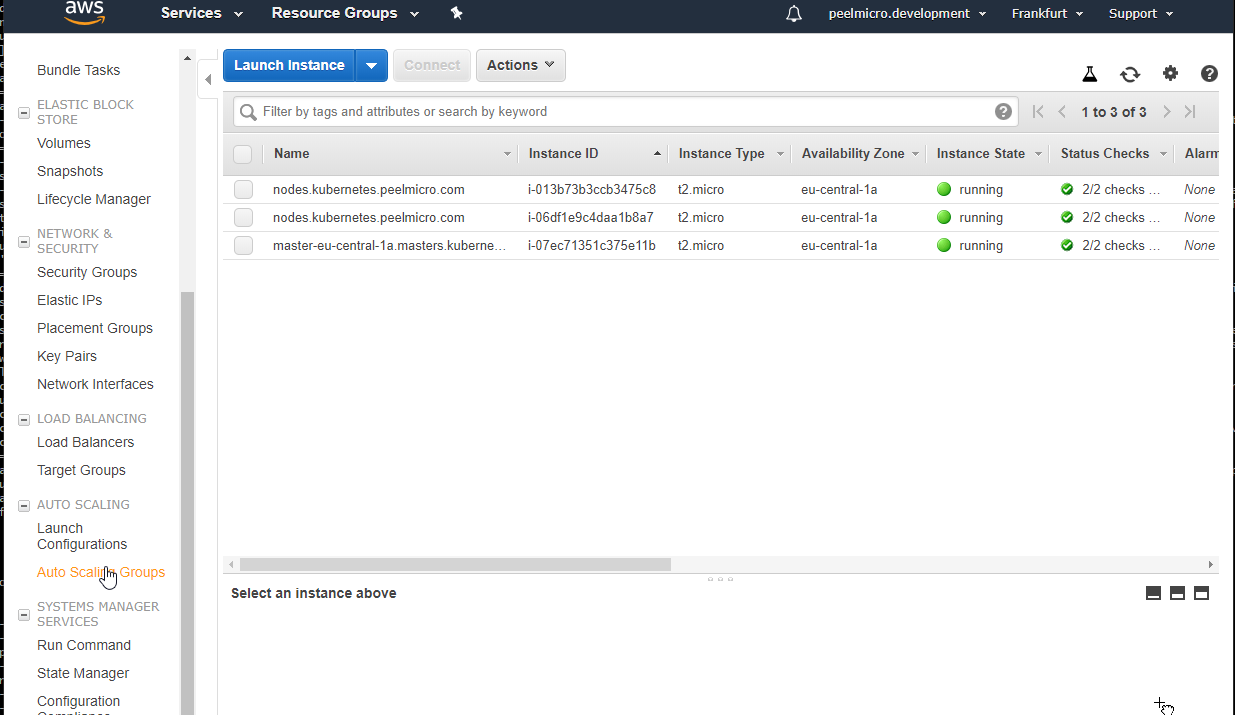

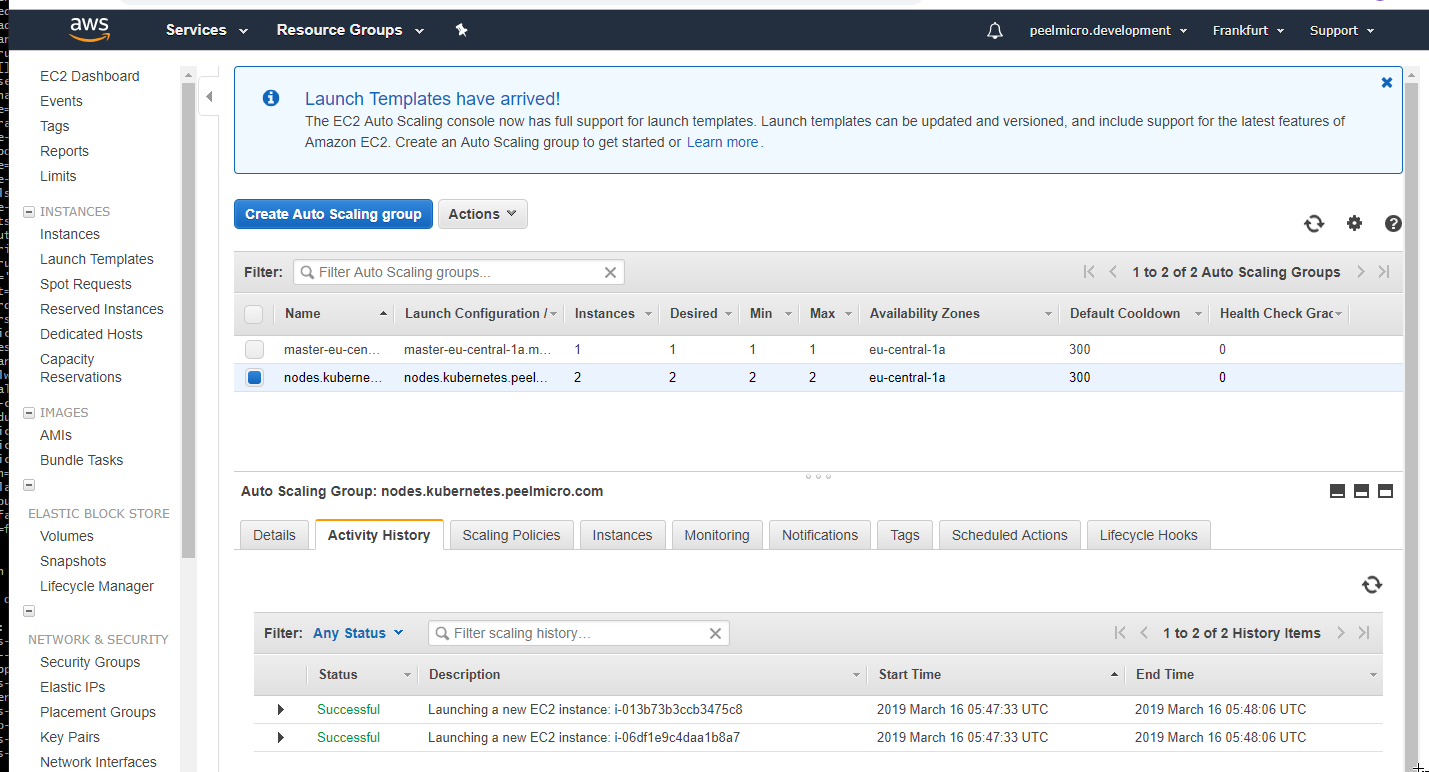

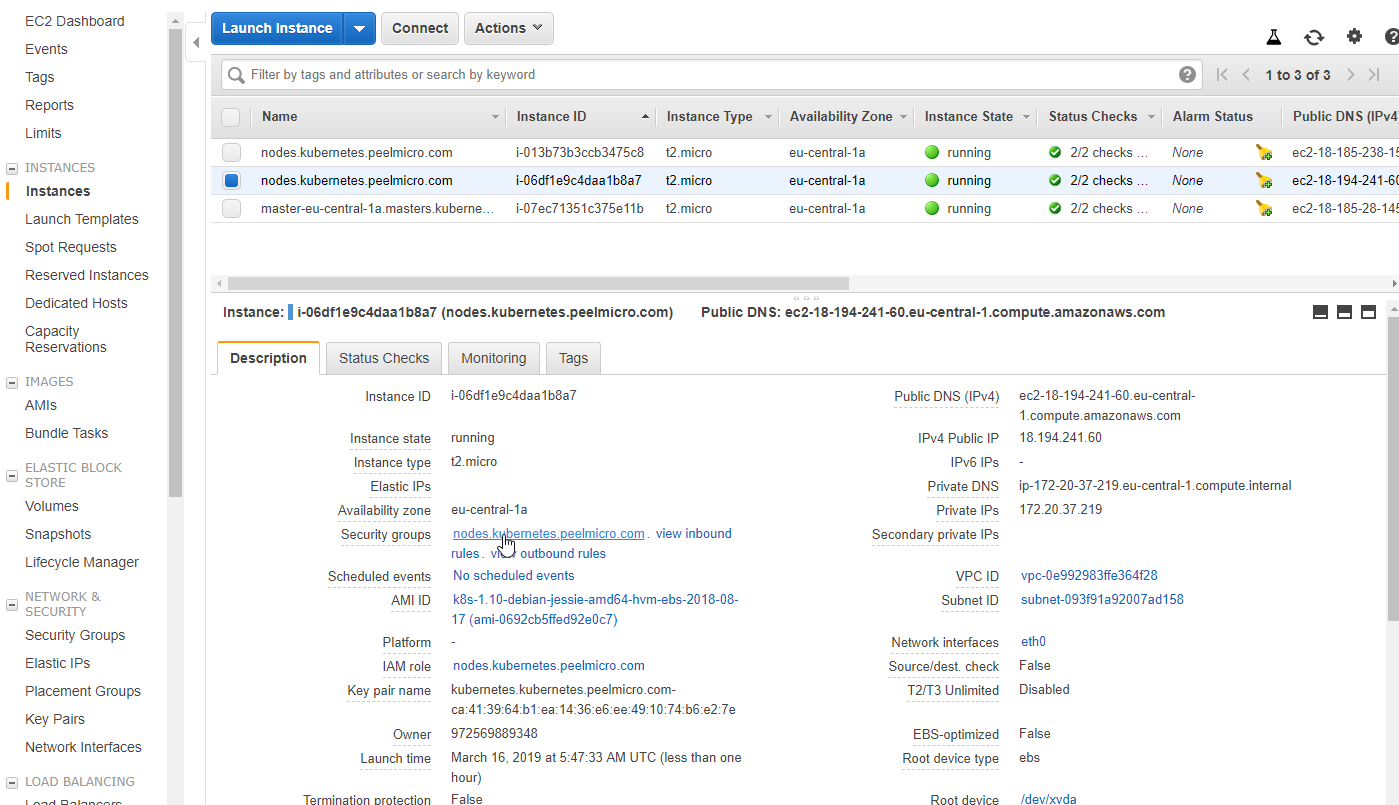

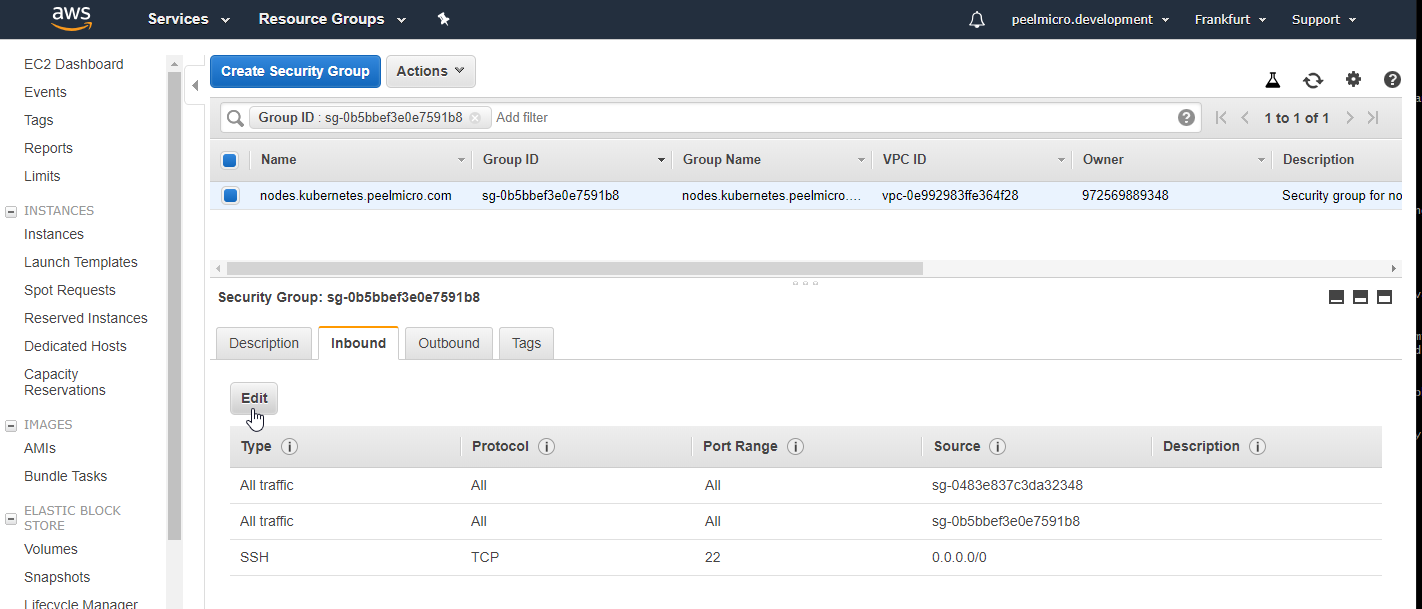
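The preview ends with "Must specify --yes to apply changes", and the suggested follow-up commands leave out --state. kops reads the state store location from the `KOPS_STATE_STORE` environment variable when --state is not given. The sketch below shows the order of the remaining steps under that assumption. It only prints the commands instead of running them, and `kops_cmd` is a hypothetical helper introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: prints the kops commands instead of running them,
# so it is safe to execute without kops or AWS credentials.

# Values taken from the transcript above.
CLUSTER_NAME="kubernetes.peelmicro.com"
export KOPS_STATE_STORE="s3://kops.peelmicro.com"  # read by kops when --state is omitted

# Hypothetical helper: print a kops command line for this cluster.
kops_cmd() {
  echo "kops $* $CLUSTER_NAME"
}

# 1. Optionally review or change the saved configuration (opens an editor).
kops_cmd edit cluster
# 2. Preview the changes again; without --yes nothing is applied.
kops_cmd update cluster
# 3. Apply the changes and create the AWS resources.
echo "$(kops_cmd update cluster) --yes"
```

Exporting the variable once per shell session avoids repeating --state=s3://kops.peelmicro.com on every command.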

- To edit the cluster configuration before applying it, run the following command:

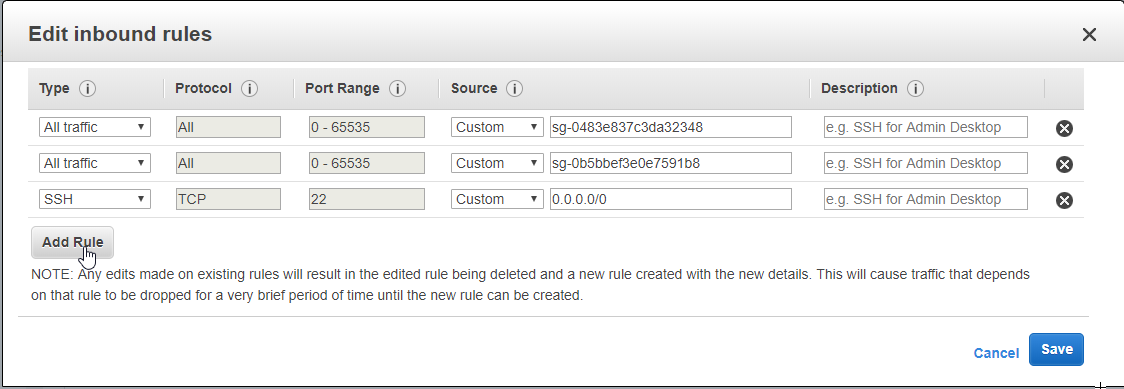

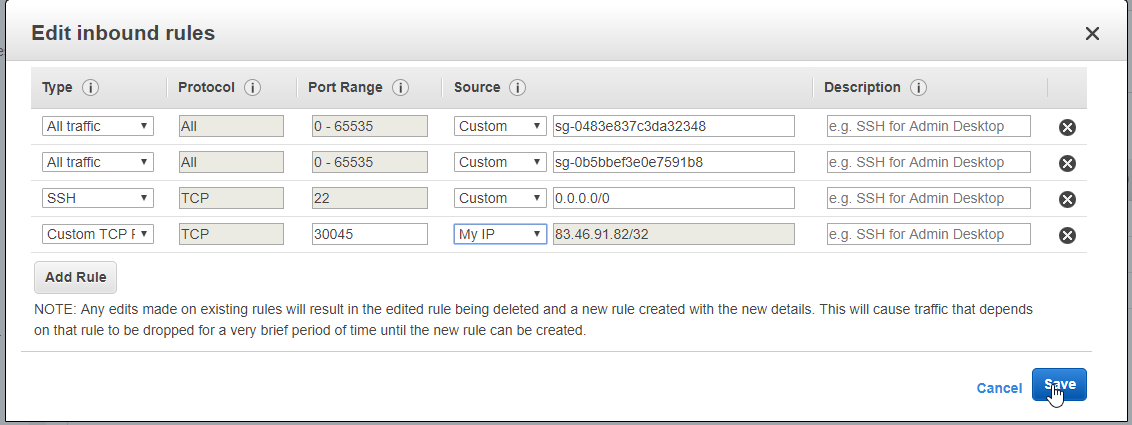

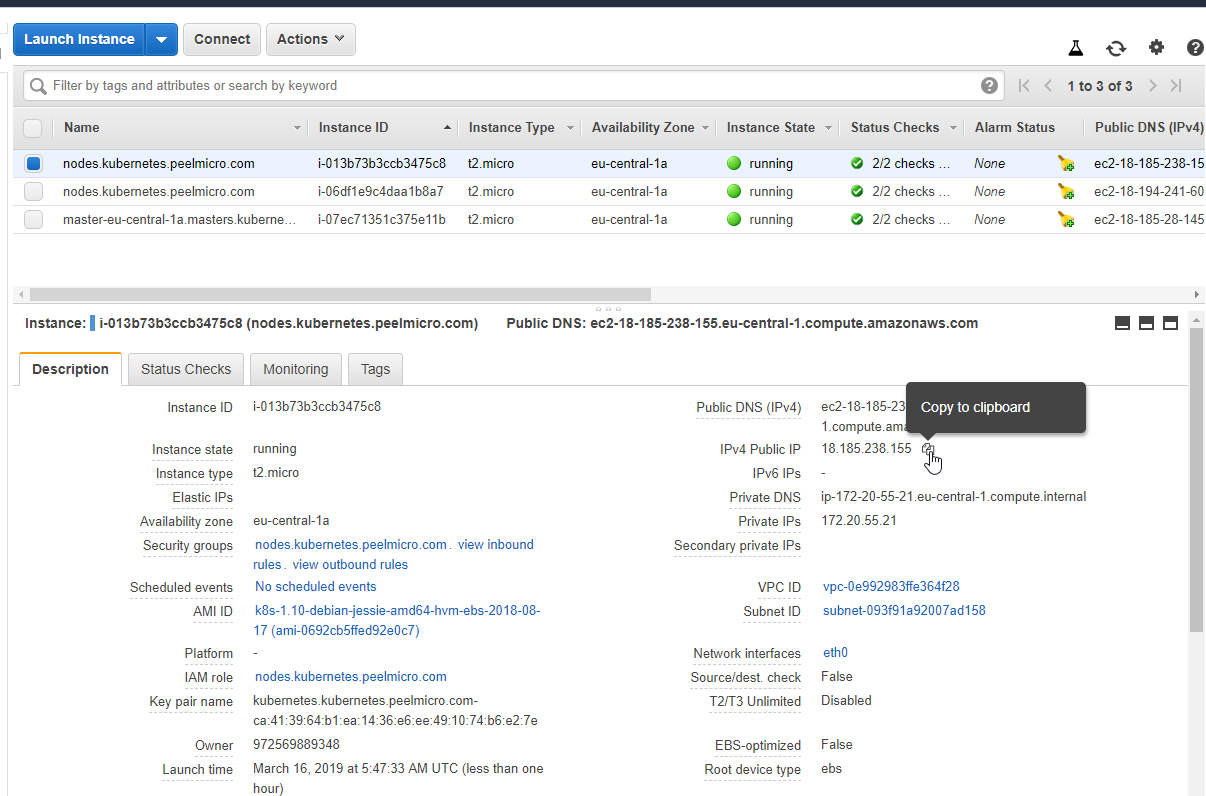

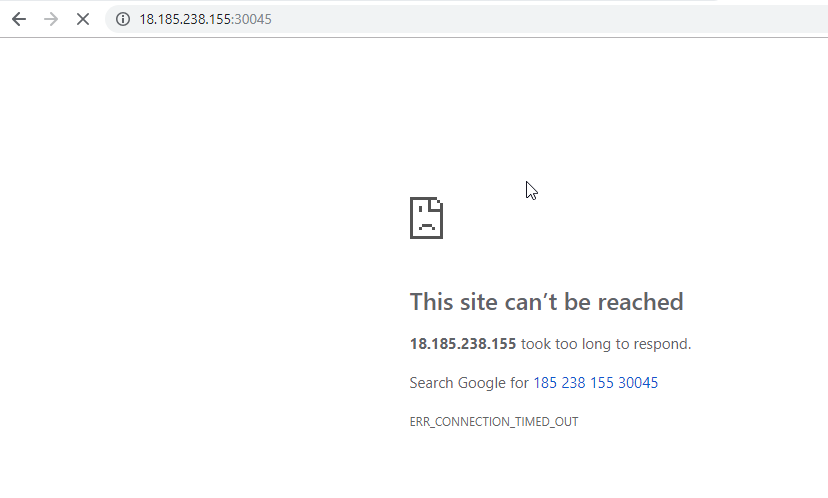

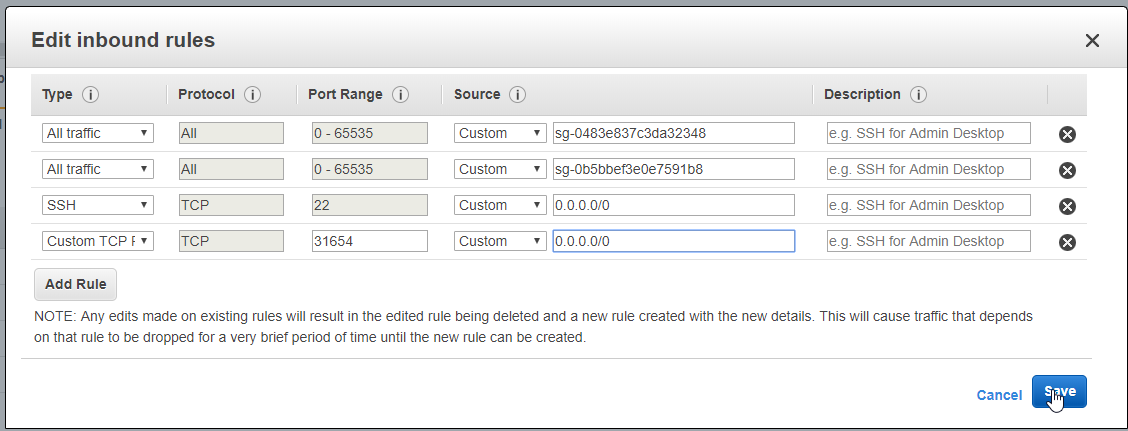

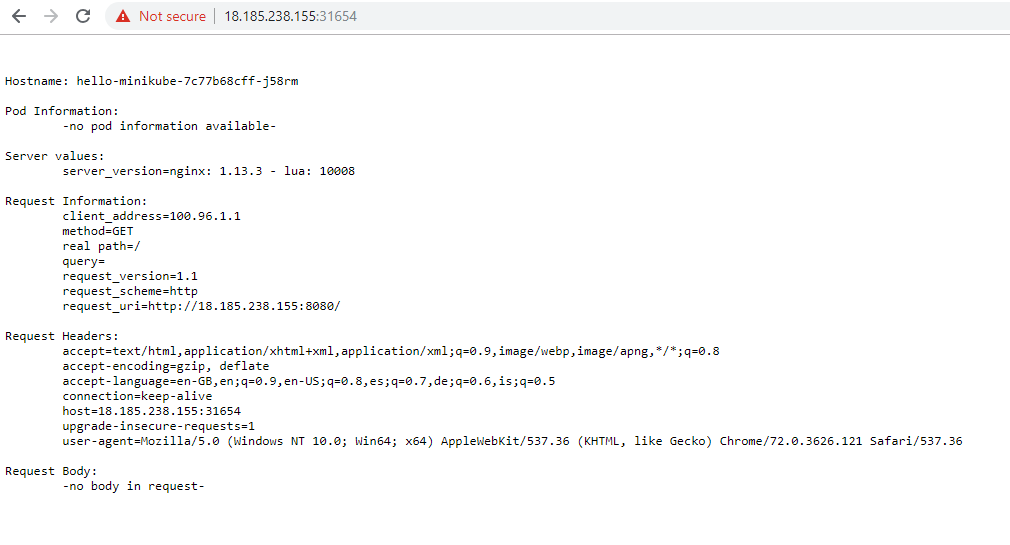

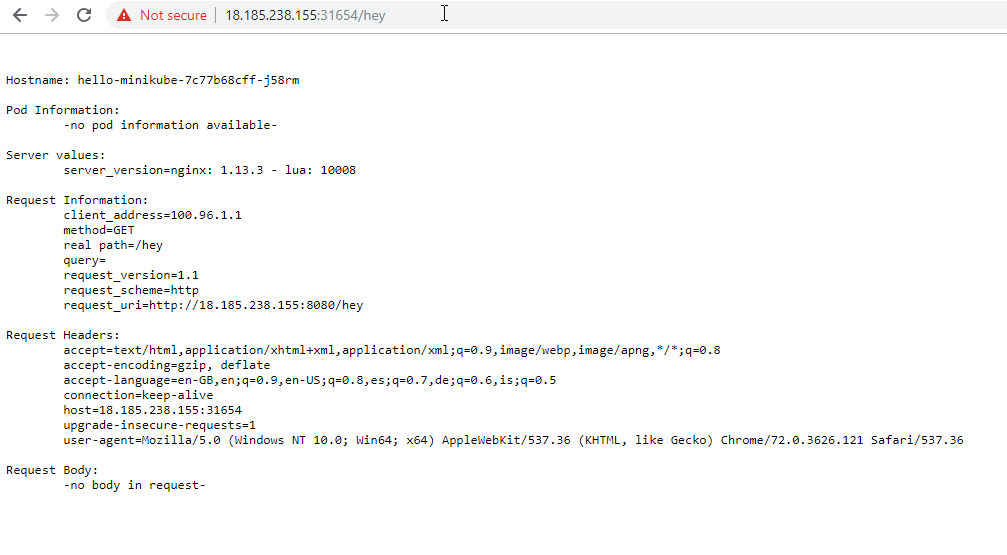

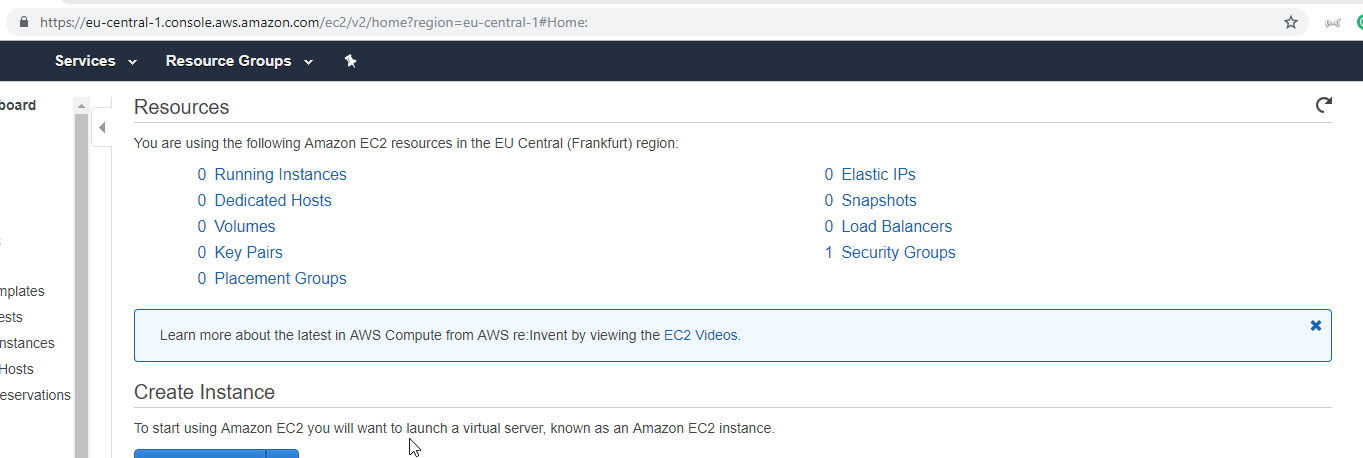

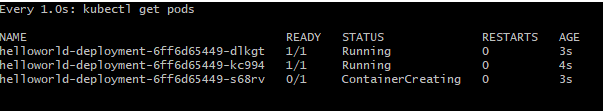

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops edit cluster kubernetes.peelmicro.com --state=s3://kops.peelmicro.com