Docker and Kubernetes: The Complete Guide

GitHub Repositories

The Docker and Kubernetes: The Complete Guide Udemy course explains how to build, test, and deploy Docker applications with Kubernetes while learning production-style development workflows.

Related projects

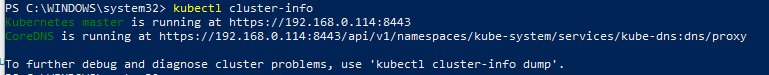

| Project | Dates | Source Code |
|---|---|---|
| .NET Core version of the "Docker and Kubernetes: The Complete Guide" Udemy course | Nov 2018 - Dec 2018 | dotnet-core-multi-docker |
| Java version of the "Docker and Kubernetes: The Complete Guide" Udemy course | Nov 2018 - Dec 2018 | java-multi-docker |
| Python version of the "Docker and Kubernetes: The Complete Guide" Udemy course | Nov 2018 - Dec 2018 | python-multi-docker |

What I've learned

- Docker from scratch, no previous experience required

- Master the Docker CLI to inspect and debug running containers

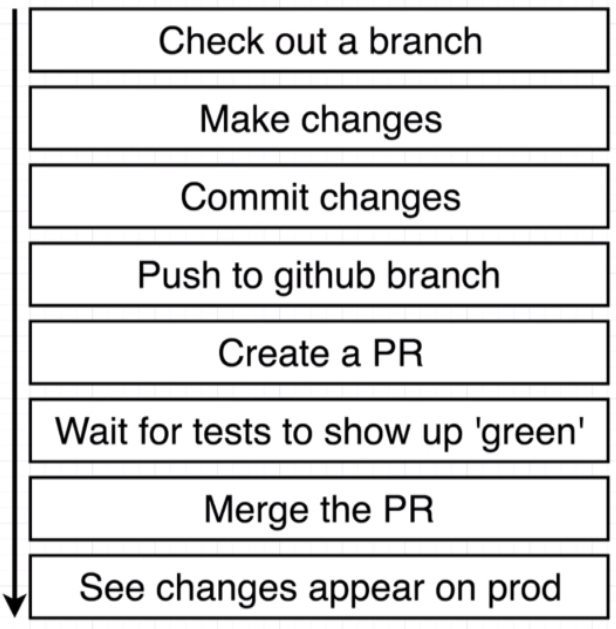

- Build a CI + CD pipeline from scratch with GitHub, Travis CI, and AWS

- Understand the purpose and theory of Kubernetes by building a complex app

- Automatically deploy your code when it is pushed to GitHub!

First steps with Docker

- Check the current version of Docker

C:\WINDOWS\system32>docker version

Client:

Version: 18.06.1-ce

API version: 1.38

Go version: go1.10.3

Git commit: e68fc7a

Built: Tue Aug 21 17:21:34 2018

OS/Arch: windows/amd64

Experimental: false

Server:

Engine:

Version: 18.06.1-ce

API version: 1.38 (minimum version 1.12)

Go version: go1.10.3

Git commit: e68fc7a

Built: Tue Aug 21 17:29:02 2018

OS/Arch: linux/amd64

Experimental: false

- Ensure Docker is working by running the standard `hello-world` Docker image

C:\WINDOWS\system32>docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

d1725b59e92d: Pull complete

Digest: sha256:0add3ace90ecb4adbf7777e9aacf18357296e799f81cabc9fde470971e499788

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

Info

The first time we execute `docker run hello-world`, the `hello-world` image is not in the local image cache, so Docker pulls it from Docker Hub.

- Execute the `hello-world` Docker image a second time

C:\WINDOWS\system32>docker run hello-world

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

Info

The second time we execute `docker run hello-world`, the `hello-world` image is already in the local image cache, so nothing is downloaded.
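The difference between the first and the second run can be sketched as a small model. This is only an illustrative simulation, not how the Docker daemon is implemented; `ImageCache`, `REGISTRY` and `run` are hypothetical names introduced for the example.

```python
# Toy model of the local image cache; all names here are hypothetical.
REGISTRY = {"hello-world:latest": "Hello from Docker!"}  # stands in for Docker Hub


class ImageCache:
    def __init__(self):
        self._local = {}  # image reference -> image contents

    def run(self, image):
        """Return (pulled, output) for a simulated `docker run <image>`."""
        pulled = image not in self._local
        if pulled:
            # Cache miss: fetch the image from the registry and keep a local copy.
            self._local[image] = REGISTRY[image]
        return pulled, self._local[image]


cache = ImageCache()
first = cache.run("hello-world:latest")   # image is pulled, then run
second = cache.run("hello-world:latest")  # image is served from the cache
```

In this sketch `first[0]` is `True` (a pull was needed) and `second[0]` is `False`, which mirrors the missing "Pulling from library/hello-world" lines in the second run.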

- Execute the `run` command with additional information

Command

docker run <image name> command!

<image name> - name of the image to use for this container

command! - default command override

C:\WINDOWS\system32>docker run busybox echo hi there

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

90e01955edcd: Pull complete

Digest: sha256:2a03a6059f21e150ae84b0973863609494aad70f0a80eaeb64bddd8d92465812

Status: Downloaded newer image for busybox:latest

hi there

C:\WINDOWS\system32>docker run busybox echo hi there

hi there

C:\WINDOWS\system32>docker run busybox echo bye there

bye there

- The `busybox` image has these default folders

C:\WINDOWS\system32>docker run busybox ls

bin

dev

etc

home

proc

root

sys

tmp

usr

var

BusyBox: The Swiss Army Knife of Embedded Linux

BusyBox combines tiny versions of many common UNIX utilities into a single small executable. It provides replacements for most of the utilities you usually find in GNU fileutils, shellutils, etc.

The utilities in BusyBox generally have fewer options than their full-featured GNU cousins; however, the options that are included provide the expected functionality and behave very much like their GNU counterparts.

BusyBox provides a fairly complete environment for any small or embedded system.

BusyBox has been written with size-optimization and limited resources in mind. It is also extremely modular so you can easily include or exclude commands (or features) at compile time. This makes it easy to customize your embedded systems. To create a working system, just add some device nodes in /dev, a few configuration files in /etc, and a Linux kernel.

BusyBox is maintained by Denys Vlasenko, and licensed under the GNU GENERAL PUBLIC LICENSE version 2.

C:\WINDOWS\system32>docker run busybox pwd

/

C:\WINDOWS\system32>docker run hello-world ls

docker: Error response from daemon: OCI runtime create failed: container_linux.go:348: starting container process caused "exec: \"ls\": executable file not found in $PATH": unknown.

C:\WINDOWS\system32>docker run hello-world echo hi there

docker: Error response from daemon: OCI runtime create failed: container_linux.go:348: starting container process caused "exec: \"echo\": executable file not found in $PATH": unknown.
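The two errors above occur because the override command has to exist inside the image's own file system, and `hello-world` ships only its `/hello` binary. A minimal sketch of that lookup, assuming a simplified and hypothetical `IMAGES` table (the real list of BusyBox applets is much longer):

```python
# Hypothetical, simplified view of which executables each image ships.
IMAGES = {
    "busybox": {"default": ["sh"], "executables": {"sh", "ls", "echo", "pwd", "ping"}},
    "hello-world": {"default": ["/hello"], "executables": {"/hello"}},
}


def resolve_command(image, override=None):
    """Pick the command a container would start with, or raise if it is missing."""
    spec = IMAGES[image]
    # An override replaces the image's default command entirely.
    command = list(override) if override else list(spec["default"])
    if command[0] not in spec["executables"]:
        raise FileNotFoundError(
            f"exec: {command[0]!r}: executable file not found in $PATH"
        )
    return command
```

With this model, `resolve_command("busybox", ["echo", "hi", "there"])` succeeds, while `resolve_command("hello-world", ["ls"])` raises, just like the real commands.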

- The `ps` command

Command

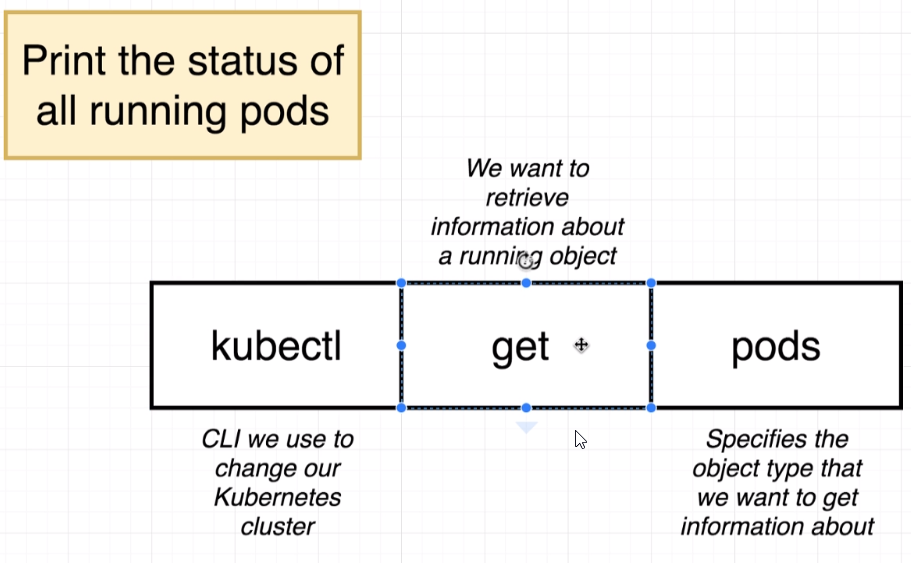

docker ps

Show all the running docker containers

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

- When we executed `docker run hello-world` or `docker run busybox ls`, the containers just ran their command and then stopped, so they are not listed

C:\WINDOWS\system32>docker run busybox ping google.com

PING google.com (216.58.214.174): 56 data bytes

64 bytes from 216.58.214.174: seq=0 ttl=37 time=42.866 ms

64 bytes from 216.58.214.174: seq=1 ttl=37 time=17.430 ms

64 bytes from 216.58.214.174: seq=2 ttl=37 time=106.816 ms

64 bytes from 216.58.214.174: seq=3 ttl=37 time=15.636 ms

Execute this in another command window

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

539c07bb9ba1 busybox "ping google.com" 26 seconds ago Up 25 seconds brave_montalcini

Show all the containers ever created

C:\WINDOWS\system32>docker ps --all

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

539c07bb9ba1 busybox "ping google.com" 3 minutes ago Up 3 minutes brave_montalcini

93f37c435c75 hello-world "ping google.com" 3 minutes ago Created dazzling_lalande

0dcaa0fa4c5d hello-world "echo hi there" 10 minutes ago Created condescending_borg

61d50ae7440b hello-world "ls" 10 minutes ago Created unruffled_keller

688787c6cae1 busybox "pwd" 12 minutes ago Exited (0) 12 minutes ago epic_yalow

91caad0cc3c5 busybox "pwa" 12 minutes ago Created dreamy_tesla

3fabe6651653 busybox "ls" 14 minutes ago Exited (0) 14 minutes ago laughing_lamarr

8a7fe5df5622 busybox "echo hi there" 16 minutes ago Exited (0) 16 minutes ago xenodochial_wescoff

6822eb029994 busybox "echo hi there" 16 minutes ago Exited (0) 16 minutes ago vigilant_bhaskara

fdc4c936f5b4 hello-world "/hello" 45 minutes ago Exited (0) 45 minutes ago optimistic_murdock

86bd4b57b368 hello-world "/hello" About an hour ago Exited (0) About an hour ago vibrant_shtern
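The STATUS column in the listing above takes three forms: `Up ...` for running containers, `Exited (<code>) ...` for finished ones, and `Created` for containers that never started (for example `hello-world` with `ls`). A small sketch that classifies those strings; `container_state` is a hypothetical helper, not part of any Docker tooling:

```python
import re


def container_state(status):
    """Map a `docker ps` STATUS string to a (state, exit_code) pair."""
    if status.startswith("Up"):
        return "running", None
    match = re.match(r"Exited \((\d+)\)", status)
    if match:
        # The number in parentheses is the exit code of the container's process.
        return "exited", int(match.group(1))
    if status.startswith("Created"):
        return "created", None
    return "unknown", None
```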

- The Docker `run`, `create` and `start` commands

Command

docker run = docker create + docker start

docker create: docker create [image name]

docker start: docker start [container id]

C:\WINDOWS\system32>docker create hello-world

f4b5c1e7846623378ec54a684f26f358ee2df2ee735381cf2cf68b1e3d52b9ee

C:\WINDOWS\system32>docker start -a f4b5c1e7846623378ec54a684f26f358ee2df2ee735381cf2cf68b1e3d52b9ee

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

C:\WINDOWS\system32>docker start f4b5c1e7846623378ec54a684f26f358ee2df2ee735381cf2cf68b1e3d52b9ee

f4b5c1e7846623378ec54a684f26f358ee2df2ee735381cf2cf68b1e3d52b9ee

The `-a` parameter attaches to the container so that its output is printed in the terminal. Without it, `docker start` only prints the container ID.

C:\WINDOWS\system32>docker ps --all

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f4b5c1e78466 hello-world "/hello" 3 minutes ago Exited (0) About a minute ago distracted_keller

539c07bb9ba1 busybox "ping google.com" 14 minutes ago Up 14 minutes brave_montalcini

93f37c435c75 hello-world "ping google.com" 14 minutes ago Created

This container id belongs to the one executed and exited some time ago

3fabe6651653 busybox "ls" 14 minutes ago Exited (0) 14 minutes ago laughing_lamarr

C:\WINDOWS\system32>docker start -a 3fabe6651653

bin

dev

etc

home

proc

root

sys

tmp

usr

var

C:\WINDOWS\system32>docker start -a 3fabe6651653 ls

you cannot start and attach multiple containers at once

- The Docker `logs` command

Command

docker logs [container id]

Get logs from a container

C:\WINDOWS\system32>docker logs 539c07bb9ba1

PING google.com (216.58.214.174): 56 data bytes

64 bytes from 216.58.214.174: seq=0 ttl=37 time=42.866 ms

64 bytes from 216.58.214.174: seq=1 ttl=37 time=17.430 ms

64 bytes from 216.58.214.174: seq=2 ttl=37 time=106.816 ms

64 bytes from 216.58.214.174: seq=3 ttl=37 time=15.636 ms

64 bytes from 216.58.214.174: seq=4 ttl=37 time=16.969 ms

64 bytes from 216.58.214.174: seq=5 ttl=37 time=16.502 ms

64 bytes from 216.58.214.174: seq=6 ttl=37 time=22.690 ms

64 bytes from 216.58.214.174: seq=7 ttl=37 time=26.402 ms

C:\WINDOWS\system32>docker create busybox echo hi there

e22ff84a283dc9a695acdfe775904d63c44a59be8bb83ae4f5b865313e07270c

C:\WINDOWS\system32>docker start e22ff84a283dc9a695acdfe775904d63c44a59be8bb83ae4f5b865313e07270c

e22ff84a283dc9a695acdfe775904d63c44a59be8bb83ae4f5b865313e07270c

C:\WINDOWS\system32>docker logs e22ff84a283dc9a695acdfe775904d63c44a59be8bb83ae4f5b865313e07270c

hi there

Info

`docker logs` never makes the container run again; it only retrieves the output the container has already produced.
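The relationship between `create`, `start` and `logs` can be illustrated with a toy model. It is only a sketch under simplifying assumptions: the `Container` class and the `run` helper are hypothetical, and the "program" is a plain Python callable standing in for the image's command.

```python
class Container:
    """Toy container: `program` is a callable standing in for the image's command."""

    def __init__(self, program):
        self._program = program
        self.state = "created"
        self.runs = 0
        self._log = []

    def start(self):
        self.state = "running"
        self.runs += 1
        self._log.append(self._program())  # output is captured as it is produced
        self.state = "exited"

    def logs(self):
        # Reading the log only returns what was captured; nothing is re-run.
        return list(self._log)


def run(program):
    """`docker run` modelled as create followed by start."""
    container = Container(program)  # docker create
    container.start()               # docker start
    return container
```

Calling `logs()` any number of times leaves `runs` unchanged, which is the point made above: the logs are read, the container is not executed again.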

- The Docker `stop` command

Command

docker stop [container id]

Stop a container

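It sends SIGTERM so the process can do some cleanup before exiting, which makes it the safer option. If the process has not exited after a grace period (10 seconds by default), Docker sends SIGKILL.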

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

539c07bb9ba1 busybox "ping google.com" 34 minutes ago Up 34 minutes brave_montalcini

C:\WINDOWS\system32>docker stop 539c07bb9ba1

539c07bb9ba1

It takes a while

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

- The `kill` command

Command

docker kill [container id]

Kills a container

It has the same effect as `stop`, but it sends SIGKILL, so the container is terminated immediately with no chance to clean up

C:\WINDOWS\system32>docker start 539c07bb9ba1

539c07bb9ba1

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

539c07bb9ba1 busybox "ping google.com" 42 minutes ago Up 2 seconds brave_montalcini

C:\WINDOWS\system32>docker kill 539c07bb9ba1

539c07bb9ba1

It is executed immediately

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Working with redis

- Execute redis installed on the Operating System

C:\WINDOWS\system32>redis-server

[16152] 01 Nov 17:05:47.520 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

[16152] 01 Nov 17:05:47.527 # Creating Server TCP listening socket *:6379: bind: No such file or directory

C:\WINDOWS\system32>redis-cli

127.0.0.1:6379> set mynumber 5

OK

127.0.0.1:6379> get mynumber

"5"

127.0.0.1:6379>

C:\WINDOWS\system32>redis-cli shutdown

This shuts down Redis

- Execute `redis` through Docker

C:\WINDOWS\system32>docker run redis

Unable to find image 'redis:latest' locally

latest: Pulling from library/redis

f17d81b4b692: Pull complete

b32474098757: Pull complete

8980cabe8bc2: Pull complete

2719bdbf9516: Pull complete

f306130d78e3: Pull complete

3e09204f8155: Pull complete

Digest: sha256:481678b4b5ea1cb4e8d38ed6677b2da9b9e057cf7e1b6c988ba96651c6f6eff3

Status: Downloaded newer image for redis:latest

1:C 01 Nov 2018 17:17:58.832 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 01 Nov 2018 17:17:58.832 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

1:C 01 Nov 2018 17:17:58.832 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 01 Nov 2018 17:17:58.834 * Running mode=standalone, port=6379.

1:M 01 Nov 2018 17:17:58.834 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 01 Nov 2018 17:17:58.834 # Server initialized

1:M 01 Nov 2018 17:17:58.834 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 01 Nov 2018 17:17:58.834 * Ready to accept connections

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f949774360a8 redis "docker-entrypoint.s…" 4 minutes ago Up 4 minutes 6379/tcp eloquent_goodall

- The Docker `exec` command

Command

docker exec -it [container id] [command]

exec = run another command

-it = allows us to provide input to the container

container id = ID of the container

command = command to execute

-it = -i -t

-i = attaches our terminal to the STDIN of the process

-t = allocates a pseudo-terminal, so the interaction with the terminal is nicely formatted

C:\WINDOWS\system32>docker exec -it f949774360a8 redis-cli

127.0.0.1:6379> set myvalue 5

OK

127.0.0.1:6379> get myvalue

"5"

127.0.0.1:6379>

C:\WINDOWS\system32>docker exec f949774360a8 redis-cli

If we only pass `-i`, the interaction is not as nice as with `-it`

C:\WINDOWS\system32>docker exec -i f949774360a8 redis-cli

set myvalue 5

OK

get myvalue

5

Use `sh` to get full terminal access to the container

C:\WINDOWS\system32>docker exec -it f949774360a8 sh

# cd ~/

# ls

# cd /

# ls

bin boot data dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

# echo hi there

hi there

# export b=5

# echo $b

5

# redis-cli

127.0.0.1:6379>

#

# CTRL-D to exit

Some containers also allow `bash` in addition to `sh`

C:\WINDOWS\system32>docker run -it busybox sh

/ # ls

bin dev etc home proc root sys tmp usr var

/ # ping google.com

PING google.com (172.217.16.238): 56 data bytes

64 bytes from 172.217.16.238: seq=0 ttl=37 time=27.981 ms

64 bytes from 172.217.16.238: seq=1 ttl=37 time=16.725 ms

64 bytes from 172.217.16.238: seq=2 ttl=37 time=16.971 ms

^C

--- google.com ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 16.725/20.559/27.981 ms

/ #

Each container is isolated from the others

- Run the same command in two different terminals

C:\WINDOWS\system32>docker run -it busybox sh

/ # ls

bin dev etc home proc root sys tmp usr var

/ # touch hithere

C:\WINDOWS\system32>docker run -it busybox sh

/ # ls

bin dev etc home proc root sys tmp usr var

/ #

=> Open in another terminal

C:\WINDOWS\system32>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

261f3e65f035 busybox "sh" 16 seconds ago Up 15 seconds nervous_lamport

3a4c383ab4e1 busybox "sh" 59 seconds ago Up 58 seconds objective_curran

f949774360a8 redis "docker-entrypoint.s…" 32 minutes ago Up 32 minutes 6379/tcp eloquent_goodall

- Create a file in the first container

/ # ls

bin dev etc home proc root sys tmp usr var

/ # touch hithere

/ # ls

bin dev etc hithere home proc root sys tmp usr var

/ #

- Execute `ls` in the second container: the file is not there

/ # ls

bin dev etc home proc root sys tmp usr var

/ #
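The experiment above can be summarised in a short model: both containers start from the same image contents, but each one writes to its own private file system. This is a sketch only; real Docker uses a copy-on-write layer per container rather than a full copy, and `BUSYBOX_ROOT` and `Container` are hypothetical names.

```python
# Root folders of the busybox image, as listed by `ls` above.
BUSYBOX_ROOT = frozenset(
    {"bin", "dev", "etc", "home", "proc", "root", "sys", "tmp", "usr", "var"}
)


class Container:
    def __init__(self, image_files):
        # Each container gets its own writable view of the read-only image.
        self._files = set(image_files)

    def touch(self, name):
        self._files.add(name)

    def ls(self):
        return sorted(self._files)


first = Container(BUSYBOX_ROOT)
second = Container(BUSYBOX_ROOT)
first.touch("hithere")  # only the first container sees this file
```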

Create Docker Images

- Create a `Dockerfile`.

I) It is used to define how our container should behave.

II) We need to specify a base image.

III) We need to run some commands to install additional programs.

IV) We need to specify the command to run on container startup.

Dockerfile

# Use an existing docker image as a base

FROM alpine

# Download and install a dependency

RUN apk add --update redis

# Tell the image what to do when it starts as a container

CMD ["redis-server"]
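The three ingredients of this Dockerfile (base image, install commands, startup command) map directly onto the `FROM`, `RUN` and `CMD` instructions. As a hedged illustration, a hypothetical helper `render_dockerfile` can generate the same file from those three inputs:

```python
import json


def render_dockerfile(base_image, run_commands, startup_command):
    """Build Dockerfile text from a base image, install commands and a startup command."""
    lines = [f"FROM {base_image}"]
    lines += [f"RUN {command}" for command in run_commands]
    # The exec form of CMD is a JSON array, so json.dumps produces valid syntax.
    lines.append(f"CMD {json.dumps(startup_command)}")
    return "\n".join(lines) + "\n"


dockerfile = render_dockerfile("alpine", ["apk add --update redis"], ["redis-server"])
```

The resulting text matches the Dockerfile above, minus the comments.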

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker build .

Sending build context to Docker daemon 2.048kB

Step 1/3 : FROM alpine

latest: Pulling from library/alpine

4fe2ade4980c: Pull complete

Digest: sha256:621c2f39f8133acb8e64023a94dbdf0d5ca81896102b9e57c0dc184cadaf5528

Status: Downloaded newer image for alpine:latest

---> 196d12cf6ab1

Step 2/3 : RUN apk add --update redis

---> Running in 21a0eabbb20c

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/main/x86_64/APKINDEX.tar.gz

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/community/x86_64/APKINDEX.tar.gz

(1/1) Installing redis (4.0.11-r0)

Executing redis-4.0.11-r0.pre-install

Executing redis-4.0.11-r0.post-install

Executing busybox-1.28.4-r1.trigger

OK: 6 MiB in 14 packages

Removing intermediate container 21a0eabbb20c

---> 6f4006beaf6b

Step 3/3 : CMD ["redis-server"]

---> Running in 55933d5c7b05

Removing intermediate container 55933d5c7b05

---> 8ade2b72740d

Successfully built 8ade2b72740d

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker run 8ade2b72740d

1:C 01 Nov 18:08:53.521 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 01 Nov 18:08:53.521 # Redis version=4.0.11, bits=64, commit=bca38d14, modified=0, pid=1, just started

1:C 01 Nov 18:08:53.521 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 01 Nov 18:08:53.522 * Running mode=standalone, port=6379.

1:M 01 Nov 18:08:53.522 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 01 Nov 18:08:53.522 # Server initialized

1:M 01 Nov 18:08:53.522 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 01 Nov 18:08:53.523 * Ready to accept connections

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9e62c378abbb 8ade2b72740d "redis-server" 36 seconds ago Up 34 seconds gracious_shannon

261f3e65f035 busybox "sh" 19 minutes ago Up 19 minutes nervous_lamport

3a4c383ab4e1 busybox "sh" 20 minutes ago Up 20 minutes objective_curran

f949774360a8 redis "docker-entrypoint.s…" About an hour ago Up About an hour 6379/tcp eloquent_goodall

- Explanation of the Dockerfile

FROM alpine

- Image used as a base

- We've used alpine because it is a very small image that ships with the `apk` package manager, which we use to install redis

RUN apk add --update redis

- Execute commands inside our custom image

- apk is the package manager used by alpine

- `--update` refreshes the package index before installing redis

CMD ["redis-server"]

- What should be executed when it starts as a container

Dockerfile

# Use an existing docker image as a base

FROM alpine

# Download and install a dependency

RUN apk add --update redis

RUN apk add --update gcc

# Tell the image what to do when it starts as a container

CMD ["redis-server"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker build .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM alpine

---> 196d12cf6ab1

Step 2/4 : RUN apk add --update redis

---> Using cache

---> 6f4006beaf6b

Step 3/4 : RUN apk add --update gcc

---> Running in 4cdfb1cbbd93

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/main/x86_64/APKINDEX.tar.gz

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/community/x86_64/APKINDEX.tar.gz

(1/11) Installing binutils (2.30-r5)

(2/11) Installing gmp (6.1.2-r1)

(3/11) Installing isl (0.18-r0)

(4/11) Installing libgomp (6.4.0-r9)

(5/11) Installing libatomic (6.4.0-r9)

(6/11) Installing pkgconf (1.5.3-r0)

(7/11) Installing libgcc (6.4.0-r9)

(8/11) Installing mpfr3 (3.1.5-r1)

(9/11) Installing mpc1 (1.0.3-r1)

(10/11) Installing libstdc++ (6.4.0-r9)

(11/11) Installing gcc (6.4.0-r9)

Executing busybox-1.28.4-r1.trigger

OK: 90 MiB in 25 packages

Removing intermediate container 4cdfb1cbbd93

---> 41ed7952af36

Step 4/4 : CMD ["redis-server"]

---> Running in 5141e8d05a56

Removing intermediate container 5141e8d05a56

---> cf895461d982

Successfully built cf895461d982

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

- Step 2 reuses the layer created by the previous build because nothing changed up to that point: `Using cache`

- The new `RUN apk add --update gcc` step has no cached layer, so it is executed in a temporary intermediate container (`Running in 4cdfb1cbbd93`) that is removed afterwards

- If we run the command again, it will use everything from the cache

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker build .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM alpine

---> 196d12cf6ab1

Step 2/4 : RUN apk add --update redis

---> Using cache

---> 6f4006beaf6b

Step 3/4 : RUN apk add --update gcc

---> Using cache

---> 41ed7952af36

Step 4/4 : CMD ["redis-server"]

---> Using cache

---> cf895461d982

Successfully built cf895461d982

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

The order of the RUN commands has been changed

# Use an existing docker image as a base

FROM alpine

# Download and install a dependency

RUN apk add --update gcc

RUN apk add --update redis

# Tell the image what to do when it starts as a container

CMD ["redis-server"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker build .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM alpine

---> 196d12cf6ab1

Step 2/4 : RUN apk add --update gcc

---> Running in f2691b9eebd0

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/main/x86_64/APKINDEX.tar.gz

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/community/x86_64/APKINDEX.tar.gz

(1/11) Installing binutils (2.30-r5)

(2/11) Installing gmp (6.1.2-r1)

(3/11) Installing isl (0.18-r0)

(4/11) Installing libgomp (6.4.0-r9)

(5/11) Installing libatomic (6.4.0-r9)

(6/11) Installing pkgconf (1.5.3-r0)

(7/11) Installing libgcc (6.4.0-r9)

(8/11) Installing mpfr3 (3.1.5-r1)

(9/11) Installing mpc1 (1.0.3-r1)

(10/11) Installing libstdc++ (6.4.0-r9)

(11/11) Installing gcc (6.4.0-r9)

Executing busybox-1.28.4-r1.trigger

OK: 89 MiB in 24 packages

Removing intermediate container f2691b9eebd0

---> d7dfb5b27d69

Step 3/4 : RUN apk add --update redis

---> Running in 1dac349b07d5

(1/1) Installing redis (4.0.11-r0)

Executing redis-4.0.11-r0.pre-install

Executing redis-4.0.11-r0.post-install

Executing busybox-1.28.4-r1.trigger

OK: 90 MiB in 25 packages

Removing intermediate container 1dac349b07d5

---> 3f1ebdfcebf7

Step 4/4 : CMD ["redis-server"]

---> Running in fa0e5627654d

Removing intermediate container fa0e5627654d

---> 5fa87062b1fa

Successfully built 5fa87062b1fa

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

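It cannot use the cached version: the cache is only valid up to the first instruction that changed, so every step after `FROM alpine` is executed again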
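The cache behaviour seen in the four builds above can be reproduced with a small model in which each layer is identified by its parent layer plus the instruction that produced it. This is a simplified sketch, not Docker's actual implementation; `LayerCache` and its layer IDs are hypothetical.

```python
import hashlib


class LayerCache:
    def __init__(self):
        self._layers = {}  # (parent layer id, instruction) -> layer id

    def build(self, base, instructions):
        """Return (final layer id, [(instruction, 'Using cache' | 'Running'), ...])."""
        parent = base
        report = []
        for instruction in instructions:
            key = (parent, instruction)
            hit = key in self._layers
            if not hit:
                # Cache miss: "execute" the step and record the new layer.
                digest = hashlib.sha256(f"{parent}|{instruction}".encode()).hexdigest()
                self._layers[key] = digest[:12]
            parent = self._layers[key]
            report.append((instruction, "Using cache" if hit else "Running"))
        return parent, report
```

Because the key includes the parent layer, swapping two `RUN` lines changes the parent of every later step, so none of them can be served from the cache. That is what the reordered build shows.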

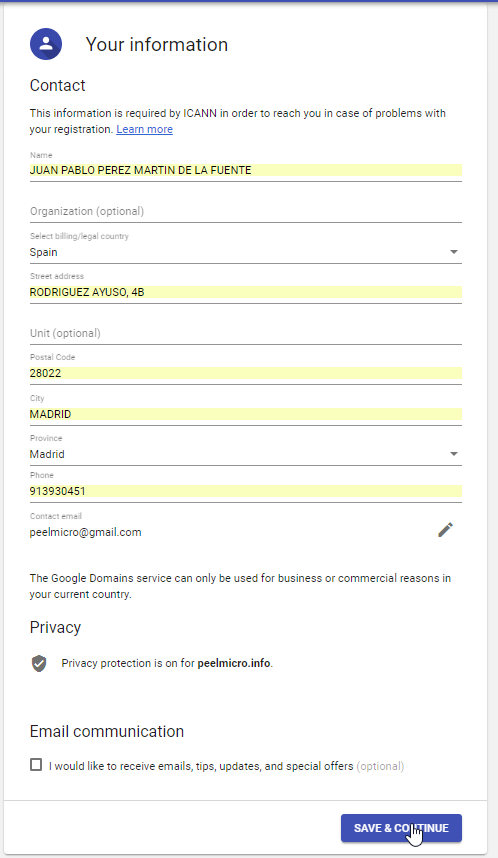

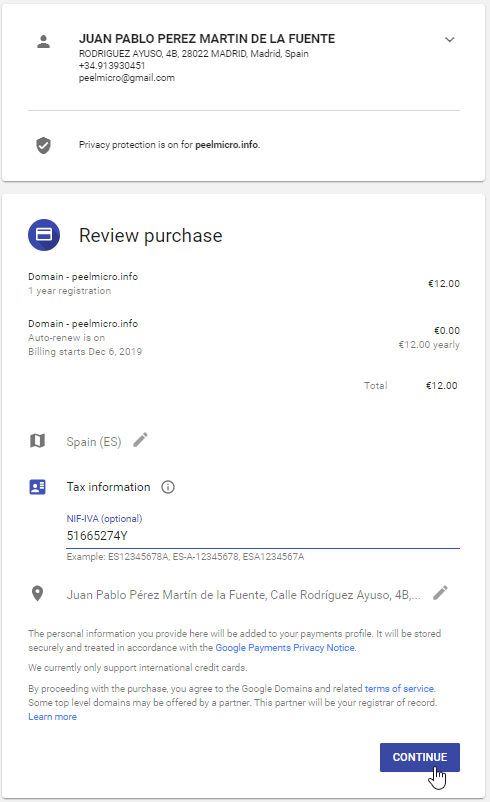

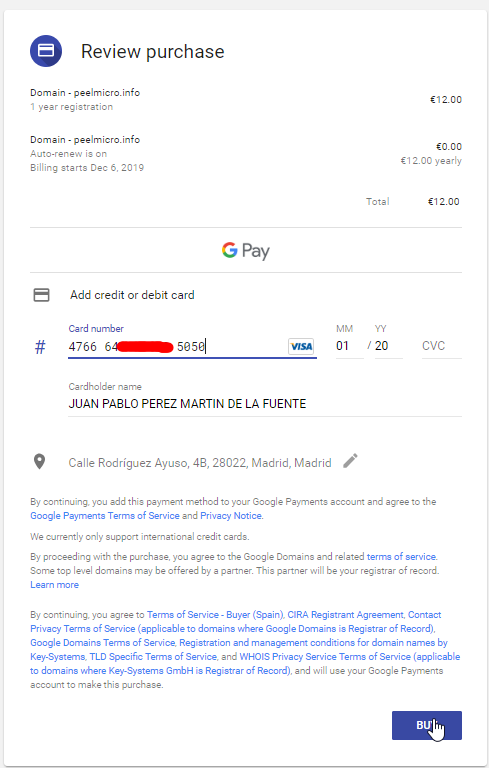

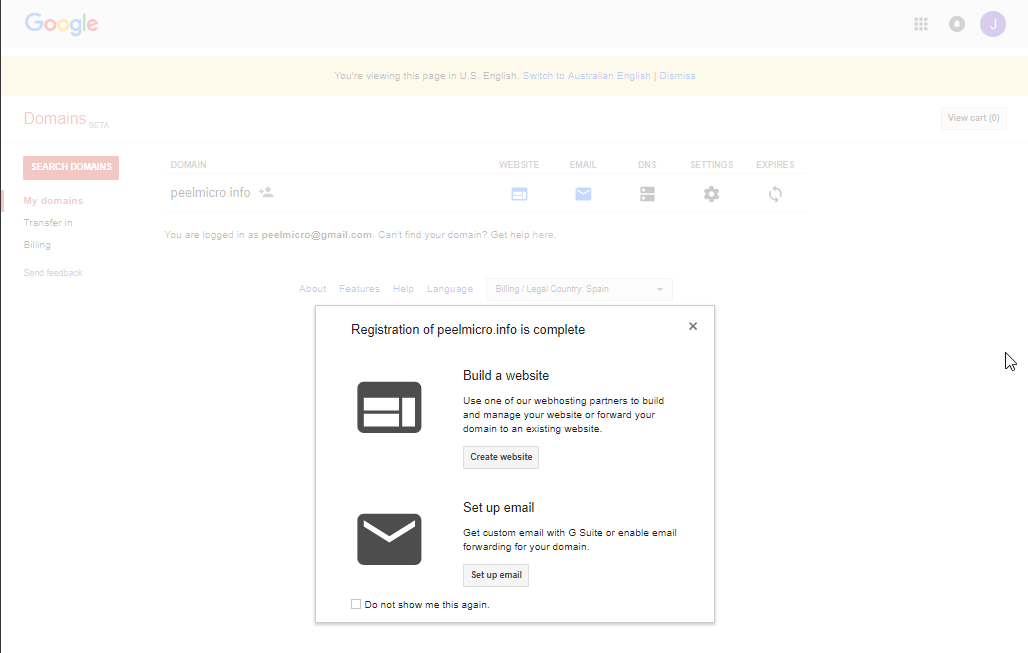

- The `build -t` command

Command

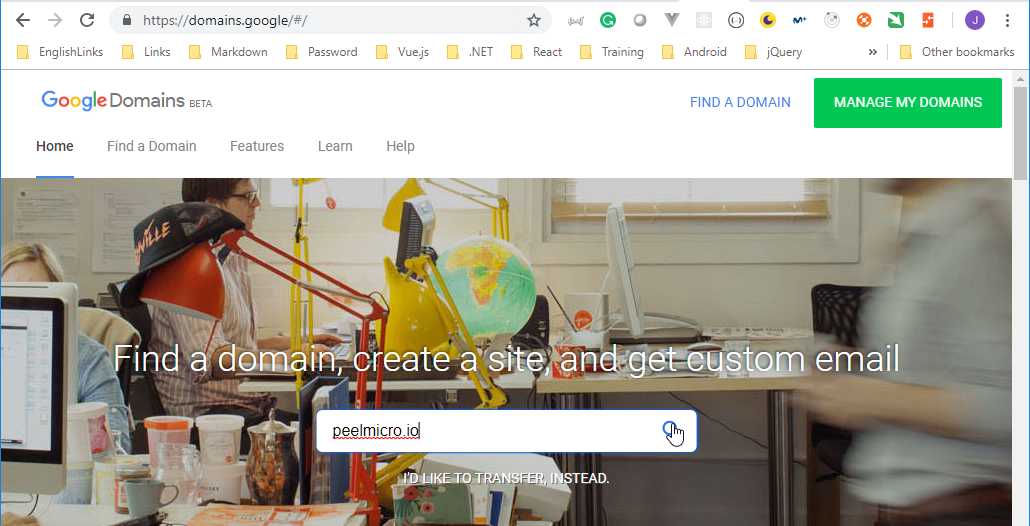

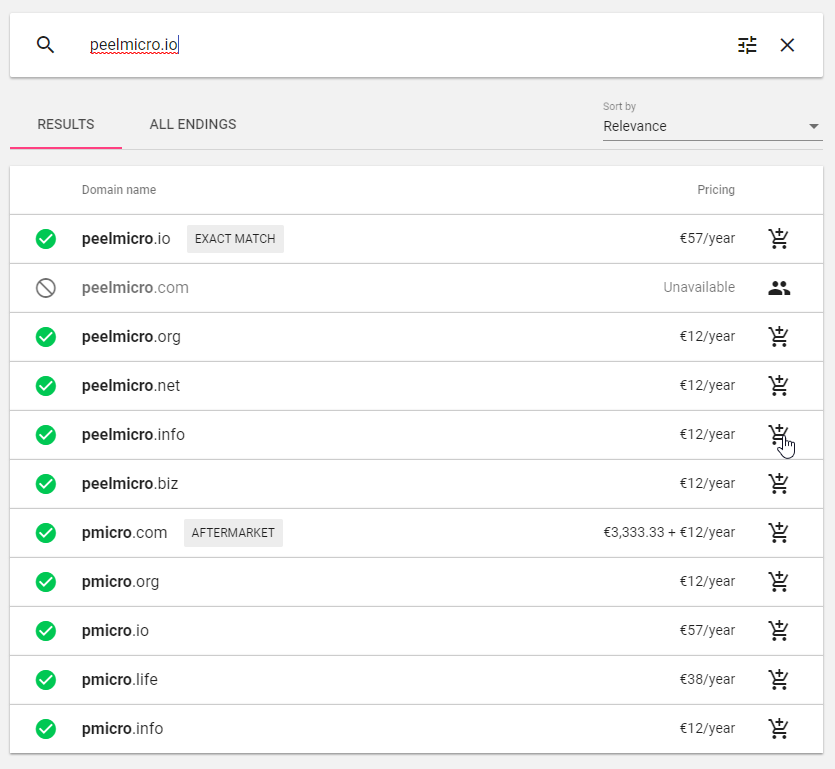

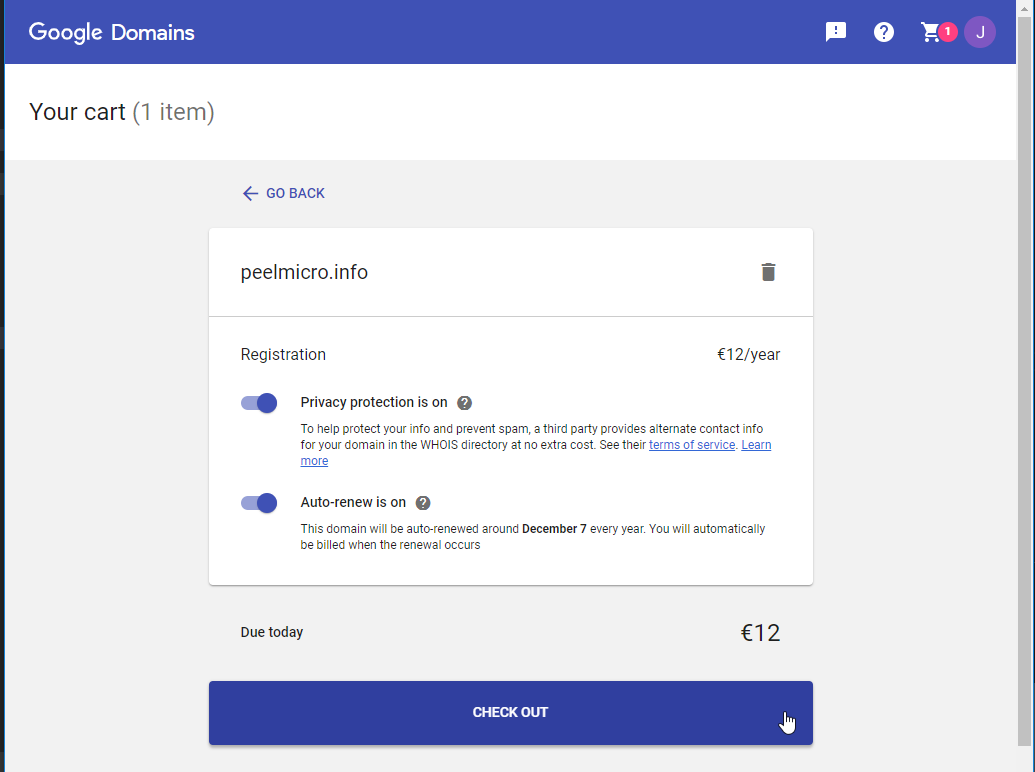

docker build -t peelmicro/redis:latest .

-t = tag the image

peelmicro = your docker id

redis = repo/project name

latest = version
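The naming convention above can be expressed as a tiny parser. This is a simplified sketch: the hypothetical `parse_image_name` only handles the `docker-id/repo:version` shape used here (no registry host or port), and it falls back to `latest` when the version is omitted.

```python
def parse_image_name(name):
    """Split 'docker-id/repo:version' into (docker_id, repo, version)."""
    repo_part, sep, version = name.rpartition(":")
    if not sep:
        # No ':' present, so the whole string is the repository and the tag defaults.
        repo_part, version = name, "latest"
    docker_id, _, repo = repo_part.rpartition("/")
    return (docker_id or None, repo, version)
```

For example, `parse_image_name("peelmicro/redis:latest")` yields `("peelmicro", "redis", "latest")`, and both `redis` and `redis:latest` resolve to the same repository and version.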

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker build -t peelmicro/redis:latest .

Sending build context to Docker daemon 3.072kB

Step 1/4 : FROM alpine

---> 196d12cf6ab1

Step 2/4 : RUN apk add --update gcc

---> Using cache

---> d7dfb5b27d69

Step 3/4 : RUN apk add --update redis

---> Using cache

---> 3f1ebdfcebf7

Step 4/4 : CMD ["redis-server"]

---> Using cache

---> 5fa87062b1fa

Successfully built 5fa87062b1fa

Successfully tagged peelmicro/redis:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker run peelmicro/redis:latest

1:C 01 Nov 19:07:35.509 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 01 Nov 19:07:35.509 # Redis version=4.0.11, bits=64, commit=bca38d14, modified=0, pid=1, just started

1:C 01 Nov 19:07:35.509 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 01 Nov 19:07:35.511 * Running mode=standalone, port=6379.

1:M 01 Nov 19:07:35.511 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 01 Nov 19:07:35.511 # Server initialized

1:M 01 Nov 19:07:35.511 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 01 Nov 19:07:35.511 * Ready to accept connections

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

83db4887b686 peelmicro/redis:latest "redis-server" 16 seconds ago Up 15 seconds heuristic_khorana

9e62c378abbb 8ade2b72740d "redis-server" About an hour ago Up About an hour gracious_shannon

261f3e65f035 busybox "sh" About an hour ago Up About an hour nervous_lamport

3a4c383ab4e1 busybox "sh" About an hour ago Up About an hour objective_curran

f949774360a8 redis "docker-entrypoint.s…" 2 hours ago Up 2 hours 6379/tcp eloquent_goodall

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker run redis

1:C 01 Nov 2018 19:10:57.726 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 01 Nov 2018 19:10:57.726 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

1:C 01 Nov 2018 19:10:57.726 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 01 Nov 2018 19:10:57.728 * Running mode=standalone, port=6379.

1:M 01 Nov 2018 19:10:57.728 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 01 Nov 2018 19:10:57.728 # Server initialized

1:M 01 Nov 2018 19:10:57.728 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 01 Nov 2018 19:10:57.728 * Ready to accept connections

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2bbc2b41ef7a redis "docker-entrypoint.s…" 44 seconds ago Up 42 seconds 6379/tcp silly_turing

83db4887b686 peelmicro/redis:latest "redis-server" 4 minutes ago Up 4 minutes heuristic_khorana

9e62c378abbb 8ade2b72740d "redis-server" About an hour ago Up About an hour gracious_shannon

261f3e65f035 busybox "sh" About an hour ago Up About an hour nervous_lamport

3a4c383ab4e1 busybox "sh" About an hour ago Up About an hour objective_curran

f949774360a8 redis "docker-entrypoint.s…" 2 hours ago Up 2 hours 6379/tcp eloquent_goodall

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker run redis:latest

1:C 01 Nov 2018 19:12:10.672 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 01 Nov 2018 19:12:10.672 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

1:C 01 Nov 2018 19:12:10.672 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 01 Nov 2018 19:12:10.673 * Running mode=standalone, port=6379.

1:M 01 Nov 2018 19:12:10.673 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 01 Nov 2018 19:12:10.674 # Server initialized

1:M 01 Nov 2018 19:12:10.674 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 01 Nov 2018 19:12:10.674 * Ready to accept connections

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8d31733b2855 redis:latest "docker-entrypoint.s…" 17 seconds ago Up 15 seconds 6379/tcp cranky_knuth

2bbc2b41ef7a redis "docker-entrypoint.s…" About a minute ago Up About a minute 6379/tcp silly_turing

83db4887b686 peelmicro/redis:latest "redis-server" 4 minutes ago Up 4 minutes heuristic_khorana

9e62c378abbb 8ade2b72740d "redis-server" About an hour ago Up About an hour gracious_shannon

261f3e65f035 busybox "sh" About an hour ago Up About an hour nervous_lamport

3a4c383ab4e1 busybox "sh" About an hour ago Up About an hour objective_curran

f949774360a8 redis "docker-entrypoint.s…" 2 hours ago Up 2 hours 6379/tcp eloquent_goodall

- Create an `Image` from a `Container`

1st terminal window

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker run -it alpine sh

/ # apk add --update redis

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/main/x86_64/APKINDEX.tar.gz

fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/community/x86_64/APKINDEX.tar.gz

(1/1) Installing redis (4.0.11-r0)

Executing redis-4.0.11-r0.pre-install

Executing redis-4.0.11-r0.post-install

Executing busybox-1.28.4-r1.trigger

OK: 6 MiB in 14 packages

/ #

2nd terminal window

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4f2ee675c090 alpine "sh" About a minute ago Up About a minute admiring_mcclintock

8d31733b2855 redis:latest "docker-entrypoint.s…" 6 minutes ago Up 6 minutes 6379/tcp cranky_knuth

2bbc2b41ef7a redis "docker-entrypoint.s…" 7 minutes ago Up 7 minutes 6379/tcp silly_turing

83db4887b686 peelmicro/redis:latest "redis-server" 11 minutes ago Up 11 minutes heuristic_khorana

9e62c378abbb 8ade2b72740d "redis-server" About an hour ago Up About an hour gracious_shannon

261f3e65f035 busybox "sh" About an hour ago Up About an hour nervous_lamport

3a4c383ab4e1 busybox "sh" About an hour ago Up About an hour objective_curran

f949774360a8 redis "docker-entrypoint.s…" 2 hours ago Up 2 hours 6379/tcp eloquent_goodall

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker commit -c 'CMD ["redis-server"]' 4f2ee675c090

sha256:535591b32510273b59703ec5cf447c66ec9afbe0ce34d5466d6d4464d00b9d1a

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/redis-image

$ docker run 535591b32510273

1:C 01 Nov 19:20:47.535 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 01 Nov 19:20:47.535 # Redis version=4.0.11, bits=64, commit=bca38d14, modified=0, pid=1, just started

1:C 01 Nov 19:20:47.535 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 01 Nov 19:20:47.537 * Running mode=standalone, port=6379.

1:M 01 Nov 19:20:47.537 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 01 Nov 19:20:47.537 # Server initialized

1:M 01 Nov 19:20:47.537 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 01 Nov 19:20:47.537 * Ready to accept connections

Create a simple Node.js App

- Create the `Dockerfile`

Dockerfile

# Specify a base image

FROM alpine

# Install some dependencies

RUN npm install

# Default command

CMD ["npm","run"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build .

Sending build context to Docker daemon 4.096kB

Step 1/3 : FROM alpine

---> 196d12cf6ab1

Step 2/3 : RUN npm install

---> Running in 841c5671d340

/bin/sh: npm: not found

The command '/bin/sh -c npm install' returned a non-zero code: 127

The build fails because the `alpine` base image does not have npm installed.

info

Docker Hub (https://hub.docker.com/) is the website where public images, such as `node`, are available.

- Create a new `Dockerfile`

Dockerfile

# Specify a base image

FROM node:alpine

# Install some dependencies

RUN npm install

# Default command

CMD ["npm","run"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build .

Sending build context to Docker daemon 4.096kB

Step 1/3 : FROM node:alpine

alpine: Pulling from library/node

4fe2ade4980c: Already exists

4fe6fc3d4500: Pull complete

d48fd3011df1: Pull complete

Digest: sha256:9df9329306194c156863c74e97e43b54aee3884940b971e87c5c1db2f82c766a

Status: Downloaded newer image for node:alpine

---> 5d526f8ba00b

Step 2/3 : RUN npm install

---> Running in f0f6345b2d3a

npm WARN saveError ENOENT: no such file or directory, open '/package.json'

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN enoent ENOENT: no such file or directory, open '/package.json'

npm WARN !invalid#2 No description

npm WARN !invalid#2 No repository field.

npm WARN !invalid#2 No README data

npm WARN !invalid#2 No license field.

up to date in 1.231s

found 0 vulnerabilities

Removing intermediate container f0f6345b2d3a

---> 58f6ce2365f6

Step 3/3 : CMD ["npm","run"]

---> Running in d4e01958a4f3

Removing intermediate container d4e01958a4f3

---> cb0b5d05328c

Successfully built cb0b5d05328c

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

- Explanation of `COPY` inside a `Dockerfile`

Dockerfile Command

COPY ./ ./

./ = origin file(s) relative to the build context

./ = target file(s) inside the container

- Create a new `Dockerfile`

Dockerfile

# Specify a base image

FROM node:alpine

# copy the files

COPY ./ ./

# Install some dependencies

RUN npm install

# Default command

CMD ["npm","run"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build .

Sending build context to Docker daemon 4.096kB

Step 1/4 : FROM node:alpine

---> 5d526f8ba00b

Step 2/4 : COPY ./ ./

---> eae571c3ac77

Step 3/4 : RUN npm install

---> Running in 9d795f17346c

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN !invalid#2 No description

npm WARN !invalid#2 No repository field.

npm WARN !invalid#2 No license field.

added 48 packages from 36 contributors and audited 121 packages in 2.339s

found 0 vulnerabilities

Removing intermediate container 9d795f17346c

---> dc904dd0be0f

Step 4/4 : CMD ["npm","run"]

---> Running in 1b8a3aed0891

Removing intermediate container 1b8a3aed0891

---> 5d9d6e469f59

Successfully built 5d9d6e469f59

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

The npm WARN messages are fine in this case: they only report that package.json has no description, repository, or license field.

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build -t peelmicro/simpleweb .

Sending build context to Docker daemon 4.096kB

Step 1/4 : FROM node:alpine

---> 5d526f8ba00b

Step 2/4 : COPY ./ ./

---> Using cache

---> eae571c3ac77

Step 3/4 : RUN npm install

---> Using cache

---> dc904dd0be0f

Step 4/4 : CMD ["npm","run"]

---> Using cache

---> 5d9d6e469f59

Successfully built 5d9d6e469f59

Successfully tagged peelmicro/simpleweb:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

- Create another new `Dockerfile`

Dockerfile

# Specify a base image

FROM node:alpine

# copy the files

COPY ./ ./

# Install some dependencies

RUN npm install

# Default command

CMD ["npm","start"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build -t peelmicro/simpleweb .

Sending build context to Docker daemon 4.096kB

Step 1/4 : FROM node:alpine

---> 5d526f8ba00b

Step 2/4 : COPY ./ ./

---> 9fd68808fa08

Step 3/4 : RUN npm install

---> Running in 8f4531e81b9d

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN !invalid#2 No description

npm WARN !invalid#2 No repository field.

npm WARN !invalid#2 No license field.

added 48 packages from 36 contributors and audited 121 packages in 2.256s

found 0 vulnerabilities

Removing intermediate container 8f4531e81b9d

---> 20ea798c1e44

Step 4/4 : CMD ["npm","start"]

---> Running in ba96c0069e87

Removing intermediate container ba96c0069e87

---> 851be26af7ec

Successfully built 851be26af7ec

Successfully tagged peelmicro/simpleweb:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

- Run the `Image`

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker run peelmicro/simpleweb

> @ start /

> node index.js

Listening on port 8080

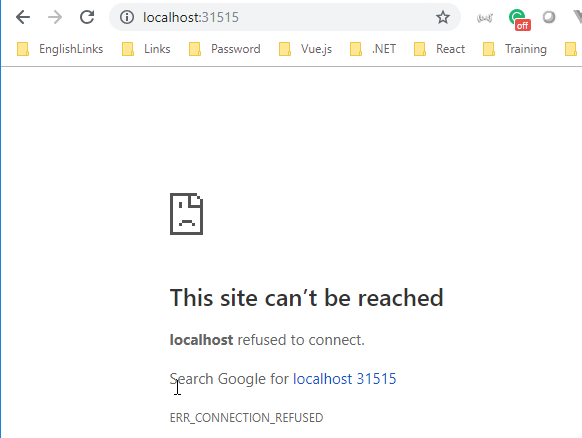

From Google Chrome

http://localhost:8080/

This site can’t be reached localhost refused to connect.

ERR_CONNECTION_REFUSED
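The connection is refused because the server listens on port 8080 inside the container, and no port on the host has been forwarded to it yet. As a rough illustration of what the container is running, here is a minimal sketch of a server that prints the same log line. It uses Node's built-in `http` module instead of the `express` package so that it runs without `npm install`; the names `handleRequest` and `startServer` are hypothetical and are not taken from the course code.

```javascript
const http = require('http');

// Request handler kept separate so it can be exercised without opening a socket.
function handleRequest(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('How are you doing');
}

// Creates the server and starts listening on the given port.
function startServer(port) {
  const server = http.createServer(handleRequest);
  server.listen(port, () => console.log(`Listening on port ${port}`));
  return server;
}

module.exports = { handleRequest, startServer };
```

Calling `startServer(8080)` inside the container makes the process listen on the container's port 8080, which is unreachable from the host browser until a port is published.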

- The `-p` parameter

Command

Docker run with port mapping

docker run -p 8080:8080 [image id]

-p: publish (map) a port

first 8080: route incoming requests on this port of the local host to ...

second 8080: ... this port inside the container
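To make the two sides of the mapping explicit, the sketch below splits a `-p` value into its host and container ports. `parsePortMapping` is a hypothetical helper written for this guide, not part of Docker, and it only handles the simple `hostPort:containerPort` form used here (Docker itself accepts more forms).

```javascript
// Hypothetical helper, not part of Docker: splits a simple "-p" value
// of the form "hostPort:containerPort" into its two sides.
function parsePortMapping(mapping) {
  const parts = mapping.split(':');
  if (parts.length !== 2) {
    throw new Error(`expected "hostPort:containerPort", got "${mapping}"`);
  }
  const [hostPort, containerPort] = parts.map(Number);
  const isValid = (port) => Number.isInteger(port) && port > 0 && port <= 65535;
  if (!isValid(hostPort) || !isValid(containerPort)) {
    throw new Error(`ports must be integers between 1 and 65535, got "${mapping}"`);
  }
  return { hostPort, containerPort };
}

// The left side is the port on the local host, the right side the port in the container.
console.log(parsePortMapping('8080:8080'));
console.log(parsePortMapping('5000:8080'));
```

The two numbers do not have to match: with `5000:8080` the browser uses port 5000 while the application keeps listening on 8080 inside the container.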

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker run -p 8080:8080 peelmicro/simpleweb

C:\Program Files\Docker\Docker\Resources\bin\docker.exe: Error response from daemon: driver failed programming external connectivity on endpoint optimistic_tesla (aebdca7e7ff39ee18e16316cec31601e215b35903a5a007155422fe5e54e79c9): Error starting userland proxy: mkdir /port/tcp:0.0.0.0:8080:tcp:172.17.0.12:8080: input/output error.

See https://stackoverflow.com/a/49694417/1059286 for this error; restarting Docker resolved it here.

After restarting Docker:

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker run -p 8080:8080 peelmicro/simpleweb

> @ start /

> node index.js

Listening on port 8080

From Google Chrome

http://localhost:8080/

How are you doing

- The `system prune` command

Command

docker system prune

Removes all stopped containers, all networks not used by at least one container, all dangling images, and all build cache

C:\WINDOWS\system32>docker system prune

WARNING! This will remove:

- all stopped containers

- all networks not used by at least one container

- all dangling images

- all build cache

Are you sure you want to continue? [y/N] Y

Deleted Containers:

f4b5c1e7846623378ec54a684f26f358ee2df2ee735381cf2cf68b1e3d52b9ee

93f37c435c75f0764ead7bd64a80f821e99137608eb4faa709af881b86a68288

0dcaa0fa4c5d4e7f538cb14e96bffb96a64933389582a439fb51f594d9a4963c

61d50ae7440bd9edbdad3314b7596755f00f125c266b8dac6db5a8d0d506a0a7

688787c6cae12cd5f39e125b2e50e6ab390a5986054121e128ba13a34c5c5a62

91caad0cc3c51d9e54ba2274284fcaf9929a985076bac32287e7f163c206b082

3fabe66516533e0eaaec980fd7d6ee2fff3cce364472b44e02f9fb182adb9e75

8a7fe5df56225a36dfd97f3860ed375fc173114c274eb999e595d0dc3583b371

6822eb029994175a1111f8c245d1375a83d008ce4d91687affc46081d248a6b7

fdc4c936f5b4de01ac70090b5dacf326fcb5b7358893cd62f20d510f0c0f78d6

86bd4b57b368be9484a724e74dbabde76ed6ef3747e72b1185da1148ea504b54

Total reclaimed space: 0B

C:\WINDOWS\system32>docker ps --all

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

539c07bb9ba1 busybox "ping google.com" 24 minutes ago Up 24 minutes brave_montalcini

C:\WINDOWS\system32>docker start -a 3fabe6651653

Error: No such container: 3fabe6651653

- The command to stop all running containers

Command

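It has to be executed from PowerShell, because `ForEach { ... $_ }` is PowerShell syntax. `docker ps -a -q` prints the ID of every container, running or stopped, and each ID is passed to `docker stop`.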

docker ps -a -q | ForEach { docker stop $_ }

PS C:\Users\juan.pablo.perez> docker ps -a -q | ForEach { docker stop $_ }

8d6fafb2b9f0

de4c4b0f9d27

5217140b3127

a0f483bdd133

3cda1c5a4971

841c5671d340

abfba8b6c667

4f2ee675c090

8d31733b2855

2bbc2b41ef7a

83db4887b686

9e62c378abbb

261f3e65f035

3a4c383ab4e1

1867016e9a47

f949774360a8

e22ff84a283d

539c07bb9ba1

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker run -p 5000:8080 peelmicro/simpleweb

> @ start /

> node index.js

Listening on port 8080

From Google Chrome

http://localhost:5000/

How are you doing

- The `WORKDIR` command inside a `Dockerfile`

Dockerfile Command

WORKDIR /usr/app

WORKDIR = set the working directory

/usr/app = any following command will be executed relative to this path in the container

If the folder does not exist, it is created automatically

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker run -it peelmicro/simpleweb sh

/ # ls

Dockerfile etc lib node_modules package.json run sys var

bin home media opt proc sbin tmp

dev index.js mnt package-lock.json root srv usr

/ #

- Create a new `Dockerfile`

Dockerfile

# Specify a base image

FROM node:alpine

# set the working directory

WORKDIR /usr/app

# copy the files

COPY ./ ./

# Install some dependencies

RUN npm install

# Default command

CMD ["npm","start"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build -t peelmicro/simpleweb .

Sending build context to Docker daemon 4.096kB

Step 1/5 : FROM node:alpine

---> 5d526f8ba00b

Step 2/5 : WORKDIR /usr/app

---> Running in d5bb3ae1a8a2

Removing intermediate container d5bb3ae1a8a2

---> 7fcbaff7124f

Step 3/5 : COPY ./ ./

---> fa9a772252a5

Step 4/5 : RUN npm install

---> Running in 1853568177da

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN app No description

npm WARN app No repository field.

npm WARN app No license field.

added 48 packages from 36 contributors and audited 121 packages in 2.619s

found 0 vulnerabilities

Removing intermediate container 1853568177da

---> 87fbefff9181

Step 5/5 : CMD ["npm","start"]

---> Running in 403e1700f7f0

Removing intermediate container 403e1700f7f0

---> 1a9f4a7f50b1

Successfully built 1a9f4a7f50b1

Successfully tagged peelmicro/simpleweb:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker run -it peelmicro/simpleweb sh

/usr/app # ls

Dockerfile index.js node_modules package-lock.json package.json

/usr/app #

- Avoid re-running `npm install` when rebuilding the image if the `package.json` file has not changed

Dockerfile

# Specify a base image

FROM node:alpine

# set the working directory

WORKDIR /usr/app

# Install dependencies: this step is executed again only if package.json changes

COPY ./package.json ./

RUN npm install

# copy the files

COPY ./ ./

# Default command

CMD ["npm","start"]

1st time.

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build -t peelmicro/simpleweb .

Sending build context to Docker daemon 4.096kB

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /usr/app

---> Using cache

---> 7fcbaff7124f

Step 3/6 : COPY ./package.json ./

---> 1ee76df824eb

Step 4/6 : RUN npm install

---> Running in 5392c1826277

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN app No description

npm WARN app No repository field.

npm WARN app No license field.

added 48 packages from 36 contributors and audited 121 packages in 3.147s

found 0 vulnerabilities

Removing intermediate container 5392c1826277

---> 72566a6bc881

Step 5/6 : COPY ./ ./

---> 9d80c78fd6bc

Step 6/6 : CMD ["npm","start"]

---> Running in c4382c677d87

Removing intermediate container c4382c677d87

---> f83b709e6fcb

Successfully built f83b709e6fcb

Successfully tagged peelmicro/simpleweb:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

2nd time (changed index.js)

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/simpleweb

$ docker build -t peelmicro/simpleweb .

Sending build context to Docker daemon 4.096kB

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /usr/app

---> Using cache

---> 7fcbaff7124f

Step 3/6 : COPY ./package.json ./

---> Using cache

---> 1ee76df824eb

Step 4/6 : RUN npm install

---> Using cache

---> 72566a6bc881

Step 5/6 : COPY ./ ./

---> dc6ee2549a36

Step 6/6 : CMD ["npm","start"]

---> Running in 3bd73fdd1932

Removing intermediate container 3bd73fdd1932

---> 54c8ea31a310

Successfully built 54c8ea31a310

Successfully tagged peelmicro/simpleweb:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.
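The two builds above show the effect of the reordering: after changing only `index.js`, every step up to `RUN npm install` reports `Using cache`, and only the last `COPY` and `CMD` are executed again. The sketch below is a simplified model of that behaviour, not Docker's real algorithm. It assumes a step is reused only if none of the build-context files it copies changed and every earlier step was also reused. The `steps` list and the `planBuild` function are hypothetical names introduced for illustration.

```javascript
// Simplified model of Docker's layer cache (an illustration, not Docker's real algorithm).
// Each step lists the build-context files it reads; FROM, WORKDIR, RUN and CMD read none.
const steps = [
  { instruction: 'FROM node:alpine', inputs: [] },
  { instruction: 'WORKDIR /usr/app', inputs: [] },
  { instruction: 'COPY ./package.json ./', inputs: ['package.json'] },
  { instruction: 'RUN npm install', inputs: [] },
  { instruction: 'COPY ./ ./', inputs: ['package.json', 'index.js', 'Dockerfile'] },
  { instruction: 'CMD ["npm","start"]', inputs: [] },
];

// A step is served from cache only if none of its inputs changed
// and every step before it was also served from cache.
function planBuild(buildSteps, changedFiles) {
  let cacheValid = true;
  return buildSteps.map((step) => {
    if (step.inputs.some((file) => changedFiles.includes(file))) {
      cacheValid = false;
    }
    return { instruction: step.instruction, cached: cacheValid };
  });
}

// Simulate the second build, where only index.js was edited.
for (const { instruction, cached } of planBuild(steps, ['index.js'])) {
  console.log(`${cached ? 'Using cache' : 'Rebuilt'}: ${instruction}`);
}
```

In this model, passing `['package.json']` instead marks `COPY ./package.json ./` and everything after it as rebuilt, which is why the dependency install is placed before the copy of the remaining files.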

Use of Docker Compose

`Docker Compose` is used to manage multiple containers and the interactions among them

- Separate CLI that gets installed along with Docker

- Used to start multiple Docker containers at the same time.

- Automates some of the long-winded arguments we were passing to 'docker run'

- Similarities between Docker CLI and Docker-Compose CLI

| Docker | Docker Compose |
|---|---|
| docker run myimage | docker-compose up |
| docker build . followed by docker run myimage | docker-compose up --build |

- Define a new `Dockerfile`

Dockerfile

# Specify a base image

FROM node:alpine

# set the working directory

WORKDIR /app

# Install dependencies: this step is executed again only if package.json changes

COPY ./package.json .

RUN npm install

# copy the files

COPY . .

# Default command

CMD ["npm","start"]

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visists

$ docker build -t peelmicro/visists .

Sending build context to Docker daemon 4.096kB

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /app

---> Running in 3b9e753724b0

Removing intermediate container 3b9e753724b0

---> e7ae20d6064b

Step 3/6 : COPY ./package.json .

---> 08694a86a661

Step 4/6 : RUN npm install

---> Running in 3d6bb048c706

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN app No description

npm WARN app No repository field.

npm WARN app No license field.

added 52 packages from 40 contributors and audited 125 packages in 4.505s

found 0 vulnerabilities

Removing intermediate container 3d6bb048c706

---> 04e4c55002a6

Step 5/6 : COPY . .

---> ec0e30f04b69

Step 6/6 : CMD ["npm","start"]

---> Running in f4b1b4fc0718

Removing intermediate container f4b1b4fc0718

---> ebe155eb36bf

Successfully built ebe155eb36bf

Successfully tagged peelmicro/visists:latest

SECURITY WARNING: You are building a Docker image from Windows against a non-Windows Docker host. All files and directories added to build context will have '-rwxr-xr-x' permissions. It is recommended to double check and reset permissions for sensitive files and directories.

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visists

$ docker run peelmicro/visists

> @ start /app

> node index.js

Listening on port 8081

events.js:167

throw er; // Unhandled 'error' event

^

Error: Redis connection to 127.0.0.1:6379 failed - connect ECONNREFUSED 127.0.0.1:6379

at TCPConnectWrap.afterConnect [as oncomplete] (net.js:1117:14)

Emitted 'error' event at:

at RedisClient.on_error (/app/node_modules/redis/index.js:406:14)

at Socket.<anonymous> (/app/node_modules/redis/index.js:279:14)

at Socket.emit (events.js:182:13)

at emitErrorNT (internal/streams/destroy.js:82:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:50:3)

at process.internalTickCallback (internal/process/next_tick.js:72:19)

npm ERR! code ELIFECYCLE

npm ERR! errno 1

npm ERR! @ start: `node index.js`

npm ERR! Exit status 1

npm ERR!

npm ERR! Failed at the @ start script.

npm ERR! This is probably not a problem with npm. There is likely additional logging output above.

npm ERR! A complete log of this run can be found in:

npm ERR! /root/.npm/_logs/2018-11-02T17_30_20_511Z-debug.log

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visists

$ docker run redis

1:C 02 Nov 2018 17:31:39.373 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 02 Nov 2018 17:31:39.373 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

1:C 02 Nov 2018 17:31:39.373 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

1:M 02 Nov 2018 17:31:39.376 * Running mode=standalone, port=6379.

1:M 02 Nov 2018 17:31:39.376 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

1:M 02 Nov 2018 17:31:39.376 # Server initialized

1:M 02 Nov 2018 17:31:39.376 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 02 Nov 2018 17:31:39.376 * Ready to accept connections

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visists

$ docker run peelmicro/visists

> @ start /app

> node index.js

Listening on port 8081

events.js:167

throw er; // Unhandled 'error' event

^

Error: Redis connection to 127.0.0.1:6379 failed - connect ECONNREFUSED 127.0.0.1:6379

at TCPConnectWrap.afterConnect [as oncomplete] (net.js:1117:14)

Emitted 'error' event at:

at RedisClient.on_error (/app/node_modules/redis/index.js:406:14)

at Socket.<anonymous> (/app/node_modules/redis/index.js:279:14)

at Socket.emit (events.js:182:13)

at emitErrorNT (internal/streams/destroy.js:82:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:50:3)

at process.internalTickCallback (internal/process/next_tick.js:72:19)

npm ERR! code ELIFECYCLE

npm ERR! errno 1

npm ERR! @ start: `node index.js`

npm ERR! Exit status 1

npm ERR!

npm ERR! Failed at the @ start script.

npm ERR! This is probably not a problem with npm. There is likely additional logging output above.

npm ERR! A complete log of this run can be found in:

npm ERR! /root/.npm/_logs/2018-11-02T17_32_55_268Z-debug.log
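The application still fails even though a Redis container is now running. Inside the Node container, 127.0.0.1 refers to that container itself, not to the separate Redis container, so nothing is listening on 127.0.0.1:6379. When both containers are started by Docker Compose they share a network and can reach each other by service name. The sketch below shows one way the connection options might be built, assuming the Redis service is called `redis-server` as in the Compose logs further down. `redisConnectionOptions`, `REDIS_HOST` and `REDIS_PORT` are hypothetical names, not part of the course code.

```javascript
// Hypothetical helper: builds the connection options for the Redis client.
// 'redis-server' is the Compose service name that appears in this guide's logs;
// REDIS_HOST and REDIS_PORT are assumed variable names used only for illustration.
function redisConnectionOptions(env = process.env) {
  return {
    host: env.REDIS_HOST || 'redis-server', // a service name instead of 127.0.0.1
    port: Number(env.REDIS_PORT) || 6379,   // default Redis port
  };
}

console.log(redisConnectionOptions({}));
```

An options object of this shape would then be passed to the Redis client when it is created, in place of the default host that produced the ECONNREFUSED error.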

- Create a `docker-compose.yml` file

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visists

$ dir

Dockerfile docker-compose.yml index.js package.json

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visists

$ docker-compose up

Creating network "visists_default" with the default driver

Building node-app

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /app

---> Using cache

---> e7ae20d6064b

Step 3/6 : COPY ./package.json .

---> 91421b036188

Step 4/6 : RUN npm install

---> Running in 57980dd9ae2c

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN app No description

npm WARN app No repository field.

npm WARN app No license field.

added 52 packages from 40 contributors and audited 125 packages in 4.673s

found 0 vulnerabilities

Removing intermediate container 57980dd9ae2c

---> 232517ae145a

Step 5/6 : COPY . .

---> 42c38cdbaf45

Step 6/6 : CMD ["npm","start"]

---> Running in ad82fd6289aa

Removing intermediate container ad82fd6289aa

---> 2582fc91df0d

Successfully built 2582fc91df0d

Successfully tagged visists_node-app:latest

WARNING: Image for service node-app was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.

Creating visists_redis-server_1 ... done

Creating visists_node-app_1 ... done

Attaching to visists_node-app_1, visists_redis-server_1

redis-server_1 | 1:C 02 Nov 2018 18:13:26.332 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-server_1 | 1:C 02 Nov 2018 18:13:26.332 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

redis-server_1 | 1:C 02 Nov 2018 18:13:26.332 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-server_1 | 1:M 02 Nov 2018 18:13:26.335 * Running mode=standalone, port=6379.

redis-server_1 | 1:M 02 Nov 2018 18:13:26.336 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

redis-server_1 | 1:M 02 Nov 2018 18:13:26.336 # Server initialized

redis-server_1 | 1:M 02 Nov 2018 18:13:26.336 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot.

Redis must be restarted after THP is disabled.

redis-server_1 | 1:M 02 Nov 2018 18:13:26.336 * Ready to accept connections

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker-compose up

Creating network "visits_default" with the default driver

Building node-app

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /app

---> Using cache

---> e7ae20d6064b

Step 3/6 : COPY ./package.json .

---> Using cache

---> 91421b036188

Step 4/6 : RUN npm install

---> Using cache

---> 232517ae145a

Step 5/6 : COPY . .

---> 30eaaa1a1270

Step 6/6 : CMD ["npm","start"]

---> Running in c308972d4cd7

Removing intermediate container c308972d4cd7

---> 93df6e4f0893

Successfully built 93df6e4f0893

Successfully tagged visits_node-app:latest

WARNING: Image for service node-app was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.

Creating visits_redis-server_1 ... done

Creating visits_node-app_1 ... done

Attaching to visits_redis-server_1, visits_node-app_1

redis-server_1 | 1:C 02 Nov 2018 18:20:50.705 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-server_1 | 1:C 02 Nov 2018 18:20:50.705 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

redis-server_1 | 1:C 02 Nov 2018 18:20:50.705 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-server_1 | 1:M 02 Nov 2018 18:20:50.706 * Running mode=standalone, port=6379.

redis-server_1 | 1:M 02 Nov 2018 18:20:50.706 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

redis-server_1 | 1:M 02 Nov 2018 18:20:50.706 # Server initialized

redis-server_1 | 1:M 02 Nov 2018 18:20:50.706 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot.

Redis must be restarted after THP is disabled.

redis-server_1 | 1:M 02 Nov 2018 18:20:50.706 * Ready to accept connections

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 | /app/index.js:15

node-app_1 | client.set('visits', parsetInt(visits) + 1);

node-app_1 | ^

node-app_1 |

node-app_1 | ReferenceError: parsetInt is not defined

node-app_1 | at Command.client.get [as callback] (/app/index.js:15:12)

node-app_1 | at normal_reply (/app/node_modules/redis/index.js:726:21)

node-app_1 | at RedisClient.return_reply (/app/node_modules/redis/index.js:824:9)

node-app_1 | at JavascriptRedisParser.returnReply (/app/node_modules/redis/index.js:192:18)

node-app_1 | at JavascriptRedisParser.execute (/app/node_modules/redis-parser/lib/parser.js:574:12)

node-app_1 | at Socket.<anonymous> (/app/node_modules/redis/index.js:274:27)

node-app_1 | at Socket.emit (events.js:182:13)

node-app_1 | at addChunk (_stream_readable.js:283:12)

node-app_1 | at readableAddChunk (_stream_readable.js:264:11)

node-app_1 | at Socket.Readable.push (_stream_readable.js:219:10)

node-app_1 | npm ERR! code ELIFECYCLE

node-app_1 | npm ERR! errno 1

node-app_1 | npm ERR! @ start: `node index.js`

node-app_1 | npm ERR! Exit status 1

node-app_1 | npm ERR!

node-app_1 | npm ERR! Failed at the @ start script.

node-app_1 | npm ERR! This is probably not a problem with npm. There is likely additional logging output above.

node-app_1 |

node-app_1 | npm ERR! A complete log of this run can be found in:

node-app_1 | npm ERR! /root/.npm/_logs/2018-11-02T18_21_01_950Z-debug.log

visits_node-app_1 exited with code 1
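The crash comes from a typo in `index.js`: `parsetInt` should be `parseInt`. Because the source file changed, the image has to be rebuilt with `docker-compose up --build`. Below is a minimal sketch of the corrected counter logic. To run without a Redis server it uses a hypothetical in-memory stand-in (`createFakeClient`) that only mimics the callback-style `get` and `set` calls; `recordVisit` and the fallback to 0 on the first visit are also assumptions made for this sketch.

```javascript
// Hypothetical in-memory stand-in for the Redis client, exposing only the
// callback-style get/set calls used here, so the sketch runs without a Redis server.
function createFakeClient() {
  const store = new Map();
  return {
    get(key, callback) {
      callback(null, store.has(key) ? store.get(key) : null);
    },
    set(key, value) {
      store.set(key, String(value)); // Redis stores values as strings
    },
  };
}

// Reads the counter, reports it, then stores the incremented value.
function recordVisit(client, respond) {
  client.get('visits', (err, visits) => {
    if (err) {
      respond(err);
      return;
    }
    const current = parseInt(visits, 10) || 0; // null on the first visit becomes 0
    respond(null, `Number of visits is ${current}`);
    client.set('visits', current + 1); // parseInt above, not parsetInt
  });
}

const client = createFakeClient();
recordVisit(client, (err, message) => console.log(message));
recordVisit(client, (err, message) => console.log(message));
```

Each call reports the stored count and then increments it, which is the behaviour behind the "Number of visits is ..." page shown after the rebuild.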

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker-compose up --build

Building node-app

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /app

---> Using cache

---> e7ae20d6064b

Step 3/6 : COPY ./package.json .

---> Using cache

---> 91421b036188

Step 4/6 : RUN npm install

---> Using cache

---> 232517ae145a

Step 5/6 : COPY . .

---> c549b2d6e194

Step 6/6 : CMD ["npm","start"]

---> Running in 3fe1bcd7df39

Removing intermediate container 3fe1bcd7df39

---> cb86be5b3ceb

Successfully built cb86be5b3ceb

Successfully tagged visits_node-app:latest

Recreating visits_node-app_1 ... done

Starting visits_redis-server_1 ... done

Attaching to visits_redis-server_1, visits_node-app_1

redis-server_1 | 1:C 02 Nov 2018 18:23:29.699 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-server_1 | 1:C 02 Nov 2018 18:23:29.699 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

redis-server_1 | 1:C 02 Nov 2018 18:23:29.699 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-server_1 | 1:M 02 Nov 2018 18:23:29.700 * Running mode=standalone, port=6379.

redis-server_1 | 1:M 02 Nov 2018 18:23:29.700 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

redis-server_1 | 1:M 02 Nov 2018 18:23:29.700 # Server initialized

redis-server_1 | 1:M 02 Nov 2018 18:23:29.700 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot.

Redis must be restarted after THP is disabled.

redis-server_1 | 1:M 02 Nov 2018 18:23:29.700 * DB loaded from disk: 0.000 seconds

redis-server_1 | 1:M 02 Nov 2018 18:23:29.700 * Ready to accept connections

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

Open in Google Chrome: http://localhost:4001/

Number of visits is 6

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f4706298bb04 visits_node-app "npm start" 3 minutes ago Up 3 minutes 0.0.0.0:4001->8081/tcp visits_node-app_1

61d71c423eaf redis "docker-entrypoint.s…" 6 minutes ago Up 3 minutes 6379/tcp visits_redis-server_1

- The `-d` Docker Compose option

docker-compose up -d

-d = Launch the containers in the background (detached mode)

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker-compose up -d

Creating network "visits_default" with the default driver

Creating visits_node-app_1 ... done

Creating visits_redis-server_1 ... done

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b30f750c3cf2 redis "docker-entrypoint.s…" 27 seconds ago Up 25 seconds 6379/tcp visits_redis-server_1

33a55c425cbb visits_node-app "npm start" 27 seconds ago Up 25 seconds 0.0.0.0:4001->8081/tcp visits_node-app_

- The `down` Docker Compose command

docker-compose down

down = Stop and remove the containers and the default network

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker-compose down

Stopping visits_node-app_1 ... done

Stopping visits_redis-server_1 ... done

Removing visits_node-app_1 ... done

Removing visits_redis-server_1 ... done

Removing network visits_default

- Make the App crash

- Change the `index.js` file

const express = require('express');
const redis = require('redis');
const process = require('process');

const app = express();

// host: the service name from docker-compose.yml; Docker Compose resolves it for us
const client = redis.createClient({
  host: 'redis-server',
  port: 6379
});

client.set('visits', 0);

app.get('/', (req, res) => {
  // This line is going to make the app crash
  process.exit(0);

  client.get('visits', (err, visits) => {
    res.send('Number of visits is ' + visits);
    client.set('visits', parseInt(visits) + 1);
  });
});

app.listen(8081, () => {
  console.log('Listening on port 8081');
});
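The `process.exit(0)` call above ends the Node process with exit status 0, which signals a clean stop rather than an error. The following is a minimal sketch, not part of the course project, that shows how the argument passed to `process.exit` becomes the exit code seen by whoever started the process. The helper name `exitCodeOf` and the `process.exit(1)` case are assumptions added for illustration.

```javascript
const { spawnSync } = require('child_process');

// Hypothetical helper: run a one-line Node script in a child process
// and return the exit code reported to the parent.
function exitCodeOf(script) {
  const result = spawnSync(process.execPath, ['-e', script]);
  return result.status;
}

// Same call as in index.js: a deliberate, "successful" exit.
const cleanExit = exitCodeOf('process.exit(0)');

// Illustrative failure case (not in the course code): a non-zero status.
const failedExit = exitCodeOf('process.exit(1)');

console.log('process.exit(0) ->', cleanExit);
console.log('process.exit(1) ->', failedExit);
```

Any non-zero status is conventionally read as a failure, which matters when a supervisor has to decide whether a stopped process should be started again.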

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker-compose up --build

Building node-app

Step 1/6 : FROM node:alpine

---> 5d526f8ba00b

Step 2/6 : WORKDIR /app

---> Using cache

---> e7ae20d6064b

Step 3/6 : COPY ./package.json .

---> Using cache

---> 91421b036188

Step 4/6 : RUN npm install

---> Using cache

---> 232517ae145a

Step 5/6 : COPY . .

---> b21b01bd1e8a

Step 6/6 : CMD ["npm","start"]

---> Running in 1daf9c67335f

Removing intermediate container 1daf9c67335f

---> fc315004ce35

Successfully built fc315004ce35

Successfully tagged visits_node-app:latest

visits_redis-server_1 is up-to-date

Recreating visits_node-app_1 ... done

Attaching to visits_redis-server_1, visits_node-app_1

redis-server_1 | 1:C 02 Nov 2018 18:31:52.764 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-server_1 | 1:C 02 Nov 2018 18:31:52.764 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

redis-server_1 | 1:C 02 Nov 2018 18:31:52.764 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-server_1 | 1:M 02 Nov 2018 18:31:52.765 * Running mode=standalone, port=6379.

redis-server_1 | 1:M 02 Nov 2018 18:31:52.765 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.

redis-server_1 | 1:M 02 Nov 2018 18:31:52.765 # Server initialized

redis-server_1 | 1:M 02 Nov 2018 18:31:52.766 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot.

Redis must be restarted after THP is disabled.

redis-server_1 | 1:M 02 Nov 2018 18:31:52.766 * Ready to accept connections

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

- Browse to http://localhost:4001/

This page isn’t working. localhost didn’t send any data.

ERR_EMPTY_RESPONSE

node-app_1 |

node-app_1 | Listening on port 8081

visits_node-app_1 exited with code 0

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b30f750c3cf2 redis "docker-entrypoint.s…" 7 minutes ago Up 7 minutes 6379/tcp visits_redis-server_1

- The `restart` Docker Compose entry

"no" - Never attempt to restart this container if it stops or crashes (quote it in YAML, because a bare no is parsed as a boolean)

always - If this container stops for any reason, always attempt to restart it

on-failure - Only restart if the container stops with an error code

unless-stopped - Always restart unless we (the developers) forcibly stop it
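The four policies above can be read as a small decision function. The sketch below is a simplified model written for this guide, not Docker's actual implementation: the function name `shouldRestart` and its parameters are assumptions, and it ignores Docker daemon restarts and retry limits.

```javascript
// Simplified model of the restart policies (illustration only).
// Assumption: daemon restarts and maximum retry counts are ignored.
function shouldRestart(policy, exitCode, stoppedManually = false) {
  // A manual stop is not undone right away by any policy.
  if (stoppedManually) return false;

  switch (policy) {
    case 'no':
      return false;            // never restart
    case 'always':
      return true;             // restart whatever the exit code was
    case 'unless-stopped':
      return true;             // like always, as long as nobody stopped it
    case 'on-failure':
      return exitCode !== 0;   // restart only after an error code
    default:
      throw new Error(`Unknown restart policy: ${policy}`);
  }
}

// The sample app exits with code 0, so compare the policies for that case.
for (const policy of ['no', 'always', 'on-failure', 'unless-stopped']) {
  console.log(policy, '->', shouldRestart(policy, 0));
}
```

Under this model, an app that calls `process.exit(0)` is restarted by `always` and `unless-stopped` but not by `on-failure`, because exit code 0 does not count as an error.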

- Modify the `docker-compose.yml` file

version: '3'
services:
  redis-server:
    image: 'redis'
  node-app:
    restart: always
    build: .
    ports:
      - "4001:8081"
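The `ports` entry uses the short `HOST:CONTAINER` form, which is why the browser goes to port 4001 while the app listens on 8081 inside the container. As a rough illustration, the sketch below splits such a string into its two parts. The function `parsePortMapping` is hypothetical and only handles this two-number short form, not the other variants Docker Compose accepts.

```javascript
// Hypothetical helper: split a short-form "HOST:CONTAINER" port mapping.
// Assumption: only the plain two-number form is supported here.
function parsePortMapping(mapping) {
  const [host, container] = mapping.split(':').map(Number);
  if (!Number.isInteger(host) || !Number.isInteger(container)) {
    throw new Error(`Unsupported port mapping: ${mapping}`);
  }
  return { hostPort: host, containerPort: container };
}

// The mapping used for the node-app service.
const { hostPort, containerPort } = parsePortMapping('4001:8081');
console.log(`http://localhost:${hostPort}/ -> container port ${containerPort}`);
```

The left-hand number is the port opened on the host machine and the right-hand number is the port inside the container.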

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker-compose up

Recreating visits_node-app_1 ...

Recreating visits_node-app_1 ... done

Attaching to visits_redis-server_1, visits_node-app_1

redis-server_1 | 1:C 02 Nov 2018 18:31:52.764 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-server_1 | 1:C 02 Nov 2018 18:31:52.764 # Redis version=5.0.0, bits=64, commit=00000000, modified=0, pid=1, just started

- Browse to http://localhost:4001/

This page isn’t working. localhost didn’t send any data.

ERR_EMPTY_RESPONSE

visits_node-app_1 exited with code 0

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

visits_node-app_1 exited with code 0

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

node-app_1 |

node-app_1 | > @ start /app

node-app_1 | > node index.js

node-app_1 |

node-app_1 | Listening on port 8081

Juan.Pablo.Perez@RIMDUB-0232 MINGW64 ~/OneDrive/Training/Docker/DockerAndKubernetes.TheCompleteGuide/visits

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8c69374d1c39 visits_node-app "npm start" About a minute ago Up 39 seconds 0.0.0.0:4001->8081/tcp visits_node-app_1

b30f750c3cf2 redis "docker-entrypoint.s…" 16 minutes ago Up 16 minutes 6379/tcp visits_redis-server_1

redis-server_1 | 1:C 02 Nov 2018 18:31:52.764 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-server_1 | 1:M 02 Nov 2018 18:31:52.765 * Running mode=standalone, port=6379.

redis-server_1 | 1:M 02 Nov 2018 18:31:52.765 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.