Learn DevOps Helm/Helmfile Kubernetes deployment

Github Repositories

The Learn DevOps Helm/Helmfile Kubernetes deployment Udemy course help learn DevOps Helm/Helmfile Kubernetes deployment with practical HELM CHART examples.

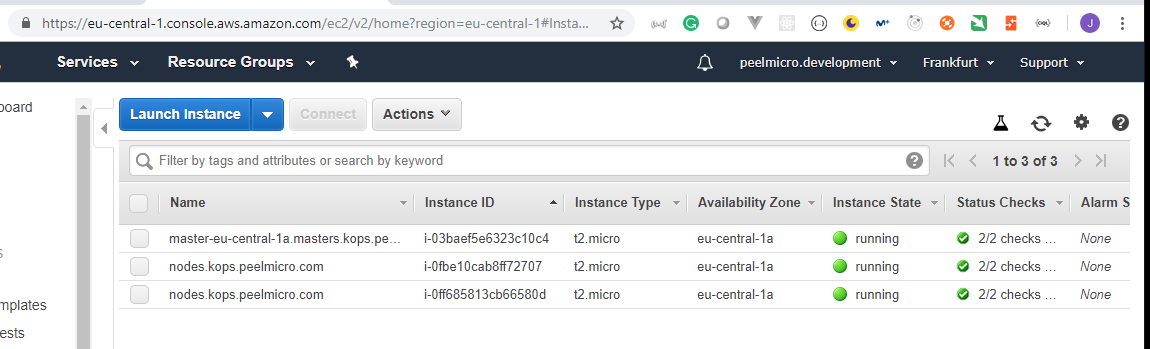

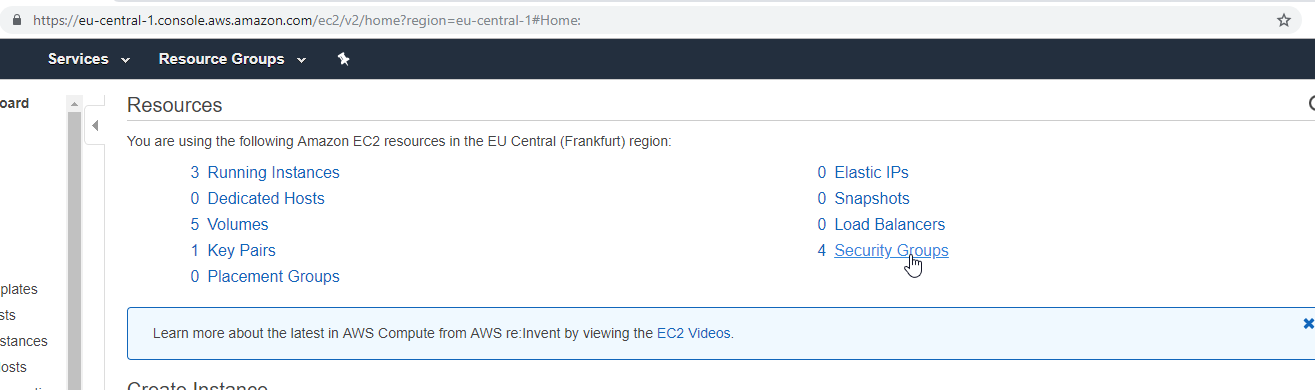

Table of contents

- What I've learned

- Section: 1. Introduction

- 1. Welcome to course

- 2. Materials: Delete/destroy all the AWS resources every time you do not use them

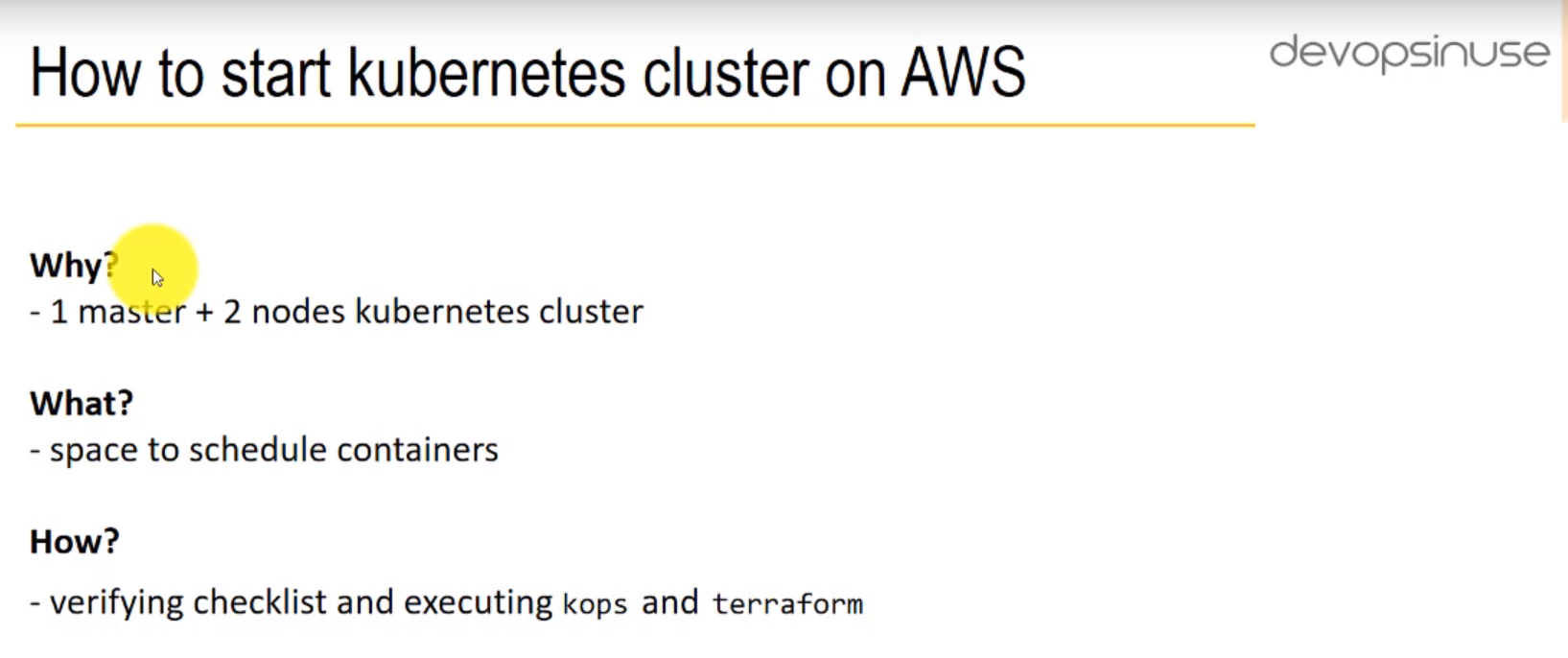

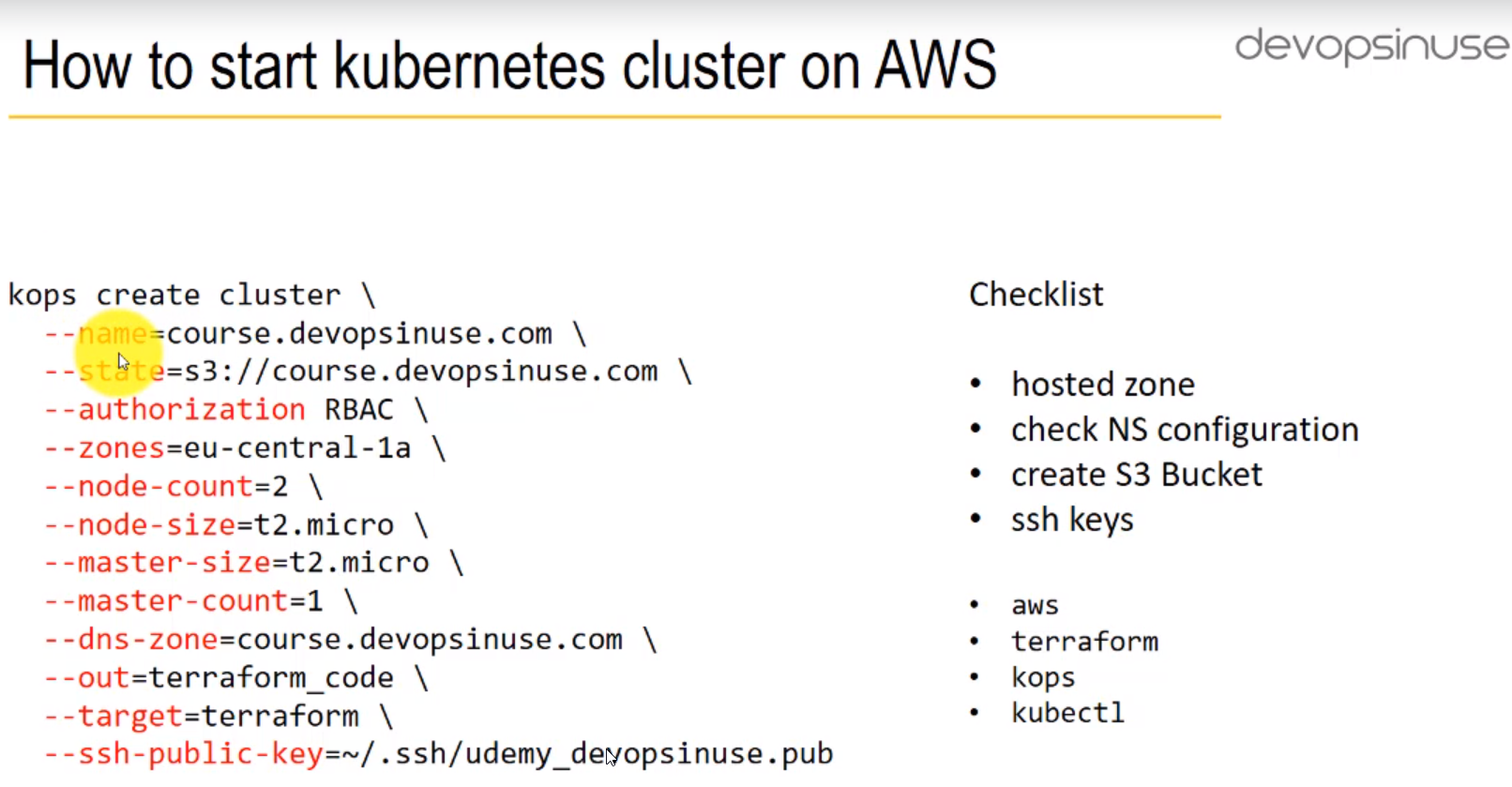

- 3. How to start kubernetes cluster on AWS

- 4. How to create Hosted Zone on AWS

- 5. How to setup communication kops to AWS via aws

- 6. Materials: How to install KOPS binary

- 7. How to install kops

- 8. How to create S3 bucket in AWS

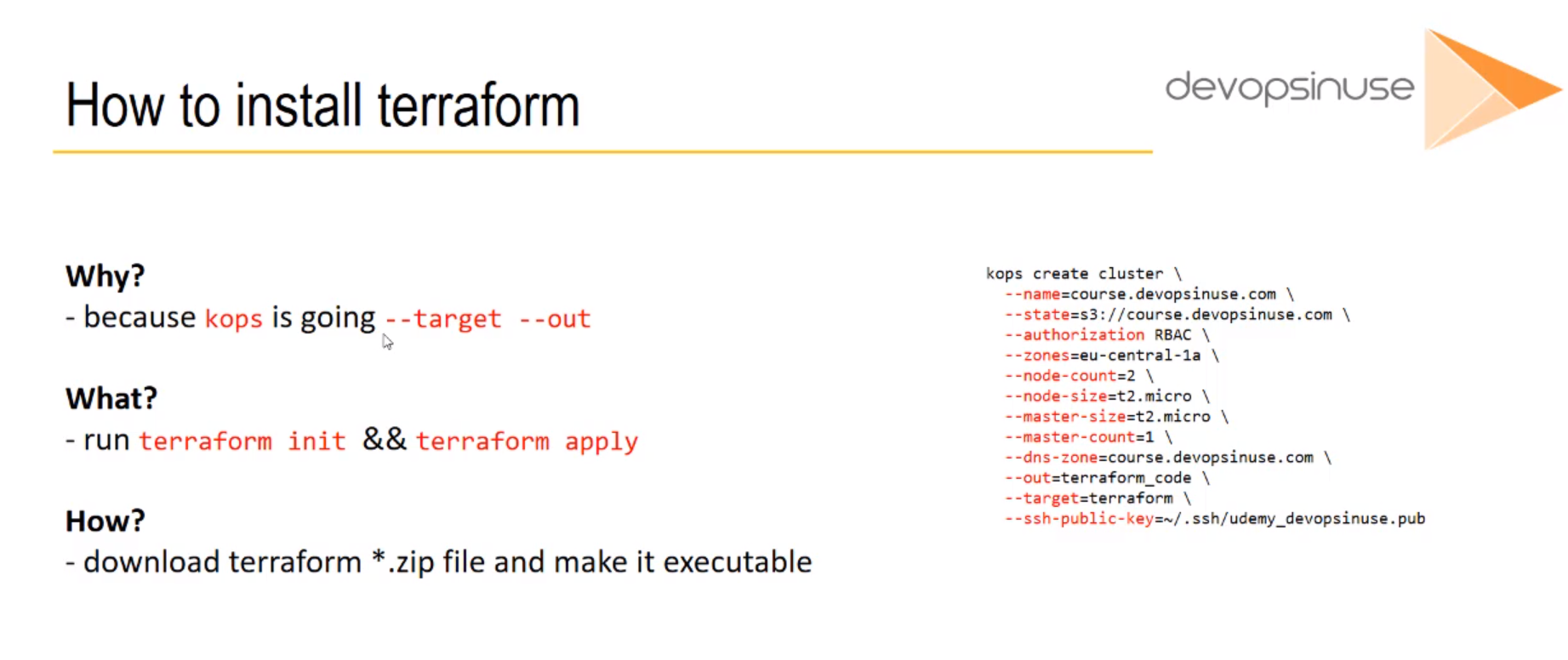

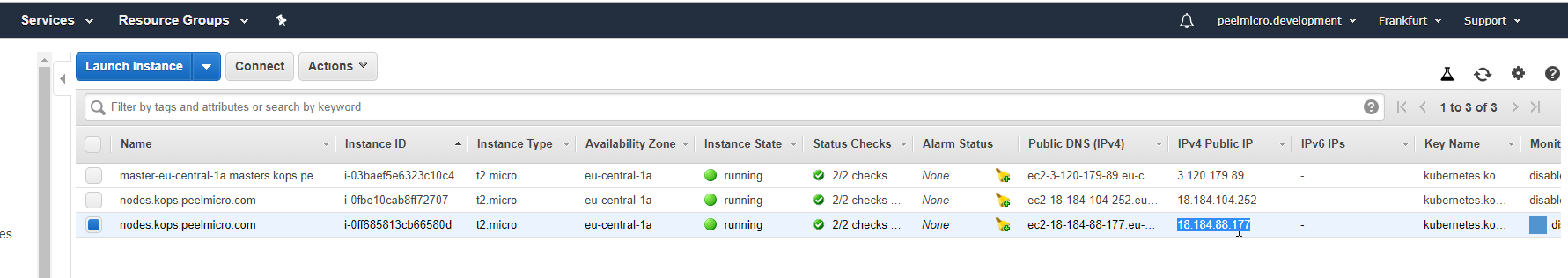

- 9. Materials: How to install TERRAFORM binary

- 10. How to install Terraform binary

- 11. Materials: How to install KUBECTL binary

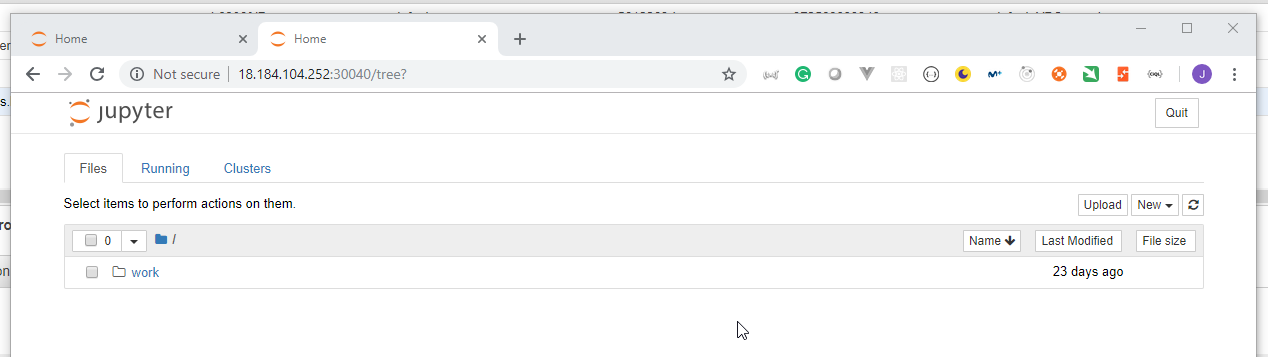

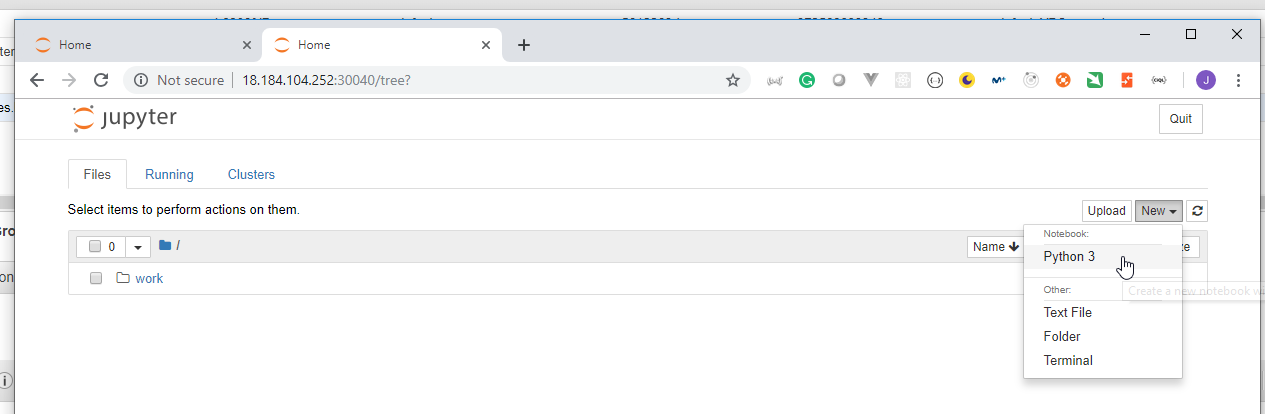

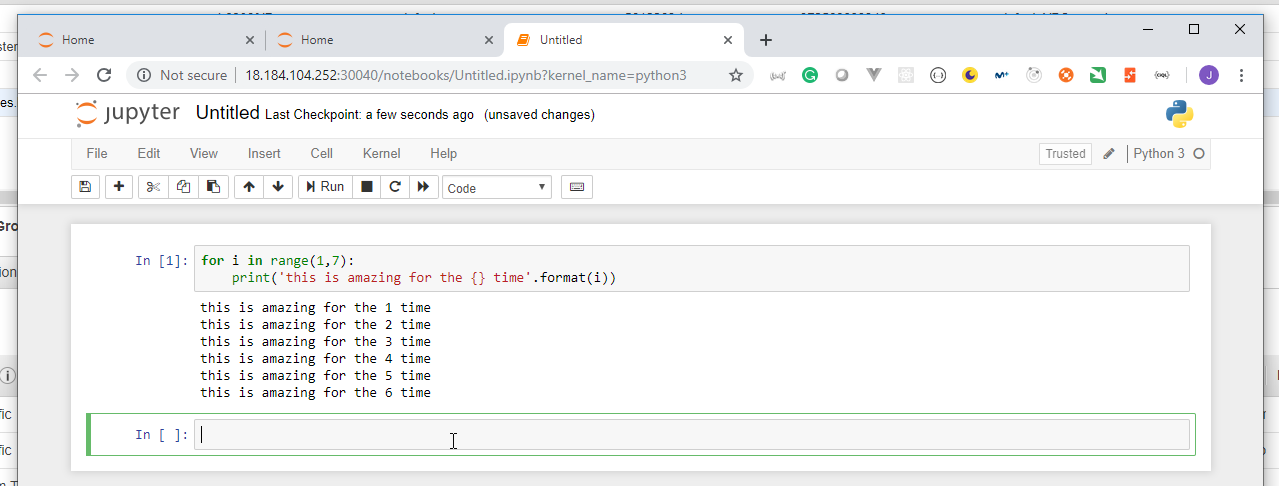

- 12. How to install Kubectl binary

- 13. Materials: How to start Kubernetes cluster

- 14. How to lunch kubernetes cluster on AWS by using kops and terraform

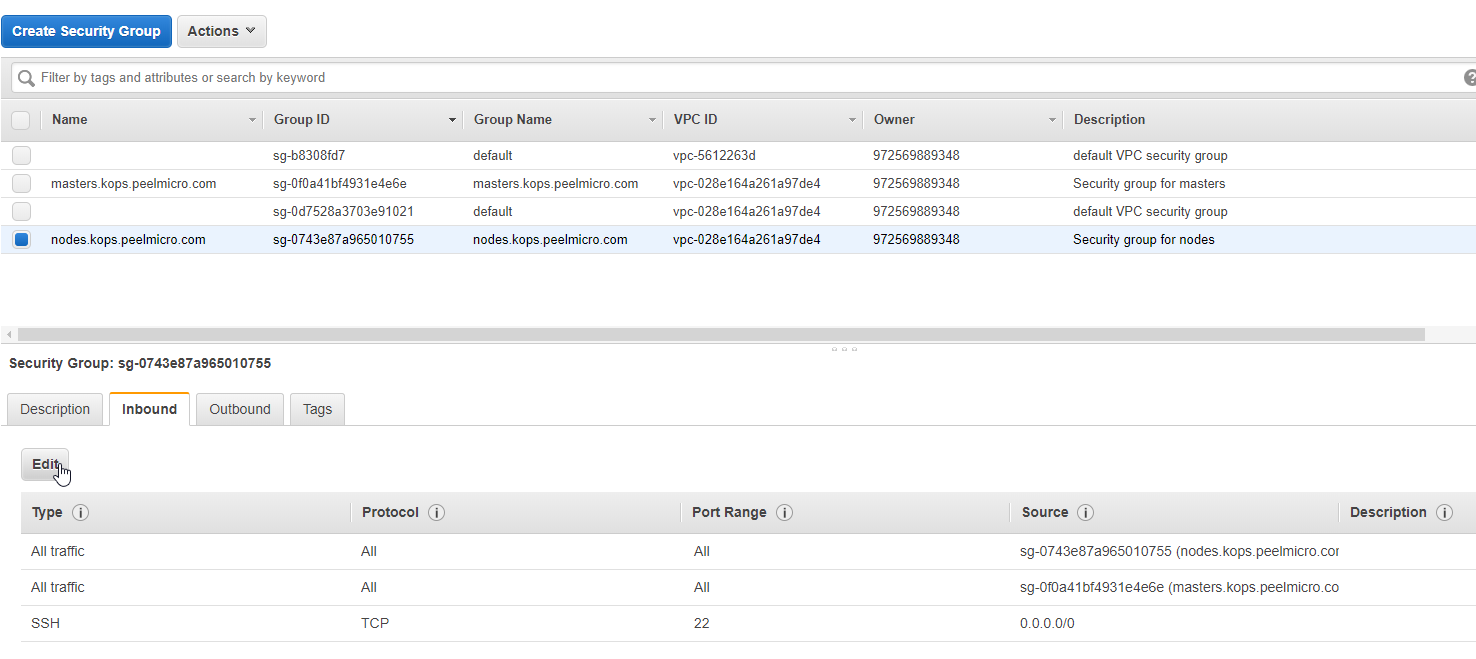

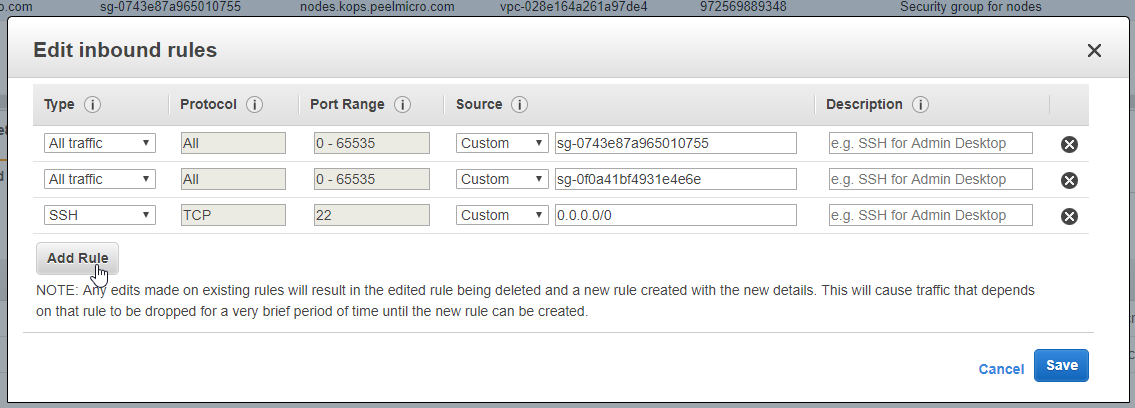

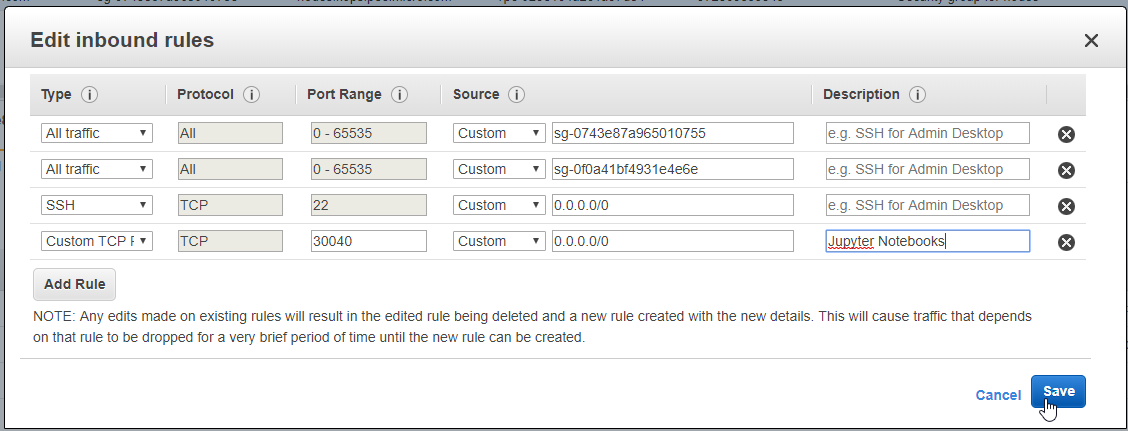

- Section: 2. Jupyter Notebooks

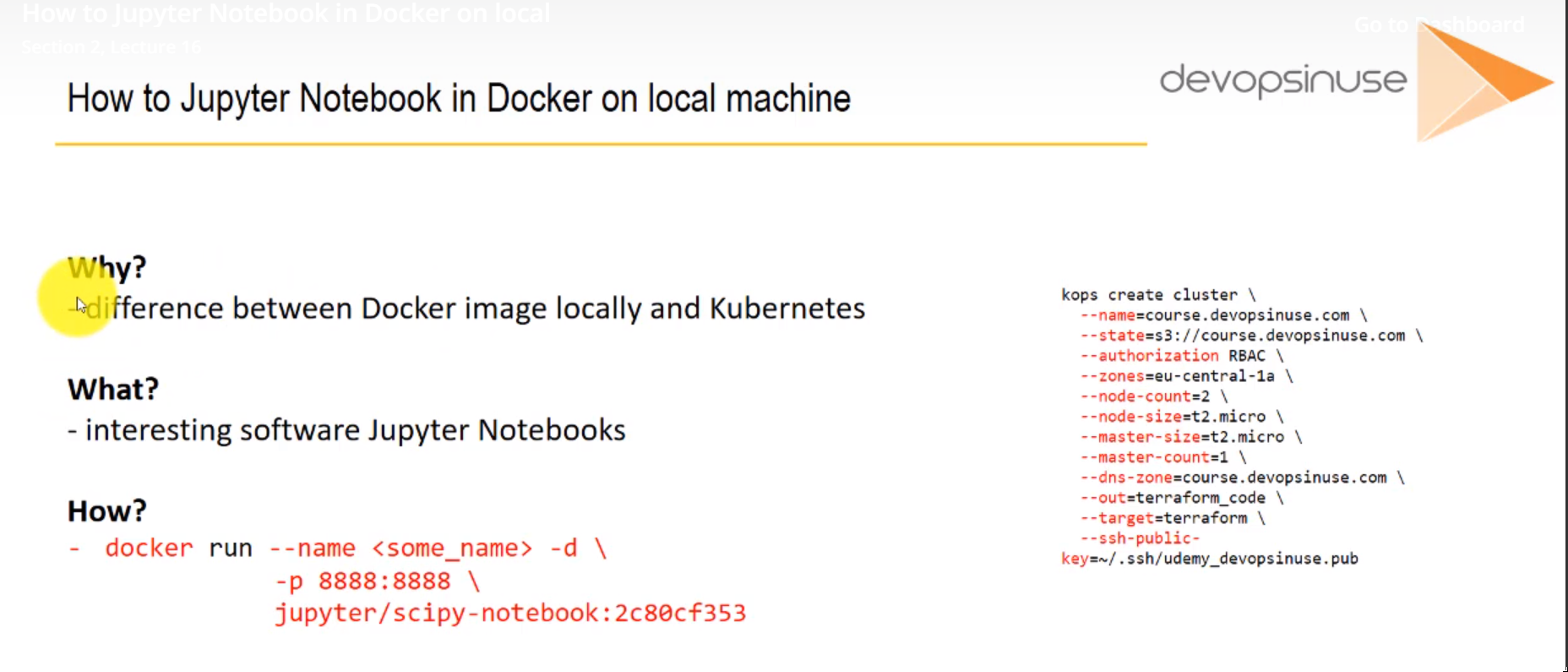

- 15. Materials: How to run Jupyter Notebooks locally as Docker image

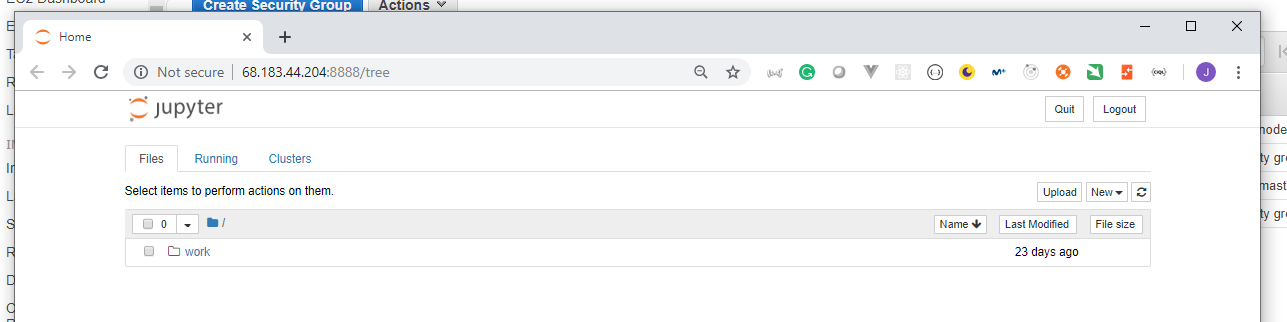

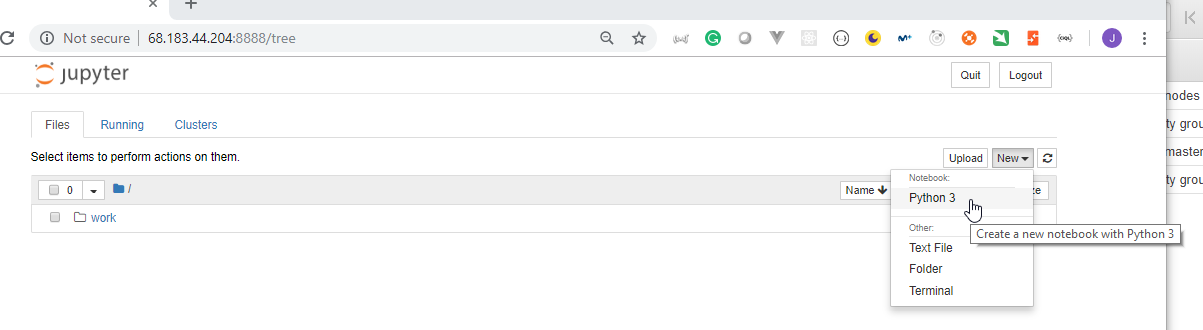

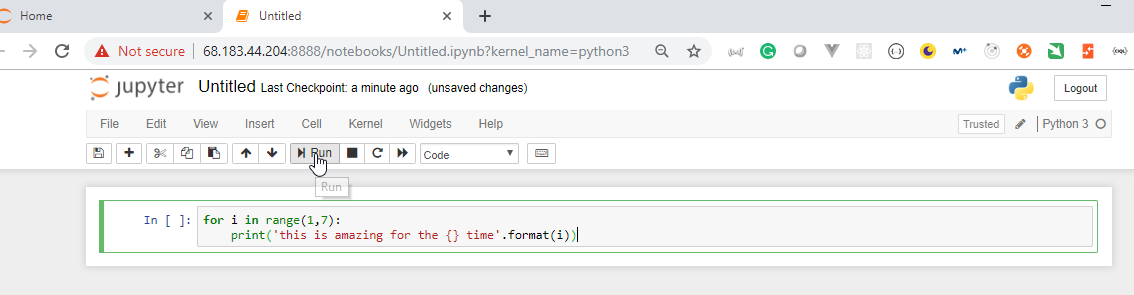

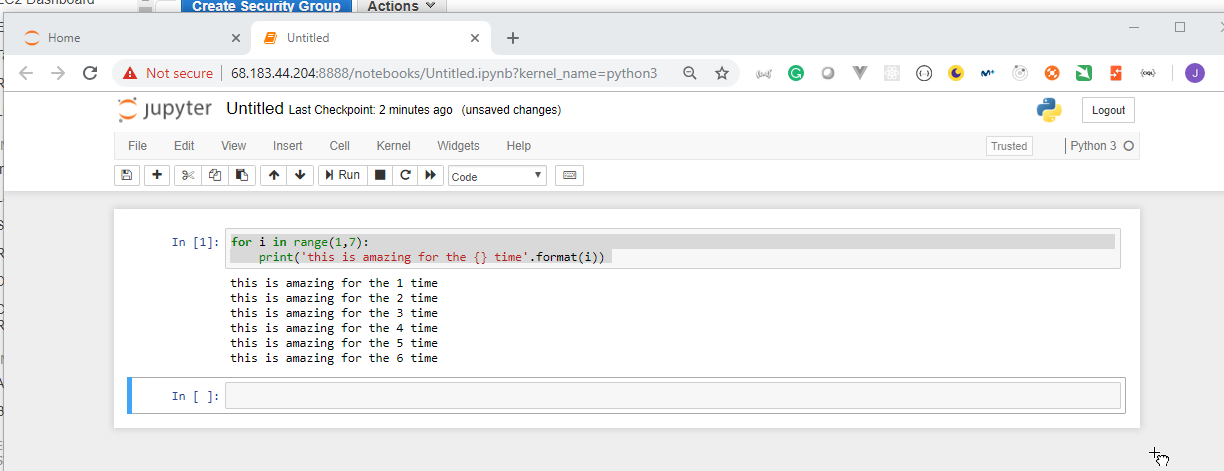

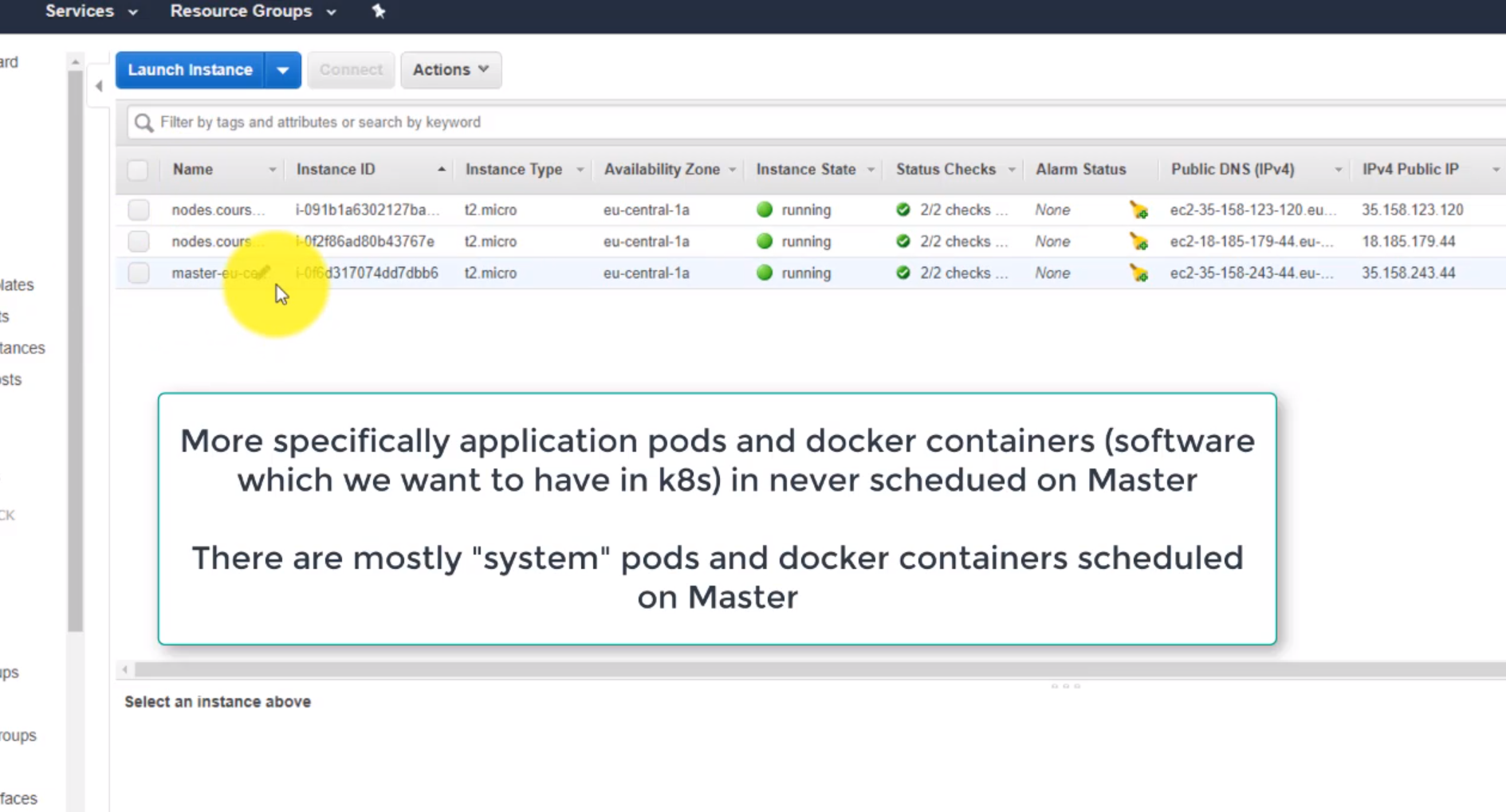

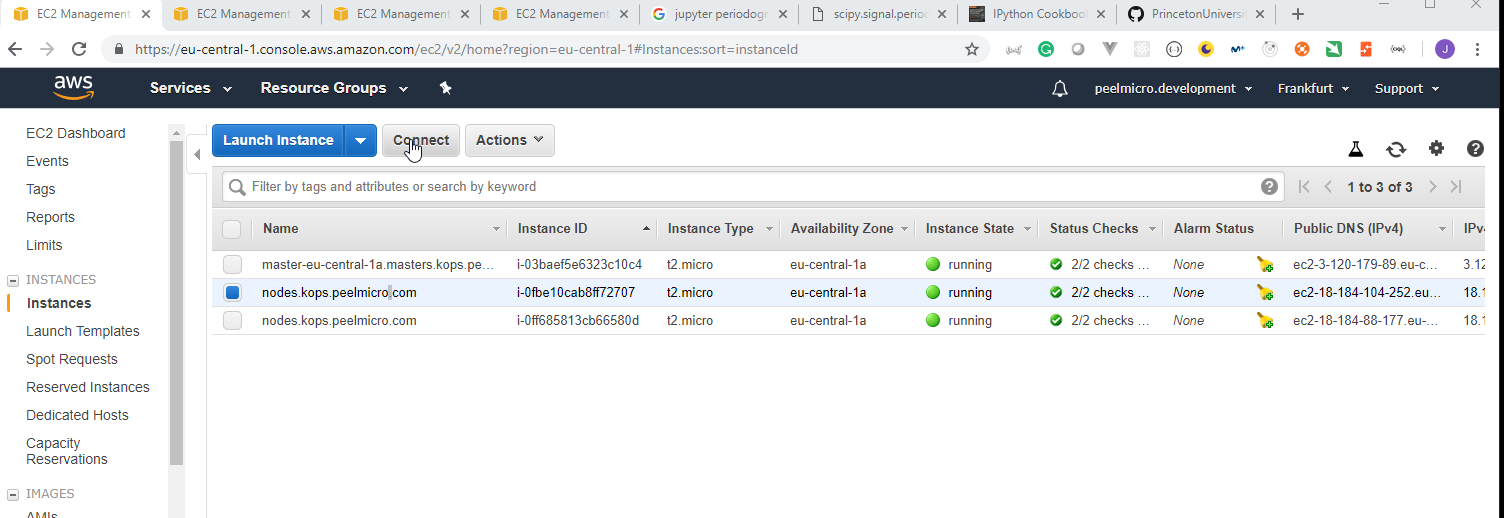

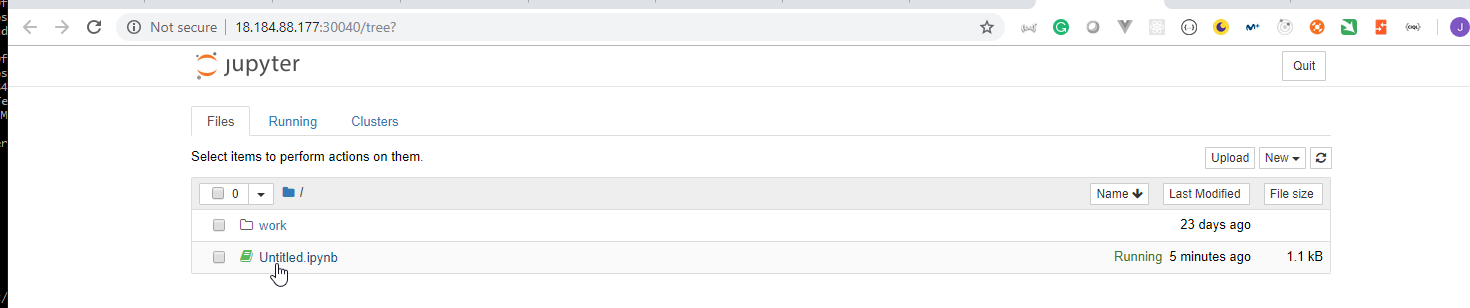

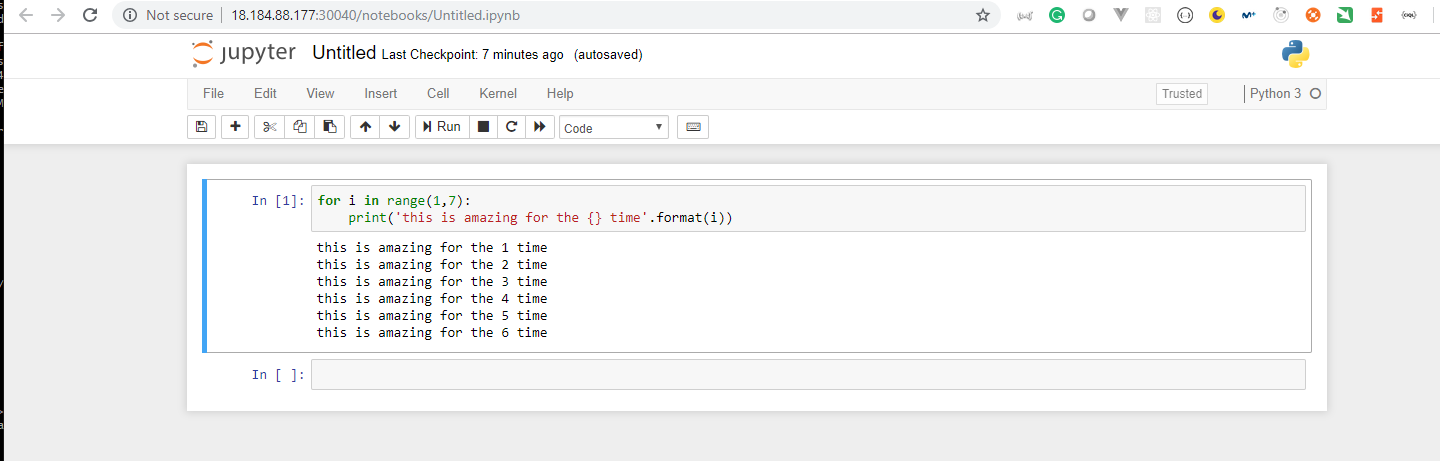

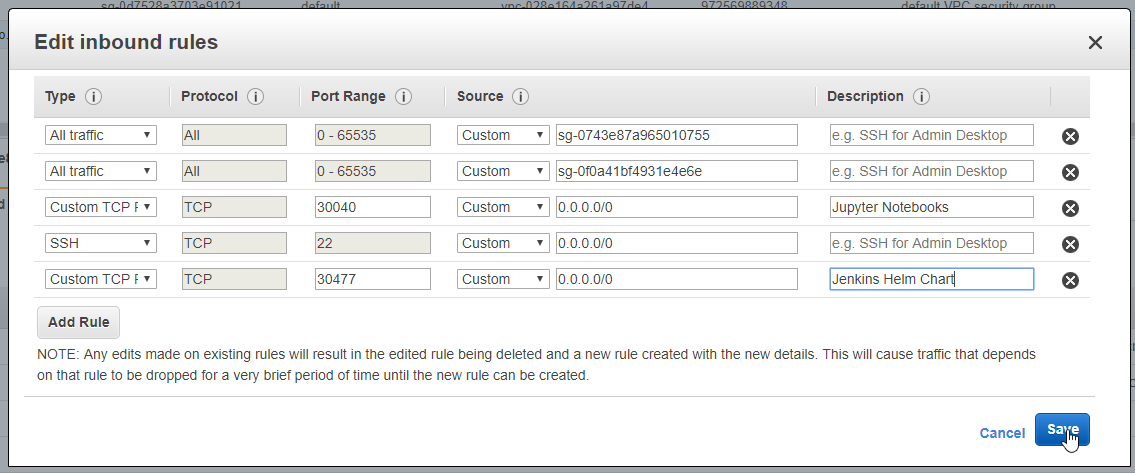

- 16. How to Run Jupyter Notebooks in Docker on local

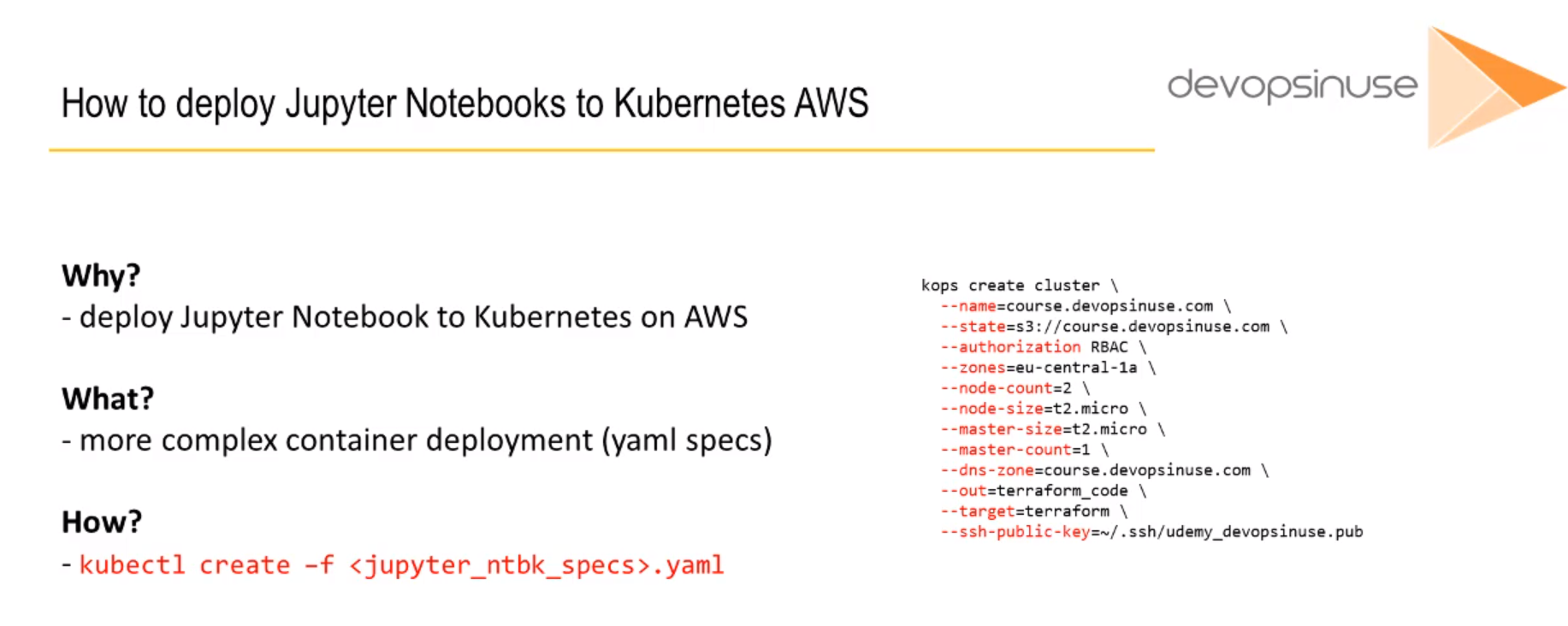

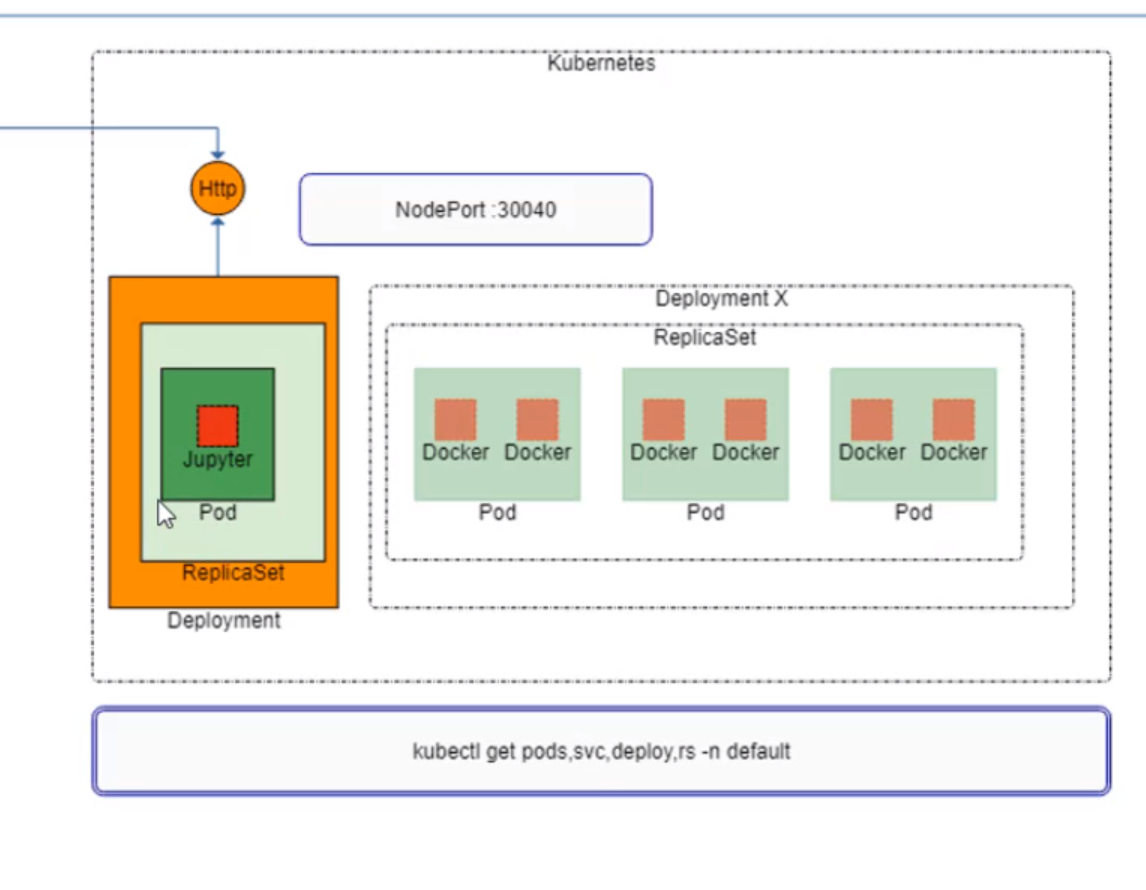

- 17. How to deploy Jupyter Notebooks to Kubernetes AWS (Part 1)

- 18. Materials: How to deploy Juypyter Notebooks to Kubernetes via YAML file

- 19. How to deploy Jupyter Notebooks to Kubernetes AWS (Part 2)

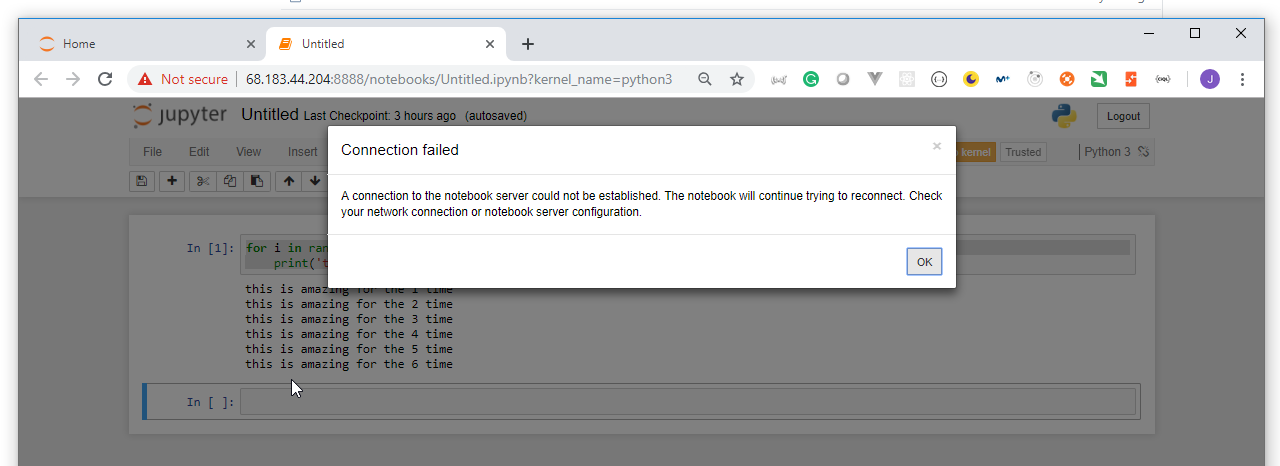

- 20. How to deploy Jupyter Notebooks to Kubernetes AWS (Part 3)

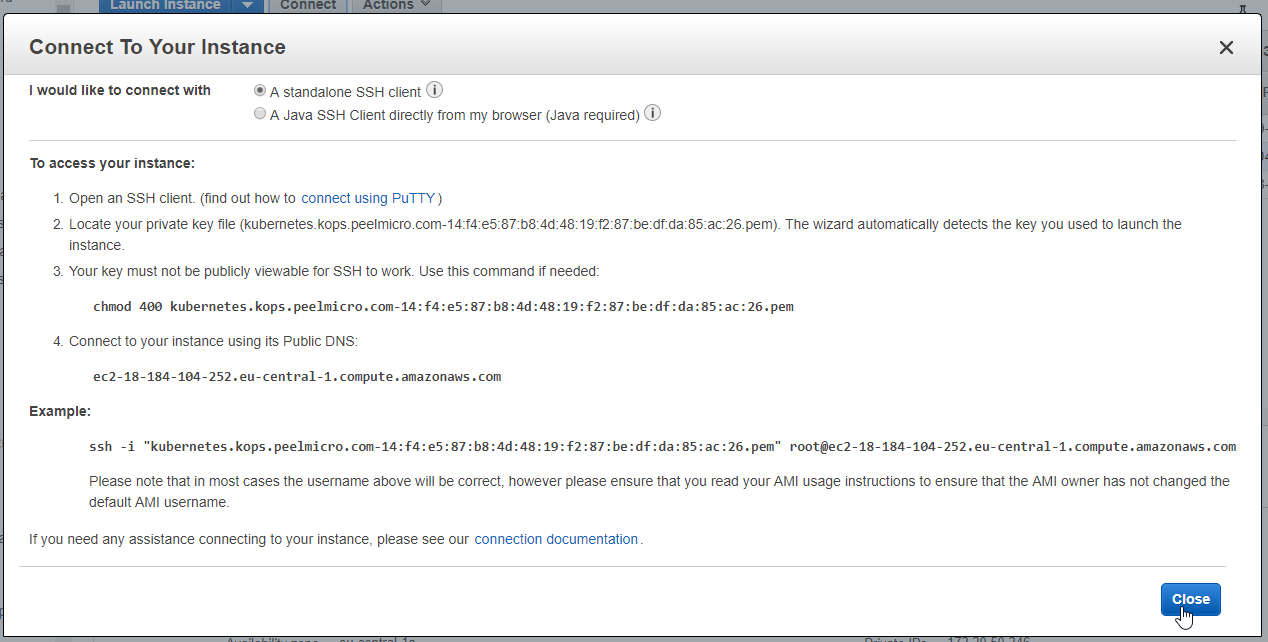

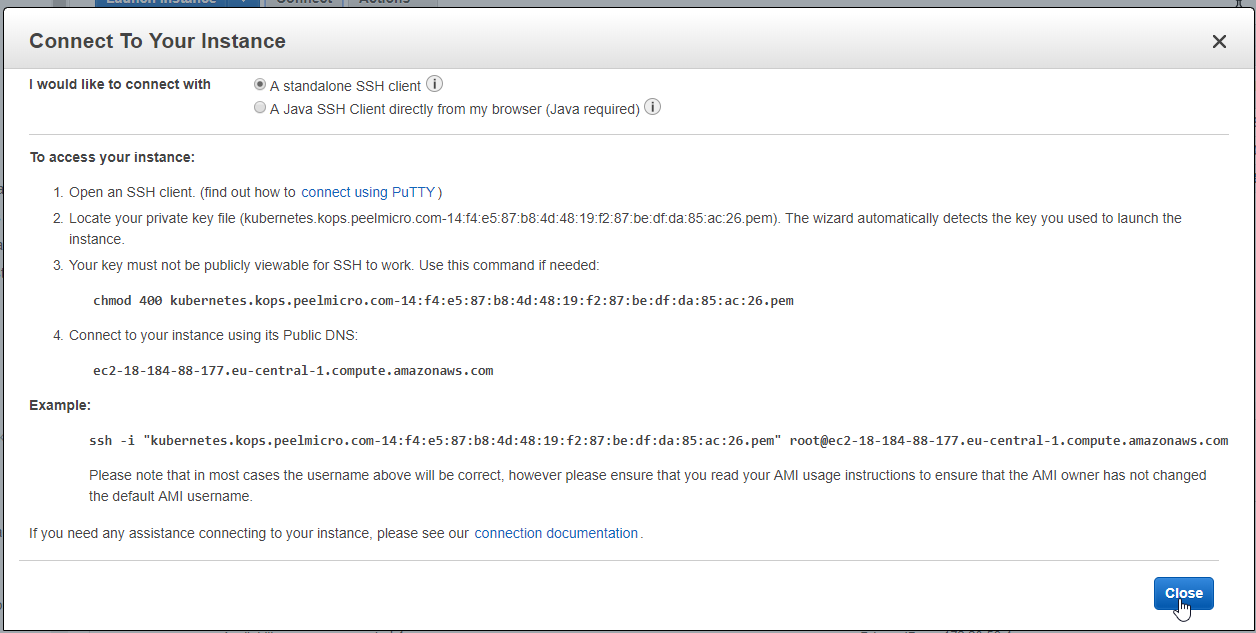

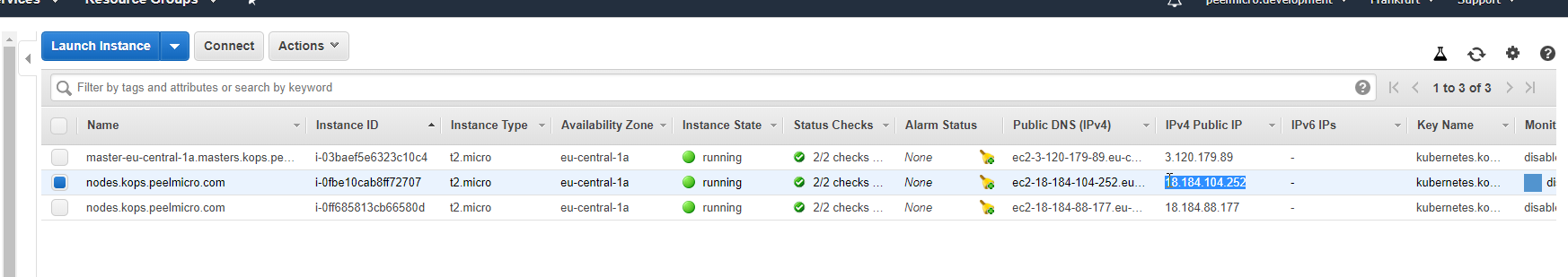

- 21. Materials: How to SSH to the physical servers in AWS

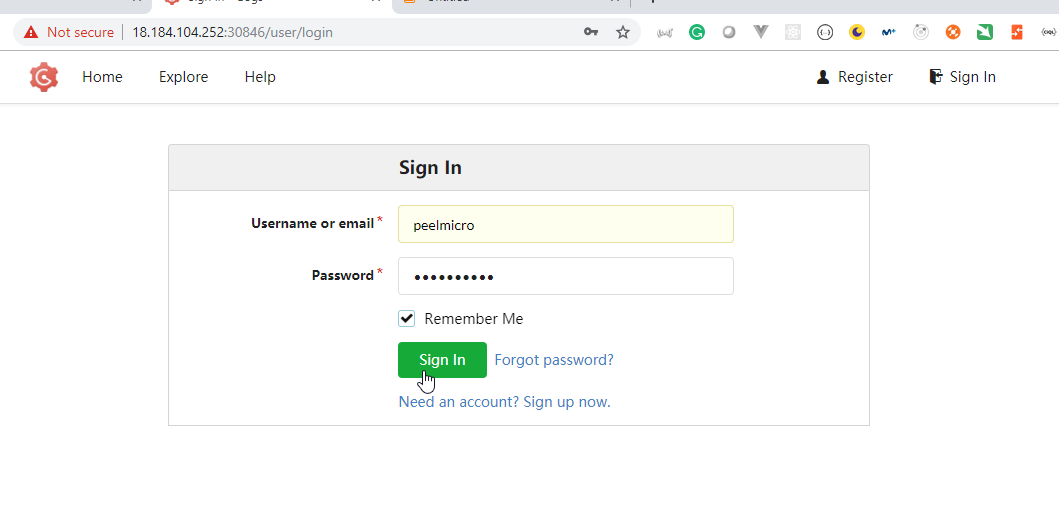

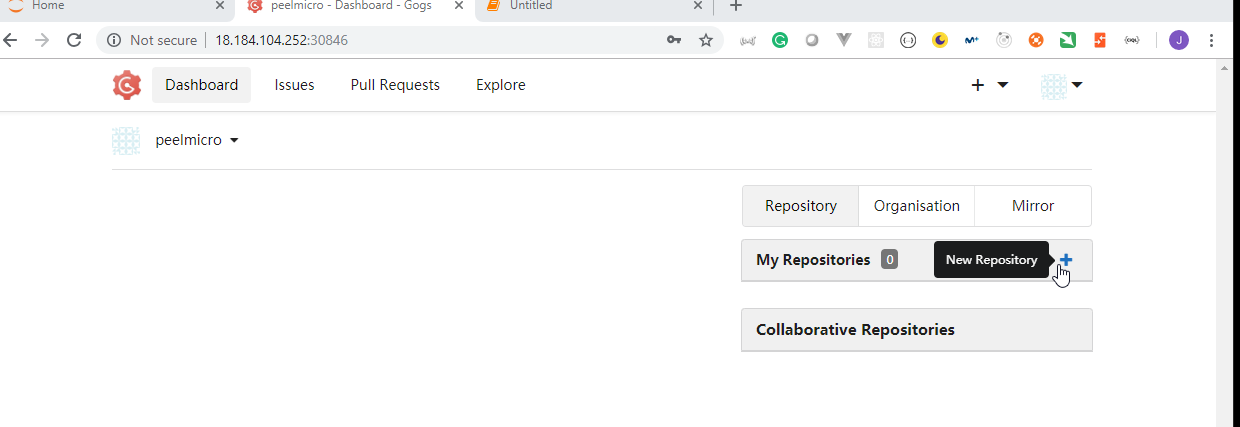

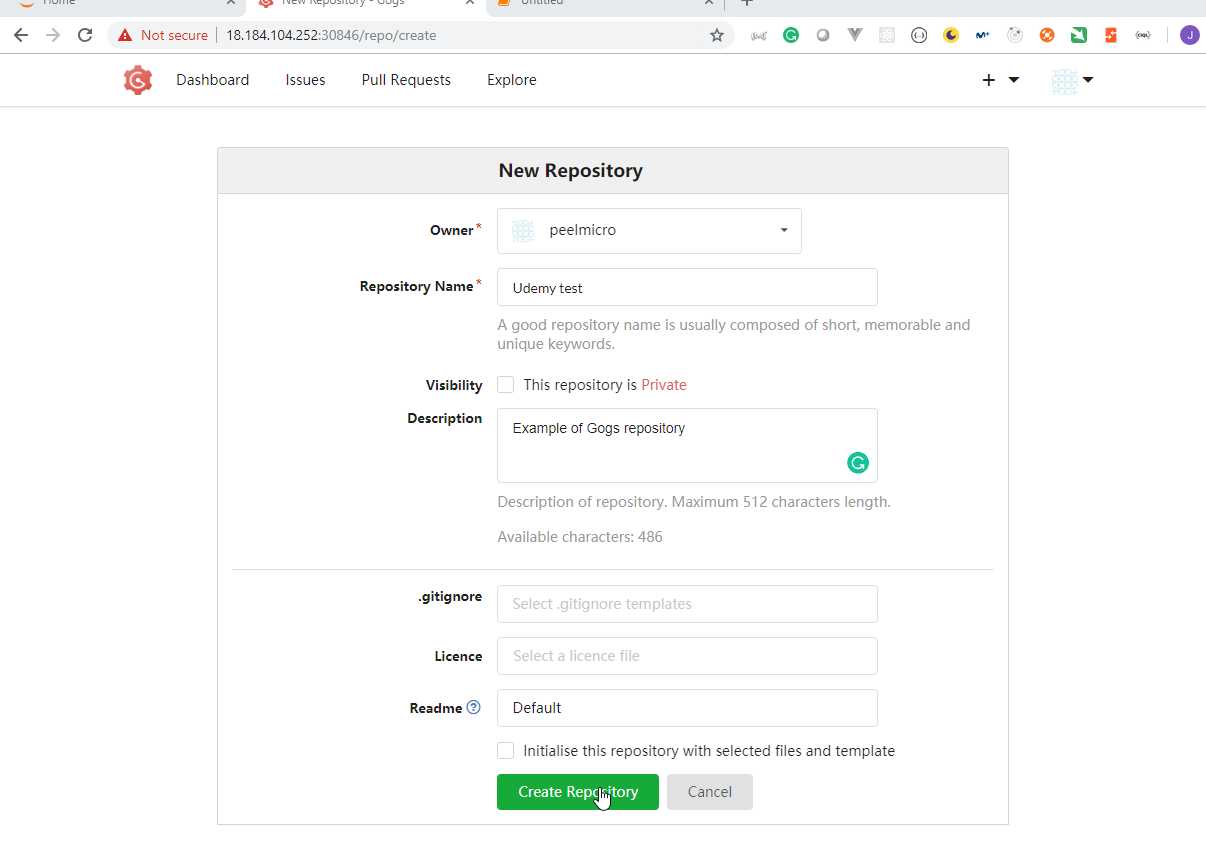

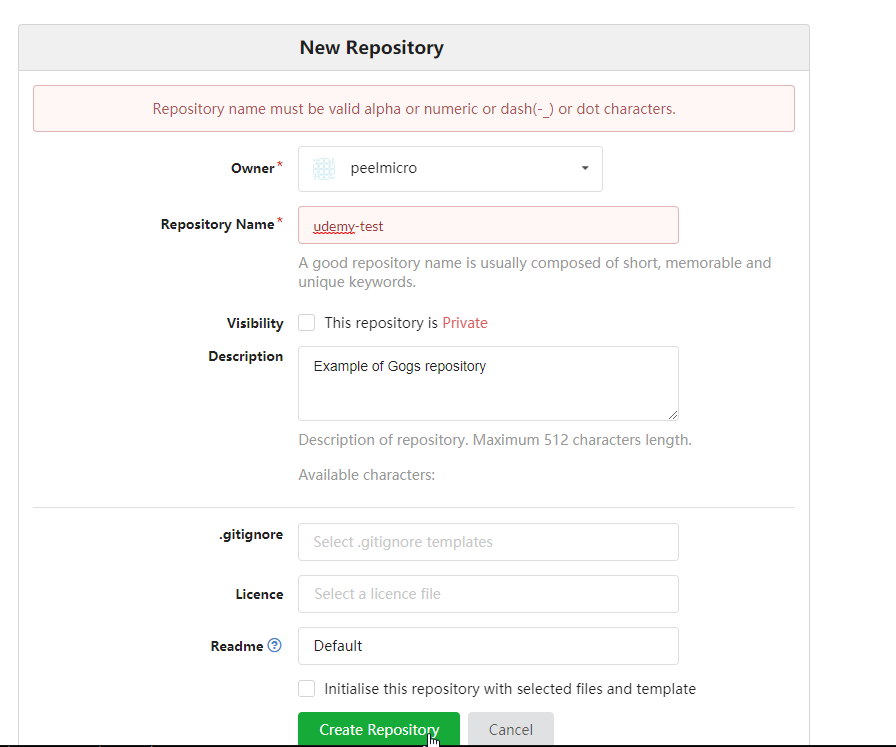

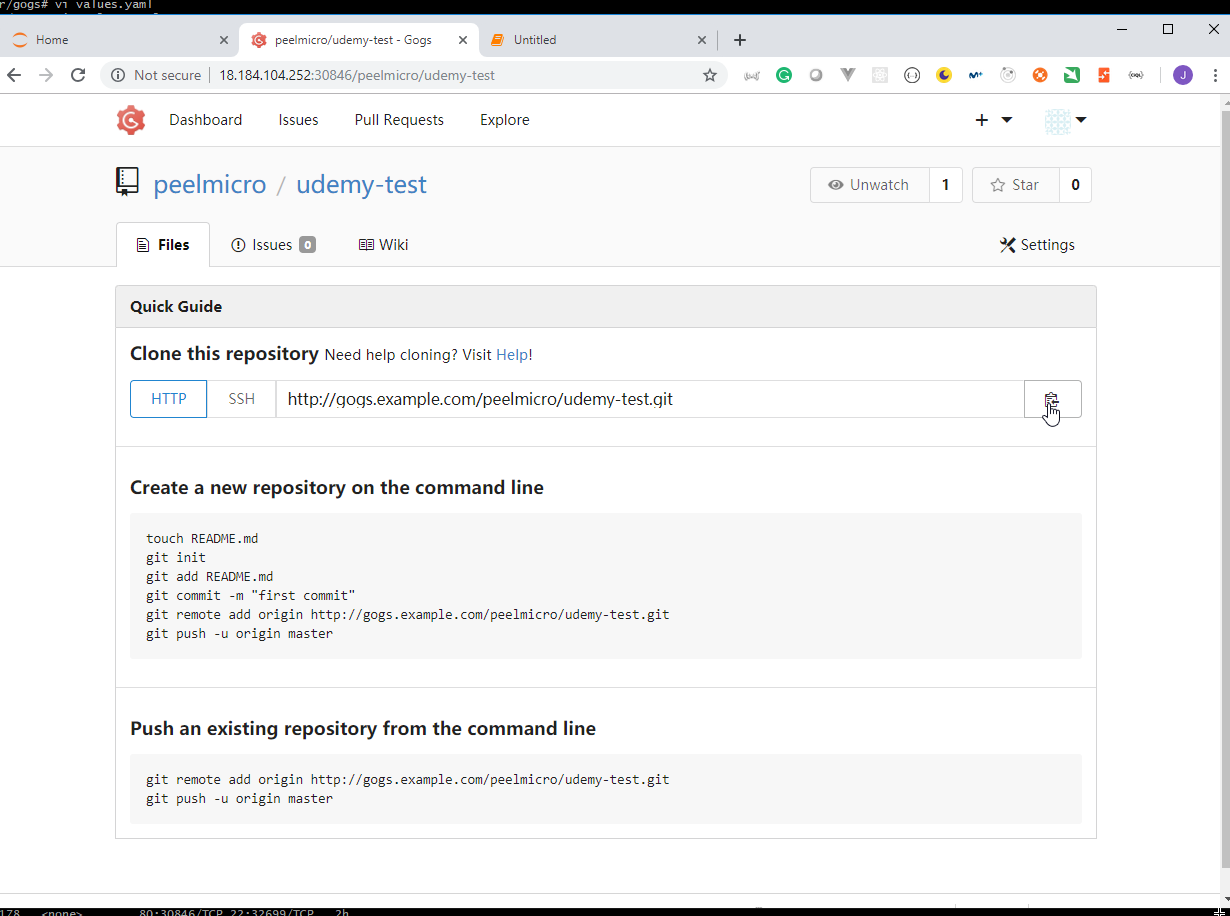

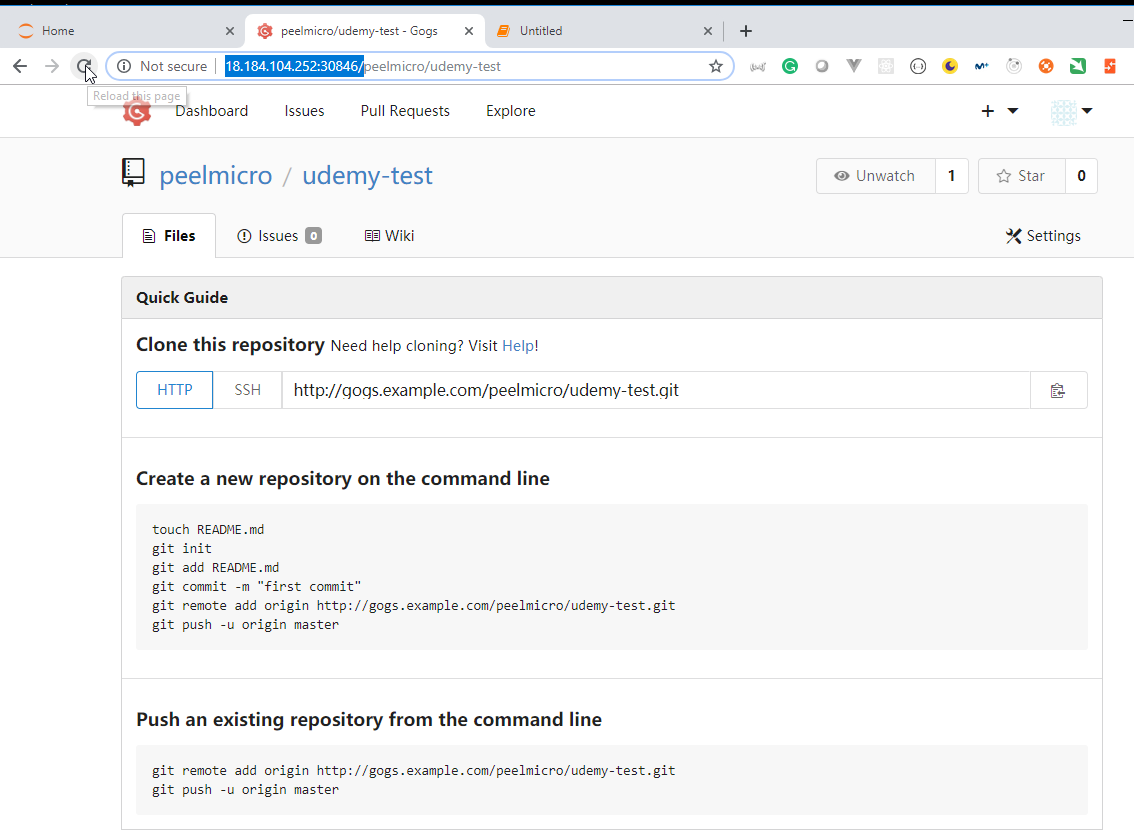

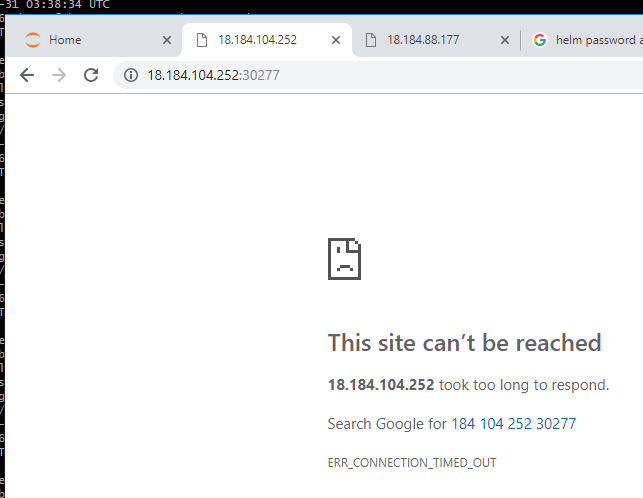

- 22. How to deploy Jupyter Notebooks to Kubernetes AWS (Part 4)

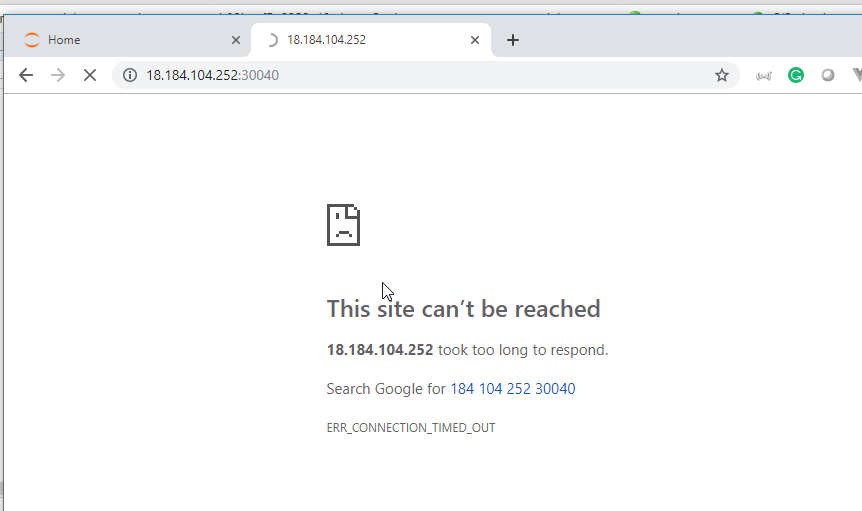

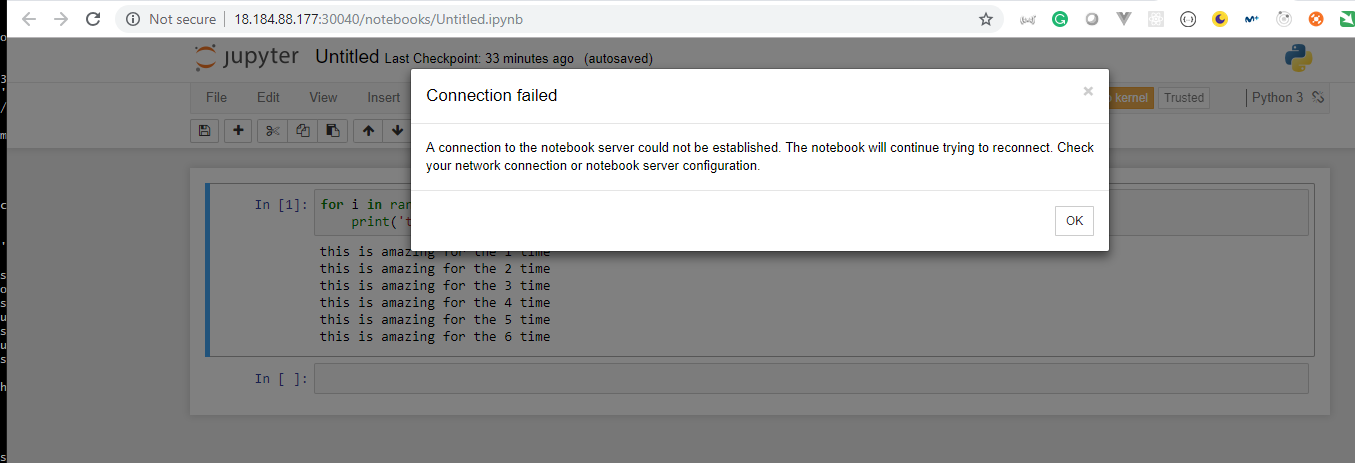

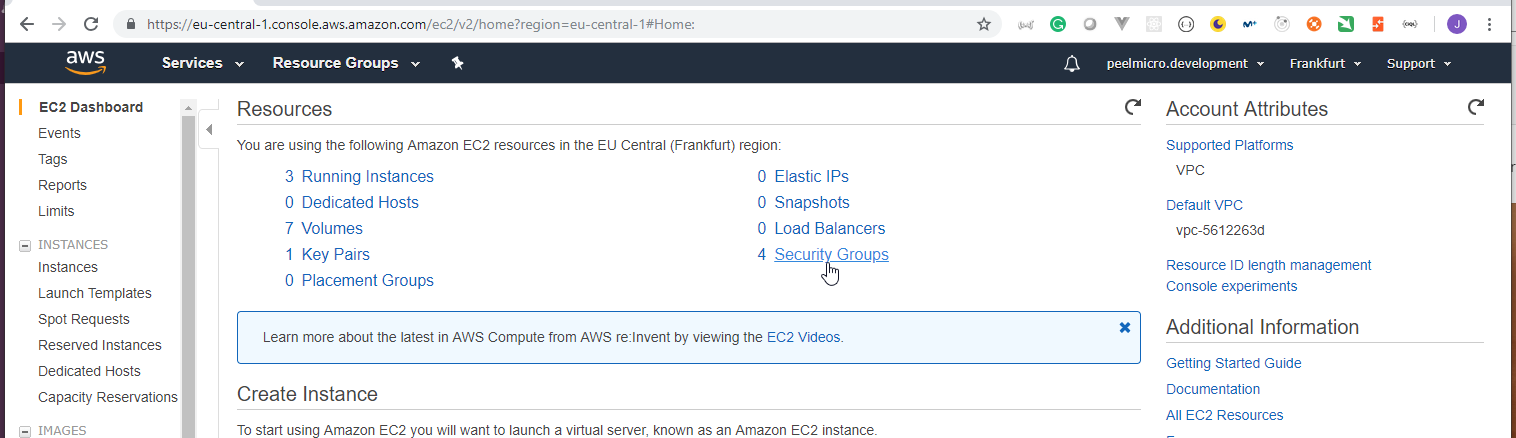

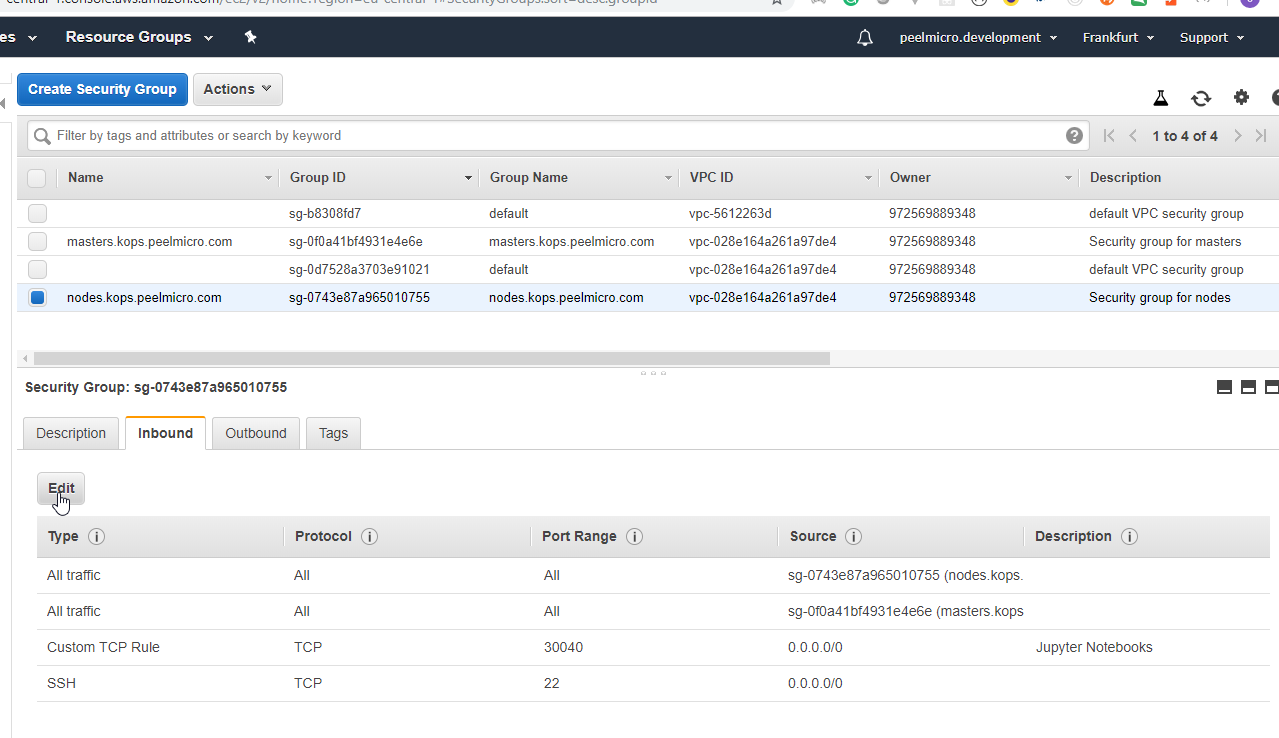

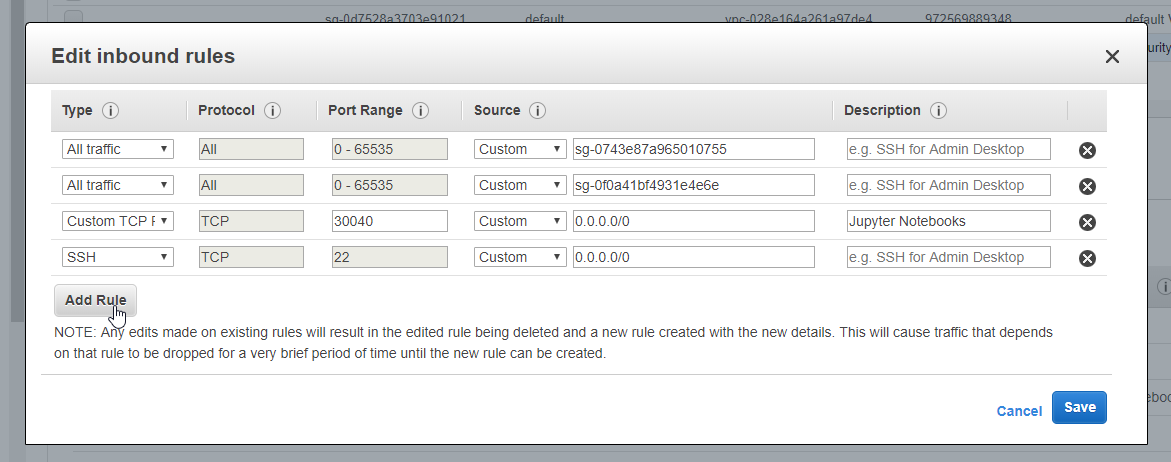

- 23. How to deploy Jupyter Notebooks to Kubernetes AWS (Part 5)

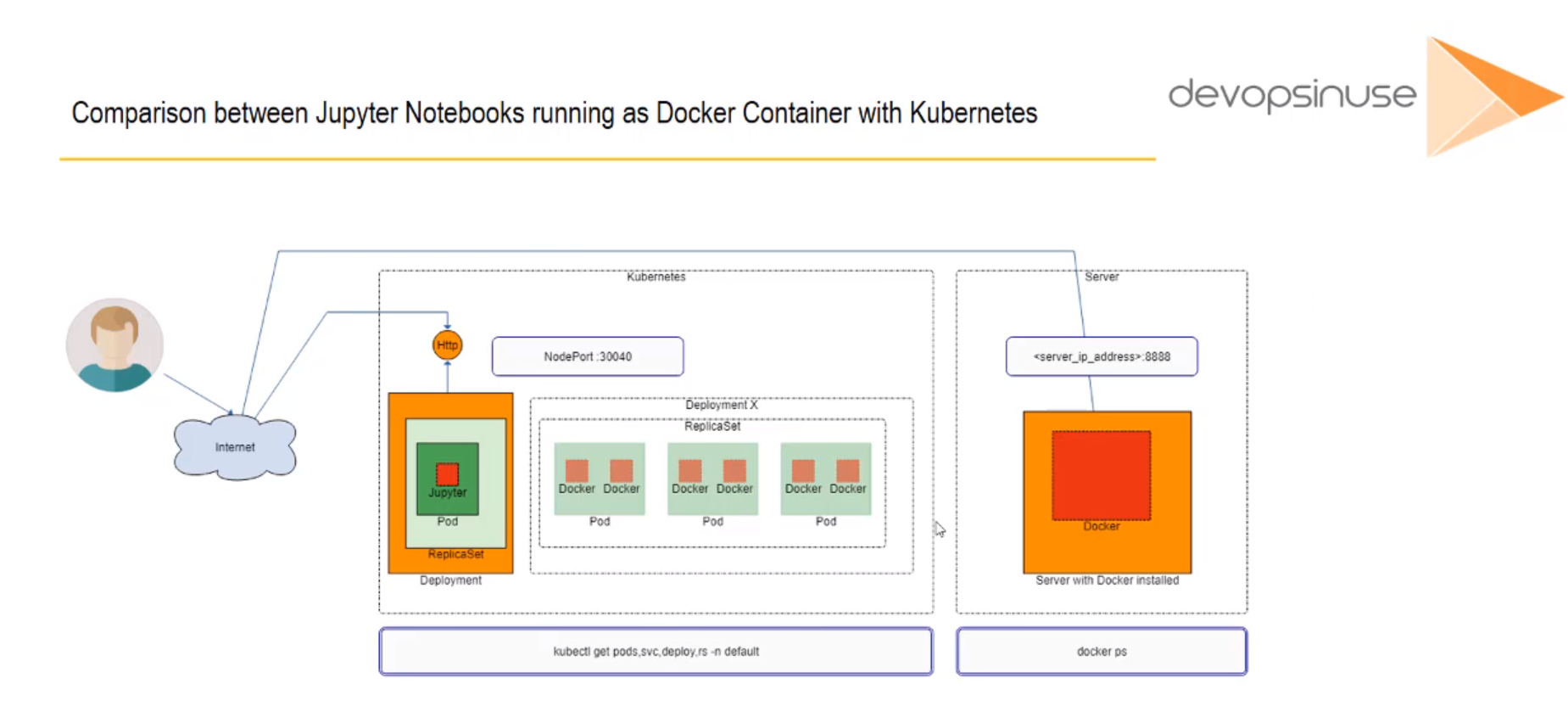

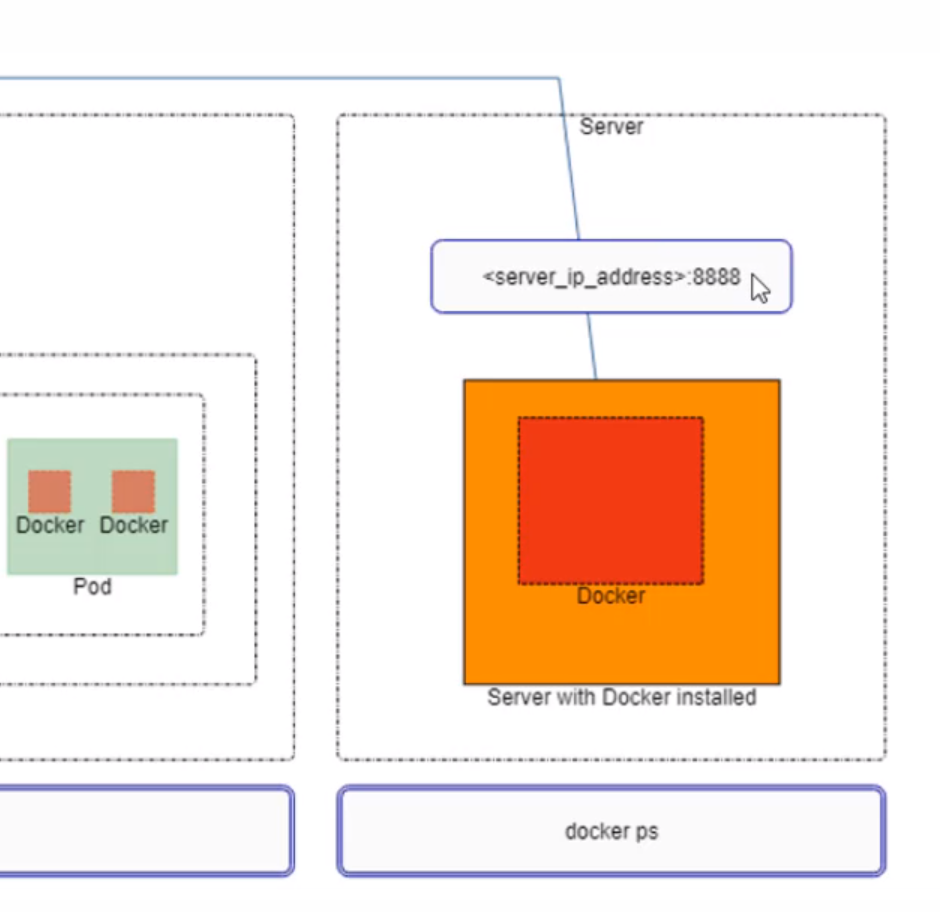

- 24. Comparison between Jupyter Notebooks running as Docker Container with Kubernetes

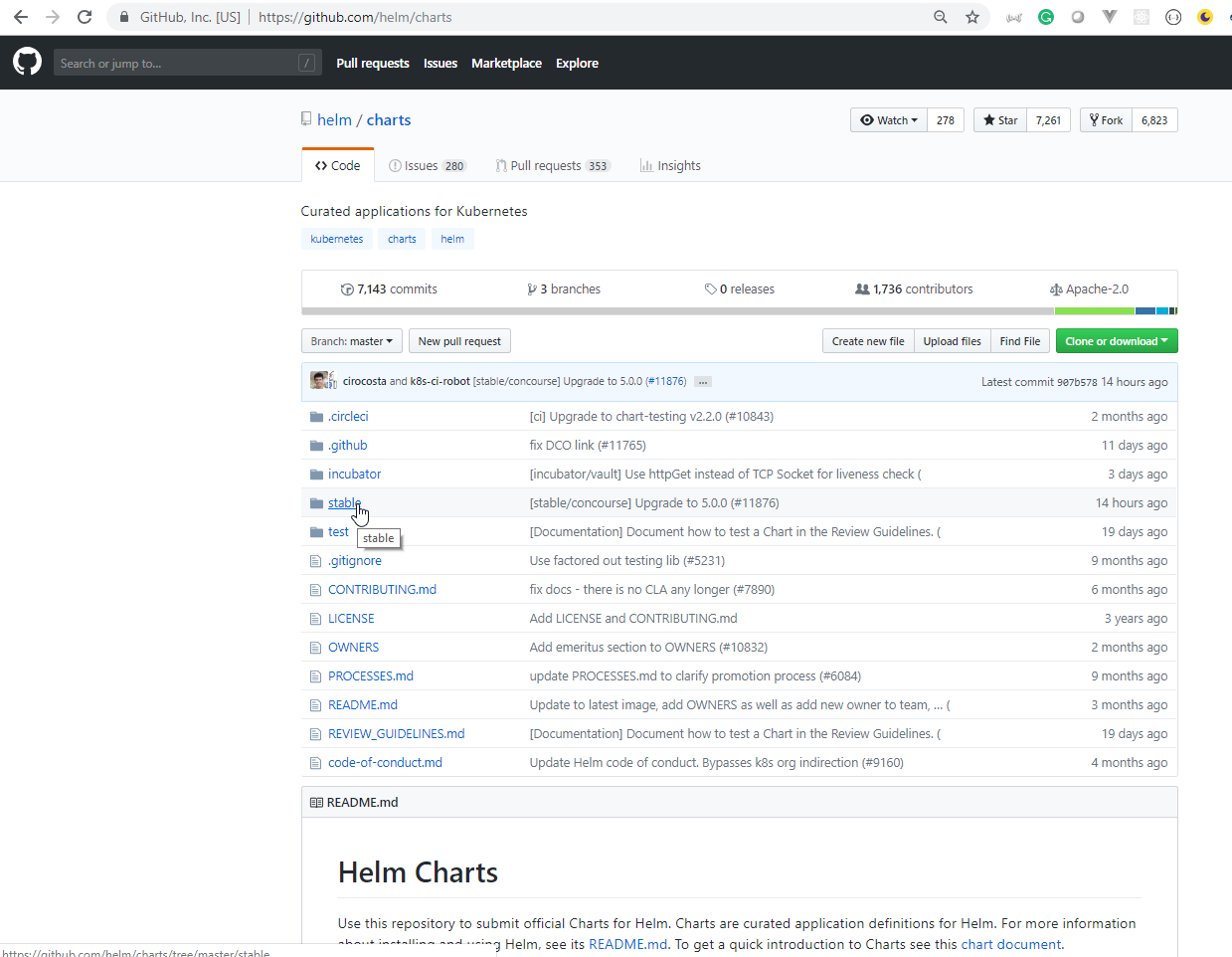

- Section: 3. Introduction to Helm Charts

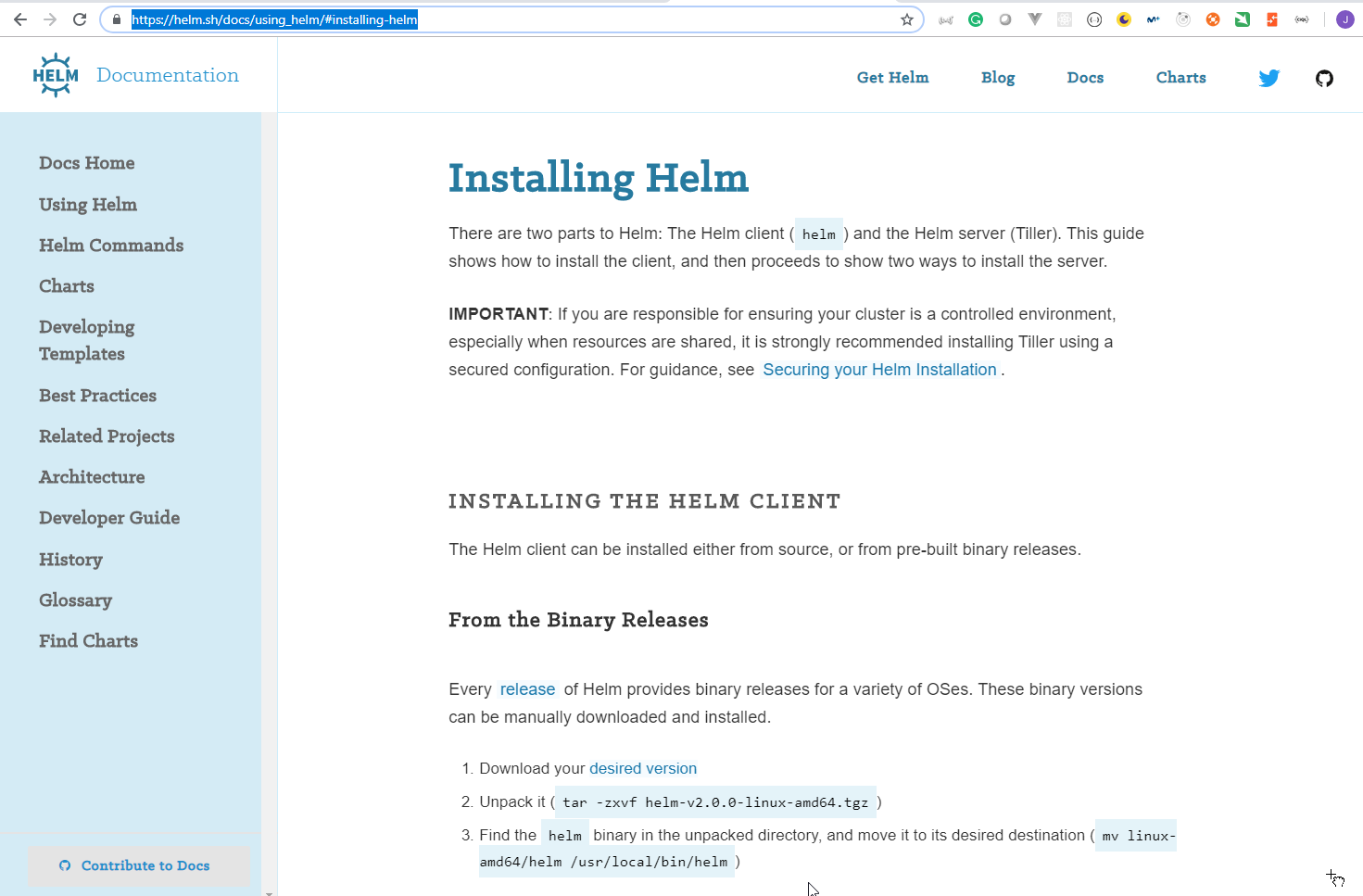

- 25. Materials: Install HELM binary and activate HELM user account in your cluster

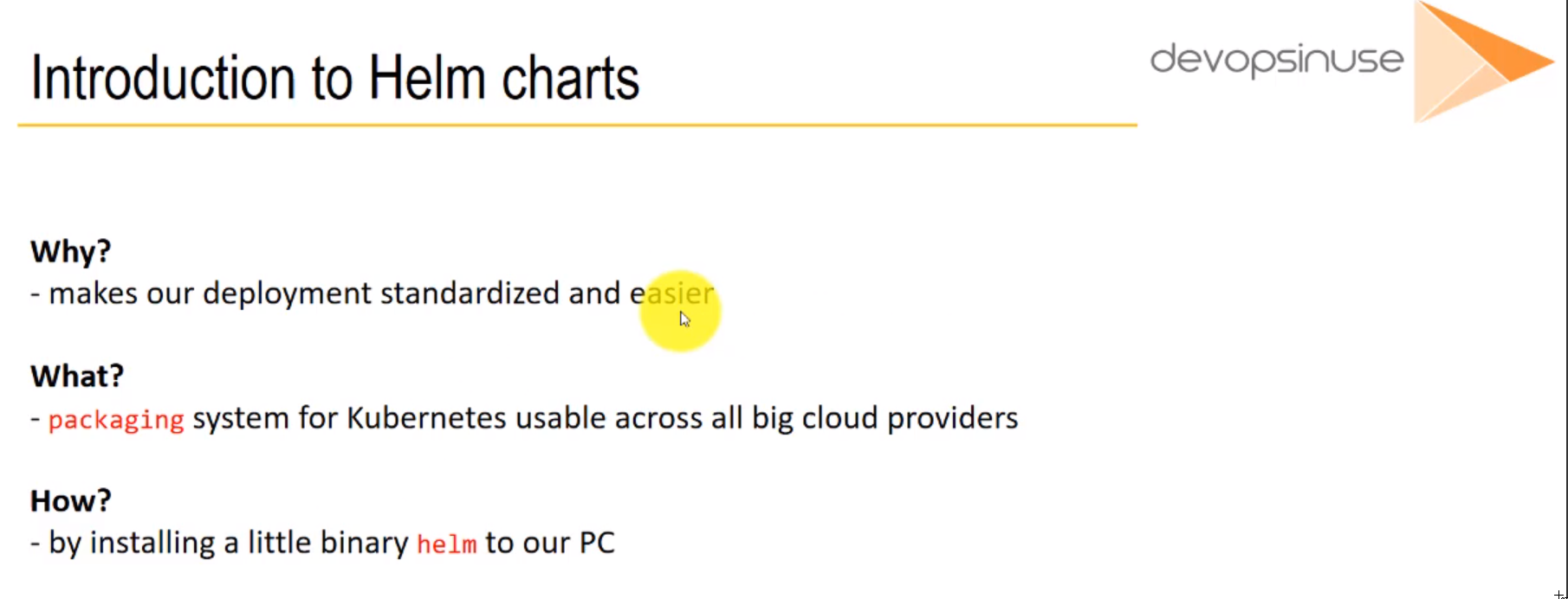

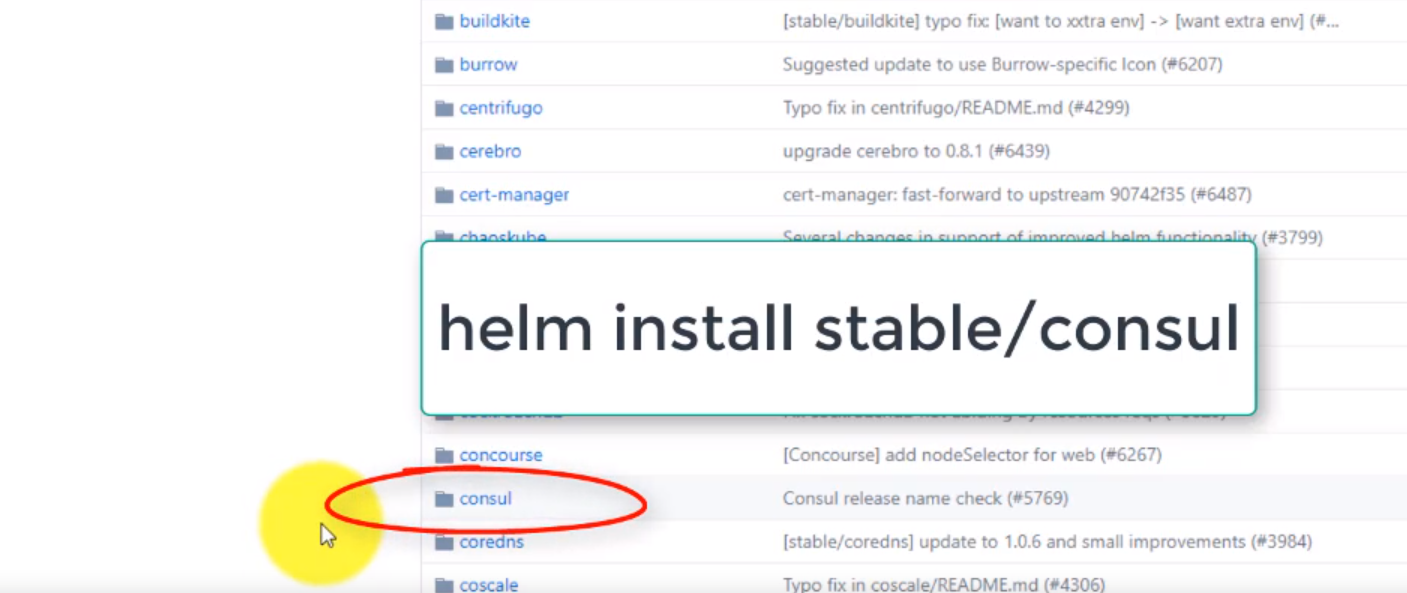

- 26. Introduction to Helm charts

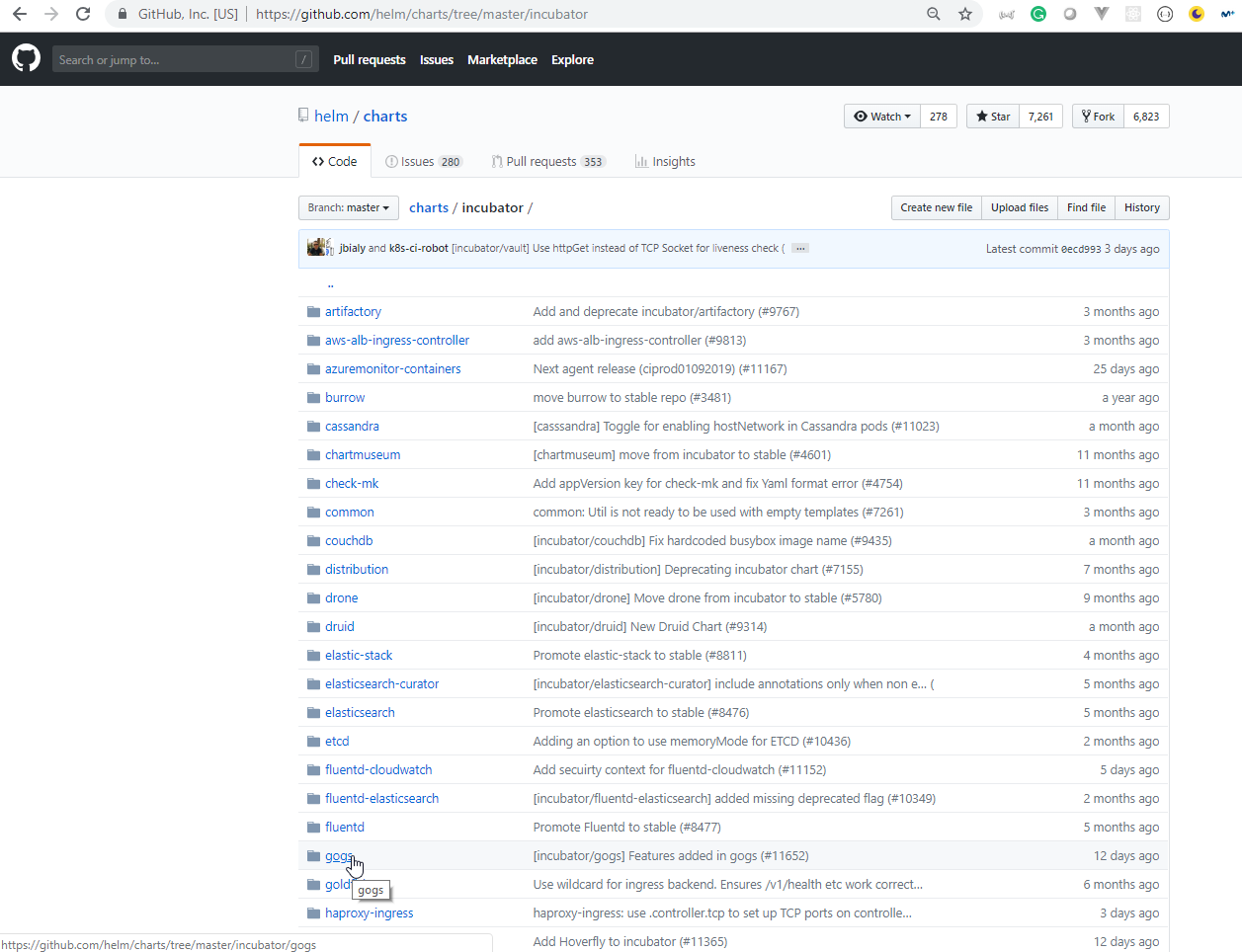

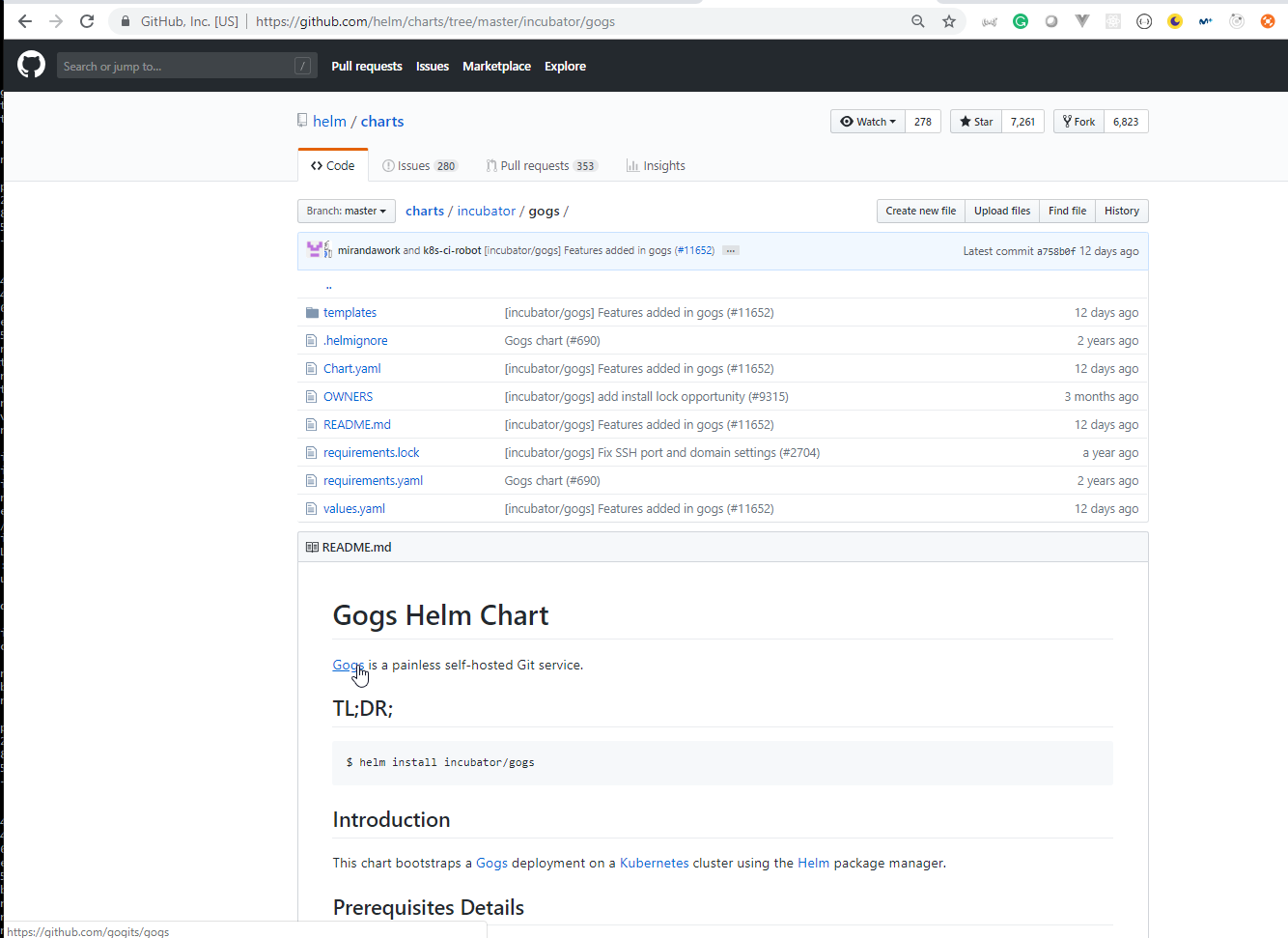

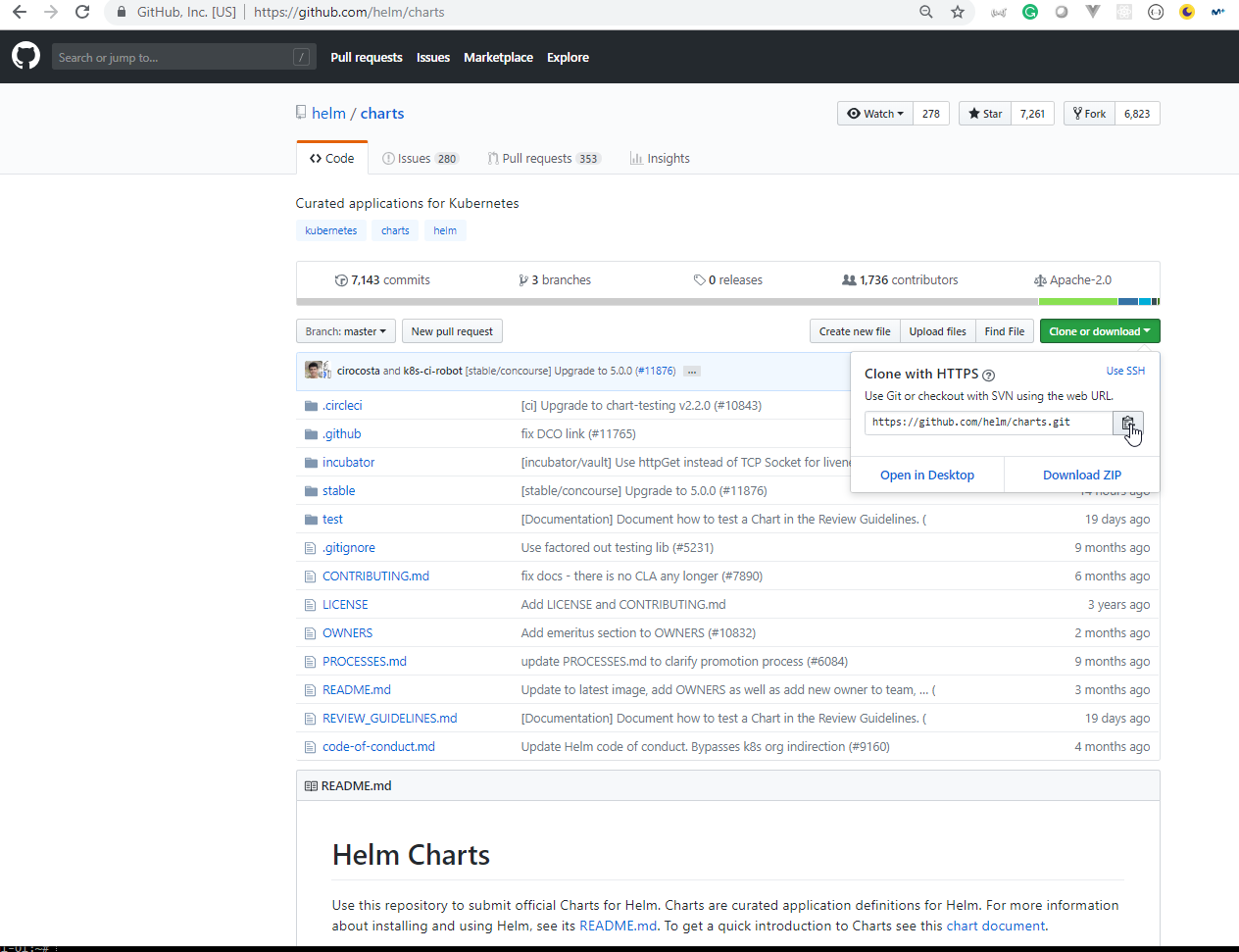

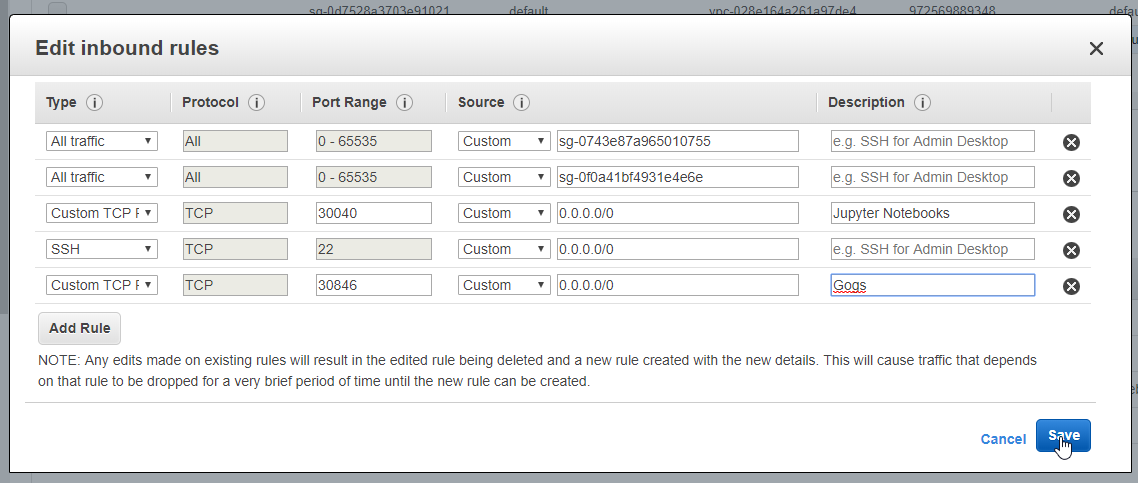

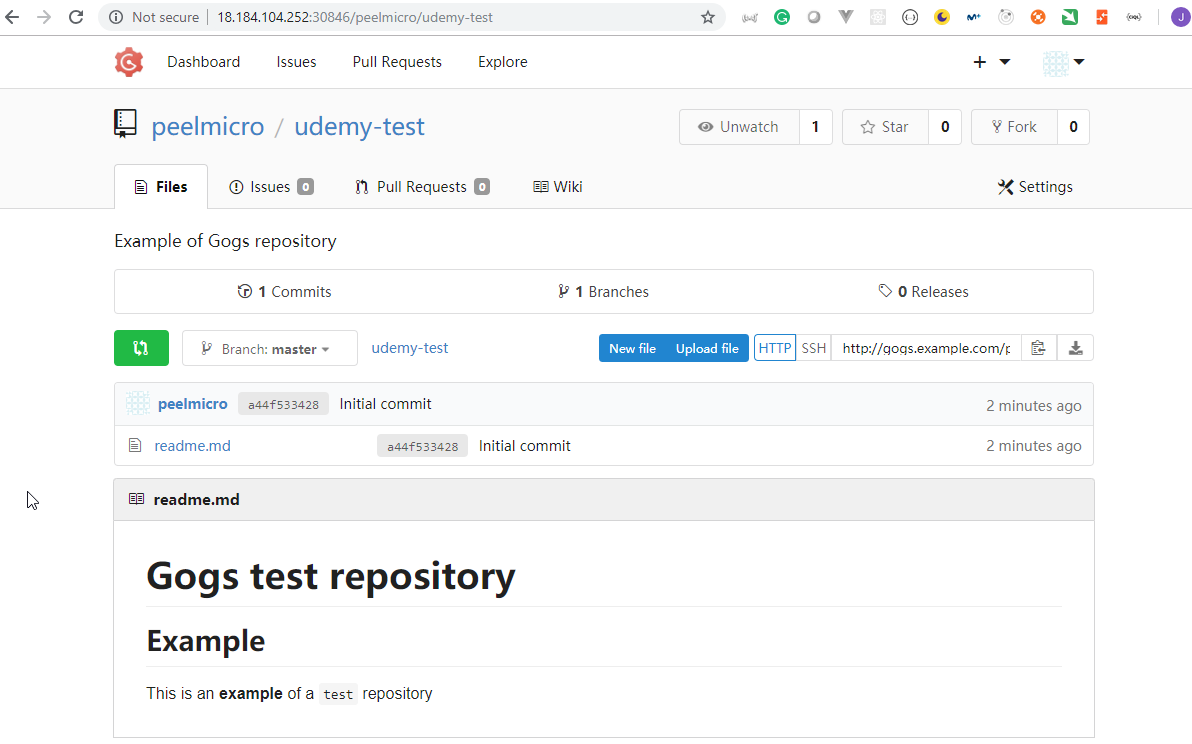

- 27. Materials: Run GOGS helm deployment for the first time

- 28. How to use Helm for the first time

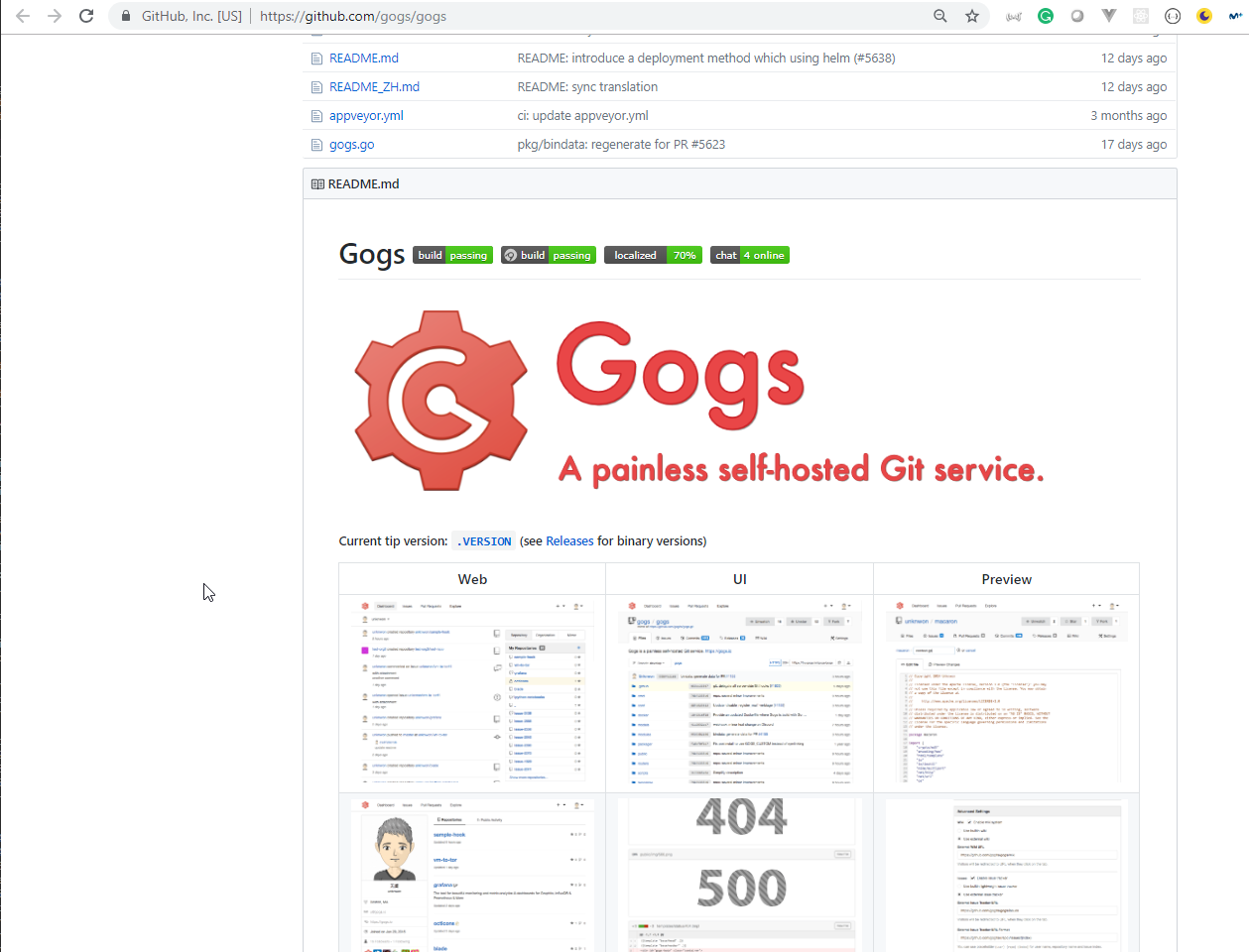

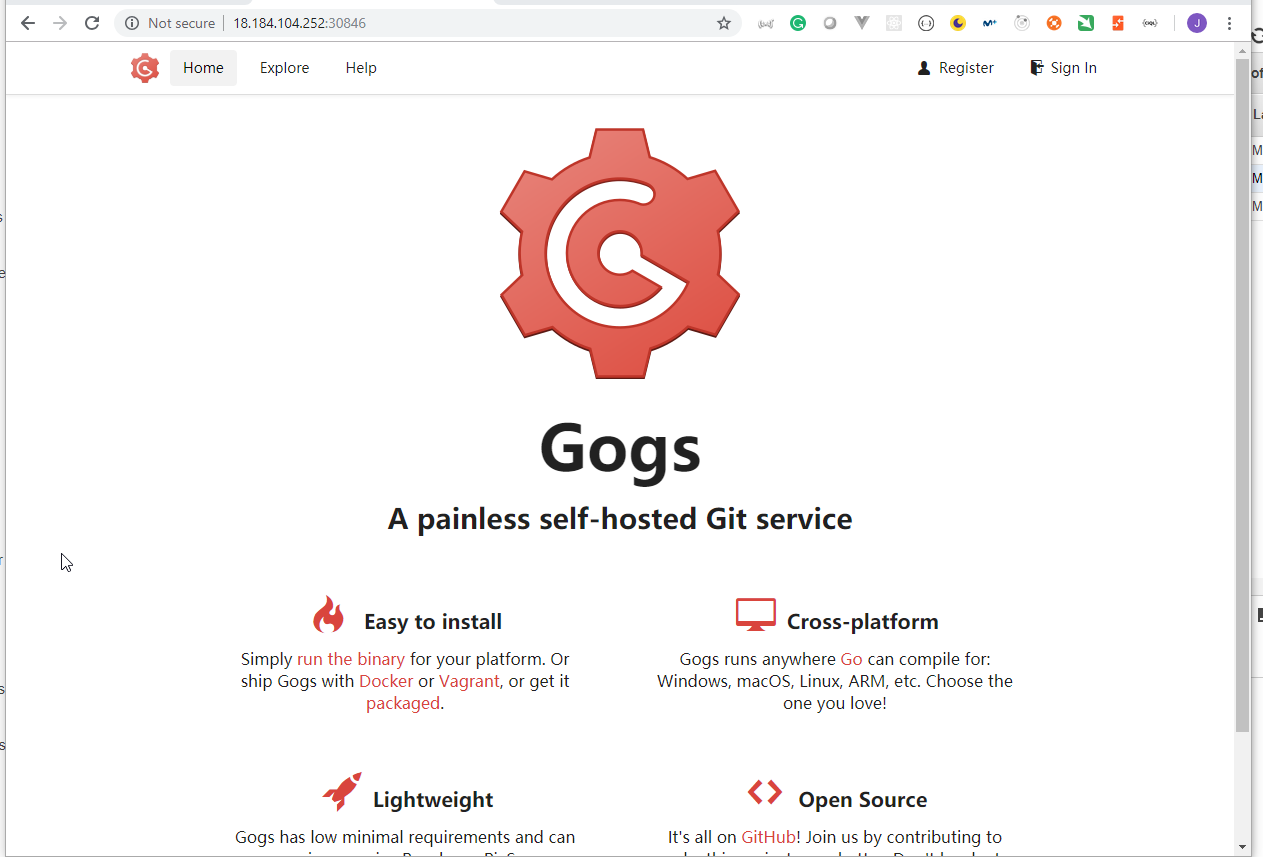

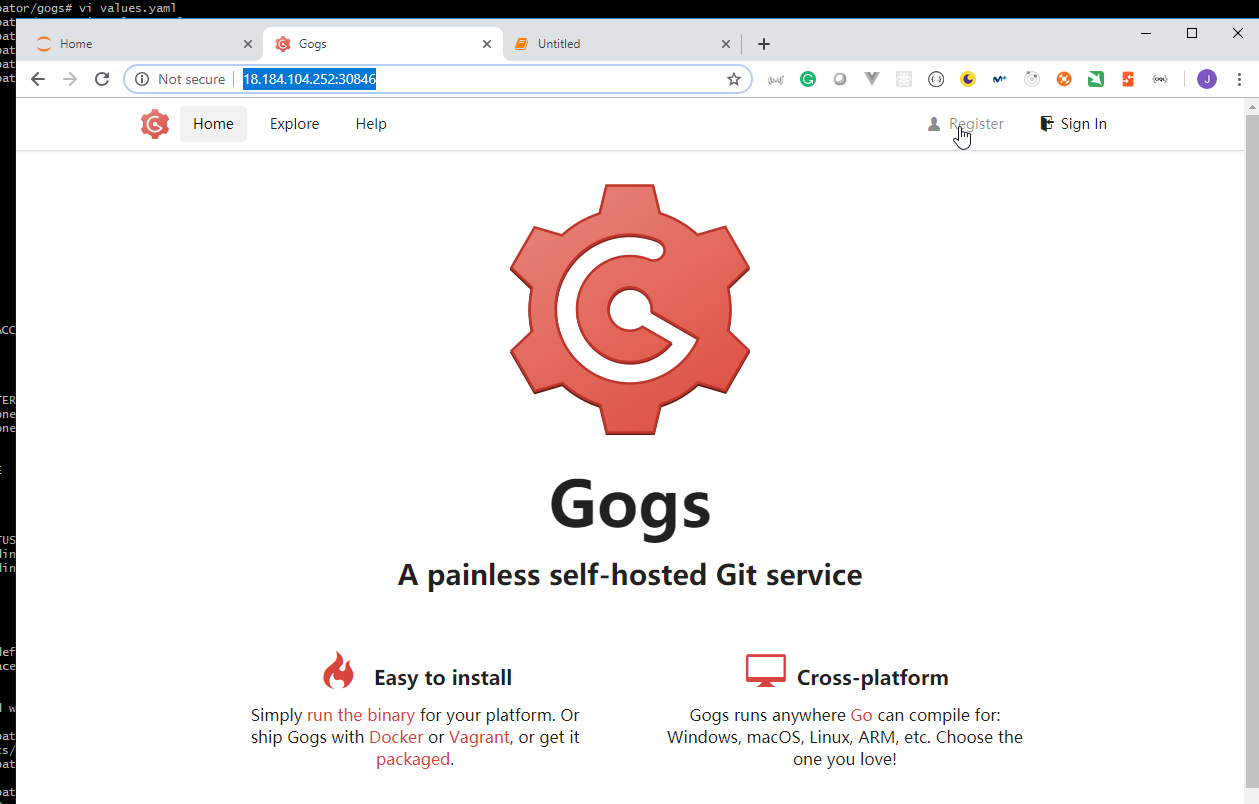

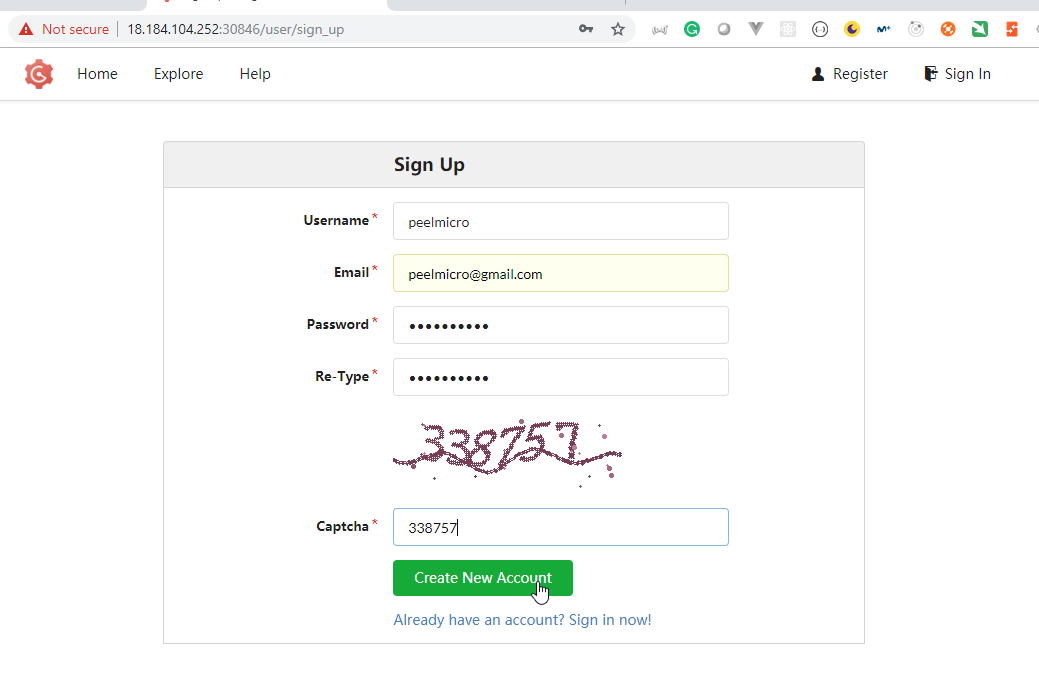

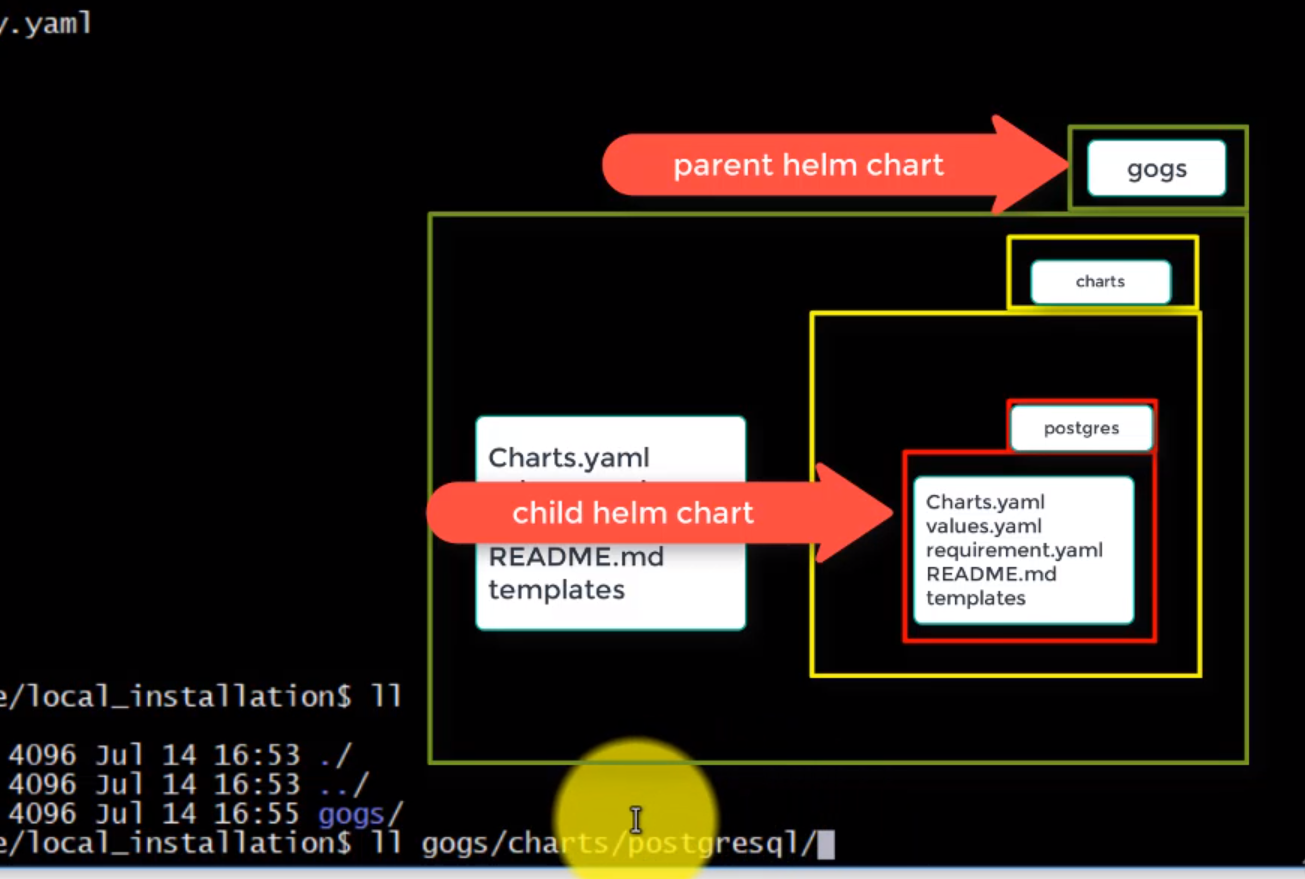

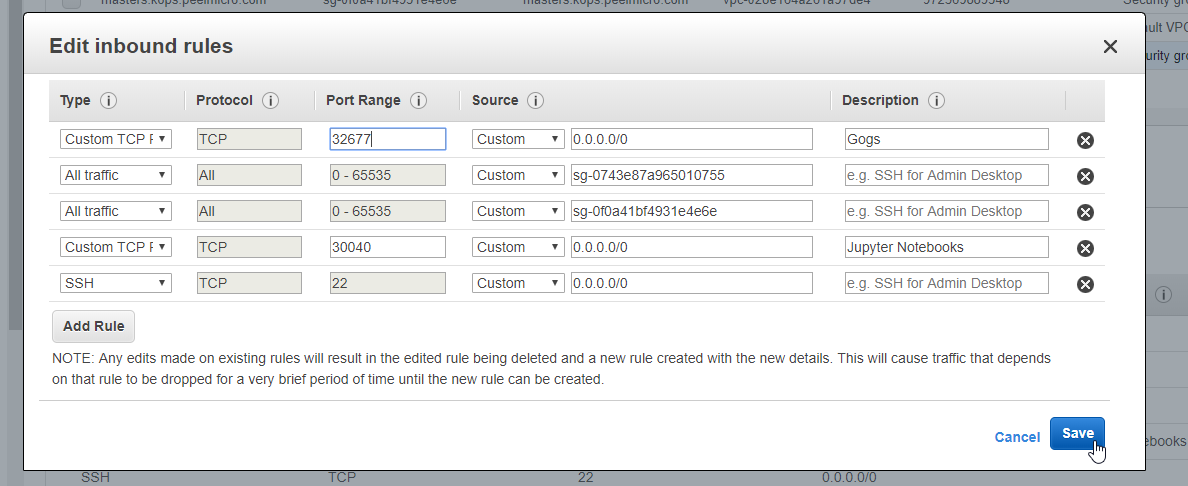

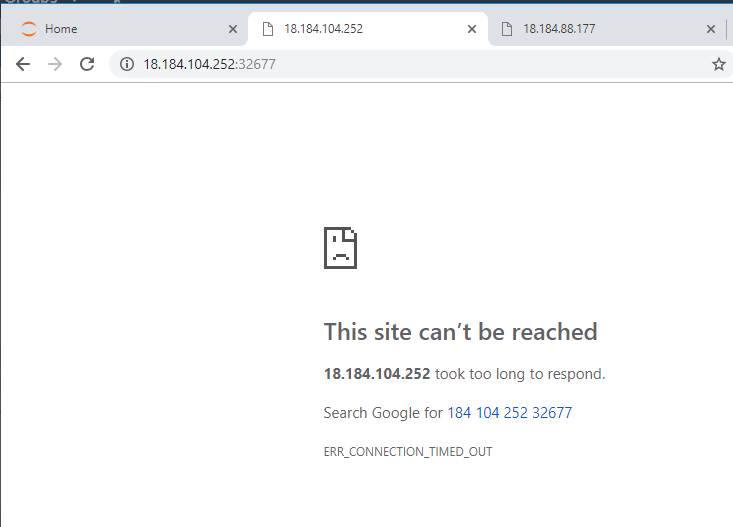

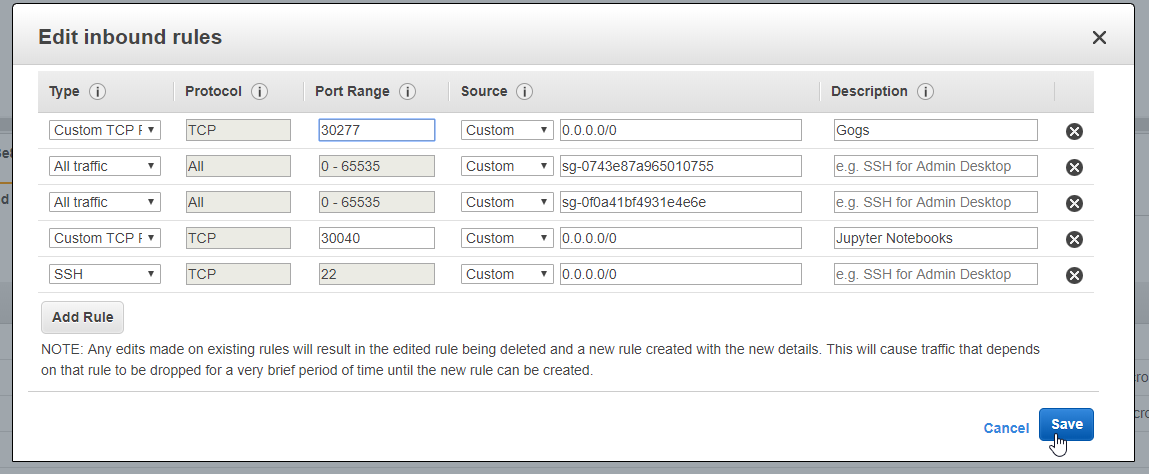

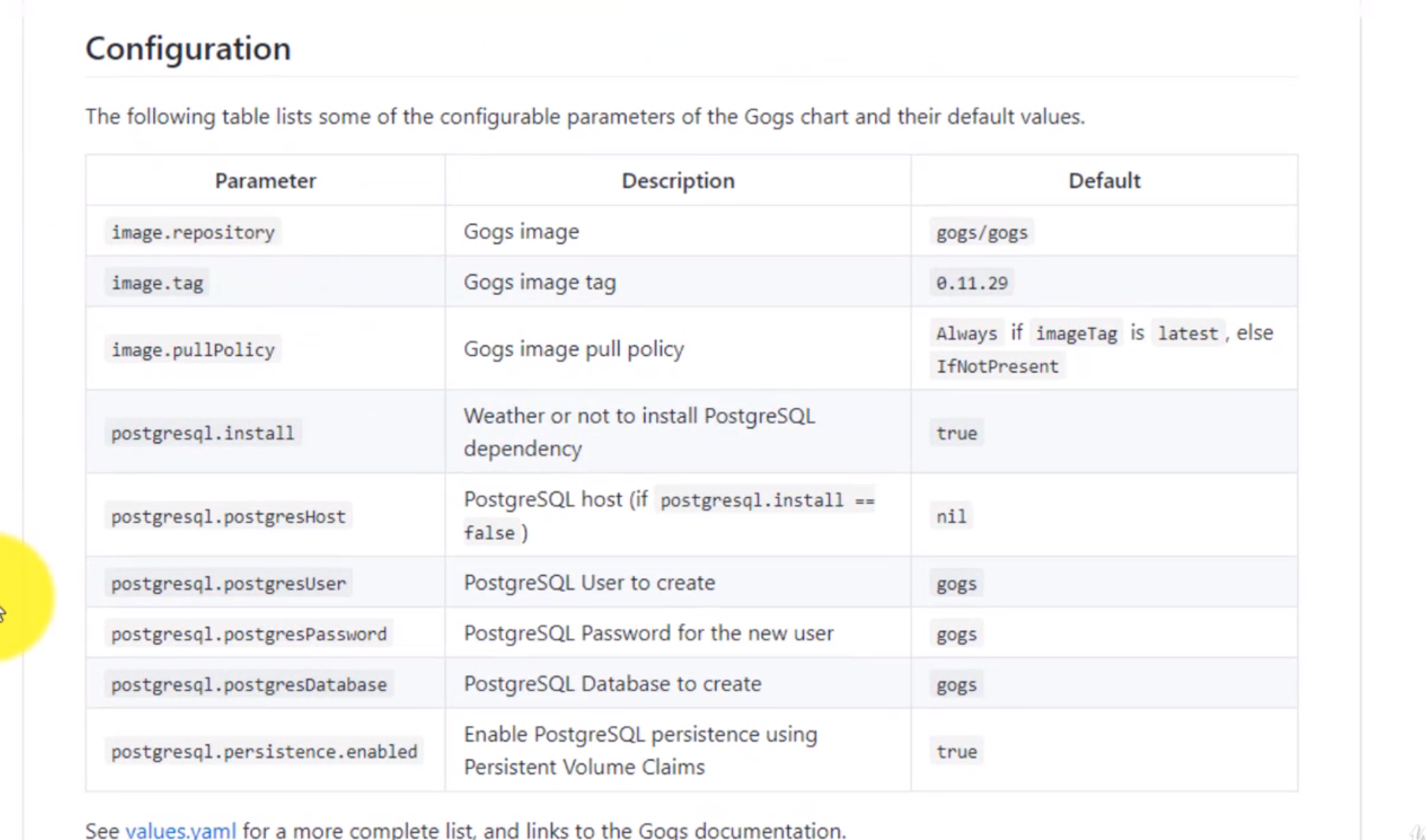

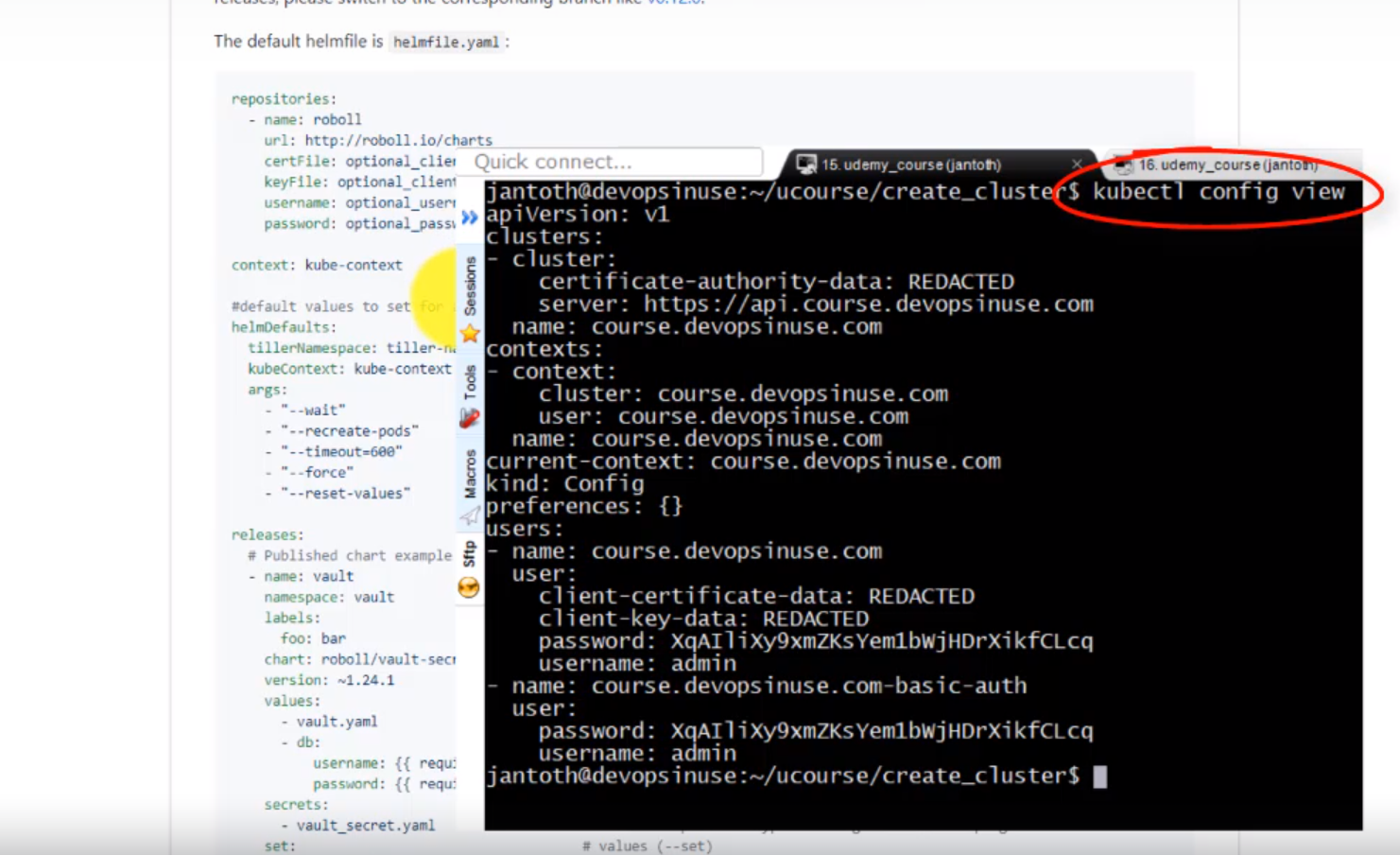

- 29. How to understand helm Gogs deployment

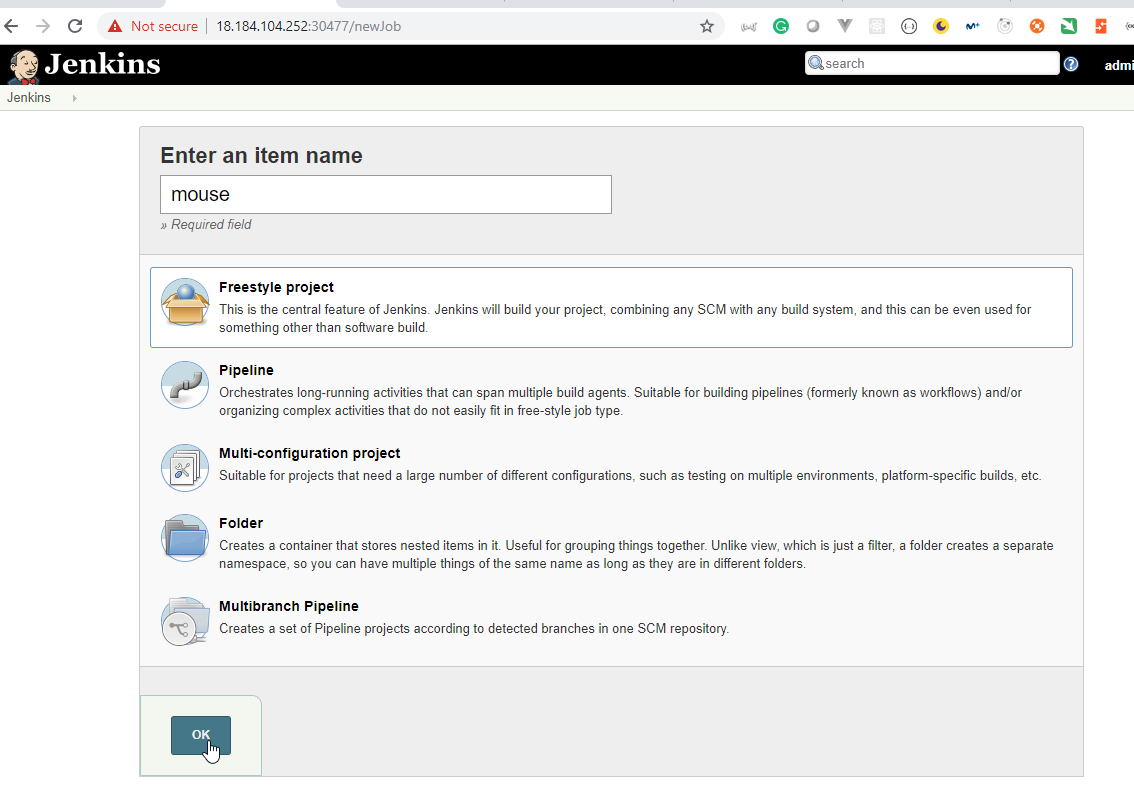

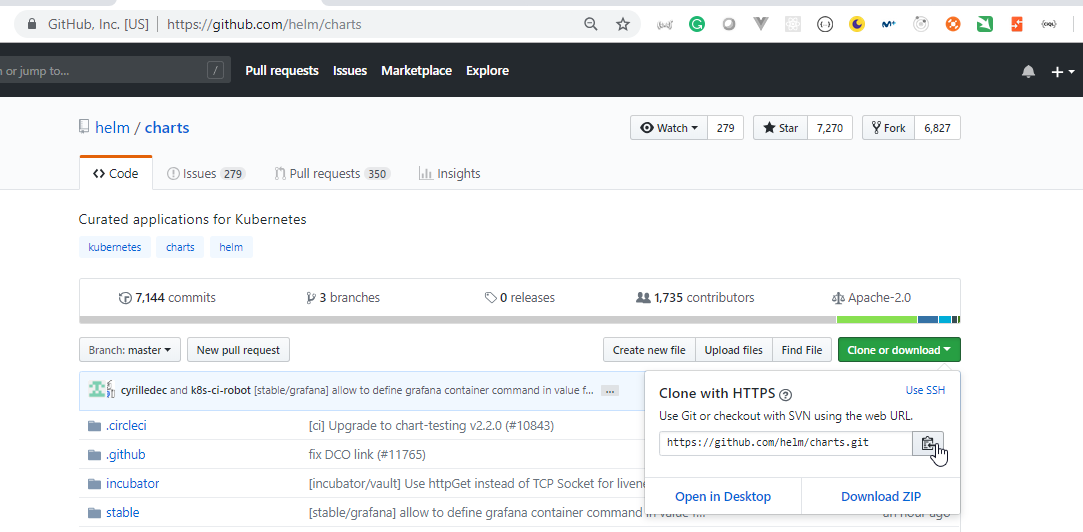

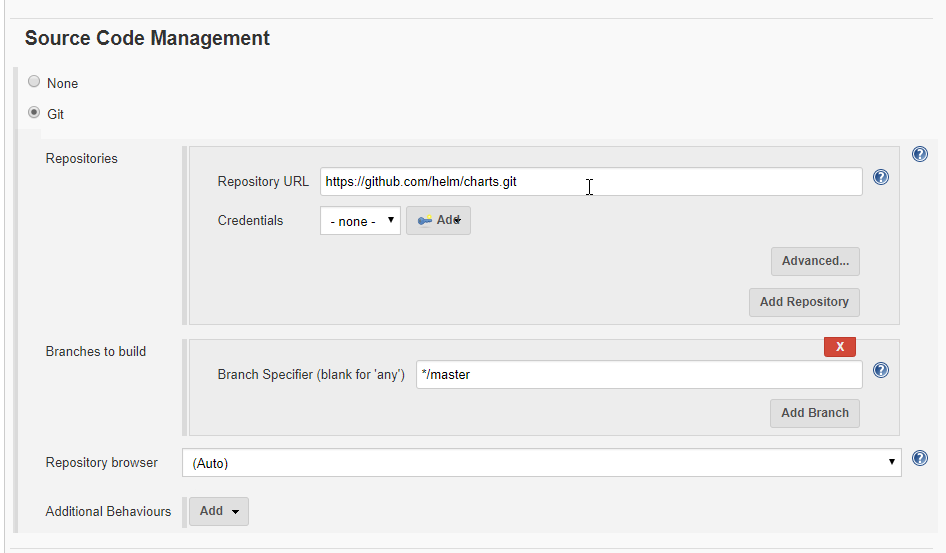

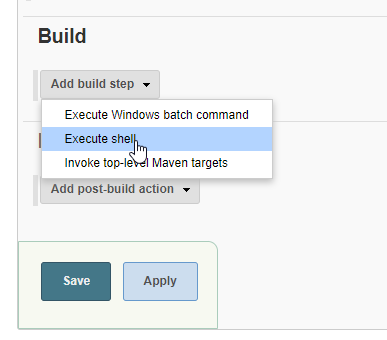

- 30. Materials: How to use HELM to deploy GOGS from locally downloaded HELM CHARTS

- 31. How to deploy Gogs from local repository

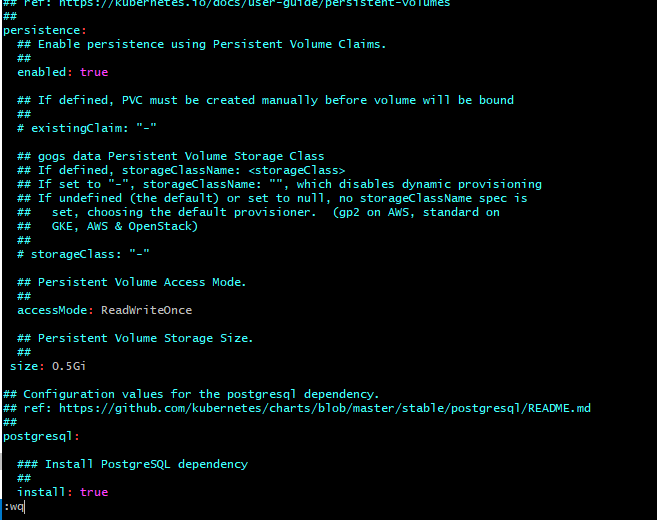

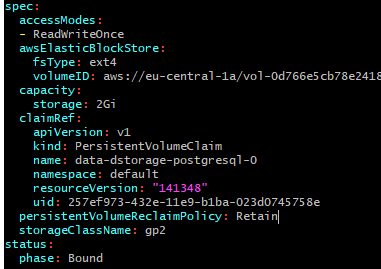

- 32. Materials: How to understand persistentVolumeClaim and persistentVolumes

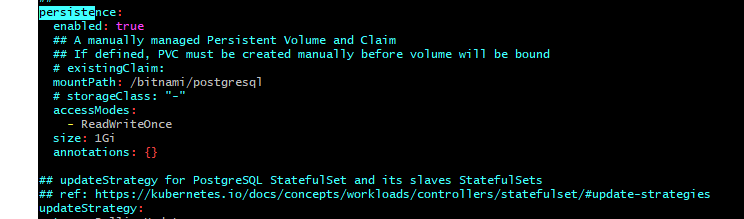

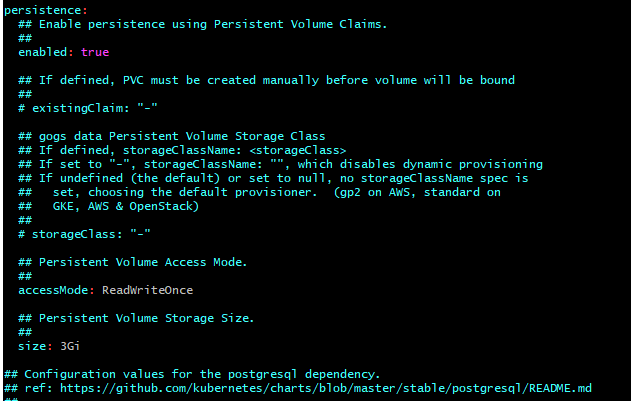

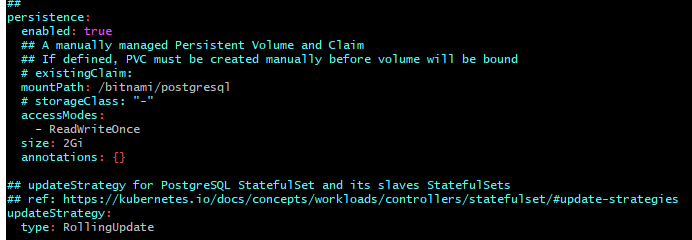

- 33. How to make your data persistent

- 34. Lets summarize on Gogs helm chart deployment

- Section: 4. Exploring Helmfile deployment in Kubernetes

- 35. Materials: How to install HELMFILE binary to your machine

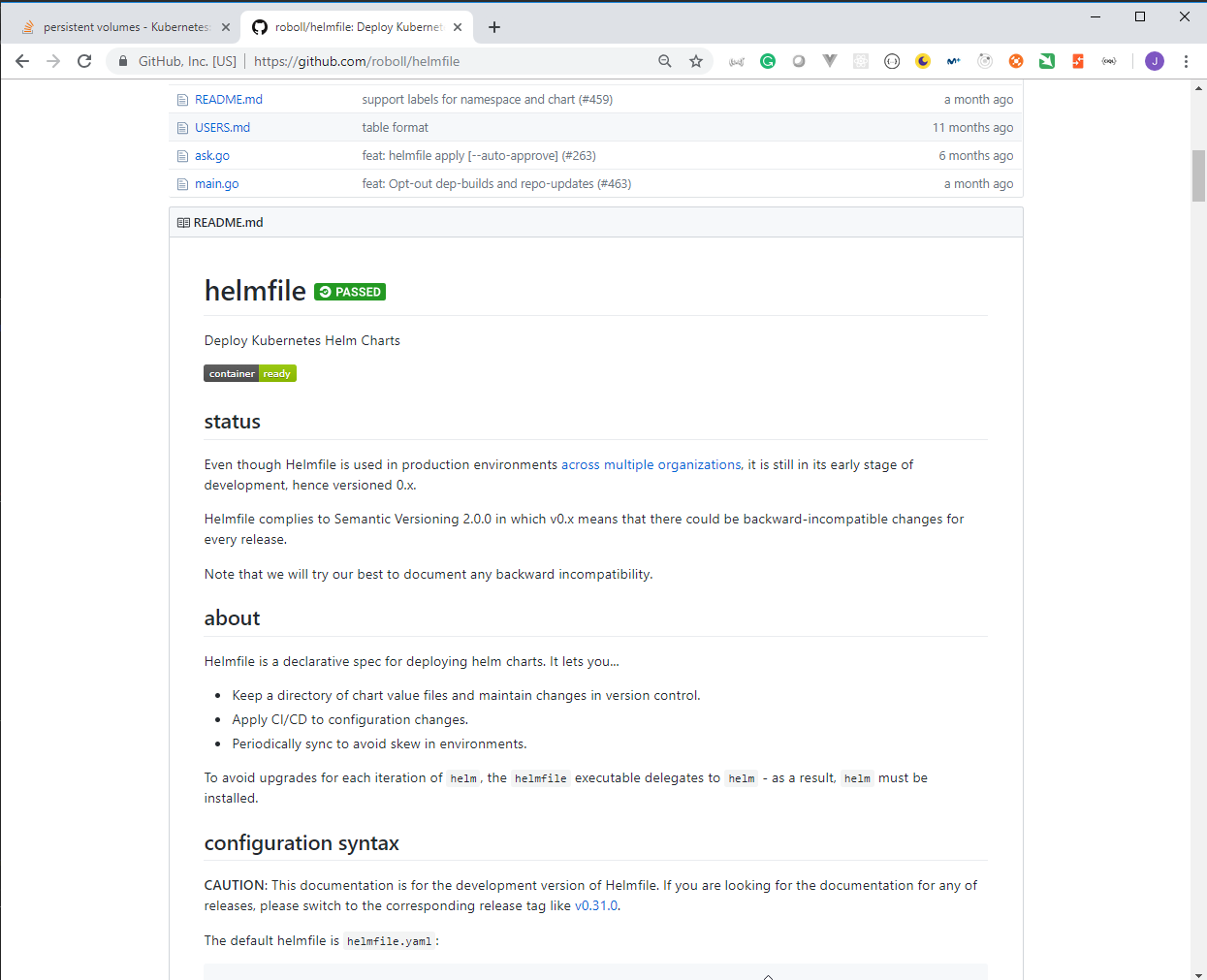

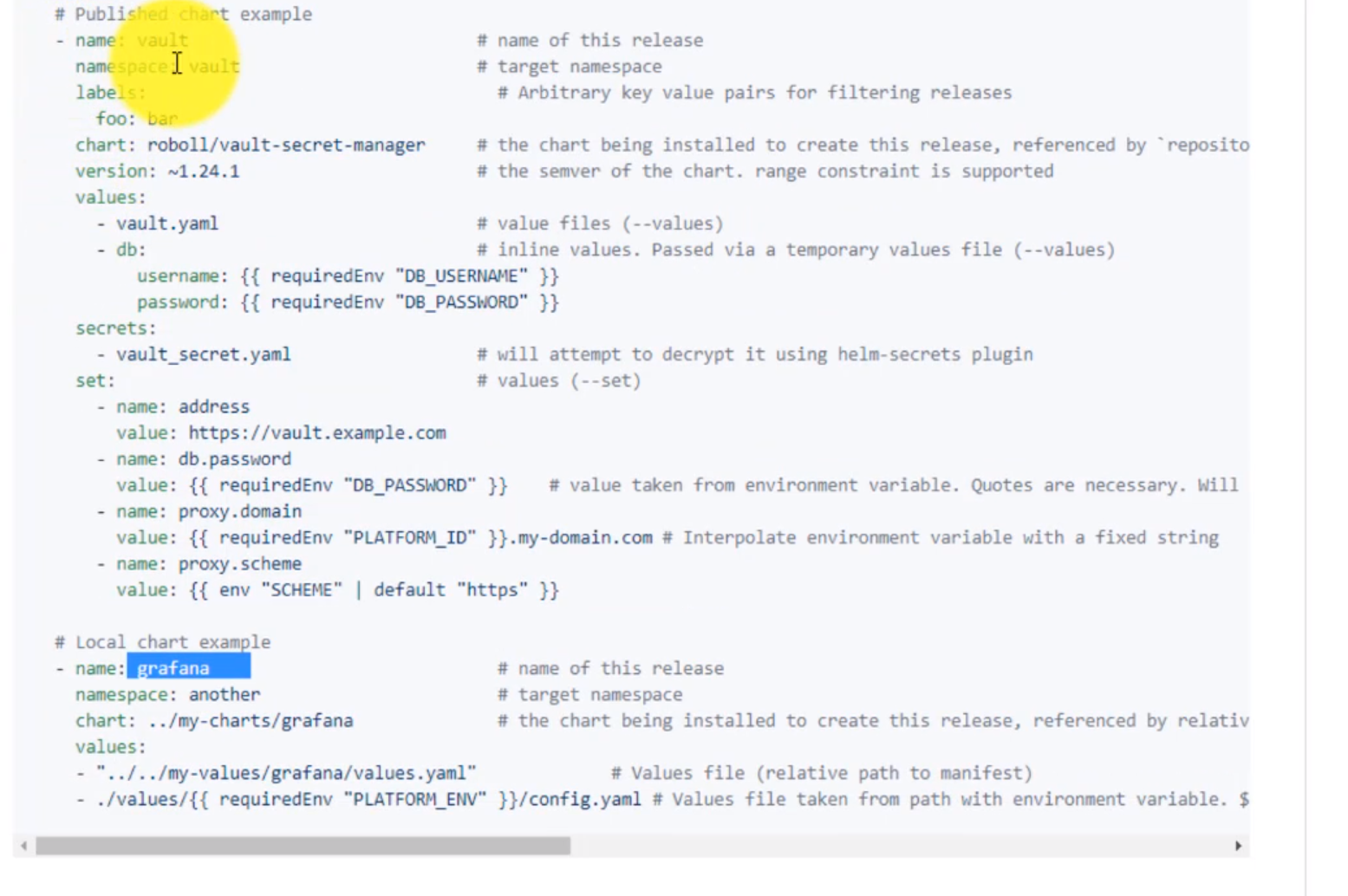

- 36. Introduction to Helmfile

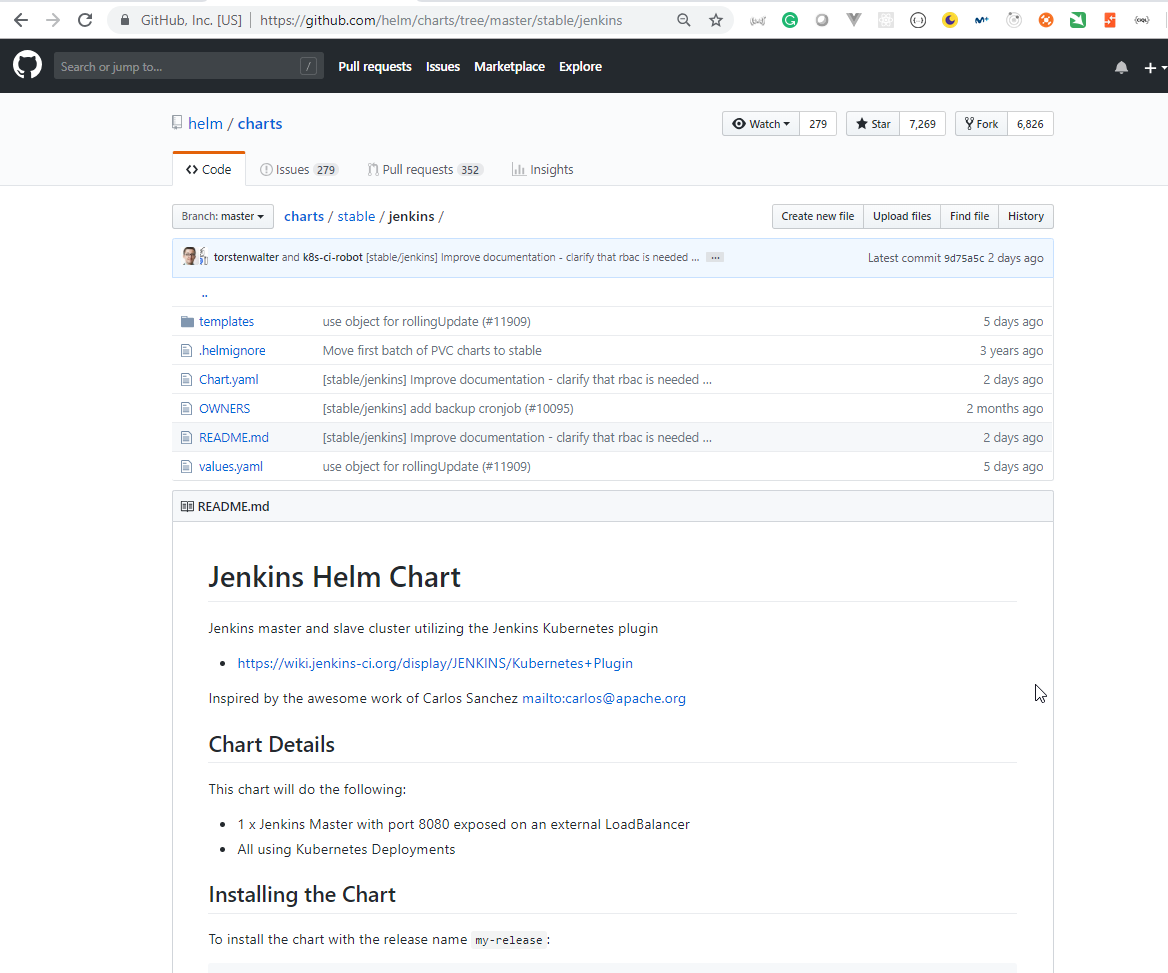

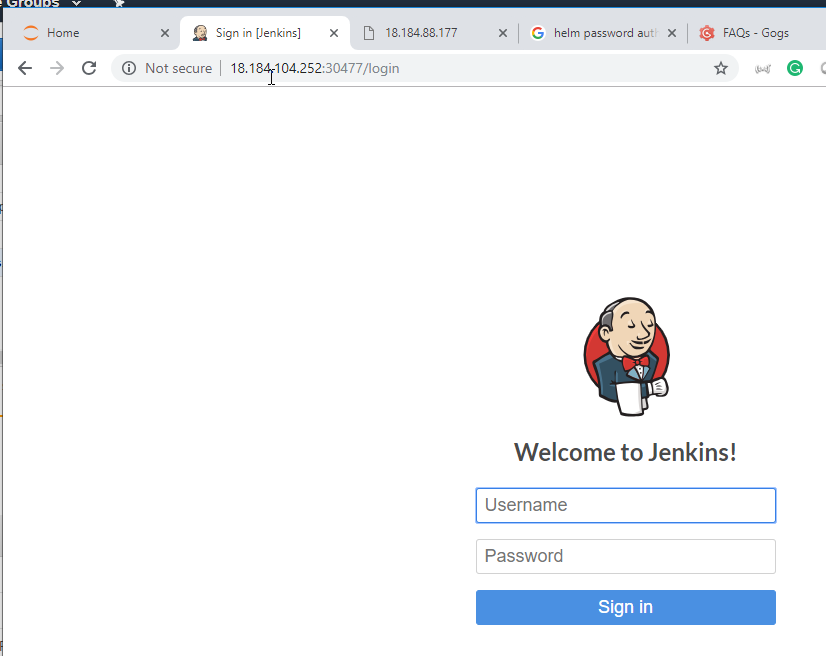

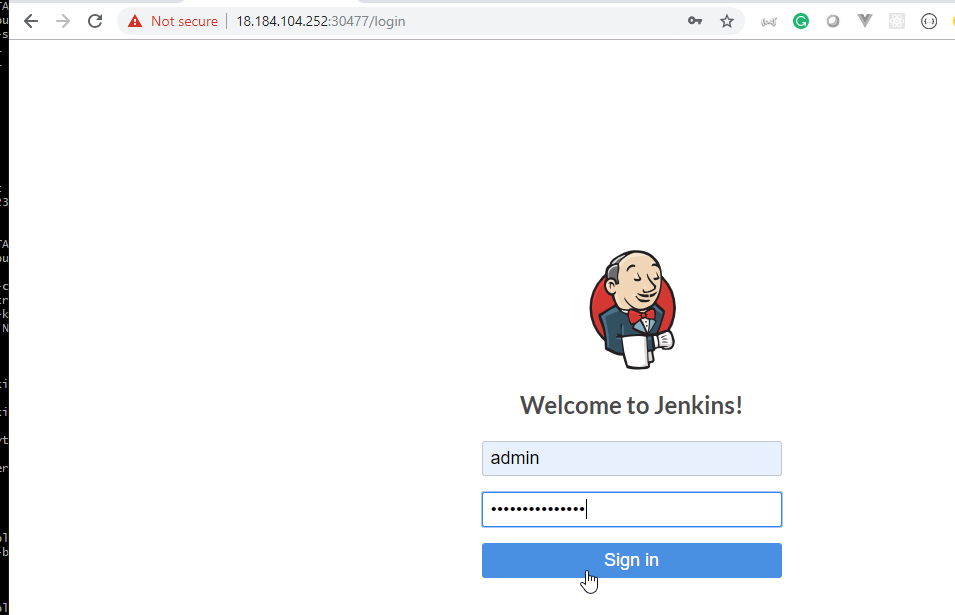

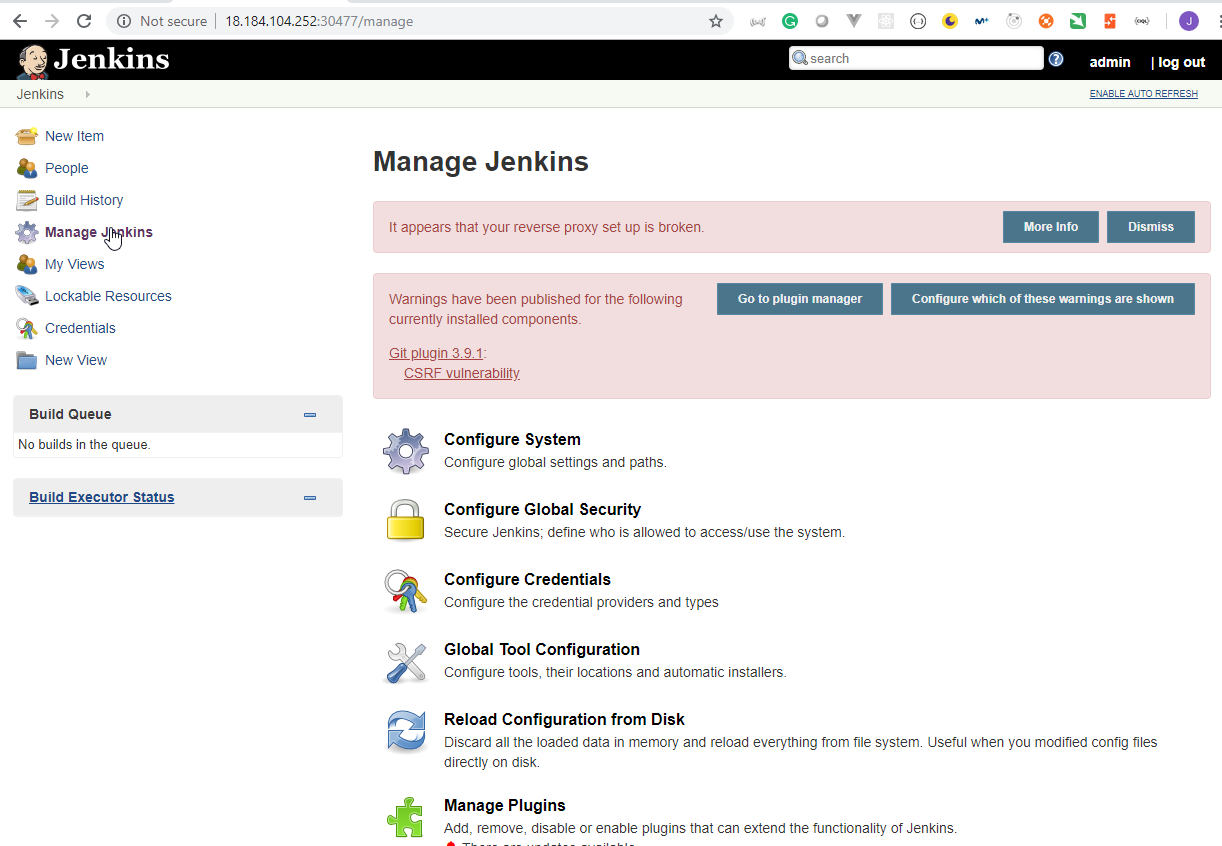

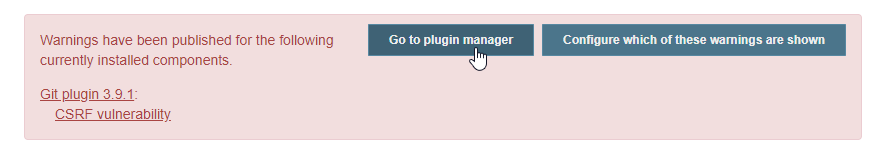

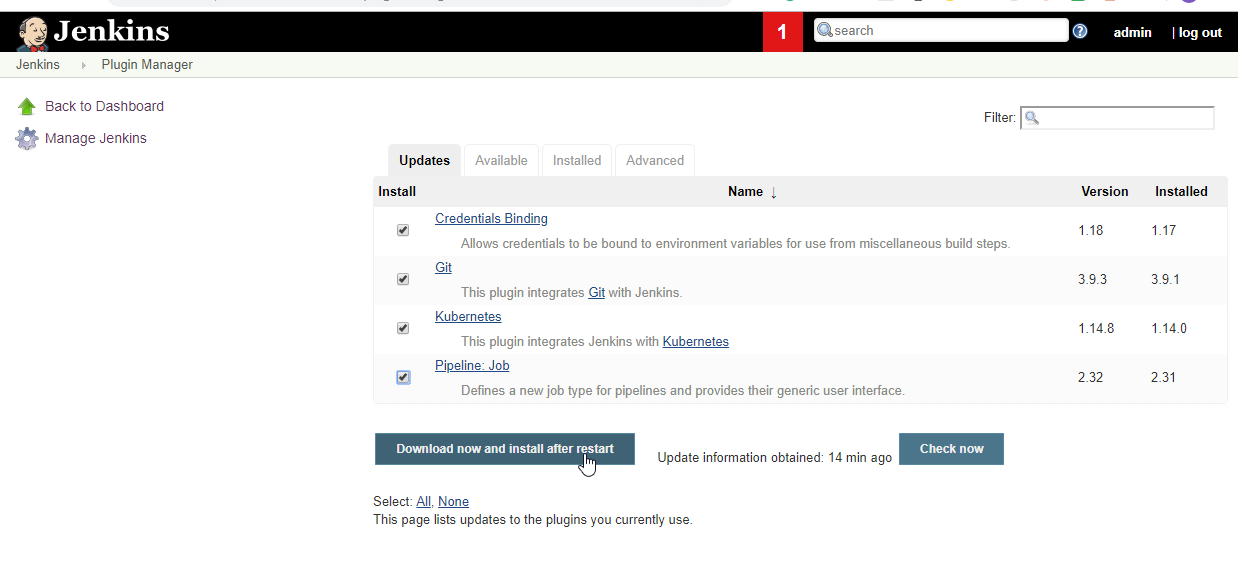

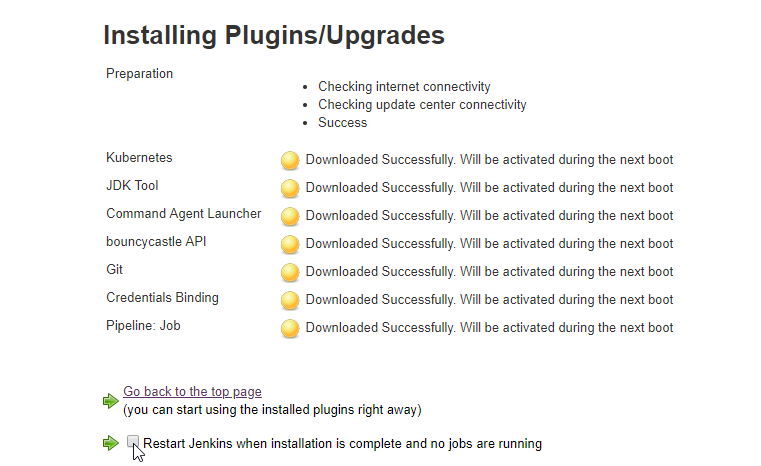

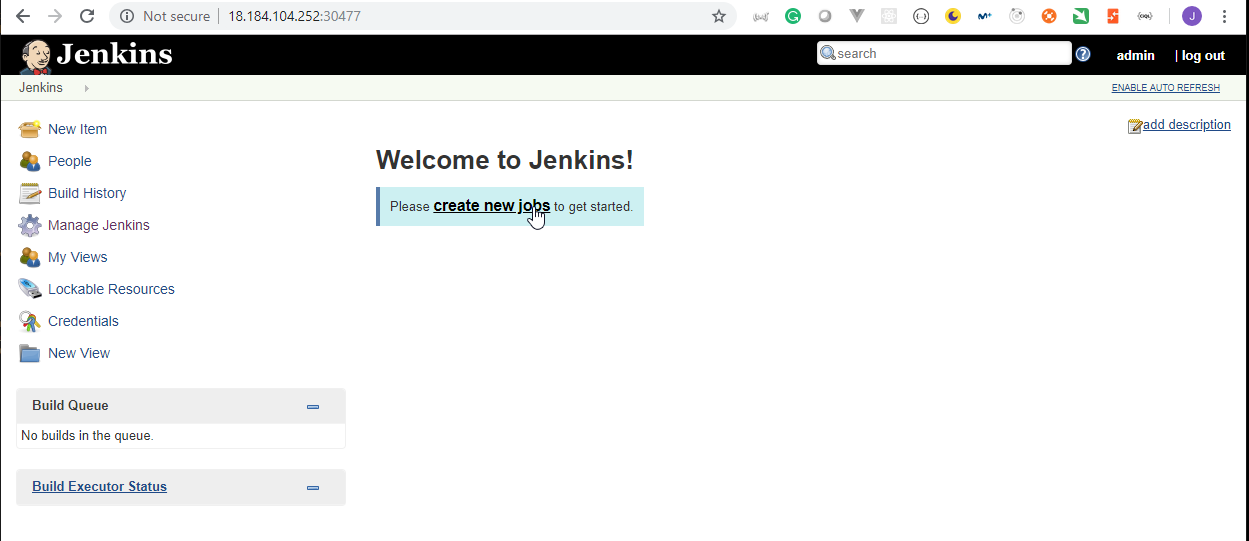

- 37. How to deploy Jenkins by using Helmfile (Part 1)

- 38. How to deploy Jenkins by using Helmfile (Part 2)

- 39. Materials: Create HELMFILE specification for Jenkins deployment

- 40. How to use helmfile to deploy Jenkins helm chart for the first time (Part 1)

- 41. Materials: Useful commands Jenkins deployment

- 42. How to use helmfile to deploy Jenkins helm chart for the first time (Part 2)

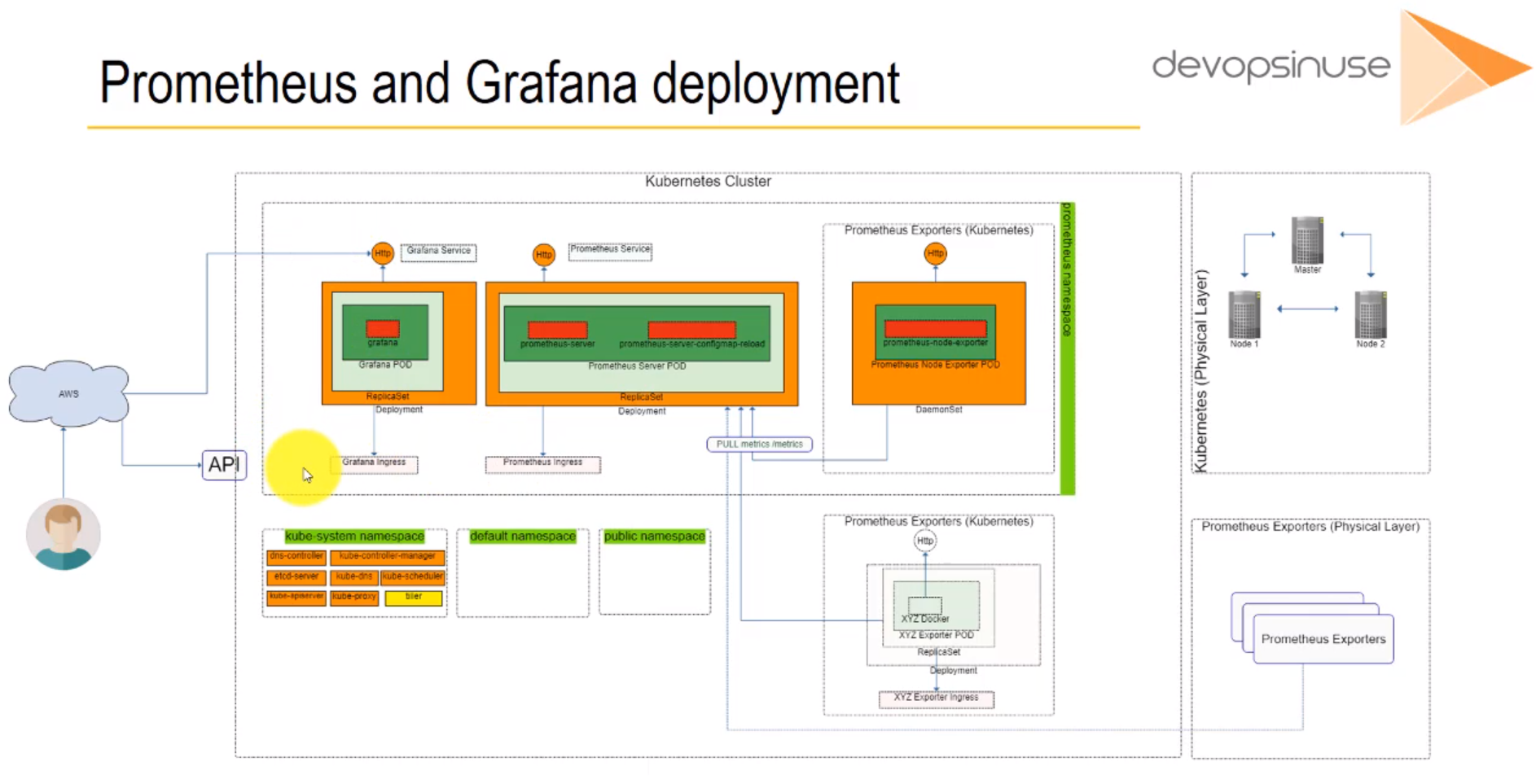

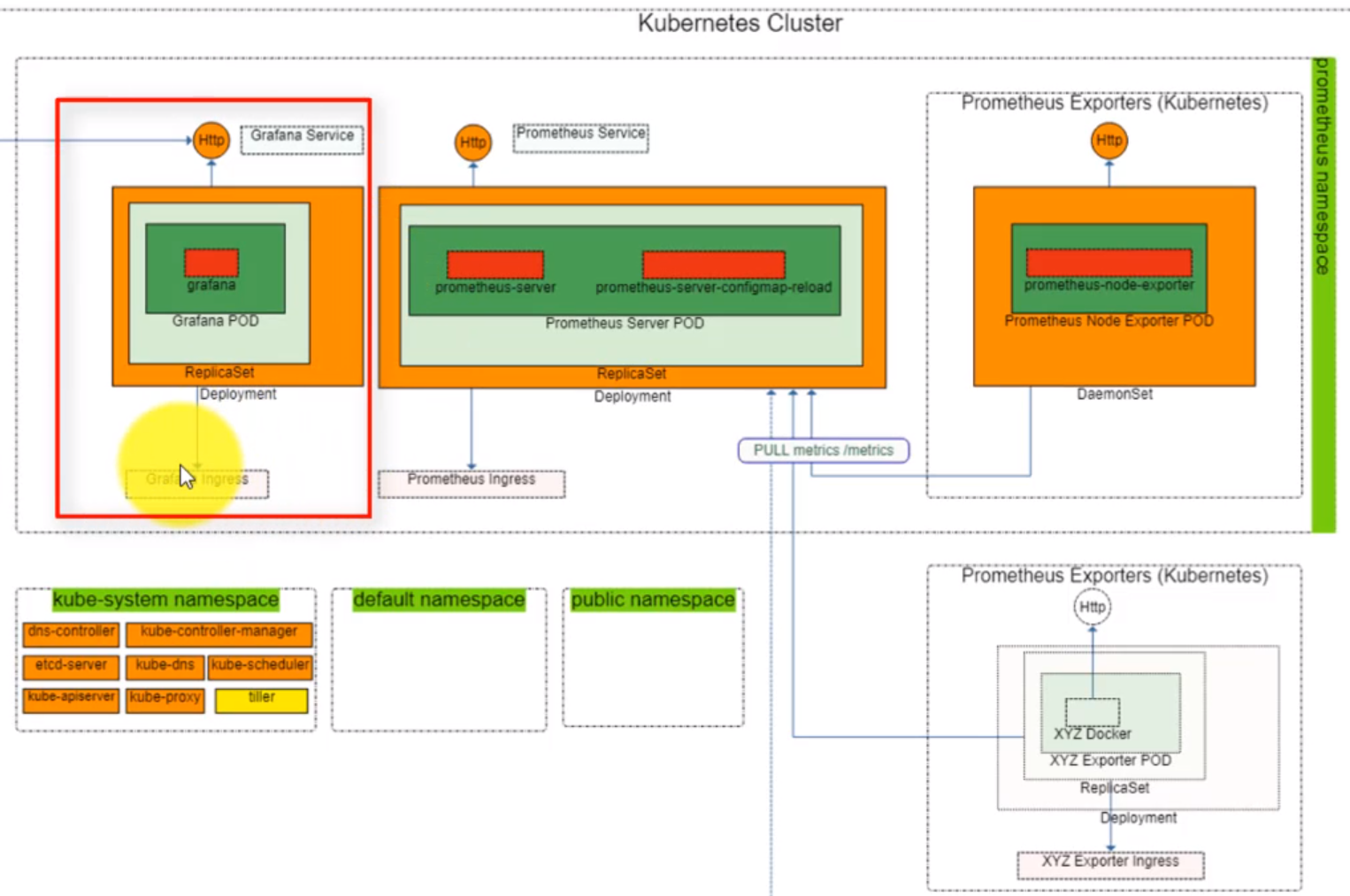

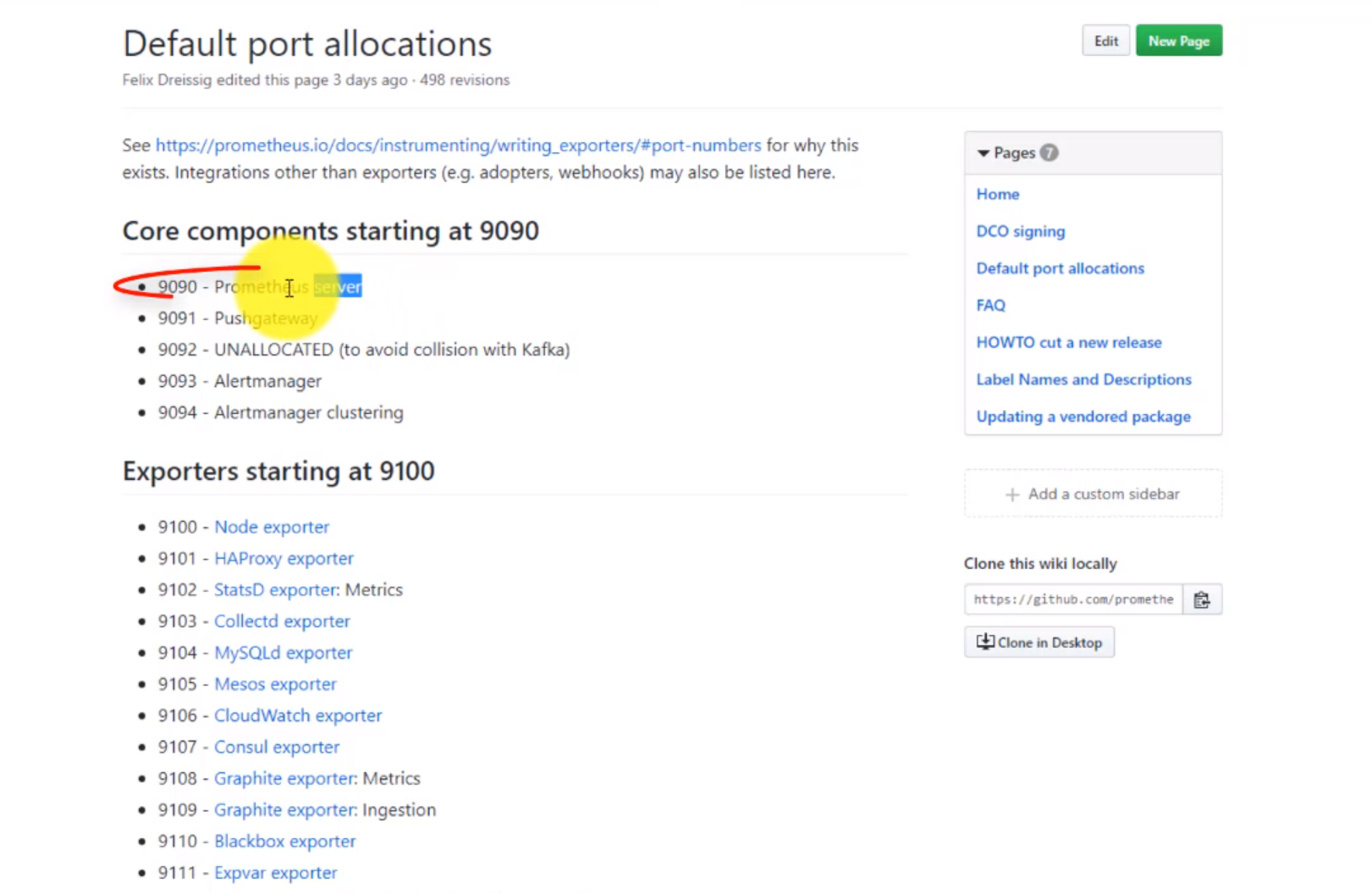

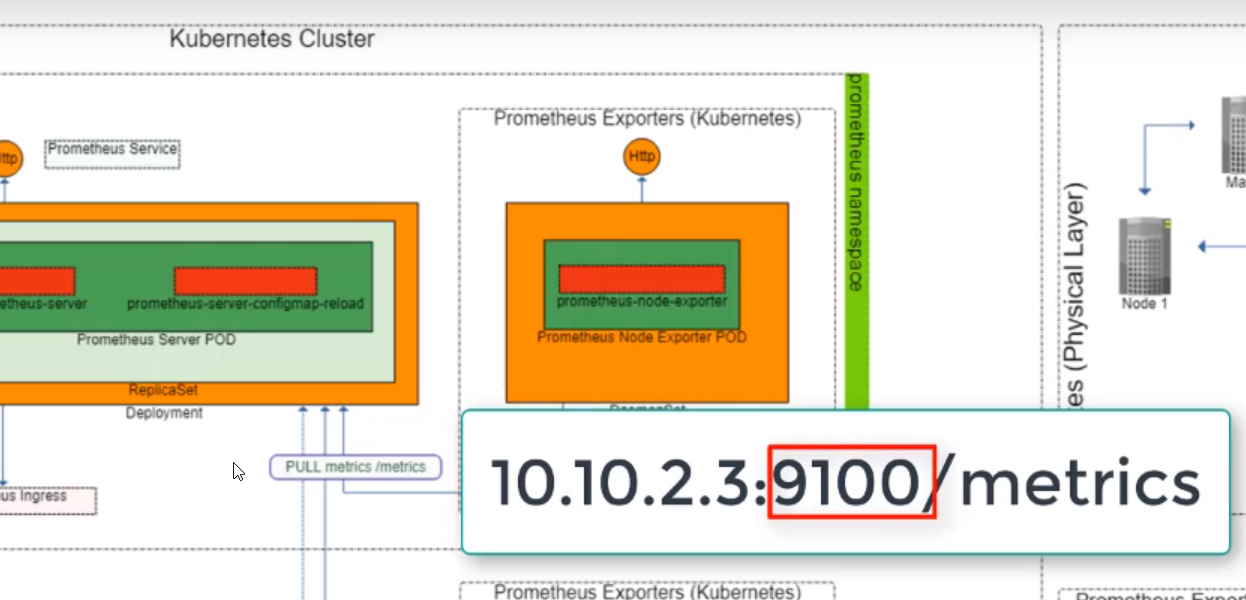

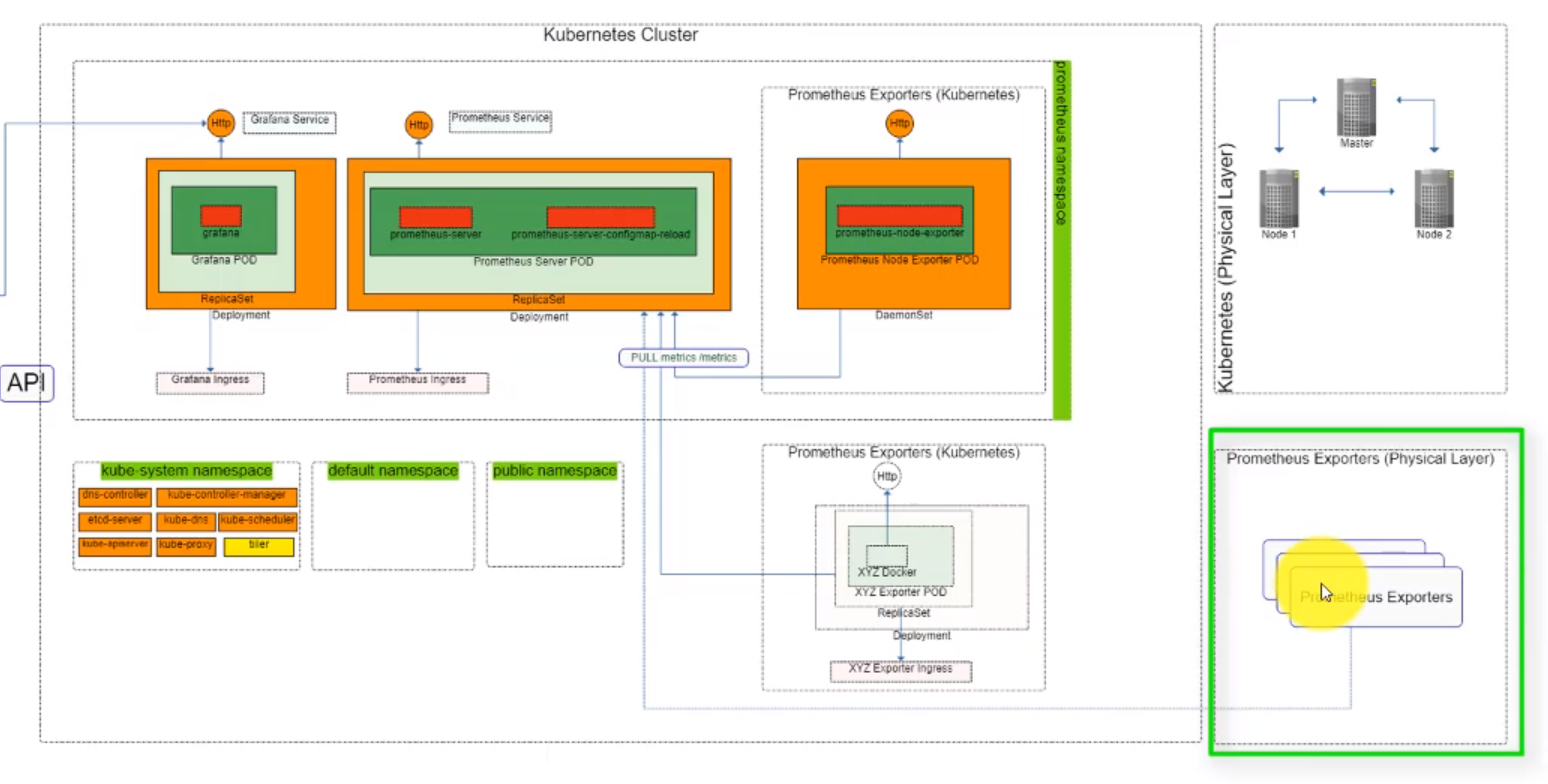

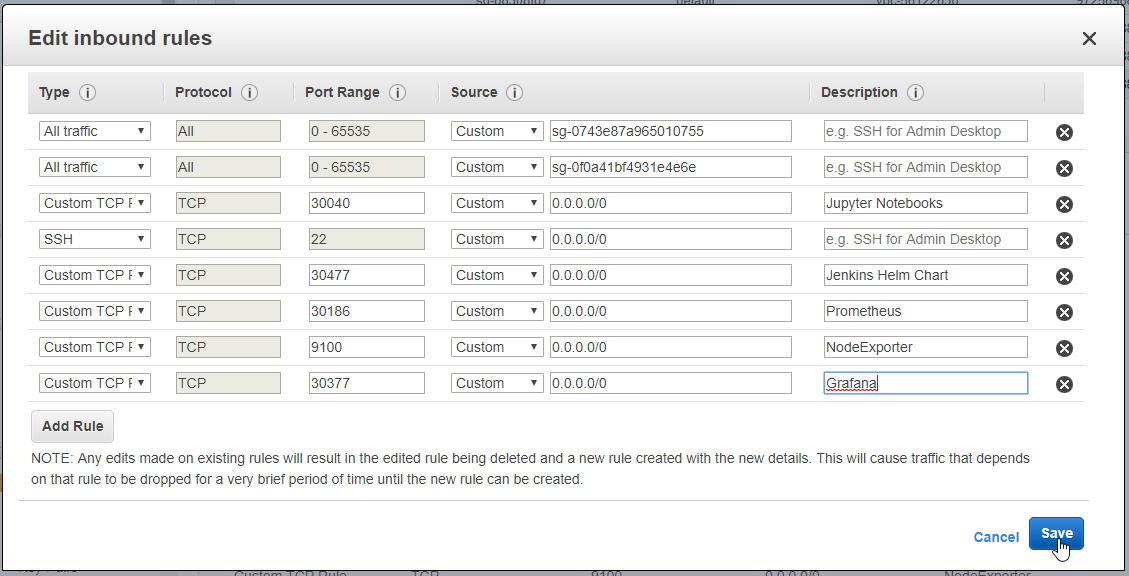

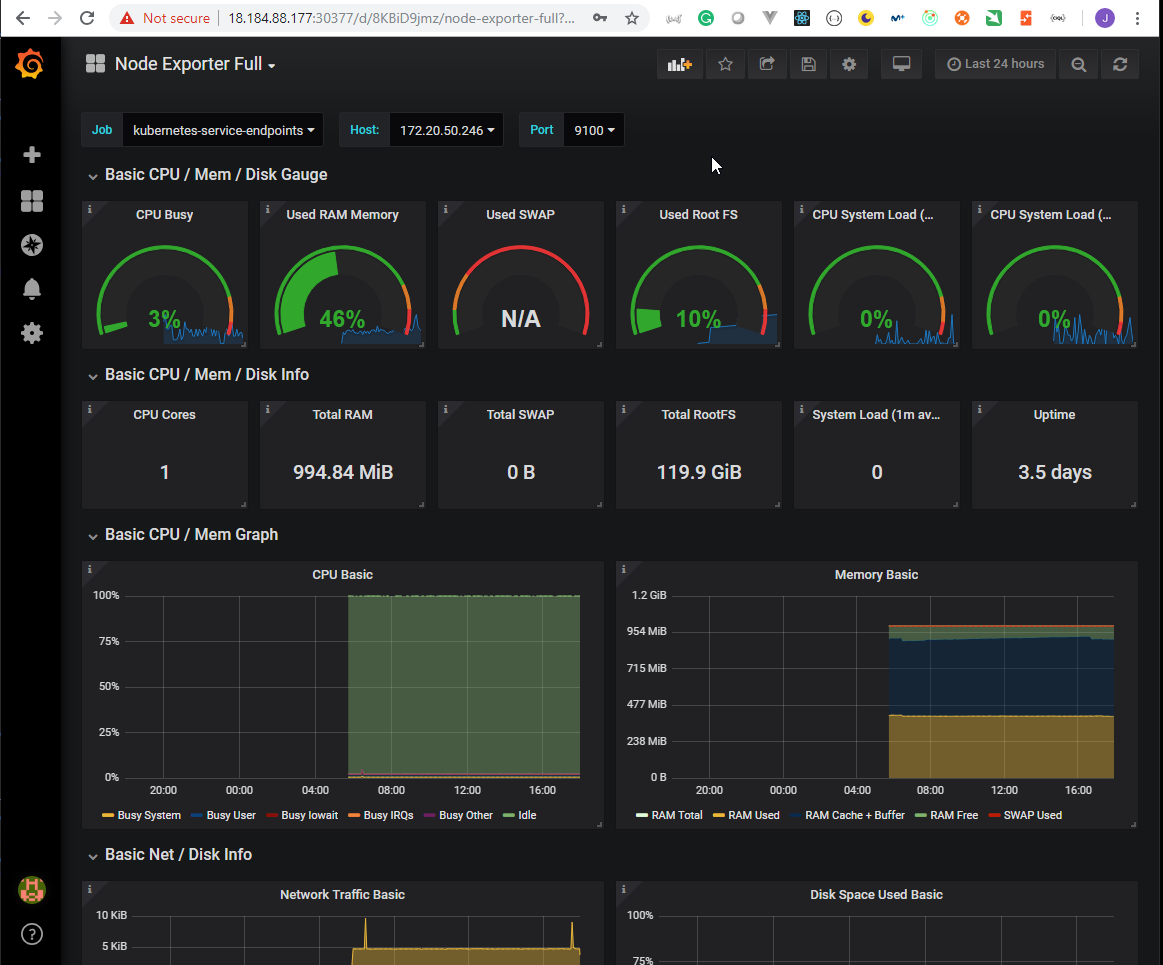

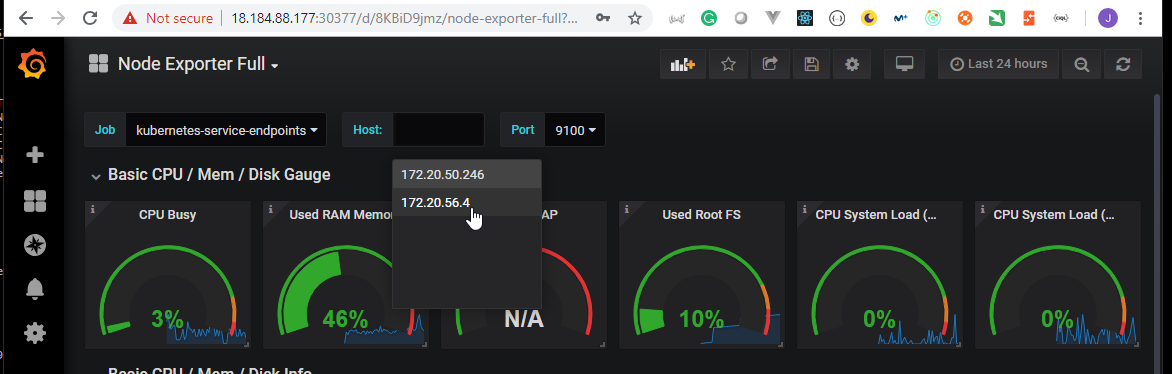

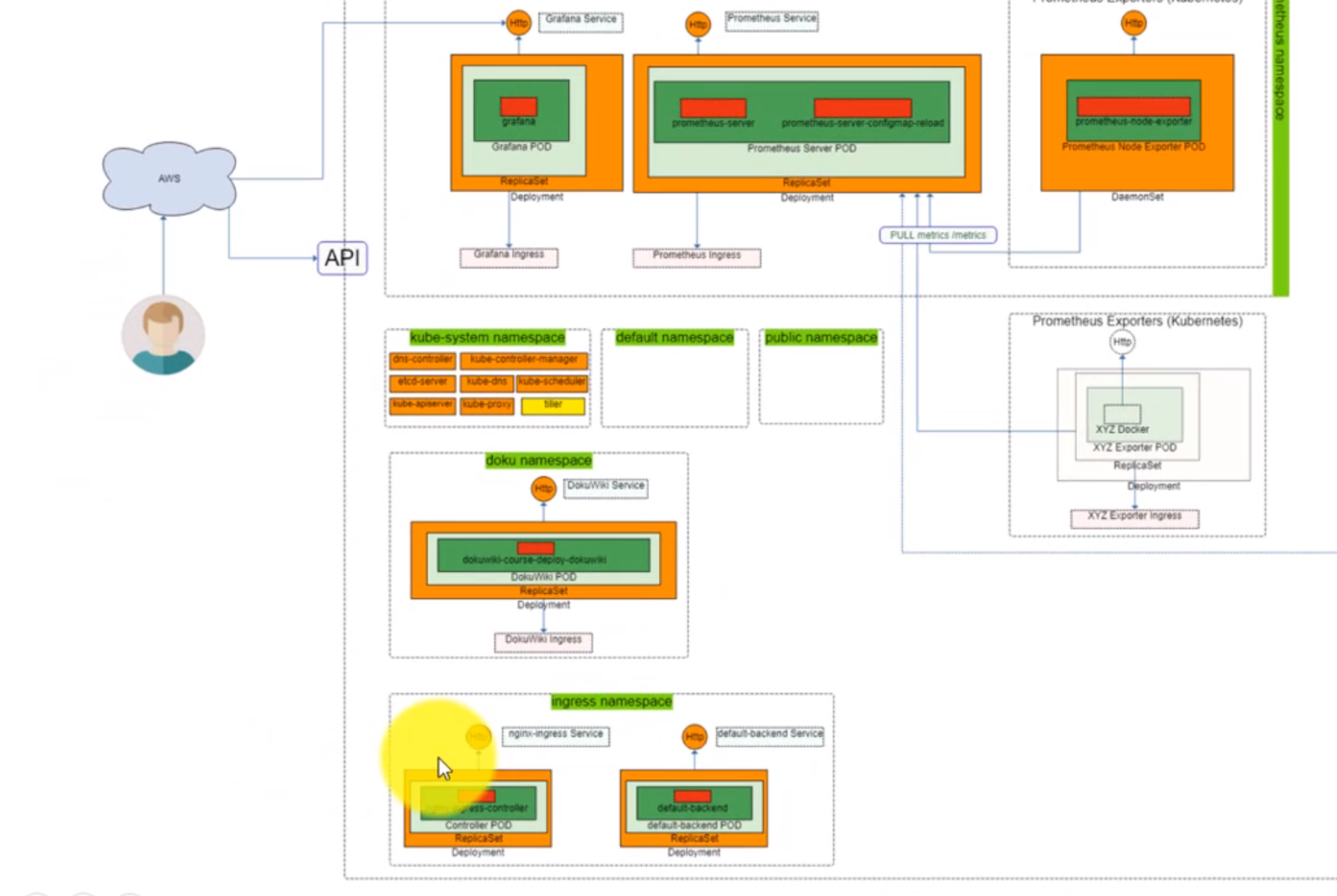

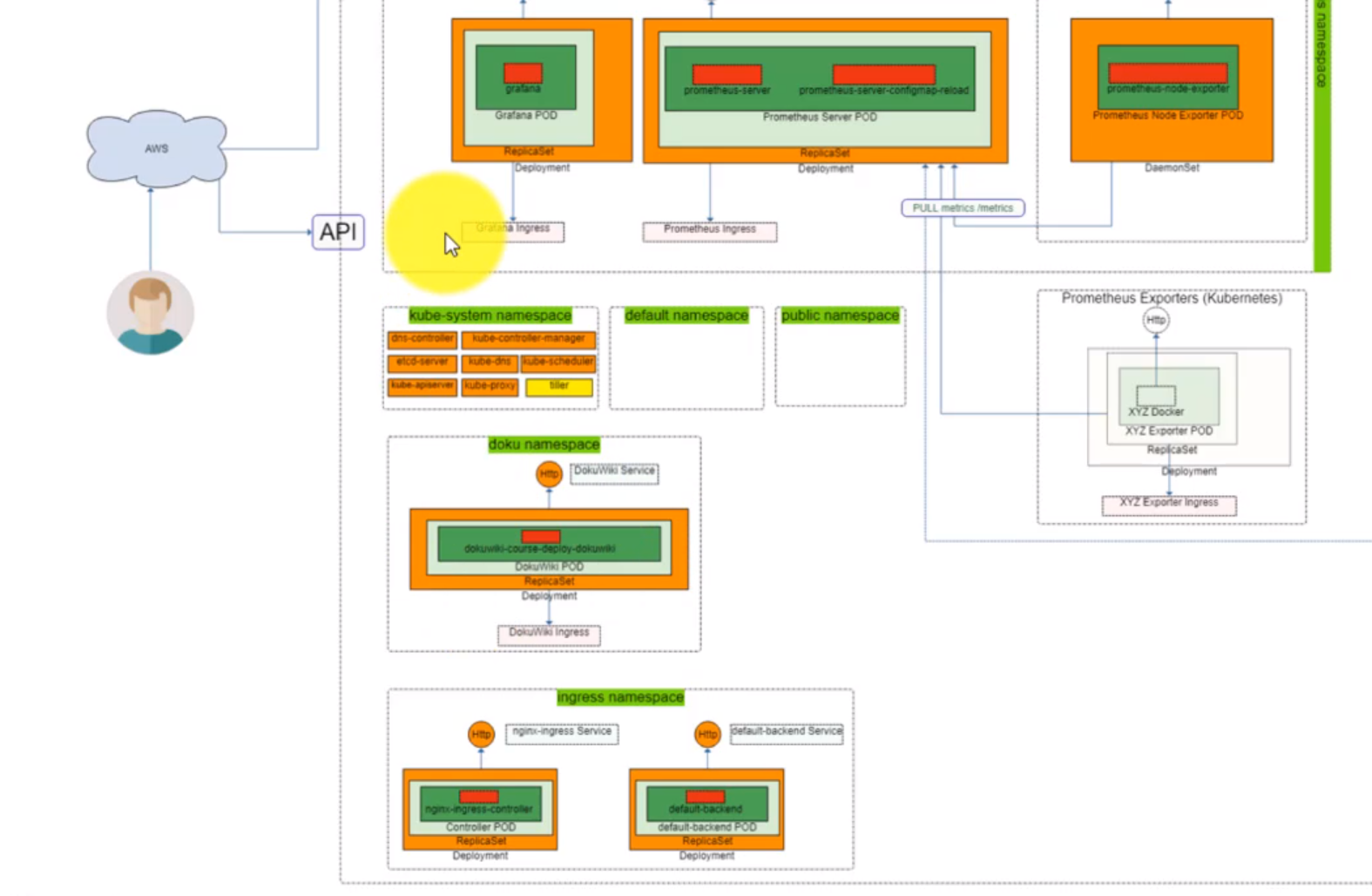

- Section: 5. Grafana and Prometheus HELMFILE deployment

- 43. Introduction to Prometheus and Grafana deployment by using helmfile (Grafana)

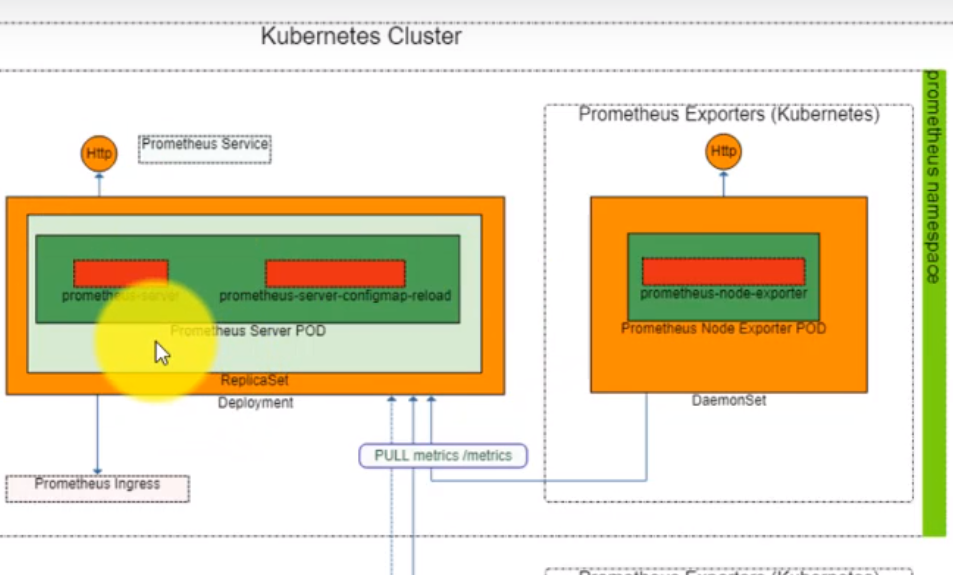

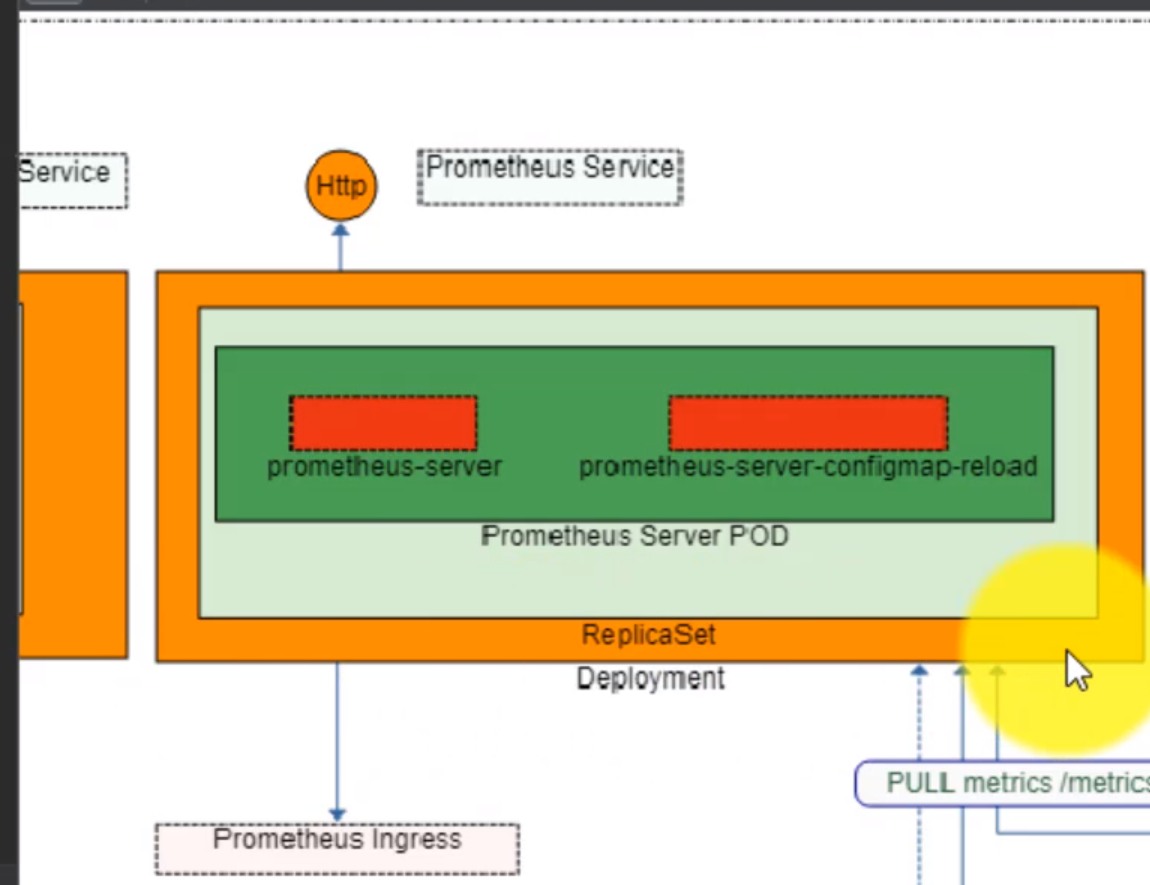

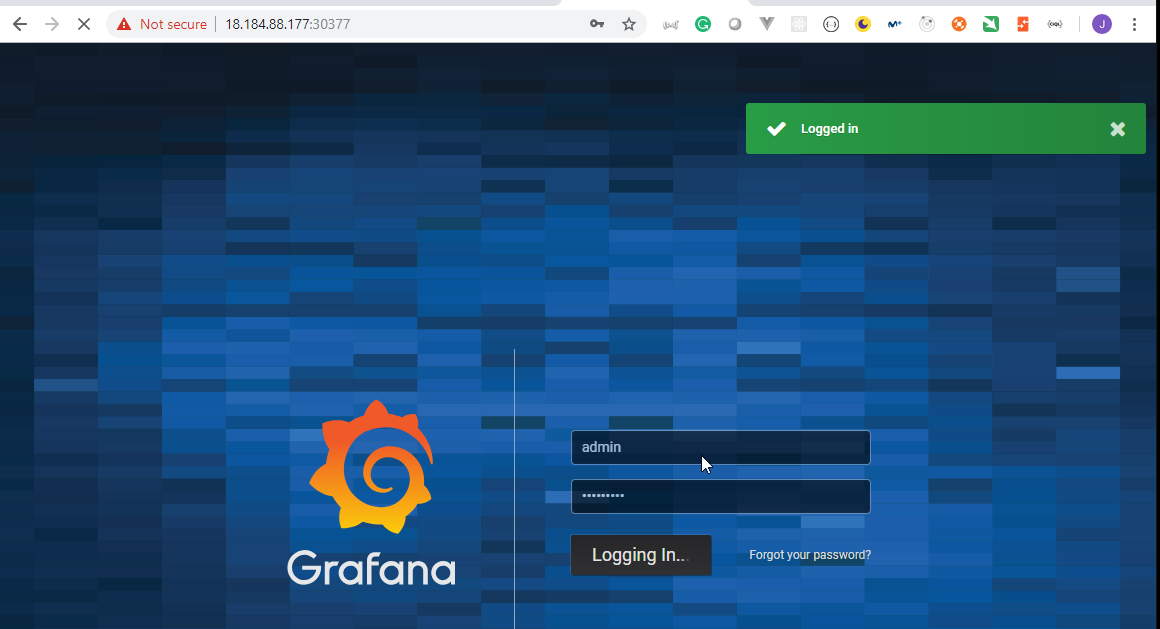

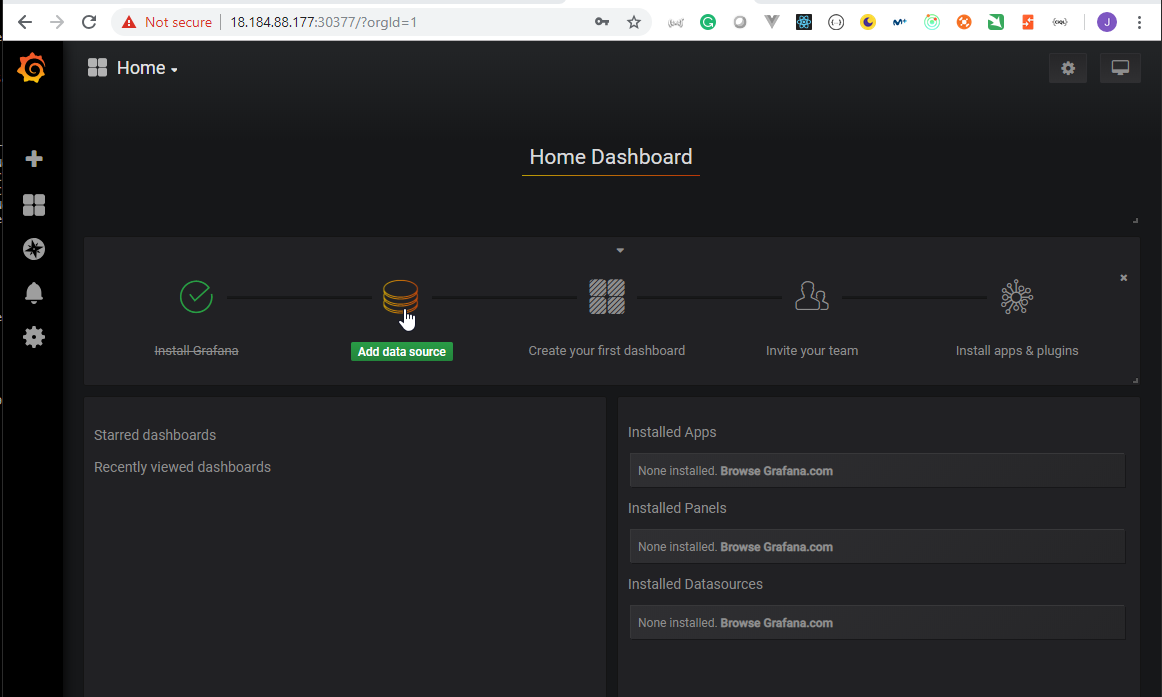

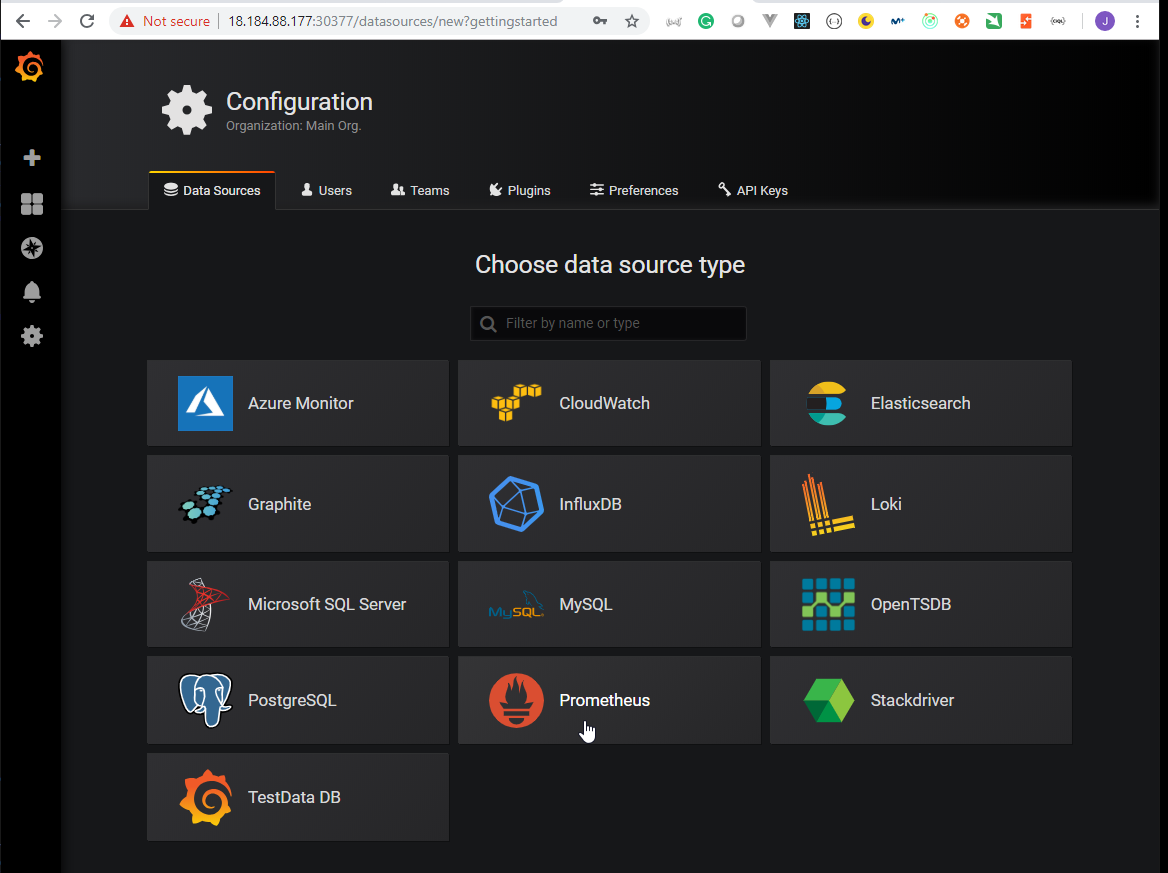

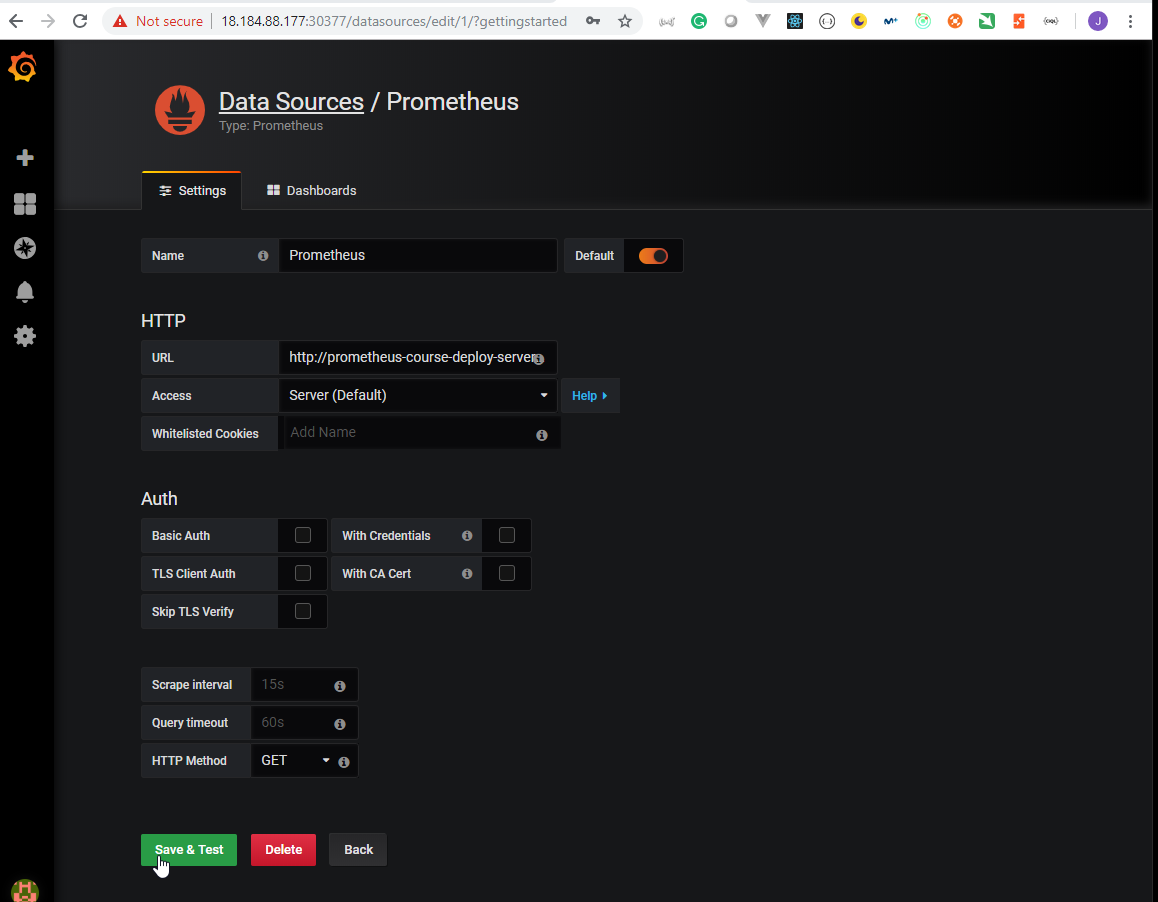

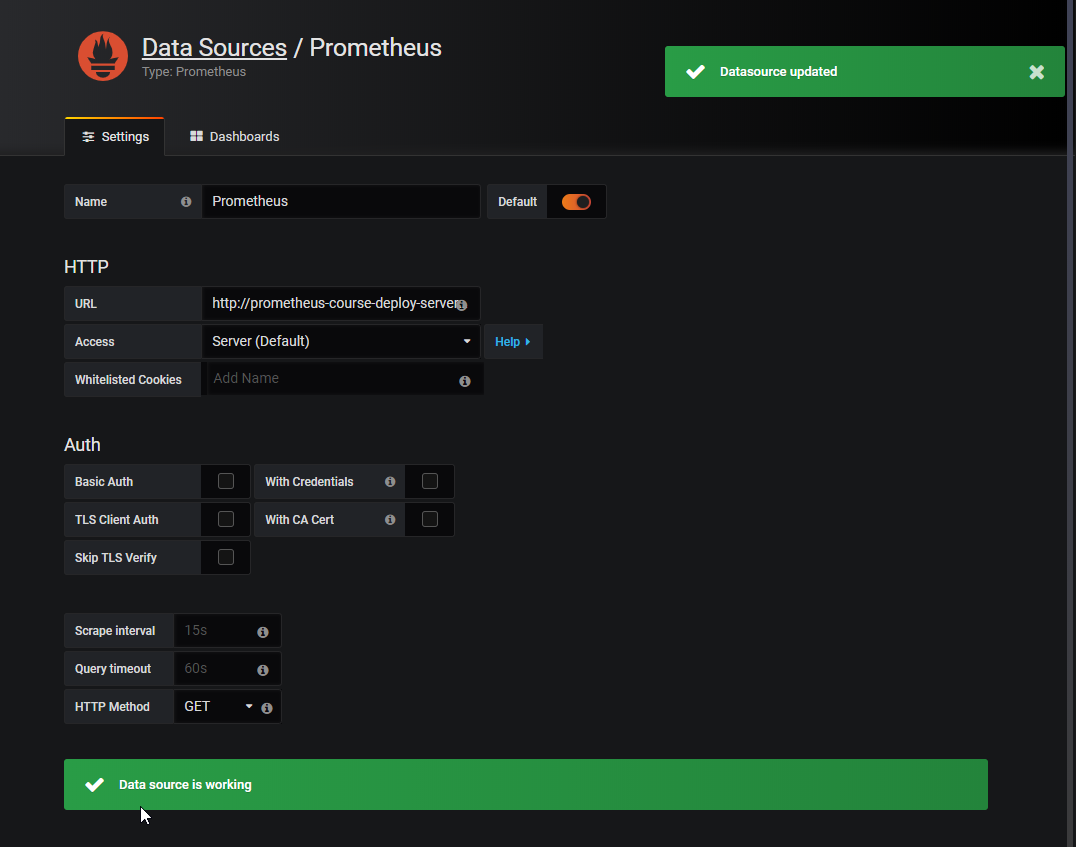

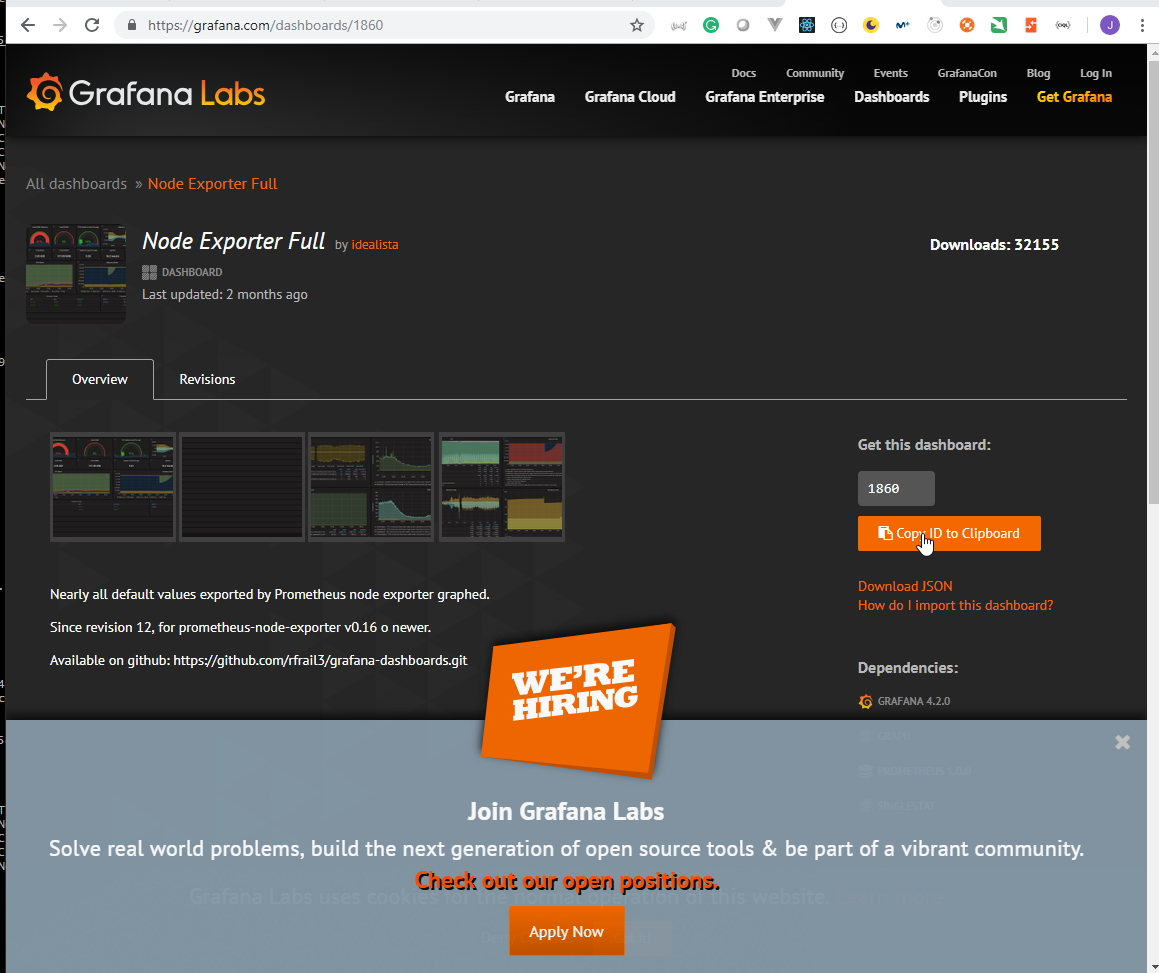

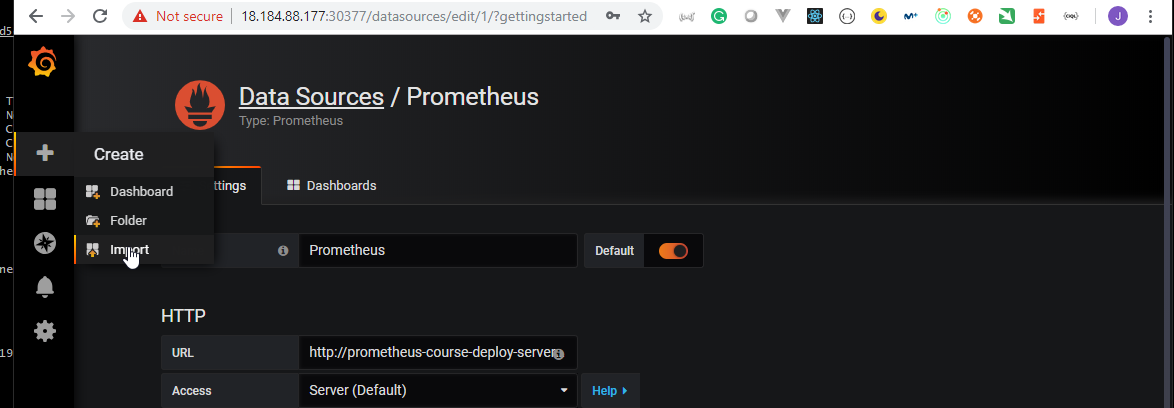

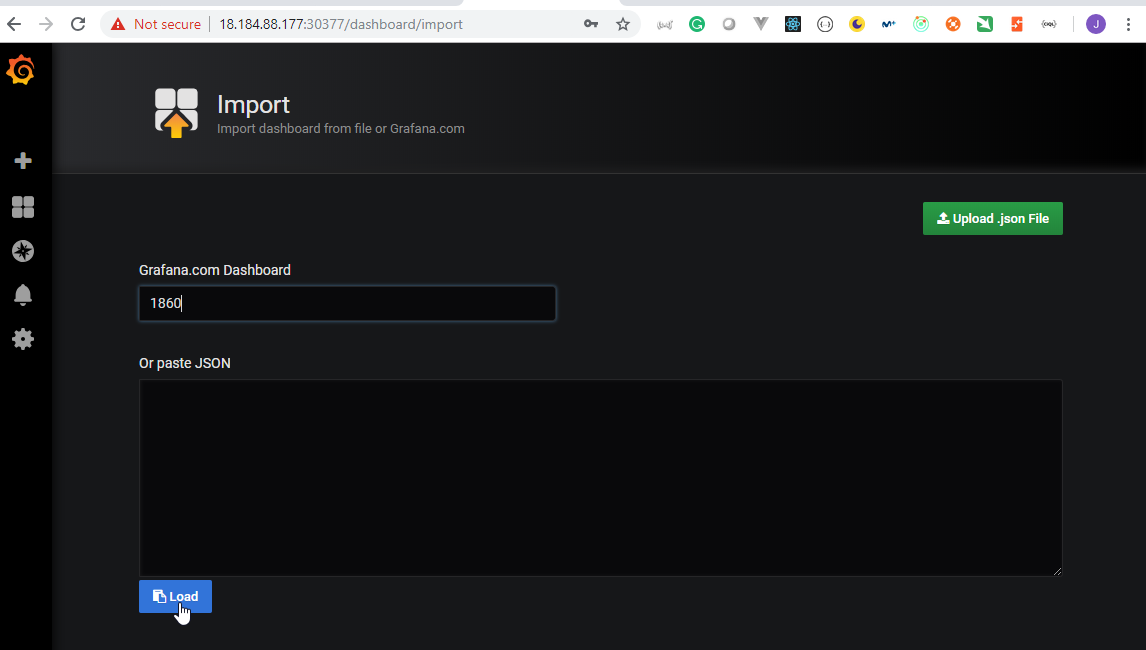

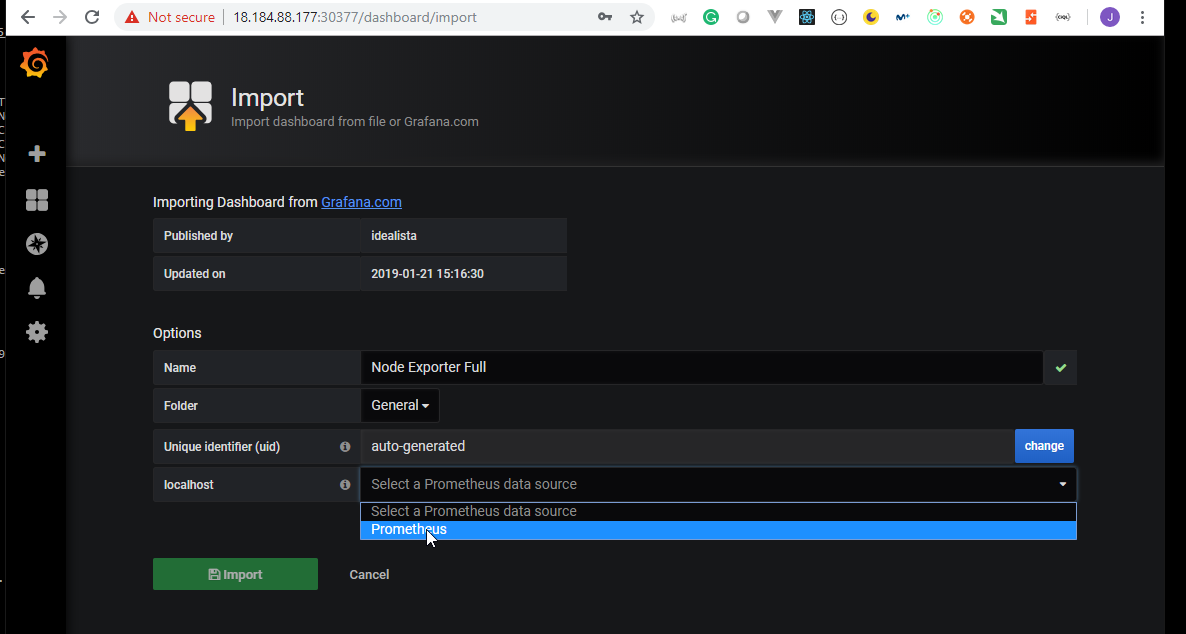

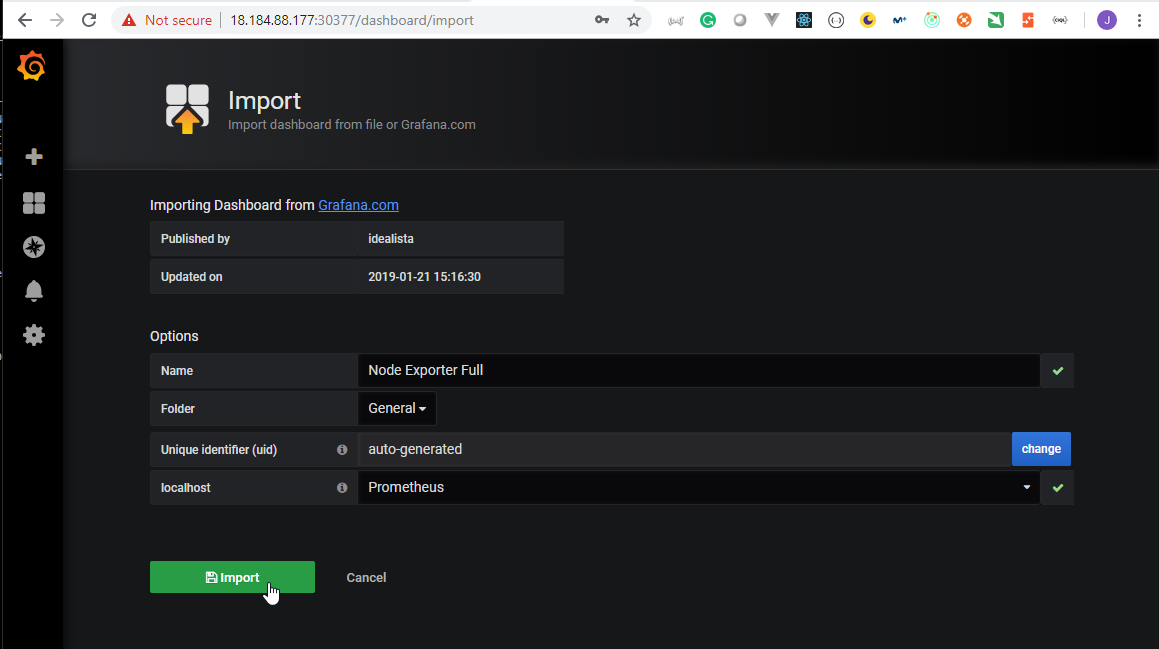

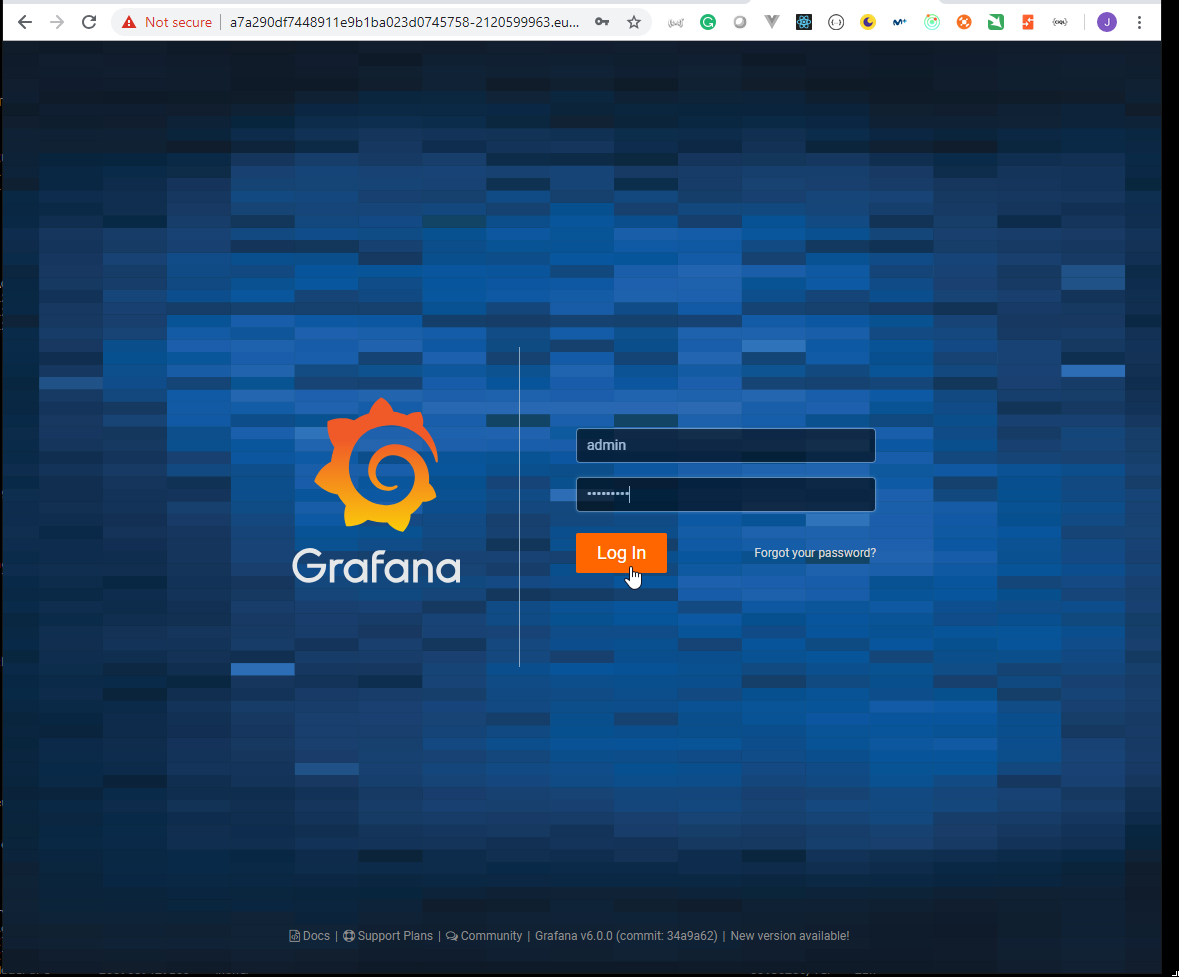

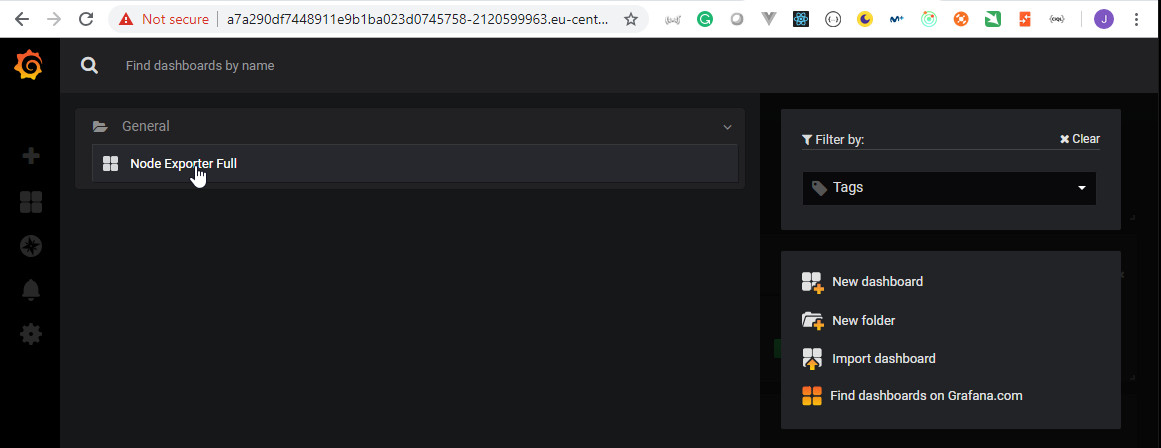

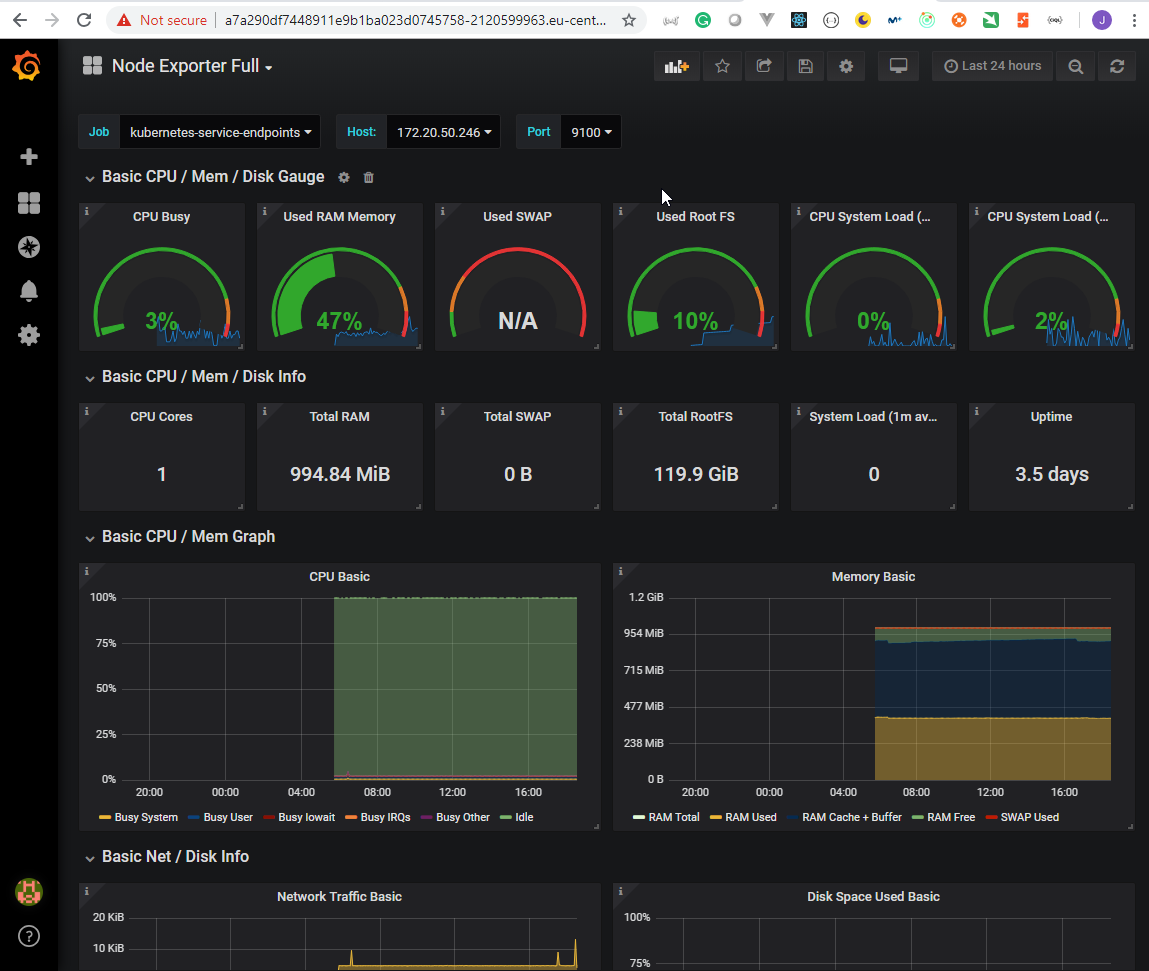

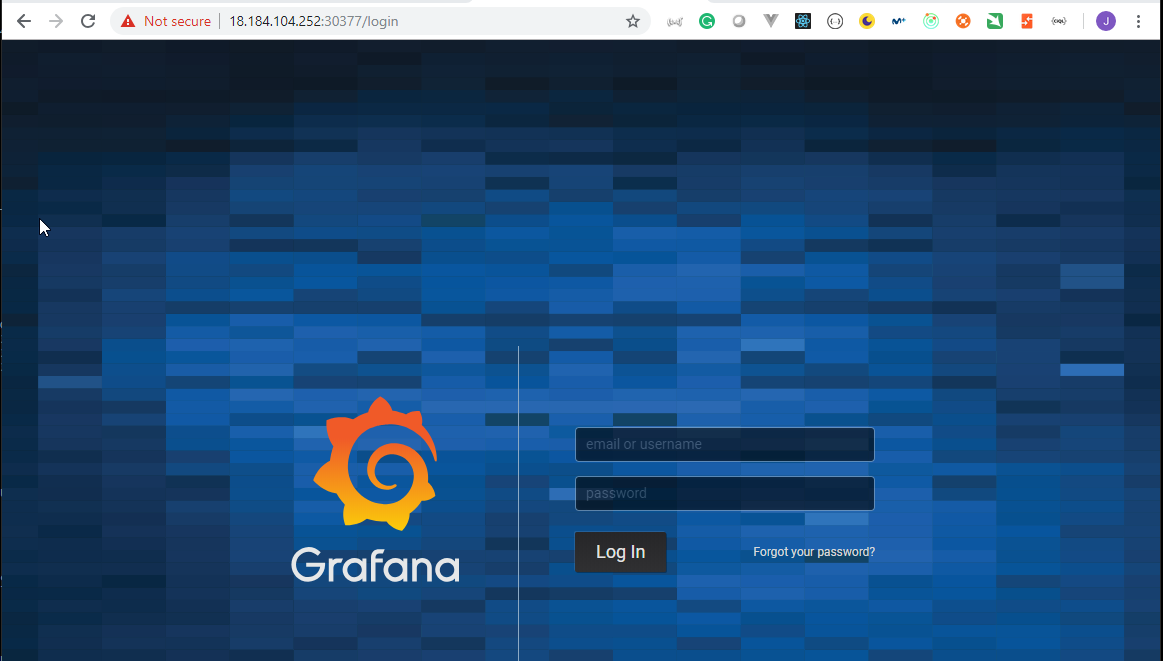

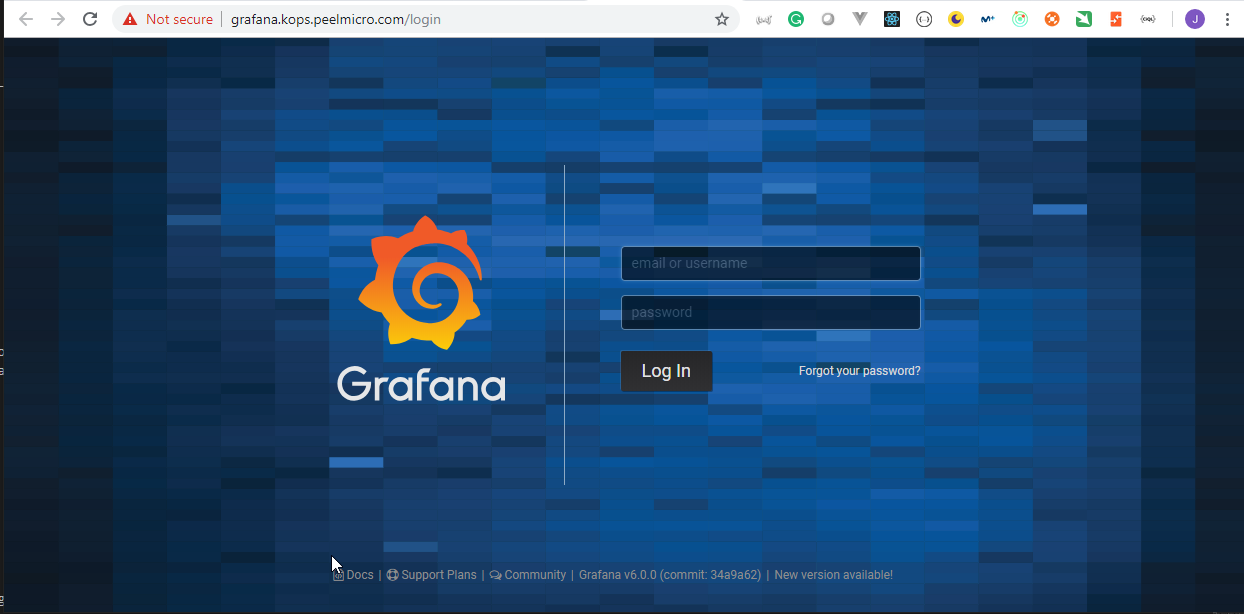

- 44. Prometheus and Grafana deployment by using helmfile (Prometheus part)

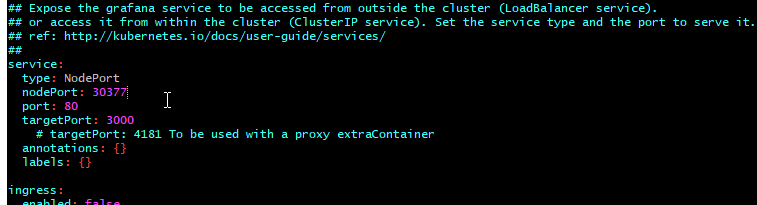

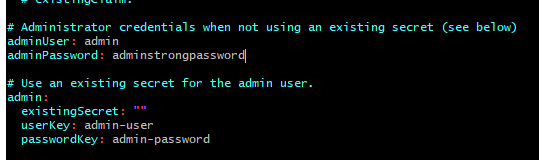

- 45. Prepare Helm charts for Grafana deployment by using helmfile

- 46. Prepare Helm charts for Prometheus deployment by using helmfile

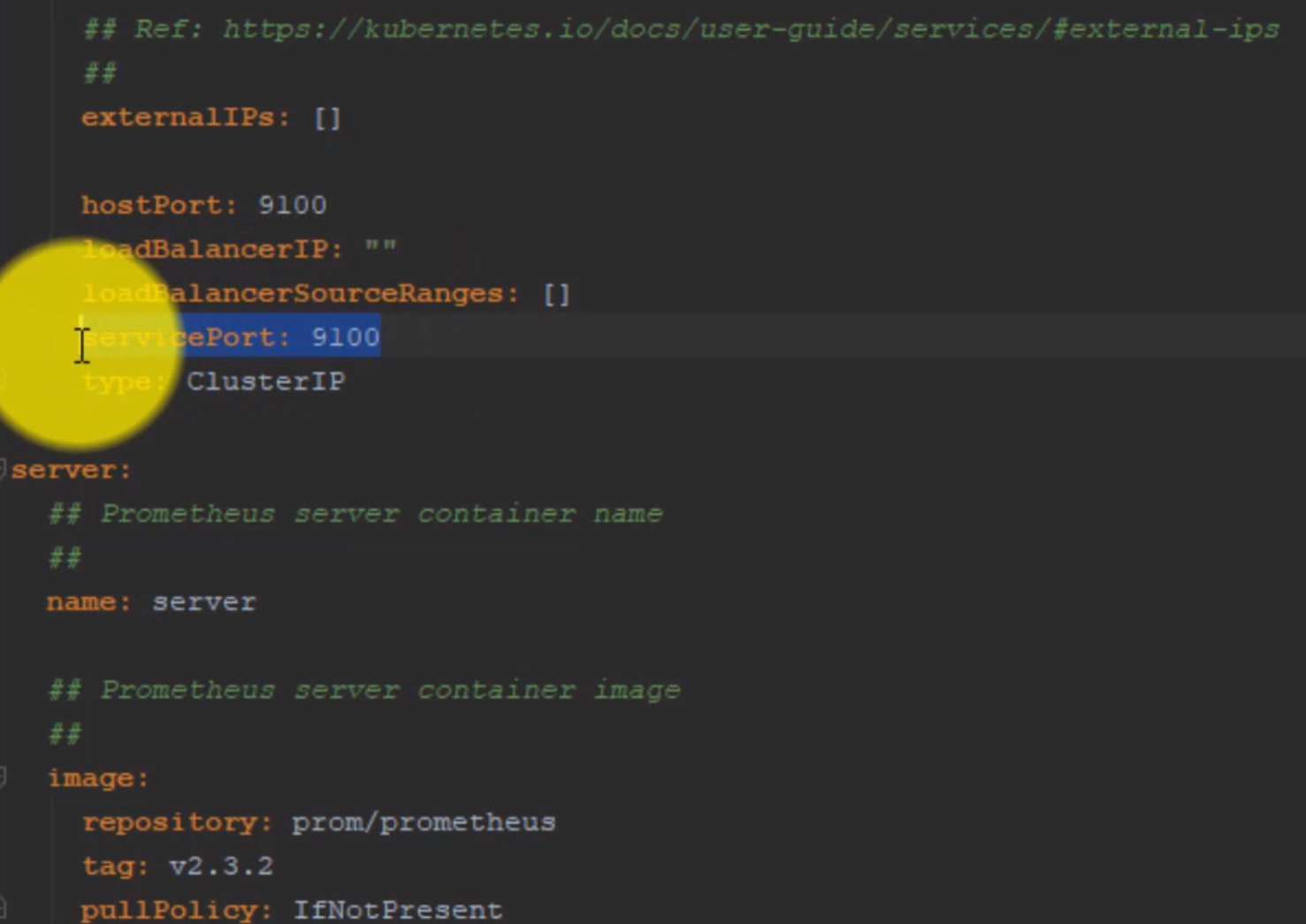

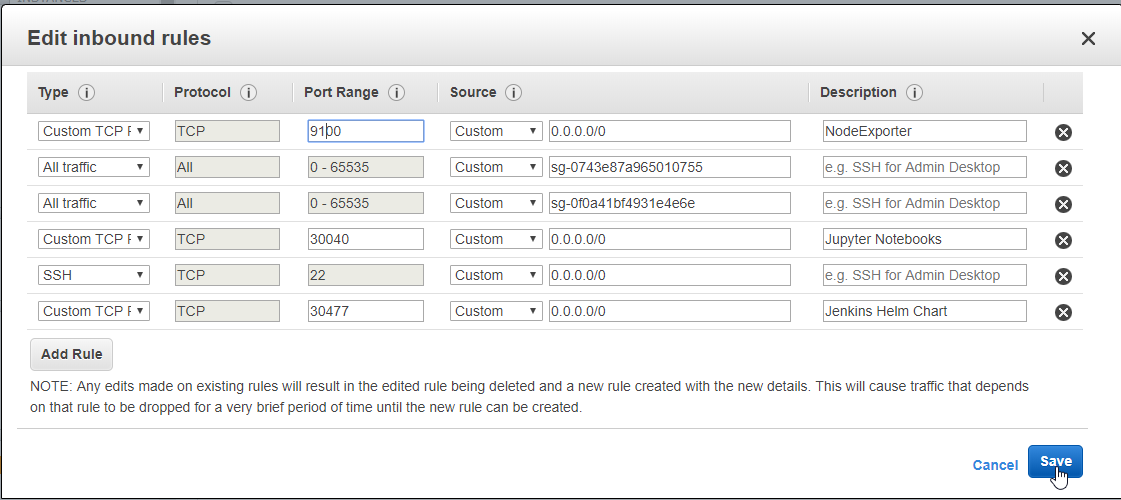

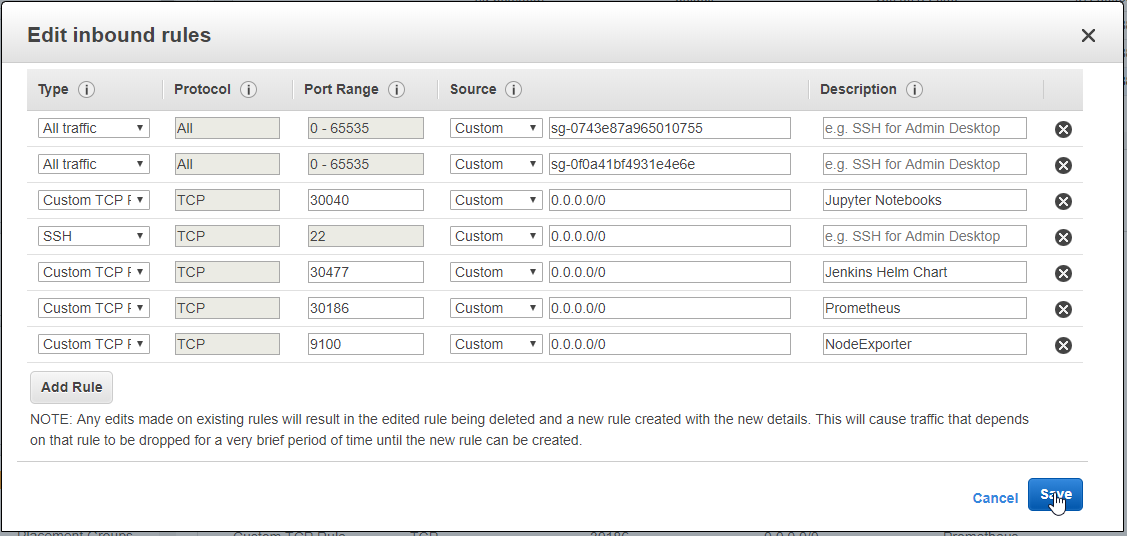

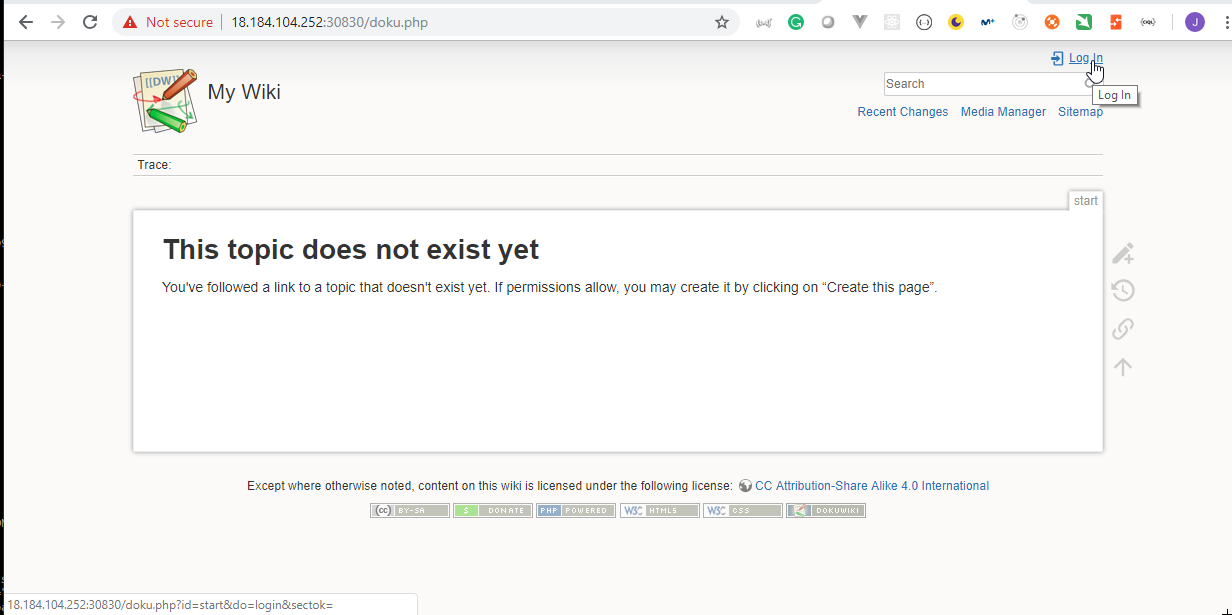

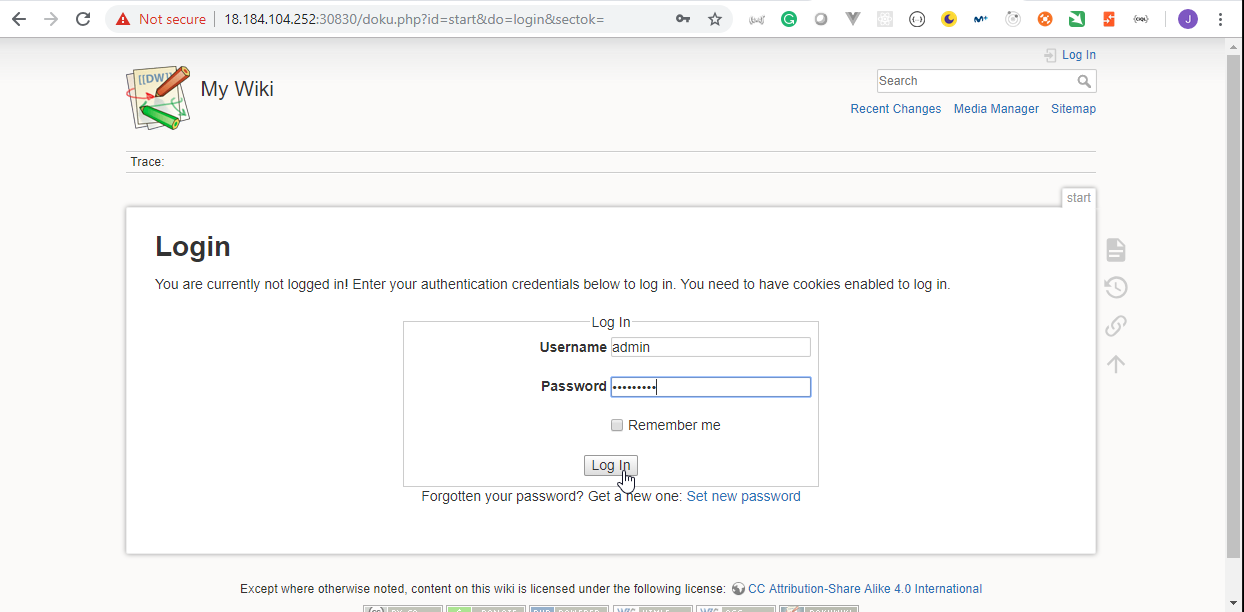

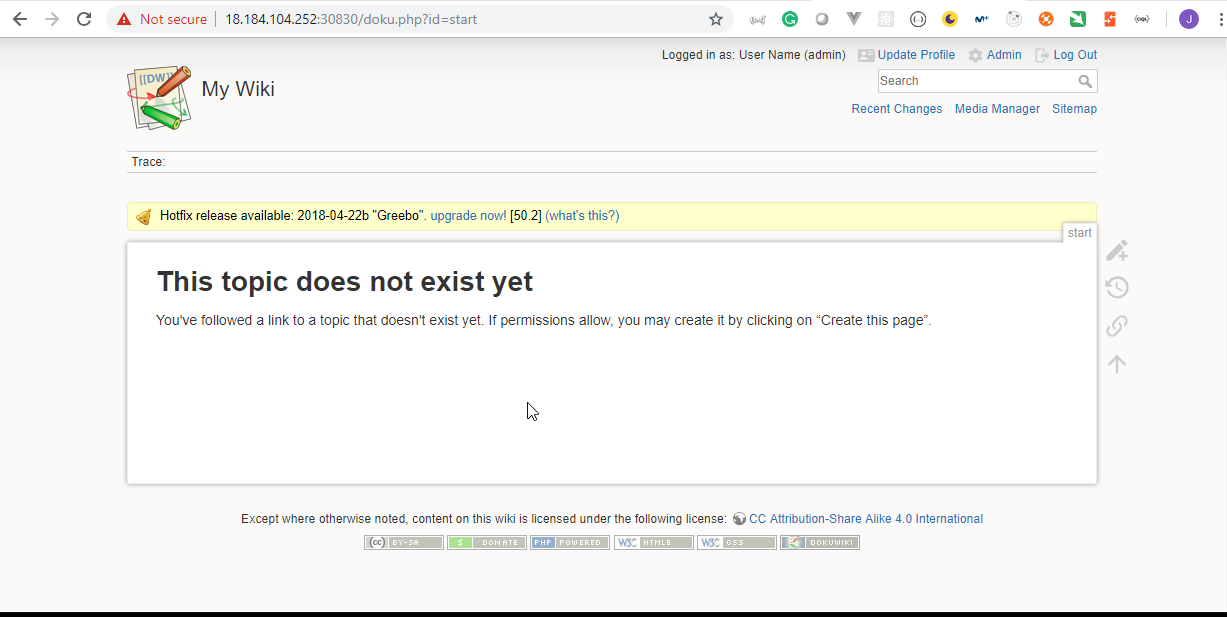

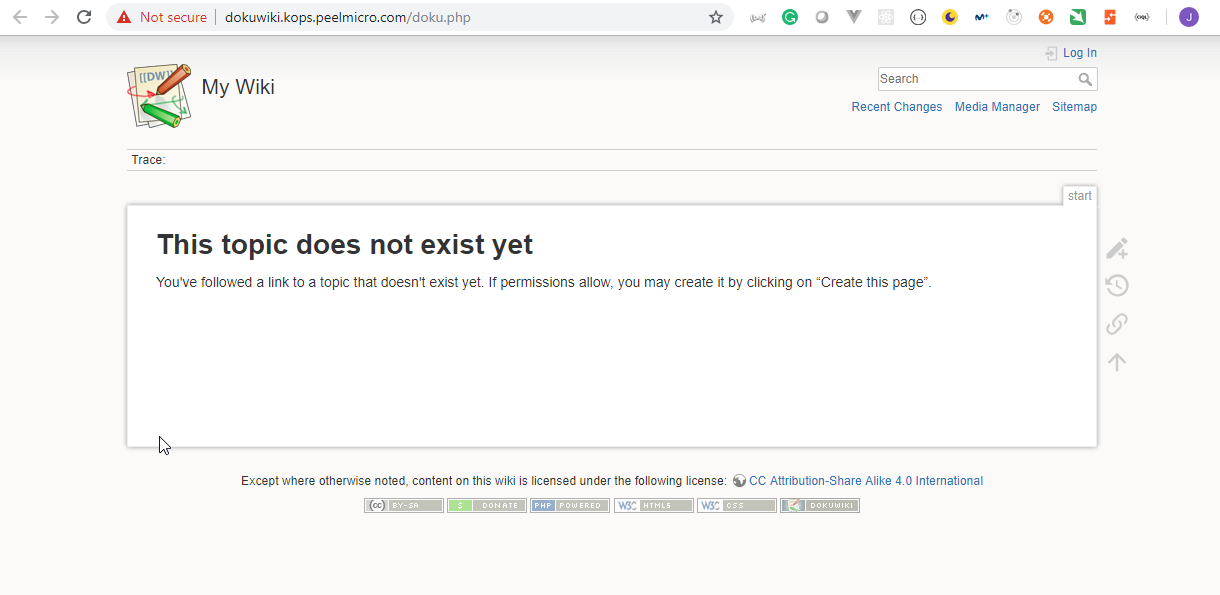

- 47. Prepare Helm charts for Prometheus Node Exporter deployment by using helmfile

- 48. Copy Prometheus and Grafana Helm Charts specifications to server

- 49. Materials: Helmfile specification for Grafana and Prometheus deployment

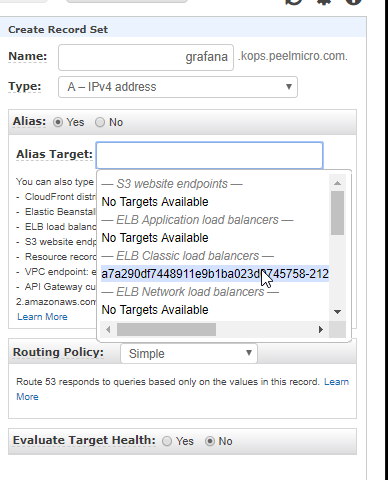

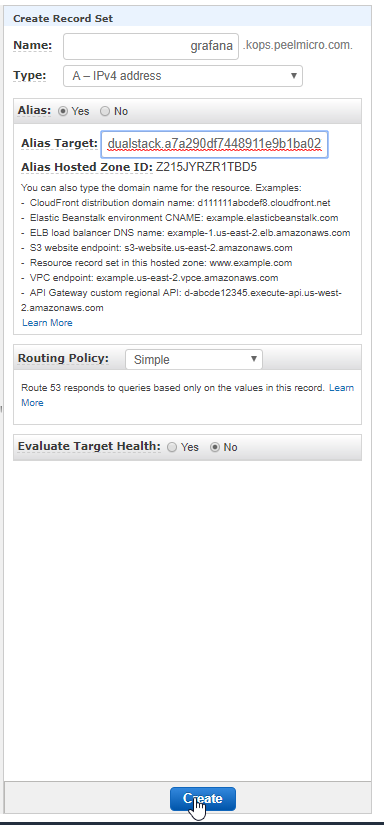

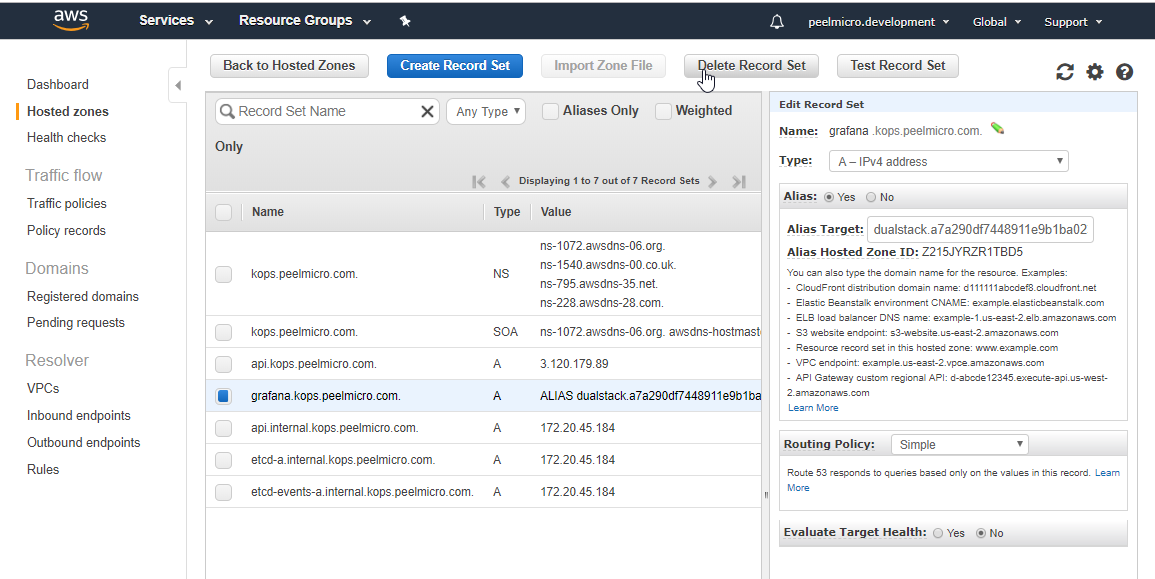

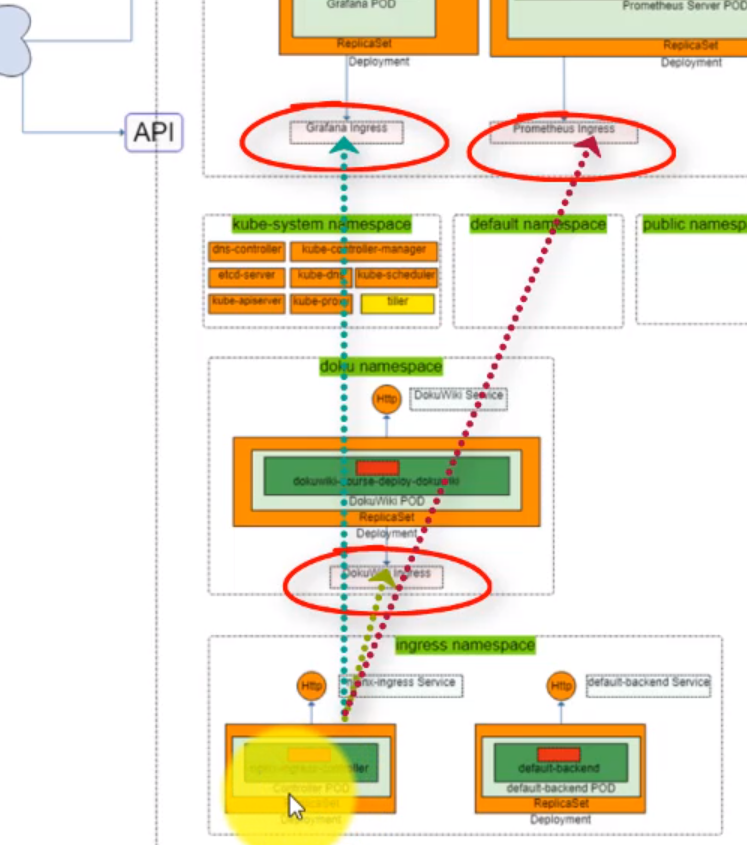

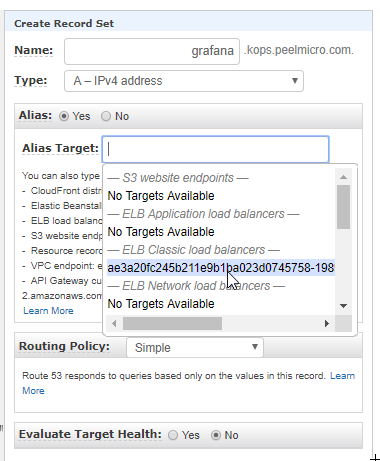

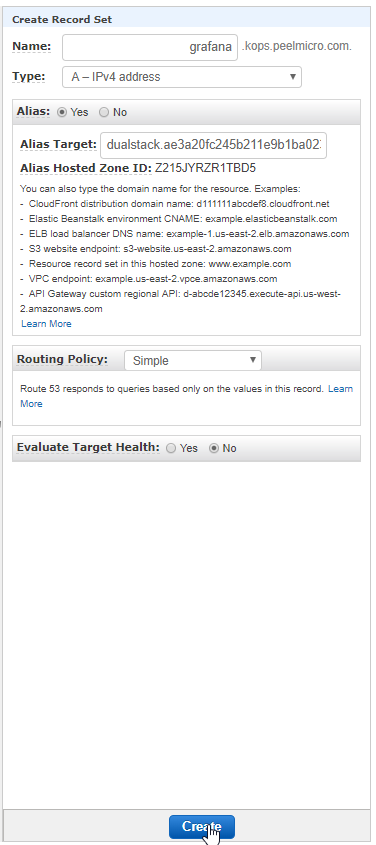

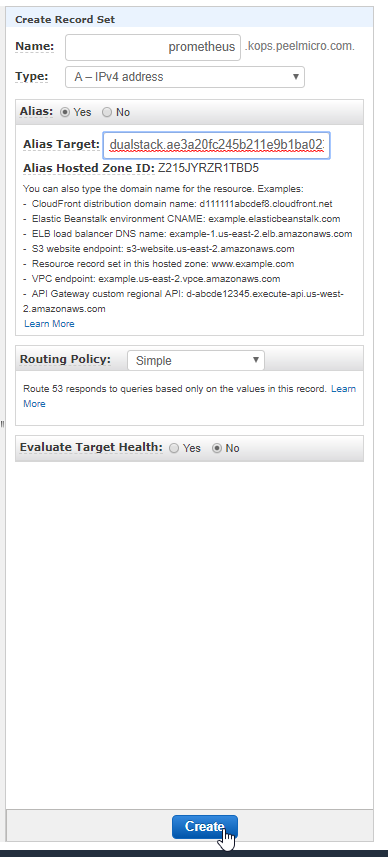

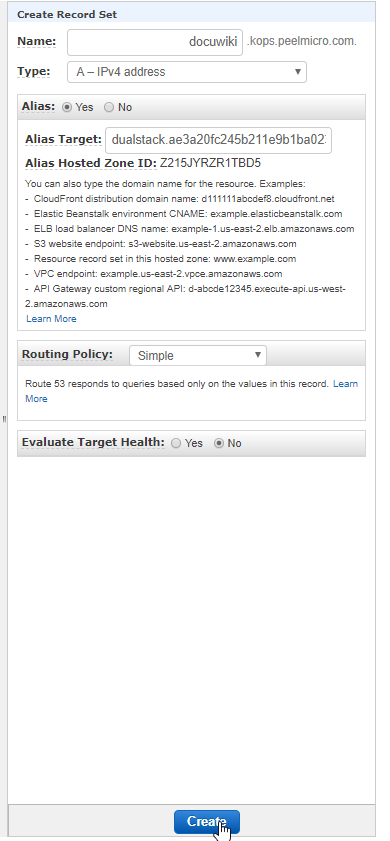

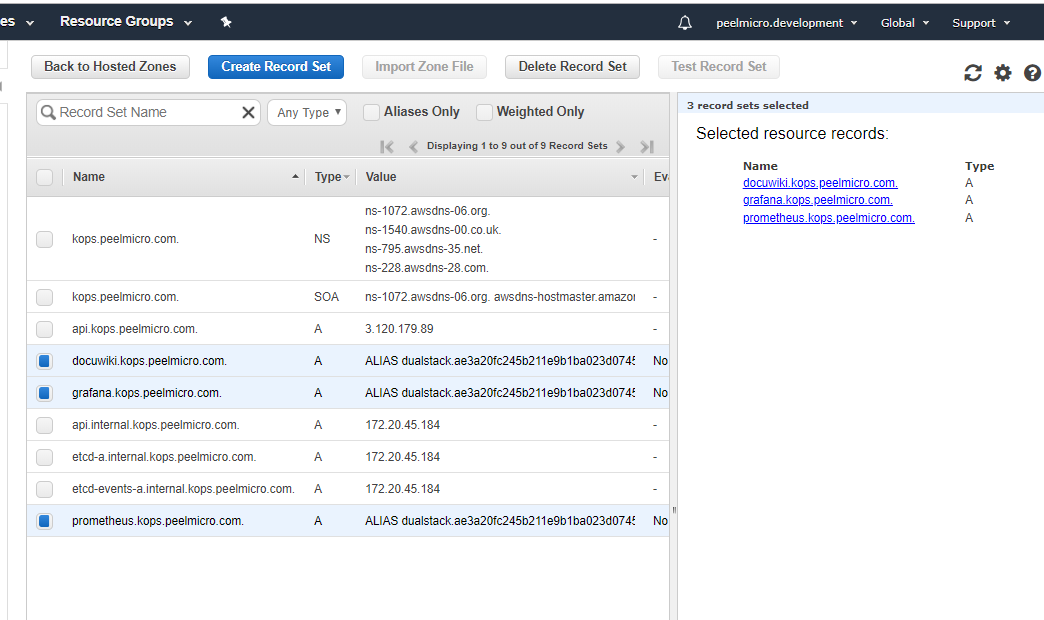

- 50. Process Grafana and Prometheus helmfile deployment

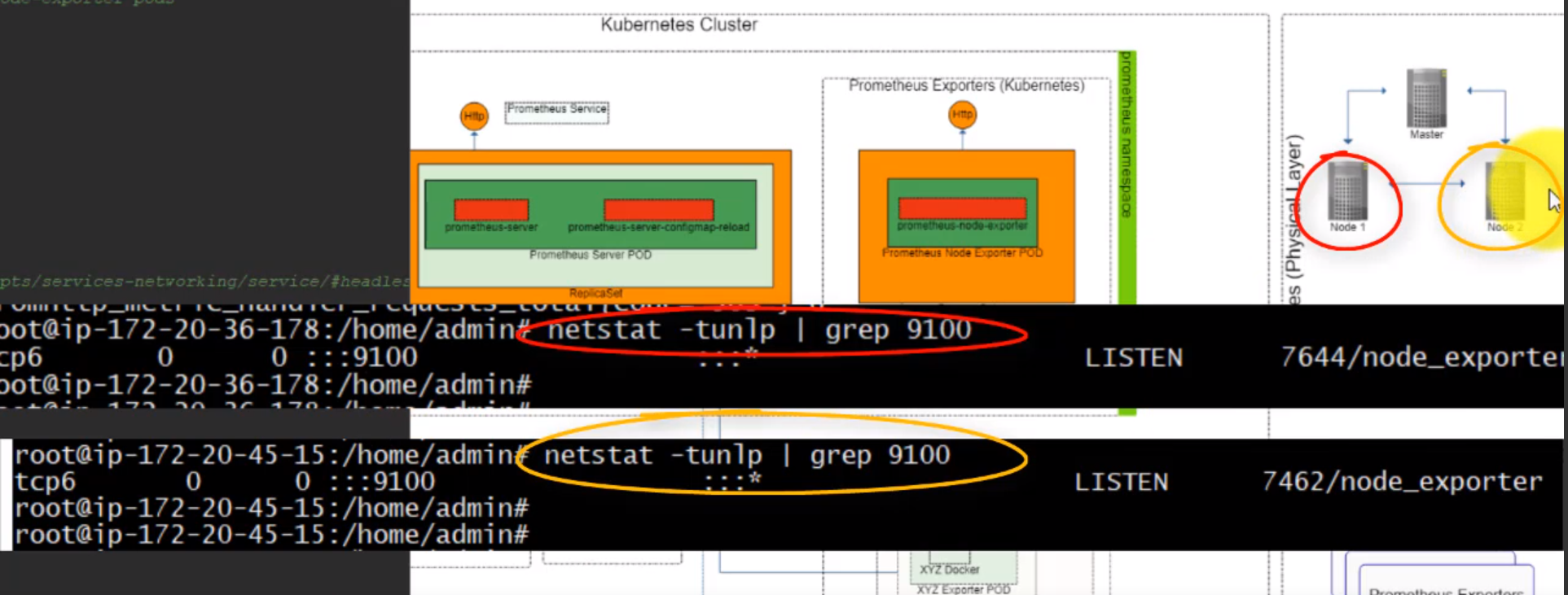

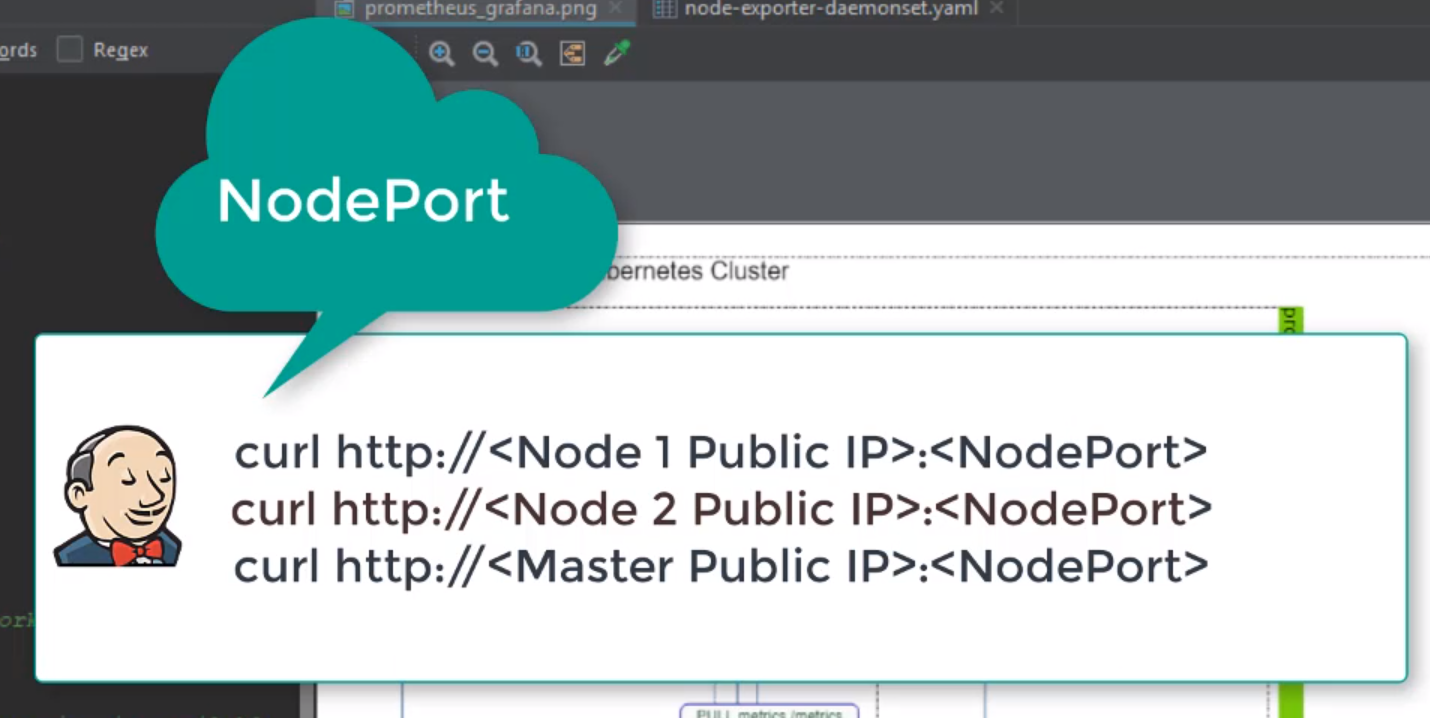

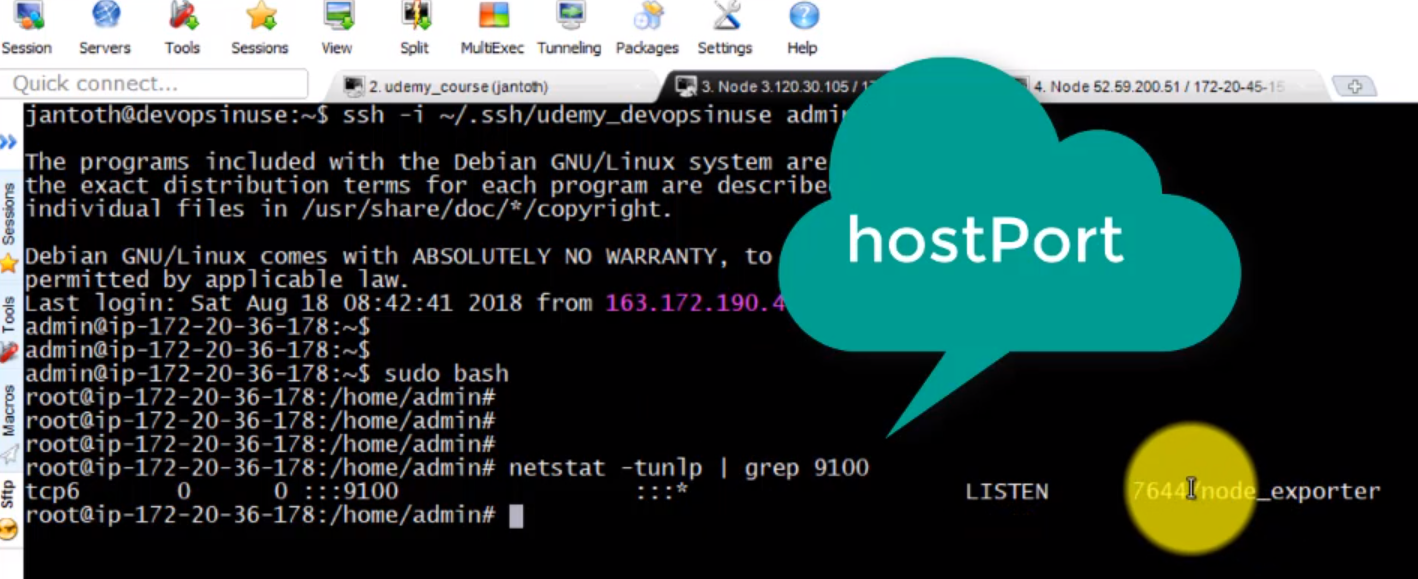

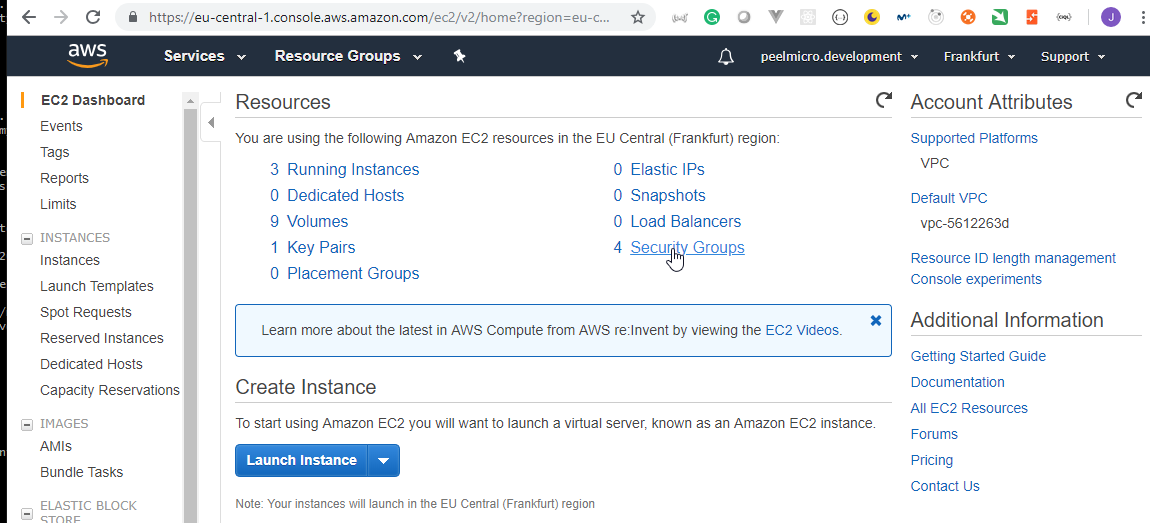

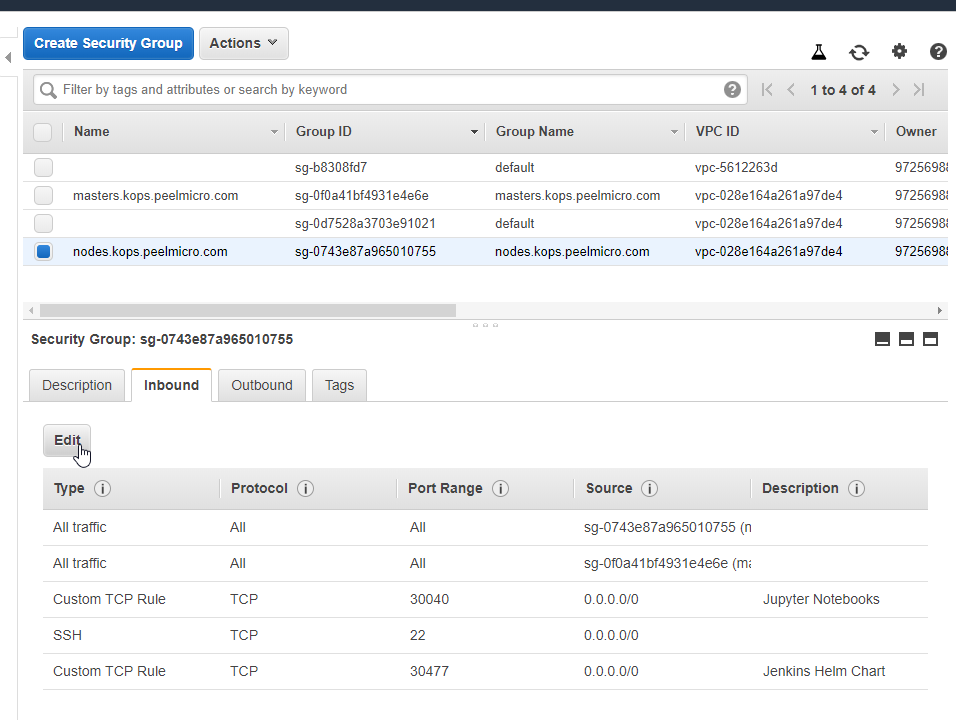

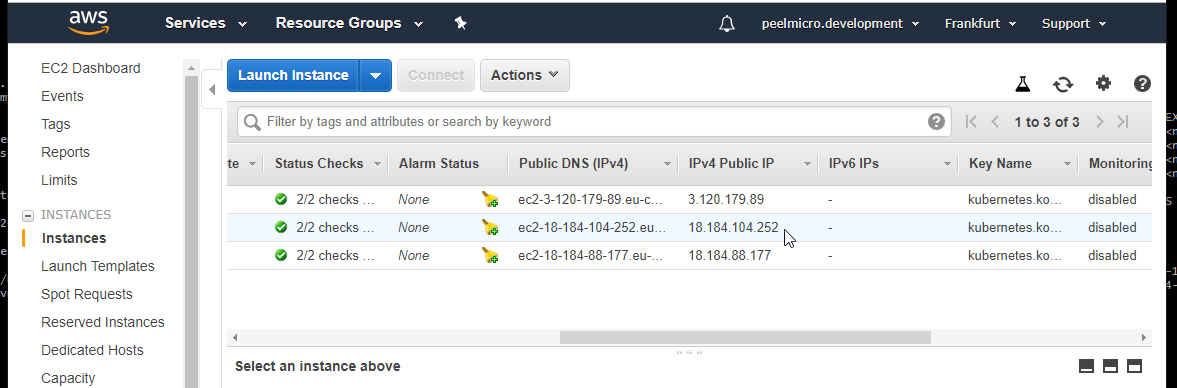

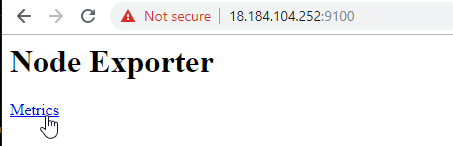

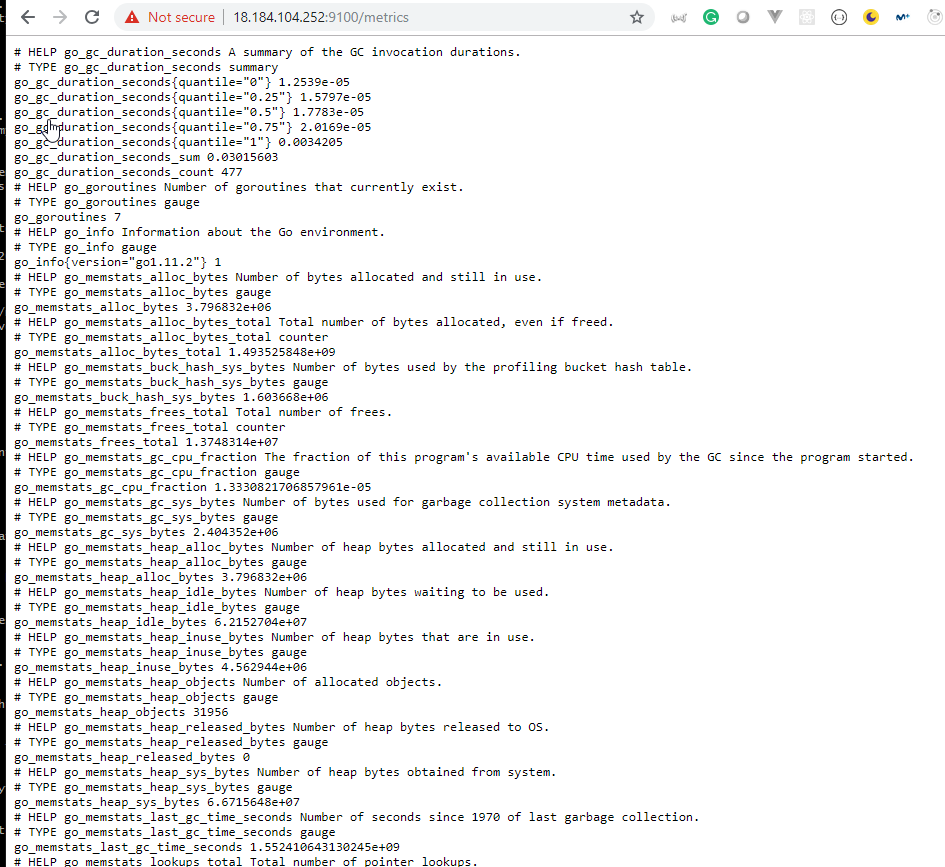

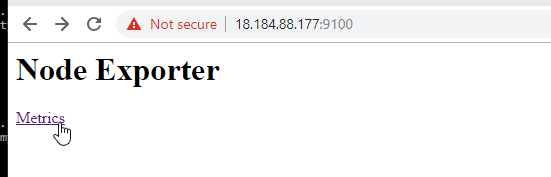

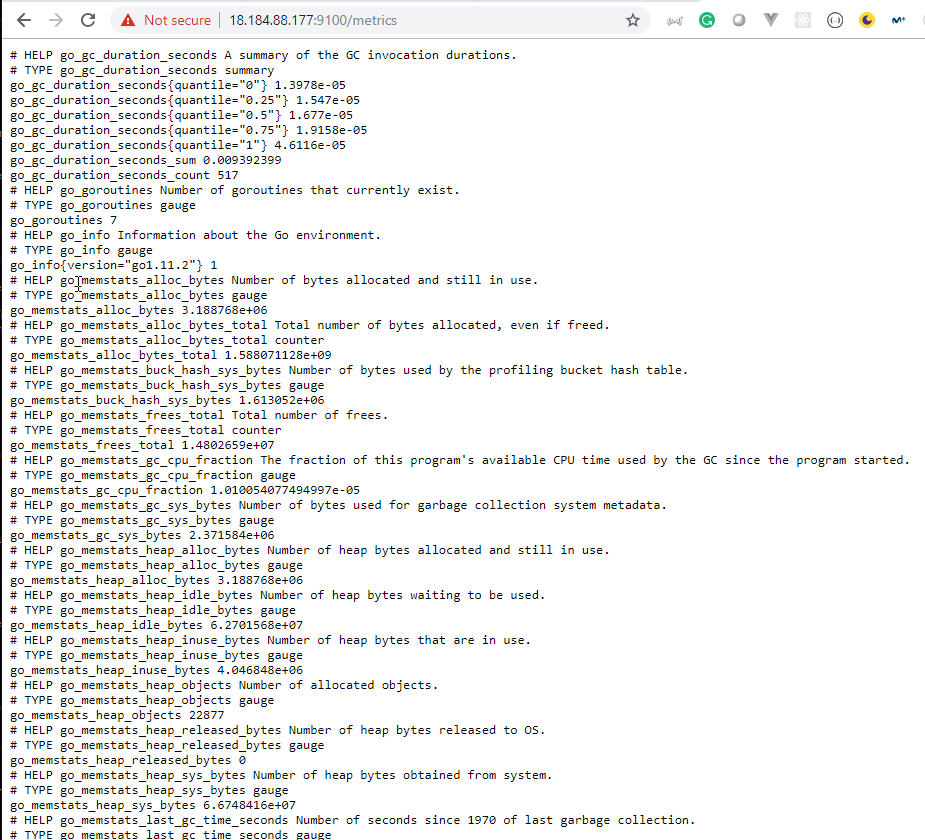

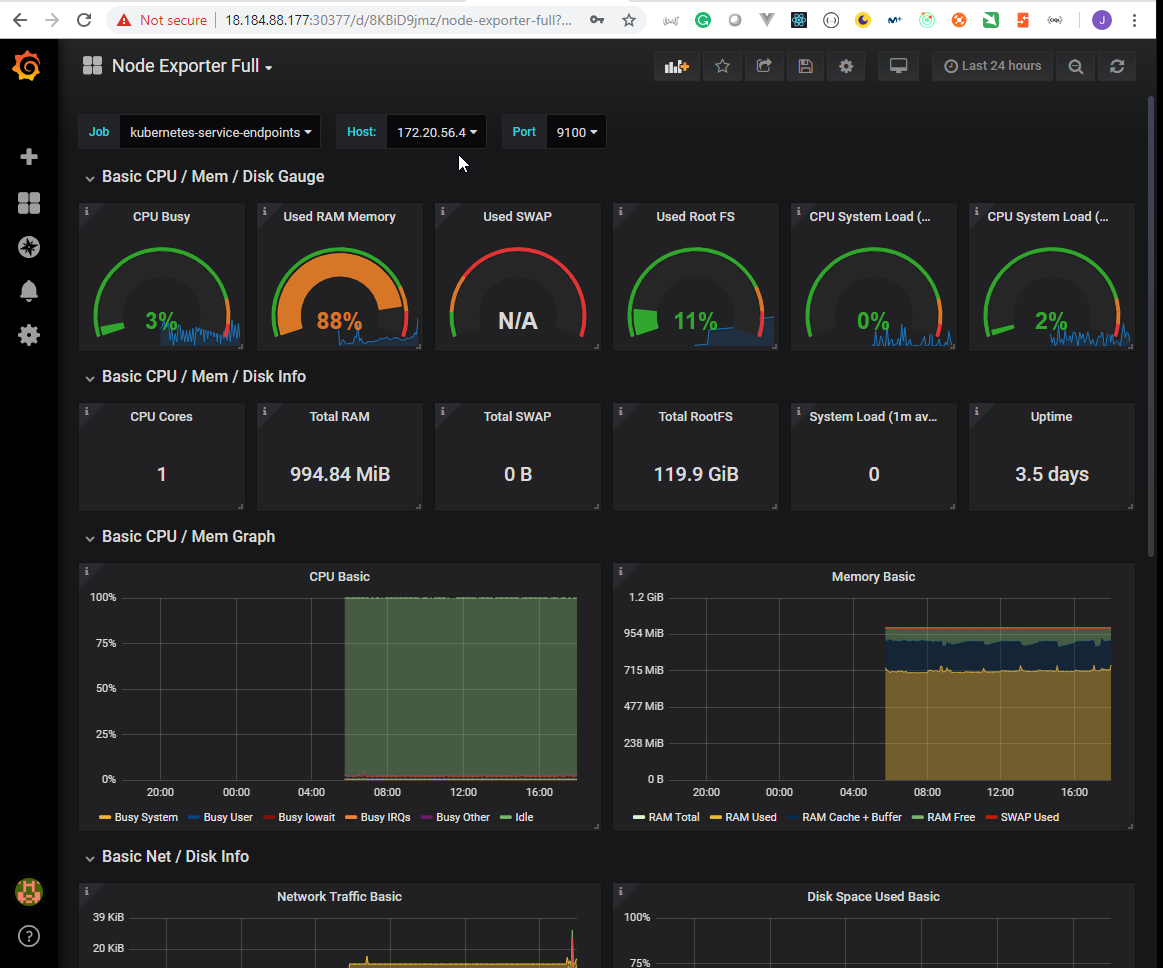

- 51. Exploring Prometheus Node Exporter

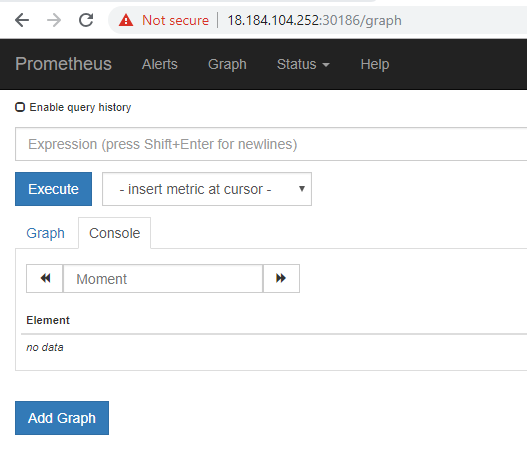

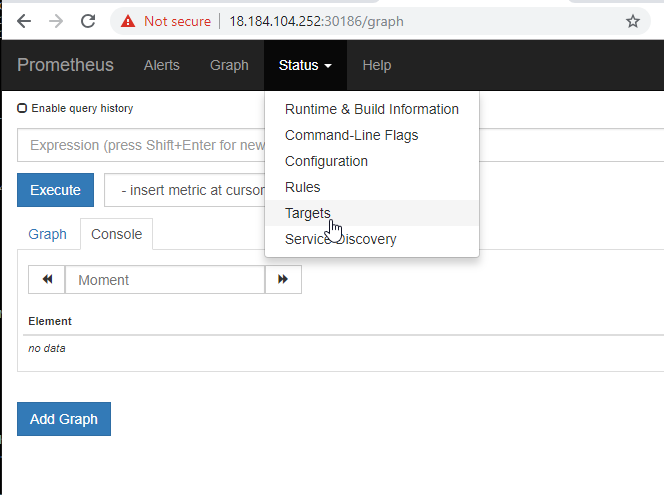

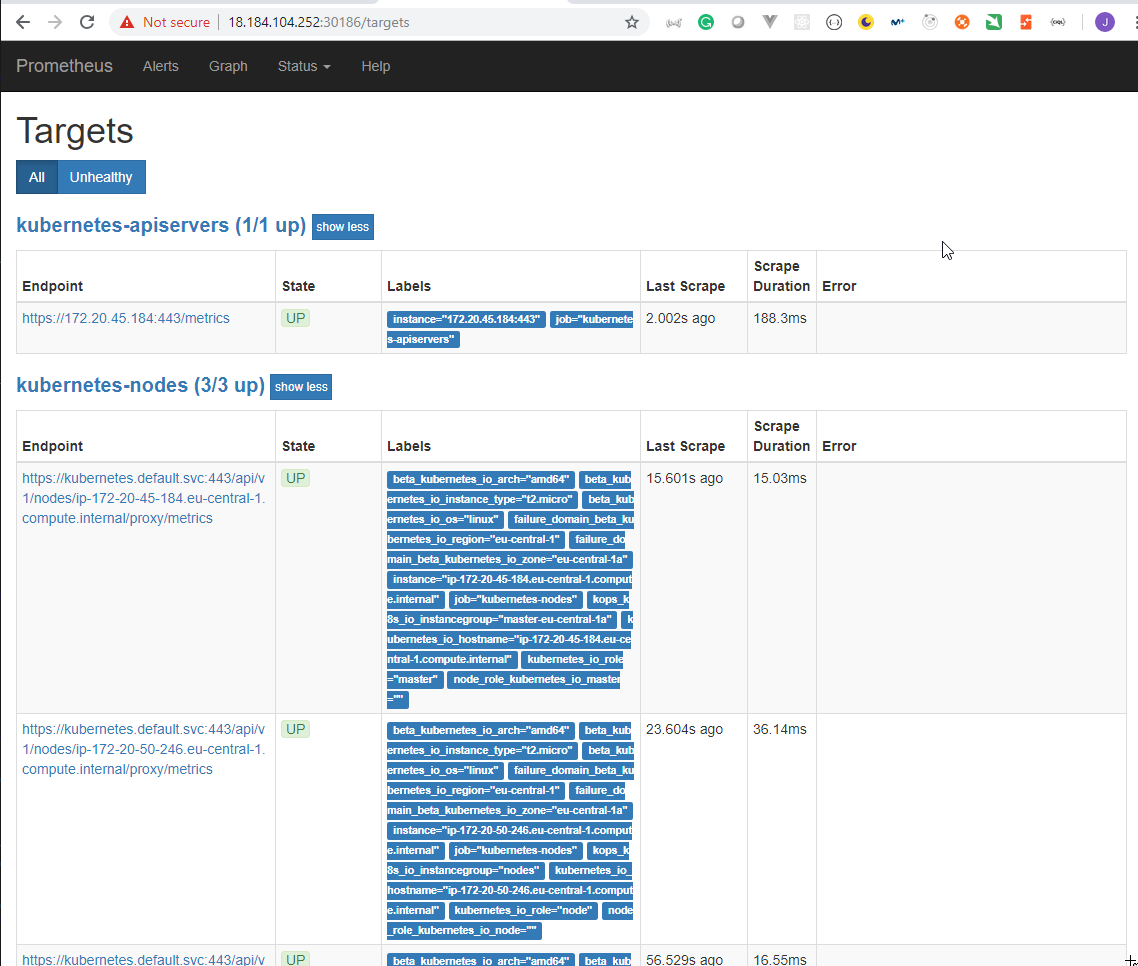

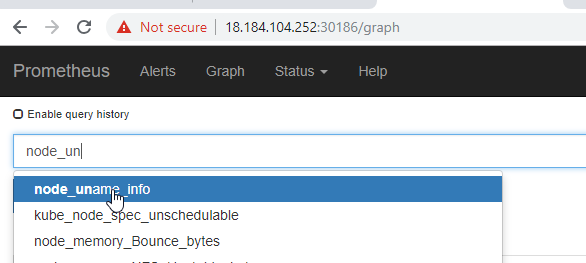

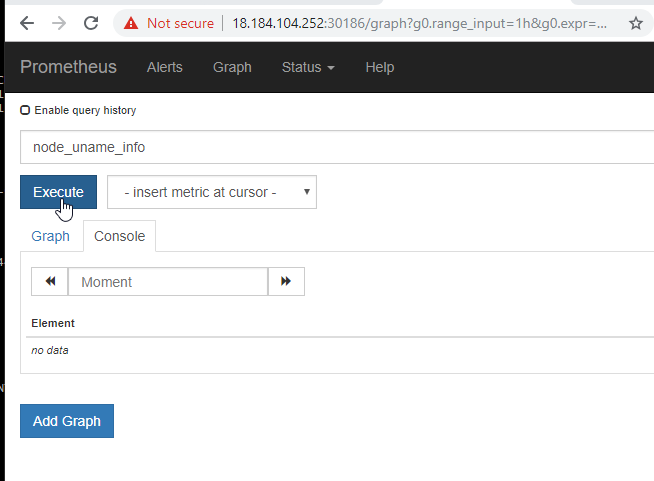

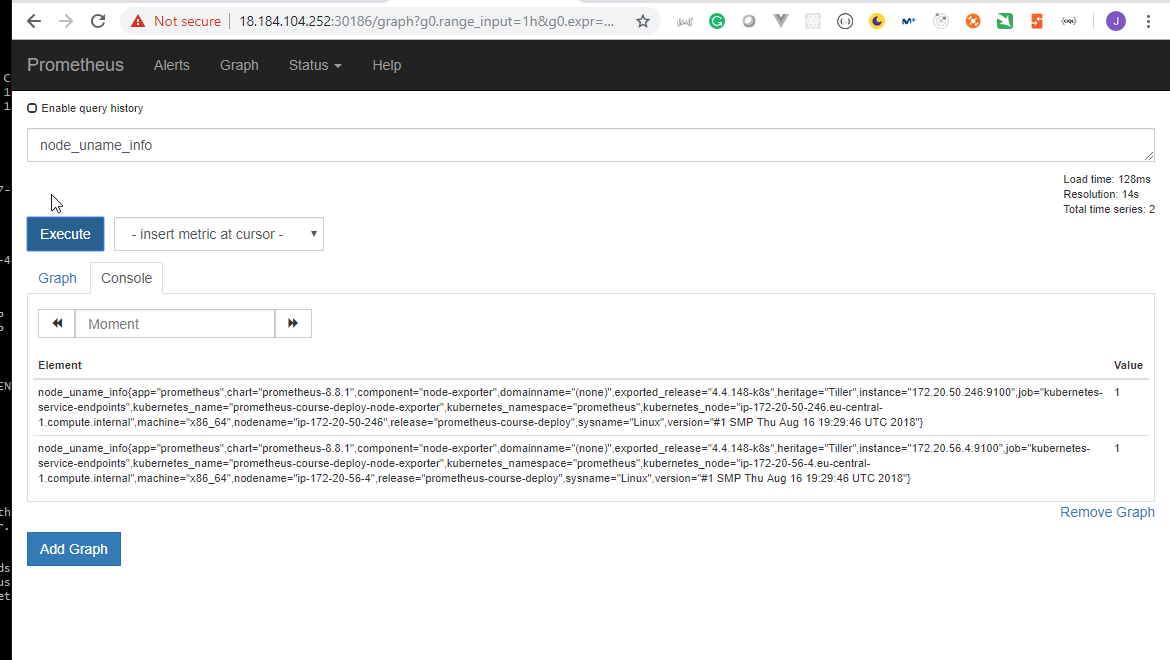

- 52. Explore Prometheus Web User Interface

- 53. Explore Grafana Web User Interface

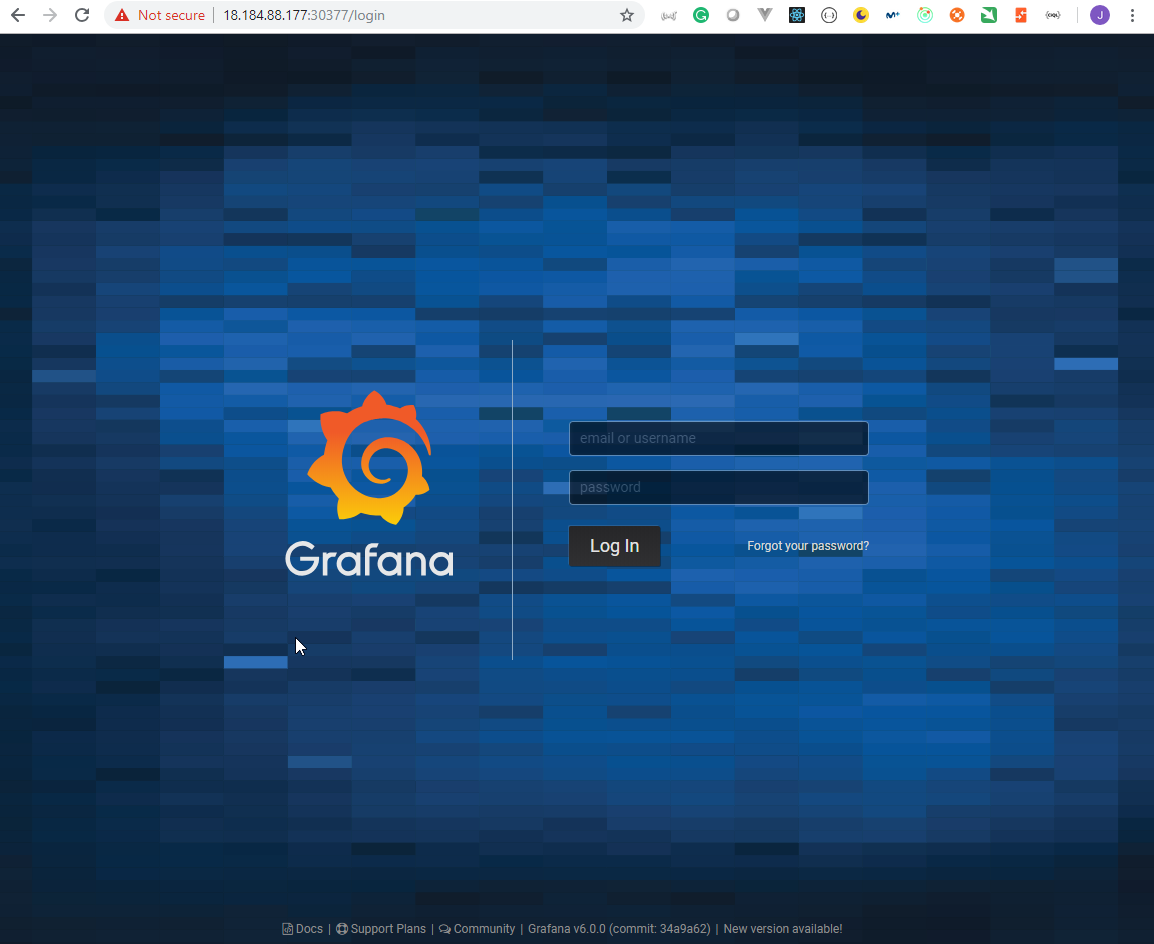

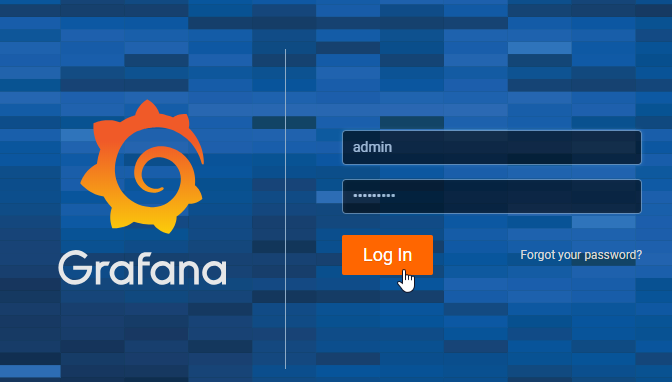

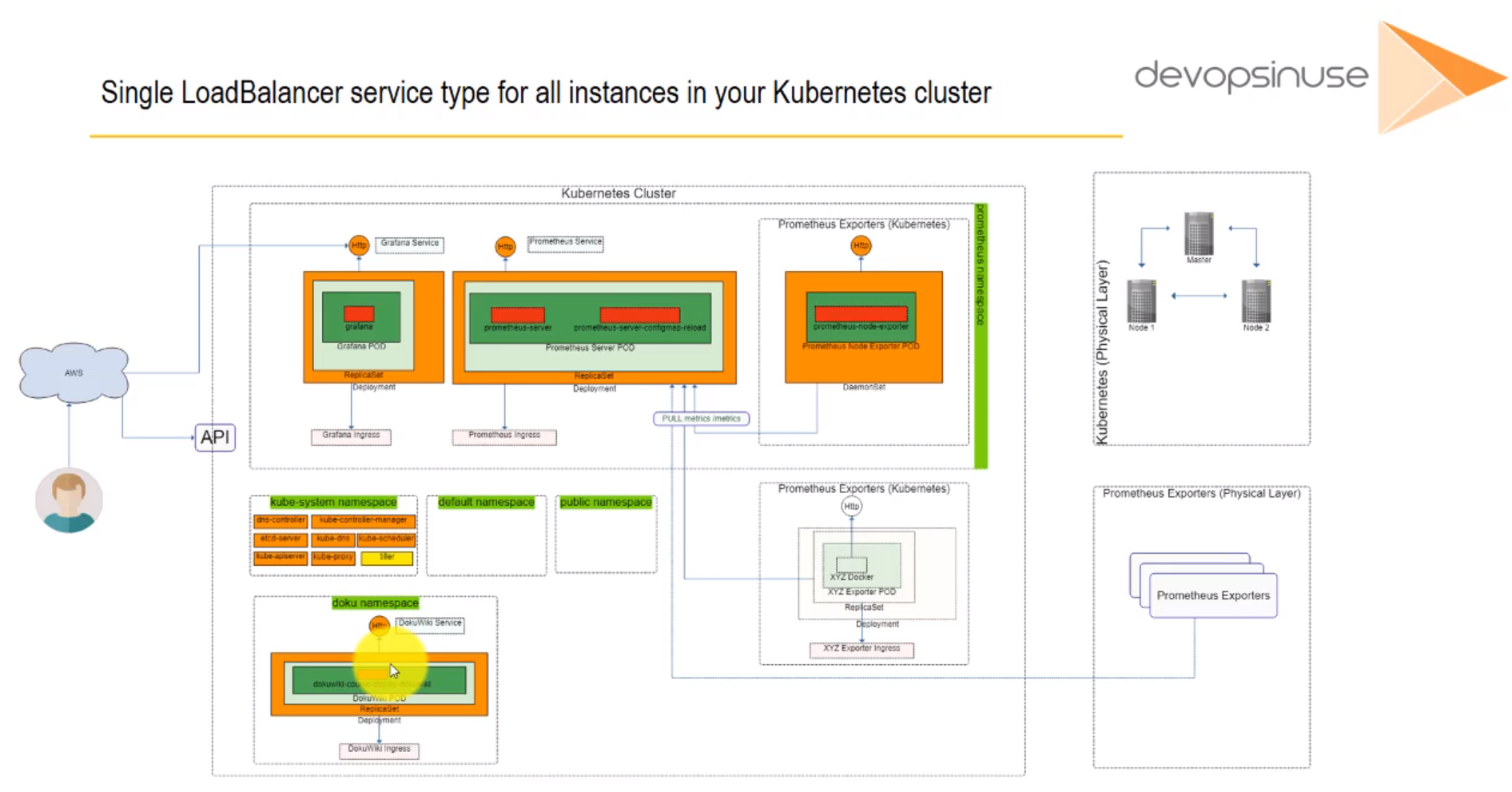

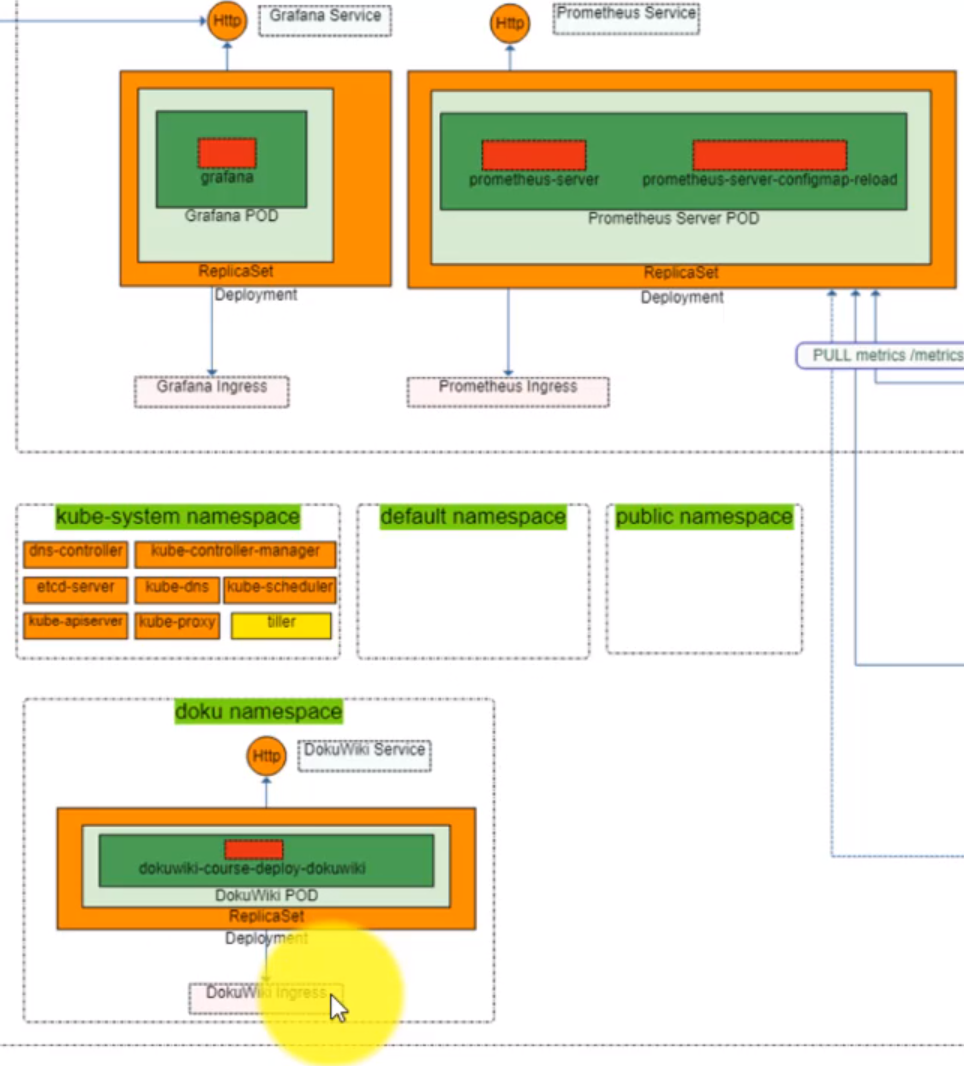

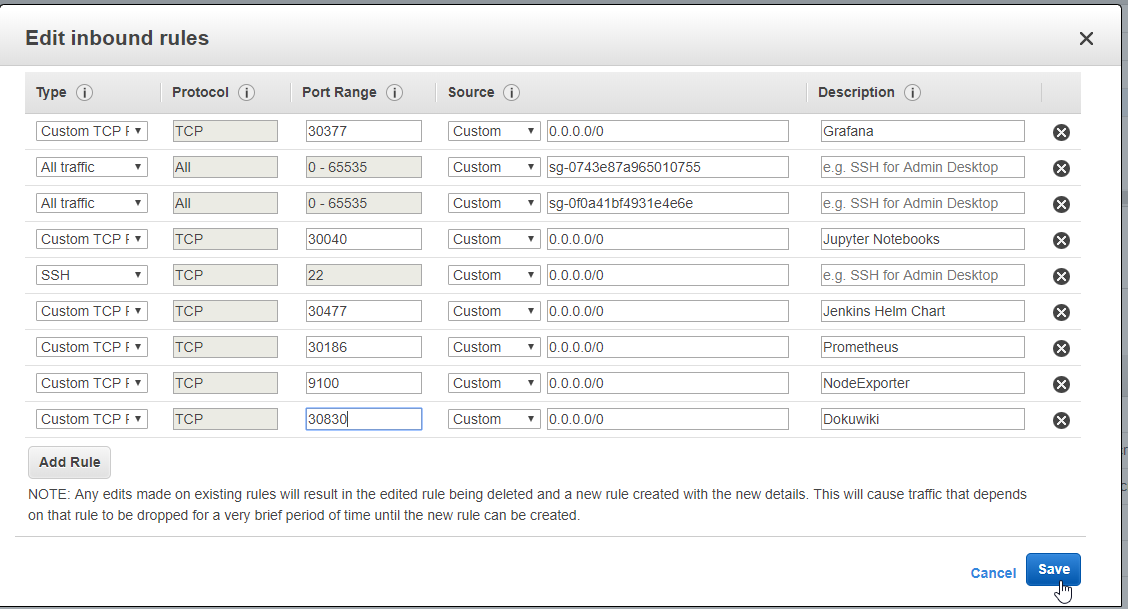

- Section: 6. Ingress and LoadBalancer type of service for your Kubernetes cluster

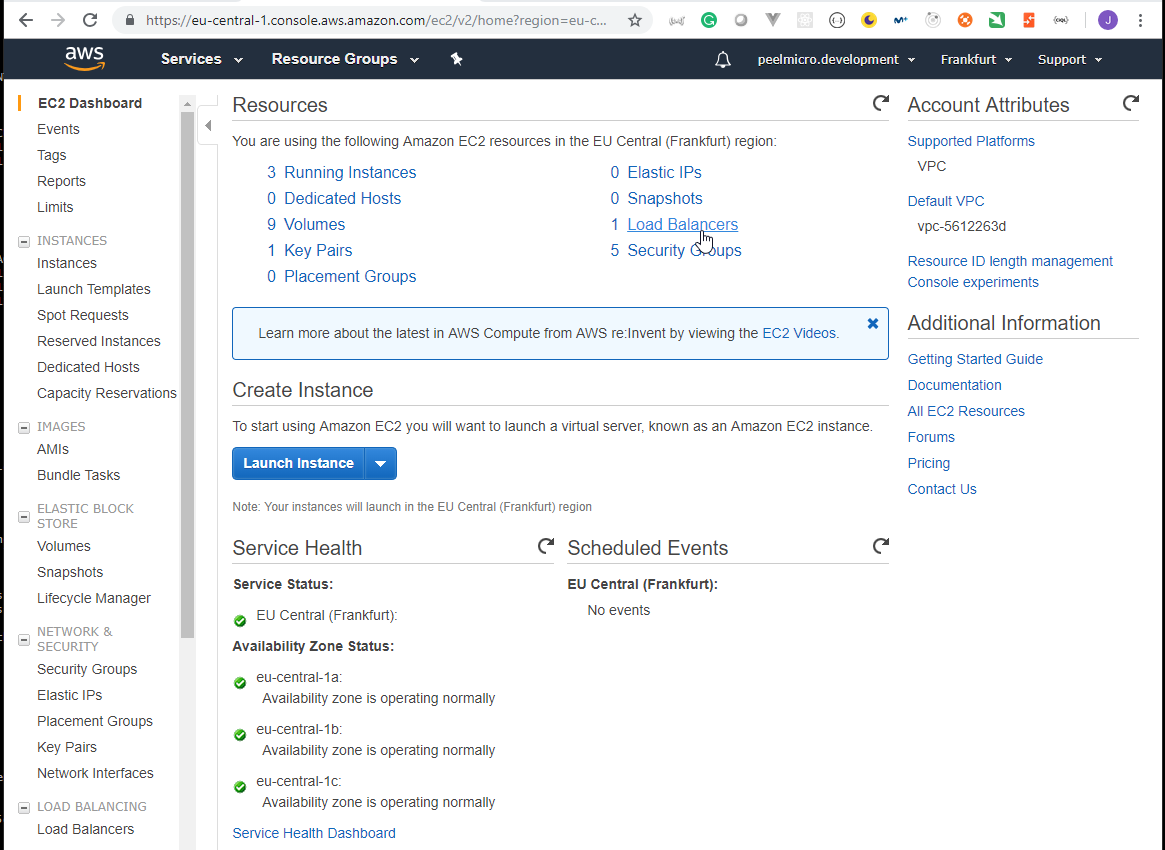

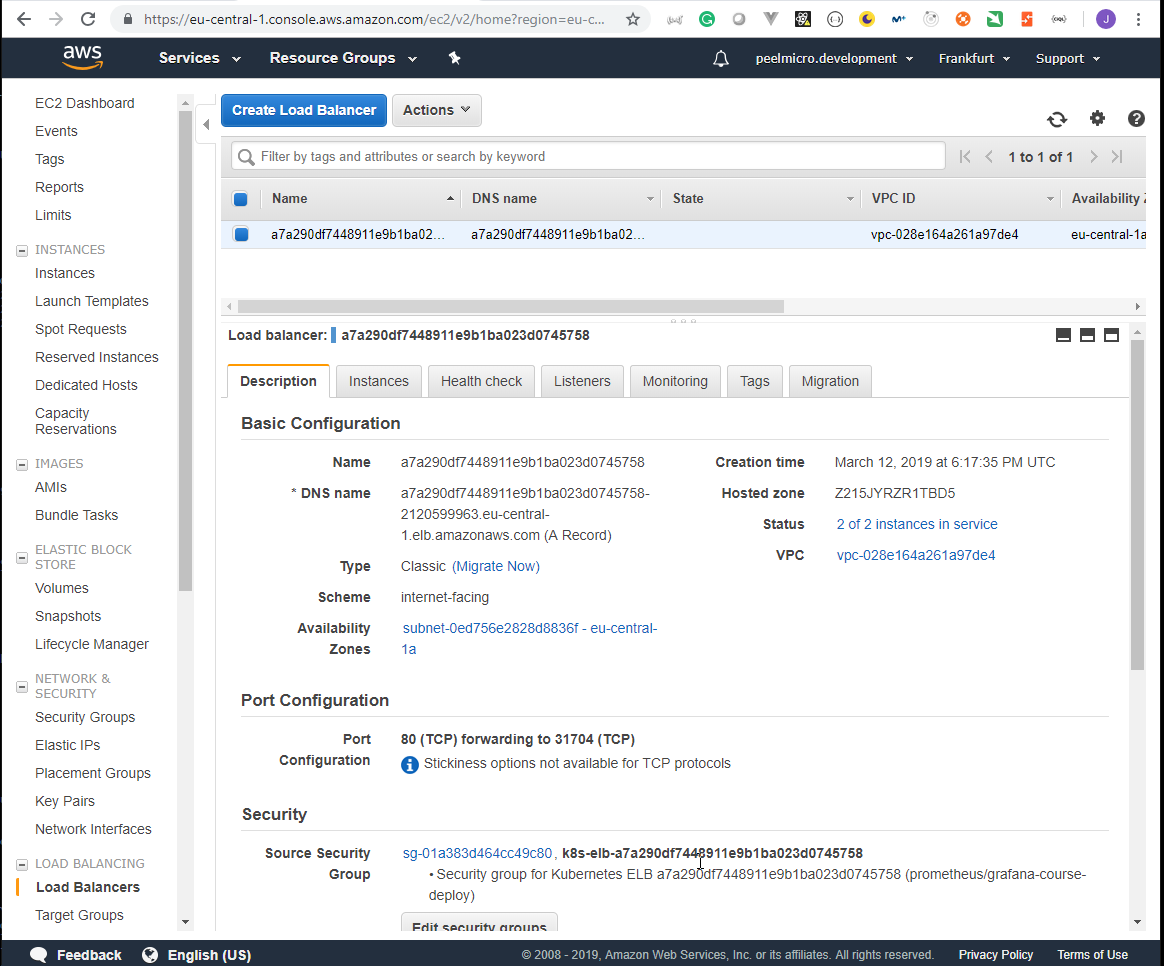

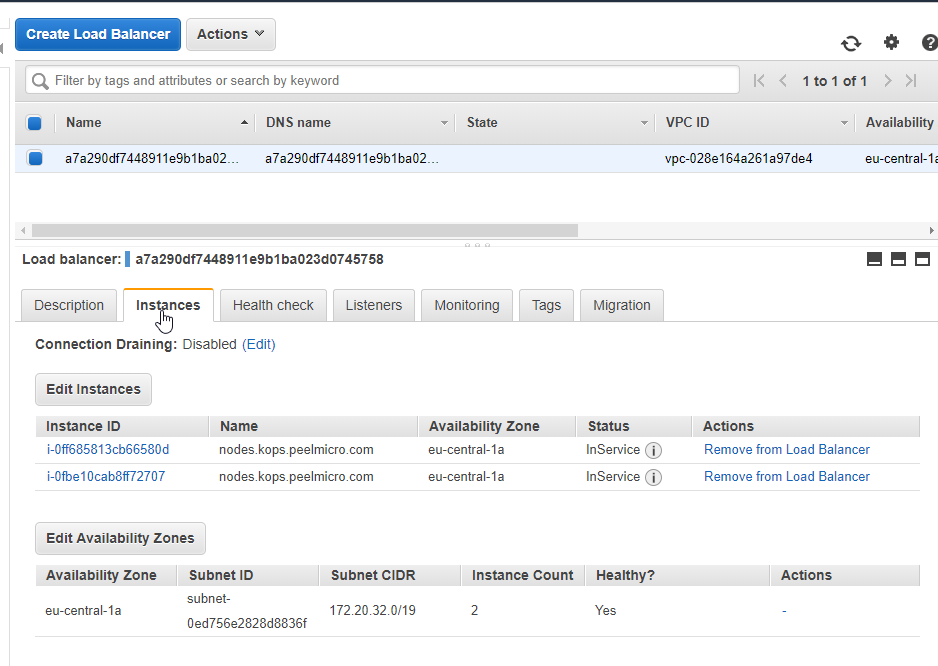

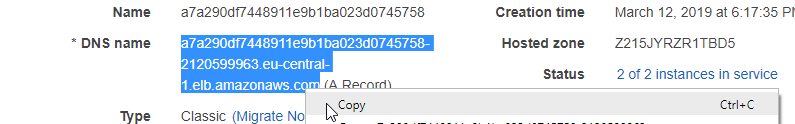

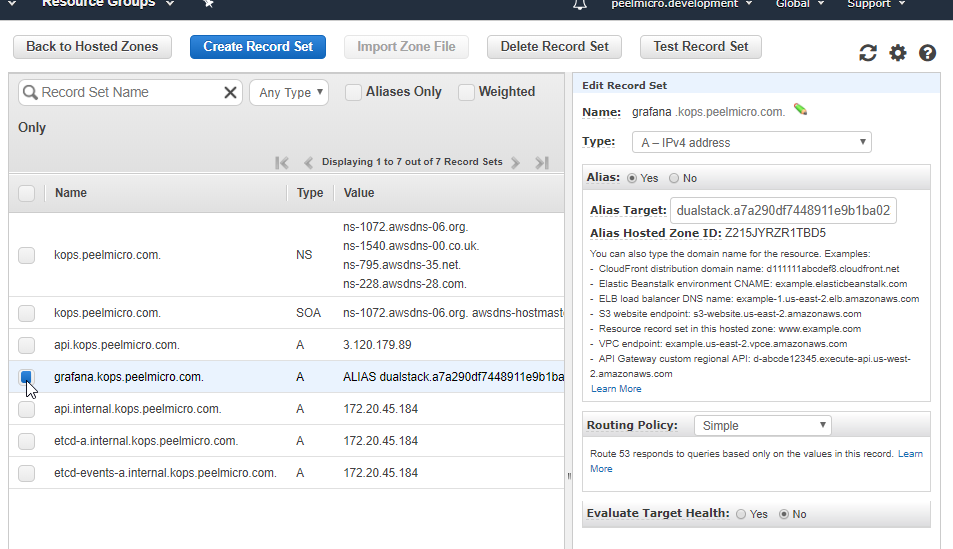

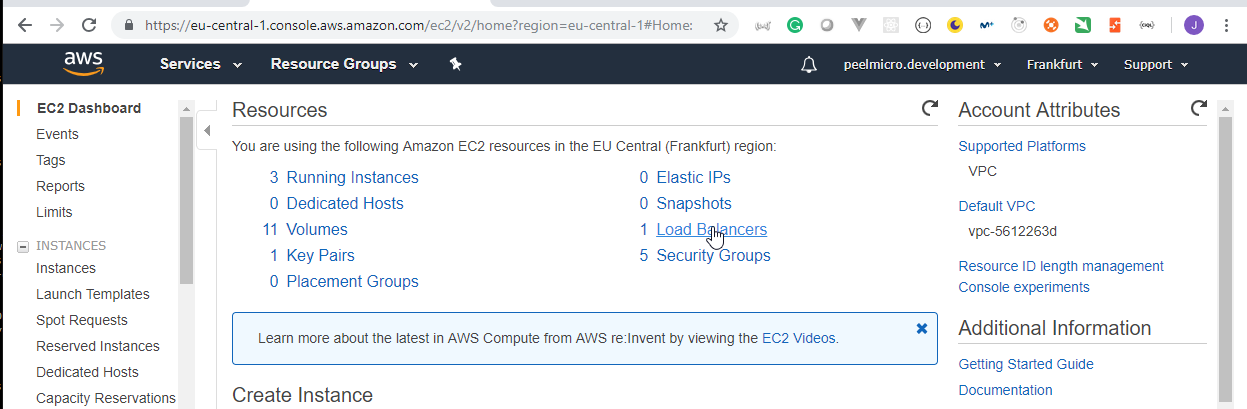

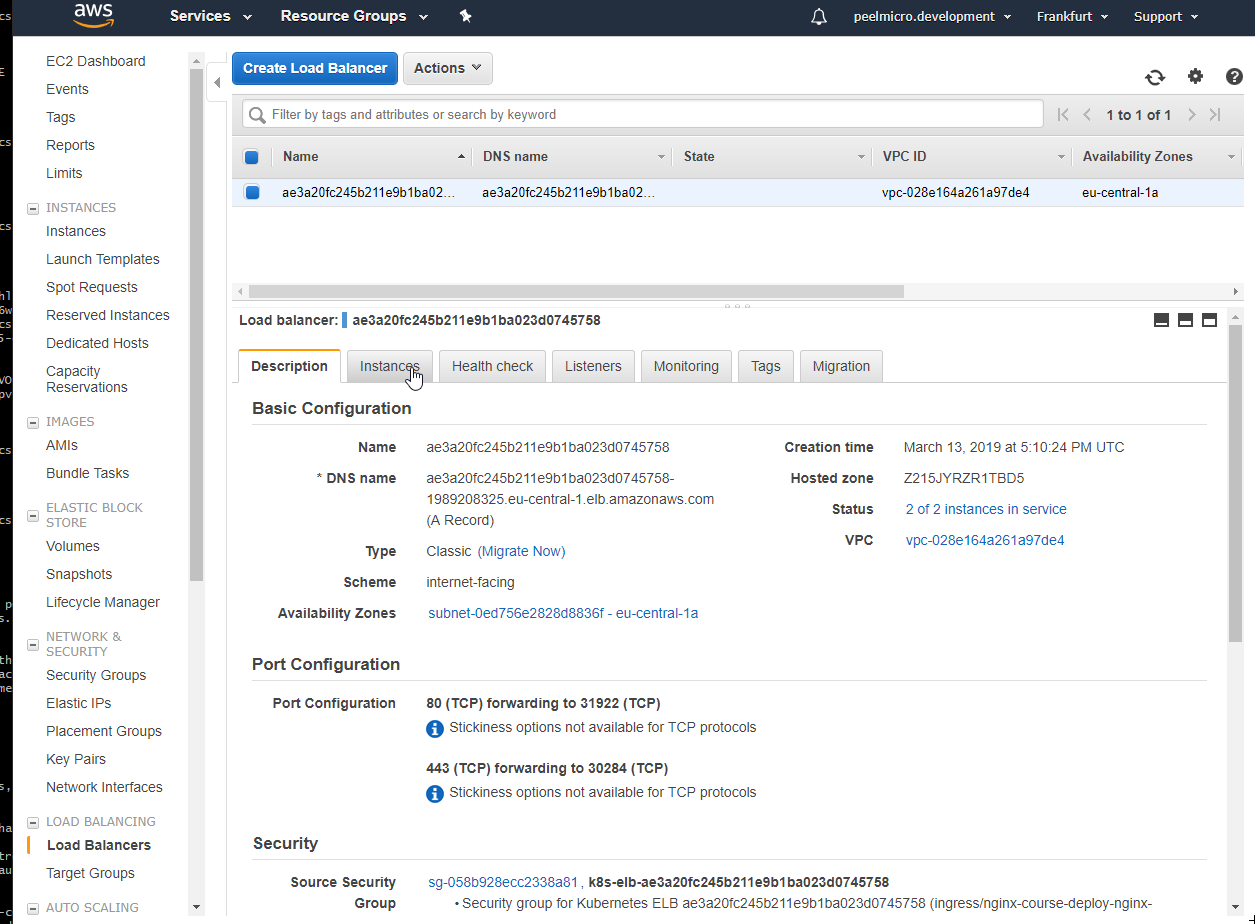

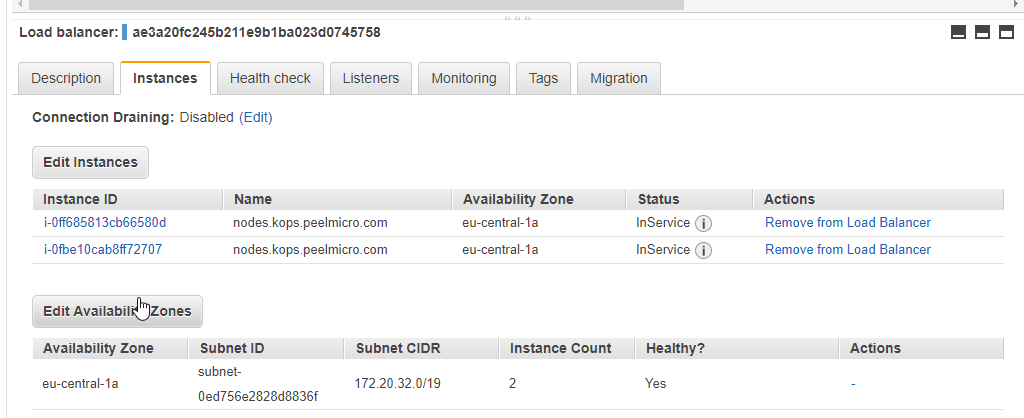

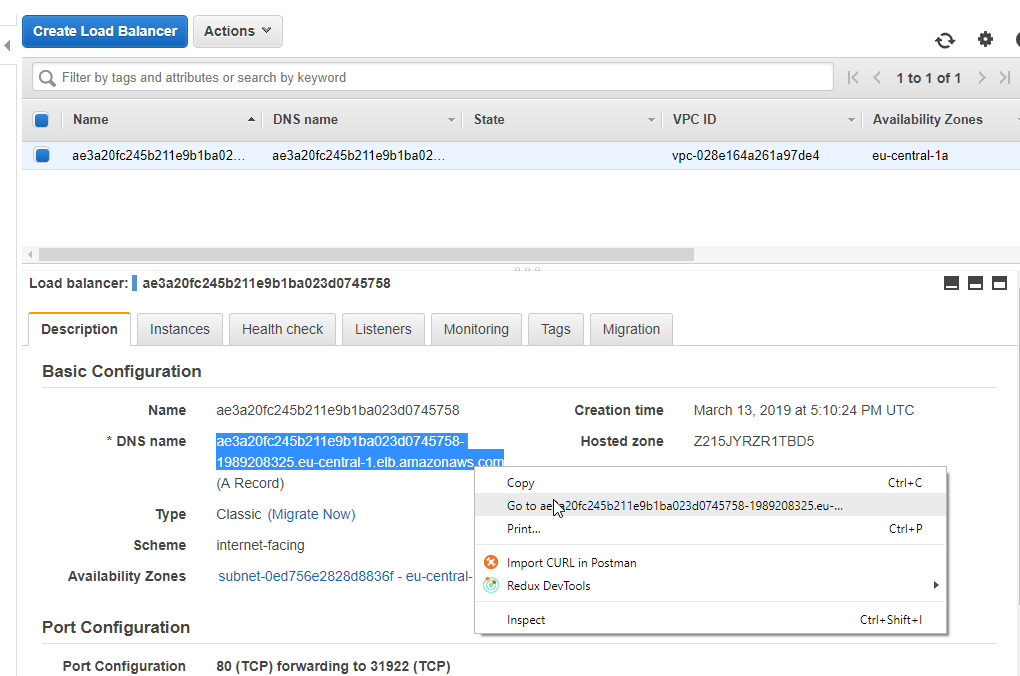

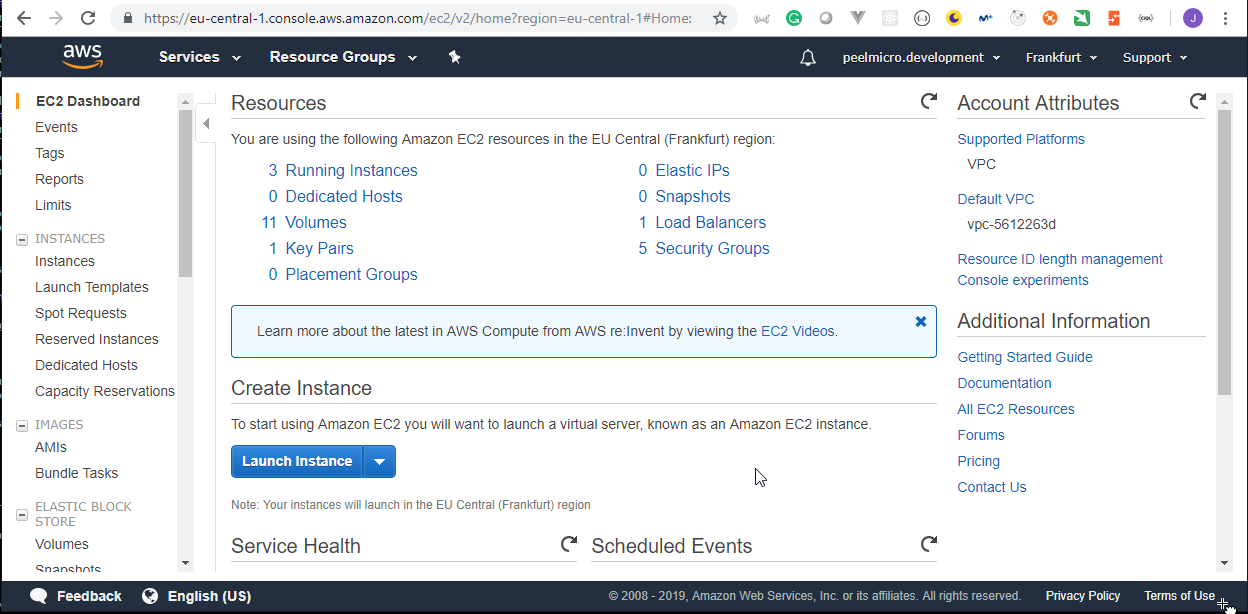

- 54. LoadBalancer Grafana Service

- 55. Materials: Helmfile specification to add DokuWiki deployment

- 56. Single LoadBalancer service type for all instances in your K8s (DokuWiki)

- 57. Materials: Helmfile specification to add nginx-ingress Helm Chart deployment

- 58. Nginx Ingress Controller Pod

- 59. Configure Ingress Kubernetes Objects for Grafana, Prometheus and DokuWiki

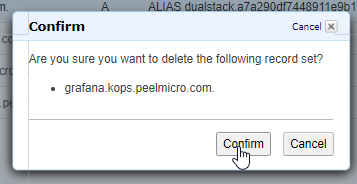

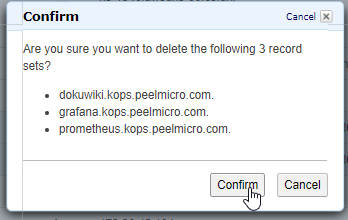

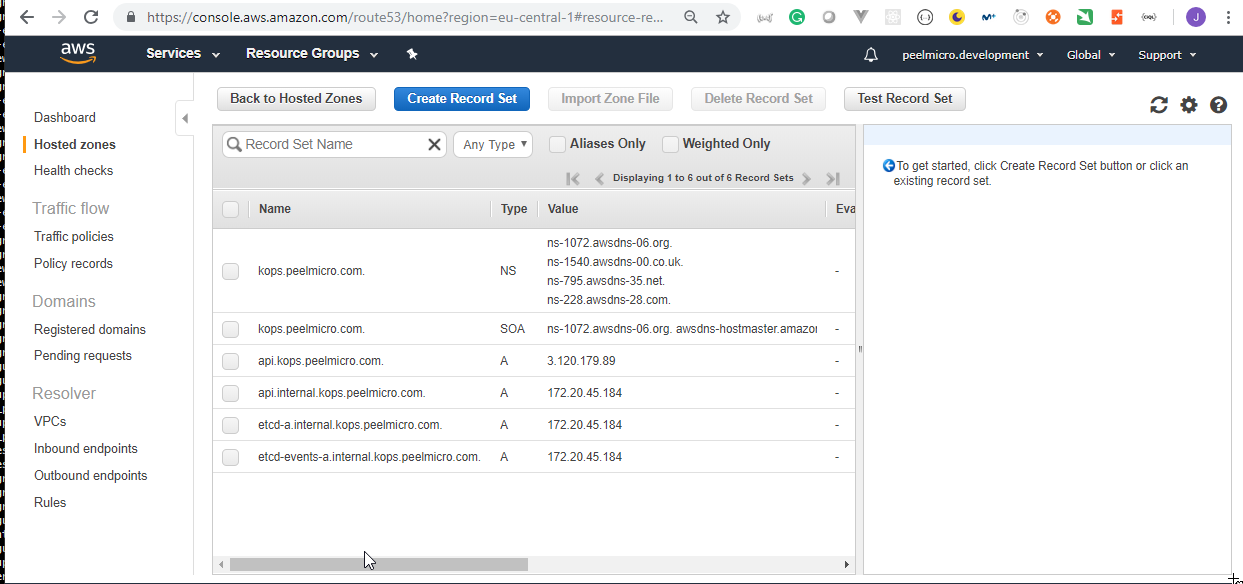

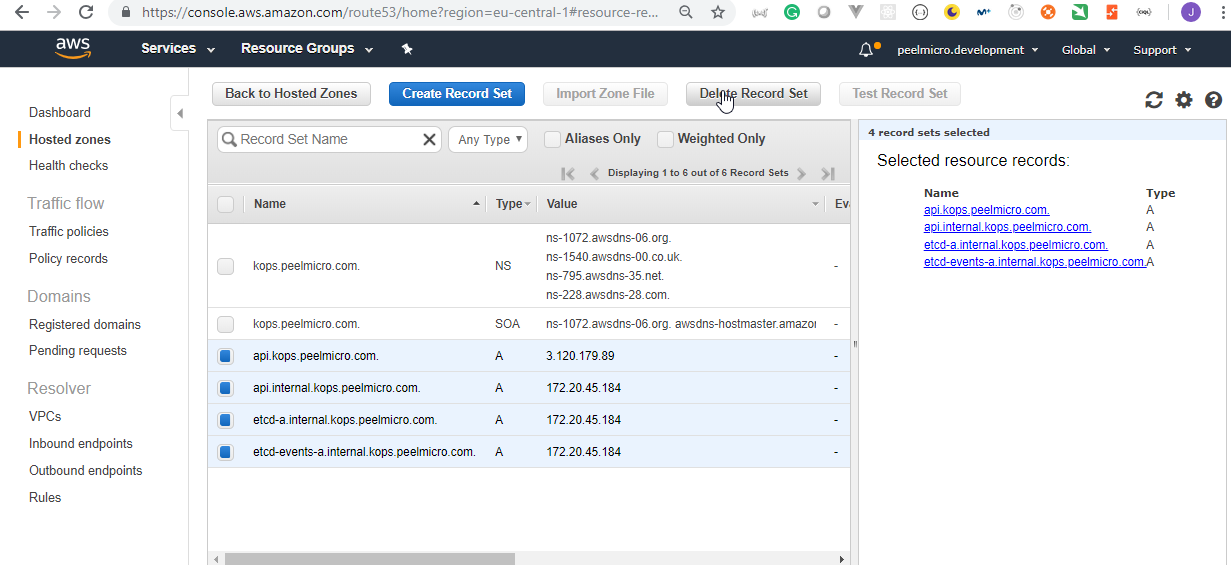

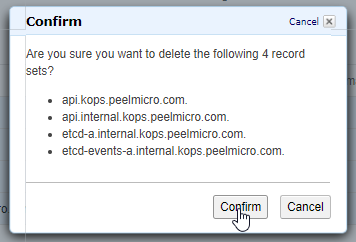

- 60. Important: Clean up Kubernetes cluster and all the AWS resources

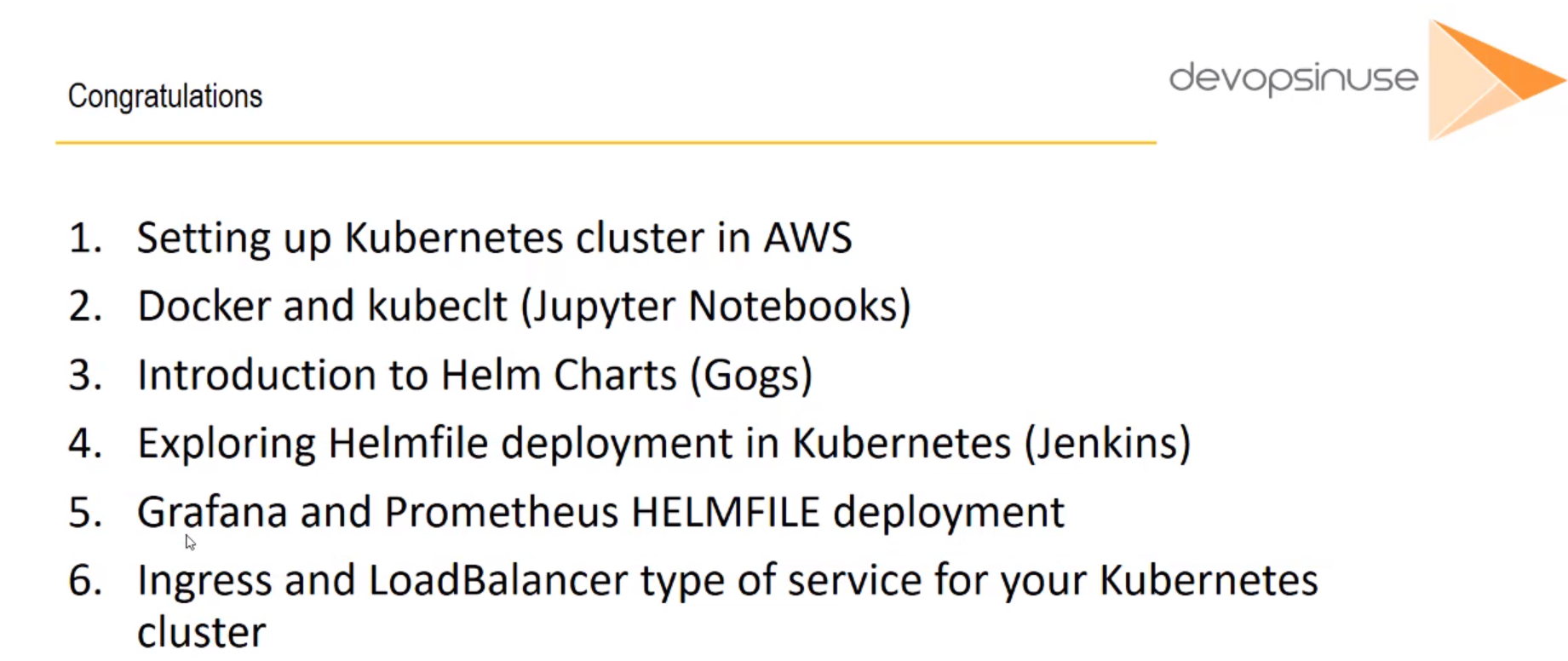

- 61. Congratulations

What I've learned

- Deployment concepts in Kubernetes by using HELM and HELMFILE

- How to work and interact with Kubernetes orchestration platform.

- Deploy Kubernetes cluster in AWS by using kops and terraform.

- How to use and adjust Helm charts (standard deployment methodology).

Section: 1. Introduction

1. Welcome to course

WelcomeToCourse2

WelcomeToCourse3

WelcomeToCourse2

WelcomeToCourse3

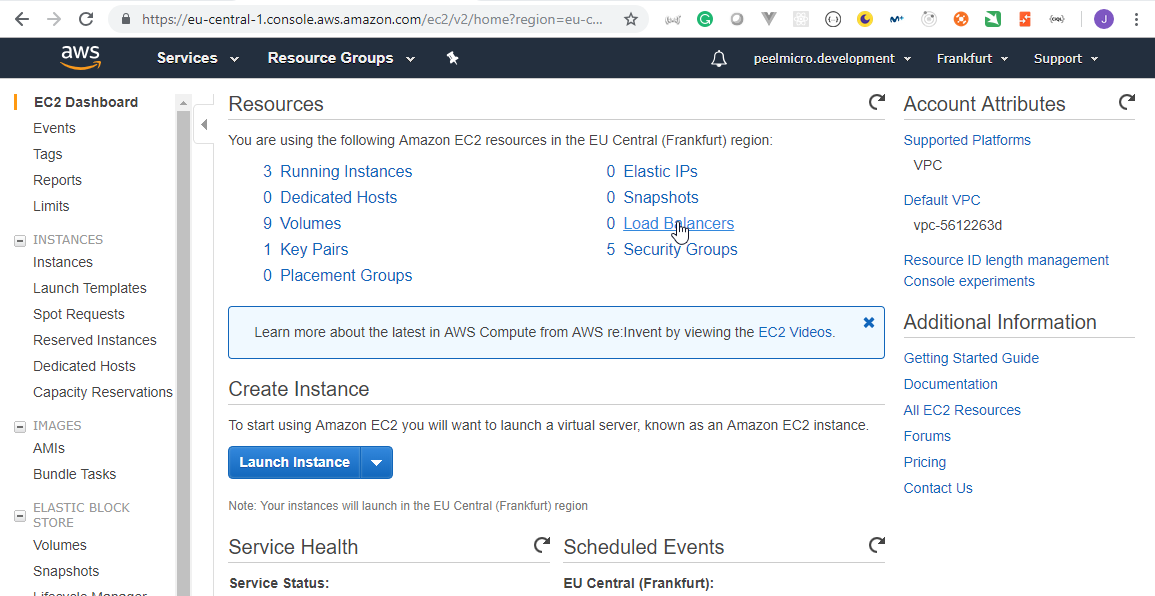

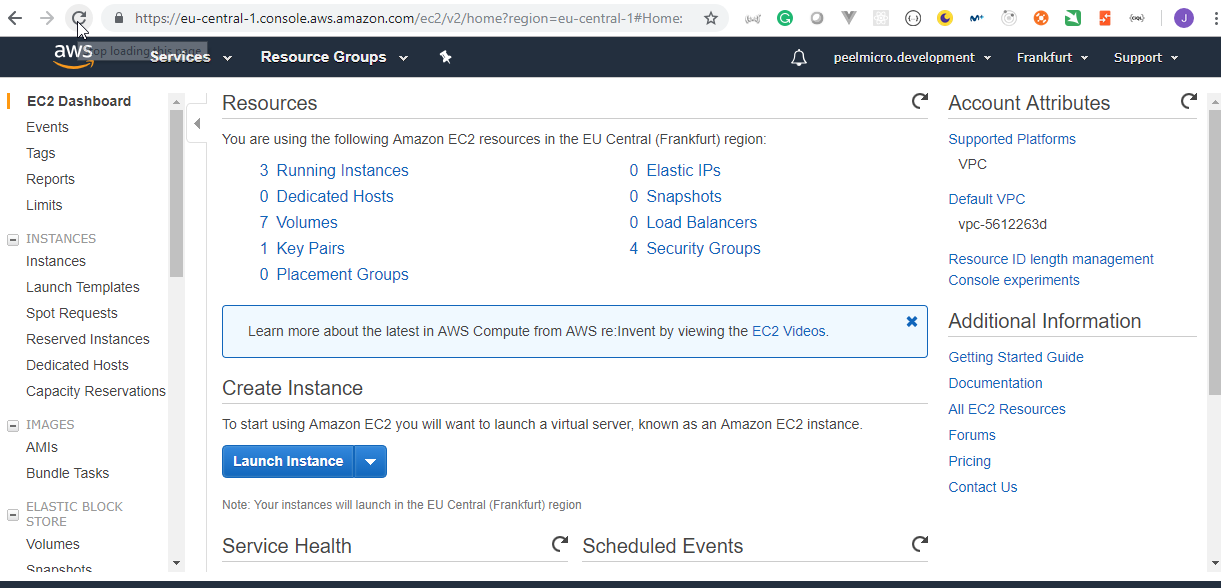

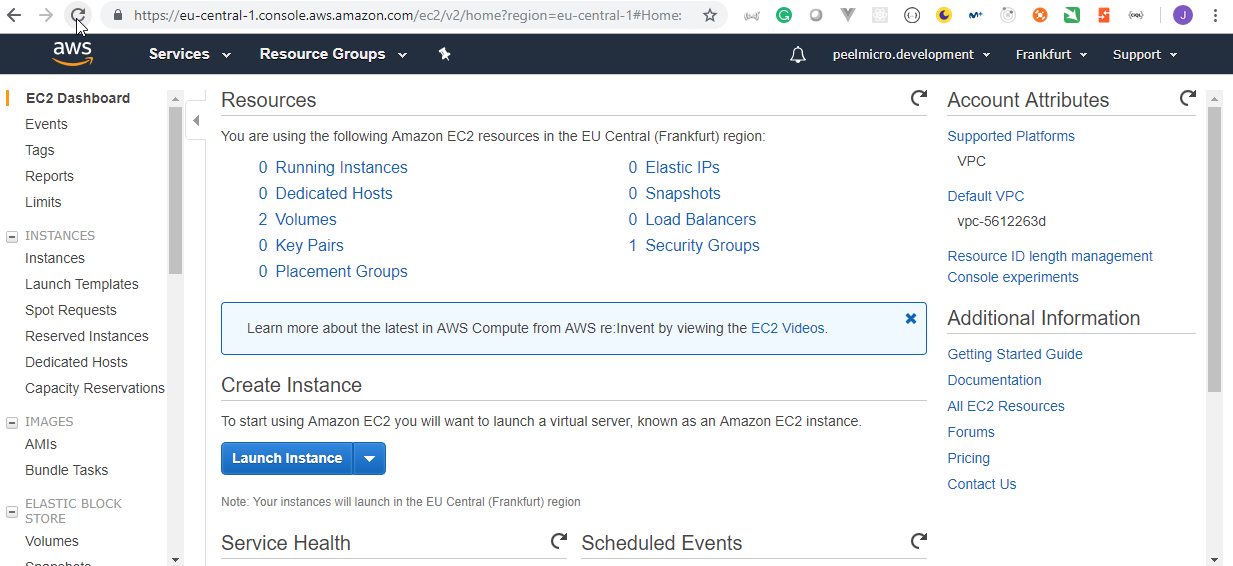

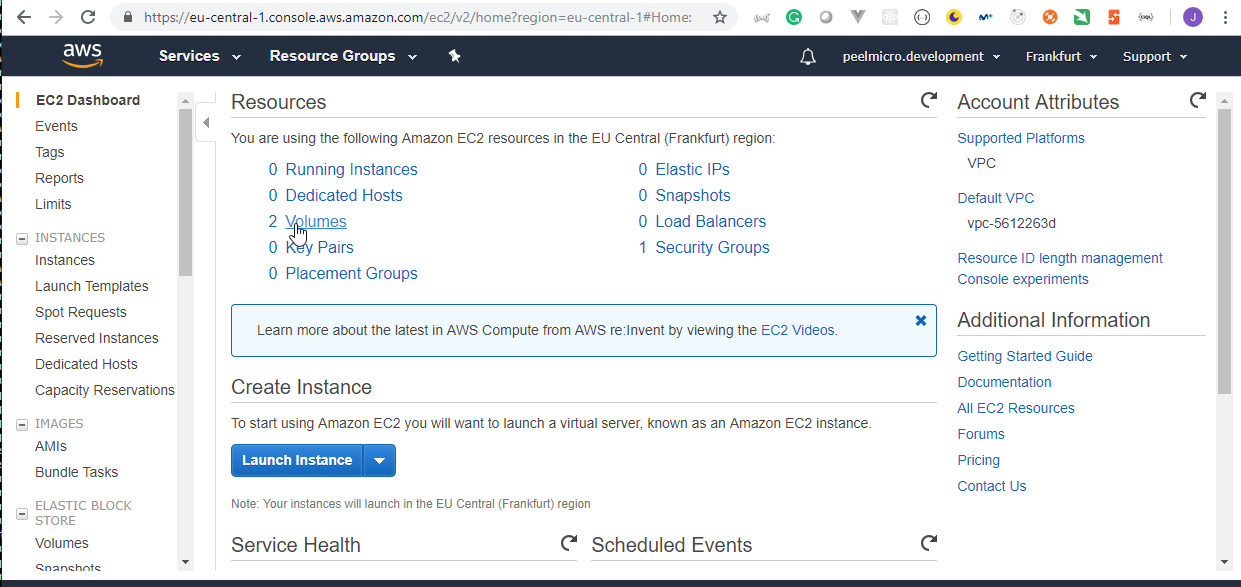

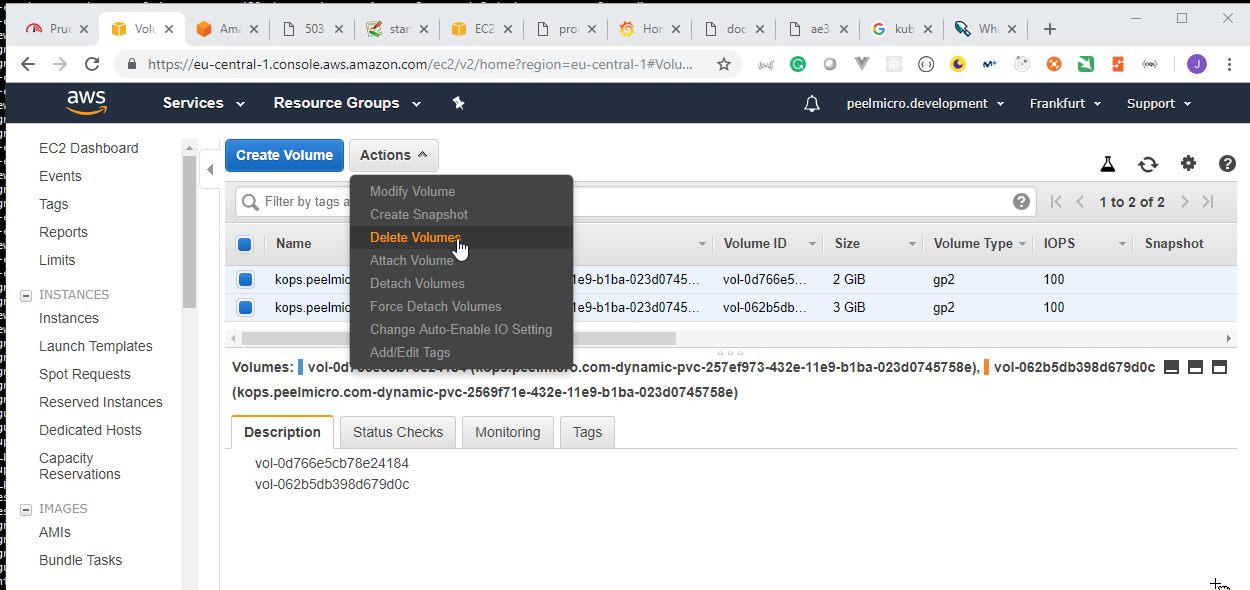

2. Materials: Delete/destroy all the AWS resources every time you do not use them

Note: I assume that if you are going through this course during several days - You always destroy all resources in AWS.

It means that you stop your Kubernetes cluster every time you are not working on it. The easiest way is to do it via terraform cd /.../.../.../terraform_code ; terraform destroy # hit yes

Destroy/delete manually if terraform can't do that:

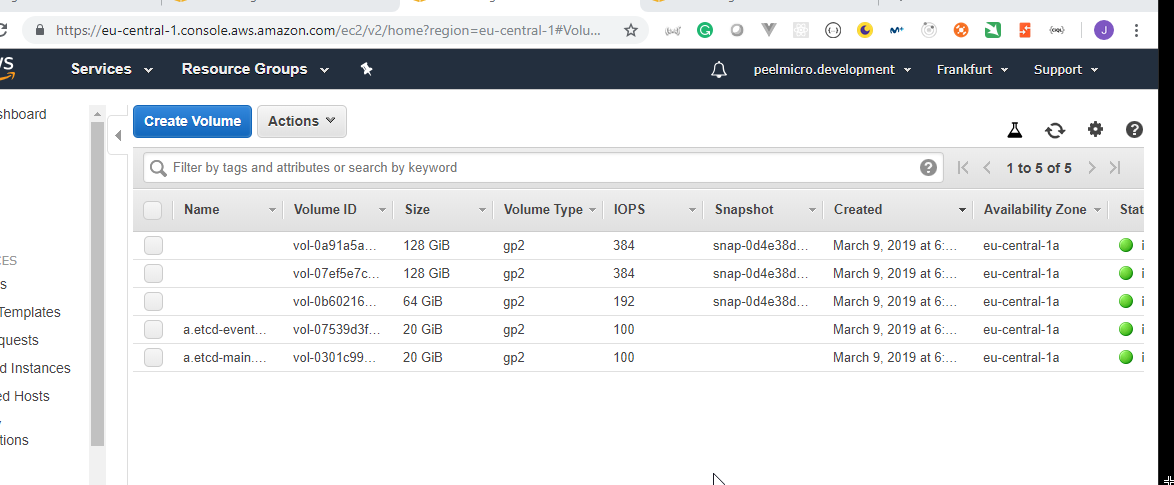

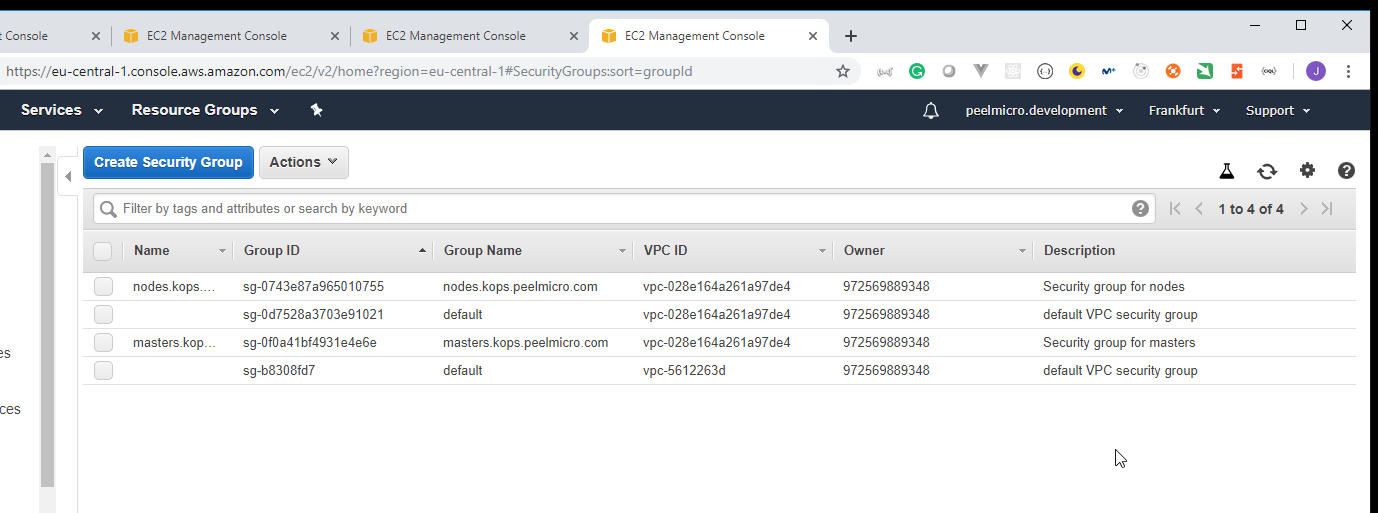

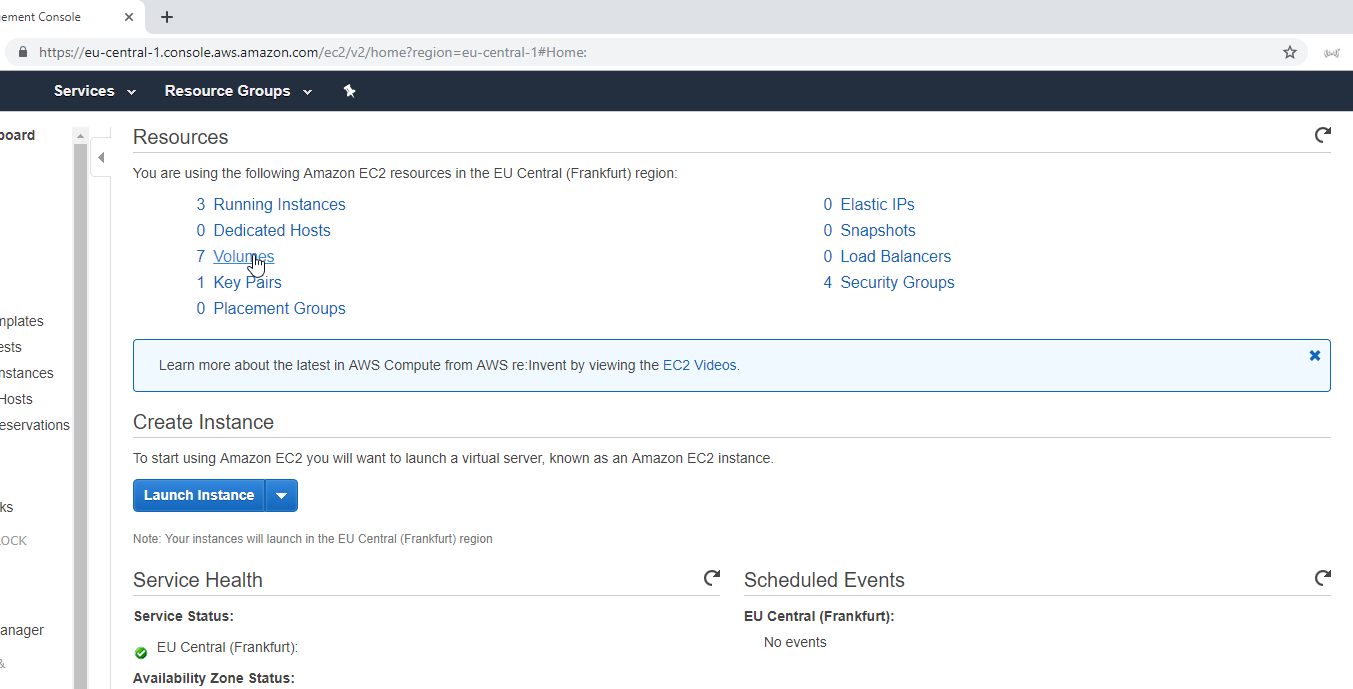

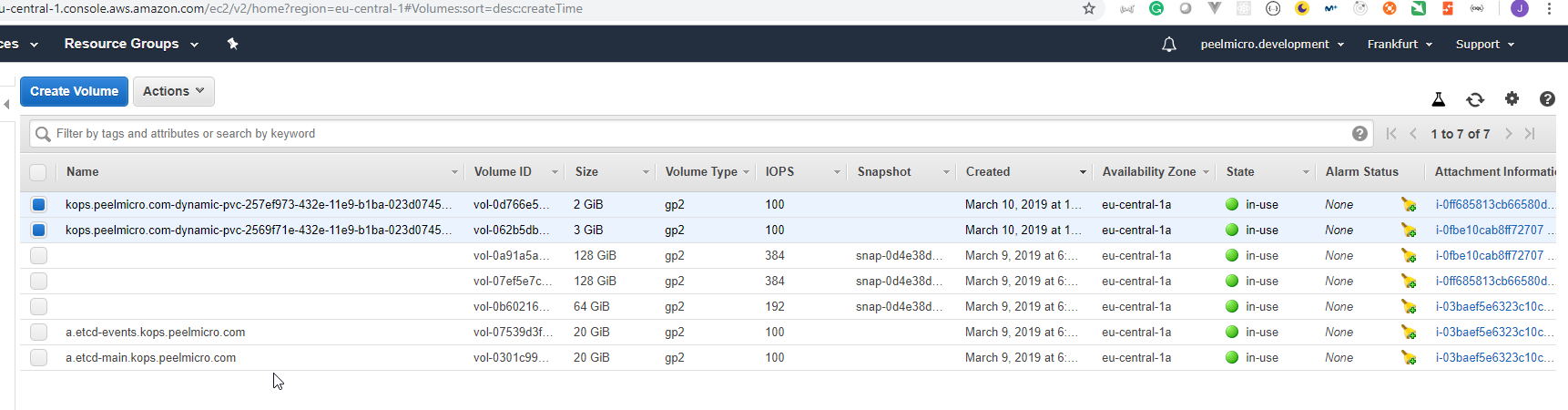

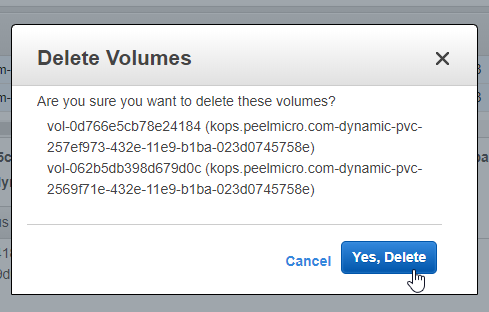

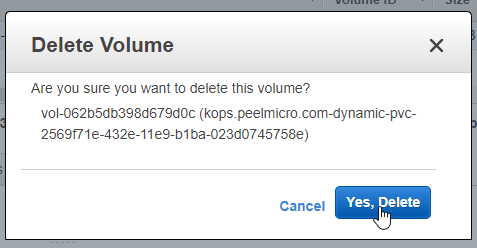

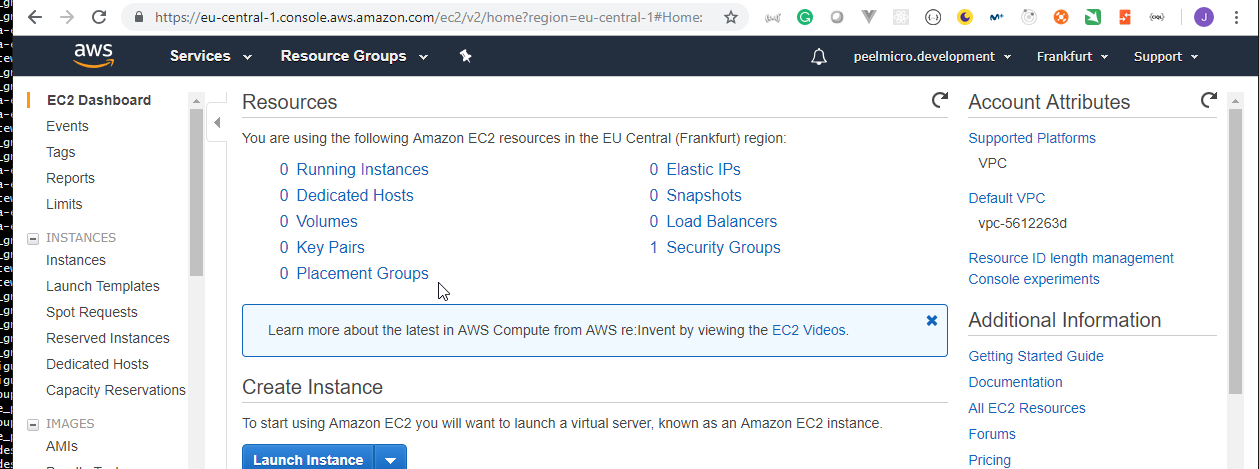

VOLUMES

LoadBalancer/s (if exists)

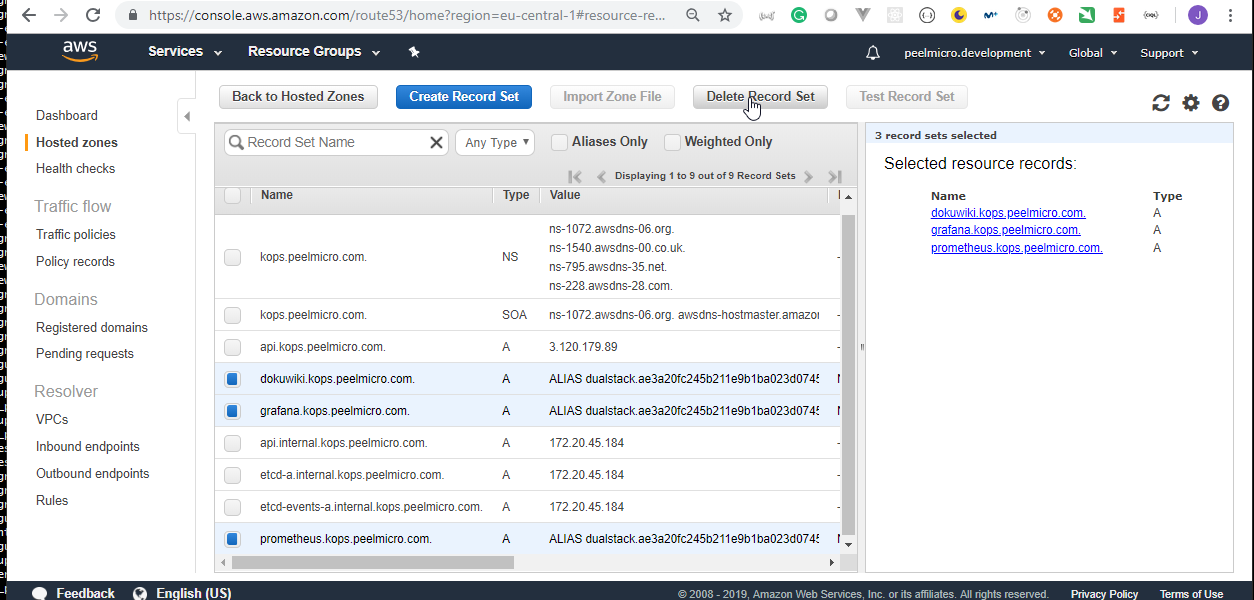

RecordSet/s (custom RecordSet/s)

EC2 instances

network resources

...

Except:

S3 bucket (delete once you do not want to use this free 1 YEAR account anymore, or you are done with this course.)

Hosted Zone (delete once you do not want to use this free 1 YEAR account anymore, or you are done with this course.)

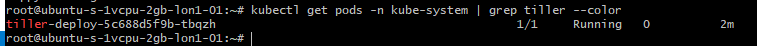

Please do not forget redeploy tiller pod by using of this commands every time you are starting your Kubernetes cluster.

# Start your Kubernetes cluster

cd /.../.../.../terraform_code

terraform apply

# Crete service account && initiate tiller pod in your Kubernetes cluster

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

# kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

helm init --service-account tiller --upgrade

3. How to start kubernetes cluster on AWS

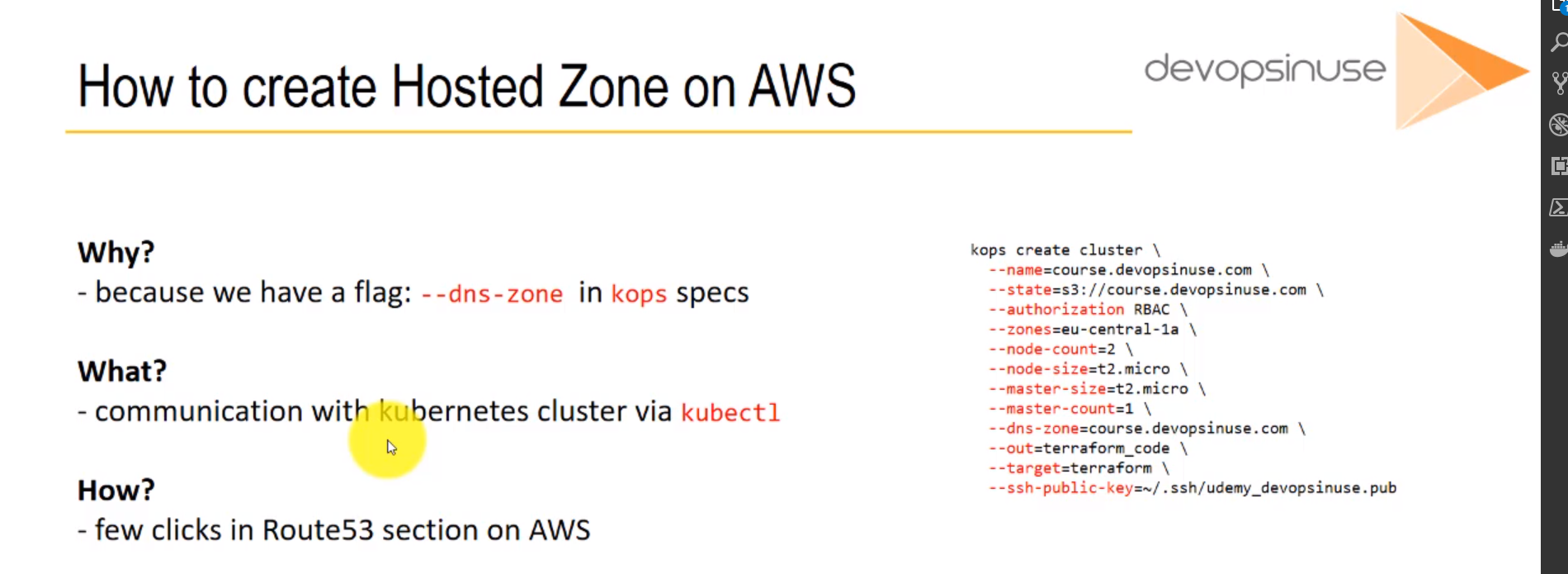

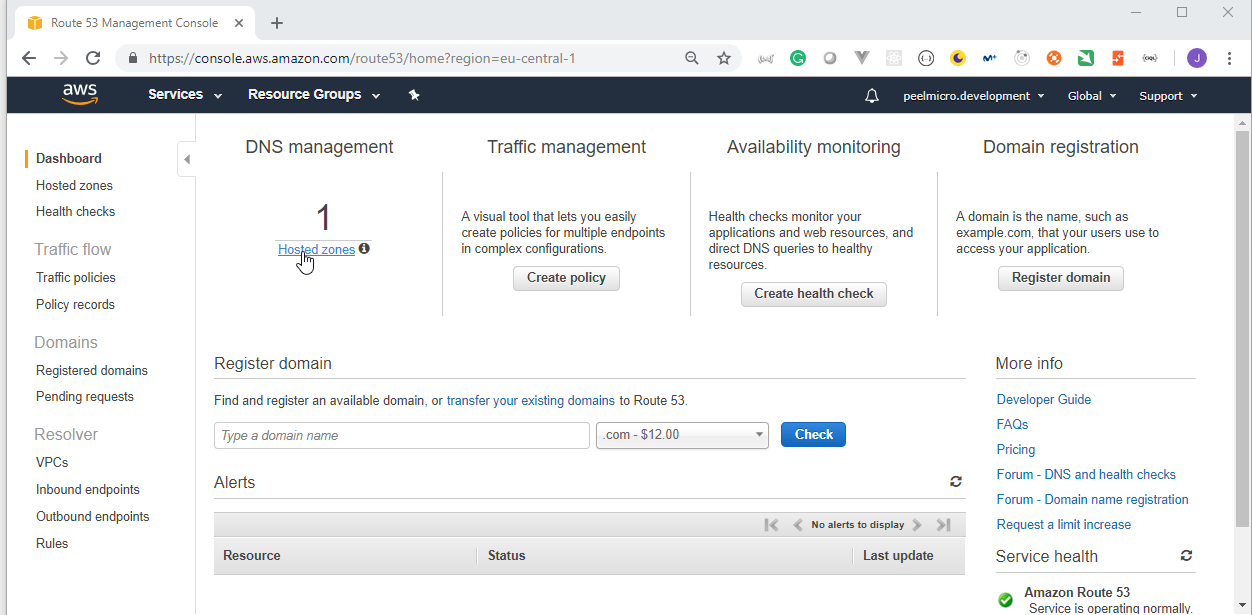

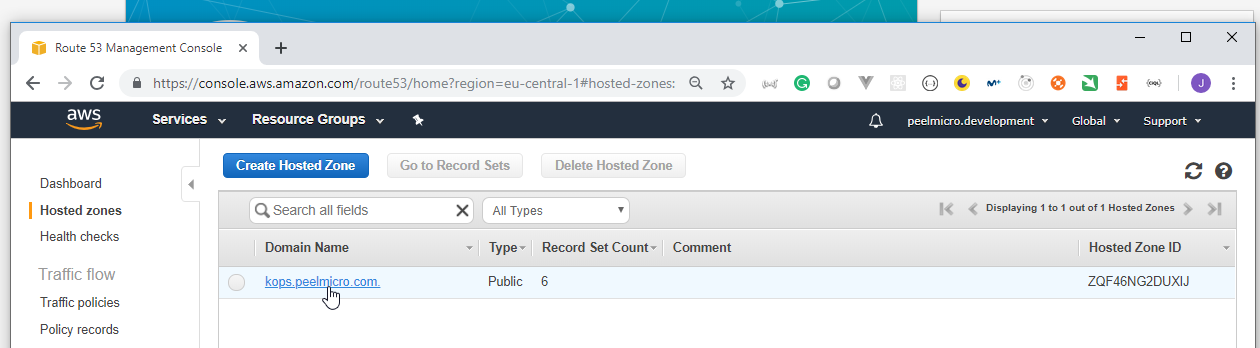

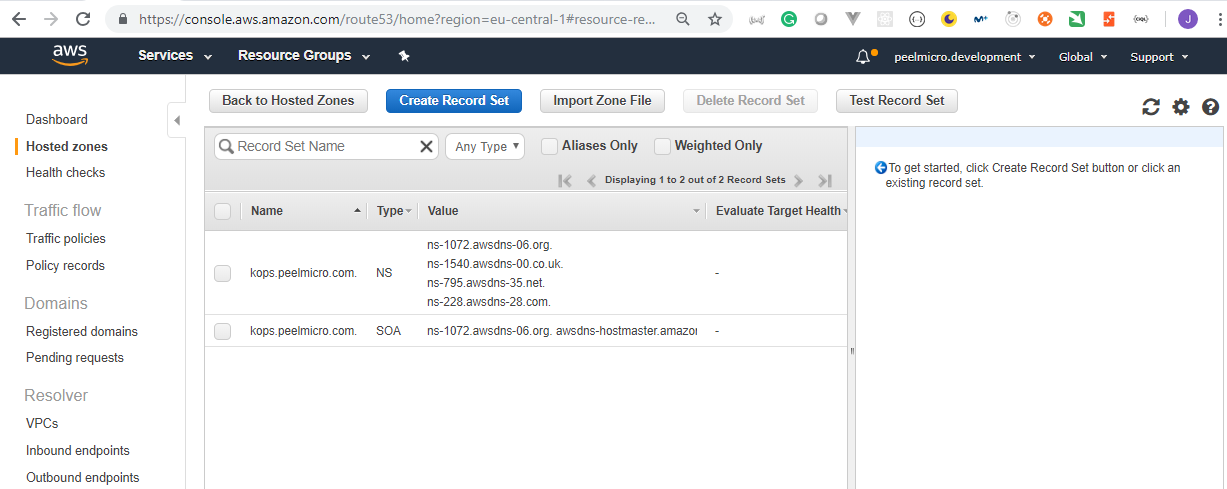

4. How to create Hosted Zone on AWS

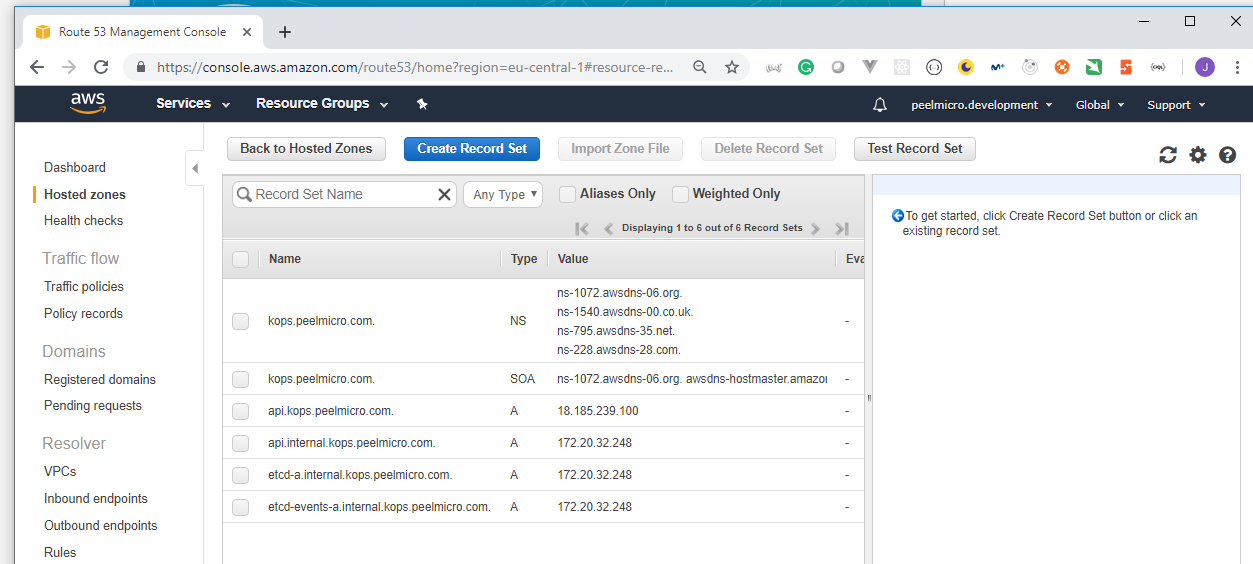

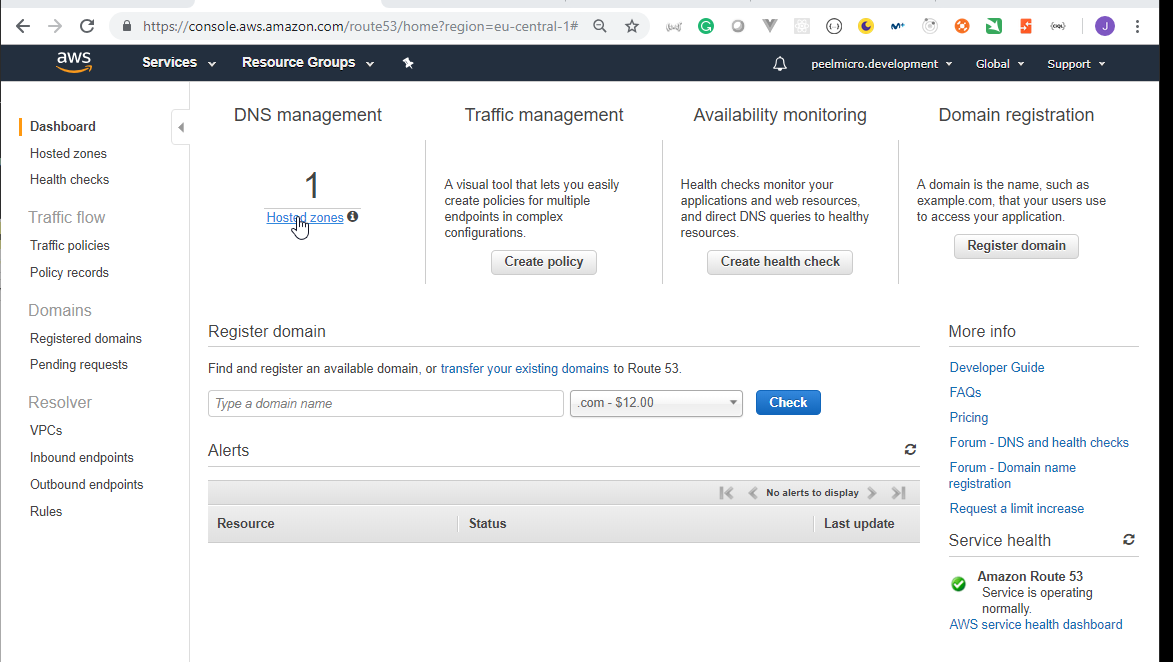

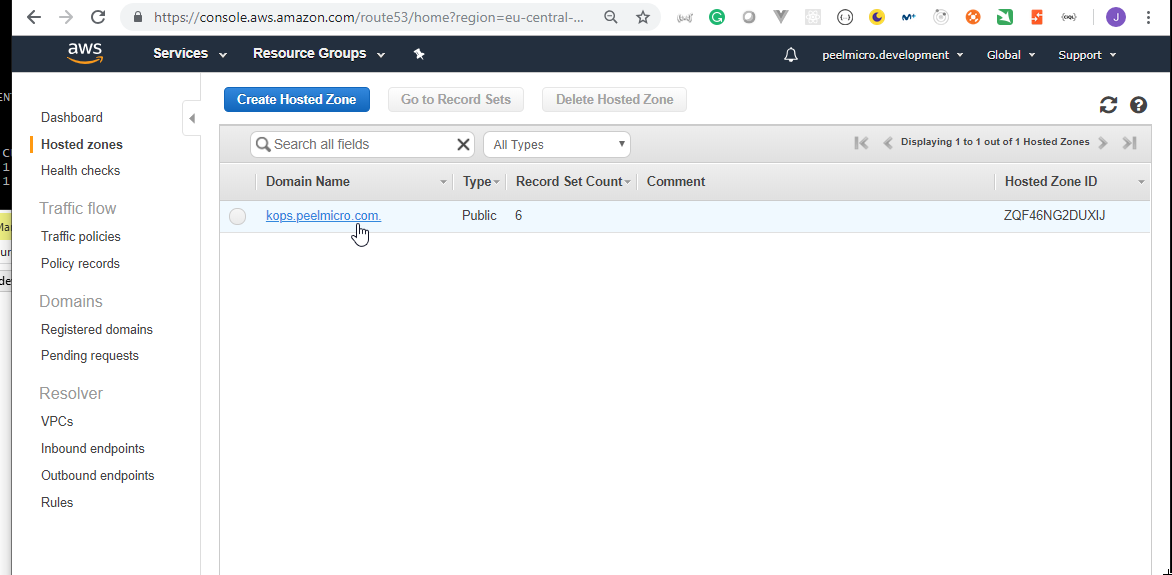

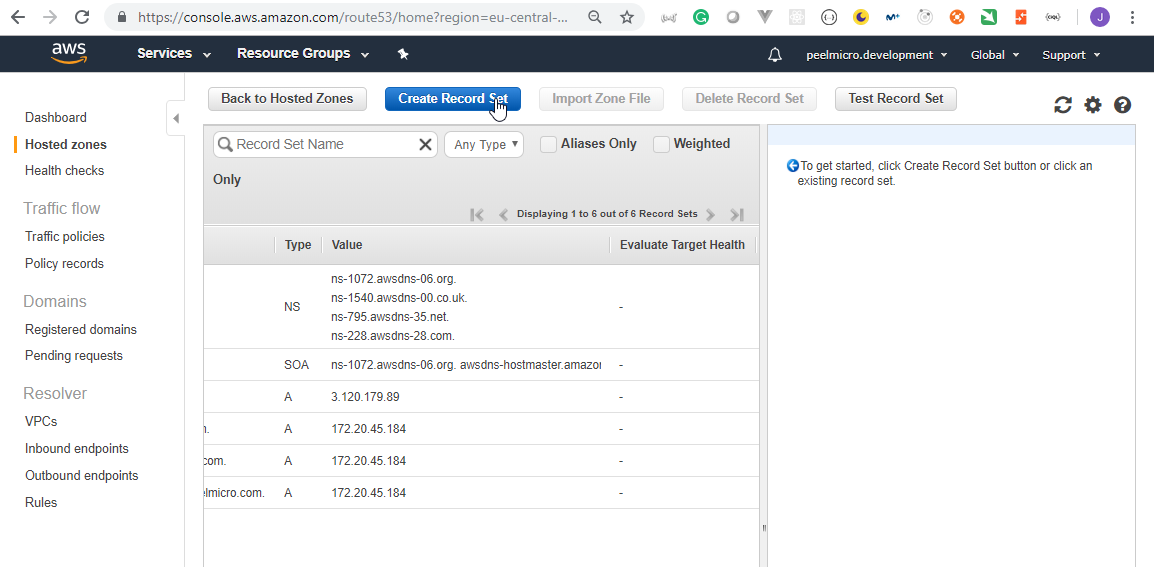

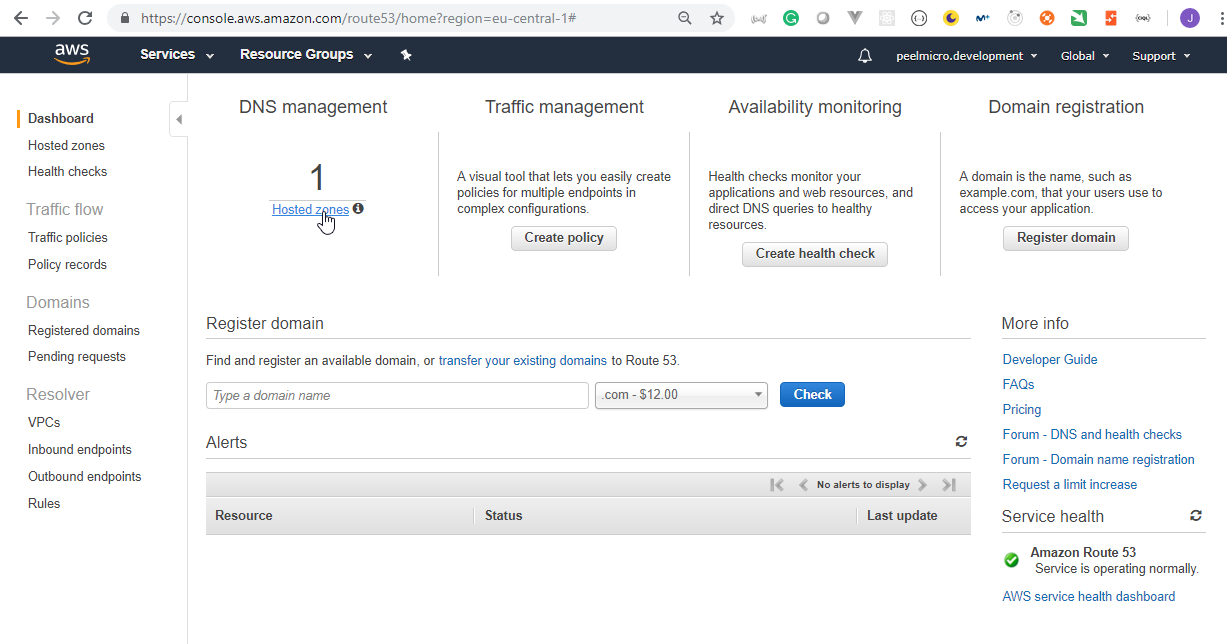

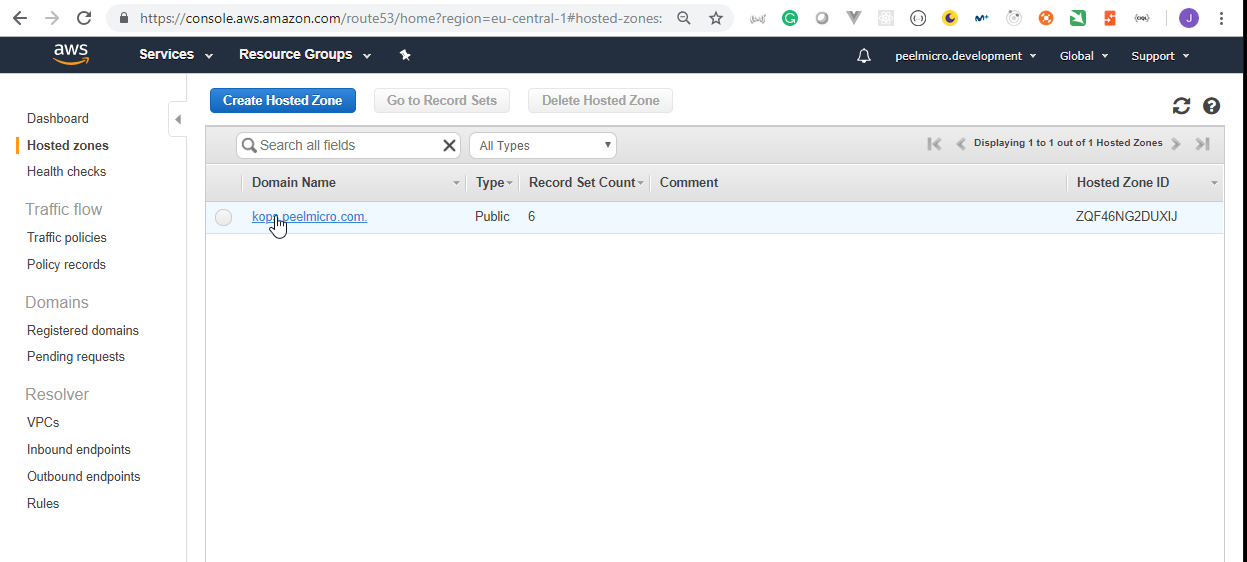

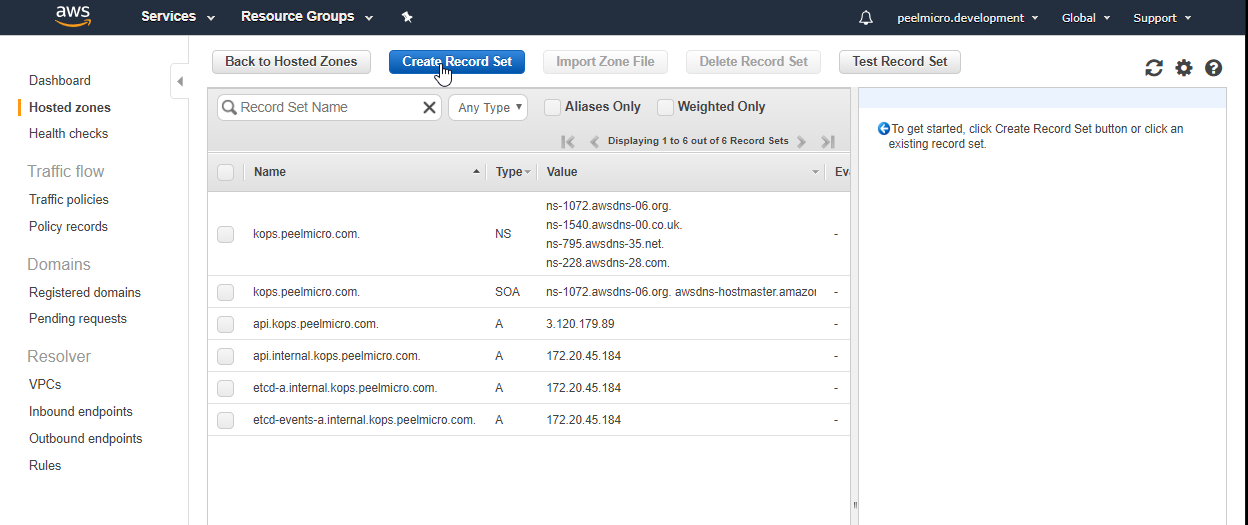

Hosted Zoneis explained in 16. How to use kops and create Kubernetes cluster (Continue) - Why hosted zone chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.Go to AWS Route 53 Console

HowToCreateHostedZoneOnAWS5

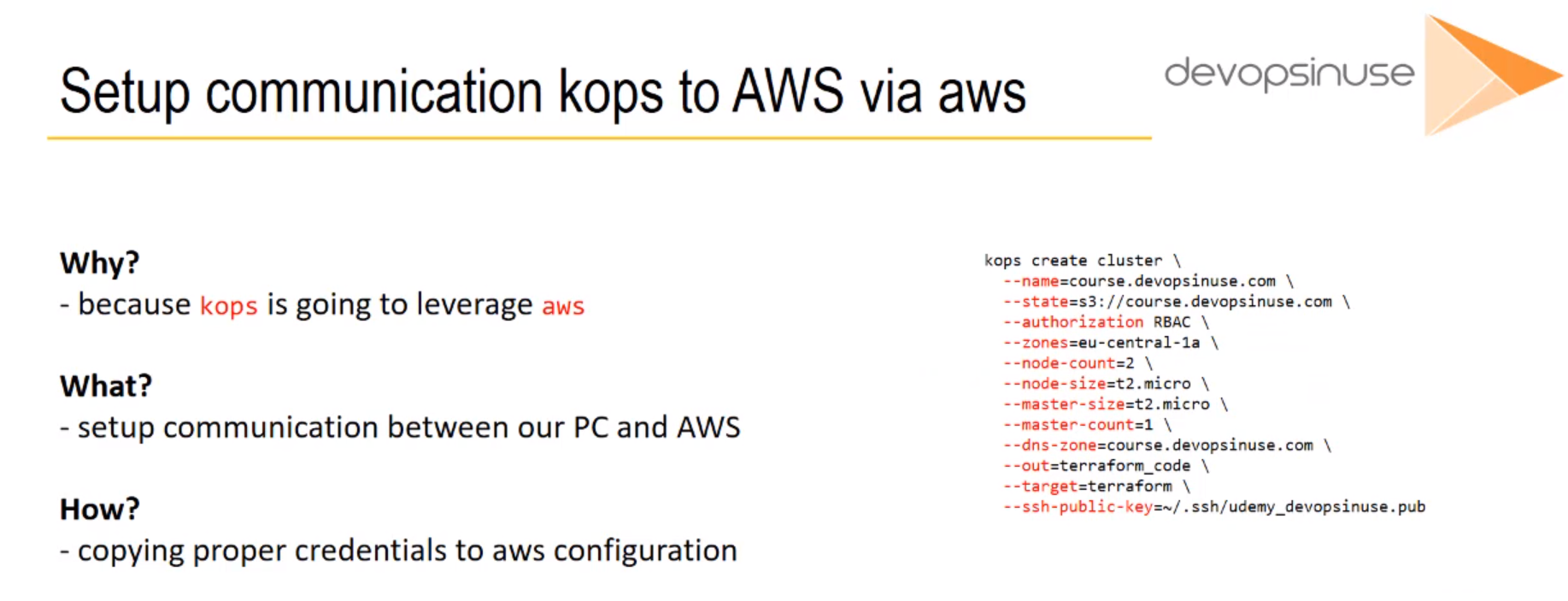

5. How to setup communication kops to AWS via aws

Installing aws utilityis explained in 4. Install aws utility chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.We can ensure it is working by puting the

awscommand:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# aws

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

aws: error: the following arguments are required: command

- We can ensure the utility is configured properly by executing the following command:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# aws ec2 describe-instances

{

"Reservations": []

}

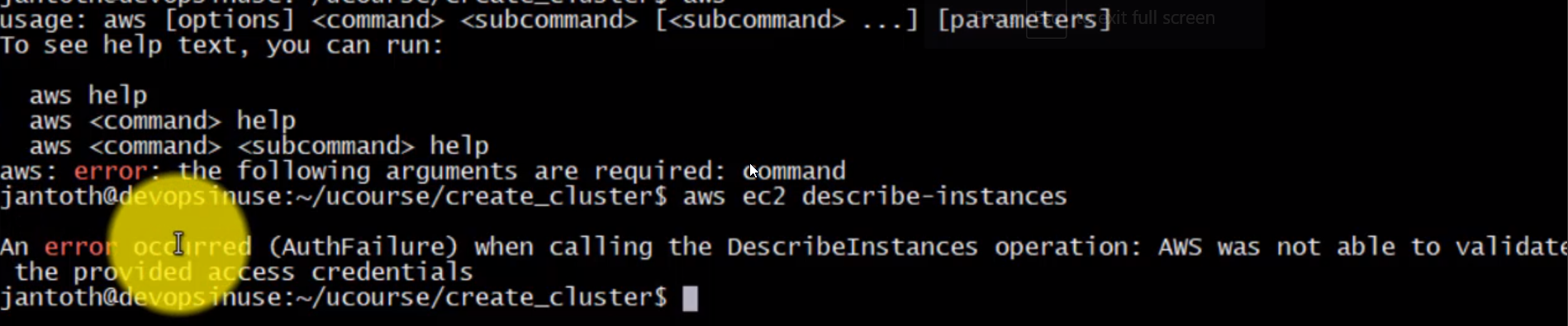

- If it were not properly configured something like this should be shown:

HowToSetupCommunicationKopsToAwsViaAws3

HowToSetupCommunicationKopsToAwsViaAws4

HowToSetupCommunicationKopsToAwsViaAws3

HowToSetupCommunicationKopsToAwsViaAws4

6. Materials: How to install KOPS binary

Simple shell function for kops installation

Kubernetes documentation: https://kubernetes.io/docs/getting-started-guides/kops/

Copy and paste this code:

function install_kops {

if [ -z $(which kops) ]

then

curl -LO https://github.com/kubernetes/kops/releases/download/$(curl -s https://api.github.com/repos/kubernetes/kops/releases/latest | grep tag_name | cut -d '"' -f 4)/kops-linux-amd64

chmod +x kops-linux-amd64

mv kops-linux-amd64 /usr/local/bin/kops

else

echo "kops is most likely installed"

fi

}

install_kops

Hit enter and kops binary should be automatically installed to your Linux machine.

Install kops on MacOS:

curl -OL https://github.com/kubernetes/kops/releases/download/1.8.0/kops-darwin-amd64

chmod +x kops-darwin-amd64

mv kops-darwin-amd64 /usr/local/bin/kops

# you can also install using Homebrew

brew update && brew install kops

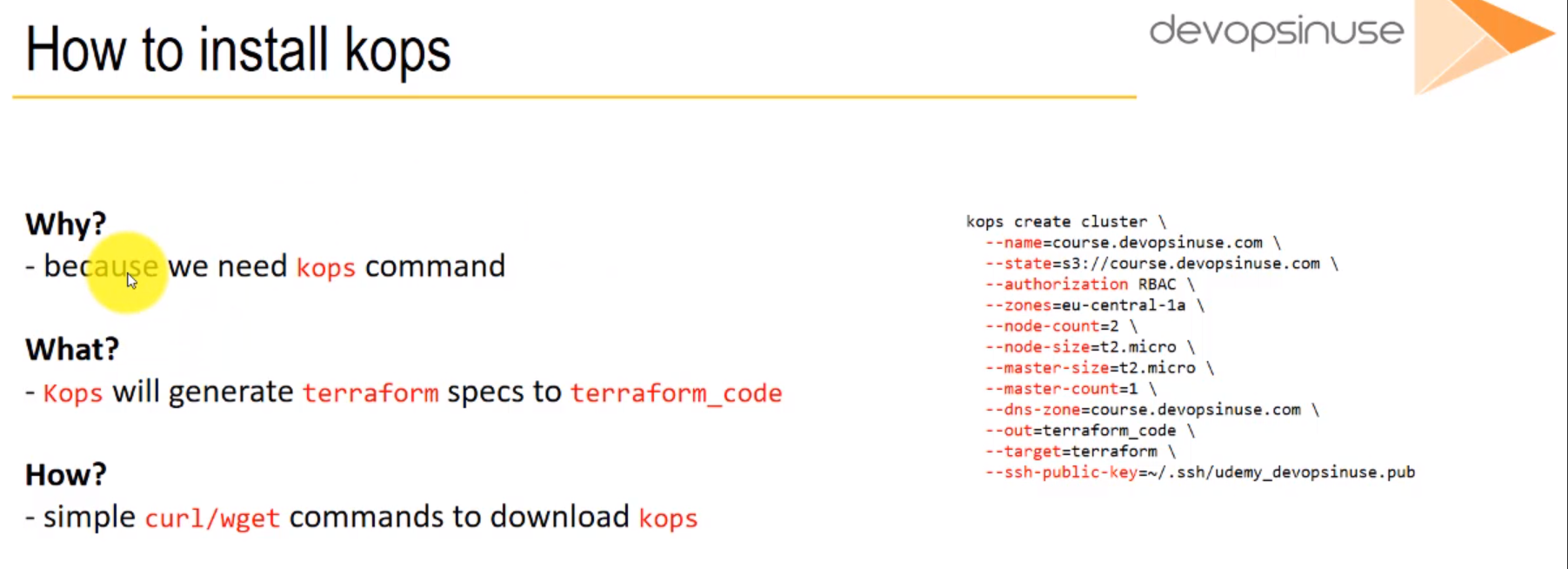

7. How to install kops

Installing kopsis explained in 9. Install kops chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.We can check if we have already installed kops by using the following command:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# which kops

/usr/local/bin/kops

- We can run the

kopscommand to ensure it is working:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops

kops is Kubernetes ops.

kops is the easiest way to get a production grade Kubernetes cluster up and running. We like to think of it as kubectl for clusters.

kops helps you create, destroy, upgrade and maintain production-grade, highly available, Kubernetes clusters from the command line. AWS (Amazon Web Services) is currently officially supported, with GCE and VMware vSphere in alpha support.

Usage:

kops [command]

Available Commands:

completion Output shell completion code for the given shell (bash or zsh).

create Create a resource by command line, filename or stdin.

delete Delete clusters,instancegroups, or secrets.

describe Describe a resource.

edit Edit clusters and other resources.

export Export configuration.

get Get one or many resources.

help Help about any command

import Import a cluster.

replace Replace cluster resources.

rolling-update Rolling update a cluster.

set Set fields on clusters and other resources.

toolbox Misc infrequently used commands.

update Update a cluster.

upgrade Upgrade a kubernetes cluster.

validate Validate a kops cluster.

version Print the kops version information.

Flags:

--alsologtostderr log to standard error as well as files

--config string yaml config file (default is $HOME/.kops.yaml)

-h, --help help for kops

--log_backtrace_at traceLocation when logging hits line file:N, emit a stack trace (default :0)

--log_dir string If non-empty, write log files in this directory

--logtostderr log to standard error instead of files (default false)

--name string Name of cluster. Overrides KOPS_CLUSTER_NAME environment variable

--state string Location of state storage (kops 'config' file). Overrides KOPS_STATE_STORE environment variable

--stderrthreshold severity logs at or above this threshold go to stderr (default 2)

-v, --v Level log level for V logs

--vmodule moduleSpec comma-separated list of pattern=N settings for file-filtered logging

Use "kops [command] --help" for more information about a command.

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

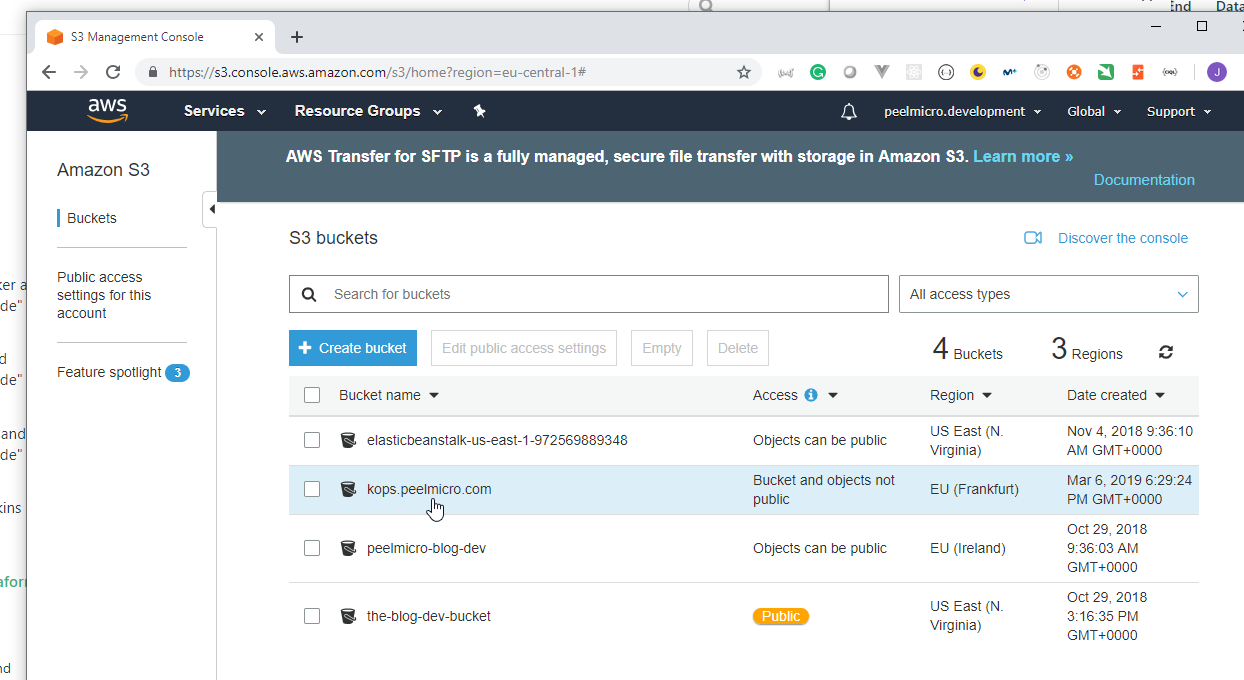

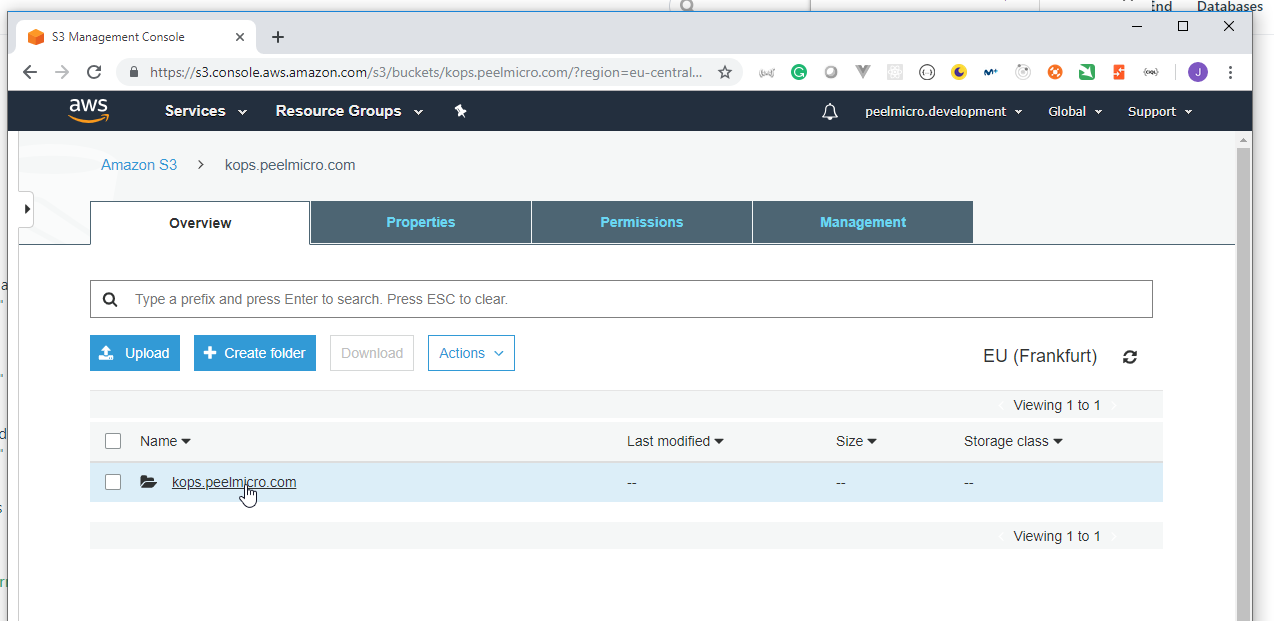

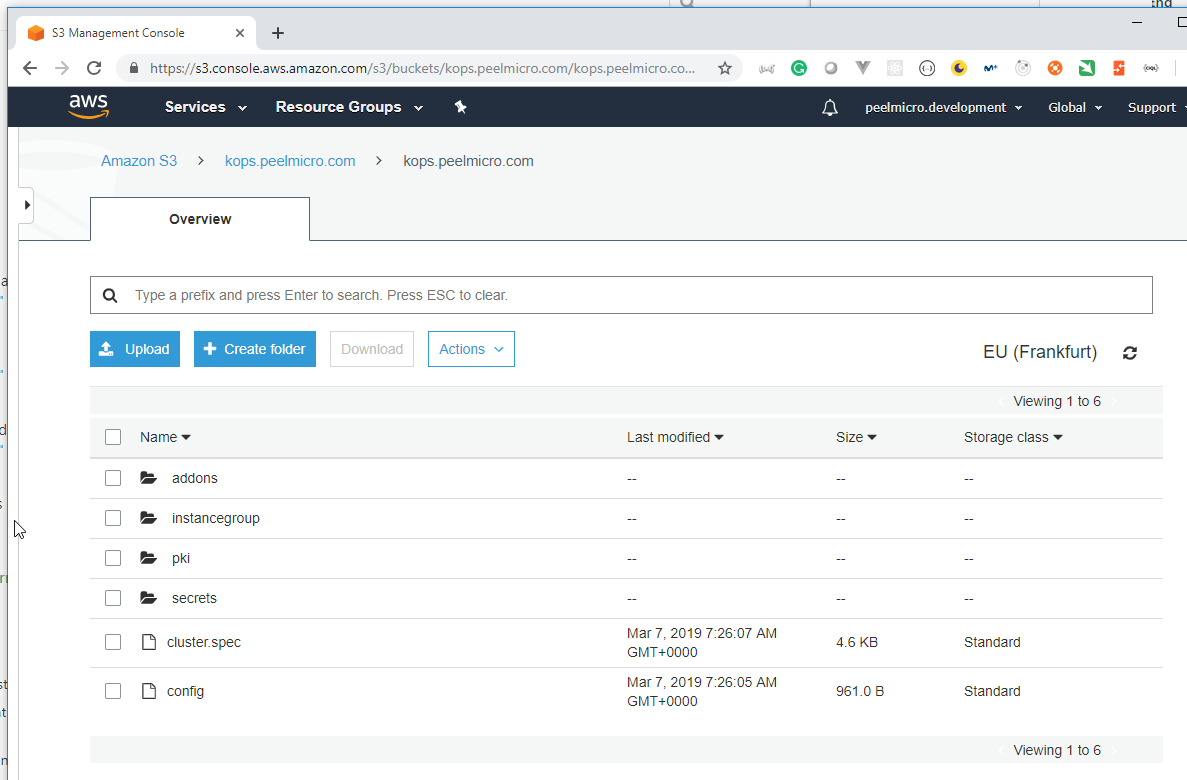

8. How to create S3 bucket in AWS

HowToCreateS3BucketInAws

Creating the S3 bucketis explained in the 15. How to use kops and create Kubernetes cluster chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

9. Materials: How to install TERRAFORM binary

Here is the bash function to install terrafrom:

TERRAFORM_ZIP_FILE=terraform_0.11.7_linux_amd64.zip

TERRAFORM=https://releases.hashicorp.com/terraform/0.11.7

TERRAFORM_BIN=terraform

function install_terraform {

if [ -z $(which $TERRAFORM_BIN) ]

then

wget ${TERRAFORM}/${TERRAFORM_ZIP_FILE}

unzip ${TERRAFORM_ZIP_FILE}

sudo mv ${TERRAFORM_BIN} /usr/local/bin/${TERRAFORM_BIN}

rm -rf ${TERRAFORM_ZIP_FILE}

else

echo "Terraform is most likely installed"

fi

}

install_terraform

Alternatively:

Install terraform on MacOS:

1) Download ZIP file

wget https://releases.hashicorp.com/terraform/0.11.7/terraform_0.11.7_darwin_amd64.zip

2) unzip this ZIP package

3) copy it to your executable path

Install terraform on Windows:

1) Download ZIP file

wget https://releases.hashicorp.com/terraform/0.11.7/terraform_0.11.7_windows_amd64.zip

2) unzip this ZIP package

3) copy it to your executable path

10. How to install Terraform binary

Installing terraformis explained in the 11. Install terraform chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.Check if

terraformis already installed.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# which terraform

/usr/local/bin/terraform

- Ensure it can be executed.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# terraform

Usage: terraform [-version] [-help] <command> [args]

The available commands for execution are listed below.

The most common, useful commands are shown first, followed by

less common or more advanced commands. If you're just getting

started with Terraform, stick with the common commands. For the

other commands, please read the help and docs before usage.

Common commands:

apply Builds or changes infrastructure

console Interactive console for Terraform interpolations

destroy Destroy Terraform-managed infrastructure

env Workspace management

fmt Rewrites config files to canonical format

get Download and install modules for the configuration

graph Create a visual graph of Terraform resources

import Import existing infrastructure into Terraform

init Initialize a Terraform working directory

output Read an output from a state file

plan Generate and show an execution plan

providers Prints a tree of the providers used in the configuration

push Upload this Terraform module to Atlas to run

refresh Update local state file against real resources

show Inspect Terraform state or plan

taint Manually mark a resource for recreation

untaint Manually unmark a resource as tainted

validate Validates the Terraform files

version Prints the Terraform version

workspace Workspace management

All other commands:

debug Debug output management (experimental)

force-unlock Manually unlock the terraform state

state Advanced state management

11. Materials: How to install KUBECTL binary

How to install kubectl binary to Linux like OS

Copy and paste this code to your command line:

KUBECTL_BIN=kubectl

function install_kubectl {

if [ -z $(which $KUBECTL_BIN) ]

then

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/$KUBECTL_BIN

chmod +x ${KUBECTL_BIN}

sudo mv ${KUBECTL_BIN} /usr/local/bin/${KUBECTL_BIN}

else

echo "Kubectl is most likely installed"

fi

}

Run this command:

install_kubectl

By now you should be able to use kubectl command.

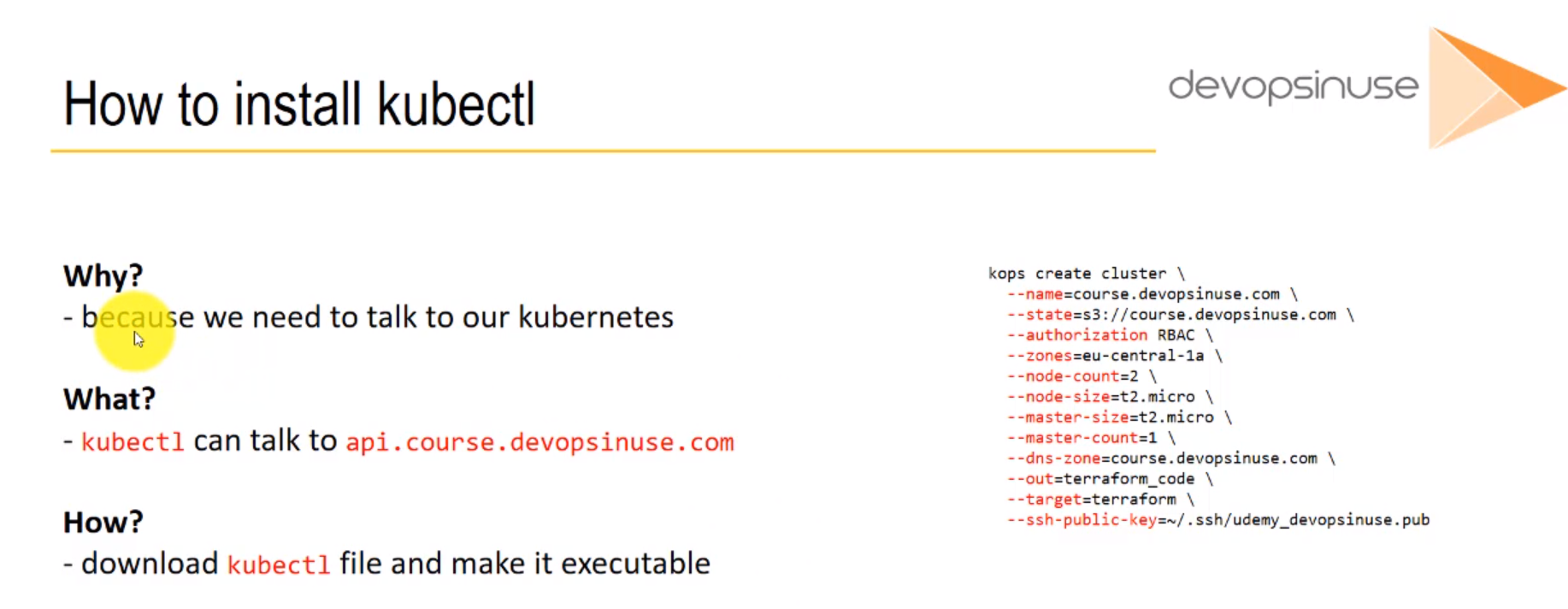

12. How to install Kubectl binary

Installing Kubectlis explained in the 7. Install kubectl chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.Check it it is already installed:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# which kubectl

/snap/bin/kubectl

- Ensure it works correctly

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Documentation of resources

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a Deployment, ReplicaSet, Replication Controller, or Job

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory/Storage) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

Advanced Commands:

diff Diff live version against would-be applied version

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource using strategic merge patch

replace Replace a resource by filename or stdin

wait Experimental: Wait for a specific condition on one or many resources.

convert Convert config files between different API versions

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins.

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

13. Materials: How to start Kubernetes cluster

How to start Kubernetes cluster by using Kops and Terraform

SSH_KEYS=~/.ssh/udemy_devopsinuse

if [ ! -f "$SSH_KEYS" ]

then

echo -e "\nCreating SSH keys ..."

ssh-keygen -t rsa -C "udemy.course" -N '' -f ~/.ssh/udemy_devopsinuse

else

echo -e "\nSSH keys are already in place!"

fi

echo -e "\nCreating kubernetes cluster ...\n"

kops create cluster \

--name=course.<example>.com \

--state=s3://course.<example>.com \

--authorization RBAC \

--zones=<define-zone> \

--node-count=2 \

--node-size=t2.micro \

--master-size=t2.micro \

--master-count=1 \

--dns-zone=course.<example>.com \

--out=terraform_code \

--target=terraform \

--ssh-public-key=~/.ssh/udemy_devopsinuse.pub

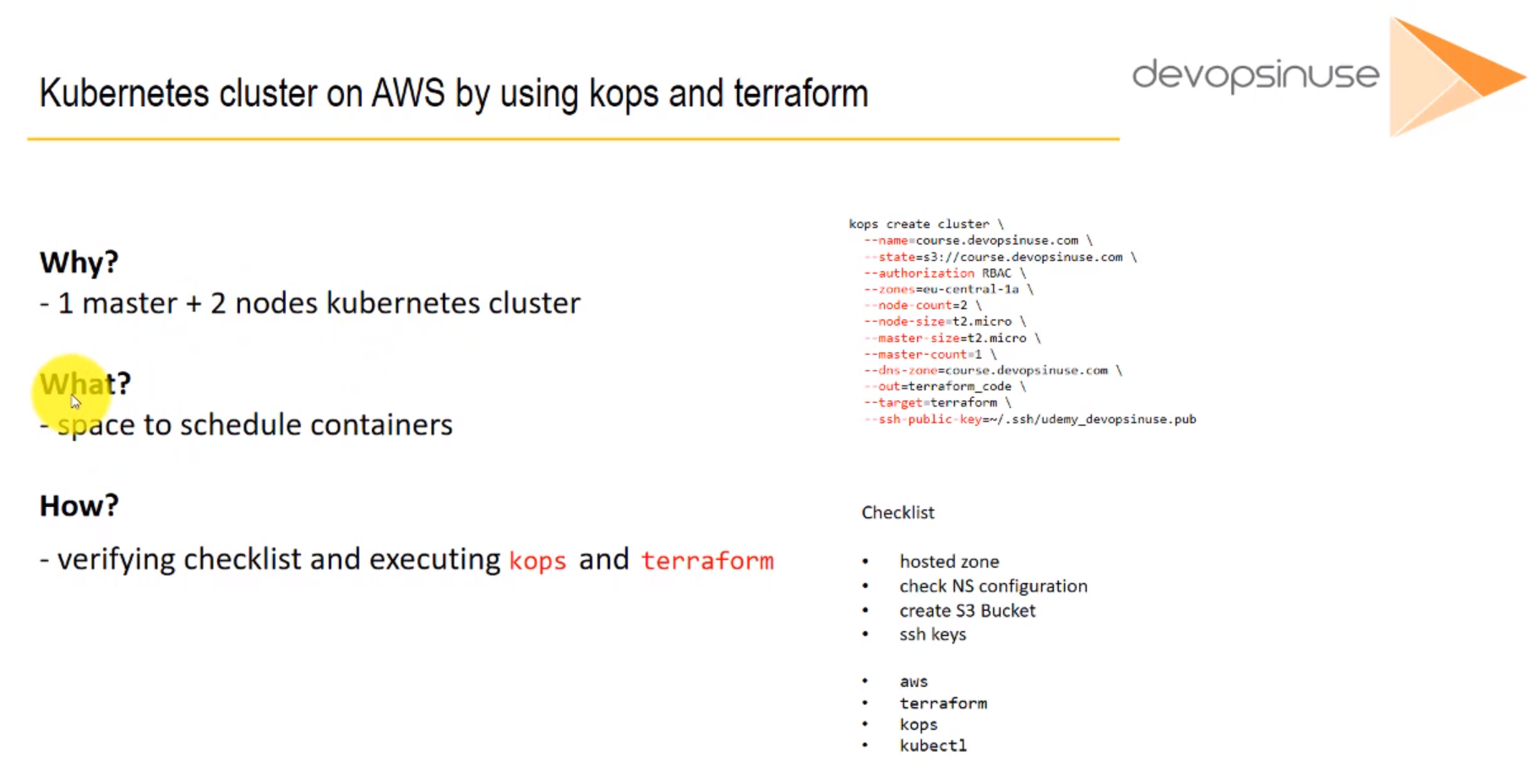

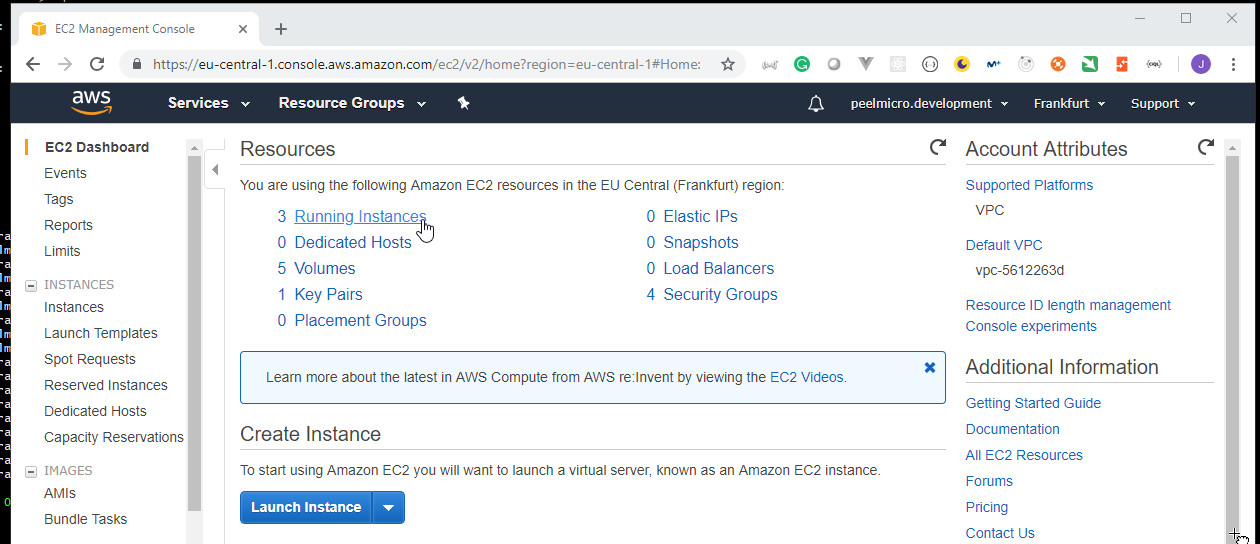

14. How to lunch kubernetes cluster on AWS by using kops and terraform

Lunching kubernetes cluster on AWSis explained in the 17. How to use kops and create Kubernetes cluster (Demo) chapter of the Learn Devops Kubernetes deployment by kops and terraform Udemy course.

- Ensure the keys are already created.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# ls ~/.ssh

authorized_keys known_hosts udemy_devopsinuse udemy_devopsinuse.pub

- Ensure the

kops_cluster.shdocument is already created.

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster# ls

devopsinuse_terraform kops_cluster.sh

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster# cat kops_cluster.sh

kops create cluster \

--name=kops.peelmicro.com \

--state=s3://kops.peelmicro.com \

--authorization RBAC \

--zones=eu-central-1a \

--node-count=2 \

--node-size=t2.micro \

--master-size=t2.micro \

--master-count=1 \

--dns-zone=kops.peelmicro.com \

--out=devopsinuse_terraform \

--target=terraform \

--ssh-public-key=~/.ssh/udemy_devopsinuse.pub

- Ensure the

devopsinuse_terraformfolder is already created and it has thekuvernetes.tfinside.

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster# ls

devopsinuse_terraform kops_cluster.sh

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster# ls devopsinuse_terraform/

data kubernetes.tf terraform.tfstate terraform.tfstate.backup

- Execute

terraform plan

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster/devopsinuse_terraform# terraform plan

Refreshing Terraform state in-memory prior to plan...

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

------------------------------------------------------------------------

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

+ aws_autoscaling_group.master-eu-central-1a-masters-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zones.#: <computed>

default_cooldown: <computed>

desired_capacity: <computed>

enabled_metrics.#: "8"

enabled_metrics.119681000: "GroupStandbyInstances"

enabled_metrics.1940933563: "GroupTotalInstances"

enabled_metrics.308948767: "GroupPendingInstances"

enabled_metrics.3267518000: "GroupTerminatingInstances"

enabled_metrics.3394537085: "GroupDesiredCapacity"

enabled_metrics.3551801763: "GroupInServiceInstances"

enabled_metrics.4118539418: "GroupMinSize"

enabled_metrics.4136111317: "GroupMaxSize"

force_delete: "false"

health_check_grace_period: "300"

health_check_type: <computed>

launch_configuration: "${aws_launch_configuration.master-eu-central-1a-masters-kops-peelmicro-com.id}"

load_balancers.#: <computed>

max_size: "1"

metrics_granularity: "1Minute"

min_size: "1"

name: "master-eu-central-1a.masters.kops.peelmicro.com"

protect_from_scale_in: "false"

service_linked_role_arn: <computed>

tag.#: "4"

tag.1601041186.key: "k8s.io/role/master"

tag.1601041186.propagate_at_launch: "true"

tag.1601041186.value: "1"

tag.296694174.key: "k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup"

tag.296694174.propagate_at_launch: "true"

tag.296694174.value: "master-eu-central-1a"

tag.3218595536.key: "Name"

tag.3218595536.propagate_at_launch: "true"

tag.3218595536.value: "master-eu-central-1a.masters.kops.peelmicro.com"

tag.681420748.key: "KubernetesCluster"

tag.681420748.propagate_at_launch: "true"

tag.681420748.value: "kops.peelmicro.com"

target_group_arns.#: <computed>

vpc_zone_identifier.#: <computed>

wait_for_capacity_timeout: "10m"

+ aws_autoscaling_group.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zones.#: <computed>

default_cooldown: <computed>

desired_capacity: <computed>

enabled_metrics.#: "8"

enabled_metrics.119681000: "GroupStandbyInstances"

enabled_metrics.1940933563: "GroupTotalInstances"

enabled_metrics.308948767: "GroupPendingInstances"

enabled_metrics.3267518000: "GroupTerminatingInstances"

enabled_metrics.3394537085: "GroupDesiredCapacity"

enabled_metrics.3551801763: "GroupInServiceInstances"

enabled_metrics.4118539418: "GroupMinSize"

enabled_metrics.4136111317: "GroupMaxSize"

force_delete: "false"

health_check_grace_period: "300"

health_check_type: <computed>

launch_configuration: "${aws_launch_configuration.nodes-kops-peelmicro-com.id}"

load_balancers.#: <computed>

max_size: "2"

metrics_granularity: "1Minute"

min_size: "2"

name: "nodes.kops.peelmicro.com"

protect_from_scale_in: "false"

service_linked_role_arn: <computed>

tag.#: "4"

tag.1967977115.key: "k8s.io/role/node"

tag.1967977115.propagate_at_launch: "true"

tag.1967977115.value: "1"

tag.3438852489.key: "Name"

tag.3438852489.propagate_at_launch: "true"

tag.3438852489.value: "nodes.kops.peelmicro.com"

tag.681420748.key: "KubernetesCluster"

tag.681420748.propagate_at_launch: "true"

tag.681420748.value: "kops.peelmicro.com"

tag.859419842.key: "k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup"

tag.859419842.propagate_at_launch: "true"

tag.859419842.value: "nodes"

target_group_arns.#: <computed>

vpc_zone_identifier.#: <computed>

wait_for_capacity_timeout: "10m"

+ aws_ebs_volume.a-etcd-events-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zone: "eu-central-1a"

encrypted: "false"

iops: <computed>

kms_key_id: <computed>

size: "20"

snapshot_id: <computed>

tags.%: "5"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "a.etcd-events.kops.peelmicro.com"

tags.k8s.io/etcd/events: "a/a"

tags.k8s.io/role/master: "1"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

type: "gp2"

+ aws_ebs_volume.a-etcd-main-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zone: "eu-central-1a"

encrypted: "false"

iops: <computed>

kms_key_id: <computed>

size: "20"

snapshot_id: <computed>

tags.%: "5"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "a.etcd-main.kops.peelmicro.com"

tags.k8s.io/etcd/main: "a/a"

tags.k8s.io/role/master: "1"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

type: "gp2"

+ aws_iam_instance_profile.masters-kops-peelmicro-com

id: <computed>

arn: <computed>

create_date: <computed>

name: "masters.kops.peelmicro.com"

path: "/"

role: "masters.kops.peelmicro.com"

roles.#: <computed>

unique_id: <computed>

+ aws_iam_instance_profile.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

create_date: <computed>

name: "nodes.kops.peelmicro.com"

path: "/"

role: "nodes.kops.peelmicro.com"

roles.#: <computed>

unique_id: <computed>

+ aws_iam_role.masters-kops-peelmicro-com

id: <computed>

arn: <computed>

assume_role_policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Principal\": { \"Service\": \"ec2.amazonaws.com\"},\n \"Action\": \"sts:AssumeRole\"\n }\n ]\n}"

create_date: <computed>

force_detach_policies: "false"

max_session_duration: "3600"

name: "masters.kops.peelmicro.com"

path: "/"

unique_id: <computed>

+ aws_iam_role.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

assume_role_policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Principal\": { \"Service\": \"ec2.amazonaws.com\"},\n \"Action\": \"sts:AssumeRole\"\n }\n ]\n}"

create_date: <computed>

force_detach_policies: "false"

max_session_duration: "3600"

name: "nodes.kops.peelmicro.com"

path: "/"

unique_id: <computed>

+ aws_iam_role_policy.masters-kops-peelmicro-com

id: <computed>

name: "masters.kops.peelmicro.com"

policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:DescribeInstances\",\n \"ec2:DescribeRegions\",\n \"ec2:DescribeRouteTables\",\n \"ec2:DescribeSecurityGroups\",\n \"ec2:DescribeSubnets\",\n \"ec2:DescribeVolumes\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:CreateSecurityGroup\",\n \"ec2:CreateTags\",\n \"ec2:CreateVolume\",\n \"ec2:DescribeVolumesModifications\",\n \"ec2:ModifyInstanceAttribute\",\n \"ec2:ModifyVolume\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:AttachVolume\",\n \"ec2:AuthorizeSecurityGroupIngress\",\n \"ec2:CreateRoute\",\n \"ec2:DeleteRoute\",\n \"ec2:DeleteSecurityGroup\",\n \"ec2:DeleteVolume\",\n \"ec2:DetachVolume\",\n \"ec2:RevokeSecurityGroupIngress\"\n ],\n \"Resource\": [\n \"*\"\n ],\n \"Condition\": {\n \"StringEquals\": {\n \"ec2:ResourceTag/KubernetesCluster\": \"kops.peelmicro.com\"\n }\n }\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"autoscaling:DescribeAutoScalingGroups\",\n \"autoscaling:DescribeLaunchConfigurations\",\n \"autoscaling:DescribeTags\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"autoscaling:SetDesiredCapacity\",\n \"autoscaling:TerminateInstanceInAutoScalingGroup\",\n \"autoscaling:UpdateAutoScalingGroup\"\n ],\n \"Resource\": [\n \"*\"\n ],\n \"Condition\": {\n \"StringEquals\": {\n \"autoscaling:ResourceTag/KubernetesCluster\": \"kops.peelmicro.com\"\n }\n }\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"elasticloadbalancing:AddTags\",\n \"elasticloadbalancing:AttachLoadBalancerToSubnets\",\n \"elasticloadbalancing:ApplySecurityGroupsToLoadBalancer\",\n \"elasticloadbalancing:CreateLoadBalancer\",\n \"elasticloadbalancing:CreateLoadBalancerPolicy\",\n \"elasticloadbalancing:CreateLoadBalancerListeners\",\n \"elasticloadbalancing:ConfigureHealthCheck\",\n \"elasticloadbalancing:DeleteLoadBalancer\",\n \"elasticloadbalancing:DeleteLoadBalancerListeners\",\n \"elasticloadbalancing:DescribeLoadBalancers\",\n \"elasticloadbalancing:DescribeLoadBalancerAttributes\",\n \"elasticloadbalancing:DetachLoadBalancerFromSubnets\",\n \"elasticloadbalancing:DeregisterInstancesFromLoadBalancer\",\n \"elasticloadbalancing:ModifyLoadBalancerAttributes\",\n \"elasticloadbalancing:RegisterInstancesWithLoadBalancer\",\n \"elasticloadbalancing:SetLoadBalancerPoliciesForBackendServer\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:DescribeVpcs\",\n \"elasticloadbalancing:AddTags\",\n \"elasticloadbalancing:CreateListener\",\n \"elasticloadbalancing:CreateTargetGroup\",\n \"elasticloadbalancing:DeleteListener\",\n \"elasticloadbalancing:DeleteTargetGroup\",\n \"elasticloadbalancing:DescribeListeners\",\n \"elasticloadbalancing:DescribeLoadBalancerPolicies\",\n \"elasticloadbalancing:DescribeTargetGroups\",\n \"elasticloadbalancing:DescribeTargetHealth\",\n \"elasticloadbalancing:ModifyListener\",\n \"elasticloadbalancing:ModifyTargetGroup\",\n \"elasticloadbalancing:RegisterTargets\",\n \"elasticloadbalancing:SetLoadBalancerPoliciesOfListener\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"iam:ListServerCertificates\",\n \"iam:GetServerCertificate\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:GetBucketLocation\",\n \"s3:ListBucket\"\n ],\n \"Resource\": [\n \"arn:aws:s3:::kops.peelmicro.com\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:Get*\"\n ],\n \"Resource\": \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/*\"\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"route53:ChangeResourceRecordSets\",\n \"route53:ListResourceRecordSets\",\n \"route53:GetHostedZone\"\n ],\n \"Resource\": [\n \"arn:aws:route53:::hostedzone/ZQF46NG2DUXIJ\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"route53:GetChange\"\n ],\n \"Resource\": [\n \"arn:aws:route53:::change/*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"route53:ListHostedZones\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ecr:GetAuthorizationToken\",\n \"ecr:BatchCheckLayerAvailability\",\n \"ecr:GetDownloadUrlForLayer\",\n \"ecr:GetRepositoryPolicy\",\n \"ecr:DescribeRepositories\",\n \"ecr:ListImages\",\n \"ecr:BatchGetImage\"\n ],\n \"Resource\": [\n \"*\"\n ]\n }\n ]\n}"

role: "masters.kops.peelmicro.com"

+ aws_iam_role_policy.nodes-kops-peelmicro-com

id: <computed>

name: "nodes.kops.peelmicro.com"

policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:DescribeInstances\",\n \"ec2:DescribeRegions\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:GetBucketLocation\",\n \"s3:ListBucket\"\n ],\n \"Resource\": [\n \"arn:aws:s3:::kops.peelmicro.com\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:Get*\"\n ],\n \"Resource\": [\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/addons/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/cluster.spec\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/config\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/instancegroup/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/issued/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/private/kube-proxy/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/private/kubelet/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/ssh/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/secrets/dockerconfig\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ecr:GetAuthorizationToken\",\n \"ecr:BatchCheckLayerAvailability\",\n \"ecr:GetDownloadUrlForLayer\",\n \"ecr:GetRepositoryPolicy\",\n \"ecr:DescribeRepositories\",\n \"ecr:ListImages\",\n \"ecr:BatchGetImage\"\n ],\n \"Resource\": [\n \"*\"\n ]\n }\n ]\n}"

role: "nodes.kops.peelmicro.com"

+ aws_internet_gateway.kops-peelmicro-com

id: <computed>

owner_id: <computed>

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26

id: <computed>

fingerprint: <computed>

key_name: "kubernetes.kops.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26"

public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDjBXziCHemhPndqIdzwKXyTw32UdZs+OVUWvCpwy1pRubg8oZpbFXQ92uGvWVFhbIh8wYv7bgmxi6Gaw8xbdBiDoOwx18PdEgmbcFw7O0tXKUMoke/tn3izeUbliNyD21OwSMwkNoaUJqkBJ2fHKjOrDxUGP/5M6iLfgzXTD/6oDG2USLoHIZBQtRBivb/k8IbW6dAveHhziuG87KtcW0lti0n4denWJV8R6fMEXLEaOTbtD17LOfQGWK8la1IwmNVhPuKMSBUOjfNk2sVv7dRO6EL+zK8WvAagnRl15yX3i097Lg6ql5Hvukk1aeJ5QCZa78hnYYDFL6d1DHbOgi1 root@ubuntu-s-1vcpu-2gb-lon1-01"

+ aws_launch_configuration.master-eu-central-1a-masters-kops-peelmicro-com

id: <computed>

associate_public_ip_address: "true"

ebs_block_device.#: <computed>

ebs_optimized: <computed>

enable_monitoring: "false"

iam_instance_profile: "${aws_iam_instance_profile.masters-kops-peelmicro-com.id}"

image_id: "ami-0692cb5ffed92e0c7"

instance_type: "t2.micro"

key_name: "${aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26.id}"

name: <computed>

name_prefix: "master-eu-central-1a.masters.kops.peelmicro.com-"

root_block_device.#: "1"

root_block_device.0.delete_on_termination: "true"

root_block_device.0.iops: <computed>

root_block_device.0.volume_size: "64"

root_block_device.0.volume_type: "gp2"

security_groups.#: <computed>

user_data: "c4de9593d17ce259846182486013d03d8782e455"

+ aws_launch_configuration.nodes-kops-peelmicro-com

id: <computed>

associate_public_ip_address: "true"

ebs_block_device.#: <computed>

ebs_optimized: <computed>

enable_monitoring: "false"

iam_instance_profile: "${aws_iam_instance_profile.nodes-kops-peelmicro-com.id}"

image_id: "ami-0692cb5ffed92e0c7"

instance_type: "t2.micro"

key_name: "${aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26.id}"

name: <computed>

name_prefix: "nodes.kops.peelmicro.com-"

root_block_device.#: "1"

root_block_device.0.delete_on_termination: "true"

root_block_device.0.iops: <computed>

root_block_device.0.volume_size: "128"

root_block_device.0.volume_type: "gp2"

security_groups.#: <computed>

user_data: "0211e7563e5b67305d61bb6211bceef691e20c32"

+ aws_route.0-0-0-0--0

id: <computed>

destination_cidr_block: "0.0.0.0/0"

destination_prefix_list_id: <computed>

egress_only_gateway_id: <computed>

gateway_id: "${aws_internet_gateway.kops-peelmicro-com.id}"

instance_id: <computed>

instance_owner_id: <computed>

nat_gateway_id: <computed>

network_interface_id: <computed>

origin: <computed>

route_table_id: "${aws_route_table.kops-peelmicro-com.id}"

state: <computed>

+ aws_route_table.kops-peelmicro-com

id: <computed>

owner_id: <computed>

propagating_vgws.#: <computed>

route.#: <computed>

tags.%: "4"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

tags.kubernetes.io/kops/role: "public"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_route_table_association.eu-central-1a-kops-peelmicro-com

id: <computed>

route_table_id: "${aws_route_table.kops-peelmicro-com.id}"

subnet_id: "${aws_subnet.eu-central-1a-kops-peelmicro-com.id}"

+ aws_security_group.masters-kops-peelmicro-com

id: <computed>

arn: <computed>

description: "Security group for masters"

egress.#: <computed>

ingress.#: <computed>

name: "masters.kops.peelmicro.com"

owner_id: <computed>

revoke_rules_on_delete: "false"

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "masters.kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_security_group.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

description: "Security group for nodes"

egress.#: <computed>

ingress.#: <computed>

name: "nodes.kops.peelmicro.com"

owner_id: <computed>

revoke_rules_on_delete: "false"

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "nodes.kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_security_group_rule.all-master-to-master

id: <computed>

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

to_port: "0"

type: "ingress"

+ aws_security_group_rule.all-master-to-node

id: <computed>

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

to_port: "0"

type: "ingress"

+ aws_security_group_rule.all-node-to-node

id: <computed>

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "0"

type: "ingress"

+ aws_security_group_rule.https-external-to-master-0-0-0-0--0

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "443"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "443"

type: "ingress"

+ aws_security_group_rule.master-egress

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "0"

type: "egress"

+ aws_security_group_rule.node-egress

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "0"

type: "egress"

+ aws_security_group_rule.node-to-master-tcp-1-2379

id: <computed>

from_port: "1"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "2379"

type: "ingress"

+ aws_security_group_rule.node-to-master-tcp-2382-4000

id: <computed>

from_port: "2382"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "4000"

type: "ingress"

+ aws_security_group_rule.node-to-master-tcp-4003-65535

id: <computed>

from_port: "4003"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "65535"

type: "ingress"

+ aws_security_group_rule.node-to-master-udp-1-65535

id: <computed>

from_port: "1"

protocol: "udp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "65535"

type: "ingress"

+ aws_security_group_rule.ssh-external-to-master-0-0-0-0--0

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "22"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "22"

type: "ingress"

+ aws_security_group_rule.ssh-external-to-node-0-0-0-0--0

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "22"

protocol: "tcp"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "22"

type: "ingress"

+ aws_subnet.eu-central-1a-kops-peelmicro-com

id: <computed>

arn: <computed>

assign_ipv6_address_on_creation: "false"

availability_zone: "eu-central-1a"

availability_zone_id: <computed>

cidr_block: "172.20.32.0/19"

ipv6_cidr_block: <computed>

ipv6_cidr_block_association_id: <computed>

map_public_ip_on_launch: "false"

owner_id: <computed>

tags.%: "5"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "eu-central-1a.kops.peelmicro.com"

tags.SubnetType: "Public"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

tags.kubernetes.io/role/elb: "1"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_vpc.kops-peelmicro-com

id: <computed>

arn: <computed>

assign_generated_ipv6_cidr_block: "false"

cidr_block: "172.20.0.0/16"

default_network_acl_id: <computed>

default_route_table_id: <computed>

default_security_group_id: <computed>

dhcp_options_id: <computed>

enable_classiclink: <computed>

enable_classiclink_dns_support: <computed>

enable_dns_hostnames: "true"

enable_dns_support: "true"

instance_tenancy: "default"

ipv6_association_id: <computed>

ipv6_cidr_block: <computed>

main_route_table_id: <computed>

owner_id: <computed>

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

+ aws_vpc_dhcp_options.kops-peelmicro-com

id: <computed>

domain_name: "eu-central-1.compute.internal"

domain_name_servers.#: "1"

domain_name_servers.0: "AmazonProvidedDNS"

owner_id: <computed>

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

+ aws_vpc_dhcp_options_association.kops-peelmicro-com

id: <computed>

dhcp_options_id: "${aws_vpc_dhcp_options.kops-peelmicro-com.id}"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

Plan: 35 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform

can't guarantee that exactly these actions will be performed if

"terraform apply" is subsequently run.

- Execute

terraform init

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster/devopsinuse_terraform# terraform init

Initializing provider plugins...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.aws: version = "~> 2.0"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

- Execute

terraform applyto deploy the cluster.

root@ubuntu-s-1vcpu-2gb-lon1-01:~/kops_cluster/devopsinuse_terraform# terraform apply

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

+ aws_autoscaling_group.master-eu-central-1a-masters-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zones.#: <computed>

default_cooldown: <computed>

desired_capacity: <computed>

enabled_metrics.#: "8"

enabled_metrics.119681000: "GroupStandbyInstances"

enabled_metrics.1940933563: "GroupTotalInstances"

enabled_metrics.308948767: "GroupPendingInstances"

enabled_metrics.3267518000: "GroupTerminatingInstances"

enabled_metrics.3394537085: "GroupDesiredCapacity"

enabled_metrics.3551801763: "GroupInServiceInstances"

enabled_metrics.4118539418: "GroupMinSize"

enabled_metrics.4136111317: "GroupMaxSize"

force_delete: "false"

health_check_grace_period: "300"

health_check_type: <computed>

launch_configuration: "${aws_launch_configuration.master-eu-central-1a-masters-kops-peelmicro-com.id}"

load_balancers.#: <computed>

max_size: "1"

metrics_granularity: "1Minute"

min_size: "1"

name: "master-eu-central-1a.masters.kops.peelmicro.com"

protect_from_scale_in: "false"

service_linked_role_arn: <computed>

tag.#: "4"

tag.1601041186.key: "k8s.io/role/master"

tag.1601041186.propagate_at_launch: "true"

tag.1601041186.value: "1"

tag.296694174.key: "k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup"

tag.296694174.propagate_at_launch: "true"

tag.296694174.value: "master-eu-central-1a"

tag.3218595536.key: "Name"

tag.3218595536.propagate_at_launch: "true"

tag.3218595536.value: "master-eu-central-1a.masters.kops.peelmicro.com"

tag.681420748.key: "KubernetesCluster"

tag.681420748.propagate_at_launch: "true"

tag.681420748.value: "kops.peelmicro.com"

target_group_arns.#: <computed>

vpc_zone_identifier.#: <computed>

wait_for_capacity_timeout: "10m"

+ aws_autoscaling_group.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zones.#: <computed>

default_cooldown: <computed>

desired_capacity: <computed>

enabled_metrics.#: "8"

enabled_metrics.119681000: "GroupStandbyInstances"

enabled_metrics.1940933563: "GroupTotalInstances"

enabled_metrics.308948767: "GroupPendingInstances"

enabled_metrics.3267518000: "GroupTerminatingInstances"

enabled_metrics.3394537085: "GroupDesiredCapacity"

enabled_metrics.3551801763: "GroupInServiceInstances"

enabled_metrics.4118539418: "GroupMinSize"

enabled_metrics.4136111317: "GroupMaxSize"

force_delete: "false"

health_check_grace_period: "300"

health_check_type: <computed>

launch_configuration: "${aws_launch_configuration.nodes-kops-peelmicro-com.id}"

load_balancers.#: <computed>

max_size: "2"

metrics_granularity: "1Minute"

min_size: "2"

name: "nodes.kops.peelmicro.com"

protect_from_scale_in: "false"

service_linked_role_arn: <computed>

tag.#: "4"

tag.1967977115.key: "k8s.io/role/node"

tag.1967977115.propagate_at_launch: "true"

tag.1967977115.value: "1"

tag.3438852489.key: "Name"

tag.3438852489.propagate_at_launch: "true"

tag.3438852489.value: "nodes.kops.peelmicro.com"

tag.681420748.key: "KubernetesCluster"

tag.681420748.propagate_at_launch: "true"

tag.681420748.value: "kops.peelmicro.com"

tag.859419842.key: "k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup"

tag.859419842.propagate_at_launch: "true"

tag.859419842.value: "nodes"

target_group_arns.#: <computed>

vpc_zone_identifier.#: <computed>

wait_for_capacity_timeout: "10m"

+ aws_ebs_volume.a-etcd-events-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zone: "eu-central-1a"

encrypted: "false"

iops: <computed>

kms_key_id: <computed>

size: "20"

snapshot_id: <computed>

tags.%: "5"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "a.etcd-events.kops.peelmicro.com"

tags.k8s.io/etcd/events: "a/a"

tags.k8s.io/role/master: "1"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

type: "gp2"

+ aws_ebs_volume.a-etcd-main-kops-peelmicro-com

id: <computed>

arn: <computed>

availability_zone: "eu-central-1a"

encrypted: "false"

iops: <computed>

kms_key_id: <computed>

size: "20"

snapshot_id: <computed>

tags.%: "5"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "a.etcd-main.kops.peelmicro.com"

tags.k8s.io/etcd/main: "a/a"

tags.k8s.io/role/master: "1"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

type: "gp2"

+ aws_iam_instance_profile.masters-kops-peelmicro-com

id: <computed>

arn: <computed>

create_date: <computed>

name: "masters.kops.peelmicro.com"

path: "/"

role: "masters.kops.peelmicro.com"

roles.#: <computed>

unique_id: <computed>

+ aws_iam_instance_profile.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

create_date: <computed>

name: "nodes.kops.peelmicro.com"

path: "/"

role: "nodes.kops.peelmicro.com"

roles.#: <computed>

unique_id: <computed>

+ aws_iam_role.masters-kops-peelmicro-com

id: <computed>

arn: <computed>

assume_role_policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Principal\": { \"Service\": \"ec2.amazonaws.com\"},\n \"Action\": \"sts:AssumeRole\"\n }\n ]\n}"

create_date: <computed>

force_detach_policies: "false"

max_session_duration: "3600"

name: "masters.kops.peelmicro.com"

path: "/"

unique_id: <computed>

+ aws_iam_role.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

assume_role_policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Principal\": { \"Service\": \"ec2.amazonaws.com\"},\n \"Action\": \"sts:AssumeRole\"\n }\n ]\n}"

create_date: <computed>

force_detach_policies: "false"

max_session_duration: "3600"

name: "nodes.kops.peelmicro.com"

path: "/"

unique_id: <computed>

+ aws_iam_role_policy.masters-kops-peelmicro-com

id: <computed>

name: "masters.kops.peelmicro.com"

policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:DescribeInstances\",\n \"ec2:DescribeRegions\",\n \"ec2:DescribeRouteTables\",\n \"ec2:DescribeSecurityGroups\",\n \"ec2:DescribeSubnets\",\n \"ec2:DescribeVolumes\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:CreateSecurityGroup\",\n \"ec2:CreateTags\",\n \"ec2:CreateVolume\",\n \"ec2:DescribeVolumesModifications\",\n \"ec2:ModifyInstanceAttribute\",\n \"ec2:ModifyVolume\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:AttachVolume\",\n \"ec2:AuthorizeSecurityGroupIngress\",\n \"ec2:CreateRoute\",\n \"ec2:DeleteRoute\",\n \"ec2:DeleteSecurityGroup\",\n \"ec2:DeleteVolume\",\n \"ec2:DetachVolume\",\n \"ec2:RevokeSecurityGroupIngress\"\n ],\n \"Resource\": [\n \"*\"\n ],\n \"Condition\": {\n \"StringEquals\": {\n \"ec2:ResourceTag/KubernetesCluster\": \"kops.peelmicro.com\"\n }\n }\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"autoscaling:DescribeAutoScalingGroups\",\n \"autoscaling:DescribeLaunchConfigurations\",\n \"autoscaling:DescribeTags\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"autoscaling:SetDesiredCapacity\",\n \"autoscaling:TerminateInstanceInAutoScalingGroup\",\n \"autoscaling:UpdateAutoScalingGroup\"\n ],\n \"Resource\": [\n \"*\"\n ],\n \"Condition\": {\n \"StringEquals\": {\n \"autoscaling:ResourceTag/KubernetesCluster\": \"kops.peelmicro.com\"\n }\n }\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"elasticloadbalancing:AddTags\",\n \"elasticloadbalancing:AttachLoadBalancerToSubnets\",\n \"elasticloadbalancing:ApplySecurityGroupsToLoadBalancer\",\n \"elasticloadbalancing:CreateLoadBalancer\",\n \"elasticloadbalancing:CreateLoadBalancerPolicy\",\n \"elasticloadbalancing:CreateLoadBalancerListeners\",\n \"elasticloadbalancing:ConfigureHealthCheck\",\n \"elasticloadbalancing:DeleteLoadBalancer\",\n \"elasticloadbalancing:DeleteLoadBalancerListeners\",\n \"elasticloadbalancing:DescribeLoadBalancers\",\n \"elasticloadbalancing:DescribeLoadBalancerAttributes\",\n \"elasticloadbalancing:DetachLoadBalancerFromSubnets\",\n \"elasticloadbalancing:DeregisterInstancesFromLoadBalancer\",\n \"elasticloadbalancing:ModifyLoadBalancerAttributes\",\n \"elasticloadbalancing:RegisterInstancesWithLoadBalancer\",\n \"elasticloadbalancing:SetLoadBalancerPoliciesForBackendServer\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:DescribeVpcs\",\n \"elasticloadbalancing:AddTags\",\n \"elasticloadbalancing:CreateListener\",\n \"elasticloadbalancing:CreateTargetGroup\",\n \"elasticloadbalancing:DeleteListener\",\n \"elasticloadbalancing:DeleteTargetGroup\",\n \"elasticloadbalancing:DescribeListeners\",\n \"elasticloadbalancing:DescribeLoadBalancerPolicies\",\n \"elasticloadbalancing:DescribeTargetGroups\",\n \"elasticloadbalancing:DescribeTargetHealth\",\n \"elasticloadbalancing:ModifyListener\",\n \"elasticloadbalancing:ModifyTargetGroup\",\n \"elasticloadbalancing:RegisterTargets\",\n \"elasticloadbalancing:SetLoadBalancerPoliciesOfListener\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"iam:ListServerCertificates\",\n \"iam:GetServerCertificate\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:GetBucketLocation\",\n \"s3:ListBucket\"\n ],\n \"Resource\": [\n \"arn:aws:s3:::kops.peelmicro.com\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:Get*\"\n ],\n \"Resource\": \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/*\"\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"route53:ChangeResourceRecordSets\",\n \"route53:ListResourceRecordSets\",\n \"route53:GetHostedZone\"\n ],\n \"Resource\": [\n \"arn:aws:route53:::hostedzone/ZQF46NG2DUXIJ\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"route53:GetChange\"\n ],\n \"Resource\": [\n \"arn:aws:route53:::change/*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"route53:ListHostedZones\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ecr:GetAuthorizationToken\",\n \"ecr:BatchCheckLayerAvailability\",\n \"ecr:GetDownloadUrlForLayer\",\n \"ecr:GetRepositoryPolicy\",\n \"ecr:DescribeRepositories\",\n \"ecr:ListImages\",\n \"ecr:BatchGetImage\"\n ],\n \"Resource\": [\n \"*\"\n ]\n }\n ]\n}"

role: "masters.kops.peelmicro.com"

+ aws_iam_role_policy.nodes-kops-peelmicro-com

id: <computed>

name: "nodes.kops.peelmicro.com"

policy: "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ec2:DescribeInstances\",\n \"ec2:DescribeRegions\"\n ],\n \"Resource\": [\n \"*\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:GetBucketLocation\",\n \"s3:ListBucket\"\n ],\n \"Resource\": [\n \"arn:aws:s3:::kops.peelmicro.com\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"s3:Get*\"\n ],\n \"Resource\": [\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/addons/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/cluster.spec\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/config\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/instancegroup/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/issued/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/private/kube-proxy/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/private/kubelet/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/pki/ssh/*\",\n \"arn:aws:s3:::kops.peelmicro.com/kops.peelmicro.com/secrets/dockerconfig\"\n ]\n },\n {\n \"Effect\": \"Allow\",\n \"Action\": [\n \"ecr:GetAuthorizationToken\",\n \"ecr:BatchCheckLayerAvailability\",\n \"ecr:GetDownloadUrlForLayer\",\n \"ecr:GetRepositoryPolicy\",\n \"ecr:DescribeRepositories\",\n \"ecr:ListImages\",\n \"ecr:BatchGetImage\"\n ],\n \"Resource\": [\n \"*\"\n ]\n }\n ]\n}"

role: "nodes.kops.peelmicro.com"

+ aws_internet_gateway.kops-peelmicro-com

id: <computed>

owner_id: <computed>

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26

id: <computed>

fingerprint: <computed>

key_name: "kubernetes.kops.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26"

public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDjBXziCHemhPndqIdzwKXyTw32UdZs+OVUWvCpwy1pRubg8oZpbFXQ92uGvWVFhbIh8wYv7bgmxi6Gaw8xbdBiDoOwx18PdEgmbcFw7O0tXKUMoke/tn3izeUbliNyD21OwSMwkNoaUJqkBJ2fHKjOrDxUGP/5M6iLfgzXTD/6oDG2USLoHIZBQtRBivb/k8IbW6dAveHhziuG87KtcW0lti0n4denWJV8R6fMEXLEaOTbtD17LOfQGWK8la1IwmNVhPuKMSBUOjfNk2sVv7dRO6EL+zK8WvAagnRl15yX3i097Lg6ql5Hvukk1aeJ5QCZa78hnYYDFL6d1DHbOgi1 root@ubuntu-s-1vcpu-2gb-lon1-01"

+ aws_launch_configuration.master-eu-central-1a-masters-kops-peelmicro-com

id: <computed>

associate_public_ip_address: "true"

ebs_block_device.#: <computed>

ebs_optimized: <computed>

enable_monitoring: "false"

iam_instance_profile: "${aws_iam_instance_profile.masters-kops-peelmicro-com.id}"

image_id: "ami-0692cb5ffed92e0c7"

instance_type: "t2.micro"

key_name: "${aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26.id}"

name: <computed>

name_prefix: "master-eu-central-1a.masters.kops.peelmicro.com-"

root_block_device.#: "1"

root_block_device.0.delete_on_termination: "true"

root_block_device.0.iops: <computed>

root_block_device.0.volume_size: "64"

root_block_device.0.volume_type: "gp2"

security_groups.#: <computed>

user_data: "c4de9593d17ce259846182486013d03d8782e455"

+ aws_launch_configuration.nodes-kops-peelmicro-com

id: <computed>

associate_public_ip_address: "true"

ebs_block_device.#: <computed>

ebs_optimized: <computed>

enable_monitoring: "false"

iam_instance_profile: "${aws_iam_instance_profile.nodes-kops-peelmicro-com.id}"

image_id: "ami-0692cb5ffed92e0c7"

instance_type: "t2.micro"

key_name: "${aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26.id}"

name: <computed>

name_prefix: "nodes.kops.peelmicro.com-"

root_block_device.#: "1"

root_block_device.0.delete_on_termination: "true"

root_block_device.0.iops: <computed>

root_block_device.0.volume_size: "128"

root_block_device.0.volume_type: "gp2"

security_groups.#: <computed>

user_data: "0211e7563e5b67305d61bb6211bceef691e20c32"

+ aws_route.0-0-0-0--0

id: <computed>

destination_cidr_block: "0.0.0.0/0"

destination_prefix_list_id: <computed>

egress_only_gateway_id: <computed>

gateway_id: "${aws_internet_gateway.kops-peelmicro-com.id}"

instance_id: <computed>

instance_owner_id: <computed>

nat_gateway_id: <computed>

network_interface_id: <computed>

origin: <computed>

route_table_id: "${aws_route_table.kops-peelmicro-com.id}"

state: <computed>

+ aws_route_table.kops-peelmicro-com

id: <computed>

owner_id: <computed>

propagating_vgws.#: <computed>

route.#: <computed>

tags.%: "4"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

tags.kubernetes.io/kops/role: "public"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_route_table_association.eu-central-1a-kops-peelmicro-com

id: <computed>

route_table_id: "${aws_route_table.kops-peelmicro-com.id}"

subnet_id: "${aws_subnet.eu-central-1a-kops-peelmicro-com.id}"

+ aws_security_group.masters-kops-peelmicro-com

id: <computed>

arn: <computed>

description: "Security group for masters"

egress.#: <computed>

ingress.#: <computed>

name: "masters.kops.peelmicro.com"

owner_id: <computed>

revoke_rules_on_delete: "false"

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "masters.kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_security_group.nodes-kops-peelmicro-com

id: <computed>

arn: <computed>

description: "Security group for nodes"

egress.#: <computed>

ingress.#: <computed>

name: "nodes.kops.peelmicro.com"

owner_id: <computed>

revoke_rules_on_delete: "false"

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "nodes.kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_security_group_rule.all-master-to-master

id: <computed>

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

to_port: "0"

type: "ingress"

+ aws_security_group_rule.all-master-to-node

id: <computed>

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

to_port: "0"

type: "ingress"

+ aws_security_group_rule.all-node-to-node

id: <computed>

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "0"

type: "ingress"

+ aws_security_group_rule.https-external-to-master-0-0-0-0--0

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "443"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "443"

type: "ingress"

+ aws_security_group_rule.master-egress

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "0"

type: "egress"

+ aws_security_group_rule.node-egress

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "0"

protocol: "-1"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "0"

type: "egress"

+ aws_security_group_rule.node-to-master-tcp-1-2379

id: <computed>

from_port: "1"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "2379"

type: "ingress"

+ aws_security_group_rule.node-to-master-tcp-2382-4000

id: <computed>

from_port: "2382"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "4000"

type: "ingress"

+ aws_security_group_rule.node-to-master-tcp-4003-65535

id: <computed>

from_port: "4003"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "65535"

type: "ingress"

+ aws_security_group_rule.node-to-master-udp-1-65535

id: <computed>

from_port: "1"

protocol: "udp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

to_port: "65535"

type: "ingress"

+ aws_security_group_rule.ssh-external-to-master-0-0-0-0--0

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "22"

protocol: "tcp"

security_group_id: "${aws_security_group.masters-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "22"

type: "ingress"

+ aws_security_group_rule.ssh-external-to-node-0-0-0-0--0

id: <computed>

cidr_blocks.#: "1"

cidr_blocks.0: "0.0.0.0/0"

from_port: "22"

protocol: "tcp"

security_group_id: "${aws_security_group.nodes-kops-peelmicro-com.id}"

self: "false"

source_security_group_id: <computed>

to_port: "22"

type: "ingress"

+ aws_subnet.eu-central-1a-kops-peelmicro-com

id: <computed>

arn: <computed>

assign_ipv6_address_on_creation: "false"

availability_zone: "eu-central-1a"

availability_zone_id: <computed>

cidr_block: "172.20.32.0/19"

ipv6_cidr_block: <computed>

ipv6_cidr_block_association_id: <computed>

map_public_ip_on_launch: "false"

owner_id: <computed>

tags.%: "5"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "eu-central-1a.kops.peelmicro.com"

tags.SubnetType: "Public"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

tags.kubernetes.io/role/elb: "1"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

+ aws_vpc.kops-peelmicro-com

id: <computed>

arn: <computed>

assign_generated_ipv6_cidr_block: "false"

cidr_block: "172.20.0.0/16"

default_network_acl_id: <computed>

default_route_table_id: <computed>

default_security_group_id: <computed>

dhcp_options_id: <computed>

enable_classiclink: <computed>

enable_classiclink_dns_support: <computed>

enable_dns_hostnames: "true"

enable_dns_support: "true"

instance_tenancy: "default"

ipv6_association_id: <computed>

ipv6_cidr_block: <computed>

main_route_table_id: <computed>

owner_id: <computed>

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

+ aws_vpc_dhcp_options.kops-peelmicro-com

id: <computed>

domain_name: "eu-central-1.compute.internal"

domain_name_servers.#: "1"

domain_name_servers.0: "AmazonProvidedDNS"

owner_id: <computed>

tags.%: "3"

tags.KubernetesCluster: "kops.peelmicro.com"

tags.Name: "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "owned"

+ aws_vpc_dhcp_options_association.kops-peelmicro-com

id: <computed>

dhcp_options_id: "${aws_vpc_dhcp_options.kops-peelmicro-com.id}"

vpc_id: "${aws_vpc.kops-peelmicro-com.id}"

Plan: 35 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

- Confirm entering

yes

aws_iam_role.masters-kops-peelmicro-com: Creating...

arn: "" => "<computed>"

assume_role_policy: "" => "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Principal\": { \"Service\": \"ec2.amazonaws.com\"},\n \"Action\": \"sts:AssumeRole\"\n }\n ]\n}"

create_date: "" => "<computed>"

force_detach_policies: "" => "false"

max_session_duration: "" => "3600"

name: "" => "masters.kops.peelmicro.com"

path: "" => "/"

unique_id: "" => "<computed>"

aws_vpc.kops-peelmicro-com: Creating...

arn: "" => "<computed>"

assign_generated_ipv6_cidr_block: "" => "false"

cidr_block: "" => "172.20.0.0/16"

default_network_acl_id: "" => "<computed>"

default_route_table_id: "" => "<computed>"

default_security_group_id: "" => "<computed>"

dhcp_options_id: "" => "<computed>"

enable_classiclink: "" => "<computed>"

enable_classiclink_dns_support: "" => "<computed>"

enable_dns_hostnames: "" => "true"

enable_dns_support: "" => "true"

instance_tenancy: "" => "default"

ipv6_association_id: "" => "<computed>"

ipv6_cidr_block: "" => "<computed>"

main_route_table_id: "" => "<computed>"

owner_id: "" => "<computed>"

tags.%: "" => "3"

tags.KubernetesCluster: "" => "kops.peelmicro.com"

tags.Name: "" => "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "" => "owned"

aws_iam_role.nodes-kops-peelmicro-com: Creating...

arn: "" => "<computed>"

assume_role_policy: "" => "{\n \"Version\": \"2012-10-17\",\n \"Statement\": [\n {\n \"Effect\": \"Allow\",\n \"Principal\": { \"Service\": \"ec2.amazonaws.com\"},\n \"Action\": \"sts:AssumeRole\"\n }\n ]\n}"

create_date: "" => "<computed>"

force_detach_policies: "" => "false"

max_session_duration: "" => "3600"

name: "" => "nodes.kops.peelmicro.com"

path: "" => "/"

unique_id: "" => "<computed>"

aws_ebs_volume.a-etcd-events-kops-peelmicro-com: Creating...

arn: "" => "<computed>"

availability_zone: "" => "eu-central-1a"

encrypted: "" => "false"

iops: "" => "<computed>"

kms_key_id: "" => "<computed>"

size: "" => "20"

snapshot_id: "" => "<computed>"

tags.%: "" => "5"

tags.KubernetesCluster: "" => "kops.peelmicro.com"

tags.Name: "" => "a.etcd-events.kops.peelmicro.com"

tags.k8s.io/etcd/events: "" => "a/a"

tags.k8s.io/role/master: "" => "1"

tags.kubernetes.io/cluster/kops.peelmicro.com: "" => "owned"

type: "" => "gp2"

aws_ebs_volume.a-etcd-main-kops-peelmicro-com: Creating...

arn: "" => "<computed>"

availability_zone: "" => "eu-central-1a"

encrypted: "" => "false"

iops: "" => "<computed>"

kms_key_id: "" => "<computed>"

size: "" => "20"

snapshot_id: "" => "<computed>"

tags.%: "" => "5"

tags.KubernetesCluster: "" => "kops.peelmicro.com"

tags.Name: "" => "a.etcd-main.kops.peelmicro.com"

tags.k8s.io/etcd/main: "" => "a/a"

tags.k8s.io/role/master: "" => "1"

tags.kubernetes.io/cluster/kops.peelmicro.com: "" => "owned"

type: "" => "gp2"

aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26: Creating...

fingerprint: "" => "<computed>"

key_name: "" => "kubernetes.kops.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26"

public_key: "" => "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDjBXziCHemhPndqIdzwKXyTw32UdZs+OVUWvCpwy1pRubg8oZpbFXQ92uGvWVFhbIh8wYv7bgmxi6Gaw8xbdBiDoOwx18PdEgmbcFw7O0tXKUMoke/tn3izeUbliNyD21OwSMwkNoaUJqkBJ2fHKjOrDxUGP/5M6iLfgzXTD/6oDG2USLoHIZBQtRBivb/k8IbW6dAveHhziuG87KtcW0lti0n4denWJV8R6fMEXLEaOTbtD17LOfQGWK8la1IwmNVhPuKMSBUOjfNk2sVv7dRO6EL+zK8WvAagnRl15yX3i097Lg6ql5Hvukk1aeJ5QCZa78hnYYDFL6d1DHbOgi1 root@ubuntu-s-1vcpu-2gb-lon1-01"

aws_vpc_dhcp_options.kops-peelmicro-com: Creating...

domain_name: "" => "eu-central-1.compute.internal"

domain_name_servers.#: "" => "1"

domain_name_servers.0: "" => "AmazonProvidedDNS"

owner_id: "" => "<computed>"

tags.%: "" => "3"

tags.KubernetesCluster: "" => "kops.peelmicro.com"

tags.Name: "" => "kops.peelmicro.com"

tags.kubernetes.io/cluster/kops.peelmicro.com: "" => "owned"

aws_key_pair.kubernetes-kops-peelmicro-com-14f4e587b84d4819f287bedfda85ac26: Creation complete after 1s (ID: kubernetes.kops.peelmicro.com-14:f4:e5:87:b8:4d:48:19:f2:87:be:df:da:85:ac:26)

aws_vpc_dhcp_options.kops-peelmicro-com: Creation complete after 1s (ID: dopt-0c9dd22620832ee93)

aws_iam_role.masters-kops-peelmicro-com: Creation complete after 1s (ID: masters.kops.peelmicro.com)

aws_iam_role_policy.masters-kops-peelmicro-com: Creating...

name: "" => "masters.kops.peelmicro.com"