Learn DevOps: The Complete Kubernetes Course (Part 3)

GitHub Repositories

- learn-devops-the-complete-kubernetes-course.

- on-prem-or-cloud-agnostic-kubernetes.

- kubernetes-coursee.

- http-echo.

The Udemy course Learn DevOps: The Complete Kubernetes Course teaches how Kubernetes runs and manages containerized applications, and how to build, deploy, use, and maintain Kubernetes.

Other parts:

- Learn DevOps: The Complete Kubernetes Course (Part 1)

- Learn DevOps: The Complete Kubernetes Course (Part 2)

- Learn DevOps: The Complete Kubernetes Course (Part 4)

Table of contents

- What I've learned

- Section: 4. Kubernetes Administration

- 80. The Kubernetes Master Services

- 81. Resource Quotas

- 82. Namespaces

- 83. Demo: Namespace quotas

- 84. User Management

- 85. Demo: Adding Users

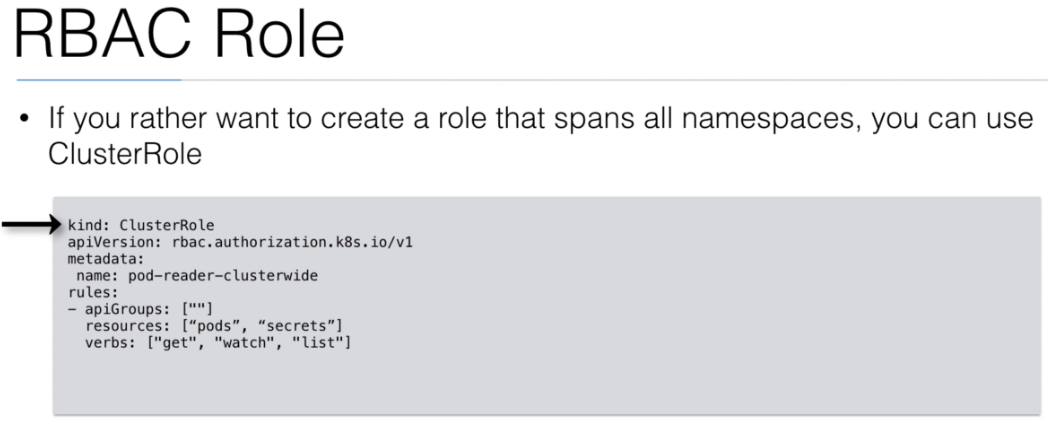

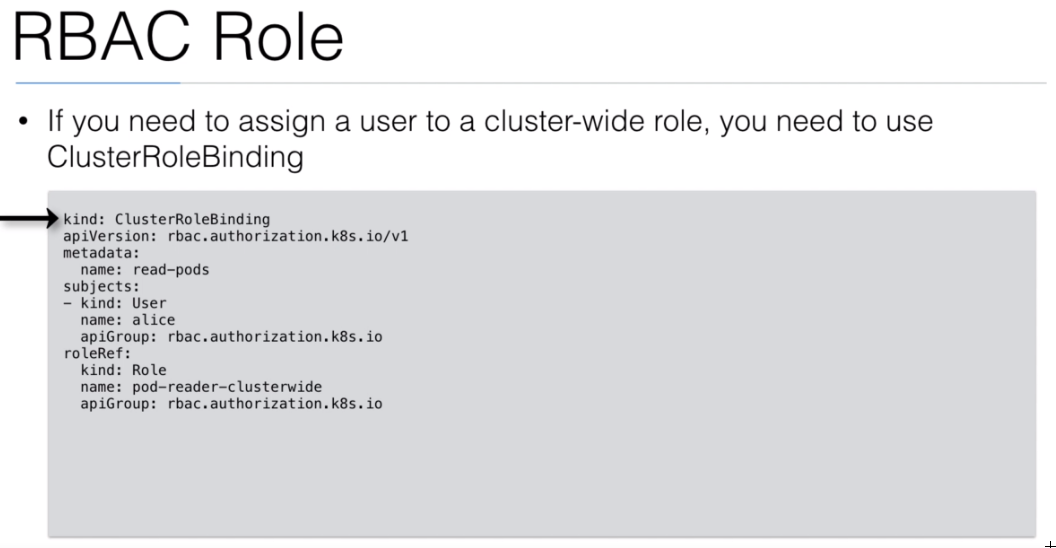

- 86. RBAC

- 87. Demo: RBAC

- 88. Networking

- 89. Node Maintenance

- 90. Demo: Node Maintenance

- 91. High Availability

- 92. Demo: High Availability

- 93. TLS on ELB using Annotations

- 94. Demo: TLS on ELB

- Section: 5. Packaging and Deploying on Kubernetes

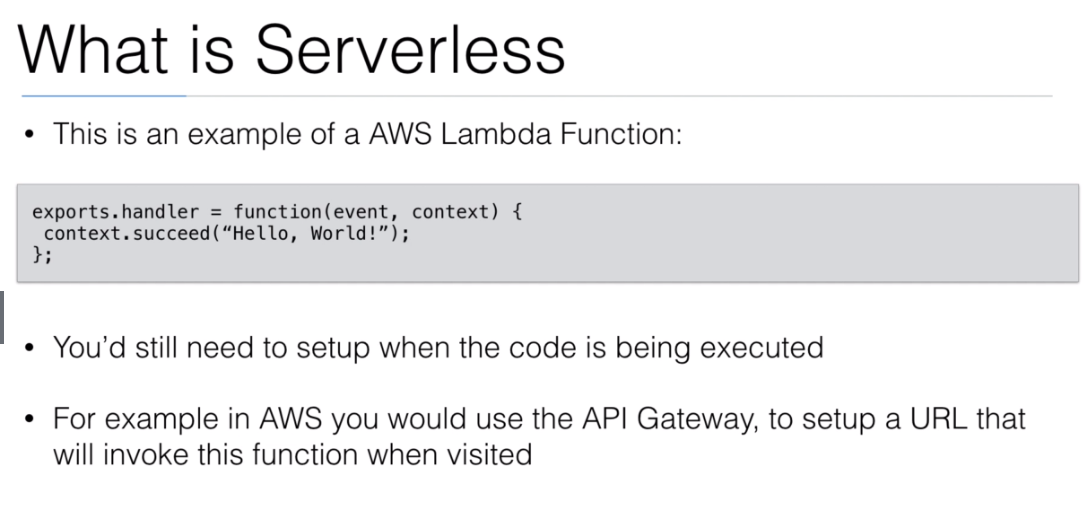

- Section: 6. Serverless on Kubernetes

What I've learned

- Install and configure Kubernetes (on your laptop/desktop or as a production-grade cluster on AWS)

- Use Docker Client (with Kubernetes), kubeadm, kops, or minikube to set up your cluster

- Be able to run stateless and stateful applications on Kubernetes

- Use health checks, Secrets, ConfigMaps, and placement strategies based on node/pod affinity and anti-affinity

- Use StatefulSets to deploy a Cassandra cluster on Kubernetes

- Add users, set quotas/limits, do node maintenance, and set up monitoring

- Use Volumes to provide persistence to your containers

- Be able to scale your apps using metrics

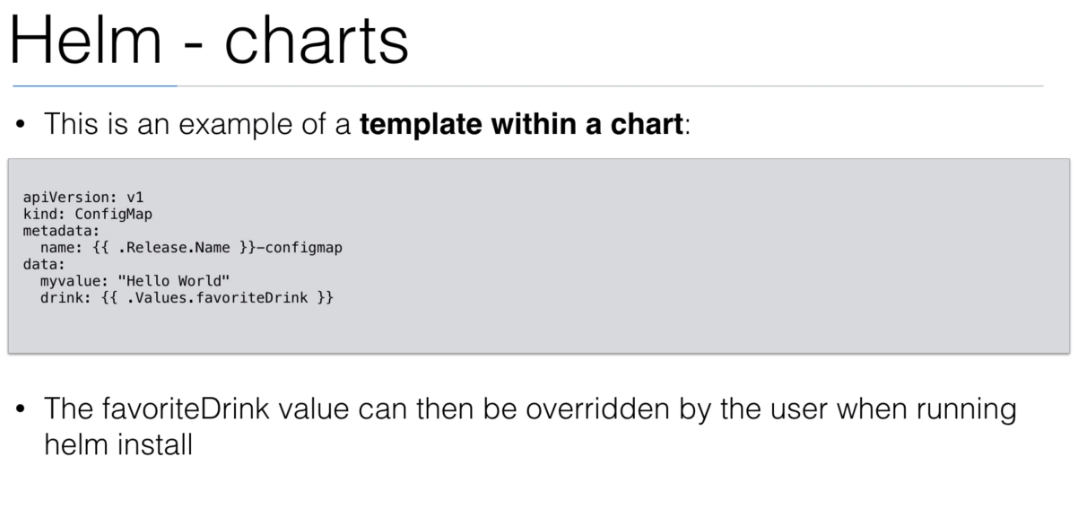

- Package applications with Helm and write your own Helm charts for your applications

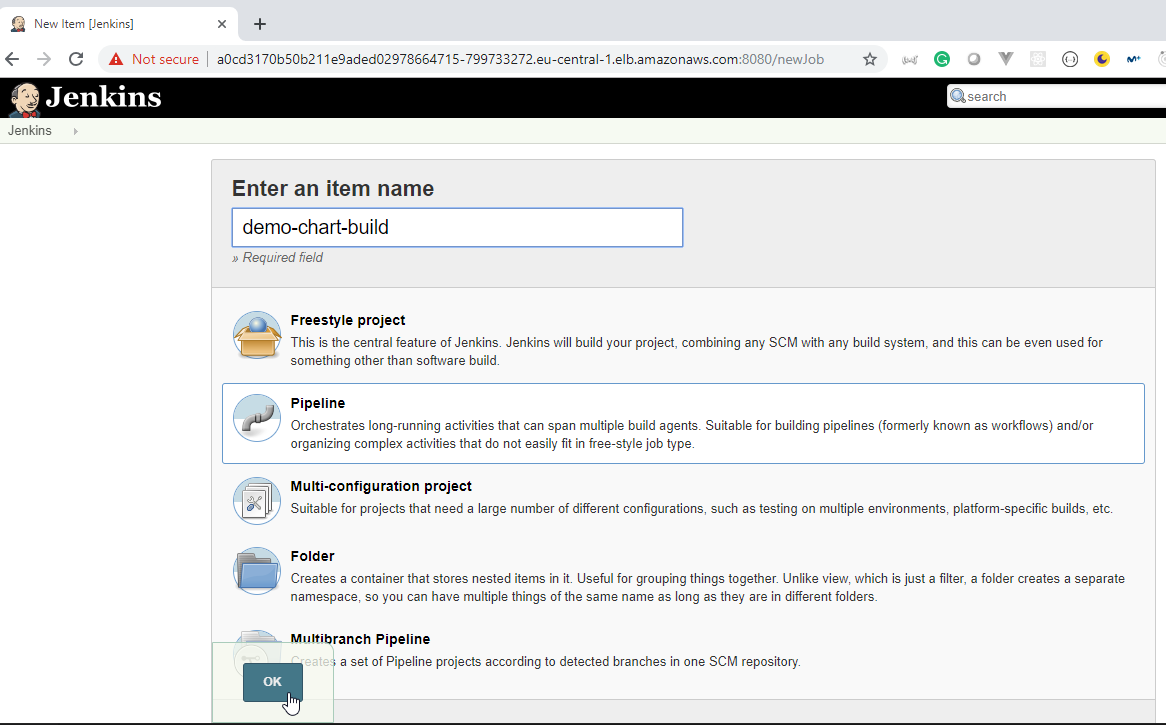

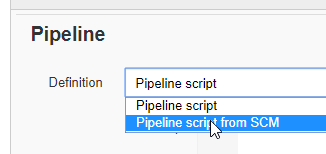

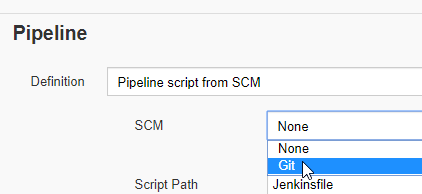

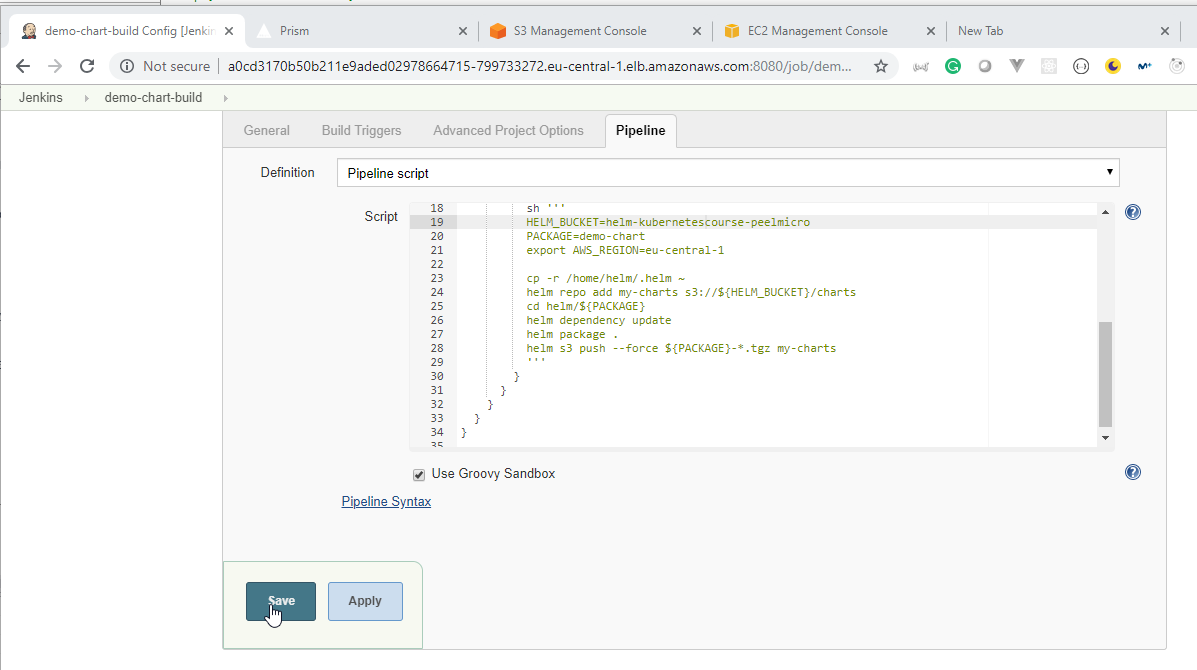

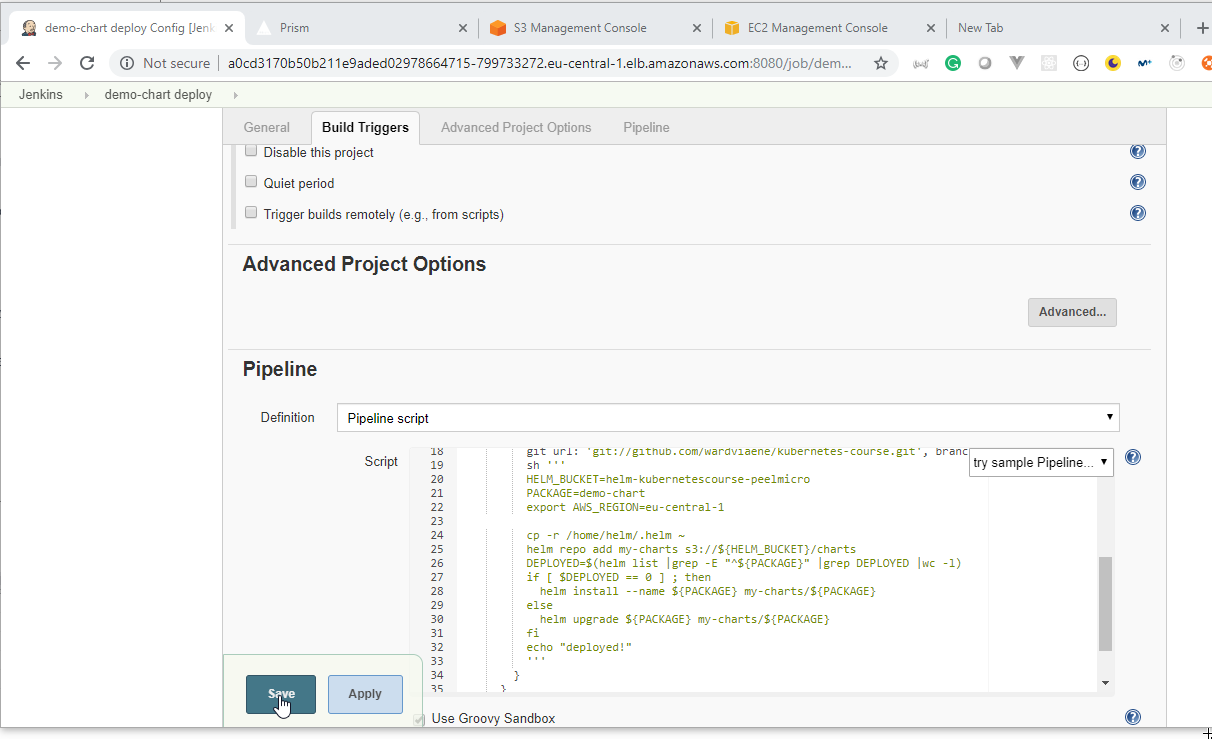

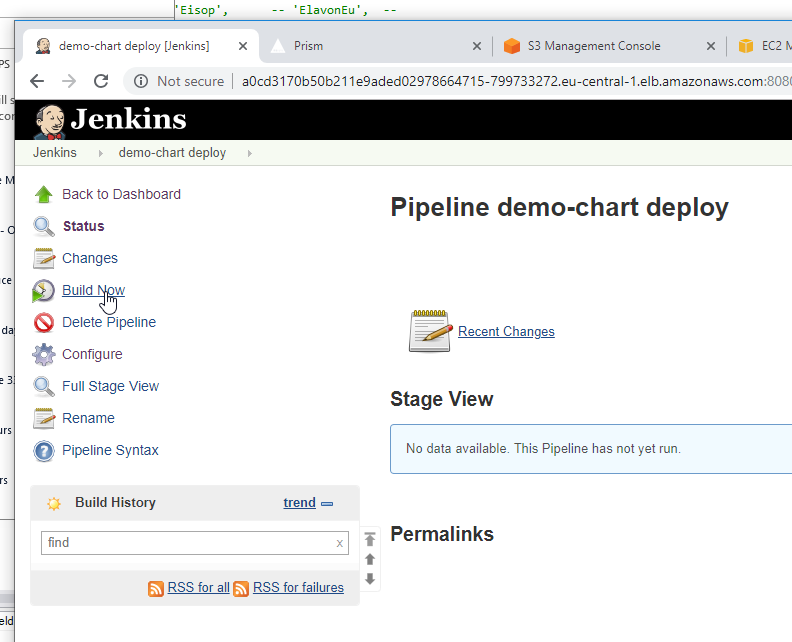

- Automatically build and deploy your own Helm Charts using Jenkins

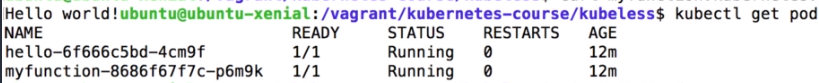

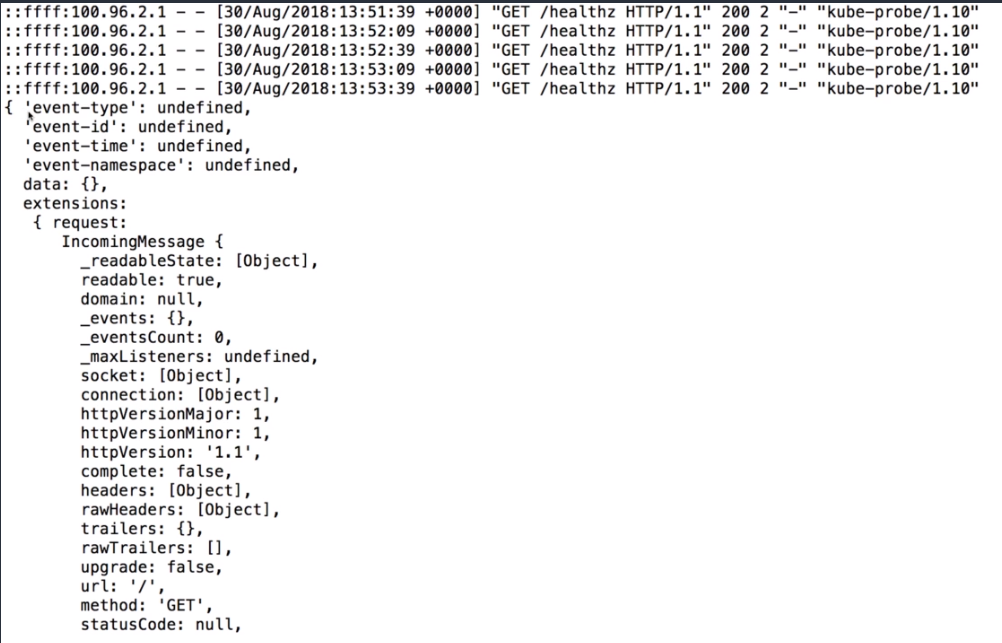

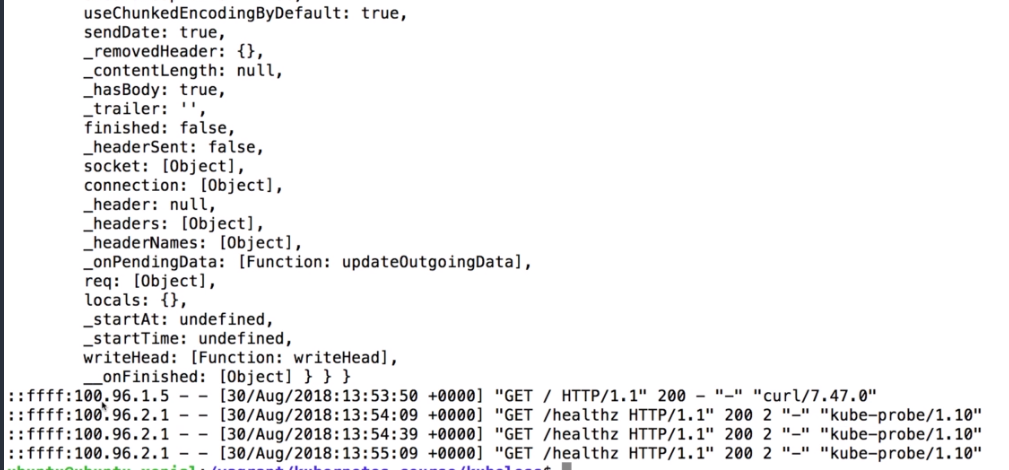

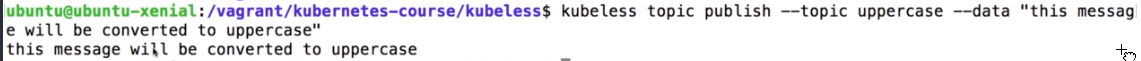

- Install and use kubeless to run functions (Serverless) on Kubernetes

- Install and use Istio to deploy a service mesh on Kubernetes

- Deployment concepts in Kubernetes using Helm and Helmfile

Section: 4. Kubernetes Administration

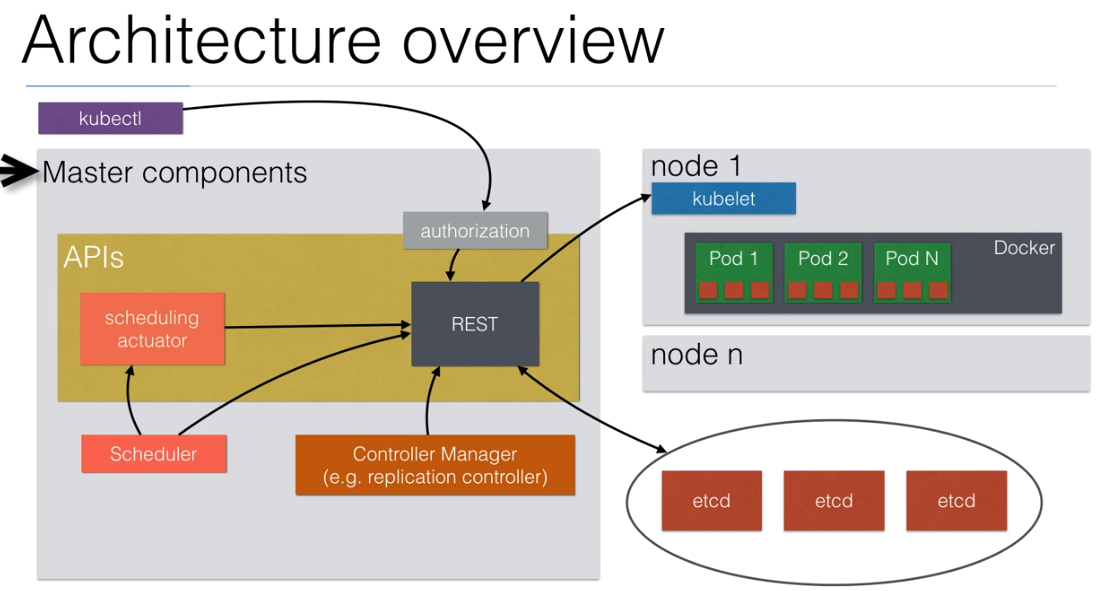

80. The Kubernetes Master Services

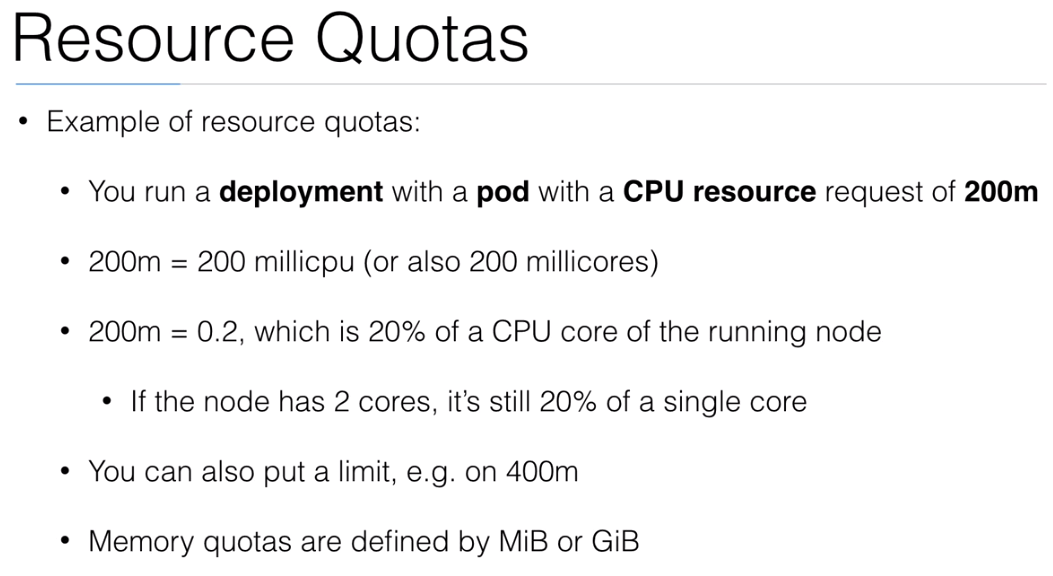

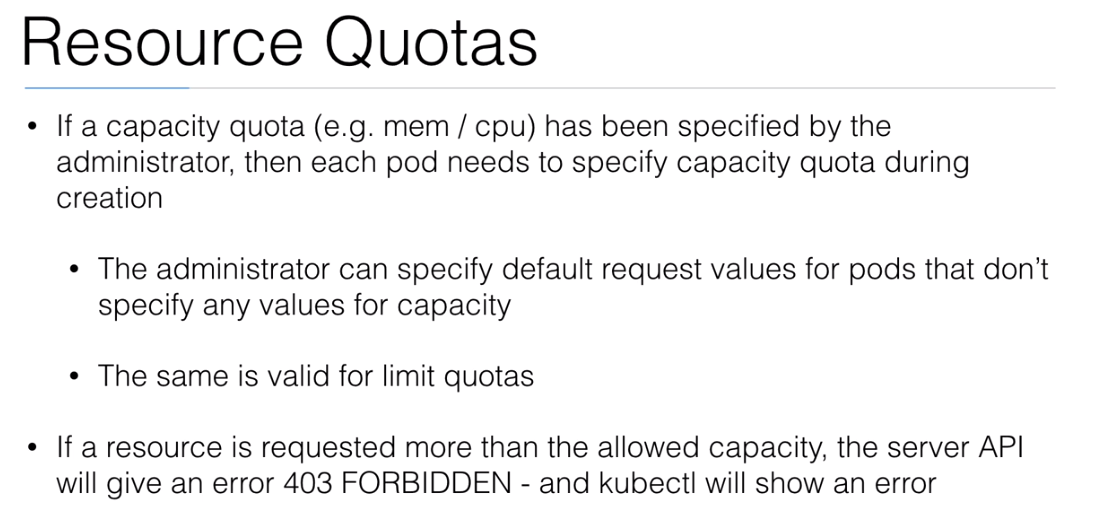

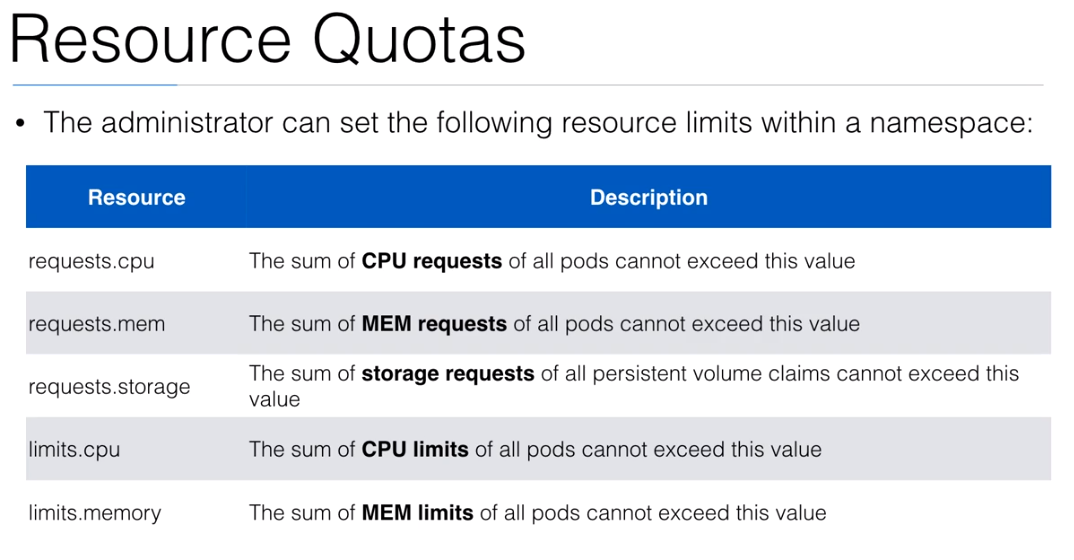

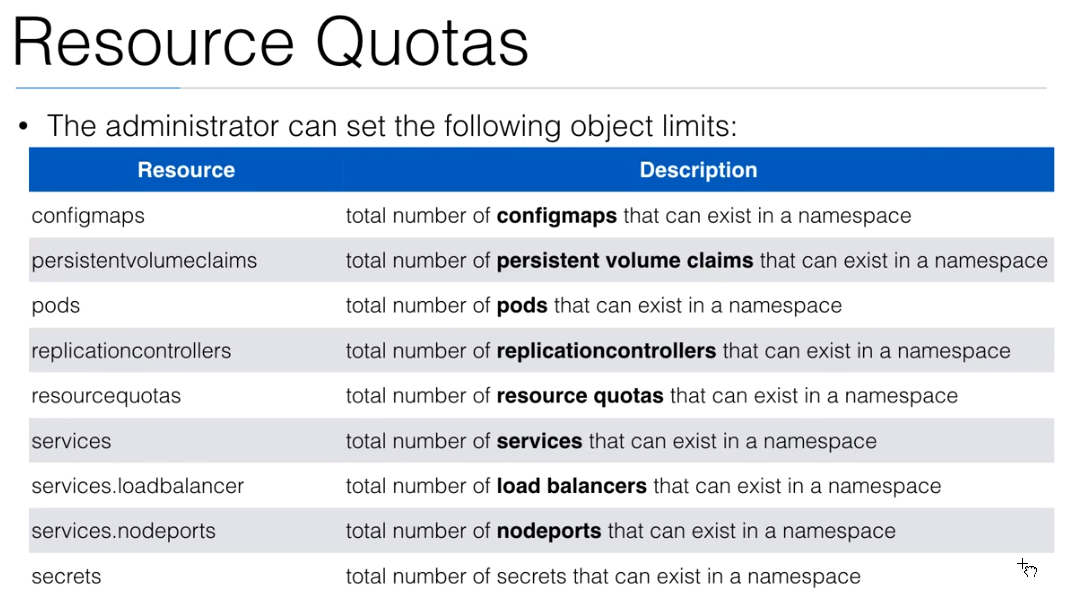

81. Resource Quotas

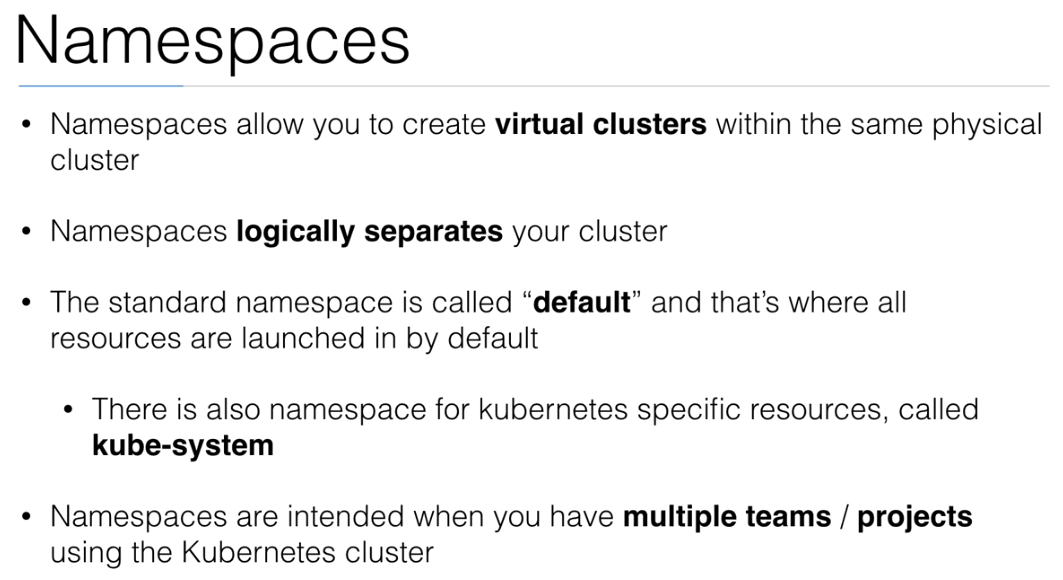

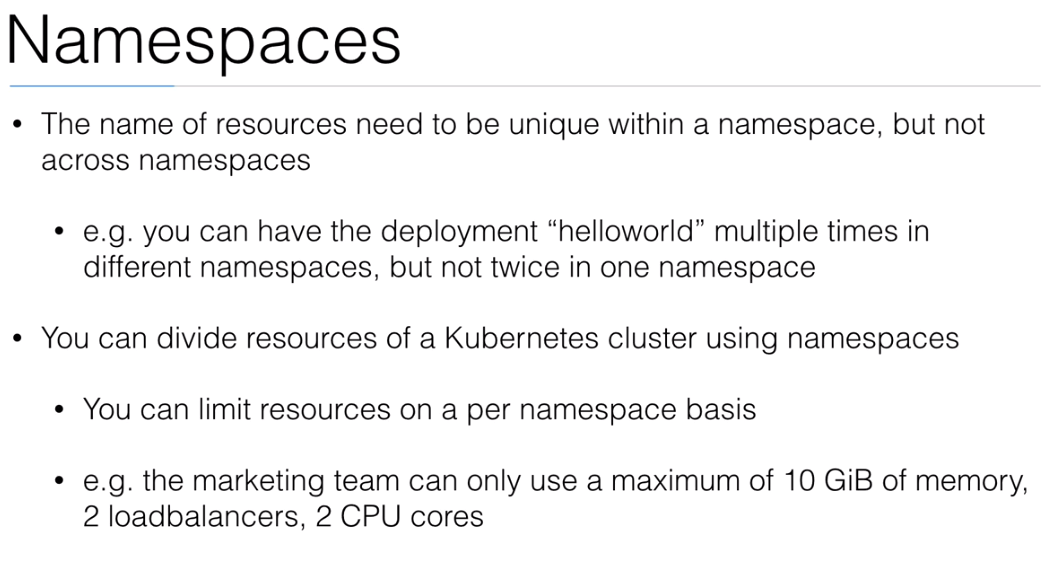

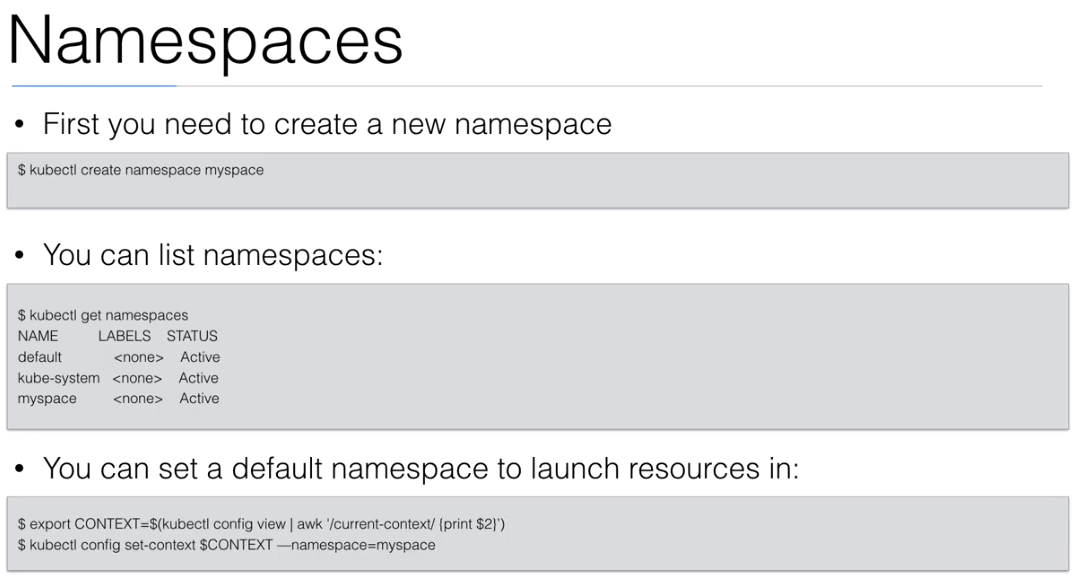

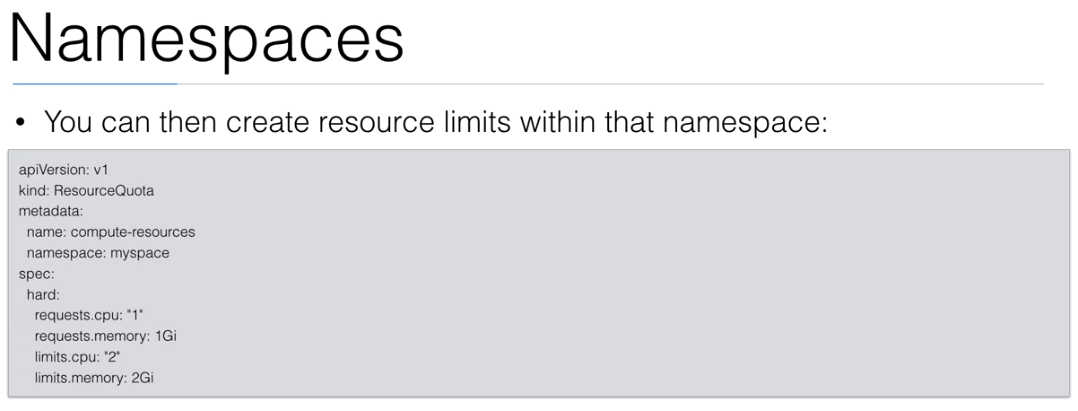

82. Namespaces

83. Demo: Namespace quotas

- We are going to use the `resourcequotas/resourcequota.yml` document to create the `myspace` namespace and two `ResourceQuota` objects, `compute-quota` and `object-quota`.

resourcequotas/resourcequota.yml

apiVersion: v1

kind: Namespace

metadata:

name: myspace

---

apiVersion: v1

kind: ResourceQuota

metadata:

name: compute-quota

namespace: myspace

spec:

hard:

requests.cpu: "1"

requests.memory: 1Gi

limits.cpu: "2"

limits.memory: 2Gi

---

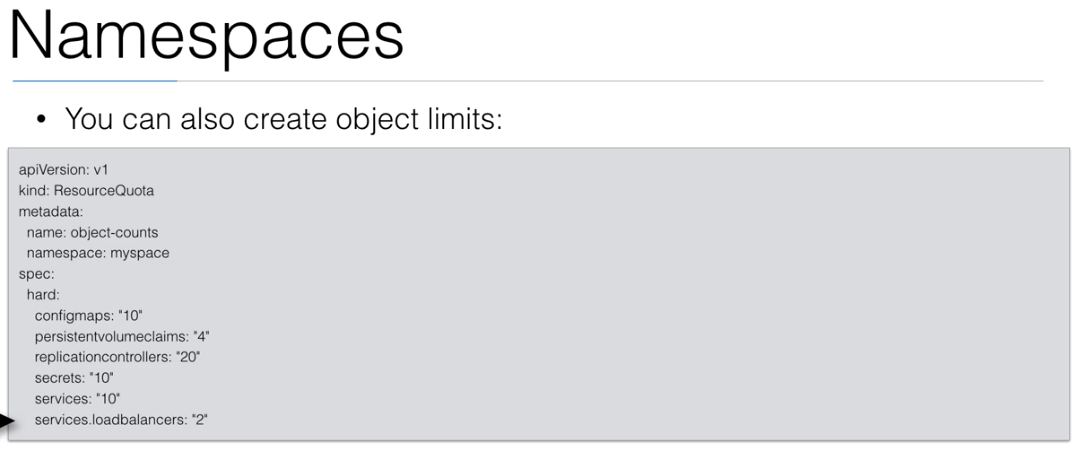

apiVersion: v1

kind: ResourceQuota

metadata:

name: object-quota

namespace: myspace

spec:

hard:

configmaps: "10"

persistentvolumeclaims: "4"

replicationcontrollers: "20"

secrets: "10"

services: "10"

services.loadbalancers: "2"

```
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl create -f resourcequota.yml
namespace/myspace created
resourcequota/compute-quota created
resourcequota/object-quota created
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get namespace
NAME          STATUS   AGE
default       Active   33d
kube-public   Active   33d
kube-system   Active   33d
myspace       Active   48s
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get resourcequota
No resources found.
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get resourcequota --namespace=myspace
NAME            CREATED AT
compute-quota   2019-04-02T05:02:50Z
object-quota    2019-04-02T05:02:50Z
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$
```

- We are going to use the `resourcequotas/helloworld-no-quotas.yml` document to create a deployment whose container defines no resource requests or limits.

resourcequotas/helloworld-no-quotas.yml

```yaml
# extensions/v1beta1 Deployments were removed in Kubernetes 1.16;
# on newer clusters use apps/v1 and add spec.selector.matchLabels.
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: helloworld-deployment
  namespace: myspace
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: helloworld
    spec:
      containers:
      - name: k8s-demo
        image: wardviaene/k8s-demo
        ports:
        - name: nodejs-port
          containerPort: 3000
```

```
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl create -f helloworld-no-quotas.yml
deployment.extensions/helloworld-deployment created
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get deploy --namespace=myspace
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
helloworld-deployment   0/3     0            0           44s
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get rs --namespace=myspace
NAME                              DESIRED   CURRENT   READY   AGE
helloworld-deployment-969d5cbd5   3         0         0       2m49s
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl describe rs/helloworld-deployment-969d5cbd5 --namespace=myspace
Name:           helloworld-deployment-969d5cbd5
Namespace:      myspace
Selector:       app=helloworld,pod-template-hash=969d5cbd5
Labels:         app=helloworld
                pod-template-hash=969d5cbd5
Annotations:    deployment.kubernetes.io/desired-replicas: 3
                deployment.kubernetes.io/max-replicas: 4
                deployment.kubernetes.io/revision: 1
Controlled By:  Deployment/helloworld-deployment
Replicas:       0 current / 3 desired
Pods Status:    0 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  app=helloworld
           pod-template-hash=969d5cbd5
  Containers:
   k8s-demo:
    Image:        wardviaene/k8s-demo
    Port:         3000/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type             Status  Reason
  ----             ------  ------
  ReplicaFailure   True    FailedCreate
Events:
  Type     Reason        Age                  From                   Message
  ----     ------        ----                 ----                   -------
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-226vp" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-msrr7" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-7nmct" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-l2t2z" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-8l75l" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-vskfw" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-l5kmc" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m57s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-jxb7q" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  3m56s                replicaset-controller  Error creating: pods "helloworld-deployment-969d5cbd5-h8sls" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
  Warning  FailedCreate  74s (x7 over 3m55s)  replicaset-controller  (combined from similar events): Error creating: pods "helloworld-deployment-969d5cbd5-5vvlm" is forbidden: failed quota: compute-quota: must specify limits.cpu,limits.memory,requests.cpu,requests.memory
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl delete deploy/helloworld-deployment --namespace=myspace
deployment.extensions "helloworld-deployment" deleted
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get rs --namespace=myspace
No resources found.
```
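Every event above has the same cause: because `compute-quota` tracks `requests.cpu`, `requests.memory`, `limits.cpu` and `limits.memory`, a pod that leaves any of them unset is rejected at admission. The Python sketch below is a simplified model of that rule, not the actual ResourceQuota admission code; the function name `missing_quota_fields` and the dict layout of the containers are illustrative assumptions.

```python
# Simplified model (not the real admission plugin) of the "must specify ..." rejection:
# every requests.*/limits.* key tracked by a ResourceQuota must be set on every container.


def missing_quota_fields(quota_hard, containers):
    """Return the sorted quota keys that at least one container leaves unset."""
    missing = set()
    for key in quota_hard:
        kind, _, resource = key.partition(".")  # "limits.cpu" -> ("limits", ".", "cpu")
        if kind not in ("requests", "limits"):
            continue  # object-count keys such as "configmaps" do not apply per container
        for container in containers:
            if resource not in container.get("resources", {}).get(kind, {}):
                missing.add(key)
    return sorted(missing)


# Hard limits of compute-quota from resourcequota.yml.
compute_quota = {"requests.cpu": "1", "requests.memory": "1Gi",
                 "limits.cpu": "2", "limits.memory": "2Gi"}

# Containers as written in helloworld-no-quotas.yml and helloworld-with-quotas.yml.
no_quotas = [{"name": "k8s-demo", "image": "wardviaene/k8s-demo"}]
with_quotas = [{
    "name": "k8s-demo",
    "image": "wardviaene/k8s-demo",
    "resources": {
        "requests": {"cpu": "200m", "memory": "0.5Gi"},
        "limits": {"cpu": "400m", "memory": "1Gi"},
    },
}]

print("must specify " + ",".join(missing_quota_fields(compute_quota, no_quotas)))
# must specify limits.cpu,limits.memory,requests.cpu,requests.memory
print(missing_quota_fields(compute_quota, with_quotas))  # []
```

The sorted key list happens to match the order printed in the `FailedCreate` events, which makes the model easy to compare against the real output.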

- We are going to use the `resourcequotas/helloworld-with-quotas.yml` document to create a deployment whose container defines resource requests and limits.

resourcequotas/helloworld-with-quotas.yml

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: helloworld-deployment
  namespace: myspace
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: helloworld
    spec:
      containers:
      - name: k8s-demo
        image: wardviaene/k8s-demo
        ports:
        - name: nodejs-port
          containerPort: 3000
        resources:
          requests:
            cpu: 200m
            memory: 0.5Gi
          limits:
            cpu: 400m
            memory: 1Gi
```

```
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl create -f helloworld-with-quotas.yml
deployment.extensions/helloworld-deployment created
```
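The manifest writes the memory request as `0.5Gi`, while `kubectl describe` reports it as `512Mi`. The `Ki`/`Mi`/`Gi` suffixes are powers of two, so both spell 536,870,912 bytes, and the `m` suffix on CPU means thousandths of a core. The Python sketch below is a hypothetical `parse_quantity` helper that understands only the suffixes used in this demo plus a few common ones; it is not the parser Kubernetes itself uses (exponent suffixes such as `Pi`, `Ei` or `E` are not handled).

```python
from decimal import Decimal

# Power-of-two suffixes (typical for memory) and decimal suffixes (m = milli).
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"m": Decimal("0.001"), "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity string such as '200m' or '0.5Gi' to a plain Decimal."""
    for suffixes in (BINARY_SUFFIXES, DECIMAL_SUFFIXES):
        for suffix, factor in suffixes.items():
            if quantity.endswith(suffix):
                return Decimal(quantity[: -len(suffix)]) * factor
    return Decimal(quantity)  # no suffix: a plain number of bytes or cores


print(parse_quantity("0.5Gi") == parse_quantity("512Mi"))  # True
print(parse_quantity("200m") == Decimal("0.2"))  # True
```

Using `Decimal` instead of `float` keeps values such as `0.5Gi` and `200m` exact, so equality checks like the ones above are reliable.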

- Even though 3 replicas were requested, only 2 pods have been created: a third pod would push `requests.memory` and `limits.memory` beyond the hard limits (1Gi and 2Gi) defined in the `compute-quota` `ResourceQuota`.

```
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get pod --namespace=myspace
NAME                                    READY   STATUS    RESTARTS   AGE
helloworld-deployment-bbf665cb8-nxsmh   1/1     Running   0          24s
helloworld-deployment-bbf665cb8-sn27t   1/1     Running   0          24s
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get rs --namespace=myspace
NAME                              DESIRED   CURRENT   READY   AGE
helloworld-deployment-bbf665cb8   3         2         2       3m57s
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl describe rs/helloworld-deployment-bbf665cb8 --namespace=myspace
Name:           helloworld-deployment-bbf665cb8
Namespace:      myspace
Selector:       app=helloworld,pod-template-hash=bbf665cb8
Labels:         app=helloworld
                pod-template-hash=bbf665cb8
Annotations:    deployment.kubernetes.io/desired-replicas: 3
                deployment.kubernetes.io/max-replicas: 4
                deployment.kubernetes.io/revision: 1
Controlled By:  Deployment/helloworld-deployment
Replicas:       2 current / 3 desired
Pods Status:    2 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  app=helloworld
           pod-template-hash=bbf665cb8
  Containers:
   k8s-demo:
    Image:      wardviaene/k8s-demo
    Port:       3000/TCP
    Host Port:  0/TCP
    Limits:
      cpu:     400m
      memory:  1Gi
    Requests:
      cpu:        200m
      memory:     512Mi
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type             Status  Reason
  ----             ------  ------
  ReplicaFailure   True    FailedCreate
Events:
  Type     Reason            Age                  From                   Message
  ----     ------            ----                 ----                   -------
  Normal   SuccessfulCreate  4m26s                replicaset-controller  Created pod: helloworld-deployment-bbf665cb8-nxsmh
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-mkzww" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Normal   SuccessfulCreate  4m26s                replicaset-controller  Created pod: helloworld-deployment-bbf665cb8-sn27t
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-9wz9z" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-nmqkc" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-vrf27" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-vhd94" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-5mpf2" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m26s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-fbm29" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m25s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-62hdr" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      4m25s                replicaset-controller  Error creating: pods "helloworld-deployment-bbf665cb8-7mthr" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
  Warning  FailedCreate      96s (x8 over 4m25s)  replicaset-controller  (combined from similar events): Error creating: pods "helloworld-deployment-bbf665cb8-cg8bx" is forbidden: exceeded quota: compute-quota, requested: limits.memory=1Gi,requests.memory=512Mi, used: limits.memory=2Gi,requests.memory=1Gi, limited: limits.memory=2Gi,requests.memory=1Gi
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get quota --namespace=myspace
NAME            CREATED AT
compute-quota   2019-04-02T05:02:50Z
object-quota    2019-04-02T05:02:50Z
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl describe quota/compute-quota --namespace=myspace
Name:            compute-quota
Namespace:       myspace
Resource         Used  Hard
--------         ----  ----
limits.cpu       800m  2
limits.memory    2Gi   2Gi
requests.cpu     400m  1
requests.memory  1Gi   1Gi
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl delete -f helloworld-with-quotas.yml
deployment.extensions "helloworld-deployment" deleted
```
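The `Used` column above explains why the ReplicaSet stops at two pods. The Python sketch below replays that arithmetic, assuming CPU is expressed in millicores and memory in MiB so every value stays an integer (1Gi = 1024Mi, 0.5Gi = 512Mi). The helper `pods_that_fit` is hypothetical and ignores the retries the ReplicaSet controller keeps making.

```python
# compute-quota hard limits and the per-pod values of helloworld-with-quotas.yml,
# in millicores (CPU) and MiB (memory).
QUOTA_HARD = {
    "requests.cpu": 1000,
    "requests.memory": 1024,
    "limits.cpu": 2000,
    "limits.memory": 2048,
}
PER_POD = {
    "requests.cpu": 200,
    "requests.memory": 512,
    "limits.cpu": 400,
    "limits.memory": 1024,
}


def pods_that_fit(hard, per_pod, desired):
    """Admit pods one by one until the next one would exceed any hard limit."""
    used = {key: 0 for key in hard}
    admitted = 0
    for _ in range(desired):
        if any(used[key] + per_pod[key] > hard[key] for key in hard):
            break
        for key in hard:
            used[key] += per_pod[key]
        admitted += 1
    return admitted, used


admitted, used = pods_that_fit(QUOTA_HARD, PER_POD, desired=3)
# Quota keys that would be exceeded by one more pod.
blocking = [key for key in QUOTA_HARD if used[key] + PER_POD[key] > QUOTA_HARD[key]]
print(admitted)  # 2
print(used)      # same figures as the "Used" column of kubectl describe quota
print(blocking)  # ['requests.memory', 'limits.memory']
```

CPU still has headroom (600m of 1 core requested for three pods), so memory alone blocks the third replica, which matches the `exceeded quota` events.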

- We are going to use the `resourcequotas/defaults.yml` document to create a `LimitRange` that assigns default requests and limits to containers in the `myspace` namespace.

resourcequotas/defaults.yml

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limits
  namespace: myspace
spec:
  limits:
  - default:
      cpu: 200m
      memory: 512Mi
    defaultRequest:
      cpu: 100m
      memory: 256Mi
    type: Container
```

```
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl create -f defaults.yml
limitrange/limits created
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl describe limits/limits --namespace=myspace
Name:       limits
Namespace:  myspace
Type        Resource  Min  Max  Default Request  Default Limit  Max Limit/Request Ratio
----        --------  ---  ---  ---------------  -------------  -----------------------
Container   memory    -    -    256Mi            512Mi          -
Container   cpu       -    -    100m             200m           -
```
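With this `LimitRange`, a container that omits its requests and limits receives `100m`/`256Mi` as requests and `200m`/`512Mi` as limits, so three replicas of `helloworld-no-quotas.yml` total 300m/768Mi of requests and 600m/1536Mi of limits, inside `compute-quota`. The Python sketch below models that defaulting, assuming CPU in millicores and memory in MiB; the helper name `apply_limitrange_defaults` is hypothetical. The rule that a container setting only a limit gets a request equal to that limit follows the behaviour described in the Kubernetes documentation on namespace default requests and limits.

```python
# Defaults from resourcequotas/defaults.yml, in millicores (CPU) and MiB (memory).
DEFAULT_LIMIT = {"cpu": 200, "memory": 512}
DEFAULT_REQUEST = {"cpu": 100, "memory": 256}

# compute-quota hard limits in the same units.
QUOTA_HARD = {"requests.cpu": 1000, "requests.memory": 1024,
              "limits.cpu": 2000, "limits.memory": 2048}


def apply_limitrange_defaults(container):
    """Fill in missing requests/limits the way a Container-type LimitRange does."""
    resources = container.setdefault("resources", {})
    limits = resources.setdefault("limits", {})
    requests = resources.setdefault("requests", {})
    for res in DEFAULT_LIMIT:
        had_limit = res in limits
        limits.setdefault(res, DEFAULT_LIMIT[res])
        if res not in requests:
            # A container that set only a limit gets a request equal to that limit.
            requests[res] = limits[res] if had_limit else DEFAULT_REQUEST[res]
    return container


# The container from helloworld-no-quotas.yml sets no resources at all.
pod = apply_limitrange_defaults({"name": "k8s-demo", "image": "wardviaene/k8s-demo"})
replicas = 3
totals = {
    f"{kind}.{res}": replicas * pod["resources"][kind][res]
    for kind in ("requests", "limits")
    for res in ("cpu", "memory")
}
print(pod["resources"])
print(totals)
print(all(totals[key] <= QUOTA_HARD[key] for key in QUOTA_HARD))  # True
```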

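- With the `LimitRange` in place, the deployment without resource settings is now created successfully, because every container receives the default requests and limits: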

```
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl create -f helloworld-no-quotas.yml
deployment.extensions/helloworld-deployment created
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl get pods --namespace=myspace
NAME                                    READY   STATUS    RESTARTS   AGE
helloworld-deployment-969d5cbd5-57g9j   1/1     Running   0          22s
helloworld-deployment-969d5cbd5-qchpx   1/1     Running   0          22s
helloworld-deployment-969d5cbd5-zp655   1/1     Running   0          23s
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl delete -f helloworld-no-quotas.yml
deployment.extensions "helloworld-deployment" deleted
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl delete -f defaults.yml
limitrange "limits" deleted
ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/resourcequotas$ kubectl delete -f resourcequota.yml
namespace "myspace" deleted
resourcequota "compute-quota" deleted
resourcequota "object-quota" deleted
```

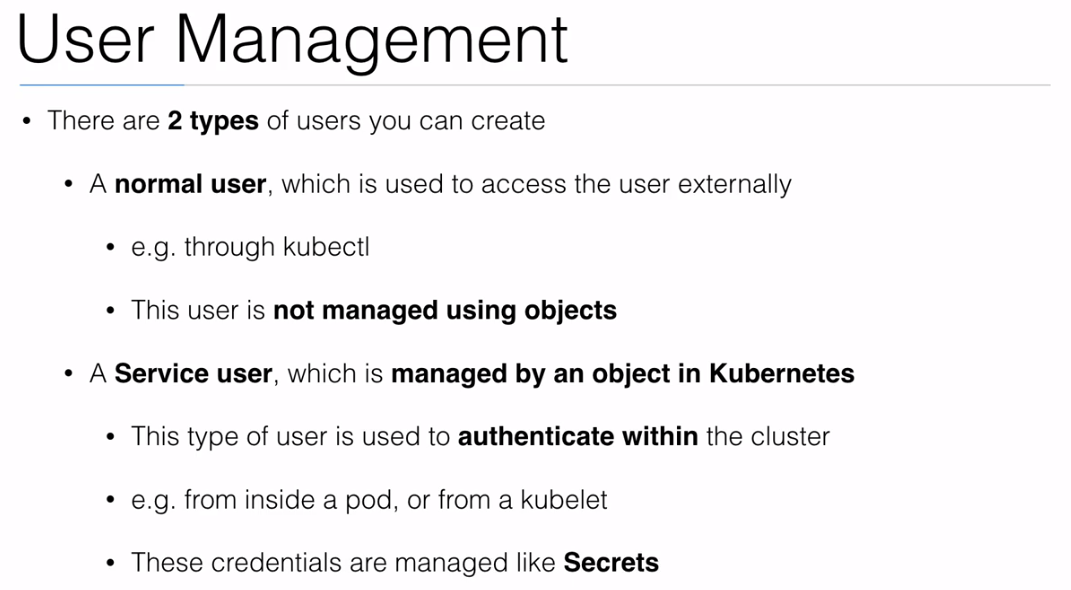

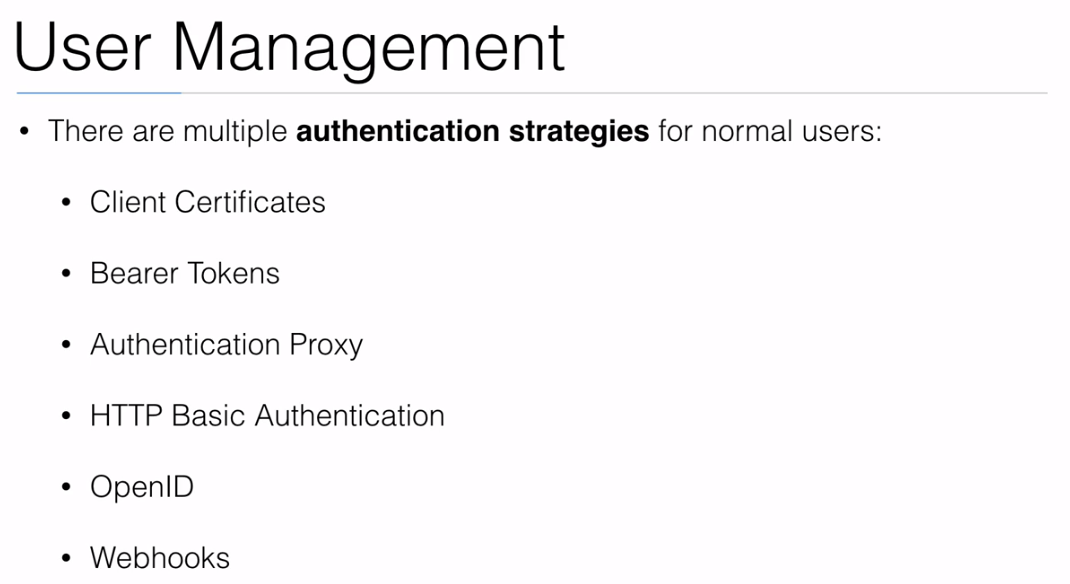

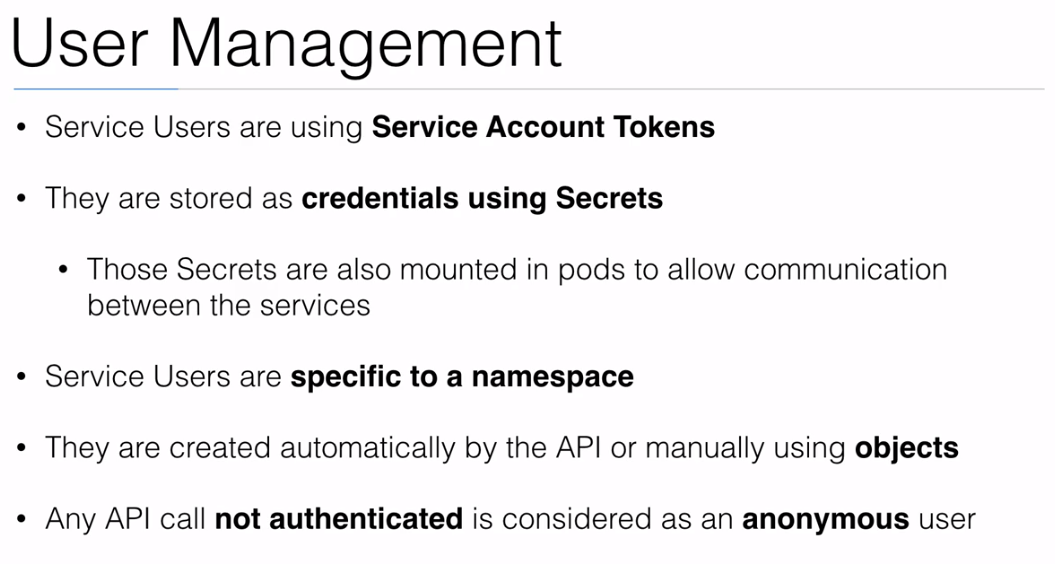

84. User Management

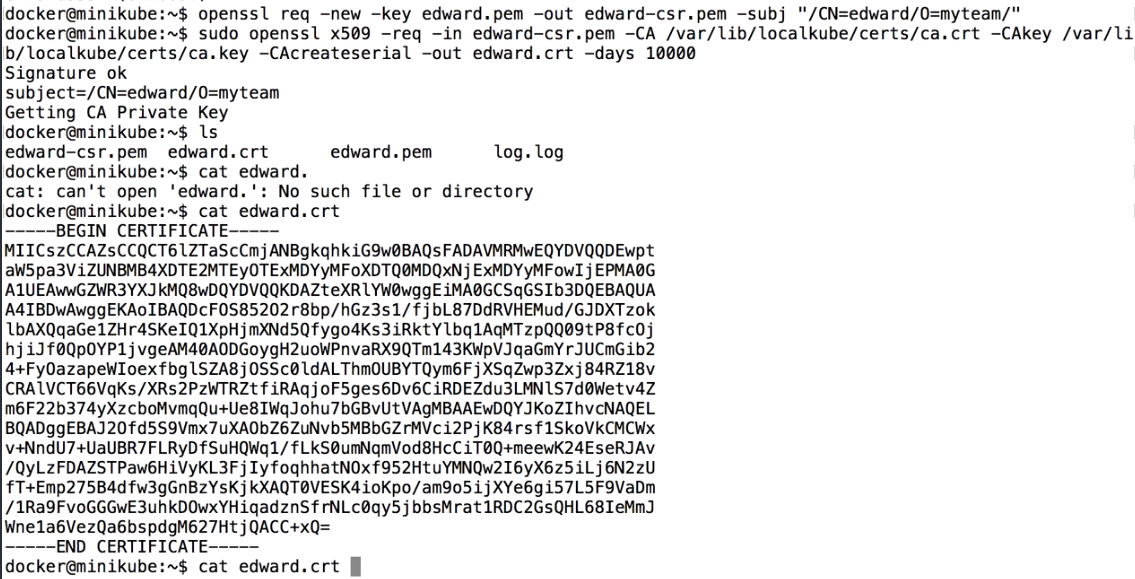

85. Demo: Adding Users

- We are going to generate a new RSA key

```
ubuntu@kubernetes-master:~$ openssl genrsa -out edward.pem 2048
Generating RSA private key, 2048 bit long modulus
...+++
........................................................................+++
e is 65537 (0x10001)
```

- We are going to create a certificate signing request (CSR) whose subject carries the user login in the common name (`CN=edward`) and the group in the organization (`O=myteam`):

```
ubuntu@kubernetes-master:~$ openssl req -new -key edward.pem -out edward-csr.pem -subj "/CN=edward/O=myteam/"
ubuntu@kubernetes-master:~$
```
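With client-certificate authentication, Kubernetes takes the user name from the certificate's common name (`CN`) and the group memberships from its organization (`O`) fields, so this certificate will authenticate as user `edward` in group `myteam`. The Python sketch below illustrates that mapping with a hypothetical `identity_from_subject` helper; it is a plain string parser for the `-subj` format used above and does not handle escaped `/` characters. The second example subject (`jane`, `dev`, `ops`) is made up to show multiple groups.

```python
def identity_from_subject(subject: str):
    """Map an OpenSSL-style subject such as '/CN=edward/O=myteam/' to (user, groups)."""
    user, groups = None, []
    for part in subject.strip("/").split("/"):
        if not part:
            continue
        key, _, value = part.partition("=")
        if key == "CN":
            user = value  # CN becomes the Kubernetes user name
        elif key == "O":
            groups.append(value)  # every O field becomes a group
    return user, groups


print(identity_from_subject("/CN=edward/O=myteam/"))  # ('edward', ['myteam'])
print(identity_from_subject("/CN=jane/O=dev/O=ops"))  # ('jane', ['dev', 'ops'])
```

The group matters later for RBAC, because role bindings can target a group such as `myteam` instead of individual users.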

- We are going to sign the request with the cluster CA (`ca.crt` and `ca.key`) to create the user certificate, valid for 10000 days:

```
openssl x509 -req -in edward-csr.pem -CA ca.crt -CAkey ca.key -CAcreateserial -out edward.crt -days 10000
```

- This does not work on this server because `ca.crt` cannot be found.

- We need to copy the certificate and the private key we created

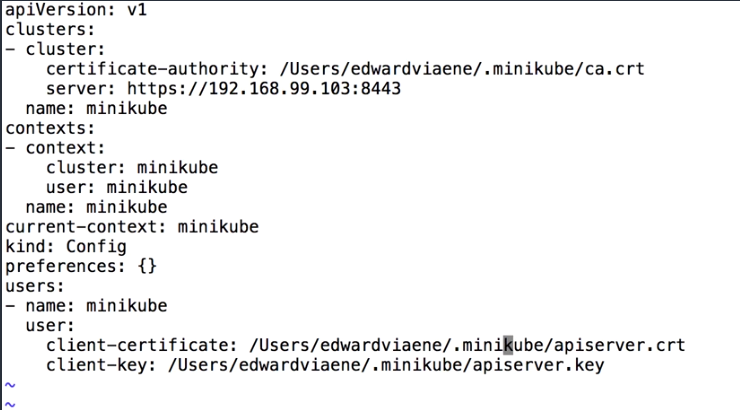

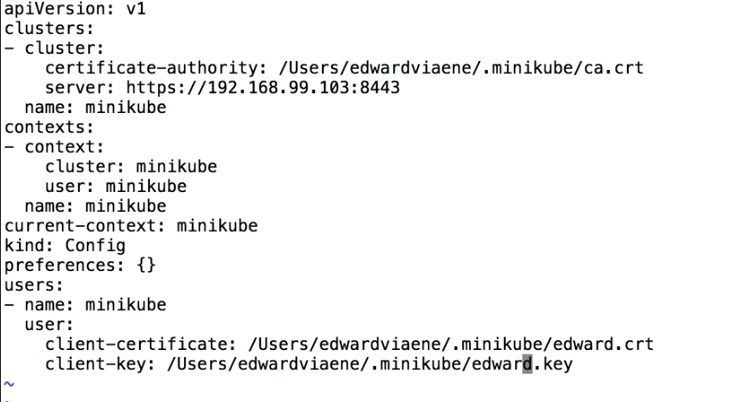

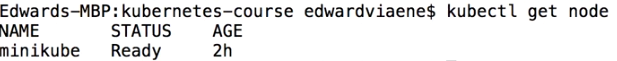

- We need to modify the `~/.kube/config` document to use the new certificate and key

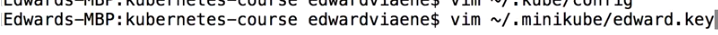

- We need to paste the key in the

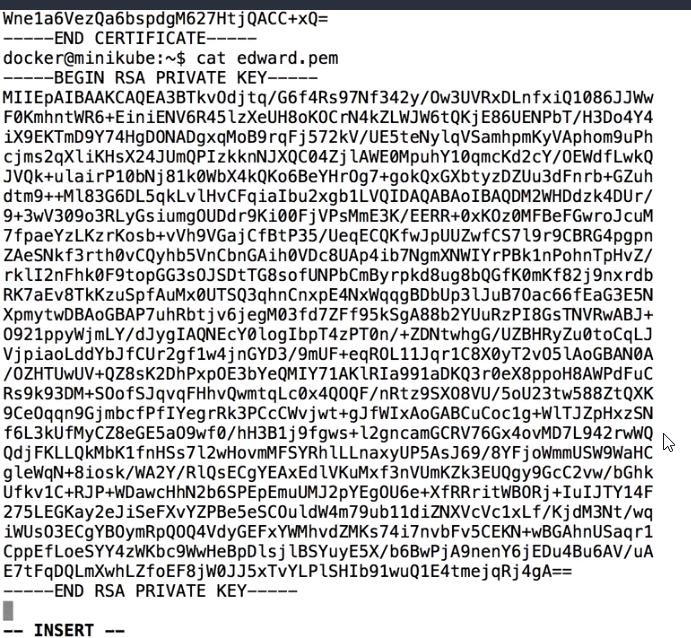

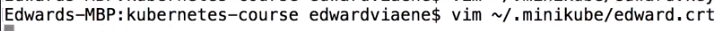

~/.minikube/edward.keydocument

- We need to paste the key in the

~/.minikube/edward.crtdocument

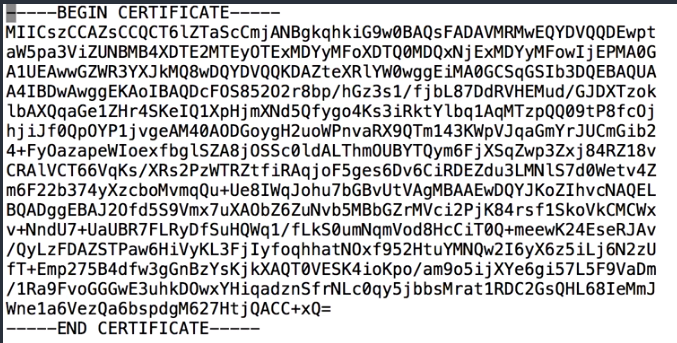

- Now `kubectl` accesses the cluster as the new user, authenticating with the new certificate

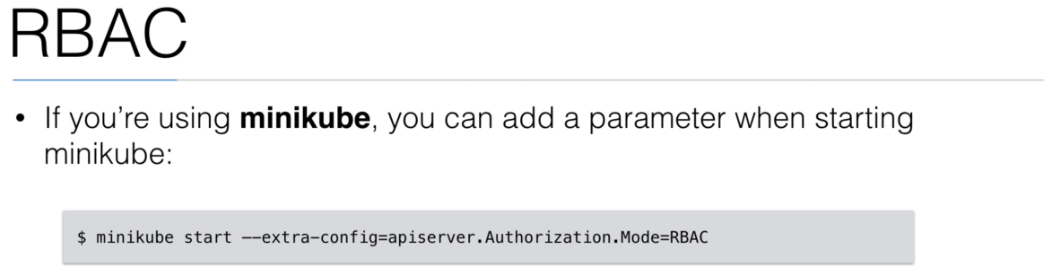

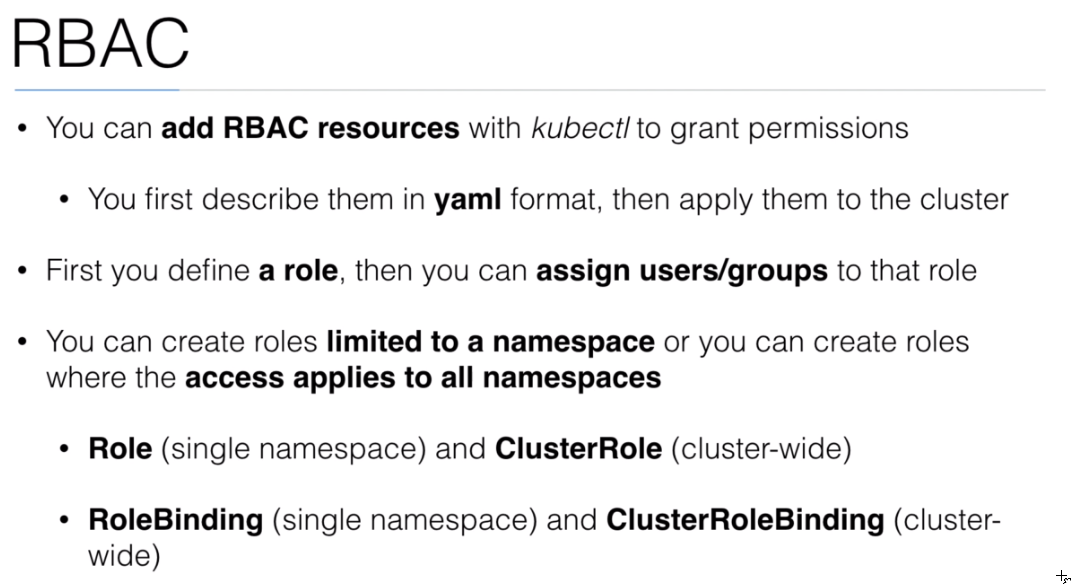
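The kubeconfig change boils down to a `users` entry that points to the client certificate and key, plus a context that pairs that user with the cluster. The Python sketch below builds those fragments as a dict and prints them as JSON (which is also valid YAML). The context name `edward` and the cluster name `minikube` are assumptions, the helper `kubeconfig_user_entries` is hypothetical, and the output still has to be merged into the existing `~/.kube/config` by hand.

```python
import json
import os


def kubeconfig_user_entries(user, cert_path, key_path, cluster):
    """Build kubeconfig 'users' and 'contexts' entries for a client-certificate user."""
    return {
        "users": [
            {
                "name": user,
                "user": {
                    # Absolute paths avoid any ambiguity about how '~' is resolved.
                    "client-certificate": os.path.expanduser(cert_path),
                    "client-key": os.path.expanduser(key_path),
                },
            }
        ],
        "contexts": [
            {
                "name": user,  # hypothetical: the context is named after the user
                "context": {"cluster": cluster, "user": user},
            }
        ],
        "current-context": user,
    }


fragment = kubeconfig_user_entries(
    "edward", "~/.minikube/edward.crt", "~/.minikube/edward.key", cluster="minikube"
)
print(json.dumps(fragment, indent=2))
```

The same result can be reached without editing the file by running `kubectl config set-credentials edward --client-certificate=$HOME/.minikube/edward.crt --client-key=$HOME/.minikube/edward.key`, then `kubectl config set-context edward --cluster=minikube --user=edward` and `kubectl config use-context edward`.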

86. RBAC

87. Demo: RBAC

- We need to create the AWS cluster again with kops.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops create cluster --name=kubernetes.peelmicro.com --state=s3://kubernetes.peelmicro.com --zones=eu-central-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=kubernetes.peelmicro.com

I0403 04:22:30.149454 27605 create_cluster.go:480] Inferred --cloud=aws from zone "eu-central-1a"

I0403 04:22:30.268815 27605 subnets.go:184] Assigned CIDR 172.20.32.0/19 to subnet eu-central-1a

I0403 04:22:30.922684 27605 create_cluster.go:1351] Using SSH public key: /root/.ssh/id_rsa.pub

Previewing changes that will be made:

I0403 04:22:32.892966 27605 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0403 04:22:33.269317 27605 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0403 04:22:33.461266 27605 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0403 04:22:33.594023 27605 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0403 04:22:33.630235 27605 executor.go:103] Tasks: 73 done / 73 total; 0 can run

Will create resources:

AutoscalingGroup/master-eu-central-1a.masters.kubernetes.peelmicro.com

MinSize 1

MaxSize 1

Subnets [name:eu-central-1a.kubernetes.peelmicro.com]

Tags {k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: master-eu-central-1a, k8s.io/role/master: 1, Name: master-eu-central-1a.masters.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

Granularity 1Minute

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

LaunchConfiguration name:master-eu-central-1a.masters.kubernetes.peelmicro.com

AutoscalingGroup/nodes.kubernetes.peelmicro.com

MinSize 2

MaxSize 2

Subnets [name:eu-central-1a.kubernetes.peelmicro.com]

Tags {k8s.io/role/node: 1, Name: nodes.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: nodes}

Granularity 1Minute

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

LaunchConfiguration name:nodes.kubernetes.peelmicro.com

DHCPOptions/kubernetes.peelmicro.com

DomainName eu-central-1.compute.internal

DomainNameServers AmazonProvidedDNS

Shared false

Tags {kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

EBSVolume/a.etcd-events.kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

VolumeType gp2

SizeGB 20

Encrypted false

Tags {k8s.io/etcd/events: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: a.etcd-events.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

EBSVolume/a.etcd-main.kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

VolumeType gp2

SizeGB 20

Encrypted false

Tags {kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: a.etcd-main.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, k8s.io/etcd/main: a/a, k8s.io/role/master: 1}

IAMInstanceProfile/masters.kubernetes.peelmicro.com

Shared false

IAMInstanceProfile/nodes.kubernetes.peelmicro.com

Shared false

IAMInstanceProfileRole/masters.kubernetes.peelmicro.com

InstanceProfile name:masters.kubernetes.peelmicro.com id:masters.kubernetes.peelmicro.com

Role name:masters.kubernetes.peelmicro.com

IAMInstanceProfileRole/nodes.kubernetes.peelmicro.com

InstanceProfile name:nodes.kubernetes.peelmicro.com id:nodes.kubernetes.peelmicro.com

Role name:nodes.kubernetes.peelmicro.com

IAMRole/masters.kubernetes.peelmicro.com

ExportWithID masters

IAMRole/nodes.kubernetes.peelmicro.com

ExportWithID nodes

IAMRolePolicy/masters.kubernetes.peelmicro.com

Role name:masters.kubernetes.peelmicro.com

IAMRolePolicy/nodes.kubernetes.peelmicro.com

Role name:nodes.kubernetes.peelmicro.com

InternetGateway/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

Shared false

Tags {kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

Keypair/apiserver-aggregator

Signer name:apiserver-aggregator-ca id:cn=apiserver-aggregator-ca

Subject cn=aggregator

Type client

Format v1alpha2

Keypair/apiserver-aggregator-ca

Subject cn=apiserver-aggregator-ca

Type ca

Format v1alpha2

Keypair/apiserver-proxy-client

Signer name:ca id:cn=kubernetes

Subject cn=apiserver-proxy-client

Type client

Format v1alpha2

Keypair/ca

Subject cn=kubernetes

Type ca

Format v1alpha2

Keypair/kops

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kops

Type client

Format v1alpha2

Keypair/kube-controller-manager

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-controller-manager

Type client

Format v1alpha2

Keypair/kube-proxy

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-proxy

Type client

Format v1alpha2

Keypair/kube-scheduler

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-scheduler

Type client

Format v1alpha2

Keypair/kubecfg

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kubecfg

Type client

Format v1alpha2

Keypair/kubelet

Signer name:ca id:cn=kubernetes

Subject o=system:nodes,cn=kubelet

Type client

Format v1alpha2

Keypair/kubelet-api

Signer name:ca id:cn=kubernetes

Subject cn=kubelet-api

Type client

Format v1alpha2

Keypair/master

AlternateNames [100.64.0.1, 127.0.0.1, api.internal.kubernetes.peelmicro.com, api.kubernetes.peelmicro.com, kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local]

Signer name:ca id:cn=kubernetes

Subject cn=kubernetes-master

Type server

Format v1alpha2

LaunchConfiguration/master-eu-central-1a.masters.kubernetes.peelmicro.com

ImageID kope.io/k8s-1.10-debian-jessie-amd64-hvm-ebs-2018-08-17

InstanceType t2.micro

SSHKey name:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e id:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

SecurityGroups [name:masters.kubernetes.peelmicro.com]

AssociatePublicIP true

IAMInstanceProfile name:masters.kubernetes.peelmicro.com id:masters.kubernetes.peelmicro.com

RootVolumeSize 64

RootVolumeType gp2

SpotPrice

LaunchConfiguration/nodes.kubernetes.peelmicro.com

ImageID kope.io/k8s-1.10-debian-jessie-amd64-hvm-ebs-2018-08-17

InstanceType t2.micro

SSHKey name:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e id:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

SecurityGroups [name:nodes.kubernetes.peelmicro.com]

AssociatePublicIP true

IAMInstanceProfile name:nodes.kubernetes.peelmicro.com id:nodes.kubernetes.peelmicro.com

RootVolumeSize 128

RootVolumeType gp2

SpotPrice

ManagedFile/kubernetes.peelmicro.com-addons-bootstrap

Location addons/bootstrap-channel.yaml

ManagedFile/kubernetes.peelmicro.com-addons-core.addons.k8s.io

Location addons/core.addons.k8s.io/v1.4.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-dns-controller.addons.k8s.io-k8s-1.6

Location addons/dns-controller.addons.k8s.io/k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-dns-controller.addons.k8s.io-pre-k8s-1.6

Location addons/dns-controller.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-kube-dns.addons.k8s.io-k8s-1.6

Location addons/kube-dns.addons.k8s.io/k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-kube-dns.addons.k8s.io-pre-k8s-1.6

Location addons/kube-dns.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-limit-range.addons.k8s.io

Location addons/limit-range.addons.k8s.io/v1.5.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-rbac.addons.k8s.io-k8s-1.8

Location addons/rbac.addons.k8s.io/k8s-1.8.yaml

ManagedFile/kubernetes.peelmicro.com-addons-storage-aws.addons.k8s.io-v1.6.0

Location addons/storage-aws.addons.k8s.io/v1.6.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-storage-aws.addons.k8s.io-v1.7.0

Location addons/storage-aws.addons.k8s.io/v1.7.0.yaml

Route/0.0.0.0/0

RouteTable name:kubernetes.peelmicro.com

CIDR 0.0.0.0/0

InternetGateway name:kubernetes.peelmicro.com

RouteTable/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, kubernetes.io/kops/role: public}

RouteTableAssociation/eu-central-1a.kubernetes.peelmicro.com

RouteTable name:kubernetes.peelmicro.com

Subnet name:eu-central-1a.kubernetes.peelmicro.com

SSHKey/kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

KeyFingerprint 9a:fa:b7:ad:4e:62:1b:16:a4:6b:a5:8f:8f:86:59:f6

Secret/admin

Secret/kube

Secret/kube-proxy

Secret/kubelet

Secret/system:controller_manager

Secret/system:dns

Secret/system:logging

Secret/system:monitoring

Secret/system:scheduler

SecurityGroup/masters.kubernetes.peelmicro.com

Description Security group for masters

VPC name:kubernetes.peelmicro.com

RemoveExtraRules [port=22, port=443, port=2380, port=2381, port=4001, port=4002, port=4789, port=179]

Tags {Name: masters.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

SecurityGroup/nodes.kubernetes.peelmicro.com

Description Security group for nodes

VPC name:kubernetes.peelmicro.com

RemoveExtraRules [port=22]

Tags {Name: nodes.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

SecurityGroupRule/all-master-to-master

SecurityGroup name:masters.kubernetes.peelmicro.com

SourceGroup name:masters.kubernetes.peelmicro.com

SecurityGroupRule/all-master-to-node

SecurityGroup name:nodes.kubernetes.peelmicro.com

SourceGroup name:masters.kubernetes.peelmicro.com

SecurityGroupRule/all-node-to-node

SecurityGroup name:nodes.kubernetes.peelmicro.com

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/https-external-to-master-0.0.0.0/0

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 443

ToPort 443

SecurityGroupRule/master-egress

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-egress

SecurityGroup name:nodes.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-to-master-tcp-1-2379

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 1

ToPort 2379

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-tcp-2382-4000

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 2382

ToPort 4000

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-tcp-4003-65535

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 4003

ToPort 65535

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-udp-1-65535

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol udp

FromPort 1

ToPort 65535

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/ssh-external-to-master-0.0.0.0/0

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

SecurityGroupRule/ssh-external-to-node-0.0.0.0/0

SecurityGroup name:nodes.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

Subnet/eu-central-1a.kubernetes.peelmicro.com

ShortName eu-central-1a

VPC name:kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

CIDR 172.20.32.0/19

Shared false

Tags {KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, kubernetes.io/role/elb: 1, SubnetType: Public, Name: eu-central-1a.kubernetes.peelmicro.com}

VPC/kubernetes.peelmicro.com

CIDR 172.20.0.0/16

EnableDNSHostnames true

EnableDNSSupport true

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

VPCDHCPOptionsAssociation/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

DHCPOptions name:kubernetes.peelmicro.com

Must specify --yes to apply changes

Cluster configuration has been created.

Suggestions:

* list clusters with: kops get cluster

* edit this cluster with: kops edit cluster kubernetes.peelmicro.com

* edit your node instance group: kops edit ig --name=kubernetes.peelmicro.com nodes

* edit your master instance group: kops edit ig --name=kubernetes.peelmicro.com master-eu-central-1a

Finally configure your cluster with: kops update cluster kubernetes.peelmicro.com --yes
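The node-to-master rules in the preview above split the TCP range into three pieces. This small Python sketch works out which ports worker nodes cannot reach on the masters. The helper uncovered_ports is made up for illustration and is not part of kops.

```python
# FromPort/ToPort pairs of the node-to-master-tcp-* rules shown above.
NODE_TO_MASTER_TCP = [(1, 2379), (2382, 4000), (4003, 65535)]


def uncovered_ports(ranges, low=1, high=65535):
    """Return the inclusive (start, end) port ranges that no rule allows."""
    gaps = []
    next_port = low  # first port not yet known to be covered by a rule
    for start, end in sorted(ranges):
        if start > next_port:
            gaps.append((next_port, start - 1))
        next_port = max(next_port, end + 1)
    if next_port <= high:
        gaps.append((next_port, high))
    return gaps


if __name__ == "__main__":
    print(uncovered_ports(NODE_TO_MASTER_TCP))  # [(2380, 2381), (4001, 4002)]
```

As I understand the kops defaults, these gaps are the etcd ports: 2380-2381 for peer traffic and 4001-4002 for the main and events clients. Blocking them keeps worker nodes away from etcd. The output above does not say this explicitly, so treat that mapping as an assumption.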

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops update cluster kubernetes.peelmicro.com --yes --state=s3://kubernetes.peelmicro.com

I0403 04:23:56.983165 27629 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0403 04:23:57.528369 27629 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator-ca"

I0403 04:23:57.632754 27629 vfs_castore.go:735] Issuing new certificate: "ca"

I0403 04:23:57.965406 27629 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0403 04:23:59.952092 27629 vfs_castore.go:735] Issuing new certificate: "kubelet"

I0403 04:24:00.310394 27629 vfs_castore.go:735] Issuing new certificate: "kube-scheduler"

I0403 04:24:00.544302 27629 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator"

I0403 04:24:00.908811 27629 vfs_castore.go:735] Issuing new certificate: "kube-controller-manager"

I0403 04:24:01.691687 27629 vfs_castore.go:735] Issuing new certificate: "apiserver-proxy-client"

I0403 04:24:01.738193 27629 vfs_castore.go:735] Issuing new certificate: "kube-proxy"

I0403 04:24:01.781742 27629 vfs_castore.go:735] Issuing new certificate: "master"

I0403 04:24:01.967105 27629 vfs_castore.go:735] Issuing new certificate: "kubelet-api"

I0403 04:24:02.044968 27629 vfs_castore.go:735] Issuing new certificate: "kubecfg"

I0403 04:24:02.186049 27629 vfs_castore.go:735] Issuing new certificate: "kops"

I0403 04:24:02.441478 27629 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0403 04:24:02.745208 27629 launchconfiguration.go:380] waiting for IAM instance profile "nodes.kubernetes.peelmicro.com" to be ready

I0403 04:24:02.755869 27629 launchconfiguration.go:380] waiting for IAM instance profile "masters.kubernetes.peelmicro.com" to be ready

I0403 04:24:13.189545 27629 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0403 04:24:13.766228 27629 executor.go:103] Tasks: 73 done / 73 total; 0 can run

I0403 04:24:13.766469 27629 dns.go:153] Pre-creating DNS records

I0403 04:24:16.386262 27629 update_cluster.go:290] Exporting kubecfg for cluster

kops has set your kubectl context to kubernetes.peelmicro.com

Cluster is starting. It should be ready in a few minutes.

Suggestions:

* validate cluster: kops validate cluster

* list nodes: kubectl get nodes --show-labels

* ssh to the master: ssh -i ~/.ssh/id_rsa admin@api.kubernetes.peelmicro.com

* the admin user is specific to Debian. If not using Debian please use the appropriate user based on your OS.

* read about installing addons at: https://github.com/kubernetes/kops/blob/master/docs/addons.md.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops validate cluster --state=s3://kubernetes.peelmicro.com

Using cluster from kubectl context: kubernetes.peelmicro.com

Validating cluster kubernetes.peelmicro.com

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-eu-central-1a Master t2.micro 1 1 eu-central-1a

nodes Node t2.micro 2 2 eu-central-1a

NODE STATUS

NAME ROLE READY

VALIDATION ERRORS

KIND NAME MESSAGE

dns apiserver Validation Failed

The dns-controller Kubernetes deployment has not updated the Kubernetes cluster's API DNS entry to the correct IP address. The API DNS IP address is the placeholder address that kops creates: 203.0.113.123. Please wait about 5-10 minutes for a master to start, dns-controller to launch, and DNS to propagate. The protokube container and dns-controller deployment logs may contain more diagnostic information. Etcd and the API DNS entries must be updated for a kops Kubernetes cluster to start.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops validate cluster --state=s3://kubernetes.peelmicro.com

Using cluster from kubectl context: kubernetes.peelmicro.com

Validating cluster kubernetes.peelmicro.com

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-eu-central-1a Master t2.micro 1 1 eu-central-1a

nodes Node t2.micro 2 2 eu-central-1a

NODE STATUS

NAME ROLE READY

ip-172-20-36-108.eu-central-1.compute.internal node True

ip-172-20-43-235.eu-central-1.compute.internal master True

ip-172-20-56-84.eu-central-1.compute.internal node True

Your cluster kubernetes.peelmicro.com is ready

- We need to retrieve the cluster CA key and certificate from the kops state store (the S3 bucket)

root@ubuntu-s-1vcpu-2gb-lon1-01:~# aws s3 sync s3://kubernetes.peelmicro.com/kubernetes.peelmicro.com/pki/private/ca/ ca-key

download: s3://kubernetes.peelmicro.com/kubernetes.peelmicro.com/pki/private/ca/6675519223935684468666002372.key to ca-key/6675519223935684468666002372.key

download: s3://kubernetes.peelmicro.com/kubernetes.peelmicro.com/pki/private/ca/keyset.yaml to ca-key/keyset.yaml

root@ubuntu-s-1vcpu-2gb-lon1-01:~# aws s3 sync s3://kubernetes.peelmicro.com/kubernetes.peelmicro.com/pki/issued/ca/ ca-crt

download: s3://kubernetes.peelmicro.com/kubernetes.peelmicro.com/pki/issued/ca/6675519223935684468666002372.crt to ca-crt/6675519223935684468666002372.crt

download: s3://kubernetes.peelmicro.com/kubernetes.peelmicro.com/pki/issued/ca/keyset.yaml to ca-crt/keyset.yaml

root@ubuntu-s-1vcpu-2gb-lon1-01:~# mv ca-key/*.key ca.key

root@ubuntu-s-1vcpu-2gb-lon1-01:~# mv ca-crt/*.crt ca.crt

- Ensure openssl is installed

root@ubuntu-s-1vcpu-2gb-lon1-01:~# apt install openssl

Reading package lists... Done

Building dependency tree

Reading state information... Done

openssl is already the newest version (1.1.0g-2ubuntu4.3).

0 upgraded, 0 newly installed, 0 to remove and 35 not upgraded.

- Create a new RSA key

root@ubuntu-s-1vcpu-2gb-lon1-01:~# openssl genrsa -out juan.pem 2048

Generating RSA private key, 2048 bit long modulus

.....................................+++

..................................................................+++

e is 65537 (0x010001)

- Create a new certificate signing request (CSR). Kubernetes uses the CN (juan) as the user name and the O (myteam) as the group

root@ubuntu-s-1vcpu-2gb-lon1-01:~# openssl req -new -key juan.pem -out juan-csr.pem -subj "/CN=juan/O=myteam/"

root@ubuntu-s-1vcpu-2gb-lon1-01:~# ls juan*

juan-csr.pem juan.pem

- Sign the request with the cluster CA certificate and key to issue the user's certificate (valid for 10000 days)

root@ubuntu-s-1vcpu-2gb-lon1-01:~# openssl x509 -req -in juan-csr.pem -CA ca.crt -CAkey ca.key -CAcreateserial -out juan.crt -days 10000

Signature ok

subject=CN = juan, O = myteam

Getting CA Private Key

root@ubuntu-s-1vcpu-2gb-lon1-01:~# ls juan*

juan-csr.pem juan.crt juan.pem

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
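The subject passed to openssl req is what the API server later reads from the client certificate. The CN becomes the user name and each O becomes a group. This minimal Python sketch applies that mapping to the -subj string. The function name identity_from_subject is hypothetical, and the parser ignores escaped slashes and any attribute other than CN and O.

```python
def identity_from_subject(subject):
    """Split an OpenSSL -subj string like "/CN=juan/O=myteam/" into
    (user name, groups), the way Kubernetes reads client certificates:
    CN -> user name, every O -> one group."""
    username = None
    groups = []
    for part in subject.split("/"):
        if not part:
            continue  # skip the empty pieces around leading/trailing slashes
        key, _, value = part.partition("=")
        if key == "CN":
            username = value
        elif key == "O":
            groups.append(value)
    if username is None:
        raise ValueError("no CN in subject, so the API server has no user name")
    return username, groups


if __name__ == "__main__":
    print(identity_from_subject("/CN=juan/O=myteam/"))  # ('juan', ['myteam'])
```

A RoleBinding subject of kind Group named myteam would therefore also apply to juan.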

- We need to create a new context by adding entries to the kubeconfig file.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config set-credentials juan --client-certificate=juan.crt --client-key=juan.pem

User "juan" set.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config set-context juan --cluster=kubernetes.peelmicro.com --user juan

Context "juan" created.
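A context is only a named pair of a cluster and a user. The two commands above add a user entry and a context entry to the kubeconfig, and use-context just changes current-context. This toy Python model illustrates that structure on a plain dict. The function names mirror the kubectl subcommands but are hypothetical. The model replaces an existing entry wholesale, which may differ from how kubectl updates entries.

```python
def _upsert(entries, name, key, value):
    """Replace (or append) the entry called `name` in a kubeconfig list."""
    kept = [e for e in entries if e["name"] != name]
    kept.append({"name": name, key: value})
    return kept


def set_credentials(config, name, client_certificate, client_key):
    # Roughly: kubectl config set-credentials NAME --client-certificate=... --client-key=...
    config["users"] = _upsert(config.get("users", []), name, "user",
                              {"client-certificate": client_certificate,
                               "client-key": client_key})


def set_context(config, name, cluster, user):
    # Roughly: kubectl config set-context NAME --cluster=... --user=...
    config["contexts"] = _upsert(config.get("contexts", []), name, "context",
                                 {"cluster": cluster, "user": user})


def use_context(config, name):
    # Roughly: kubectl config use-context NAME
    if not any(c["name"] == name for c in config.get("contexts", [])):
        raise KeyError(f"no context named {name!r}")
    config["current-context"] = name


def current_user(config):
    """The user (AUTHINFO) that requests are authenticated as right now."""
    name = config["current-context"]
    context = next(c["context"] for c in config["contexts"] if c["name"] == name)
    return context["user"]


if __name__ == "__main__":
    cfg = {
        "current-context": "kubernetes.peelmicro.com",
        "contexts": [{"name": "kubernetes.peelmicro.com",
                      "context": {"cluster": "kubernetes.peelmicro.com",
                                  "user": "kubernetes.peelmicro.com"}}],
        "users": [],
    }
    set_credentials(cfg, "juan", "/root/juan.crt", "/root/juan.pem")
    set_context(cfg, "juan", "kubernetes.peelmicro.com", "juan")
    print(current_user(cfg))  # kubernetes.peelmicro.com (the admin entry)
    use_context(cfg, "juan")
    print(current_user(cfg))  # juan
```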

- We can see the config file

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://api.kubernetes.peelmicro.com
  name: kubernetes.peelmicro.com
contexts:
- context:
    cluster: kubernetes.peelmicro.com
    user: juan
  name: juan
- context:
    cluster: kubernetes.peelmicro.com
    user: kubernetes.peelmicro.com
  name: kubernetes.peelmicro.com
current-context: kubernetes.peelmicro.com
kind: Config
preferences: {}
users:
- name: juan
  user:
    client-certificate: /root/juan.crt
    client-key: /root/juan.pem
- name: kubernetes.peelmicro.com
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
    password: EORkXBPe2xemfZljk9y01buyVTeLv1vc
    username: admin
- name: kubernetes.peelmicro.com-basic-auth
  user:
    password: EORkXBPe2xemfZljk9y01buyVTeLv1vc
    username: admin

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

- We can see the contexts available

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

juan kubernetes.peelmicro.com juan

* kubernetes.peelmicro.com kubernetes.peelmicro.com kubernetes.peelmicro.com

- We are still using the admin user, as we can see when executing the following command

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-20-36-108.eu-central-1.compute.internal Ready node 19m v1.10.13

ip-172-20-43-235.eu-central-1.compute.internal Ready master 20m v1.10.13

ip-172-20-56-84.eu-central-1.compute.internal Ready node 19m v1.10.13

- We can switch to the juan context, and therefore to the user juan, by executing the following command

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config use-context juan

Switched to context "juan".

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* juan kubernetes.peelmicro.com juan

kubernetes.peelmicro.com kubernetes.peelmicro.com kubernetes.peelmicro.com

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get nodes

Error from server (Forbidden): nodes is forbidden: User "juan" cannot list nodes at the cluster scope

- If we try to list the nodes we are not allowed, because the user juan doesn't have any permissions yet: the user is authenticated but not yet authorized.


- We need to switch back to a context whose user can grant permissions to the user juan

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config use-context kubernetes.peelmicro.com

Switched to context "kubernetes.peelmicro.com".

- We are going to use the users/admin-user.yaml document to bind the cluster-admin ClusterRole to the user juan

users/admin-user.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: User
  name: "juan"
  apiGroup: rbac.authorization.k8s.io

- We need to execute it:

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl create -f admin-user.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

- If we switch to the juan context again, we can see that the user now has permission to list the nodes.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config use-context juan

Switched to context "juan".

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* juan kubernetes.peelmicro.com juan

kubernetes.peelmicro.com kubernetes.peelmicro.com kubernetes.peelmicro.com

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-20-36-108.eu-central-1.compute.internal Ready node 32m v1.10.13

ip-172-20-43-235.eu-central-1.compute.internal Ready master 34m v1.10.13

ip-172-20-56-84.eu-central-1.compute.internal Ready node 32m v1.10.13

- We are going to delete the ClusterRoleBinding we created.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl config use-context kubernetes.peelmicro.com

Switched to context "kubernetes.peelmicro.com".

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl delete -f admin-user.yaml

clusterrolebinding.rbac.authorization.k8s.io "admin-user" deleted

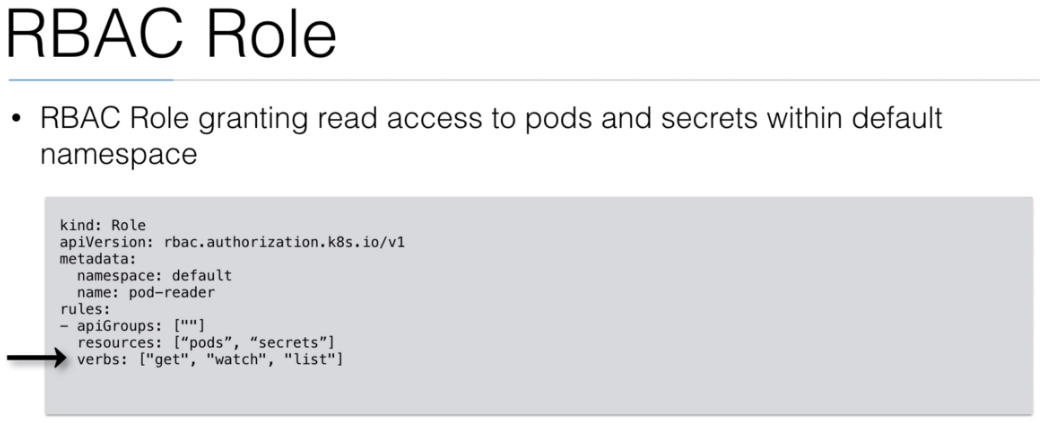

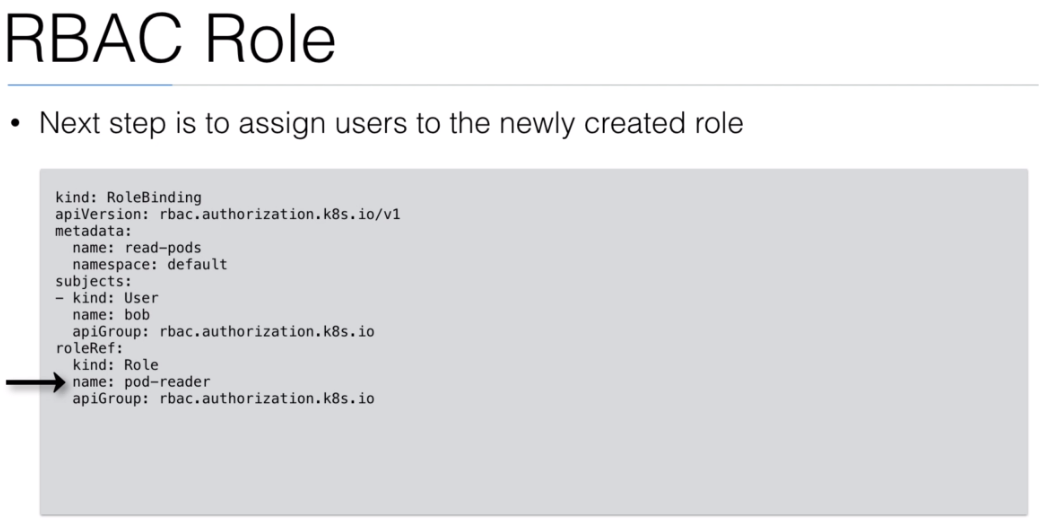

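- We are going to use the users/user.yaml document to create the pod-reader Role and bind it to the user juan in the default namespace. Despite its name, the role allows both reading and modifying pods and deployments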

users/user.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "watch", "list", "create", "update", "patch", "delete"]
- apiGroups: ["extensions", "apps"]
  resources: ["deployments"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: juan
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl create -f user.yaml

role.rbac.authorization.k8s.io/pod-reader created

rolebinding.rbac.authorization.k8s.io/read-pods created

- If we switch to the juan context, we can check what this user is allowed to do now.

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl config use-context juan

Switched to context "juan".

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl get nodes

Error from server (Forbidden): nodes is forbidden: User "juan" cannot list nodes at the cluster scope

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl get pod

No resources found.

- The user can only manage pods and deployments in the default namespace

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/users# kubectl get pod -n kube-system

Error from server (Forbidden): pods is forbidden: User "juan" cannot list pods in the namespace "kube-system"
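The three results above follow from how RBAC evaluates a request. A RoleBinding only grants the rules of its Role inside the binding's own namespace. A cluster-scoped resource such as nodes is never covered by a RoleBinding. Anything not explicitly allowed is denied. This simplified Python model makes that concrete using the pod-reader rules from users/user.yaml. It is a sketch for illustration only. It ignores ClusterRoles, ClusterRoleBindings, groups, resourceNames and subresources, and the names Rule and is_allowed are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    api_groups: FrozenSet[str]
    resources: FrozenSet[str]
    verbs: FrozenSet[str]

    def matches(self, api_group: str, resource: str, verb: str) -> bool:
        # "*" is RBAC's wildcard; every field must match for the rule to apply.
        return all(
            "*" in allowed or value in allowed
            for allowed, value in (
                (self.api_groups, api_group),
                (self.resources, resource),
                (self.verbs, verb),
            )
        )


ALL_VERBS = frozenset({"get", "watch", "list", "create", "update", "patch", "delete"})

# The two rules of the pod-reader Role in users/user.yaml.
POD_READER: List[Rule] = [
    Rule(frozenset({""}), frozenset({"pods"}), ALL_VERBS),
    Rule(frozenset({"extensions", "apps"}), frozenset({"deployments"}), ALL_VERBS),
]

# The read-pods RoleBinding as (namespace, user, rules of the bound Role).
ROLE_BINDINGS: List[Tuple[str, str, List[Rule]]] = [("default", "juan", POD_READER)]


def is_allowed(user: str, verb: str, api_group: str, resource: str,
               namespace: Optional[str]) -> bool:
    """namespace=None stands for a cluster-scoped request, e.g. listing nodes."""
    for bound_namespace, subject, rules in ROLE_BINDINGS:
        if subject != user or namespace != bound_namespace:
            continue  # a RoleBinding only grants access inside its own namespace
        if any(rule.matches(api_group, resource, verb) for rule in rules):
            return True
    return False  # RBAC only adds permissions: nothing matched means Forbidden


if __name__ == "__main__":
    print(is_allowed("juan", "list", "", "pods", "default"))      # True
    print(is_allowed("juan", "list", "", "pods", "kube-system"))  # False
    print(is_allowed("juan", "list", "", "nodes", None))          # False
```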

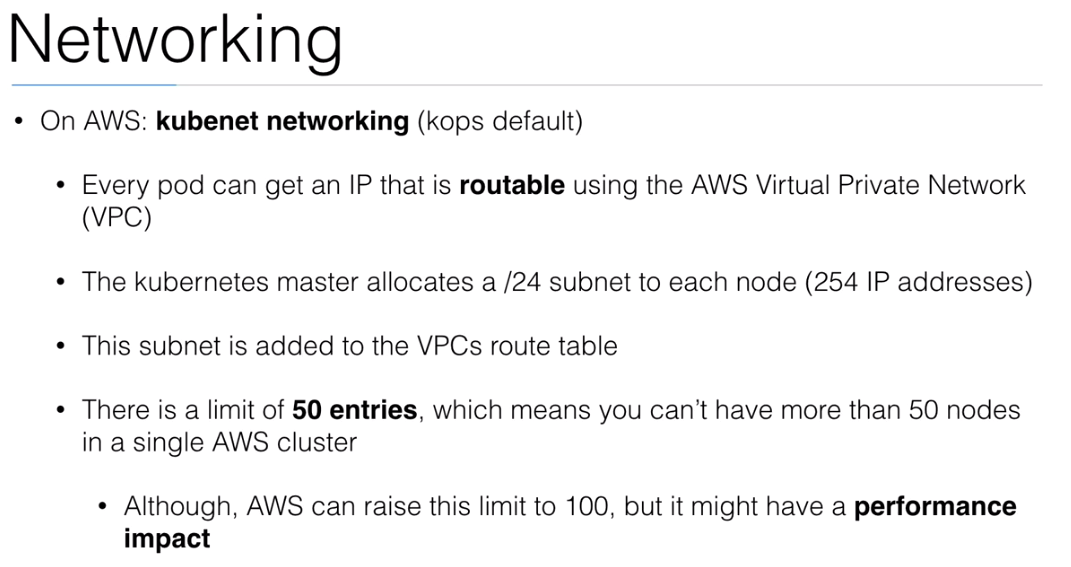

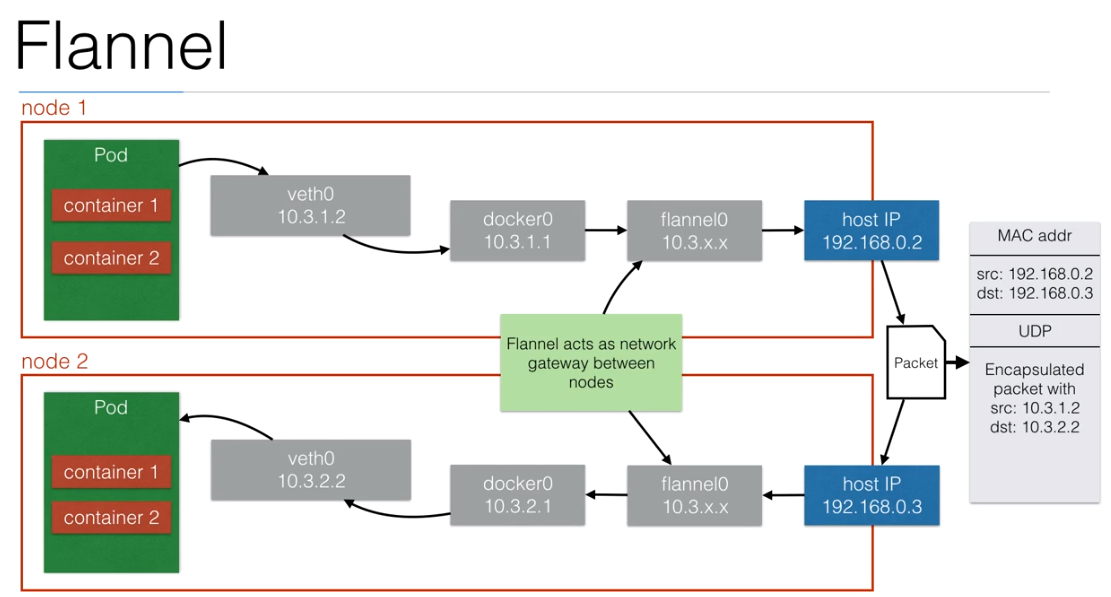

88. Networking

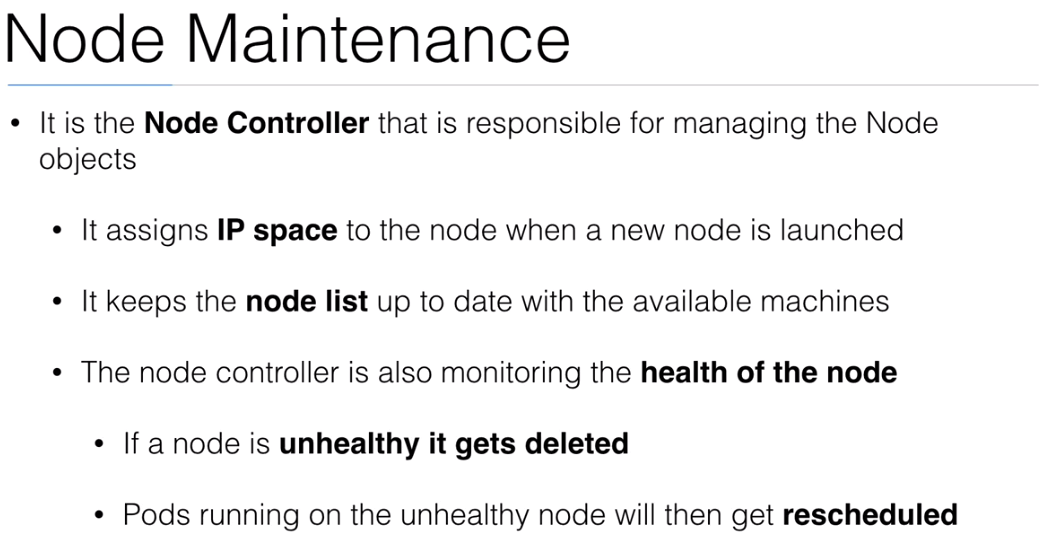

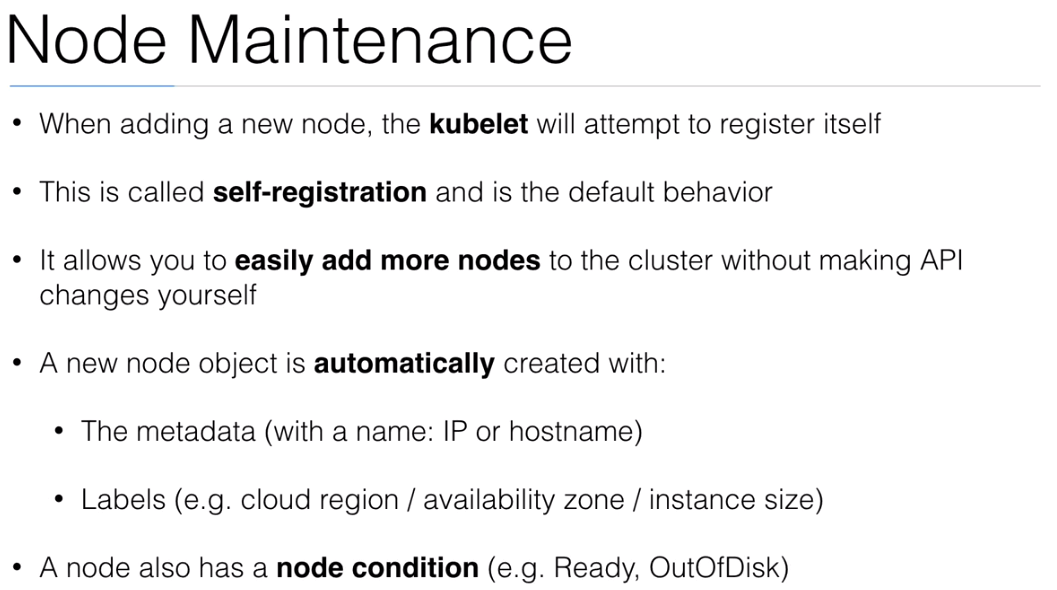

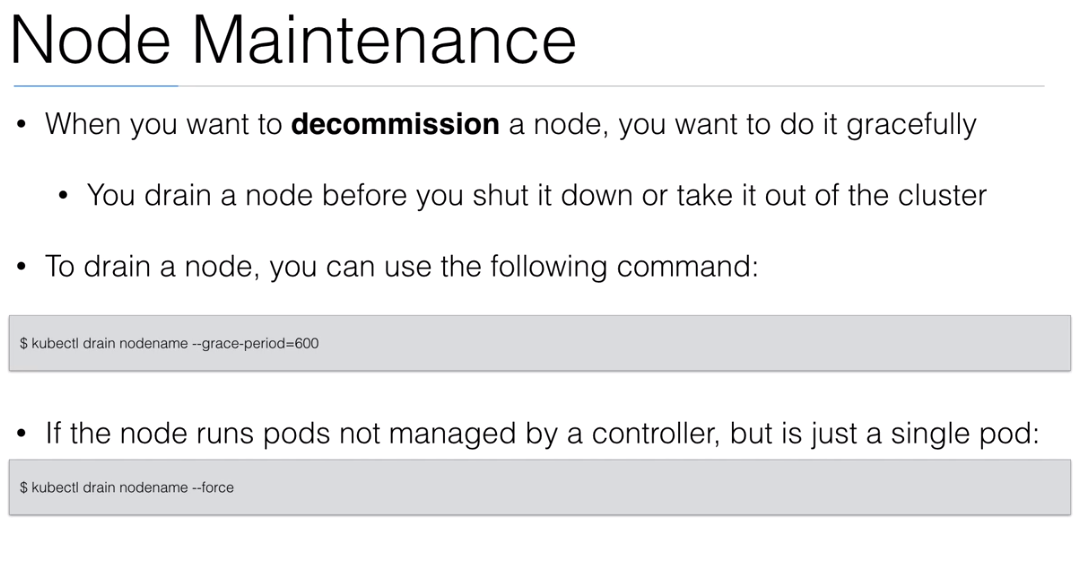

89. Node Maintenance

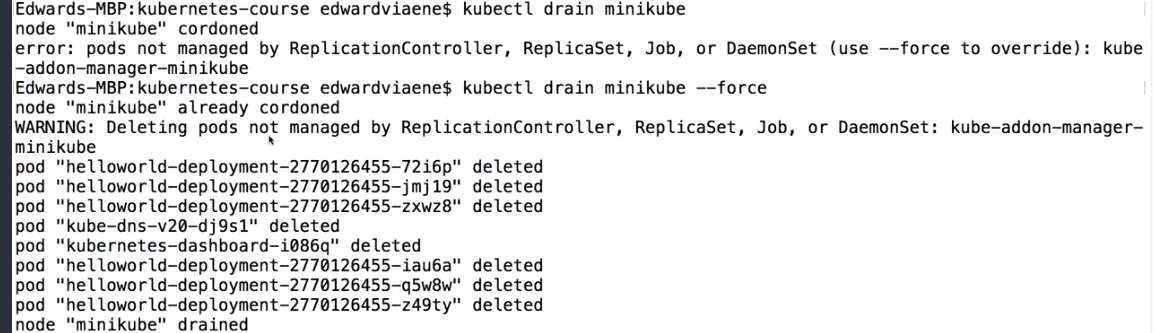

90. Demo: Node Maintenance

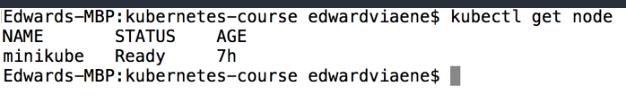

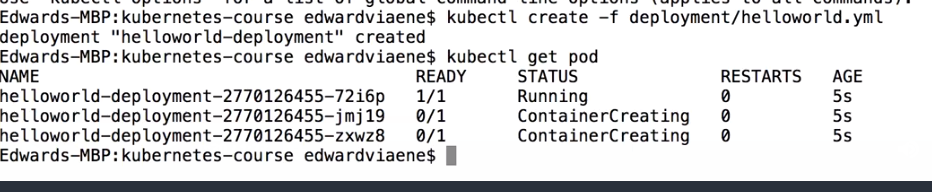

- We have just one node

- We are going to create a deployment

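- We are going to drain the node by using kubectl drain nameofnode

- Draining first cordons the node (marks it as unschedulable) and then evicts its pods. Because there is only one node, the replacement pods created for the deployment cannot be scheduled anywhere and stay Pending until the node is made schedulable again with kubectl uncordon nameofnode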

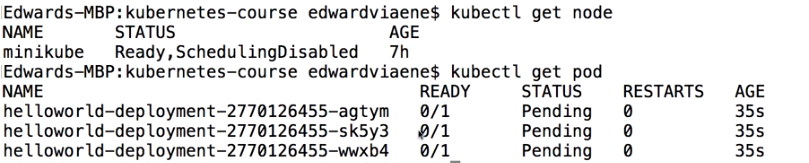

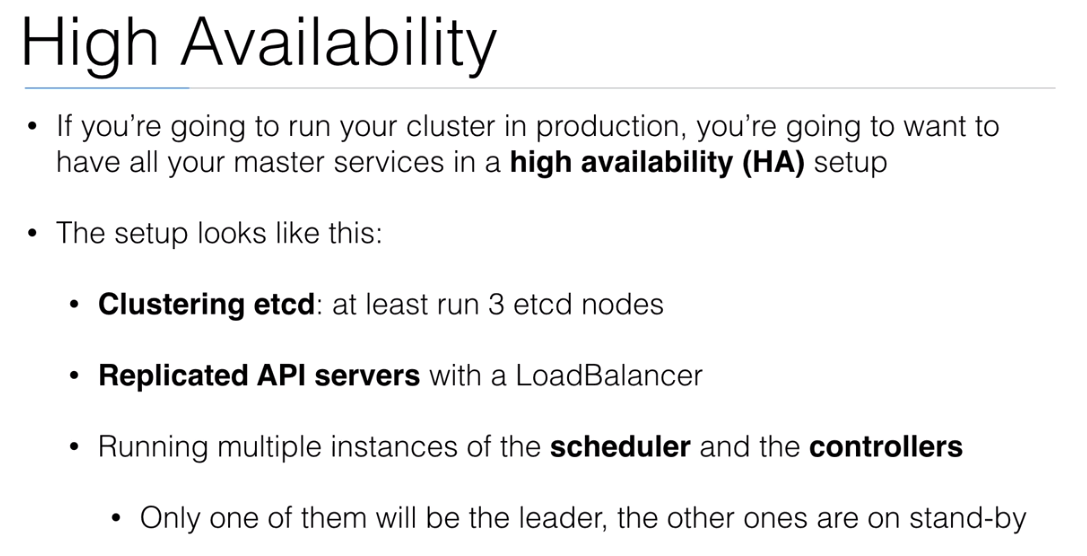

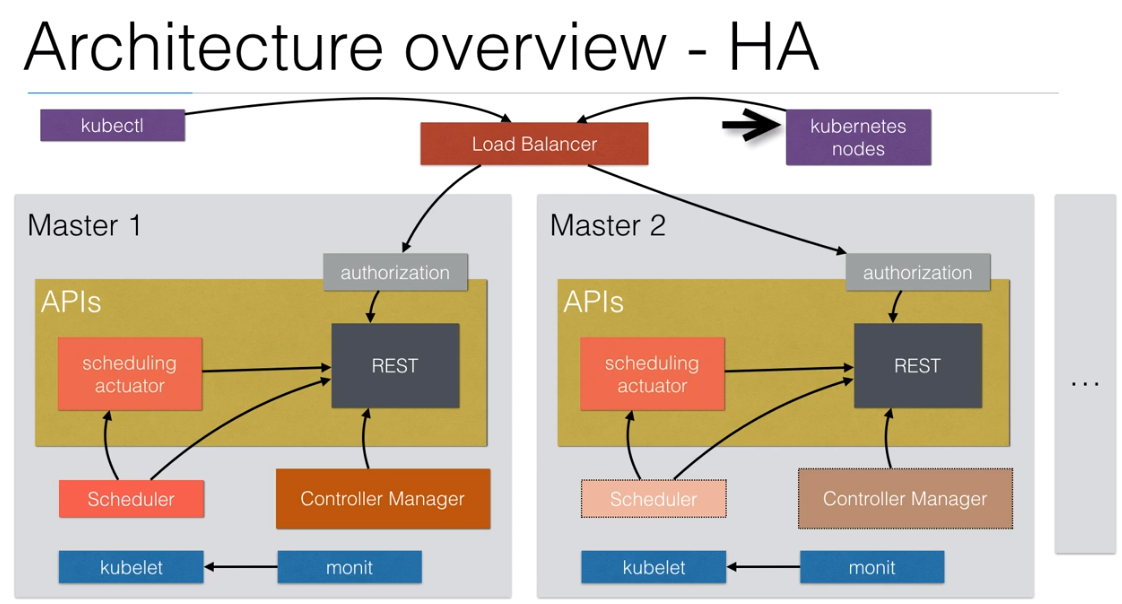

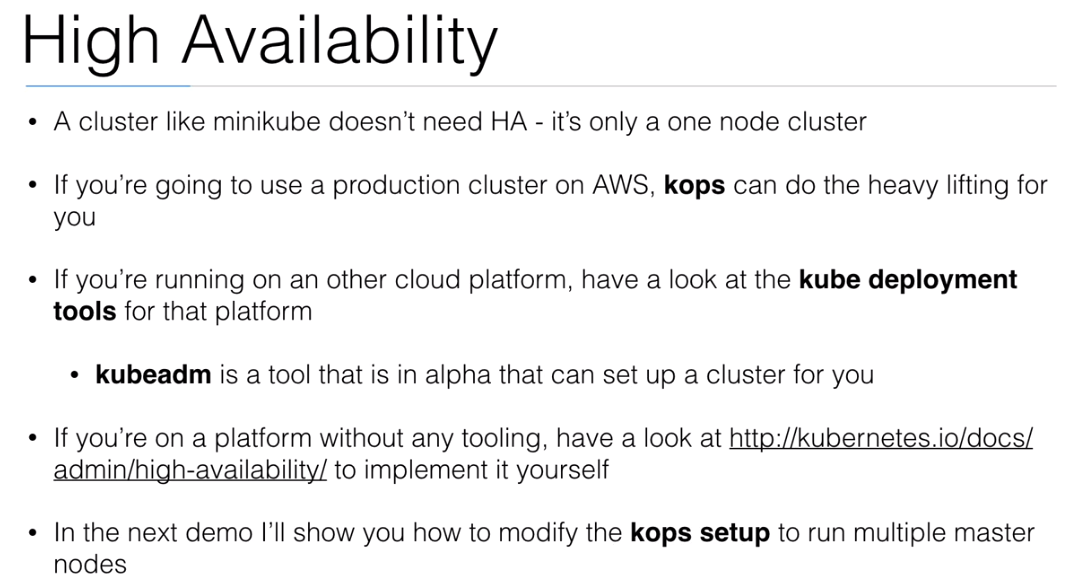
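This toy Python model of a drain shows why the deployment's pods end up Pending on a single-node cluster. It is not kubectl's implementation. It assumes the behaviour of --ignore-daemonsets, which leaves DaemonSet pods alone, and of --force, which deletes bare pods for good. Real kubectl refuses to proceed in those cases unless the flags are given. Replacements keep the old pod name for readability and go to the first schedulable node by name, whereas the real scheduler generates new names and weighs resources and constraints. The node name minikube is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Pod:
    name: str
    owner_kind: str       # "ReplicaSet" (Deployment pods), "DaemonSet", or "" for a bare pod
    node: Optional[str]   # None means Pending: no node to run on


def drain(node: str, schedulable: Dict[str, bool], pods: List[Pod]) -> List[Pod]:
    """Toy drain: cordon the node, then evict the pods running on it."""
    schedulable[node] = False  # cordon: no new pods land here
    targets = sorted(n for n, ok in schedulable.items() if ok)
    result = []
    for pod in pods:
        if pod.node != node or pod.owner_kind == "DaemonSet":
            result.append(pod)  # untouched; DaemonSet pods are skipped
        elif pod.owner_kind:
            # The owning controller creates a replacement; without another
            # schedulable node it stays Pending.
            result.append(Pod(pod.name, pod.owner_kind, targets[0] if targets else None))
        # Bare pods (no owner) are simply gone.
    return result


def uncordon(node: str, schedulable: Dict[str, bool], pods: List[Pod]) -> List[Pod]:
    """Make the node schedulable again; Pending pods are placed on it."""
    schedulable[node] = True
    return [Pod(p.name, p.owner_kind, p.node or node) for p in pods]


if __name__ == "__main__":
    nodes = {"minikube": True}  # hypothetical single-node cluster
    pods = [Pod("helloworld-1", "ReplicaSet", "minikube"),
            Pod("helloworld-2", "ReplicaSet", "minikube")]
    pods = drain("minikube", nodes, pods)
    print([p.node for p in pods])  # [None, None]
    pods = uncordon("minikube", nodes, pods)
    print([p.node for p in pods])  # ['minikube', 'minikube']
```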

91. High Availability

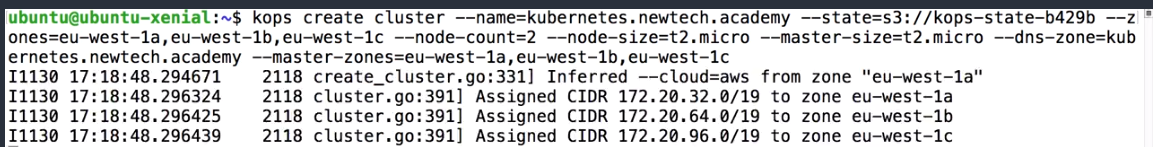

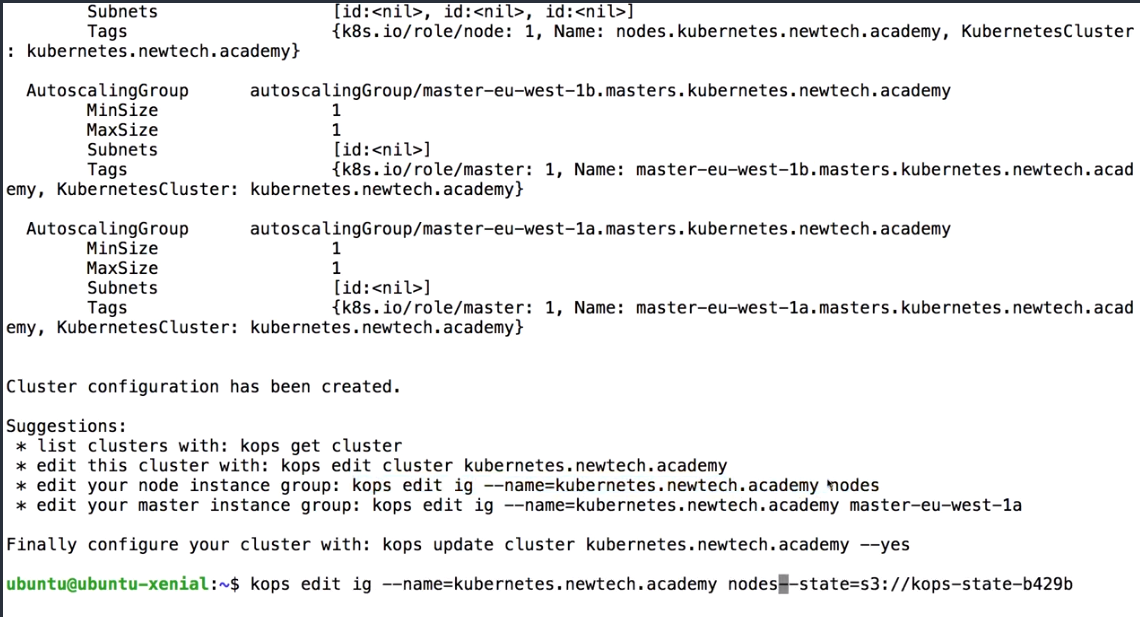

92. Demo: High Availability

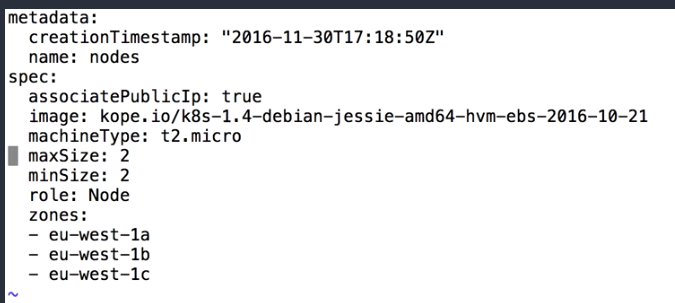

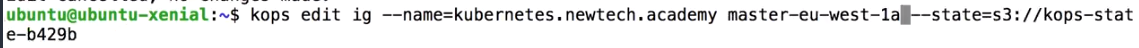

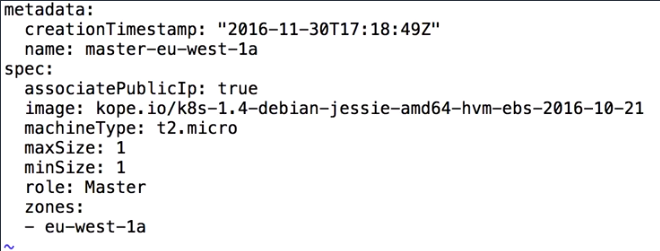

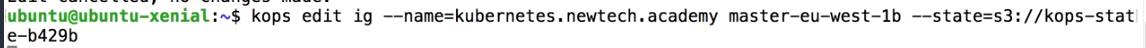

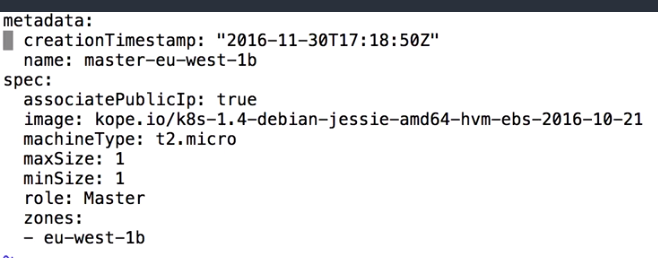

- We can use kops to create masters in different availability zones (for example with the --master-zones option of kops create cluster):

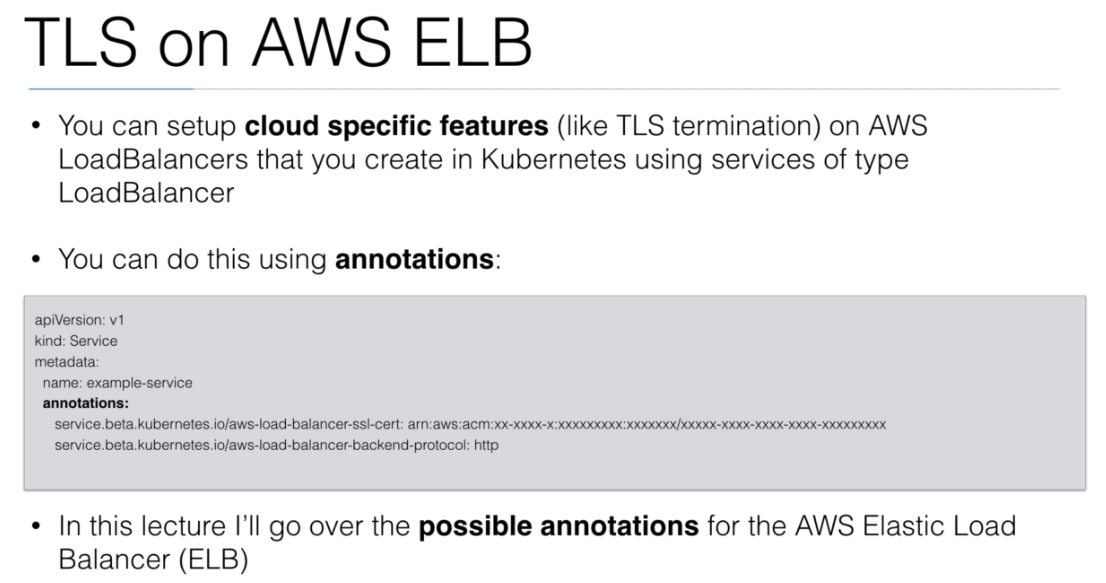

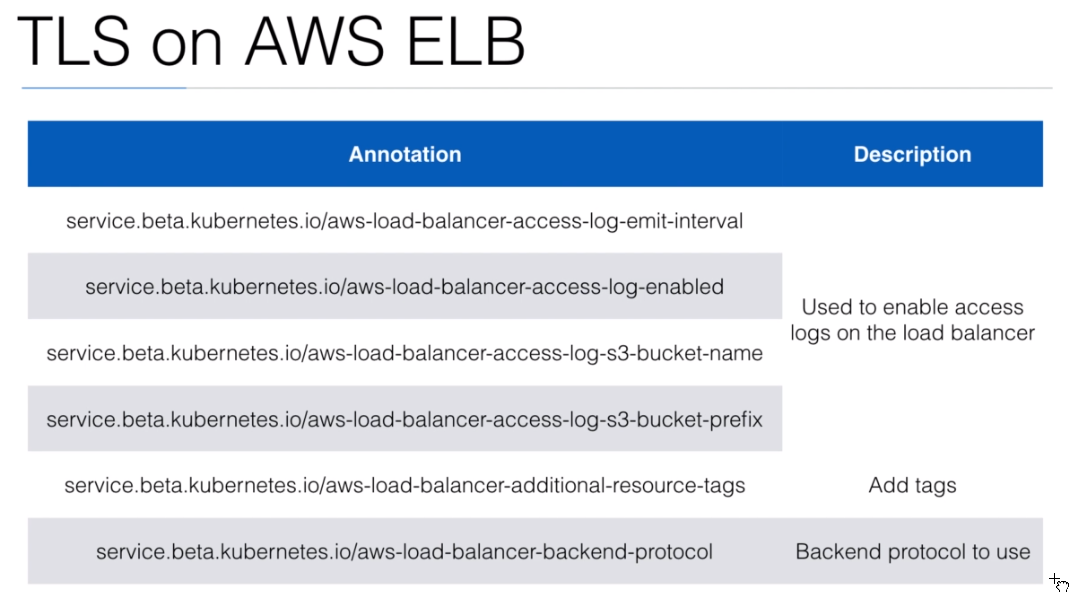

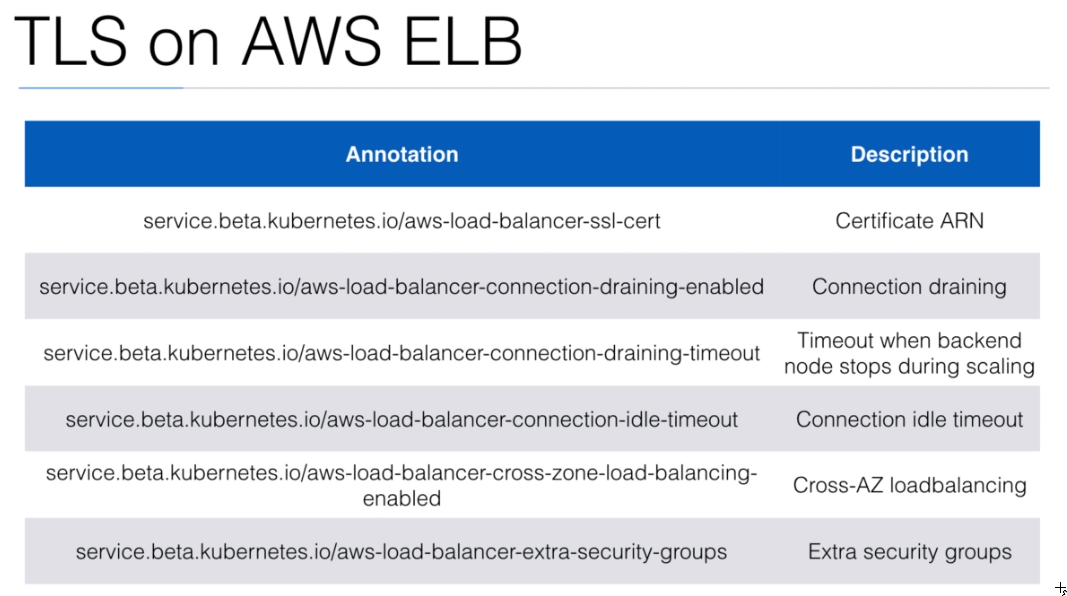

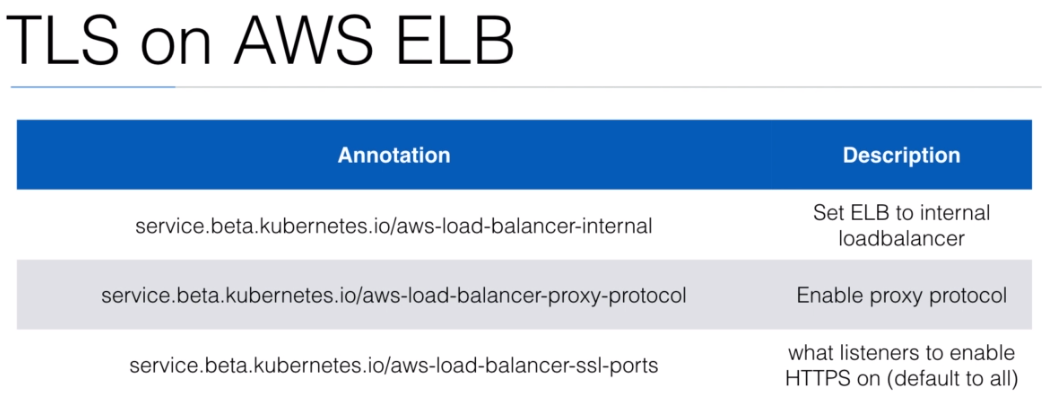
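In a kops cluster each master runs an etcd member, and etcd (which uses Raft) only accepts writes while a strict majority of members is reachable. This short Python sketch computes that majority and how many member failures a given number of masters survives. It explains why HA setups usually use three masters spread over three zones.

```python
def quorum(members: int) -> int:
    """Votes an etcd (Raft) cluster needs to commit writes: a strict majority."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} masters: quorum {quorum(n)}, survives {tolerated_failures(n)} failure(s)")
```

Three masters tolerate one failure, so losing a whole zone still leaves two of three members. A fourth master raises the quorum to three without tolerating any additional failure, which is why odd counts are preferred.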

93. TLS on ELB using Annotations

94. Demo: TLS on ELB

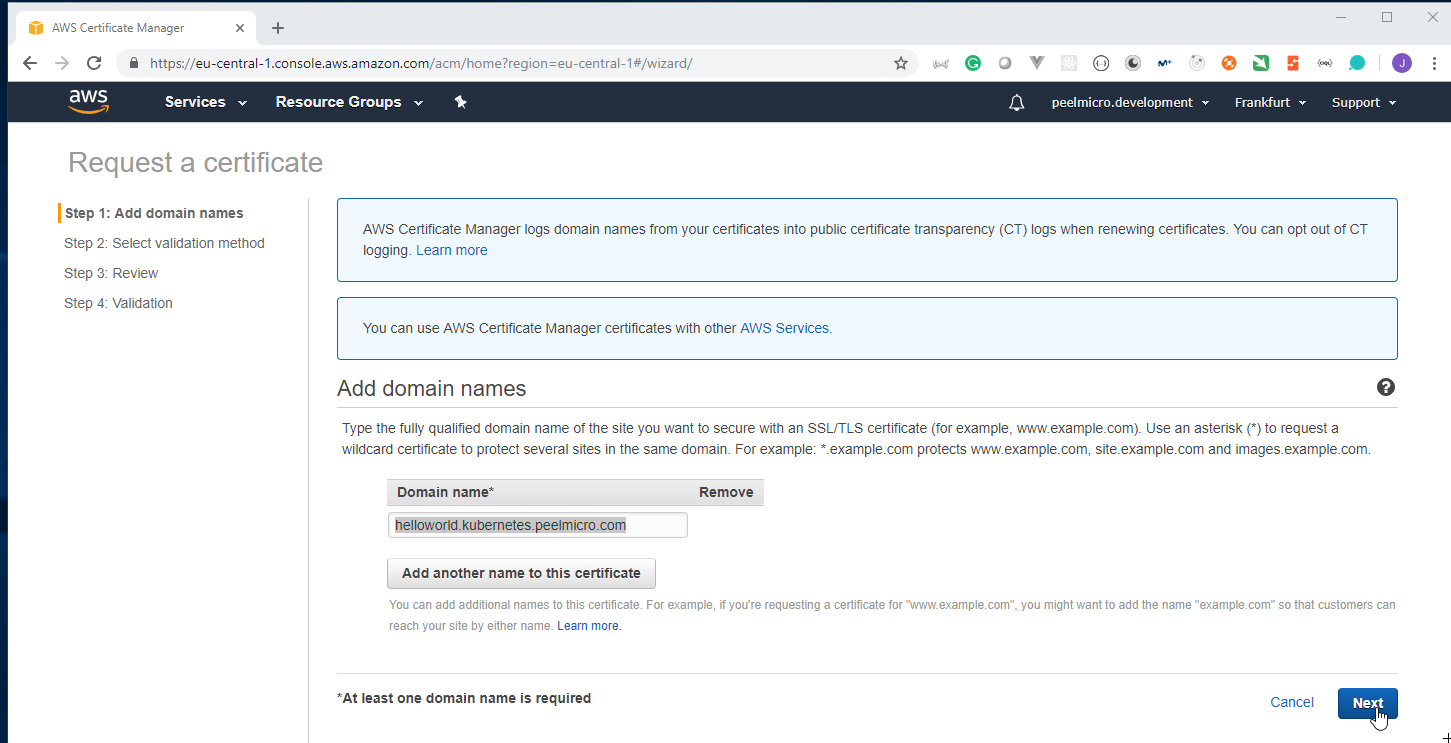

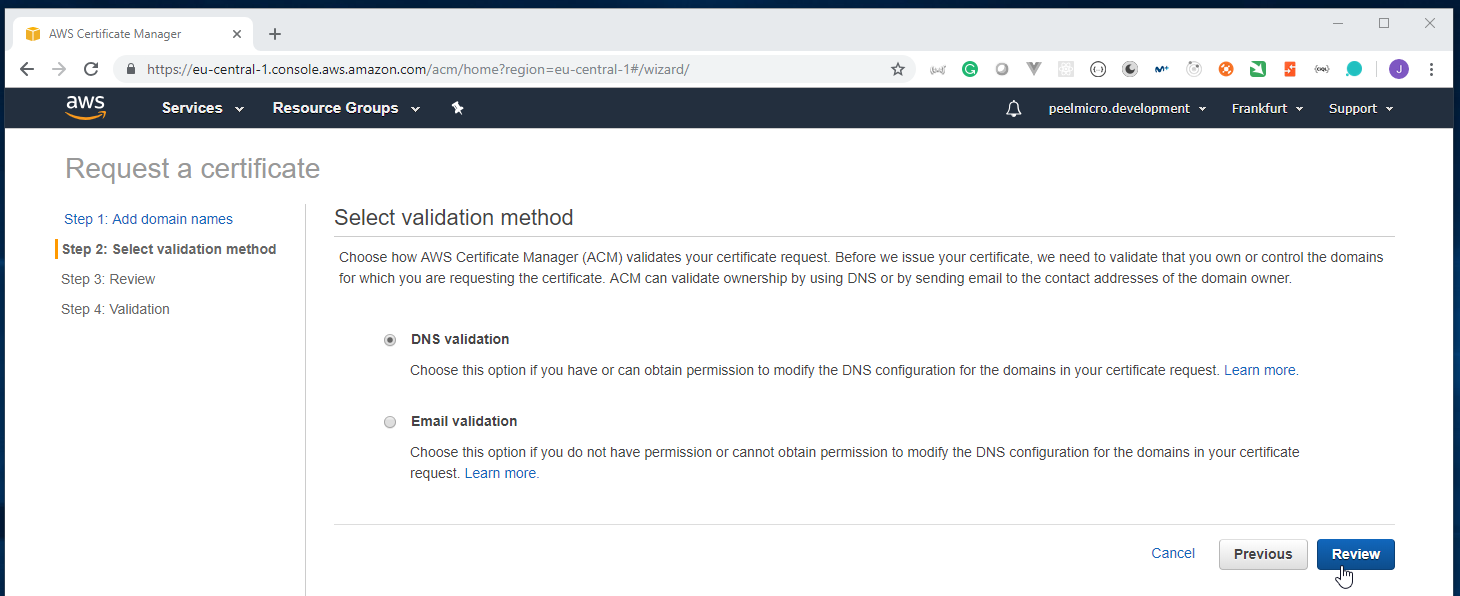

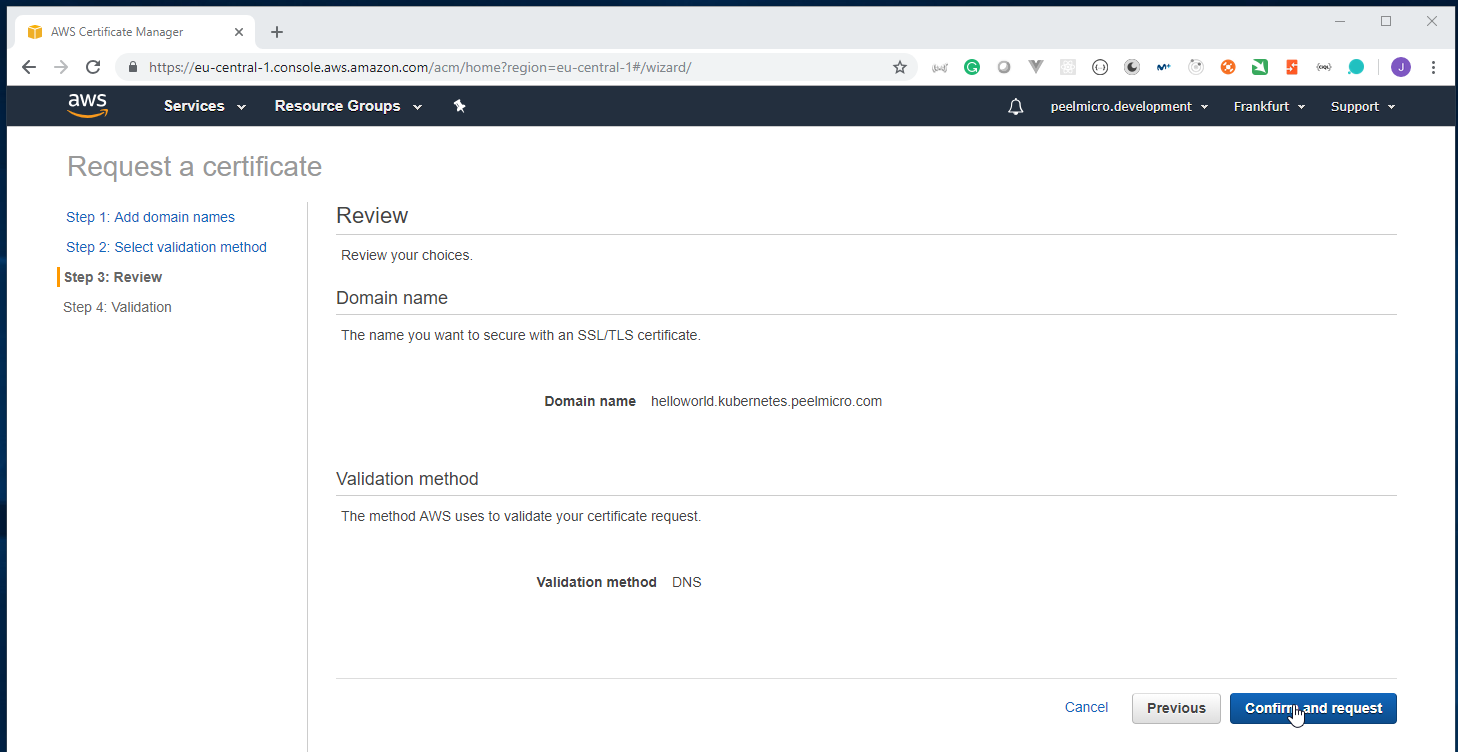

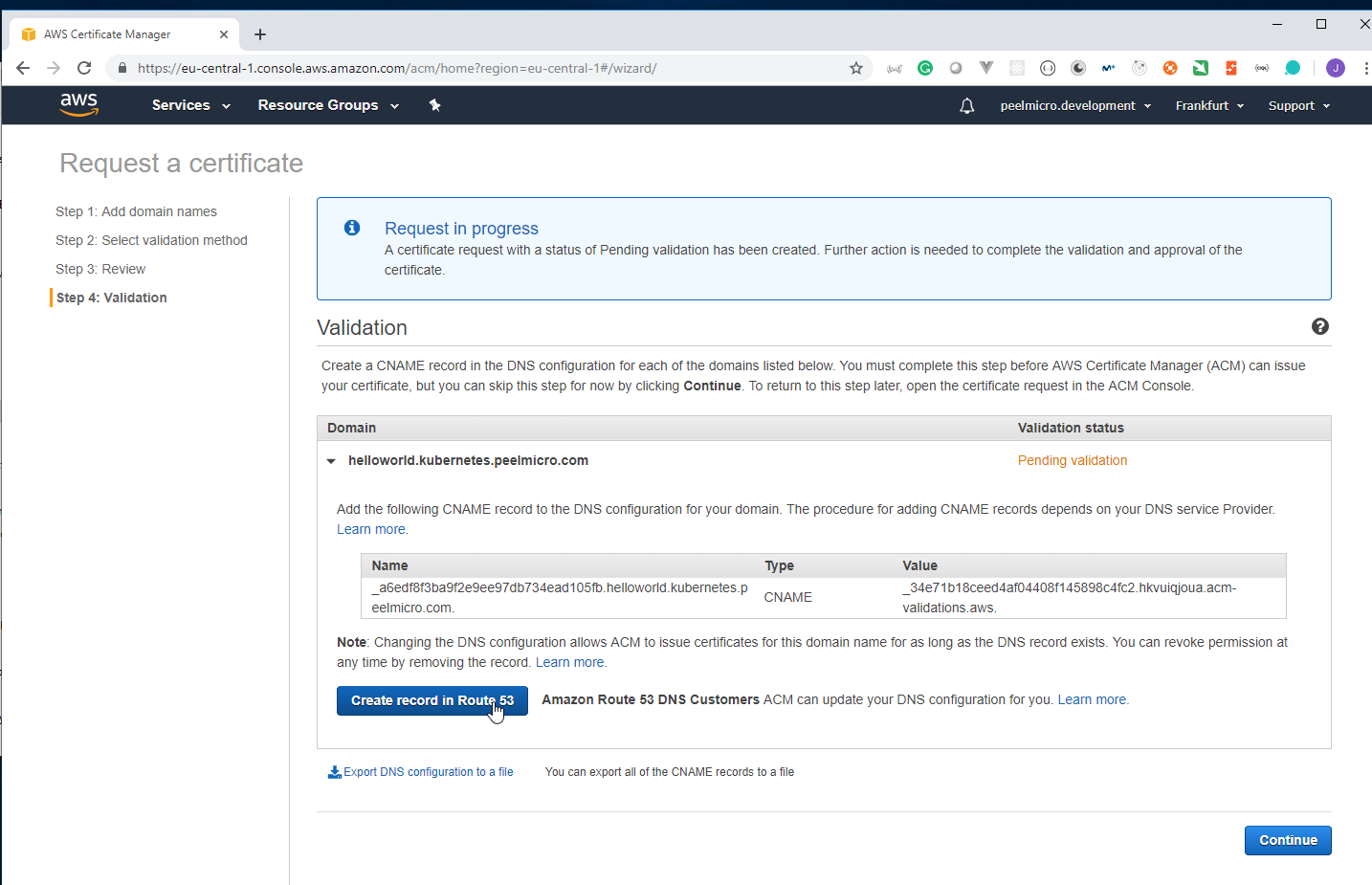

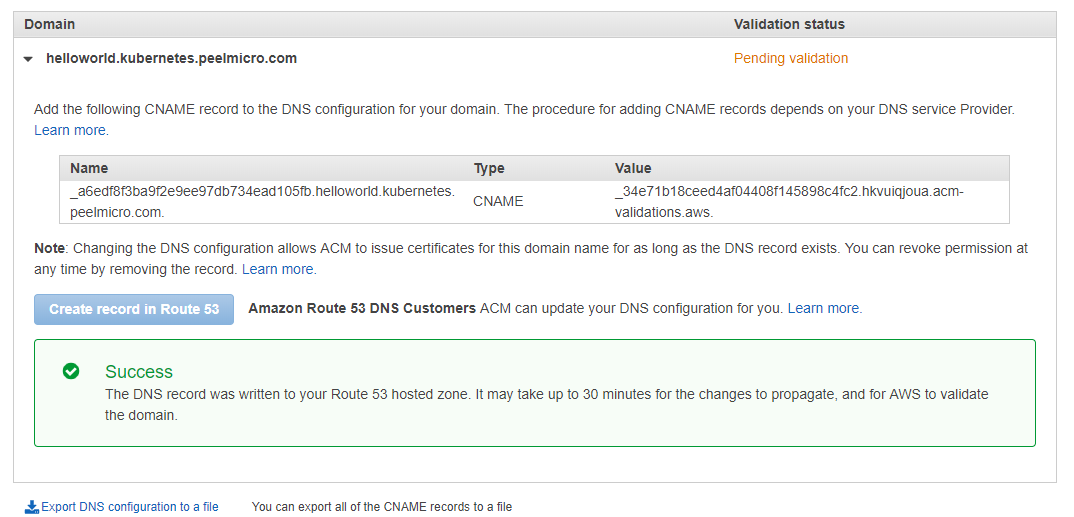

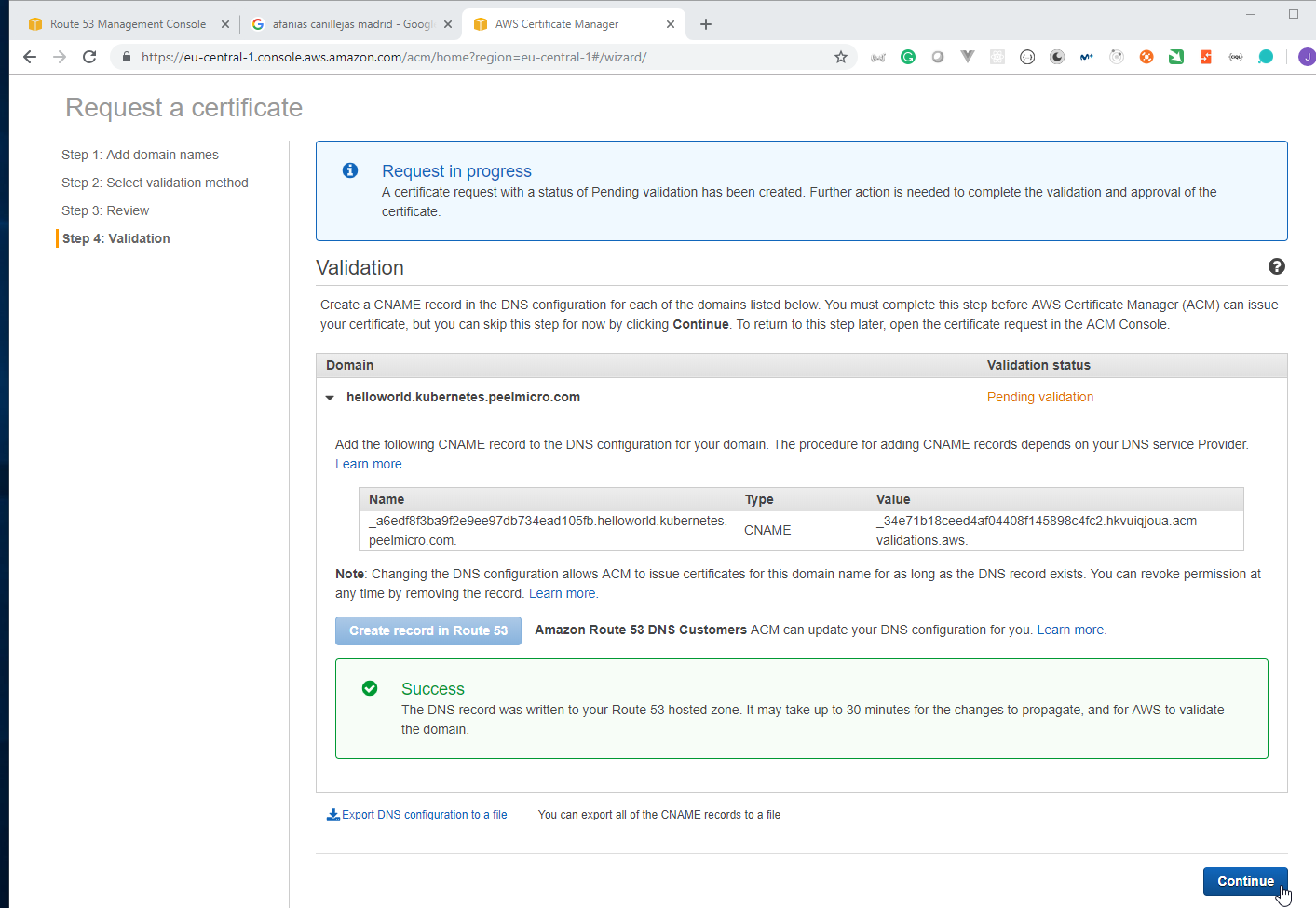

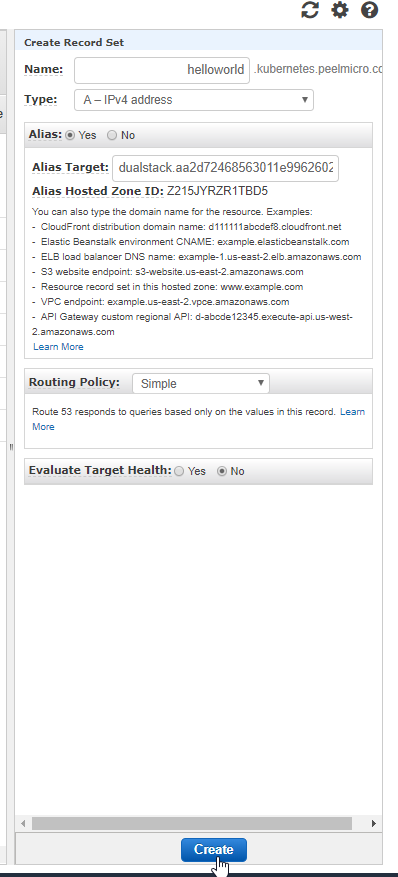

- We need to create an SSL certificate. Browse to the AWS Management Console and search for certificate

- We are going to request a certificate for helloworld.kubernetes.peelmicro.com

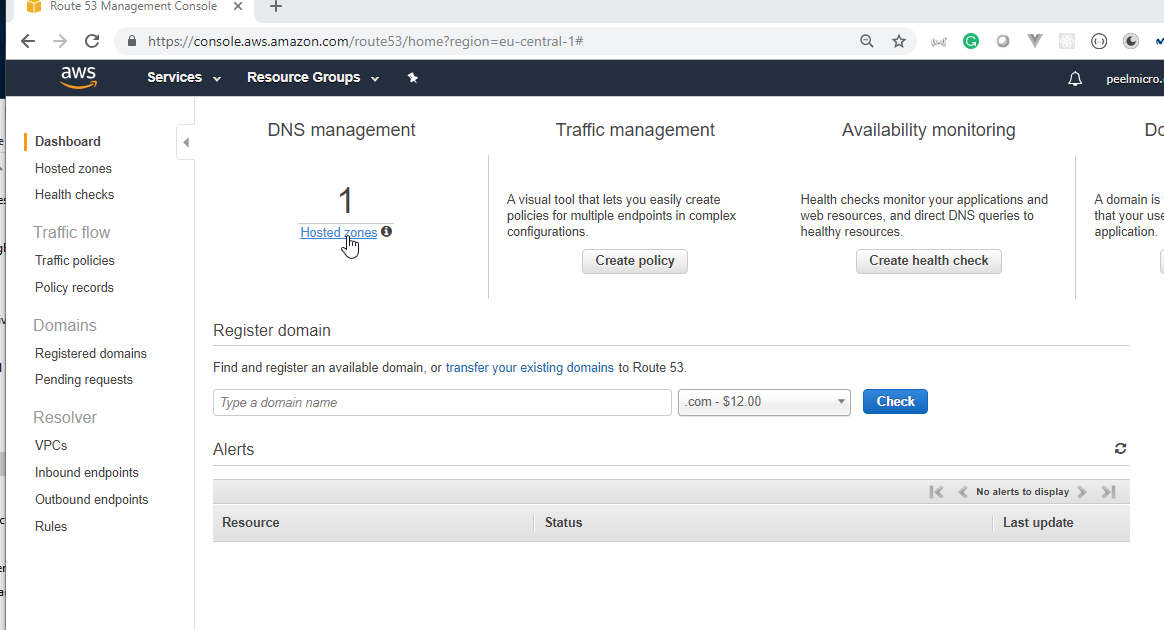

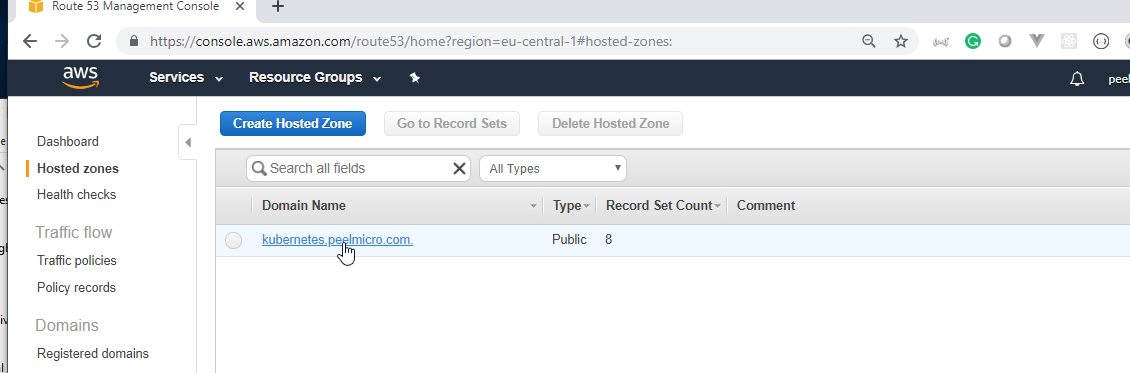

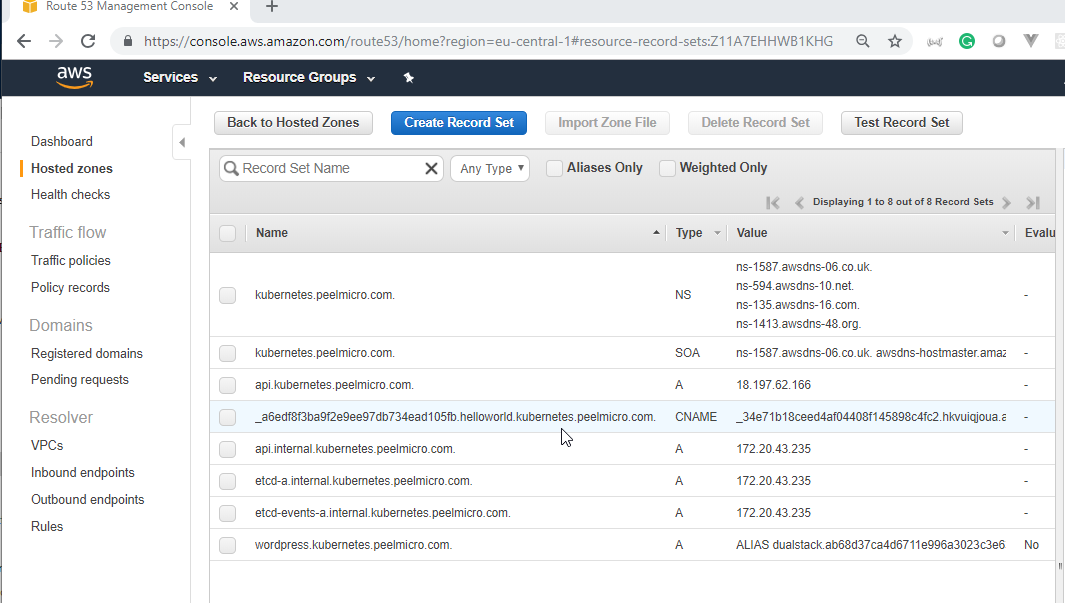

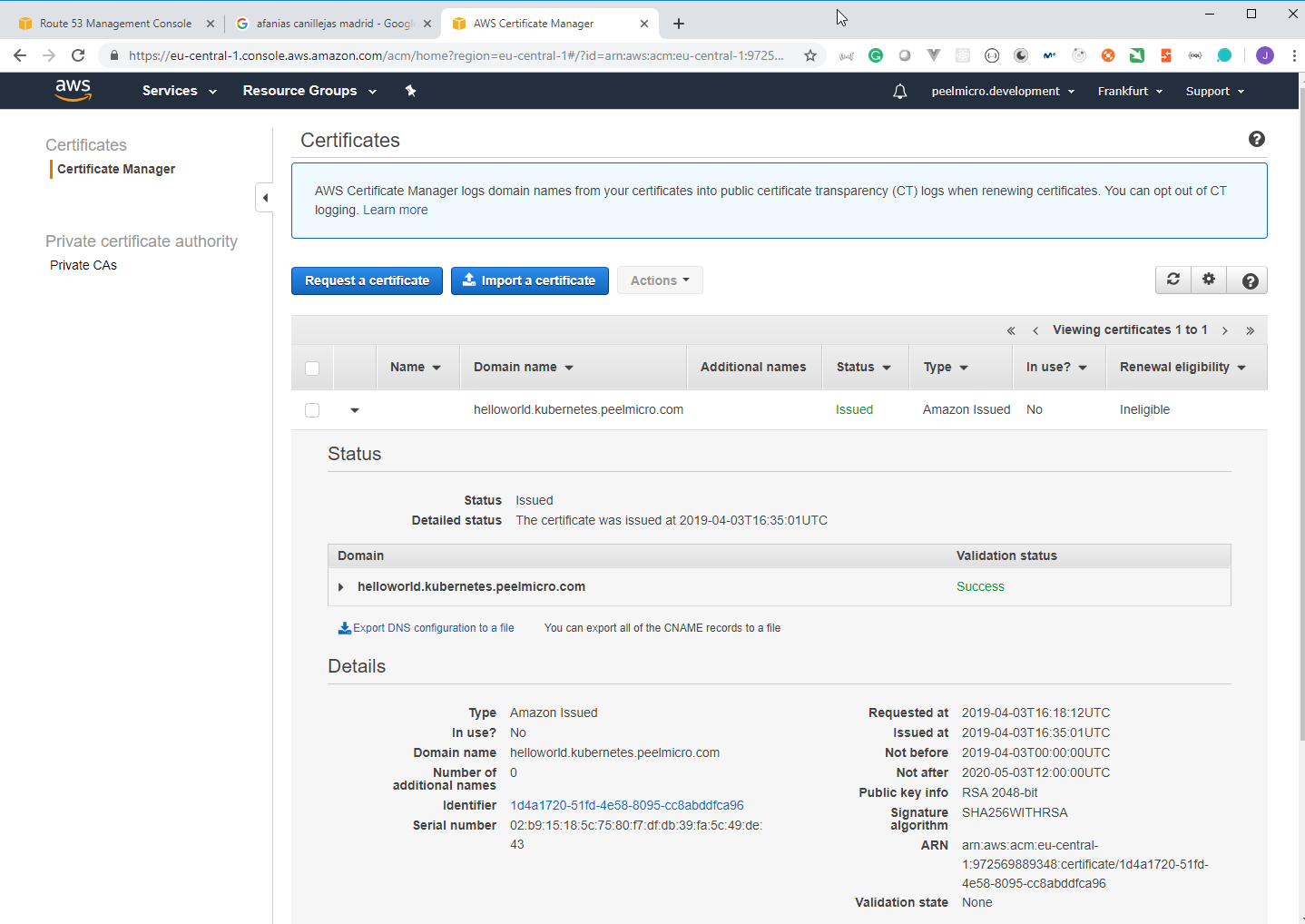

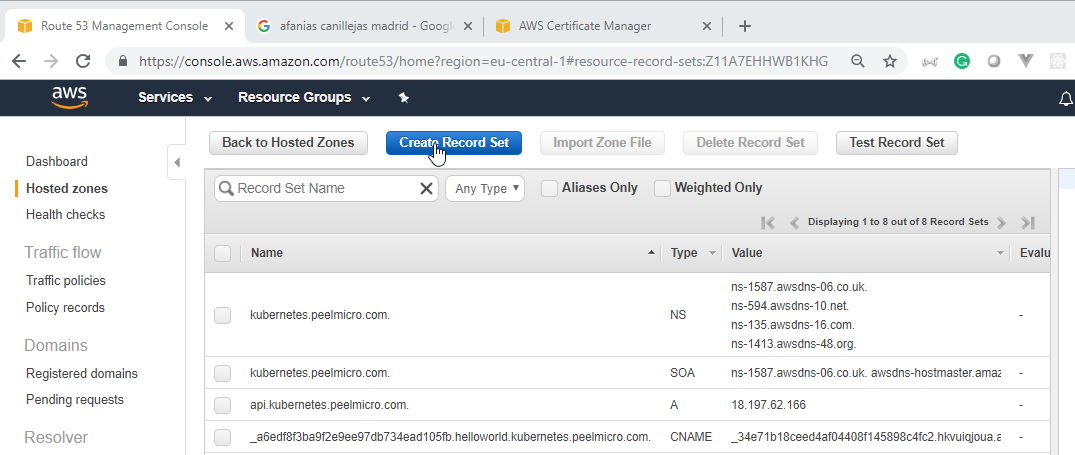

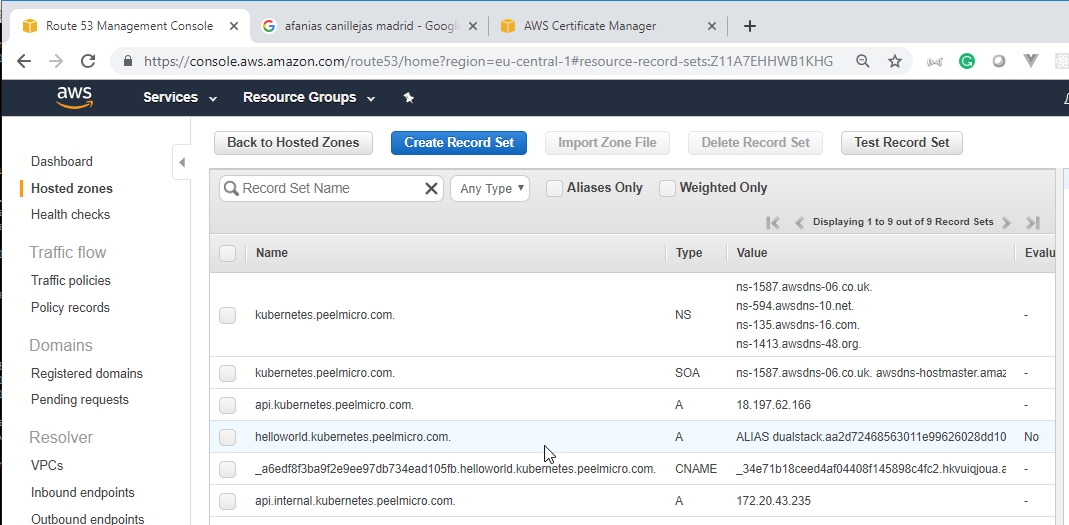

- We can go to Route 53 to ensure the DNS record has been created.

- We need to copy the certificate ARN value.

- We are going to use the elb-tls/helloworld.yml document to create a new deployment.

elb-tls/helloworld.yml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: helloworld-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: helloworld
    spec:
      containers:
      - name: k8s-demo
        image: wardviaene/k8s-demo
        ports:
        - name: nodejs-port
          containerPort: 3000

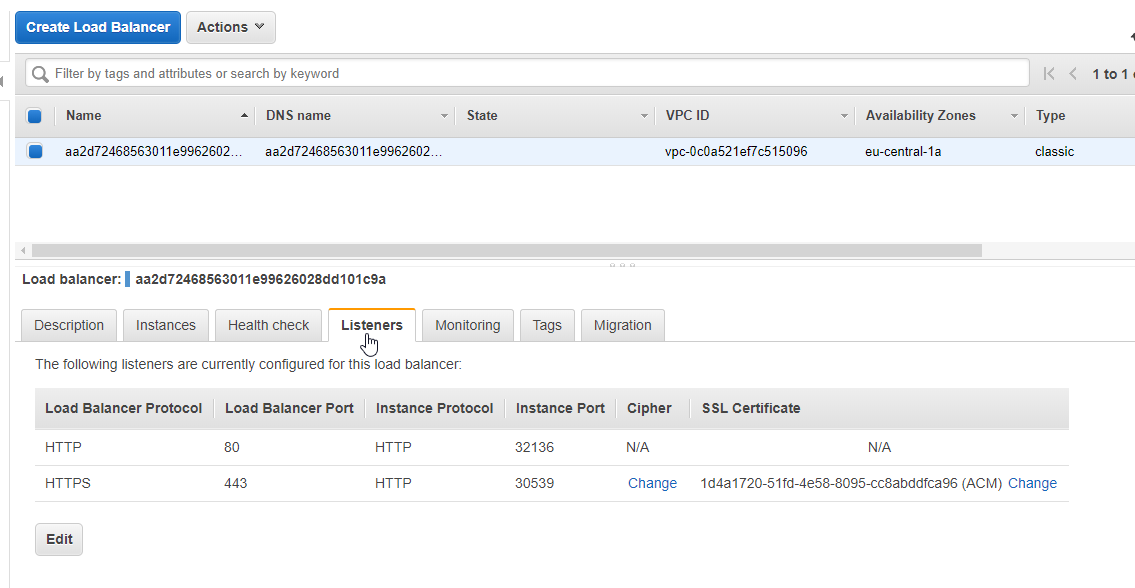

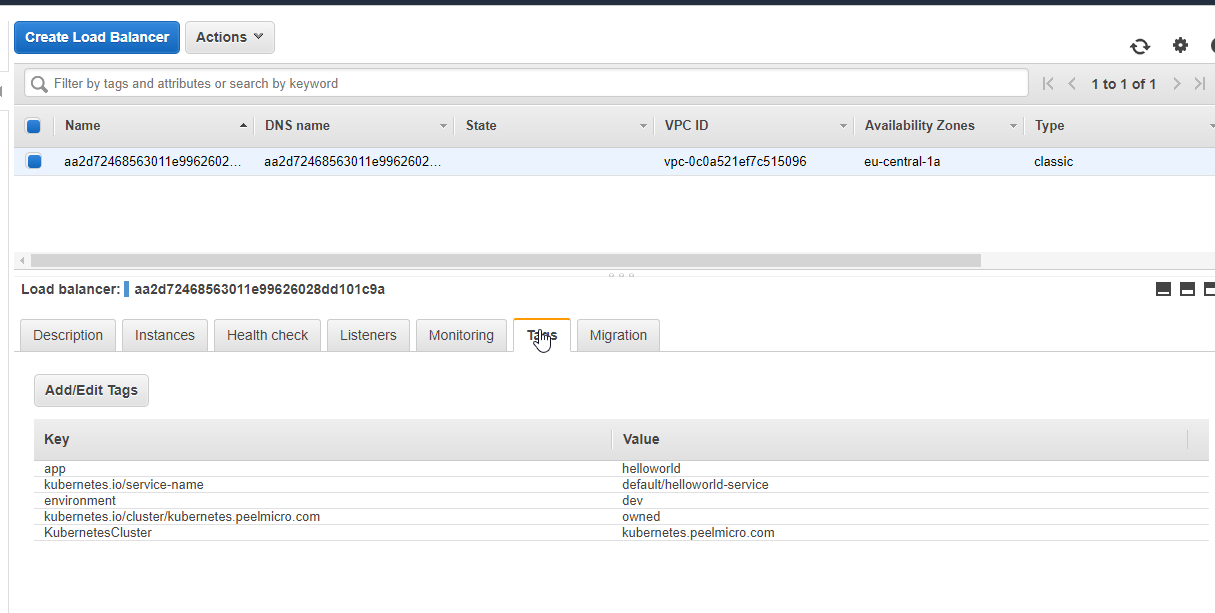

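- We are going to use the elb-tls/helloworld-service.yml document to create a LoadBalancer service in front of the deployment. The value of the aws-load-balancer-ssl-cert annotation must be replaced with the ARN copied earlier.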

elb-tls/helloworld-service.yml

apiVersion: v1
kind: Service
metadata:
  name: helloworld-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "arn:aws:acm:eu-central-1:972569889348:certificate/1d4a1720-51fd-4e58-8095-cc8abddfca96" #replace this value
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "http"
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443"
    service.beta.kubernetes.io/aws-load-balancer-connection-draining-enabled: "true"
    service.beta.kubernetes.io/aws-load-balancer-connection-draining-timeout: "60"
    service.beta.kubernetes.io/aws-load-balancer-additional-resource-tags: "environment=dev,app=helloworld"
spec:
  ports:
  - name: http
    port: 80
    targetPort: nodejs-port
    protocol: TCP
  - name: https
    port: 443
    targetPort: nodejs-port
    protocol: TCP
  selector:
    app: helloworld
  type: LoadBalancer

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/elb-tls# kubectl create -f helloworld.yml

deployment.extensions/helloworld-deployment created

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/elb-tls# kubectl create -f helloworld-service.yml

service/helloworld-service created
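The annotations decide which ELB listeners terminate TLS. Ports listed in aws-load-balancer-ssl-ports use the ACM certificate. With aws-load-balancer-backend-protocol set to http, the ELB speaks plain HTTP to the pods on every port. This rough Python sketch reflects my understanding of how the legacy in-tree AWS cloud provider maps these annotations to (frontend, backend) listener protocols. It is an approximation, not the provider's code, and the function name elb_listeners is hypothetical.

```python
PREFIX = "service.beta.kubernetes.io/aws-load-balancer-"

# Annotations and ports copied from elb-tls/helloworld-service.yml.
ANNOTATIONS = {
    PREFIX + "ssl-cert": "arn:aws:acm:eu-central-1:972569889348:certificate/1d4a1720-51fd-4e58-8095-cc8abddfca96",
    PREFIX + "backend-protocol": "http",
    PREFIX + "ssl-ports": "443",
}
PORTS = [("http", 80), ("https", 443)]


def elb_listeners(annotations, ports):
    """Approximate (frontend, backend) protocol of each ELB listener."""
    has_cert = PREFIX + "ssl-cert" in annotations
    backend = annotations.get(PREFIX + "backend-protocol", "").lower()
    raw = annotations.get(PREFIX + "ssl-ports")
    # Assumption: no ssl-ports annotation (or "*") means every port uses the cert.
    ssl_ports = None if raw is None or raw.strip() == "*" else {p.strip() for p in raw.split(",")}

    listeners = {}
    for name, port in ports:
        uses_cert = has_cert and (ssl_ports is None or str(port) in ssl_ports or name in ssl_ports)
        if uses_cert:
            # TLS ends at the ELB; HTTP-style backends get an HTTPS listener.
            front = "HTTPS" if backend in ("http", "https") else "SSL"
            listeners[port] = (front, (backend or "tcp").upper())
        elif backend == "http":
            listeners[port] = ("HTTP", "HTTP")
        else:
            listeners[port] = ("TCP", "TCP")
    return listeners


if __name__ == "__main__":
    print(elb_listeners(ANNOTATIONS, PORTS))
    # {80: ('HTTP', 'HTTP'), 443: ('HTTPS', 'HTTP')}
```

This matches the tests that follow. Plain curl on port 80 and https on port 443 both reach the same Node.js port, because TLS is removed at the load balancer.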

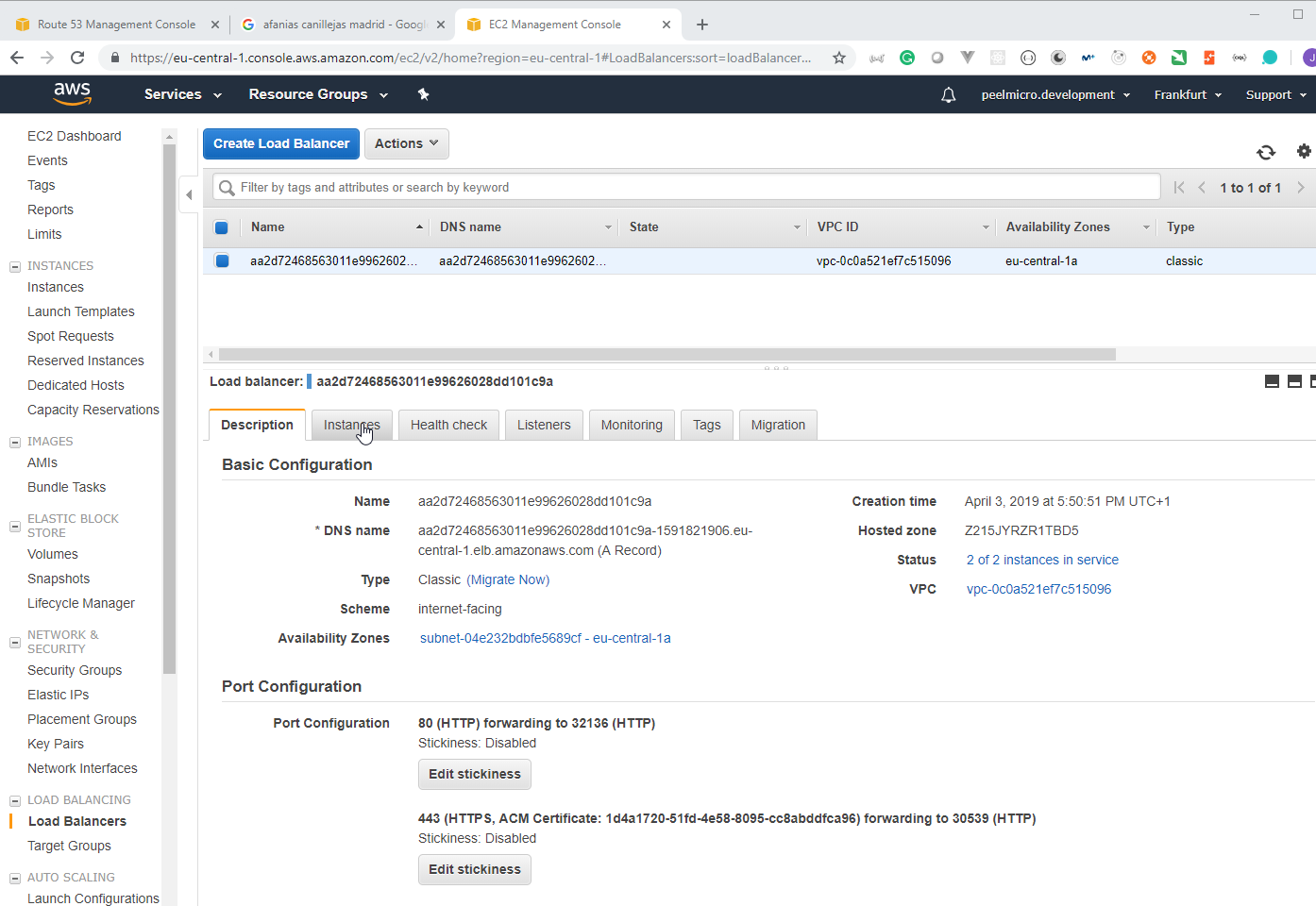

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/elb-tls# kubectl get services -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

helloworld-service LoadBalancer 100.65.230.45 aa2d72468563011e99626028dd101c9a-1591821906.eu-central-1.elb.amazonaws.com 80:32136/TCP,443:30539/TCP 56s app=helloworld

kubernetes ClusterIP 100.64.0.1 <none> 443/TCP 12h <none>

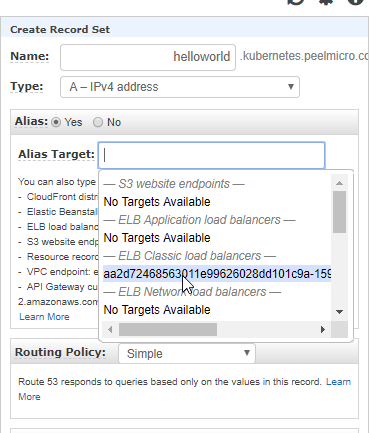

- We have to go back to Route 53 and point helloworld.kubernetes.peelmicro.com at the load balancer

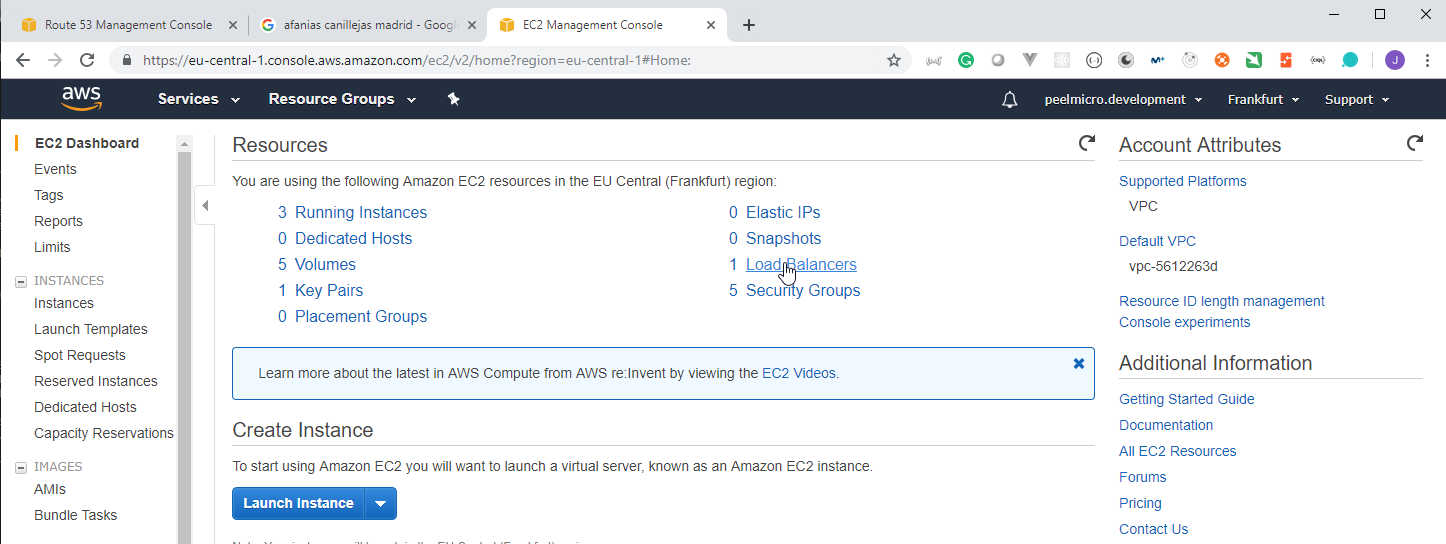

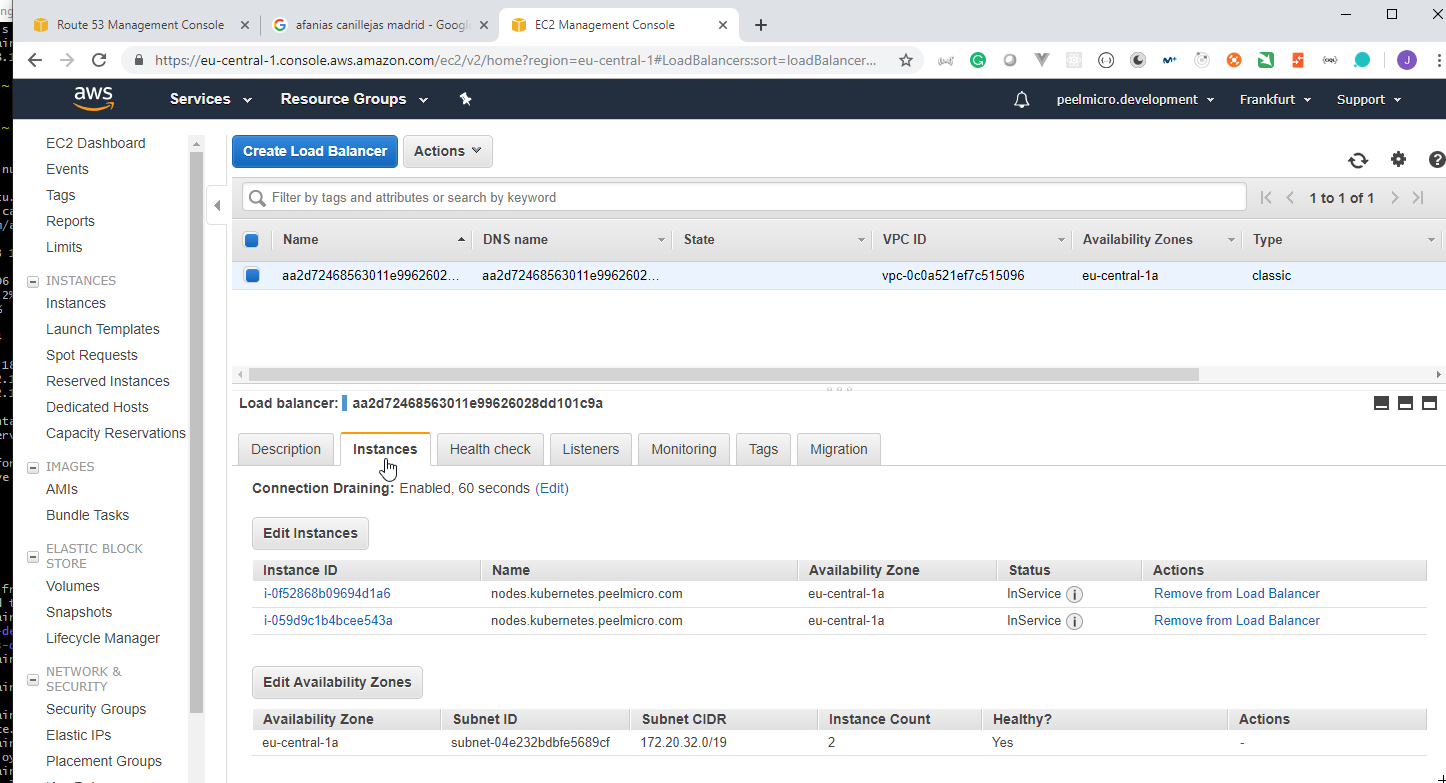

- We can now go to the Load Balancer

- We can now test it

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/elb-tls# curl aa2d72468563011e99626028dd101c9a-1591821906.eu-central-1.elb.amazonaws.com

Hello World!root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/elb-tls#

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course/learn-devops-the-complete-kubernetes-course/elb-tls# curl https://aa2d72468563011e99626028dd101c9a-1591821906.eu-central-1.elb.amazonaws.com

curl: (51) SSL: no alternative certificate subject name matches target host name 'aa2d72468563011e99626028dd101c9a-159Connection reset by 68.183.44.204 port 22

root@ubuntu-s-1vcpu-2gb-lon1-01:~# curl https://aa2d72468563011e99626028dd101c9a-1591821906.eu-central-1.elb.amazonaws.com -k

Hello World!root@ubuntu-s-1vcpu-2gb-lon1-01:~#

root@ubuntu-s-1vcpu-2gb-lon1-01:~# curl https://helloworld.kubernetes.peelmicro.com

Hello World!root@ubuntu-s-1vcpu-2gb-lon1-01:~#

root@ubuntu-s-1vcpu-2gb-lon1-01:~# curl helloworld.kubernetes.peelmicro.com

Hello World!root@ubuntu-s-1vcpu-2gb-lon1-01:~#
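The curl error 51 above happens because the certificate is issued for helloworld.kubernetes.peelmicro.com. The generated ELB hostname is not one of its names, so verification fails. -k skips verification, and the Route 53 name verifies normally. This simplified Python sketch of the name check covers exact matches and a single left-most "*" label, which is a subset of the real rules. It assumes the certificate lists only the name requested earlier.

```python
def hostname_matches(pattern: str, host: str) -> bool:
    """Simplified certificate name check: exact match, or a '*.' wildcard that
    stands for exactly one left-most label (no partial-label wildcards)."""
    pattern = pattern.lower().rstrip(".")
    host = host.lower().rstrip(".")
    if pattern.startswith("*."):
        first_label, _, rest = host.partition(".")
        return bool(first_label) and rest == pattern[2:]
    return pattern == host


# Assumption: the ACM certificate only lists the name requested earlier.
CERT_NAMES = ["helloworld.kubernetes.peelmicro.com"]
ELB_HOST = "aa2d72468563011e99626028dd101c9a-1591821906.eu-central-1.elb.amazonaws.com"

if __name__ == "__main__":
    for host in (ELB_HOST, "helloworld.kubernetes.peelmicro.com"):
        ok = any(hostname_matches(name, host) for name in CERT_NAMES)
        print(host, "->", "verifies" if ok else "name mismatch (curl error 51 unless -k)")
```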

- We need to terminate the cluster:


root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops delete cluster kubernetes.peelmicro.com --state=s3://kubernetes.peelmicro.com --yes

TYPE NAME ID

autoscaling-config master-eu-central-1a.masters.kubernetes.peelmicro.com-20190403042402 master-eu-central-1a.masters.kubernetes.peelmicro.com-20190403042402

autoscaling-config nodes.kubernetes.peelmicro.com-20190403042402 nodes.kubernetes.peelmicro.com-20190403042402

autoscaling-group master-eu-central-1a.masters.kubernetes.peelmicro.com master-eu-central-1a.masters.kubernetes.peelmicro.com

autoscaling-group nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

dhcp-options kubernetes.peelmicro.com dopt-07b67c9820a785fc3

iam-instance-profile masters.kubernetes.peelmicro.com masters.kubernetes.peelmicro.com

iam-instance-profile nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

iam-role masters.kubernetes.peelmicro.com masters.kubernetes.peelmicro.com

iam-role nodes.kubernetes.peelmicro.com nodes.kubernetes.peelmicro.com

instance master-eu-central-1a.masters.kubernetes.peelmicro.com i-0c5bfab9c749d5e77

instance nodes.kubernetes.peelmicro.com i-059d9c1b4bcee543a

instance nodes.kubernetes.peelmicro.com i-0f52868b09694d1a6

internet-gateway kubernetes.peelmicro.com igw-083b4c97ec6d31f8c

keypair kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

load-balancer aa2d72468563011e99626028dd101c9a

route-table kubernetes.peelmicro.com rtb-007ab600d9cbcdb90

route53-record api.internal.kubernetes.peelmicro.com. Z11A7EHHWB1KHG/api.internal.kubernetes.peelmicro.com.

route53-record api.kubernetes.peelmicro.com. Z11A7EHHWB1KHG/api.kubernetes.peelmicro.com.

route53-record etcd-a.internal.kubernetes.peelmicro.com. Z11A7EHHWB1KHG/etcd-a.internal.kubernetes.peelmicro.com.

route53-record etcd-events-a.internal.kubernetes.peelmicro.com. Z11A7EHHWB1KHG/etcd-events-a.internal.kubernetes.peelmicro.com.

security-group sg-00b854161b4394ca5

security-group masters.kubernetes.peelmicro.com sg-0e2ec7830d974a5ff

security-group nodes.kubernetes.peelmicro.com sg-078a333d7c9f90aec

subnet eu-central-1a.kubernetes.peelmicro.com subnet-04e232bdbfe5689cf

volume a.etcd-events.kubernetes.peelmicro.com vol-0bcf3603d4ebaebaa

volume a.etcd-main.kubernetes.peelmicro.com vol-07fb28cb26783cc8e

vpc kubernetes.peelmicro.com vpc-0c0a521ef7c515096

load-balancer:aa2d72468563011e99626028dd101c9a ok

keypair:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e ok

autoscaling-group:master-eu-central-1a.masters.kubernetes.peelmicro.com ok

autoscaling-group:nodes.kubernetes.peelmicro.com ok

route53-record:Z11A7EHHWB1KHG/etcd-a.internal.kubernetes.peelmicro.com. ok

.

.

.

dhcp-options:dopt-07b67c9820a785fc3

route-table:rtb-007ab600d9cbcdb90

internet-gateway:igw-083b4c97ec6d31f8c

vpc:vpc-0c0a521ef7c515096

subnet:subnet-04e232bdbfe5689cf ok

security-group:sg-00b854161b4394ca5 ok

internet-gateway:igw-083b4c97ec6d31f8c ok

route-table:rtb-007ab600d9cbcdb90 ok

vpc:vpc-0c0a521ef7c515096 ok

dhcp-options:dopt-07b67c9820a785fc3 ok

Deleted kubectl config for kubernetes.peelmicro.com

Deleted cluster: "kubernetes.peelmicro.com"

Section: 5. Packaging and Deploying on Kubernetes

95. Introduction to Helm

96. Demo: Helm

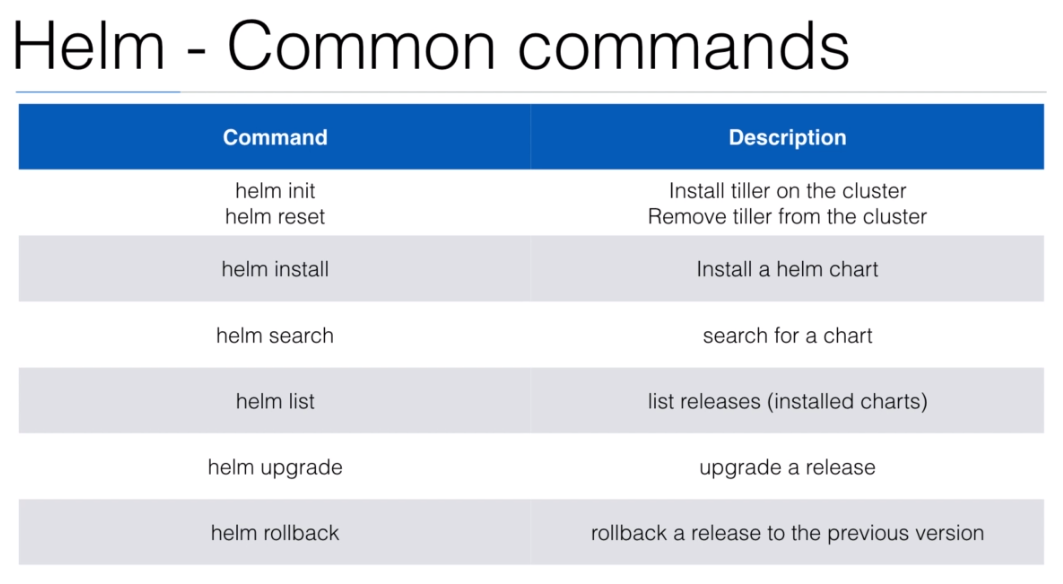

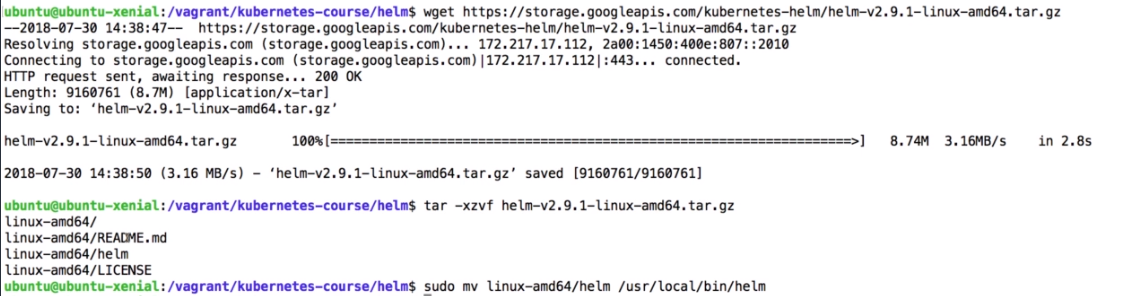

Installing Helm is first explained in the "25. Materials: Install HELM binary and activate HELM user account in your cluster" chapter of the Learn DevOps Helm/Helmfile Kubernetes deployment Udemy course. It can also be installed by executing:

1. Install helm

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

tar -xzvf helm-v2.11.0-linux-amd64.tar.gz

sudo mv linux-amd64/helm /usr/local/bin/helm

ubuntu@kubernetes-master:~$ wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

--2019-03-24 17:46:01-- https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

Resolving storage.googleapis.com (storage.googleapis.com)... 216.58.214.16, 2a00:1450:4009:804::2010

Connecting to storage.googleapis.com (storage.googleapis.com)|216.58.214.16|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 19149273 (18M) [application/x-tar]

Saving to: ‘helm-v2.11.0-linux-amd64.tar.gz’

helm-v2.11.0-linux-amd64.tar.gz 100%[=======================================================================================>] 18.26M 46.3MB/s in 0.4s

2019-03-24 17:46:02 (46.3 MB/s) - ‘helm-v2.11.0-linux-amd64.tar.gz’ saved [19149273/19149273]

ubuntu@kubernetes-master:~$ tar -xzvf helm-v2.11.0-linux-amd64.tar.gz

linux-amd64/

linux-amd64/tiller

linux-amd64/README.md

linux-amd64/helm

linux-amd64/LICENSE

ubuntu@kubernetes-master:~$ sudo mv linux-amd64/helm /usr/local/bin/helm

ubuntu@kubernetes-master:~$ helm -h

The Kubernetes package manager

To begin working with Helm, run the 'helm init' command:

$ helm init

This will install Tiller to your running Kubernetes cluster.

It will also set up any necessary local configuration.

Common actions from this point include:

- helm search: search for charts

- helm fetch: download a chart to your local directory to view

- helm install: upload the chart to Kubernetes

- helm list: list releases of charts

Environment:

$HELM_HOME set an alternative location for Helm files. By default, these are stored in ~/.helm

$HELM_HOST set an alternative Tiller host. The format is host:port

$HELM_NO_PLUGINS disable plugins. Set HELM_NO_PLUGINS=1 to disable plugins.

$TILLER_NAMESPACE set an alternative Tiller namespace (default "kube-system")

$KUBECONFIG set an alternative Kubernetes configuration file (default "~/.kube/config")

Usage:

helm [command]

Available Commands:

completion Generate autocompletions script for the specified shell (bash or zsh)

create create a new chart with the given name

delete given a release name, delete the release from Kubernetes

dependency manage a chart's dependencies

fetch download a chart from a repository and (optionally) unpack it in local directory

get download a named release

history fetch release history

home displays the location of HELM_HOME

init initialize Helm on both client and server

inspect inspect a chart

install install a chart archive

lint examines a chart for possible issues

list list releases

package package a chart directory into a chart archive

plugin add, list, or remove Helm plugins

repo add, list, remove, update, and index chart repositories

reset uninstalls Tiller from a cluster

rollback roll back a release to a previous revision

search search for a keyword in charts

serve start a local http web server

status displays the status of the named release

template locally render templates

test test a release

upgrade upgrade a release

verify verify that a chart at the given path has been signed and is valid

version print the client/server version information

Flags:

--debug enable verbose output

--home string location of your Helm config. Overrides $HELM_HOME (default "/root/.helm")

--host string address of Tiller. Overrides $HELM_HOST

--kube-context string name of the kubeconfig context to use

--tiller-connection-timeout int the duration (in seconds) Helm will wait to establish a connection to tiller (default 300)

--tiller-namespace string namespace of Tiller (default "kube-system")

Use "helm [command] --help" for more information about a command.

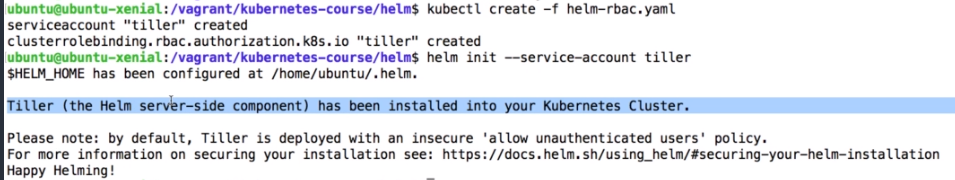

2. Initialize helm

kubectl create -f helm-rbac.yaml

helm init --service-account tiller

helm-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/helm$ kubectl create -f helm-rbac.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course/helm$ helm init --service-account tiller

Creating /home/ubuntu/.helm

Creating /home/ubuntu/.helm/repository

Creating /home/ubuntu/.helm/repository/cache

Creating /home/ubuntu/.helm/repository/local

Creating /home/ubuntu/.helm/plugins

Creating /home/ubuntu/.helm/starters

Creating /home/ubuntu/.helm/cache/archive

Creating /home/ubuntu/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /home/ubuntu/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

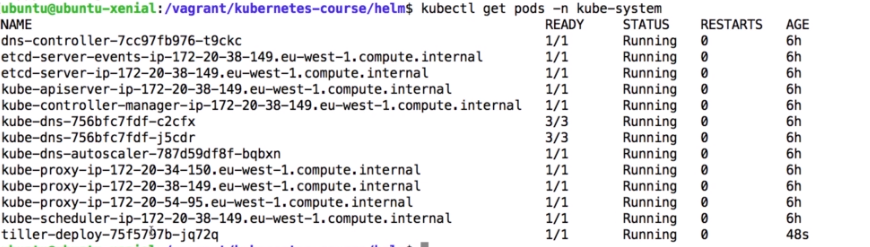

ubuntu@kubernetes-master:~$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

canal-8chhh 3/3 Running 0 24d

canal-nrqm4 3/3 Running 0 24d

coredns-86c58d9df4-h8wc5 1/1 Running 0 24d

coredns-86c58d9df4-x8tbh 1/1 Running 0 24d

etcd-kubernetes-master 1/1 Running 0 24d

kube-apiserver-kubernetes-master 1/1 Running 0 24d

kube-controller-manager-kubernetes-master 1/1 Running 0 24d

kube-proxy-7mttf 1/1 Running 0 24d

kube-proxy-s42q6 1/1 Running 0 24d

kube-scheduler-kubernetes-master 1/1 Running 0 24d

kubernetes-dashboard-57df4db6b-h8bzd 1/1 Running 0 5d12h

tiller-deploy-6cf89f5895-ktwfs 1/1 Running 0 2m5s

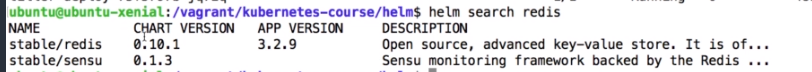

ubuntu@kubernetes-master:~$ helm search redis

NAME CHART VERSION APP VERSION DESCRIPTION

stable/prometheus-redis-exporter 1.0.2 0.28.0 Prometheus exporter for Redis metrics

stable/redis 6.4.3 4.0.14 Open source, advanced key-value store. It is often referr...

stable/redis-ha 3.3.3 5.0.3 Highly available Kubernetes implementation of Redis

stable/sensu 0.2.3 0.28 Sensu monitoring framework backed by the Redis transport

- We can install redis by executing:

ubuntu@kubernetes-master:~$ helm install stable/redis

NAME: joyous-eagle

LAST DEPLOYED: Sun Mar 24 17:58:01 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Secret

NAME AGE

joyous-eagle-redis 0s

==> v1/ConfigMap

joyous-eagle-redis 0s

joyous-eagle-redis-health 0s

==> v1/Service

joyous-eagle-redis-master 0s

joyous-eagle-redis-slave 0s

==> v1beta1/Deployment

joyous-eagle-redis-slave 0s

==> v1beta2/StatefulSet

joyous-eagle-redis-master 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

joyous-eagle-redis-slave-75677ddbf5-2fwm4 0/1 ContainerCreating 0 0s

joyous-eagle-redis-master-0 0/1 Pending 0 0s

NOTES:

** Please be patient while the chart is being deployed **

Redis can be accessed via port 6379 on the following DNS names from within your cluster:

joyous-eagle-redis-master.default.svc.cluster.local for read/write operations

joyous-eagle-redis-slave.default.svc.cluster.local for read-only operations

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace default joyous-eagle-redis -o jsonpath="{.data.redis-password}" | base64 --decode)

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

kubectl run --namespace default joyous-eagle-redis-client --rm --tty -i --restart='Never' \

--env REDIS_PASSWORD=$REDIS_PASSWORD \

--image docker.io/bitnami/redis:4.0.14 -- bash

2. Connect using the Redis CLI:

redis-cli -h joyous-eagle-redis-master -a $REDIS_PASSWORD

redis-cli -h joyous-eagle-redis-slave -a $REDIS_PASSWORD

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/joyous-eagle-redis 6379:6379 &

redis-cli -h 127.0.0.1 -p 6379 -a $REDIS_PASSWORD

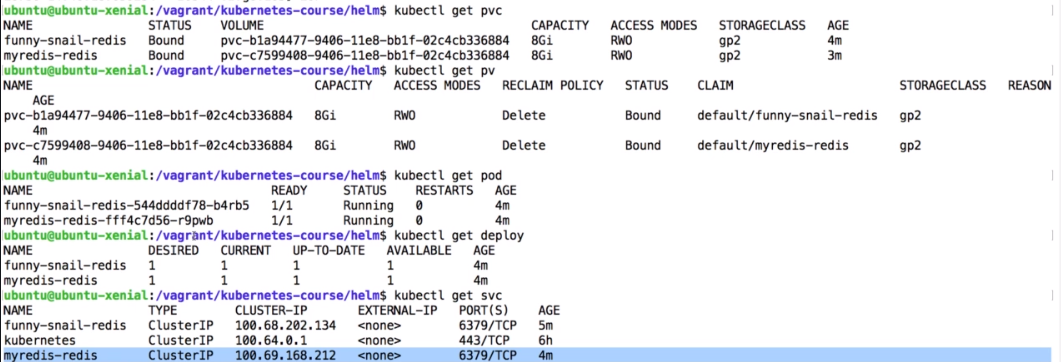

- We can assign a name to the release by using --name releasename. If we don't assign a name, Helm generates a random one (joyous-eagle in the previous install)

ubuntu@kubernetes-master:~$ helm install --name myredis stable/redis

NAME: myredis

LAST DEPLOYED: Sun Mar 24 18:01:14 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Secret

NAME AGE

myredis 0s

==> v1/ConfigMap

myredis 0s

myredis-health 0s

==> v1/Service

myredis-master 0s

myredis-slave 0s

==> v1beta1/Deployment

myredis-slave 0s

==> v1beta2/StatefulSet

myredis-master 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

myredis-slave-6d77cc8cdf-4hw59 0/1 ContainerCreating 0 0s

myredis-master-0 0/1 Pending 0 0s

NOTES:

** Please be patient while the chart is being deployed **

Redis can be accessed via port 6379 on the following DNS names from within your cluster:

myredis-master.default.svc.cluster.local for read/write operations

myredis-slave.default.svc.cluster.local for read-only operations

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace default myredis -o jsonpath="{.data.redis-password}" | base64 --decode)

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

kubectl run --namespace default myredis-client --rm --tty -i --restart='Never' \

--env REDIS_PASSWORD=$REDIS_PASSWORD \

--image docker.io/bitnami/redis:4.0.14 -- bash

2. Connect using the Redis CLI:

redis-cli -h myredis-master -a $REDIS_PASSWORD

redis-cli -h myredis-slave -a $REDIS_PASSWORD

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/myredis 6379:6379 &

redis-cli -h 127.0.0.1 -p 6379 -a $REDIS_PASSWORD

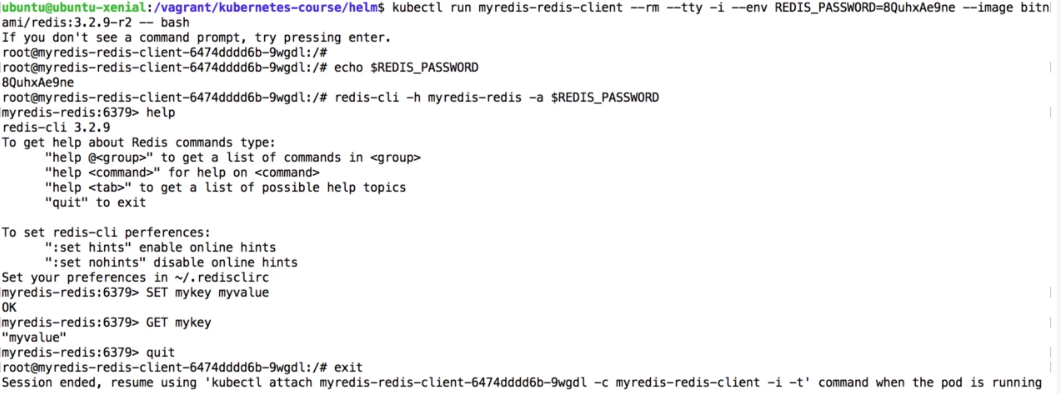

- Get the Redis password by executing:

ubuntu@kubernetes-master:~$ kubectl get secret --namespace default myredis -o yaml

apiVersion: v1

data:

redis-password: WEF3QUY4T09OWg==

kind: Secret

metadata:

creationTimestamp: "2019-03-24T18:01:14Z"

labels:

app: redis

chart: redis-6.4.3

heritage: Tiller

release: myredis

name: myredis

namespace: default

resourceVersion: "2808511"

selfLink: /api/v1/namespaces/default/secrets/myredis

uid: d08bef97-4e5e-11e9-abeb-babbda5ce12f

type: Opaque

ubuntu@kubernetes-master:~$ echo 'WEF3QUY4T09OWg==' | base64 --decode

XAwAF8OONZubuntu@kubernetes-master:~$
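Values under a Secret's `data` field are base64-encoded, so the password has to be decoded before use. The decoded value also has no trailing newline, which is why the prompt appears glued to the password above. Below is a minimal sketch that wraps the `jsonpath` query from the chart's NOTES. It assumes the Secret and key names shown above (`myredis`, `redis-password`); both function names are hypothetical.

```shell
# b64_decode VALUE
# Decodes a base64 string such as the ones stored under a Secret's .data field.
# The trailing echo adds the newline the raw value lacks, so the shell prompt
# no longer ends up on the same line as the password.
b64_decode() {
  printf '%s' "$1" | base64 --decode
  echo
}

# redis_password [RELEASE] [NAMESPACE]
# Hypothetical wrapper around the command printed in the chart NOTES;
# it needs kubectl access to the cluster.
redis_password() {
  local release="${1:-myredis}" ns="${2:-default}"
  kubectl get secret --namespace "$ns" "$release" \
    -o jsonpath='{.data.redis-password}' | base64 --decode
  echo
}

b64_decode 'WEF3QUY4T09OWg=='   # prints XAwAF8OONZ
```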

ubuntu@kubernetes-master:~$ kubectl run --namespace default myredis-client --rm --tty -i --restart='Never' --env REDIS_PASSWORD=XAwAF8OONZ --image docker.io/bitnami/redis:4.0.14 -- bash

If you don't see a command prompt, try pressing enter.

I have no name!@myredis-client:/$ echo $REDIS_PASSWORD

XAwAF8OONZ

I have no name!@myredis-client:/$ redis-cli -h myredis-master -a $REDIS_PASSWORD

Warning: Using a password with '-a' option on the command line interface may not be safe.

I have no name!@myredis-client:/$ redis-cli -h myredis-slave -a $REDIS_PASSWORD

Warning: Using a password with '-a' option on the command line interface may not be safe.

I have no name!@myredis-client:/$ redis-cli -h myredis-redis -a $REDIS_PASSWORD

Warning: Using a password with '-a' option on the command line interface may not be safe.

Could not connect to Redis at myredis-redis:6379: Name or service not known

Could not connect to Redis at myredis-redis:6379: Name or service not known

not connected> exit

not connected> exit

I have no name!@myredis-client:/$ exit

exit

pod "myredis-client" deleted
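`myredis-redis` fails to resolve because no Service with that name exists. The names seen so far (`joyous-eagle-redis-master` versus `myredis-master`) match the common Helm "fullname" helper from the `helm create` scaffold. Under that rule, if the release name already contains the chart name, the release name is used on its own. Otherwise, the release and chart names are joined with a dash and truncated to 63 characters. The sketch below re-implements that rule in shell, assuming stable/redis follows it. It ignores the `fullnameOverride`/`nameOverride` values, and the function name `chart_fullname` is hypothetical.

```shell
# chart_fullname RELEASE CHART
# Sketch of the "fullname" rule used by charts generated with `helm create`
# (fullnameOverride/nameOverride values are not handled here).
chart_fullname() {
  local release="$1" chart="$2" name
  case "$release" in
    *"$chart"*) name="$release" ;;          # release already contains the chart name
    *)          name="$release-$chart" ;;   # otherwise join them with a dash
  esac
  name=$(printf '%.63s' "$name")            # trunc 63
  printf '%s\n' "${name%-}"                 # trimSuffix "-"
}

chart_fullname joyous-eagle redis   # prints joyous-eagle-redis
chart_fullname myredis redis        # prints myredis
chart_fullname myredis redis-ha     # prints myredis-redis-ha
```

The chart then appends `-master` and `-slave` to that prefix, which gives the `myredis-master` and `myredis-slave` hosts that did connect.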

ubuntu@kubernetes-master:~$ helm list

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

joyous-eagle 1 Sun Mar 24 17:58:01 2019 DEPLOYED redis-6.4.3 4.0.14 default

myredis 1 Sun Mar 24 18:01:14 2019 DEPLOYED redis-6.4.3 4.0.14 default

ubuntu@kubernetes-master:~$ helm delete joyous-eagle --purge

release "joyous-eagle" deleted

ubuntu@kubernetes-master:~$ helm delete myredis --purge

release "myredis" deleted
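With several releases installed, the cleanup can be scripted. Below is a minimal sketch that assumes Helm 2, as used here: `helm list --all --short` prints one release name per line, and `--purge` removes the release record so the name can be reused. It deletes every release, so use it with care. In Helm 3 the equivalent command is `helm uninstall`, which purges by default. The function name is hypothetical.

```shell
# purge_all_releases
# Deletes every Helm 2 release (including FAILED/DELETED ones, thanks to --all)
# and purges its release record so the name can be reused.
# WARNING: this removes *all* releases known to the current Tiller.
purge_all_releases() {
  helm list --all --short | while read -r release; do
    [ -n "$release" ] || continue
    helm delete "$release" --purge
  done
}

# Usage (requires helm and Tiller access):
#   purge_all_releases
```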

- We are going to use the redis-ha chart (`stable/redis-ha`) instead.

ubuntu@kubernetes-master:~$ helm install --name myredis stable/redis-ha

NAME: myredis

LAST DEPLOYED: Sun Mar 24 18:21:13 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME AGE

myredis-redis-ha-configmap 0s

myredis-redis-ha-probes 0s

==> v1/ServiceAccount

myredis-redis-ha 0s

==> v1/Role

myredis-redis-ha 0s

==> v1/RoleBinding

myredis-redis-ha 0s

==> v1/Service

myredis-redis-ha-announce-2 0s

myredis-redis-ha-announce-0 0s

myredis-redis-ha-announce-1 0s

myredis-redis-ha 0s

==> v1/StatefulSet

myredis-redis-ha-server 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

myredis-redis-ha-server-0 0/2 Pending 0 0s

NOTES:

Redis can be accessed via port 6379 and Sentinel can be accessed via port 26379 on the following DNS name from within your cluster:

myredis-redis-ha.default.svc.cluster.local

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

kubectl exec -it myredis-redis-ha-server-0 sh -n default

2. Connect using the Redis CLI:

redis-cli -h myredis-redis-ha.default.svc.cluster.local

ubuntu@kubernetes-master:~$ kubectl exec -it myredis-redis-ha-server-0 sh -n default

Defaulting container name to redis.

Use 'kubectl describe pod/myredis-redis-ha-server-0 -n default' to see all of the containers in this pod.

Error from server (BadRequest): pod myredis-redis-ha-server-0 does not have a host assigned
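The exec fails because the pod is still Pending. It has not been scheduled to a node, so there is no container to attach to. Below is a small sketch that waits for the pod to become Ready before opening a shell, using `kubectl wait` (available in recent kubectl versions). It uses the `kubectl exec POD -- COMMAND` form that newer kubectl versions require. The helper name and the 120s timeout are arbitrary choices, and the default container `redis` comes from the "Defaulting container name to redis" message above.

```shell
# exec_when_ready POD [NAMESPACE] [CONTAINER]
# Hypothetical helper: waits up to 120s for POD to report the Ready condition,
# then opens an interactive shell in CONTAINER. If the wait fails or times out
# (e.g. the pod stays Pending), no exec is attempted.
exec_when_ready() {
  local pod="$1" ns="${2:-default}" container="${3:-redis}"
  kubectl wait --namespace "$ns" --for=condition=Ready "pod/$pod" --timeout=120s &&
    kubectl exec -it --namespace "$ns" "$pod" -c "$container" -- sh
}

# Usage against a live cluster:
#   exec_when_ready myredis-redis-ha-server-0
```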

ubuntu@kubernetes-master:~$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/myredis-redis-ha-server-0 0/2 Pending 0 2m15s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25d

service/myredis-redis-ha ClusterIP None <none> 6379/TCP,26379/TCP 2m15s

service/myredis-redis-ha-announce-0 ClusterIP 10.106.76.104 <none> 6379/TCP,26379/TCP 2m15s

service/myredis-redis-ha-announce-1 ClusterIP 10.96.243.207 <none> 6379/TCP,26379/TCP 2m15s

service/myredis-redis-ha-announce-2 ClusterIP 10.106.146.214 <none> 6379/TCP,26379/TCP 2m15s

NAME READY AGE

statefulset.apps/myredis-redis-ha-server 0/3 2m15s
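In `kubectl get` output, the READY column reads `ready/total` containers. `0/2` above therefore means neither of the pod's two containers (`redis` and `sentinel`, as the describe output below shows) is ready. Below is a minimal sketch that flags such pods. It assumes input in the `kubectl get pods` column layout (NAME, READY, STATUS, ...), not the multi-section `kubectl get all` output, and the function name is hypothetical.

```shell
# not_ready_pods
# Reads `kubectl get pods` output on stdin (header line first) and prints the
# NAME and STATUS of every pod whose ready-container count is below the total.
not_ready_pods() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1, $3 }'
}

# Usage against a live cluster:
#   kubectl get pods --namespace default | not_ready_pods
```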

ubuntu@kubernetes-master:~$ kubectl describe pod/myredis-redis-ha-server-0

Name: myredis-redis-ha-server-0

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: <none>

Labels: app=redis-ha

controller-revision-hash=myredis-redis-ha-server-76b8874455

release=myredis

statefulset.kubernetes.io/pod-name=myredis-redis-ha-server-0

Annotations: checksum/init-config: 4466b6b67be4f1cb8366cac314fdc99c24d55025f5f89ae85eed09c156984ed4

checksum/probe-config: 80af60df01676bc55ee405936d1c7022090ff46275f2dbea0a2b358b4c60cb56

Status: Pending

IP:

Controlled By: StatefulSet/myredis-redis-ha-server

Init Containers:

config-init:

Image: redis:5.0.3-alpine

Port: <none>

Host Port: <none>

Command:

sh

Args:

/readonly-config/init.sh

Environment:

SENTINEL_ID_0: 8db94befdf51eca7be3ac3b5fe0612be19e1ebac

SENTINEL_ID_1: ec73f3dfc6ad50c811d535db5b7a4ae8fb22c7f1

SENTINEL_ID_2: 54db059996613c2317efe089f8a543150446479c

Mounts:

/data from data (rw)

/readonly-config from config (ro)

/var/run/secrets/kubernetes.io/serviceaccount from myredis-redis-ha-token-ss7z5 (ro)

Containers:

redis:

Image: redis:5.0.3-alpine

Port: 6379/TCP

Host Port: 0/TCP

Command:

redis-server

Args:

/data/conf/redis.conf

Liveness: exec [sh /probes/readiness.sh 6379] delay=15s timeout=1s period=5s #success=1 #failure=3

Readiness: exec [sh /probes/readiness.sh 6379] delay=15s timeout=1s period=5s #success=1 #failure=3

Environment: <none>

Mounts:

/data from data (rw)

/probes from probes (rw)

/var/run/secrets/kubernetes.io/serviceaccount from myredis-redis-ha-token-ss7z5 (ro)

sentinel:

Image: redis:5.0.3-alpine

Port: 26379/TCP

Host Port: 0/TCP

Command:

redis-sentinel

Args:

/data/conf/sentinel.conf

Liveness: exec [sh /probes/readiness.sh 26379] delay=15s timeout=1s period=5s #success=1 #failure=3

Readiness: exec [sh /probes/readiness.sh 26379] delay=15s timeout=1s period=5s #success=1 #failure=3

Environment: <none>

Mounts:

/data from data (rw)

/probes from probes (rw)

/var/run/secrets/kubernetes.io/serviceaccount from myredis-redis-ha-token-ss7z5 (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

data:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: data-myredis-redis-ha-server-0

ReadOnly: false

config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: myredis-redis-ha-configmap

Optional: false

probes:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: myredis-redis-ha-probes

Optional: false

myredis-redis-ha-token-ss7z5:

Type: Secret (a volume populated by a Secret)

SecretName: myredis-redis-ha-token-ss7z5

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 11s (x7 over 2m54s) default-scheduler pod has unbound immediate PersistentVolumeClaims
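The event `pod has unbound immediate PersistentVolumeClaims` means the StatefulSet's claim (`data-myredis-redis-ha-server-0`) is not bound to any PersistentVolume. The likely cause is that the cluster has neither a matching PersistentVolume nor a default StorageClass that could provision one. Until the claim is bound, the pod cannot be scheduled. Below is a minimal sketch that lists claims still Pending. It assumes the `kubectl get pvc --no-headers` column layout (NAME, STATUS, ...), and the function name is hypothetical.

```shell
# pending_pvcs
# Reads `kubectl get pvc --no-headers` output on stdin and prints the name
# of every PersistentVolumeClaim whose STATUS column is still "Pending".
pending_pvcs() {
  awk '$2 == "Pending" { print $1 }'
}

# Usage against a live cluster:
#   kubectl get pvc --namespace default --no-headers | pending_pvcs
```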

ubuntu@kubernetes-master:~$ helm list

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

myredis 1 Sun Mar 24 18:21:13 2019 DEPLOYED redis-ha-3.3.3 5.0.3 default

ubuntu@kubernetes-master:~$ helm delete myredis --purge

release "myredis" deleted

ubuntu@kubernetes-master:~$ helm install --name myredis --set password=secretpassword stable/redis

NAME: myredis

LAST DEPLOYED: Sun Mar 24 18:30:05 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

myredis-slave-7bbcb87c49-s6nd2 0/1 ContainerCreating 0 0s

myredis-master-0 0/1 Pending 0 0s

==> v1/Secret

NAME AGE

myredis 0s

==> v1/ConfigMap

myredis 0s

myredis-health 0s

==> v1/Service

myredis-master 0s

myredis-slave 0s

==> v1beta1/Deployment

myredis-slave 0s

==> v1beta2/StatefulSet

myredis-master 0s

NOTES:

** Please be patient while the chart is being deployed **

Redis can be accessed via port 6379 on the following DNS names from within your cluster:

myredis-master.default.svc.cluster.local for read/write operations

myredis-slave.default.svc.cluster.local for read-only operations

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace default myredis -o jsonpath="{.data.redis-password}" | base64 --decode)

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

kubectl run --namespace default myredis-client --rm --tty -i --restart='Never' \

--env REDIS_PASSWORD=$REDIS_PASSWORD \

--image docker.io/bitnami/redis:4.0.14 -- bash

2. Connect using the Redis CLI:

redis-cli -h myredis-master -a $REDIS_PASSWORD

redis-cli -h myredis-slave -a $REDIS_PASSWORD

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/myredis 6379:6379 &

redis-cli -h 127.0.0.1 -p 6379 -a $REDIS_PASSWORD
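With `--set password=secretpassword`, the chart presumably stores that value in the `myredis` Secret under `redis-password`, base64-encoded as before. To know which value to expect there, the encoding can be reproduced locally. The sketch below uses `printf` rather than `echo`, because `echo` would append a newline that ends up inside the encoded value. The function name is hypothetical.

```shell
# b64_encode VALUE
# Encodes VALUE the way it appears under a Secret's .data field.
# printf '%s' avoids the trailing newline that `echo` would add (and encode).
b64_encode() {
  printf '%s' "$1" | base64
}

b64_encode secretpassword   # prints c2VjcmV0cGFzc3dvcmQ=
```

The result can be compared with the output of `kubectl get secret --namespace default myredis -o jsonpath="{.data.redis-password}"`.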

ubuntu@kubernetes-master:~$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/myredis-master-0 0/1 Pending 0 62s

pod/myredis-slave-7bbcb87c49-r4s5v 0/1 Running 1 62s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25d

service/myredis-master ClusterIP 10.96.149.24 <none> 6379/TCP 62s

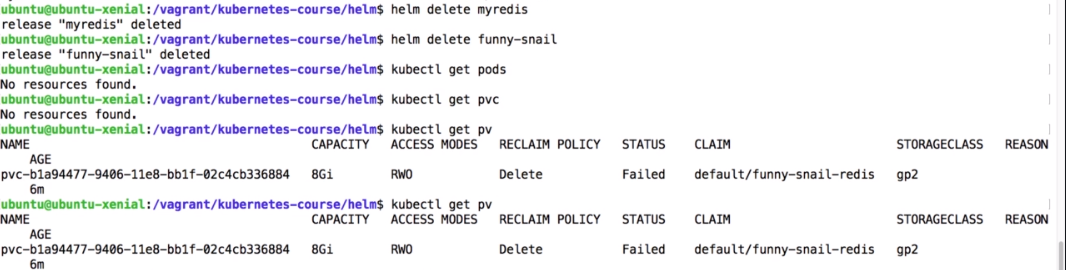

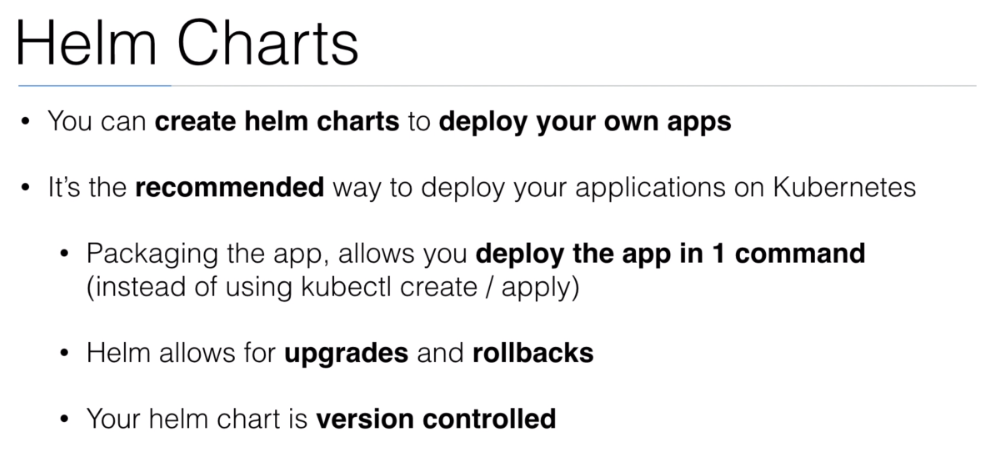

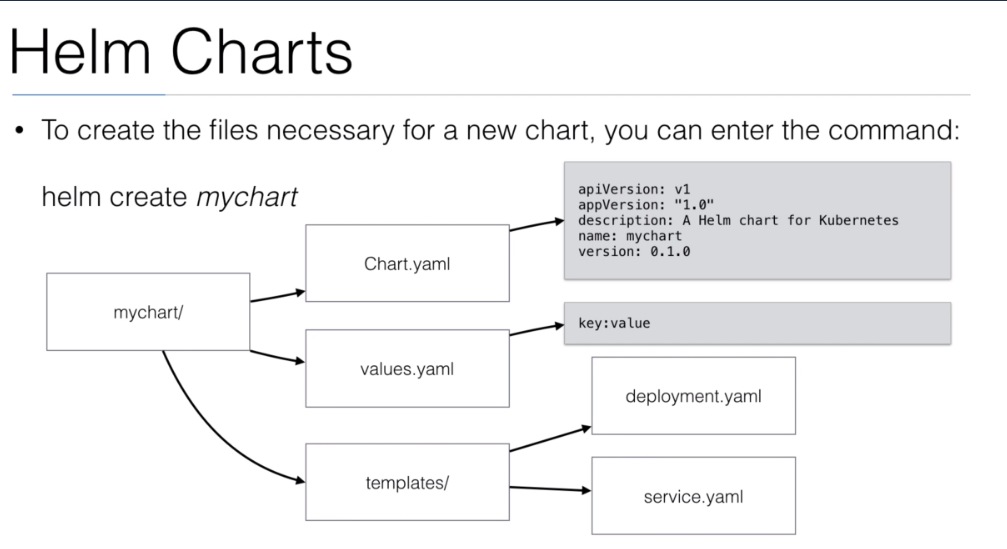

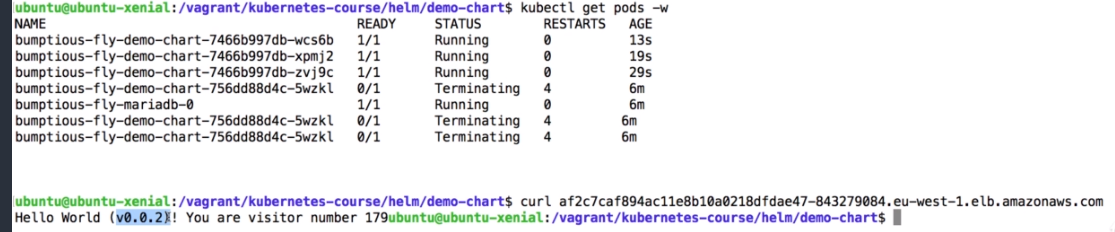

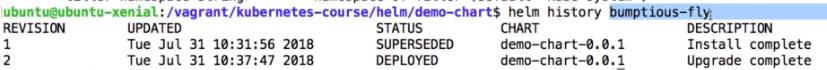

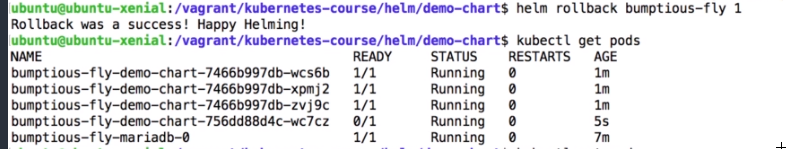

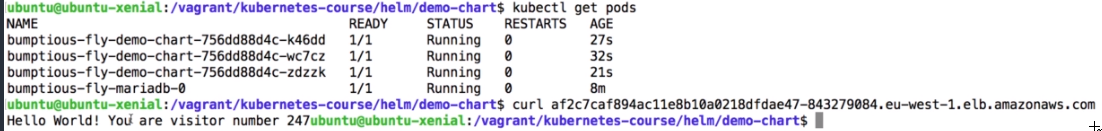

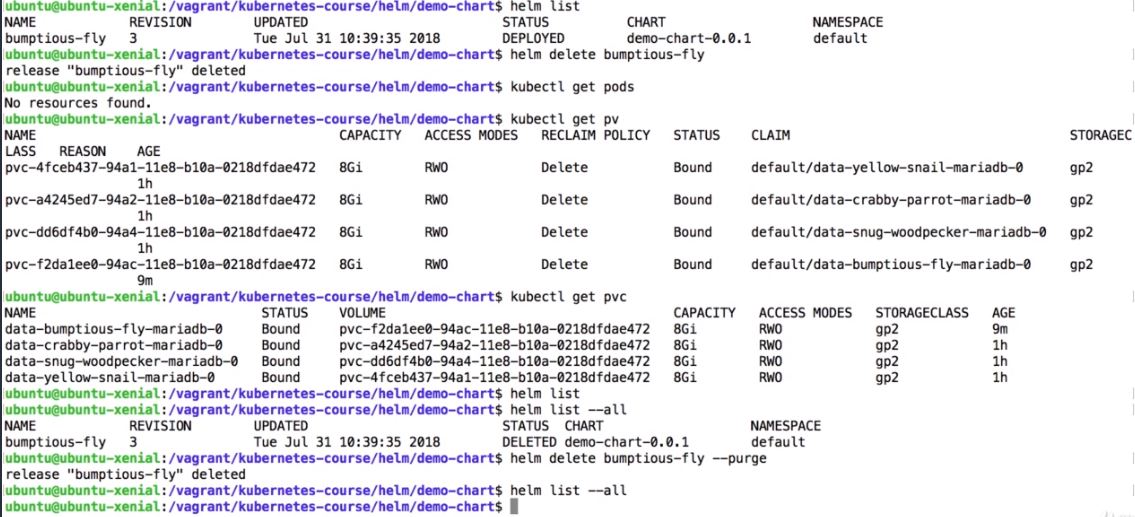

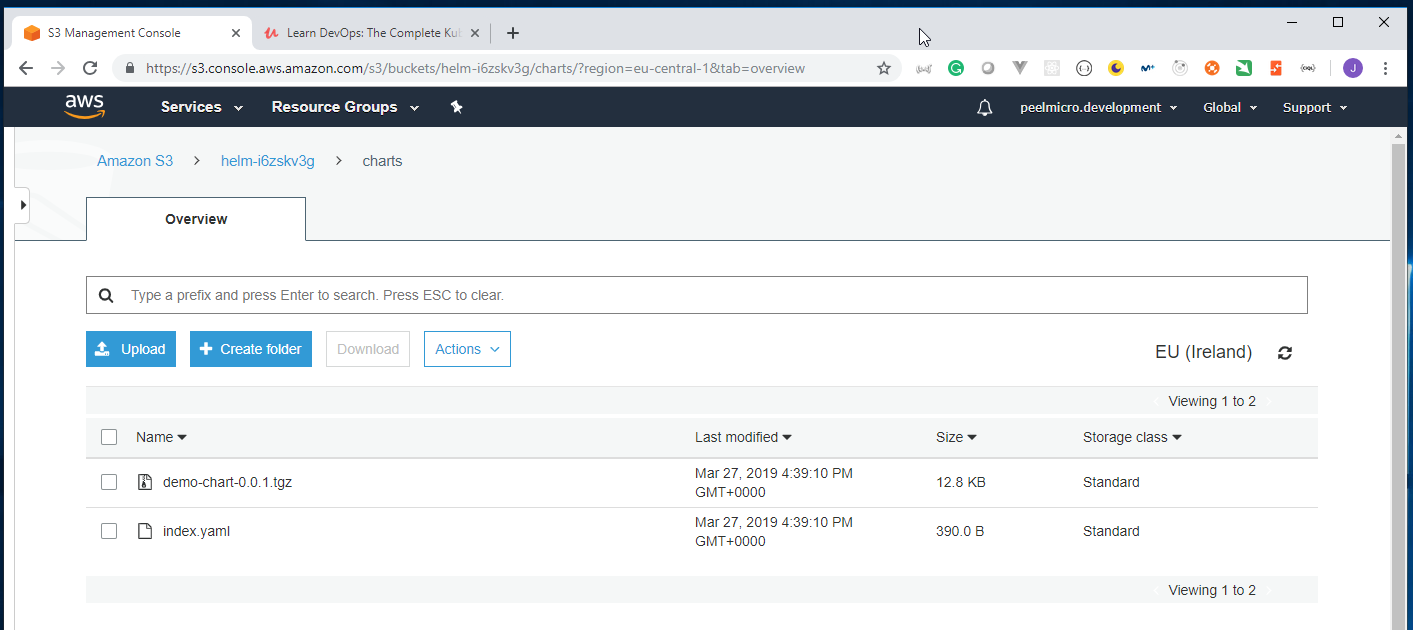

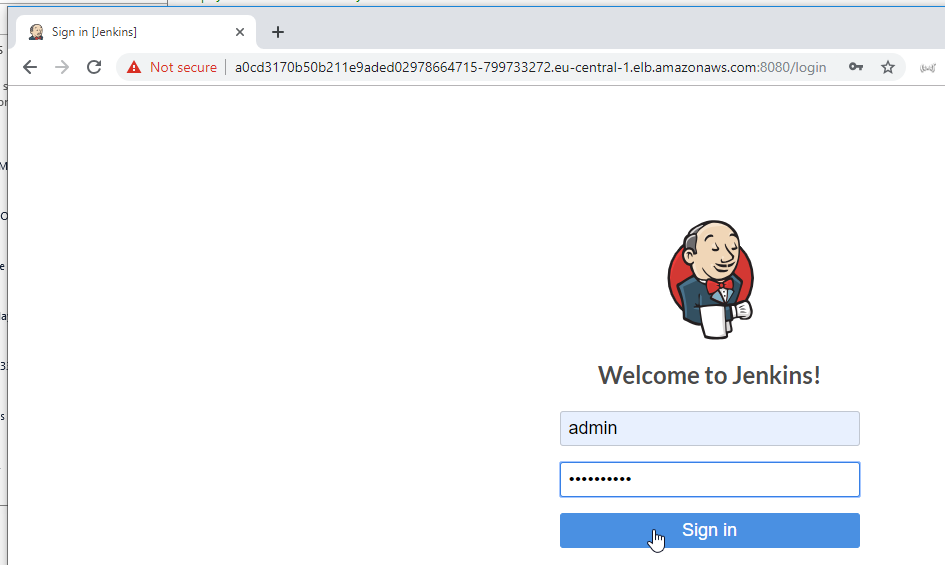

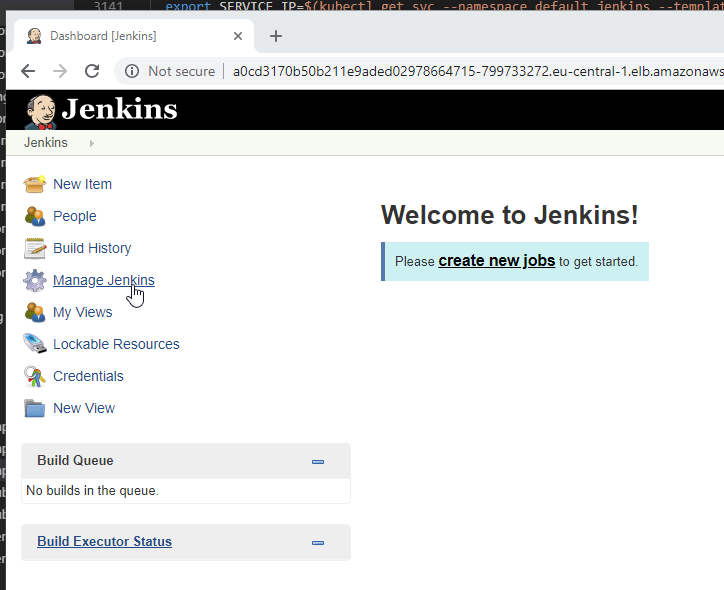

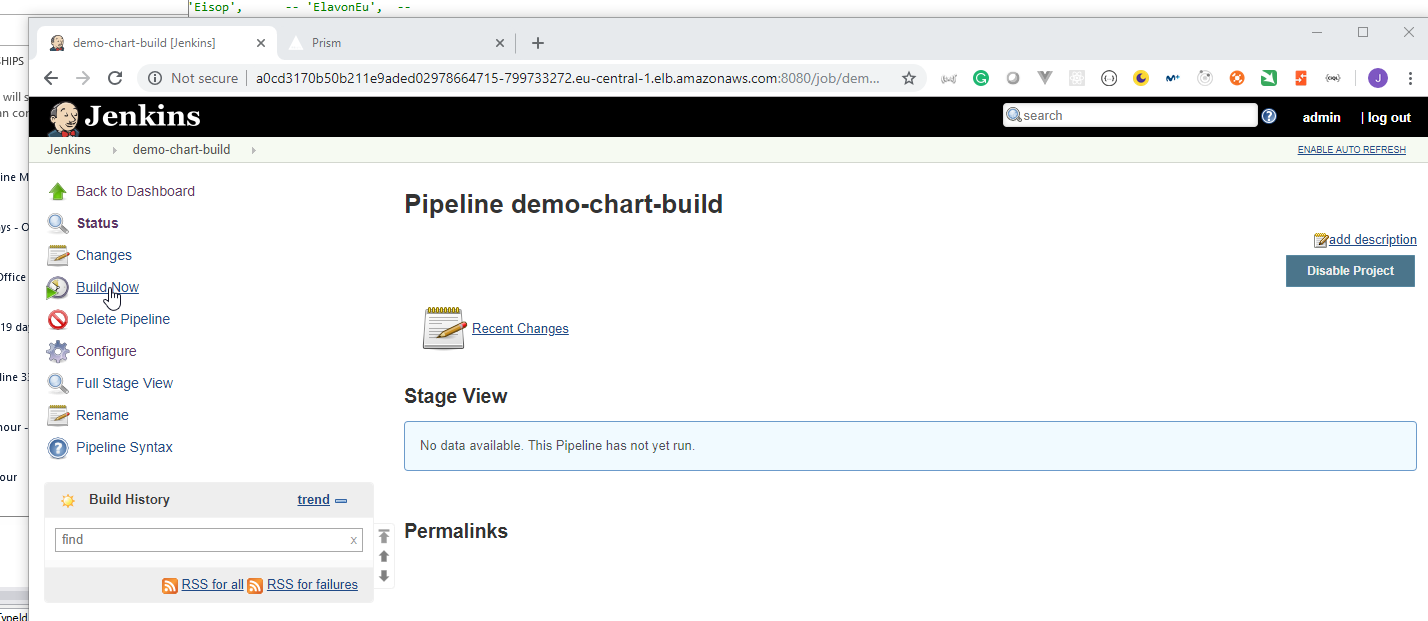

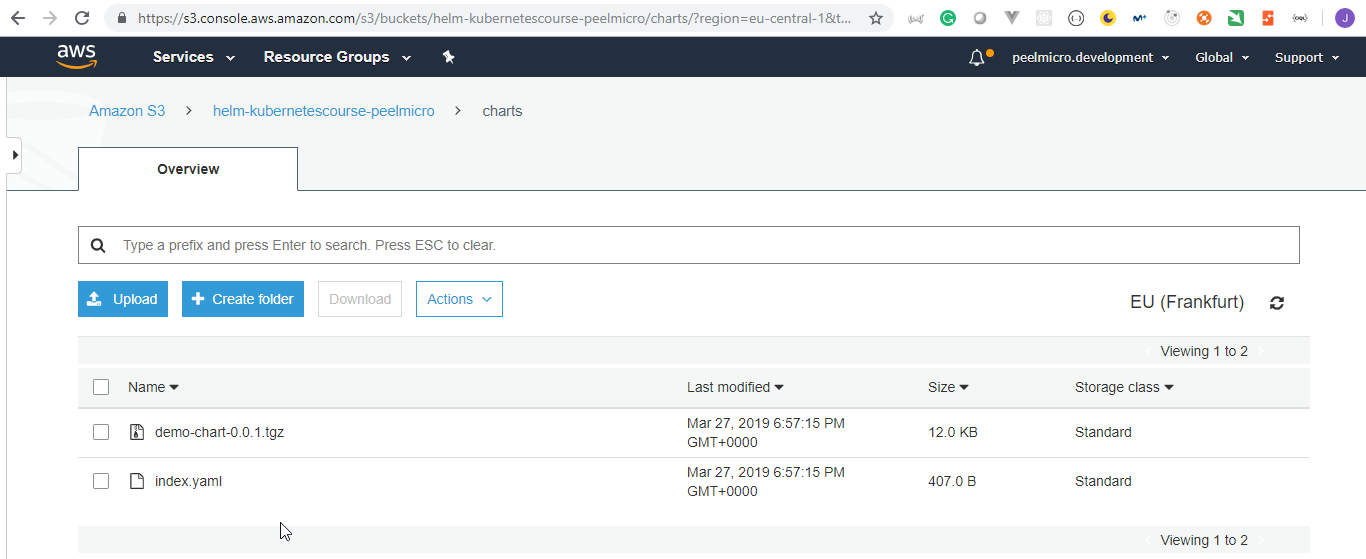

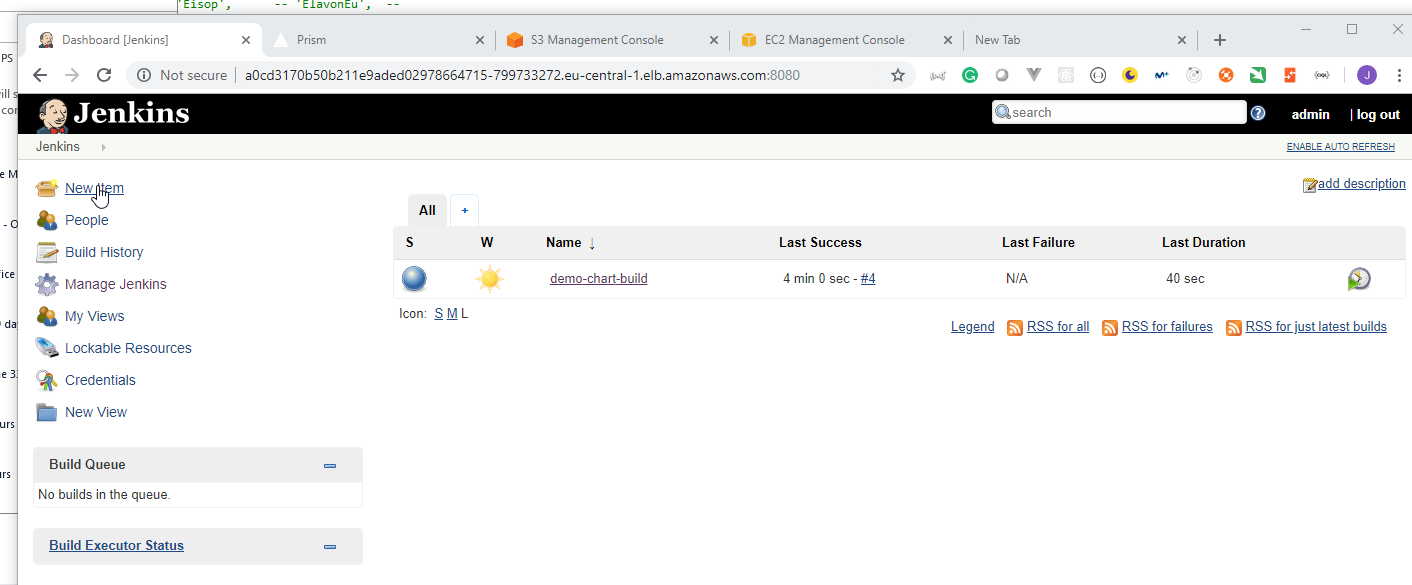

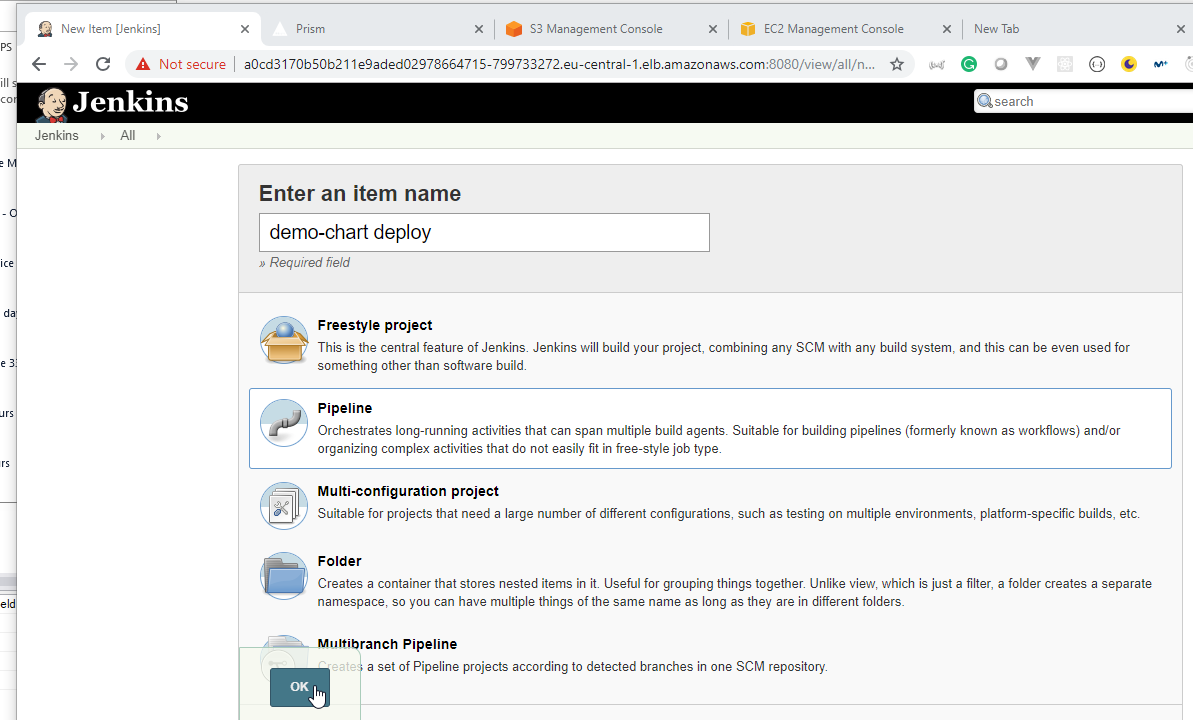

service/myredis-slave ClusterIP 10.100.25.139 <none> 6379/TCP 62s