Learn DevOps: The Complete Kubernetes Course (Part 4)

Github Repositories

- learn-devops-the-complete-kubernetes-course.

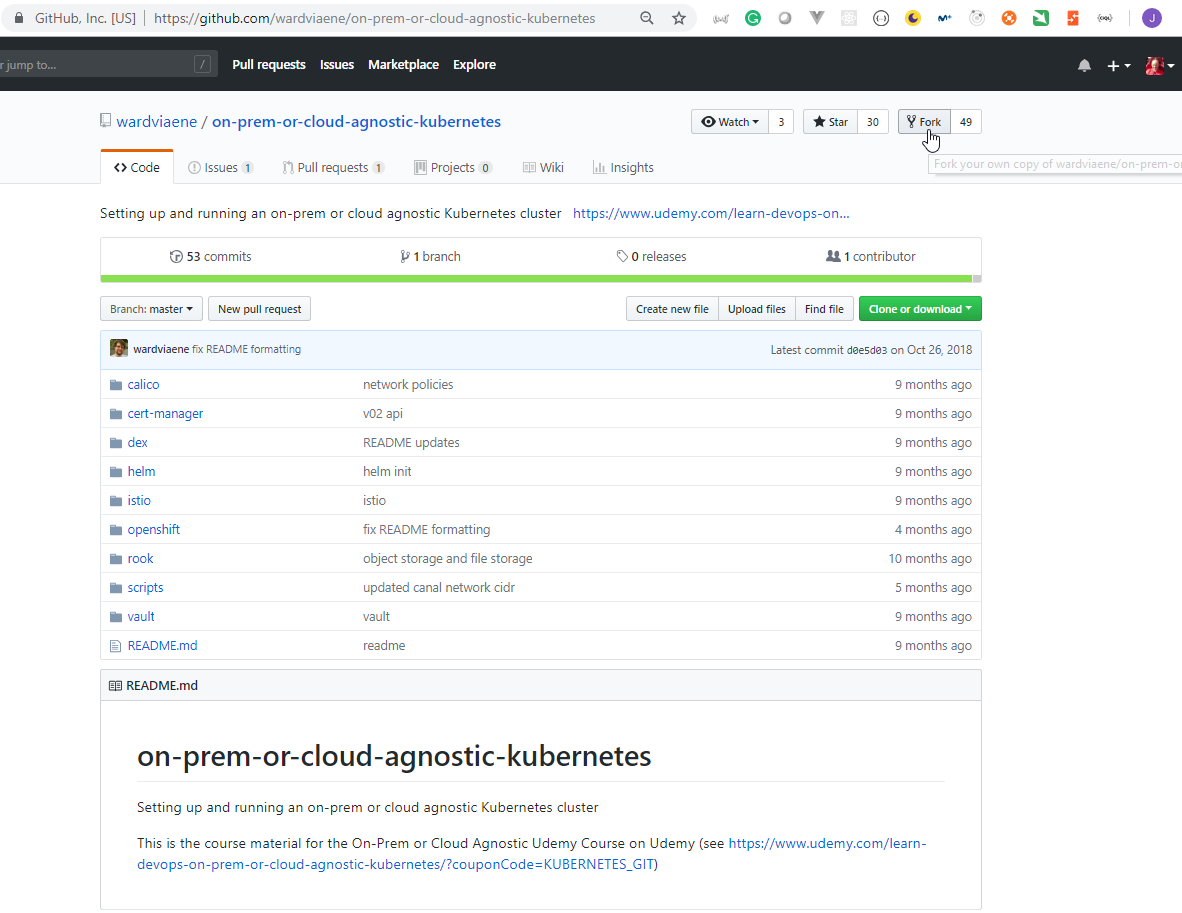

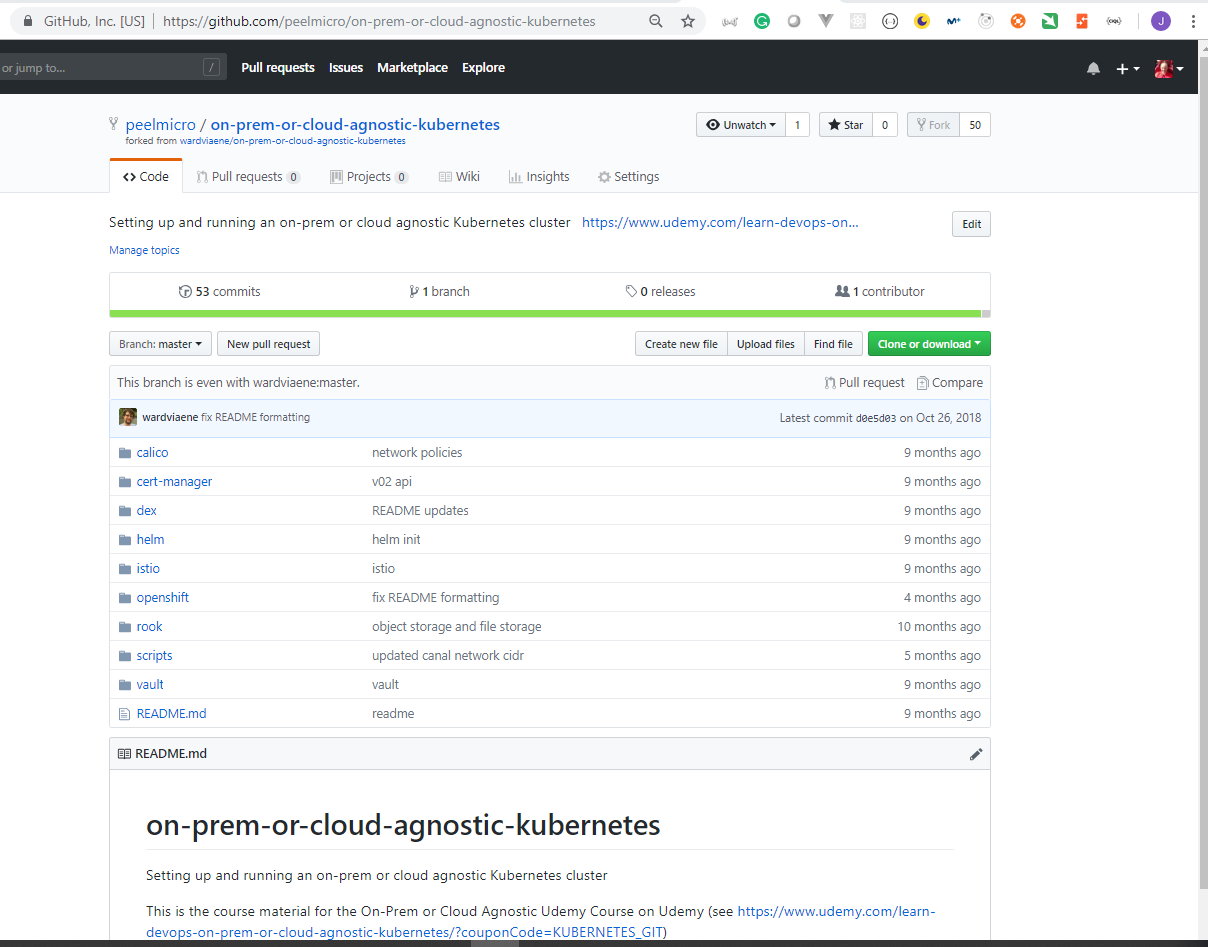

- on-prem-or-cloud-agnostic-kubernetes.

- kubernetes-course.

- http-echo.

The Udemy course Learn DevOps: The Complete Kubernetes Course teaches how Kubernetes runs and manages containerized applications, and how to build, deploy, use, and maintain Kubernetes.

Other parts:

- Learn DevOps: The Complete Kubernetes Course (Part 1)

- Learn DevOps: The Complete Kubernetes Course (Part 2)

- Learn DevOps: The Complete Kubernetes Course (Part 3)

Table of contents

- What I've learned

- Section: 7. Microservices

- 106. Introduction to Istio

- 107. Demo: Istio Installation

- 108. Demo: An Istio enabled app

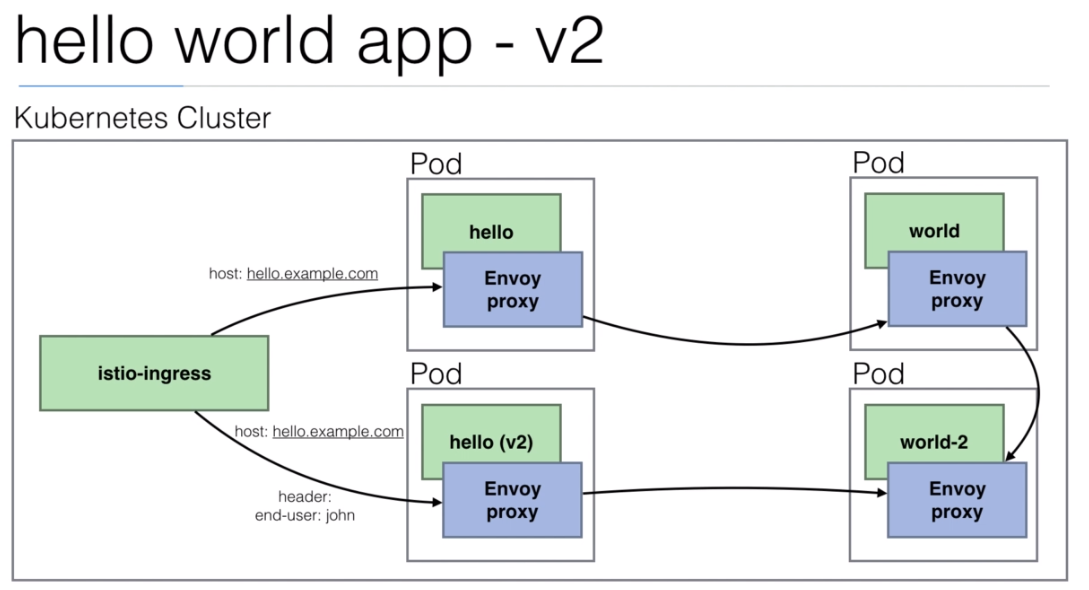

- 109. Demo: Advanced routing with Istio

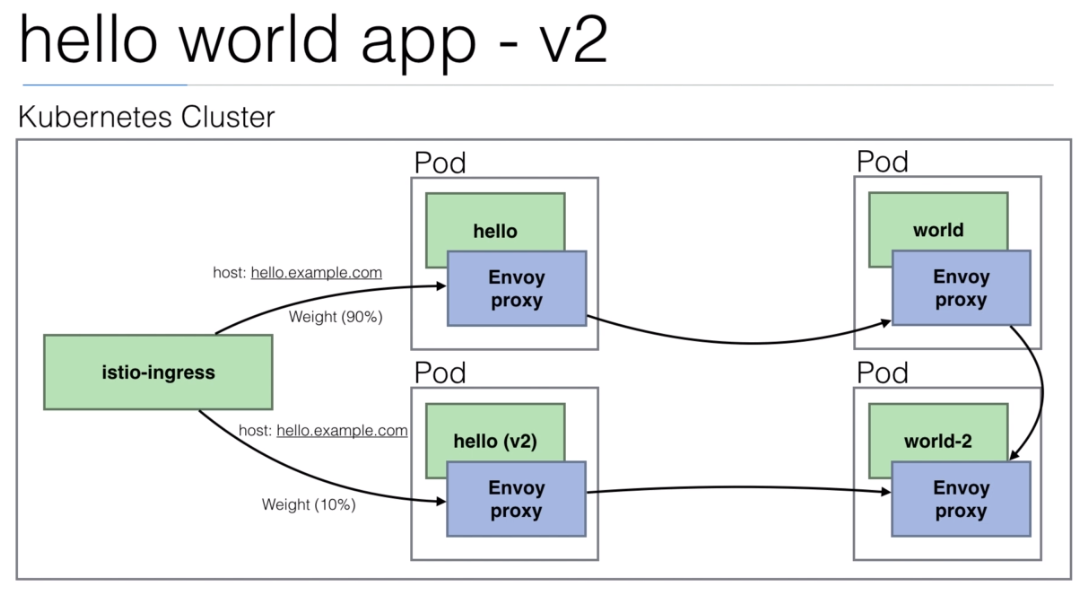

- 110. Demo: Canary Deployments

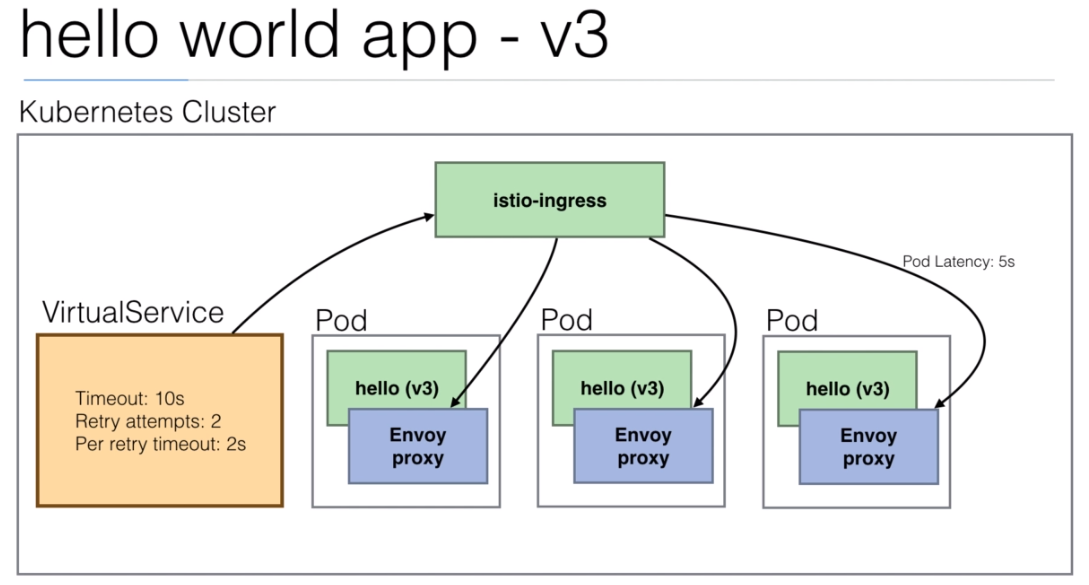

- 111. Demo: Retries

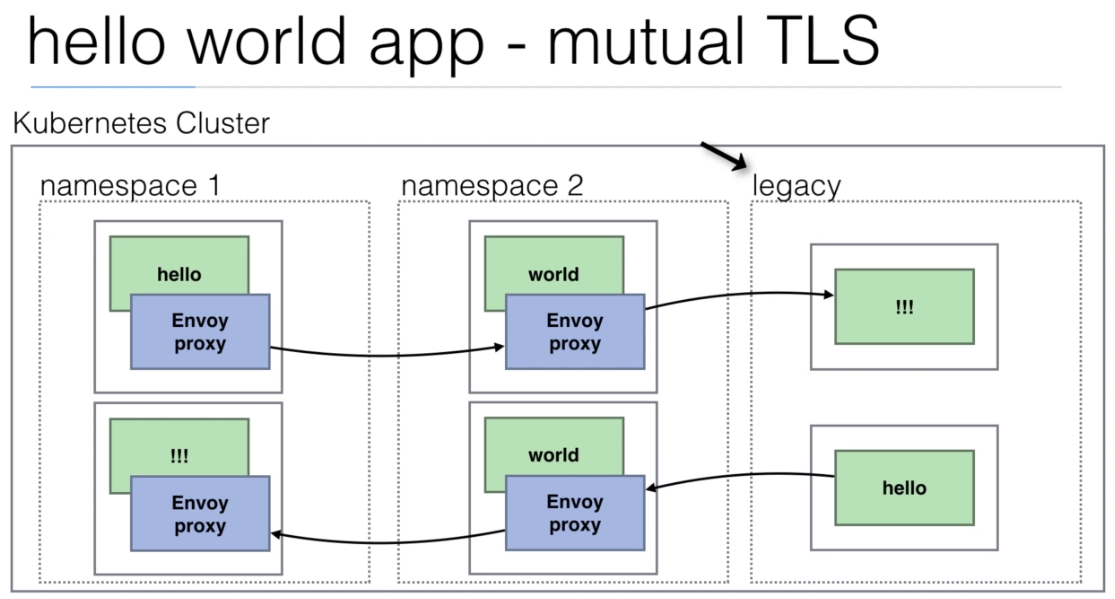

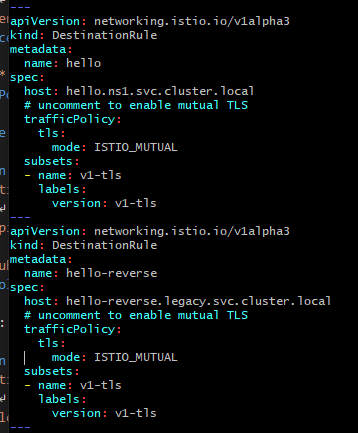

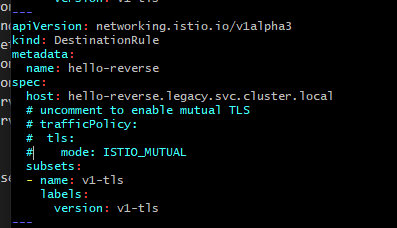

- 112. Mutual TLS

- 113. Demo: Mutual TLS

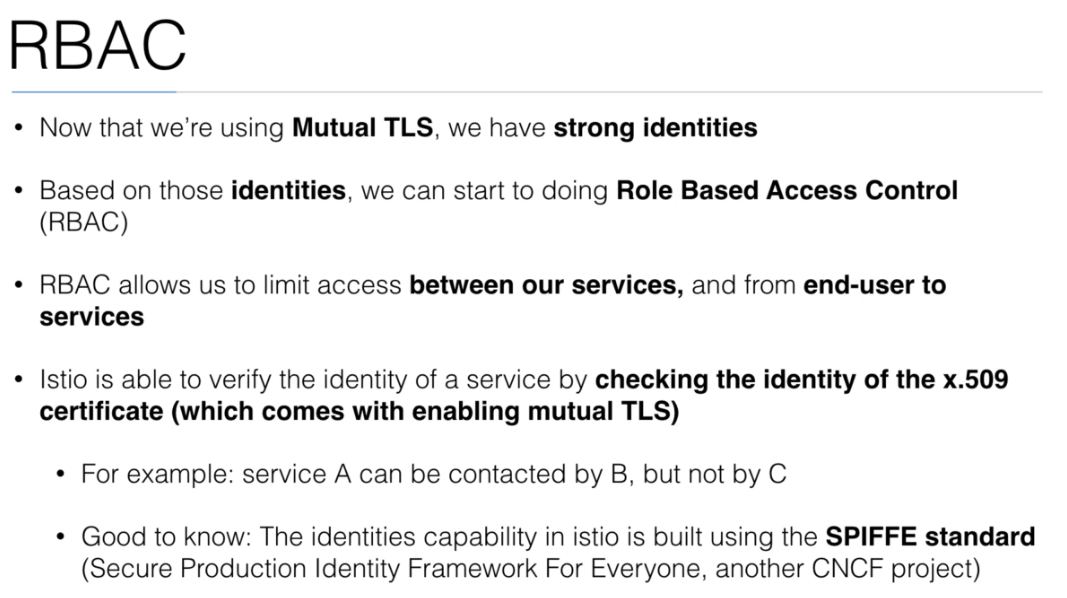

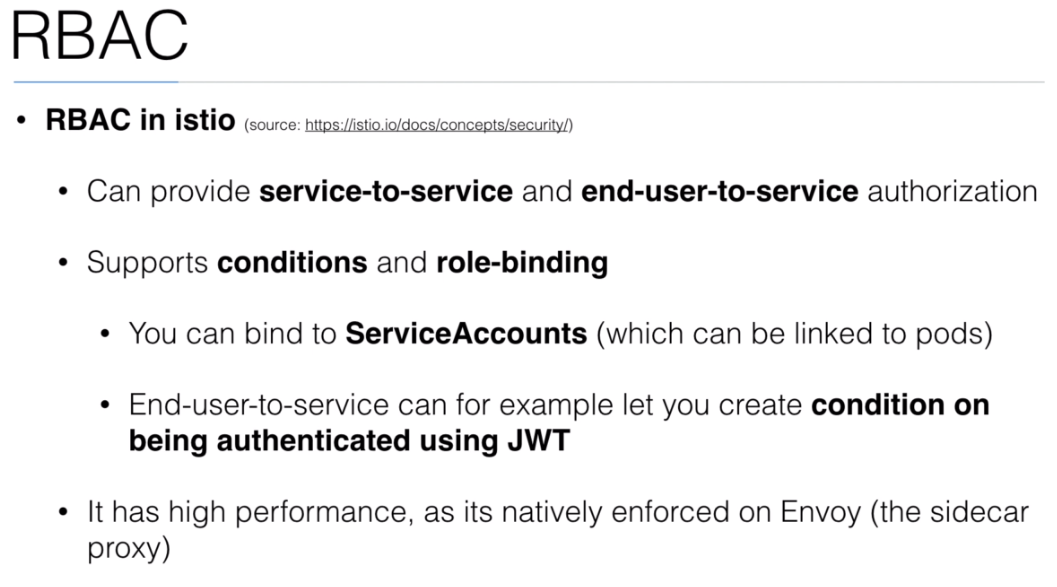

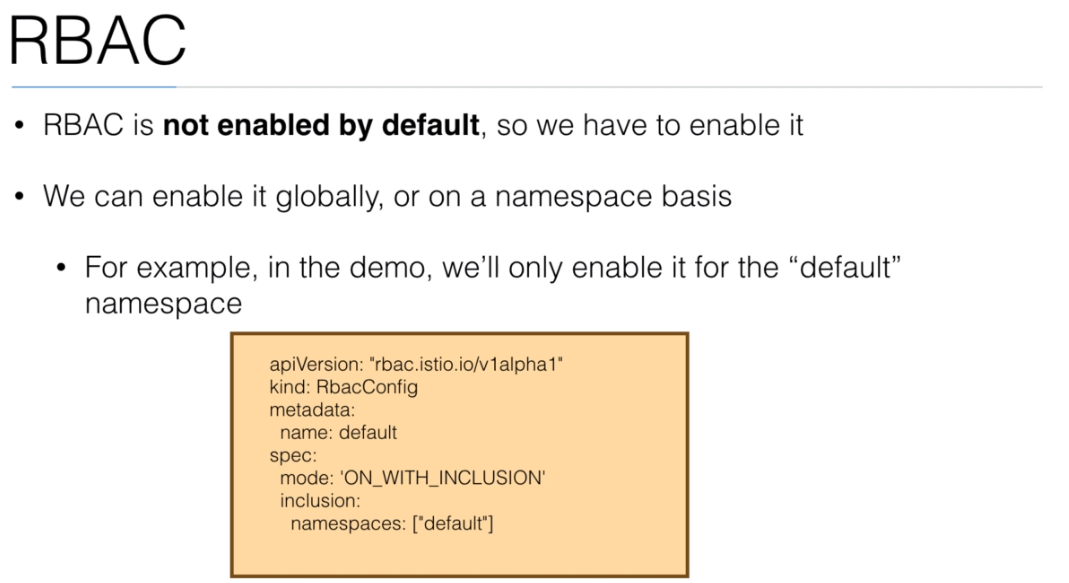

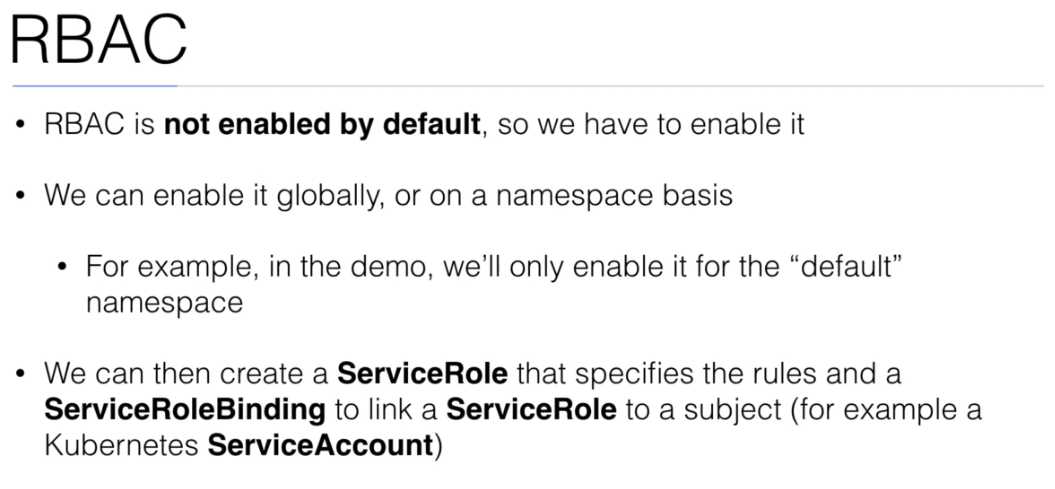

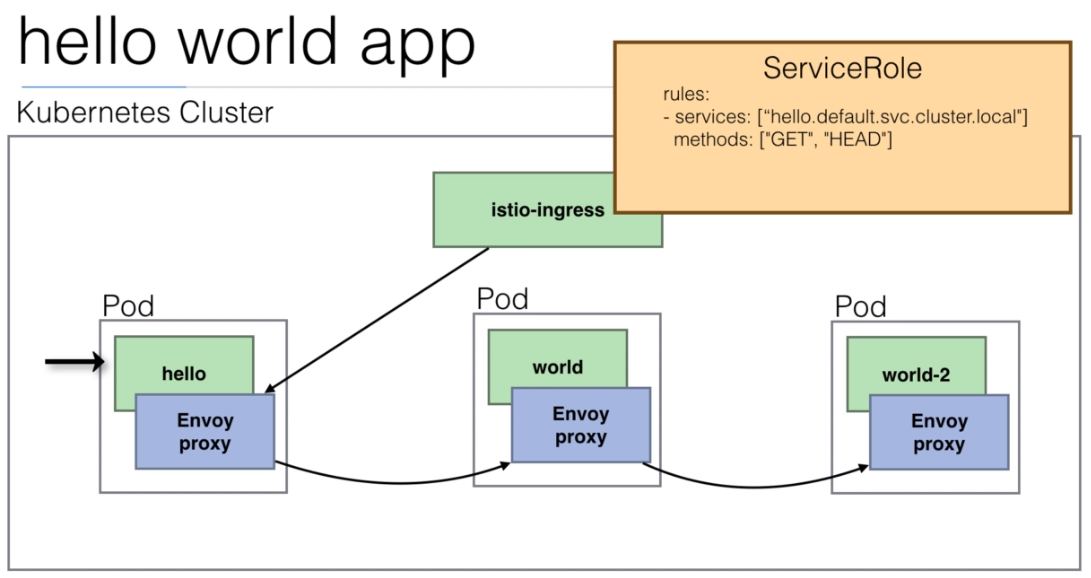

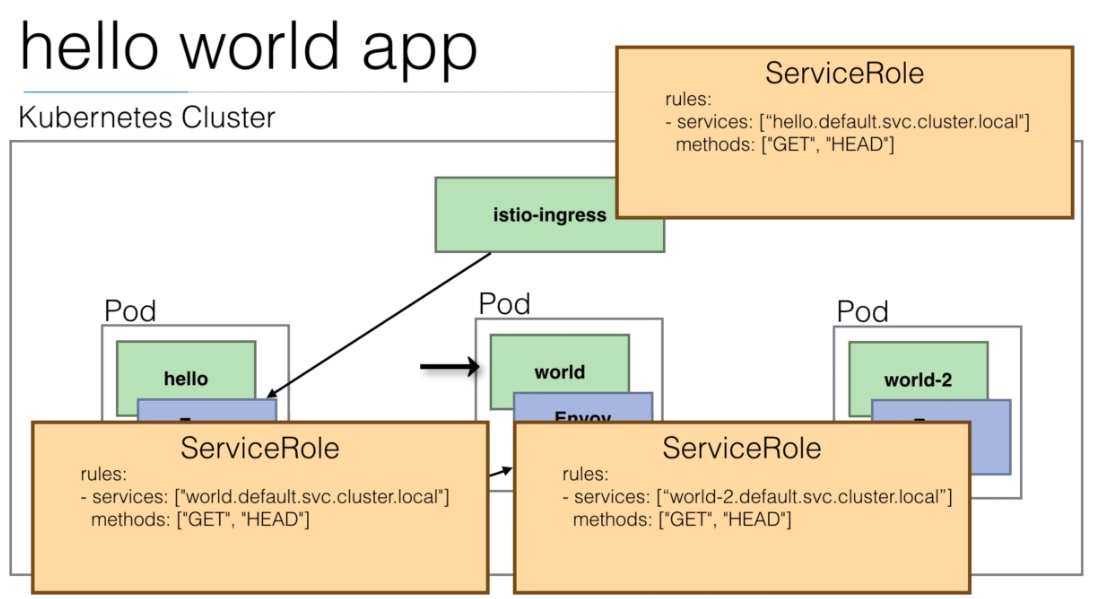

- 114. RBAC with Istio

- 115. Demo: RBAC with Istio

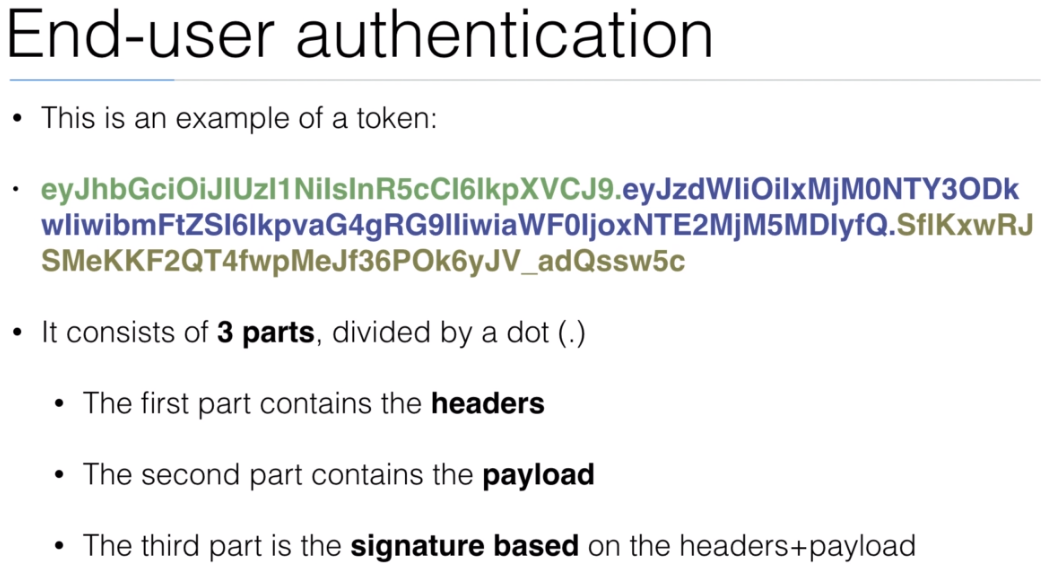

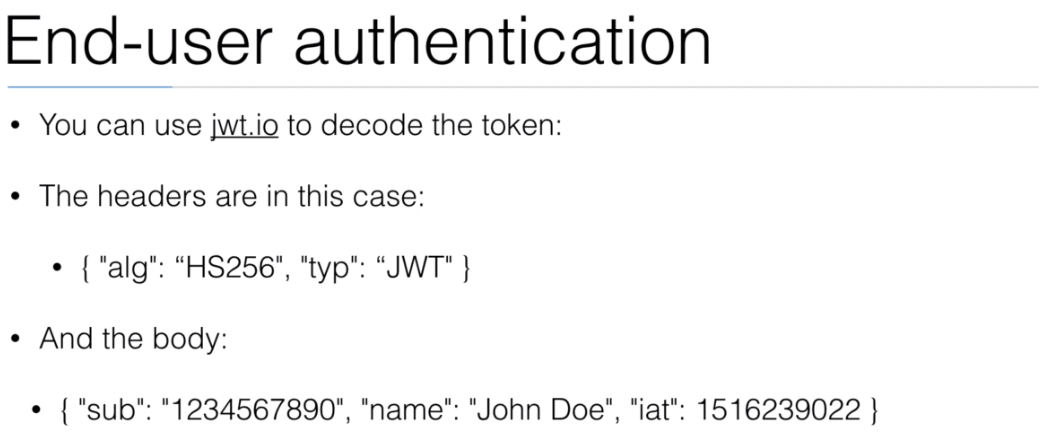

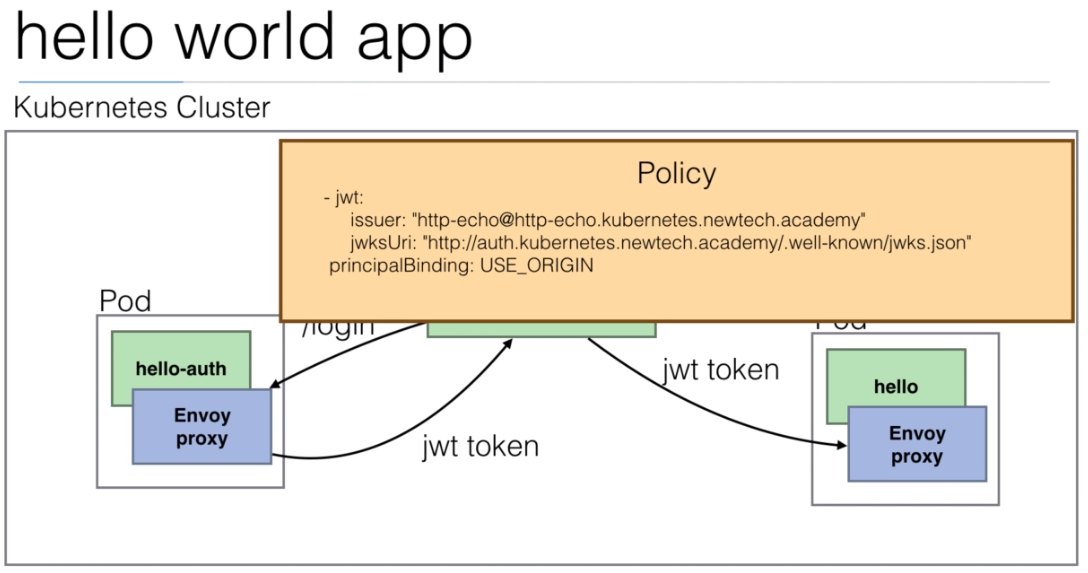

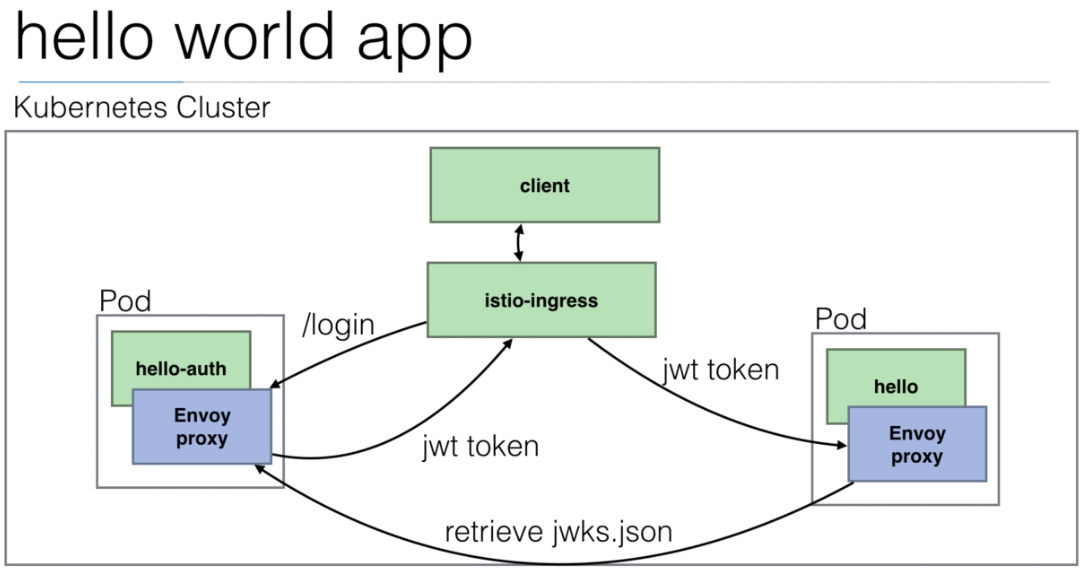

- 116. End-user authentication with istio (JWT)

- 117. Demo: End-user authentication with istio (JWT)

- 118. Demo: Istio Egress traffic

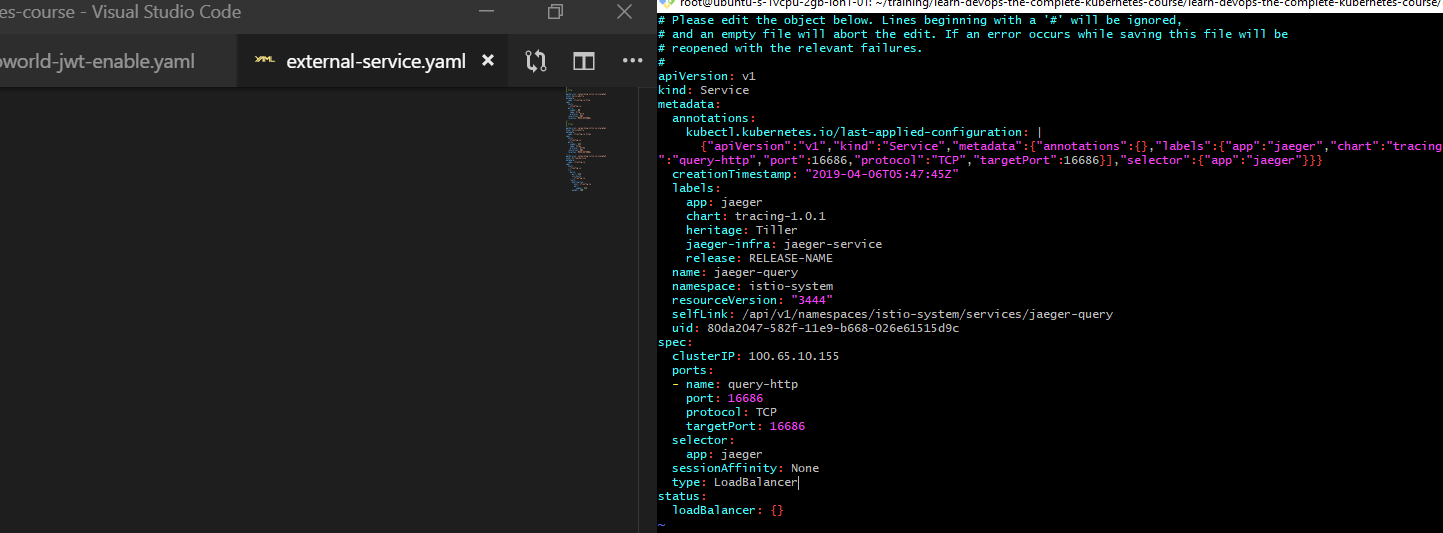

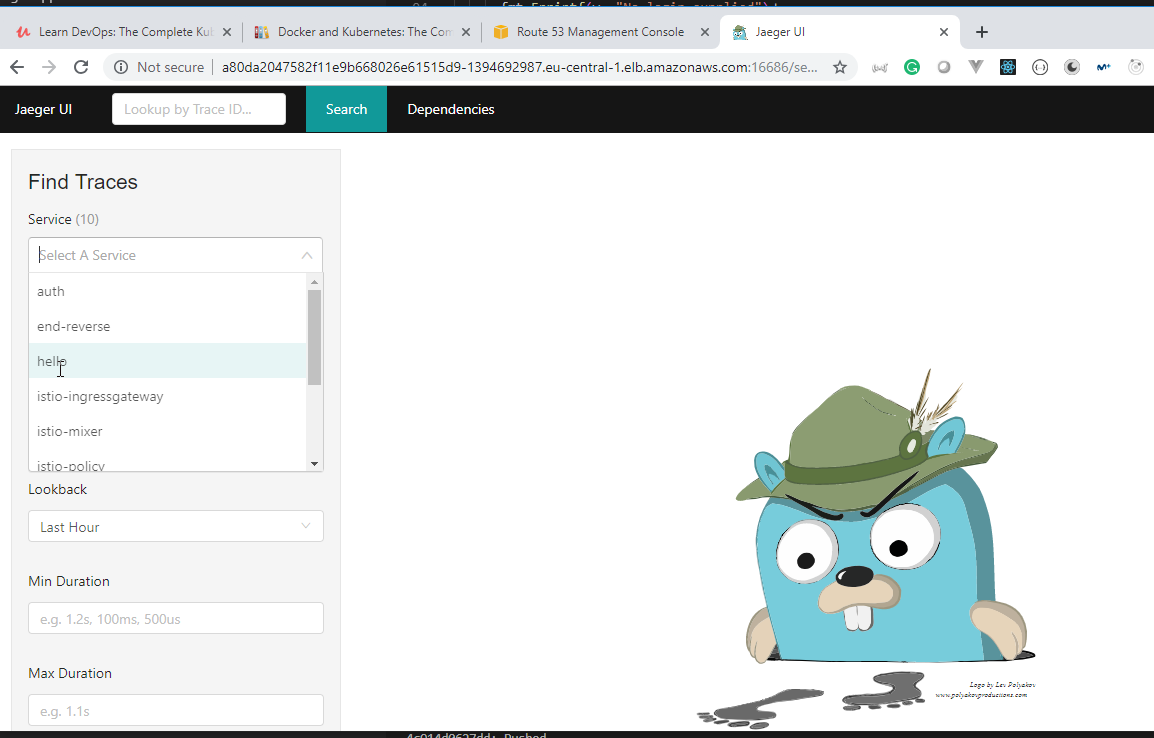

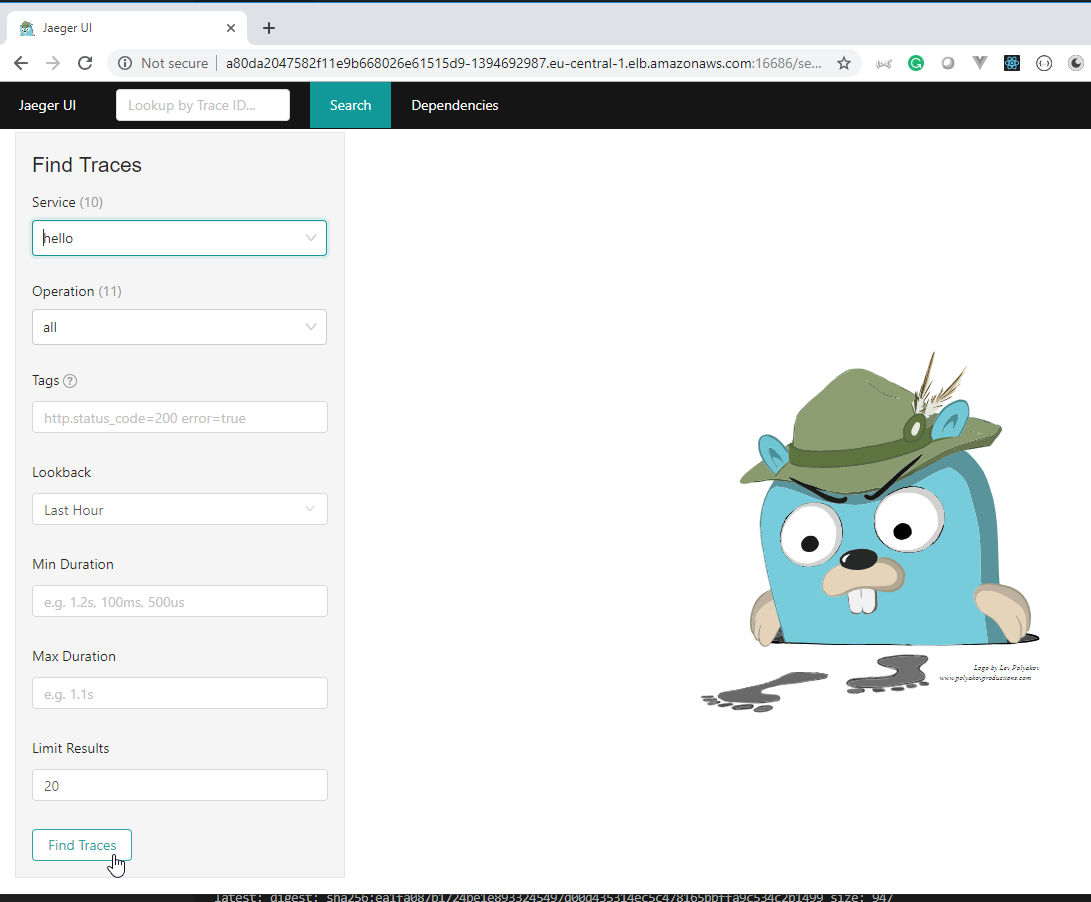

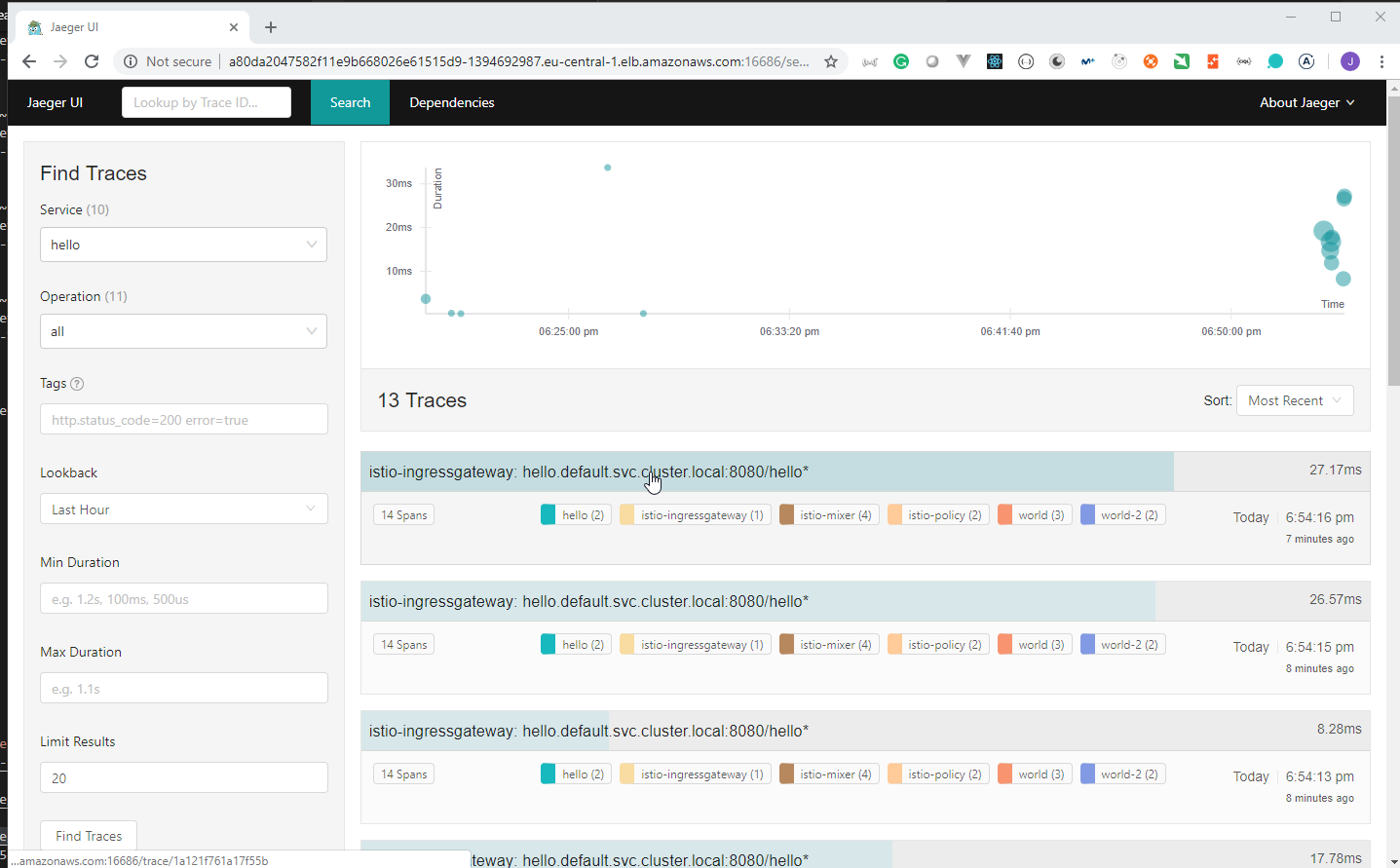

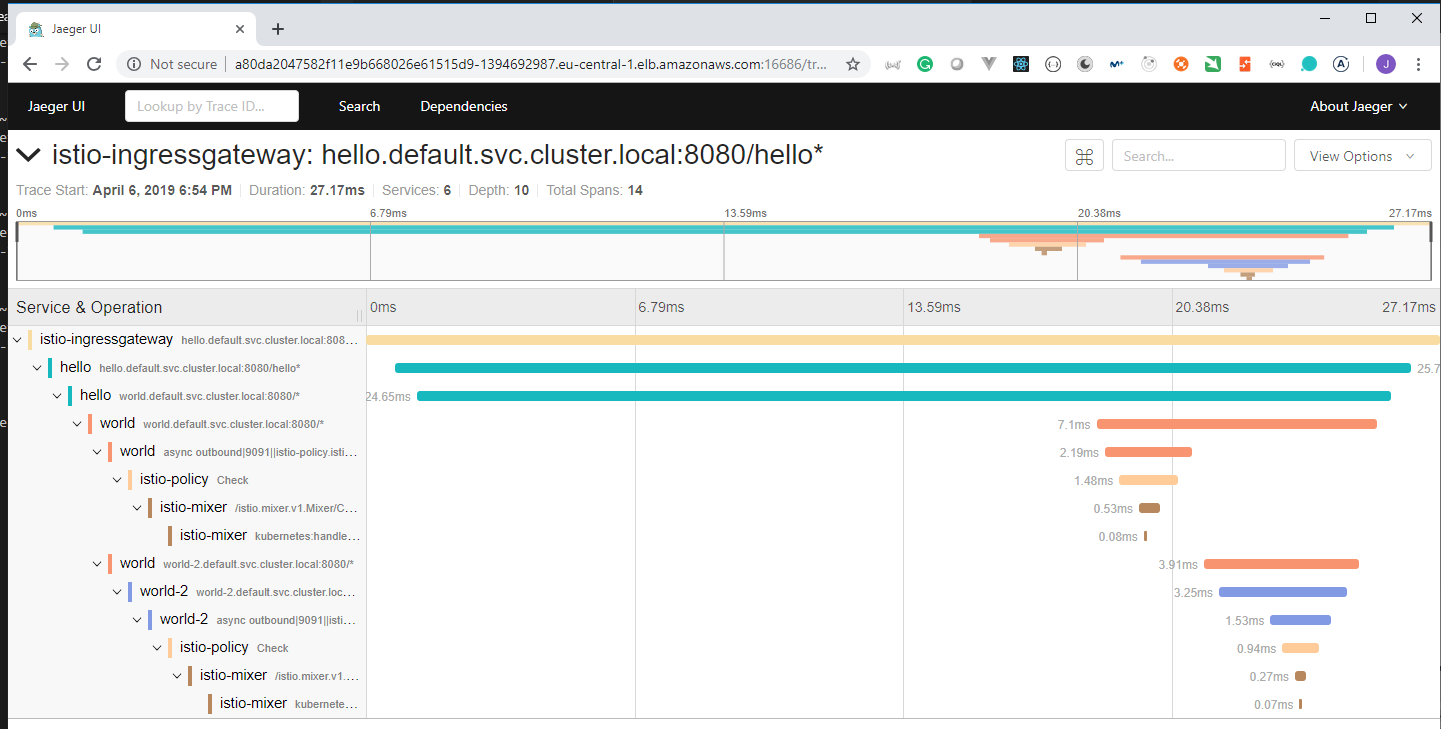

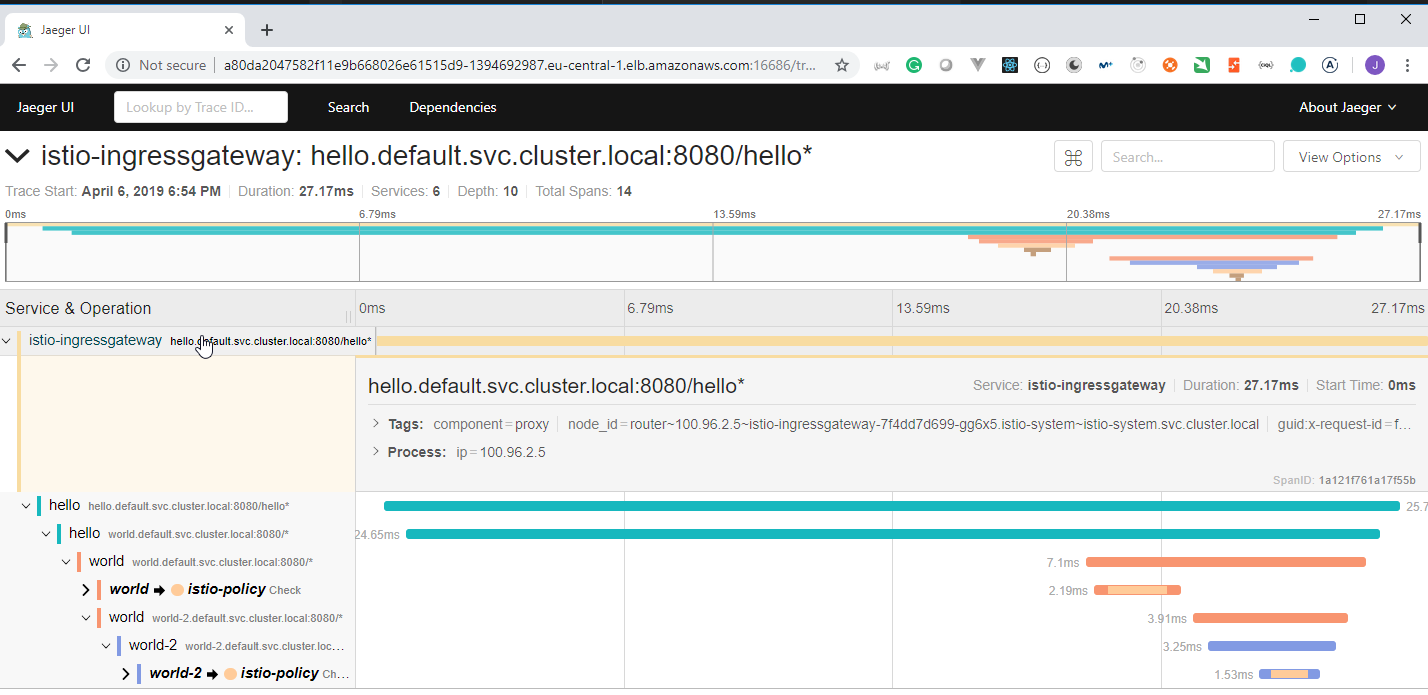

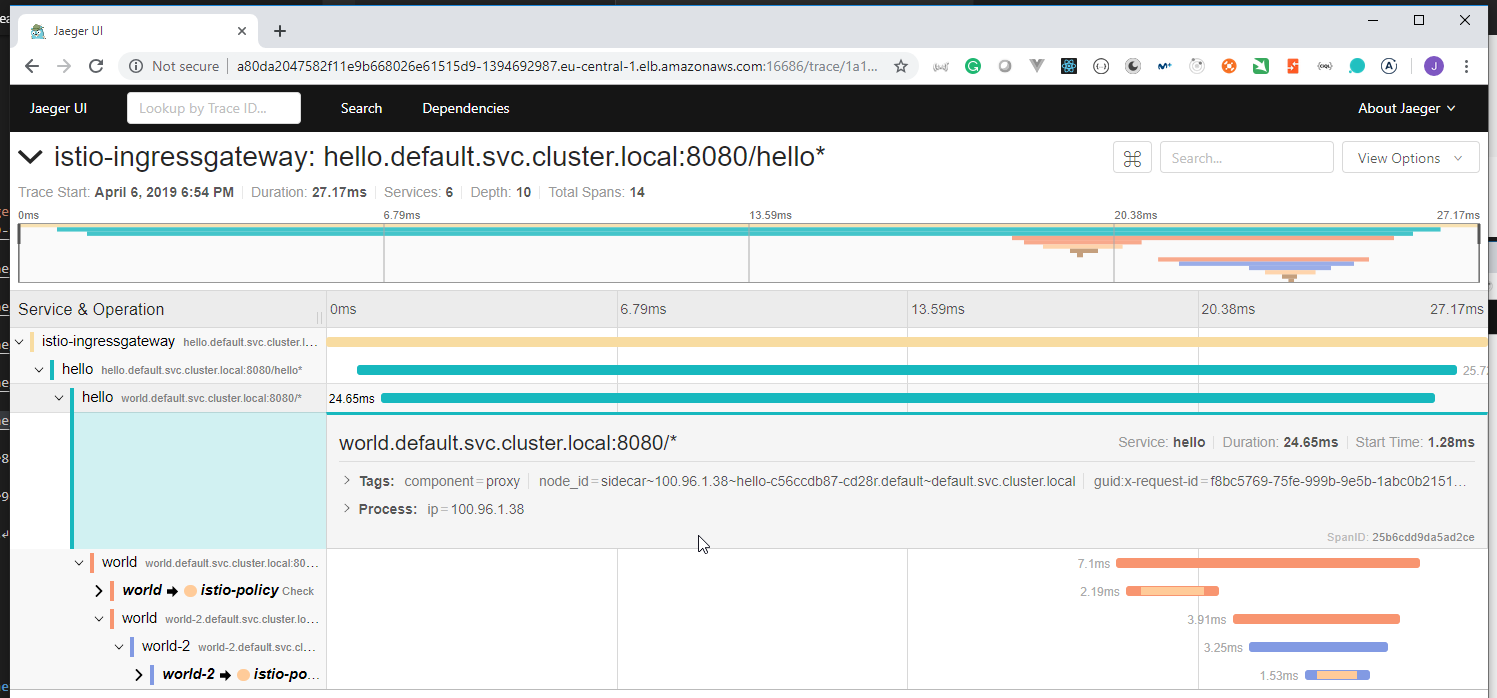

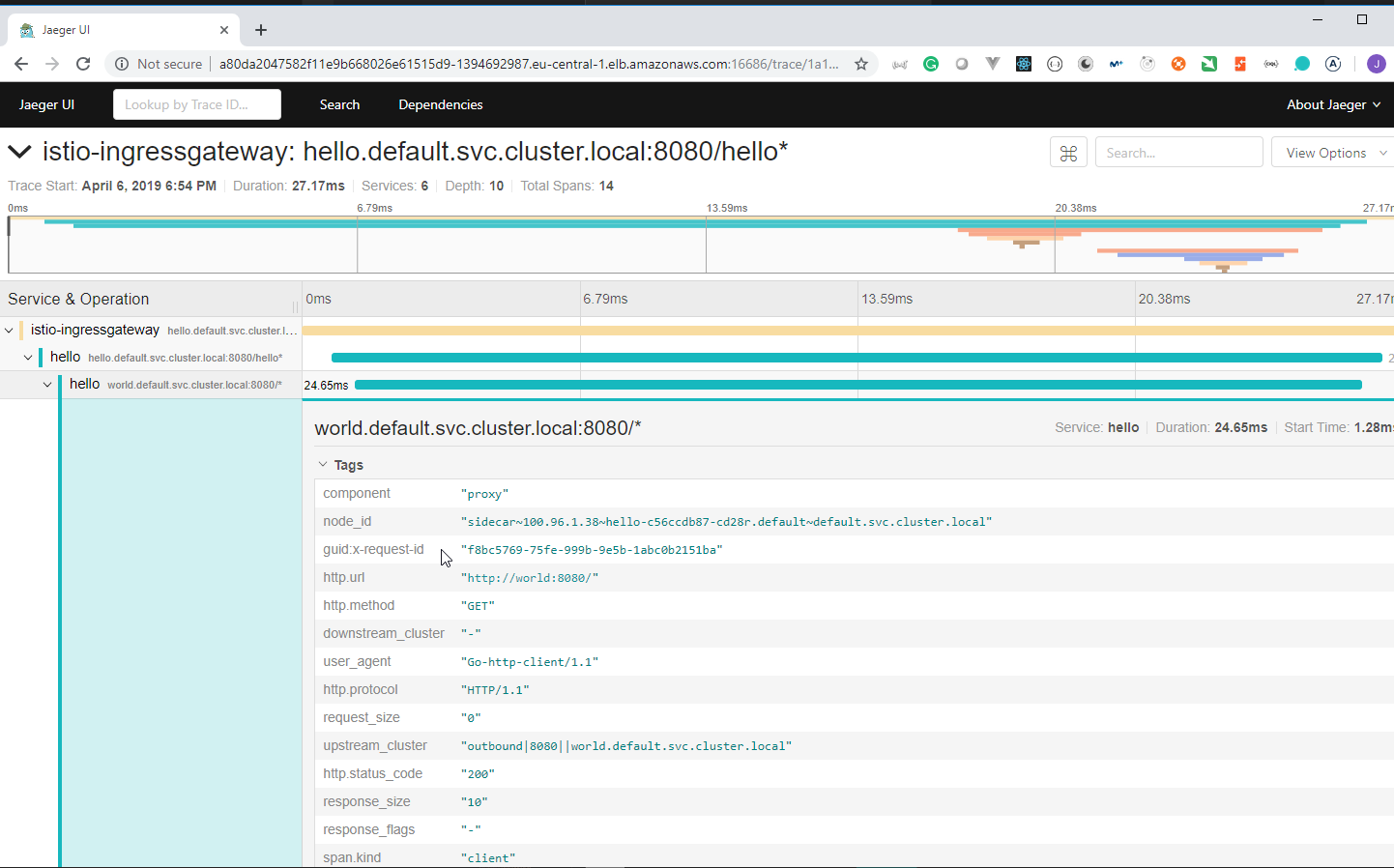

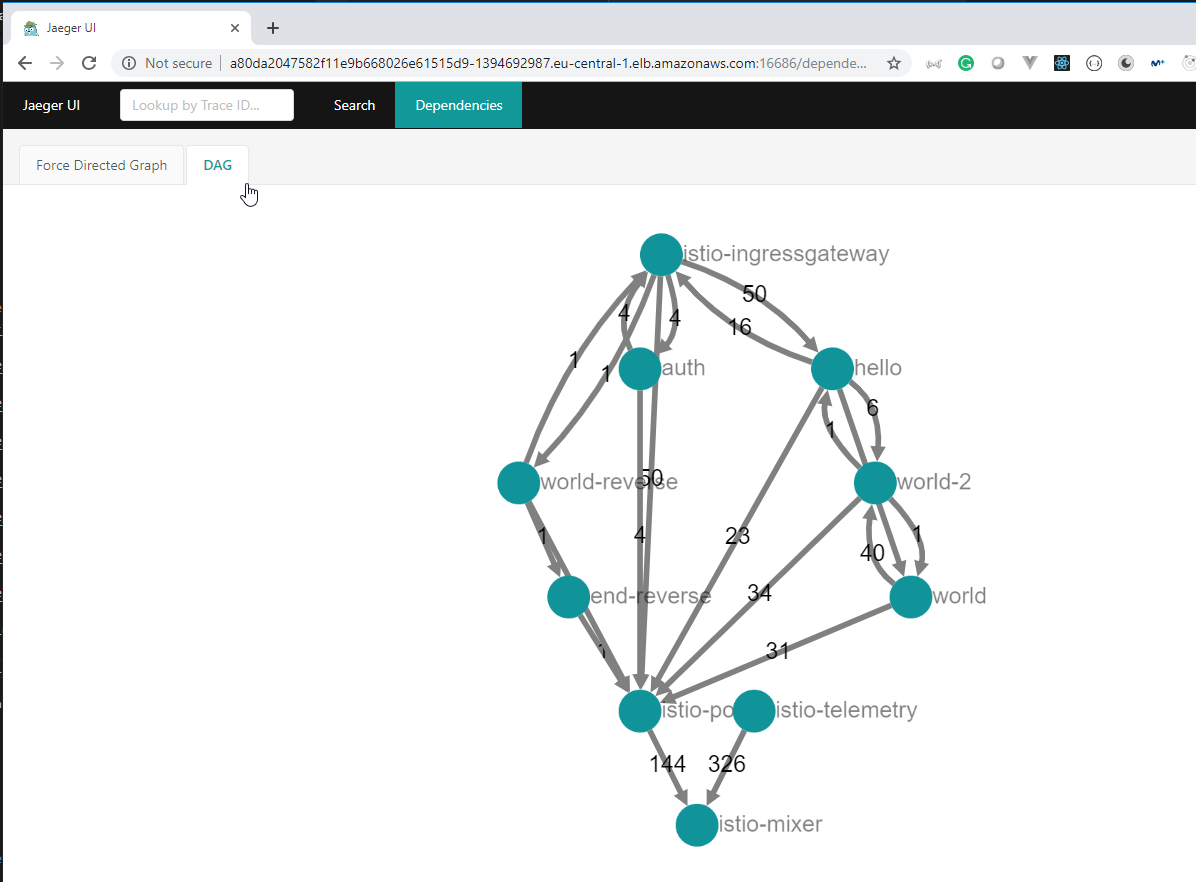

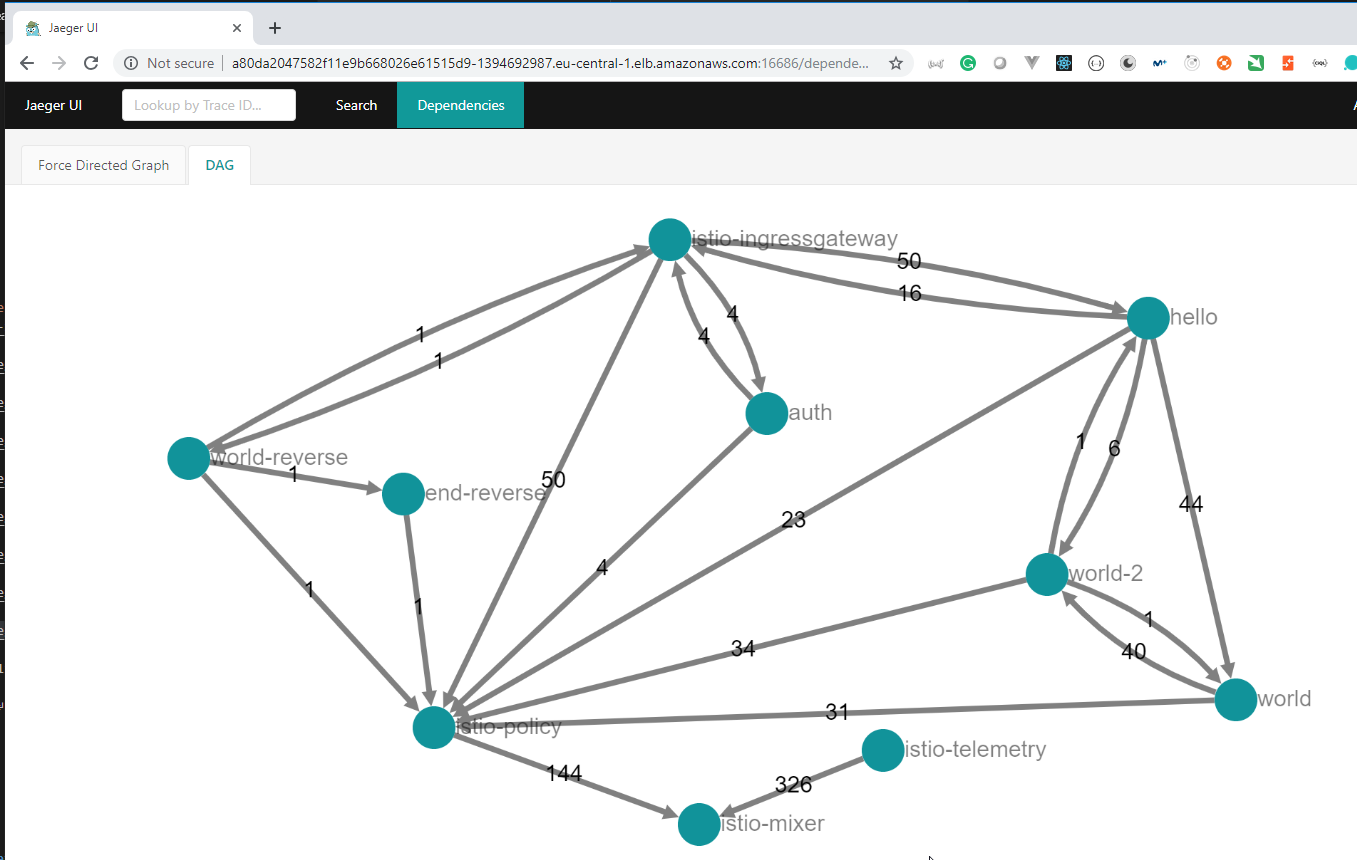

- 119. Demo: Distributed Tracing with Jaeger

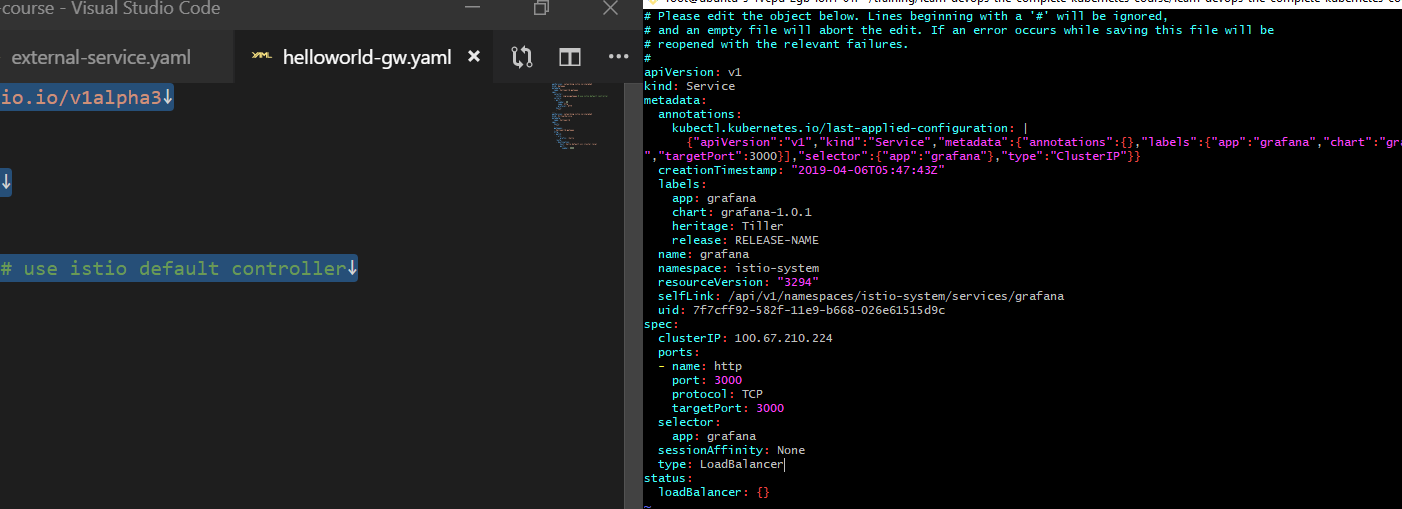

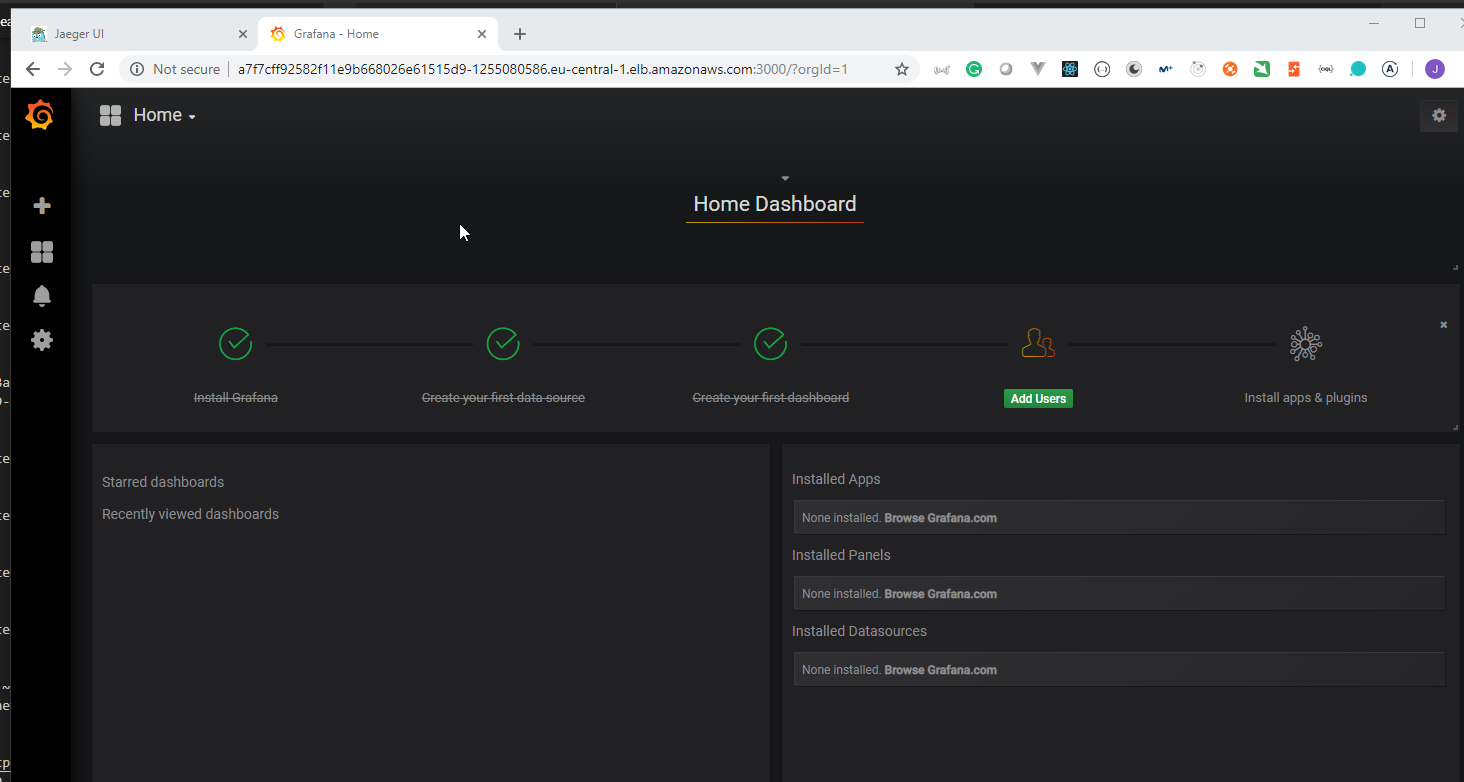

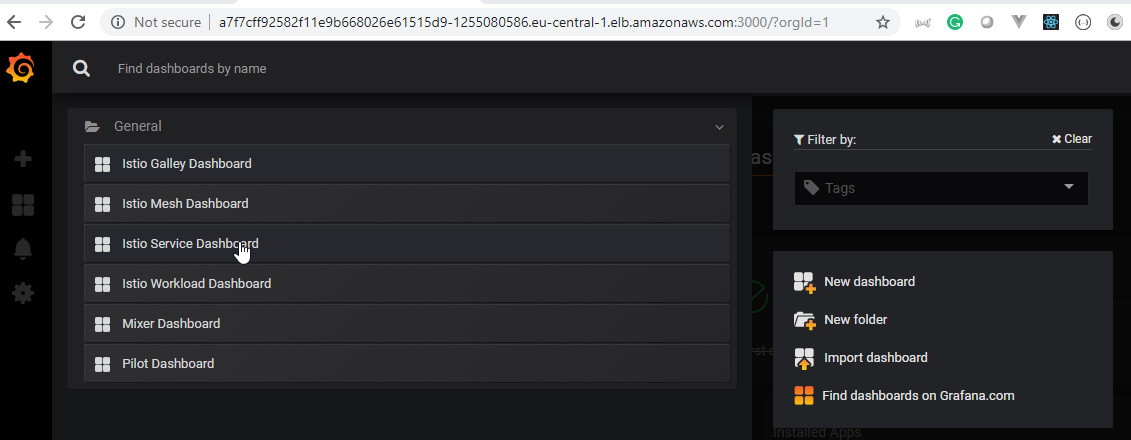

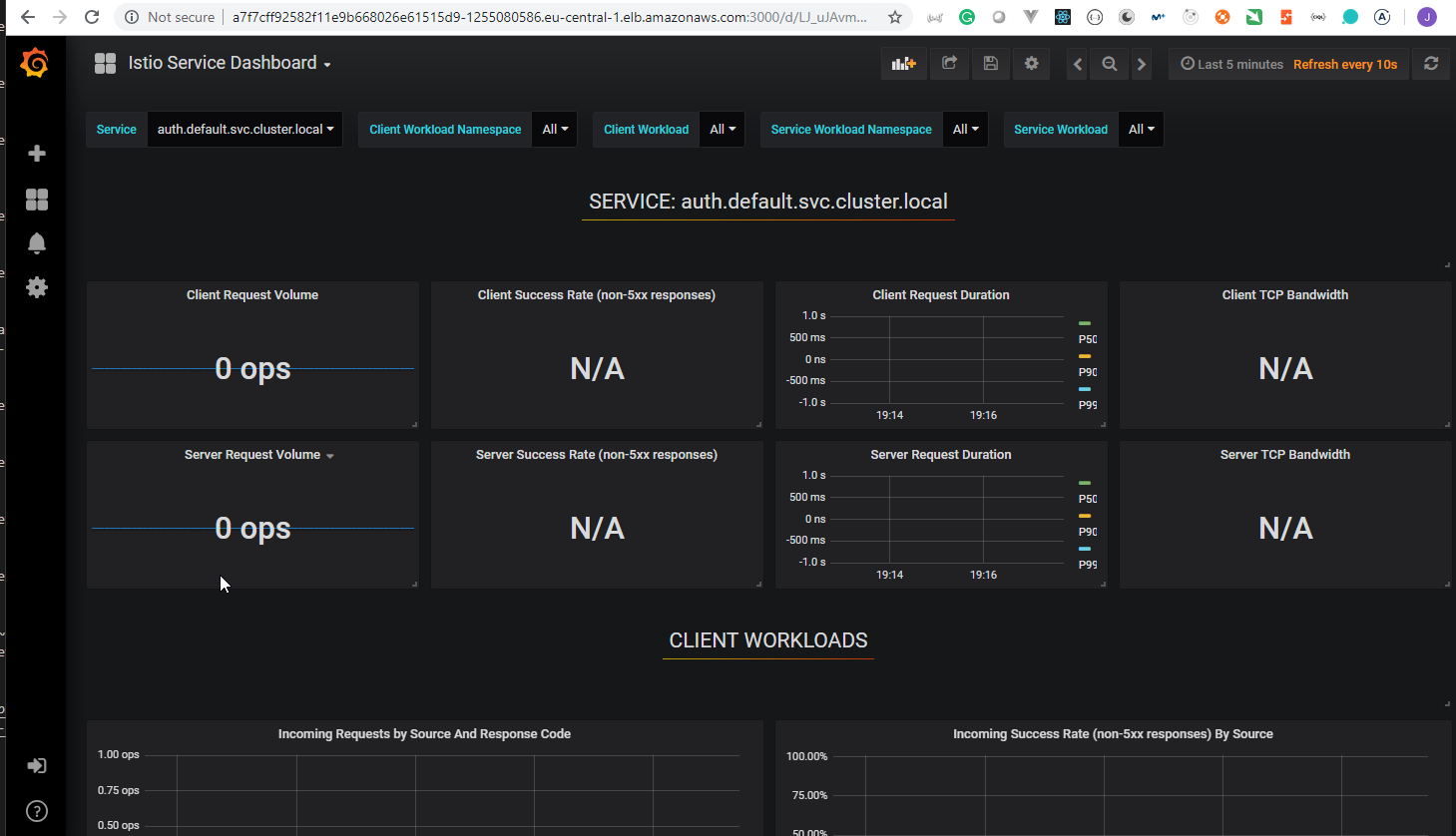

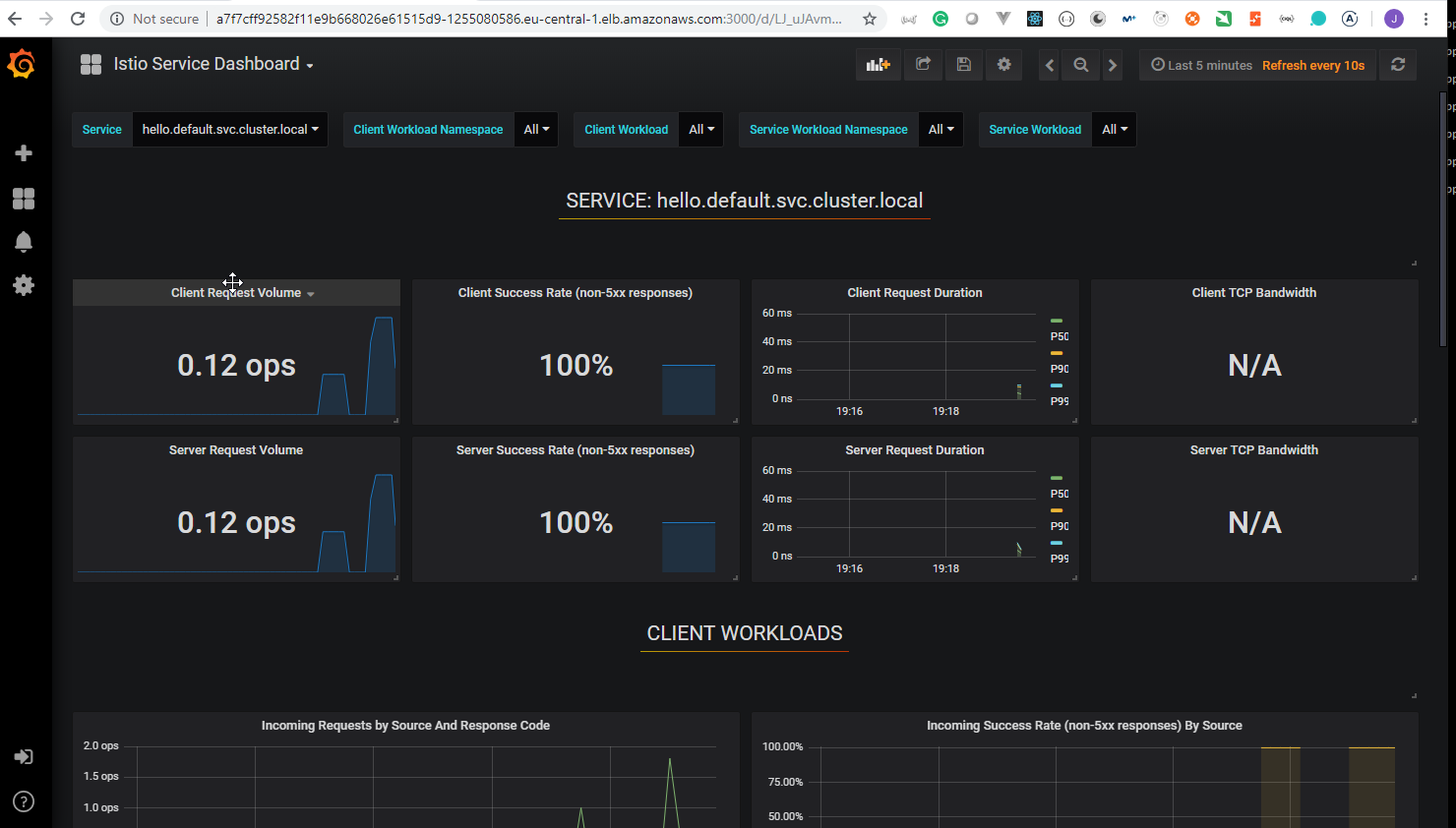

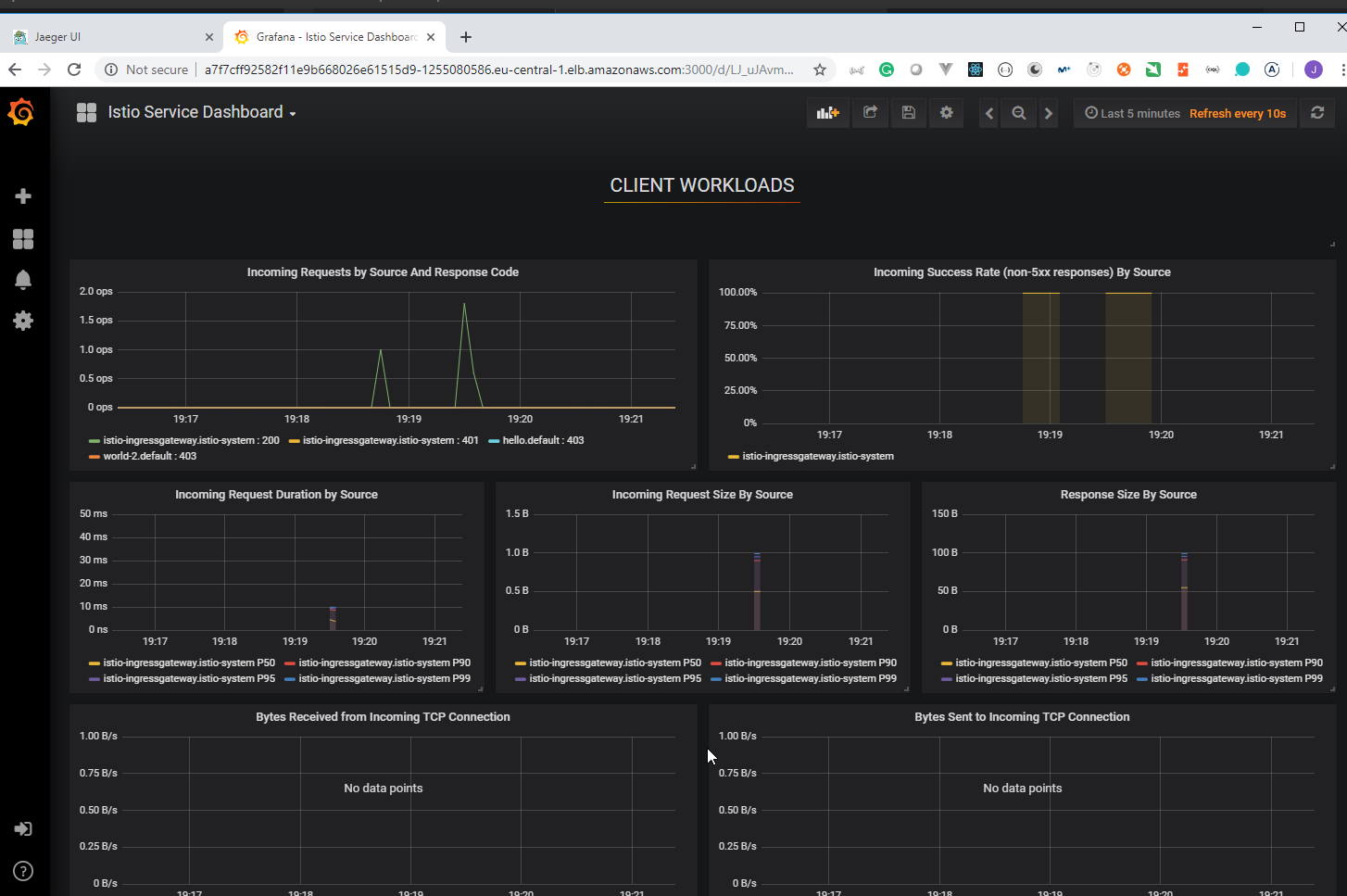

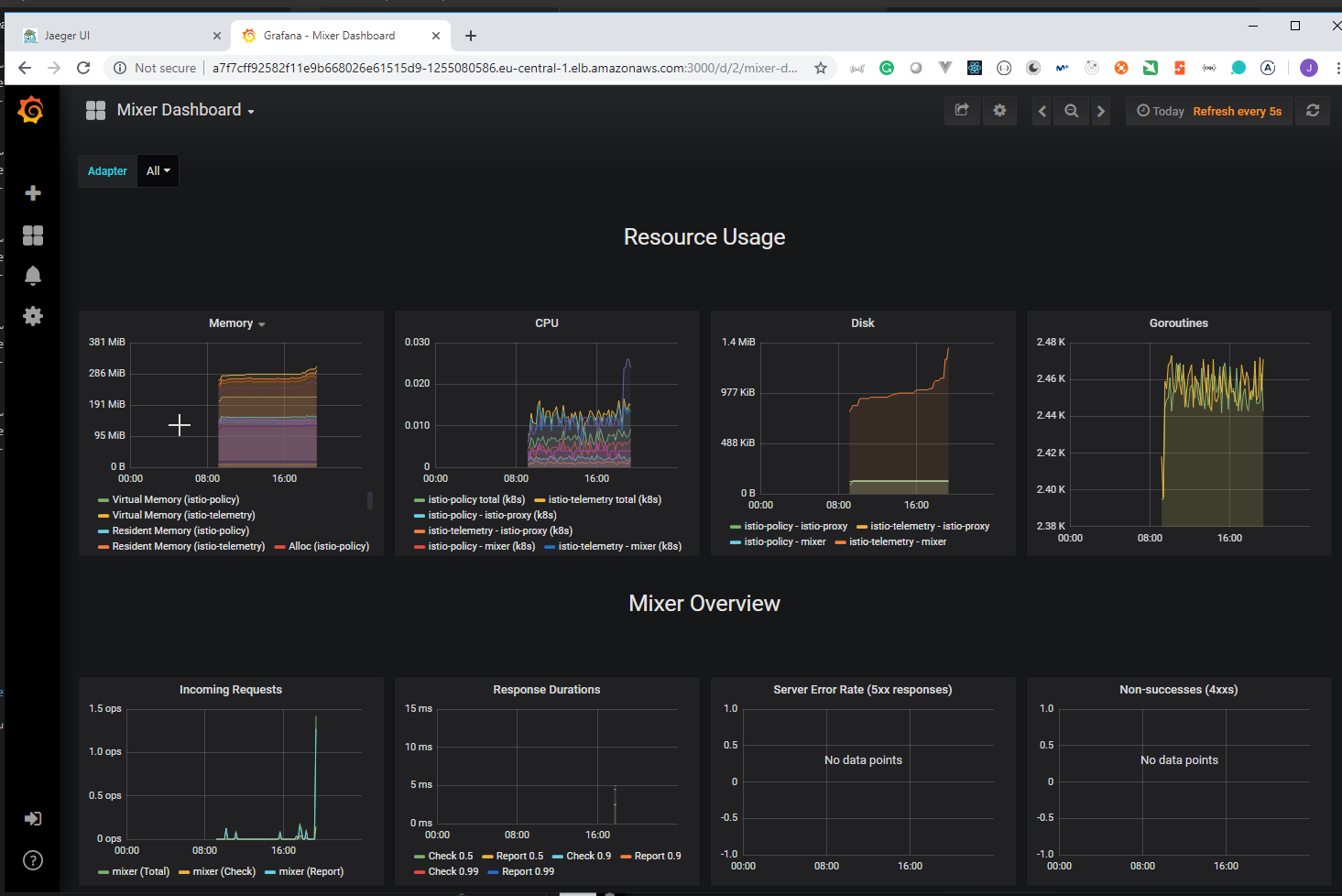

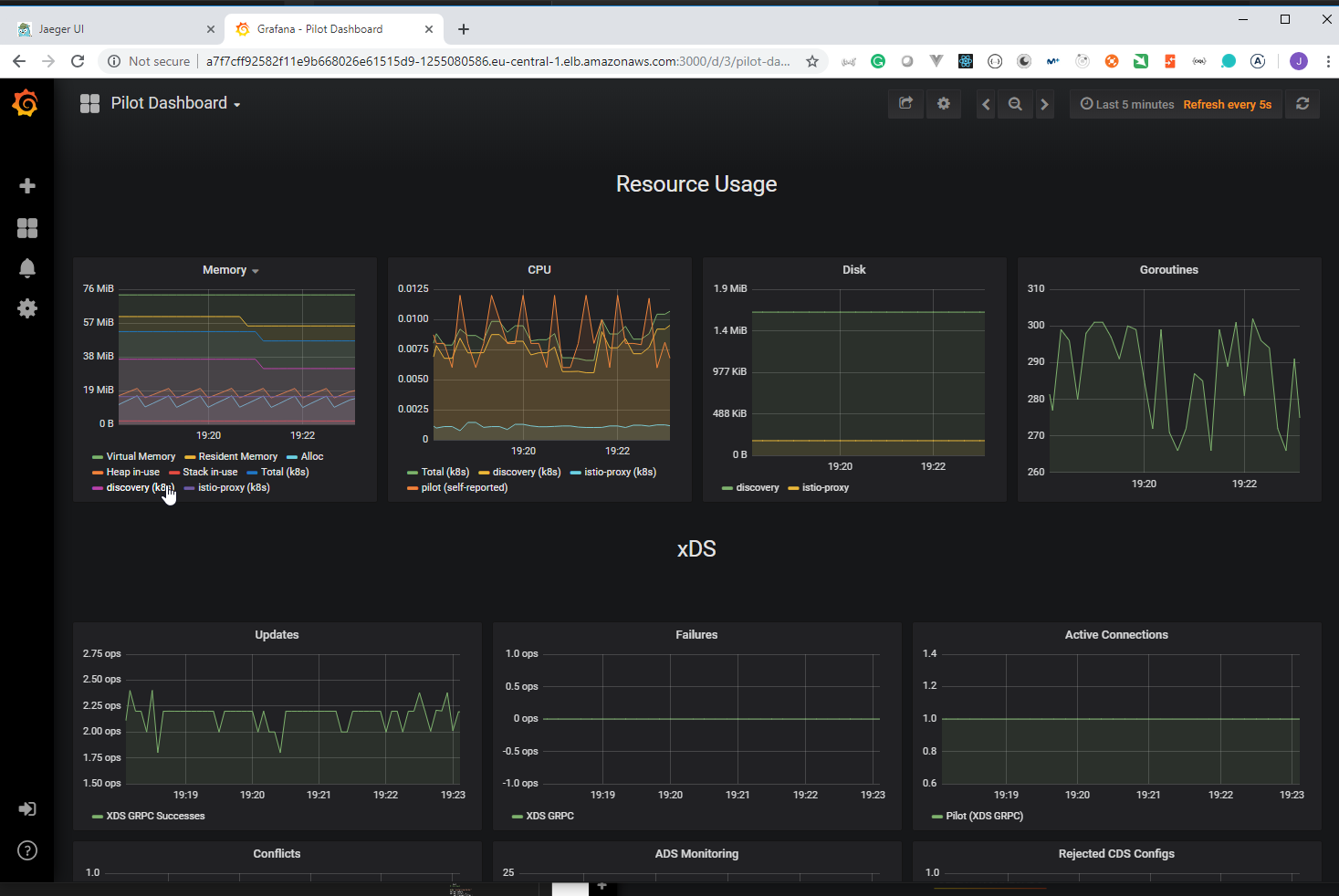

- 120. Istio's Grafana Metrics

- Section: 8. Installing Kubernetes using kubeadm

- Section: 9. On-Prem or Cloud Agnostic Kubernetes

- Section: 10. Course Completion

What I've learned

- Install and configure Kubernetes (on your laptop/desktop or production grade cluster on AWS)

- Use Docker Client (with Kubernetes), kubeadm, kops, or minikube to set up your cluster

- Be able to run stateless and stateful applications on Kubernetes

- Use Healthchecks, Secrets, ConfigMaps, placement strategies using Node/Pod affinity / anti-affinity

- Use StatefulSets to deploy a Cassandra cluster on Kubernetes

- Add users, set quotas/limits, do node maintenance, set up monitoring

- Use Volumes to provide persistence to your containers

- Be able to scale your apps using metrics

- Package applications with Helm and write your own Helm charts for your applications

- Automatically build and deploy your own Helm Charts using Jenkins

- Install and use kubeless to run functions (Serverless) on Kubernetes

- Install and use Istio to deploy a service mesh on Kubernetes

- Understand deployment concepts in Kubernetes by using Helm and Helmfile

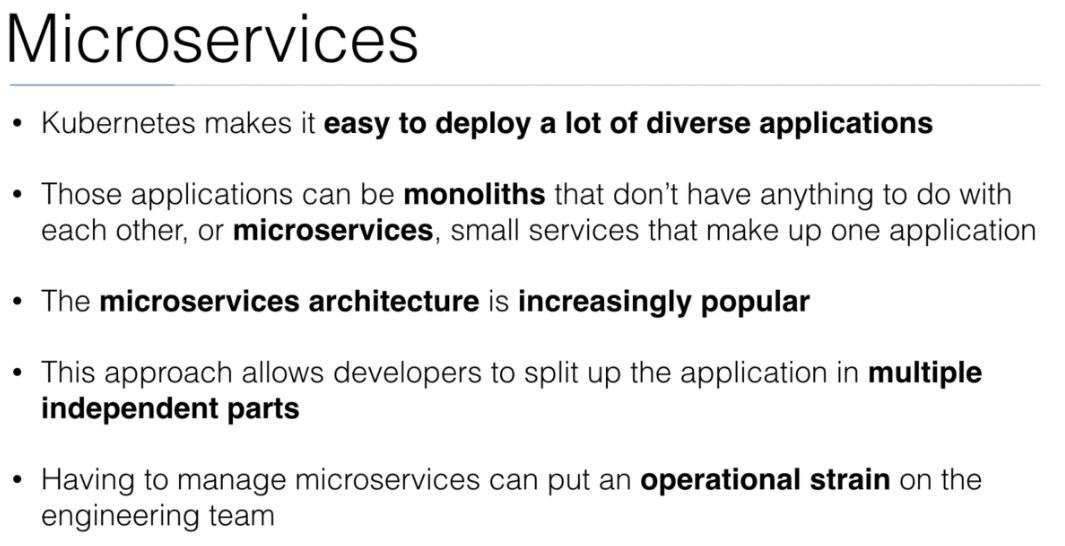

Section: 7. Microservices

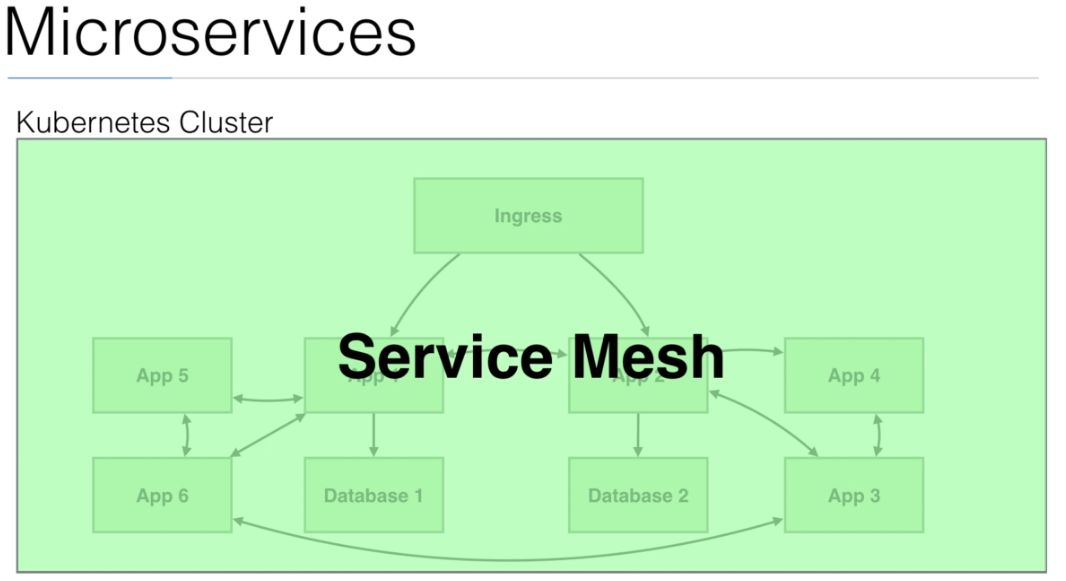

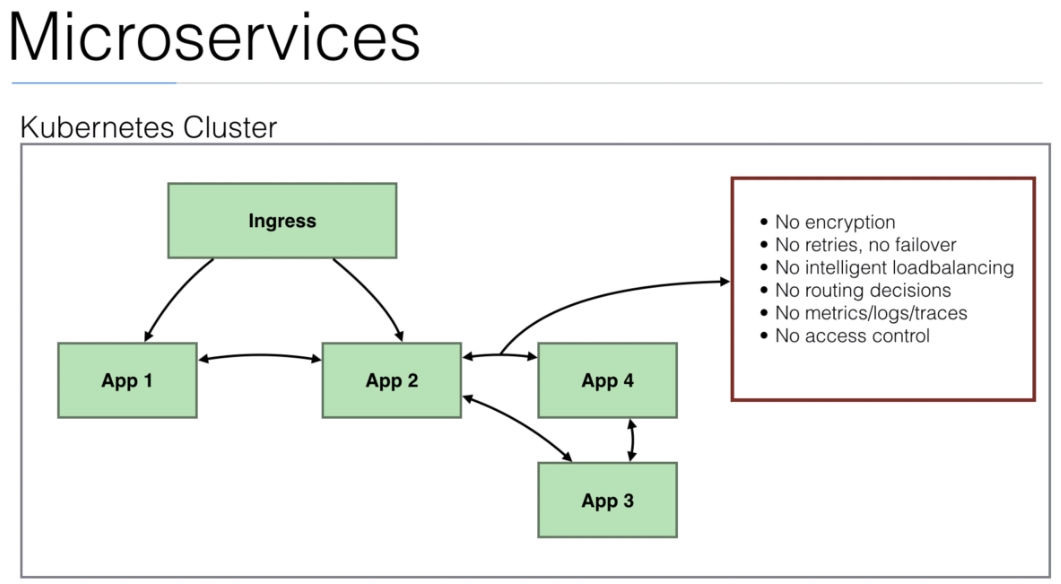

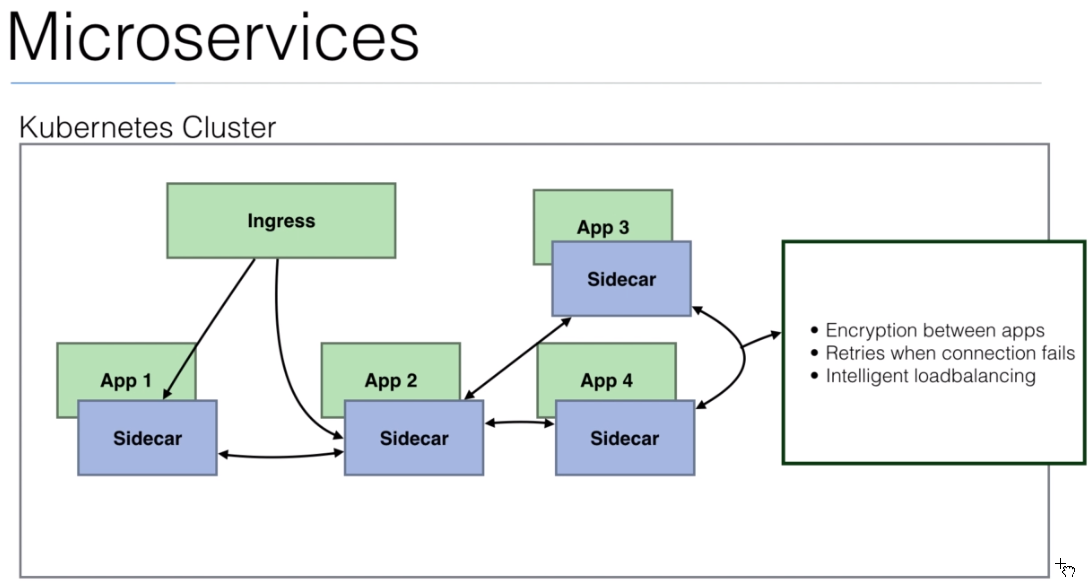

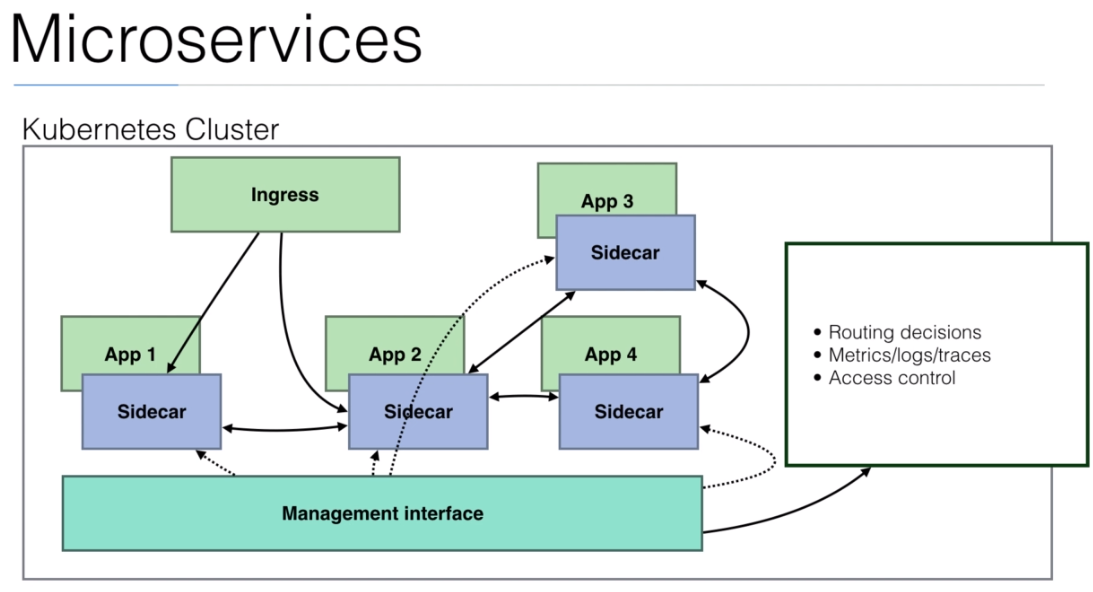

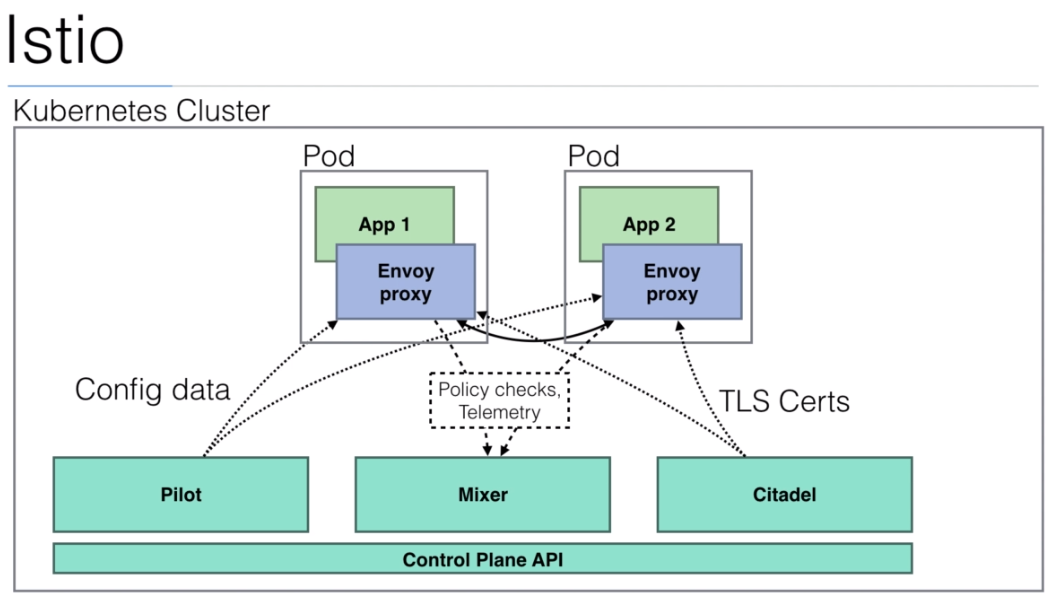

106. Introduction to Istio

- We can add a proxy to communicate between apps (microservices). It is called a sidecar.

- We can add a management interface.

- Istio provides this solution.

107. Demo: Istio Installation

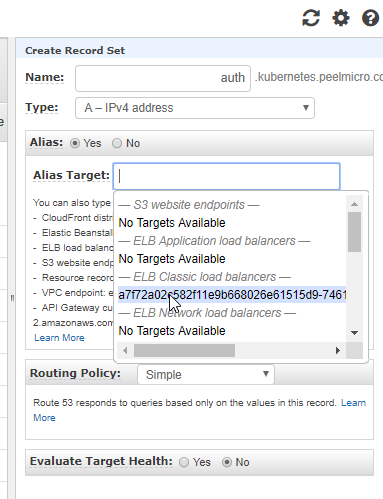

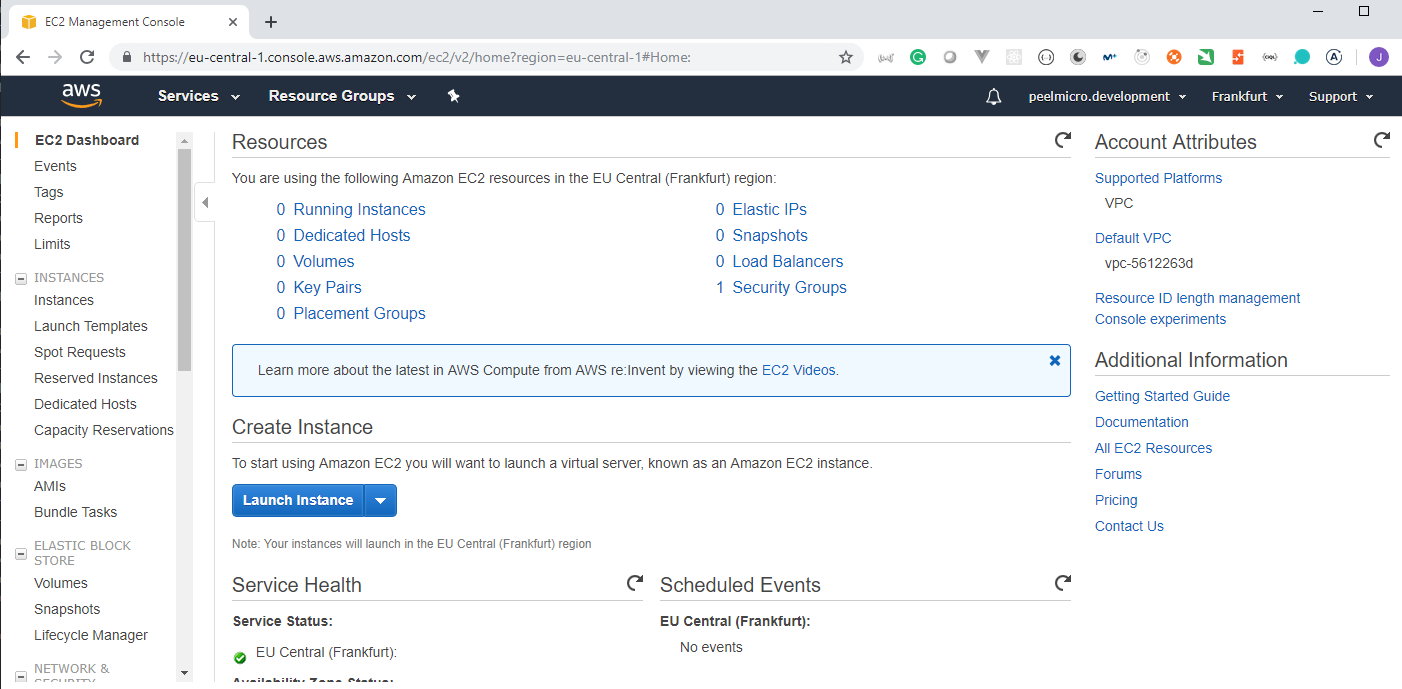

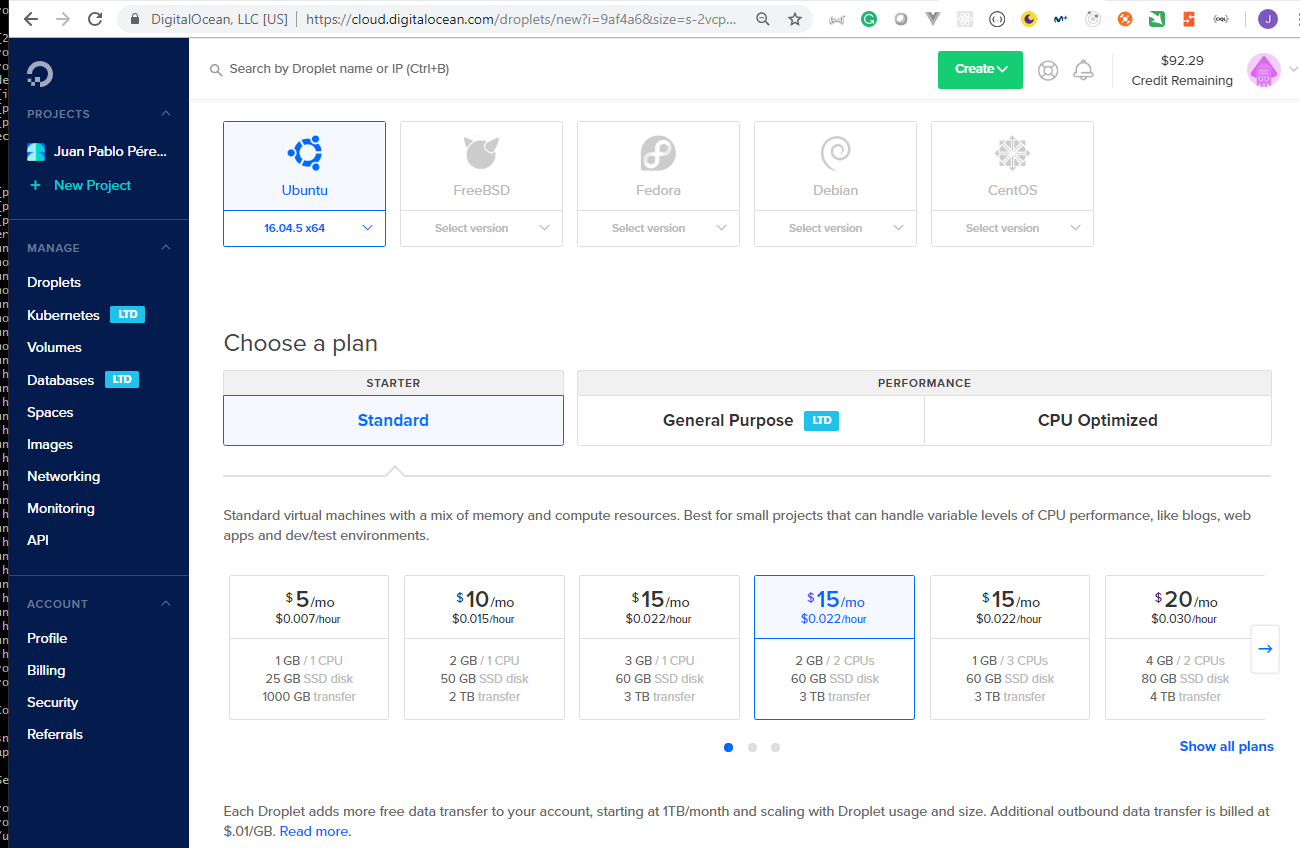

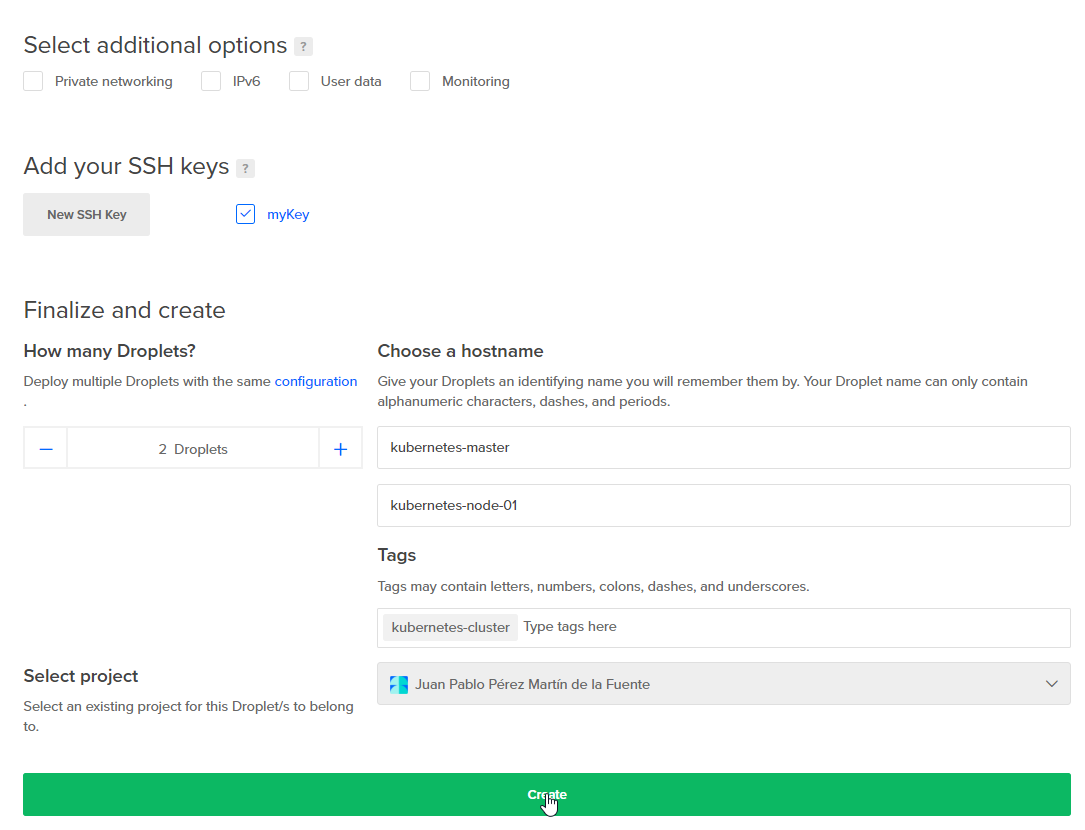

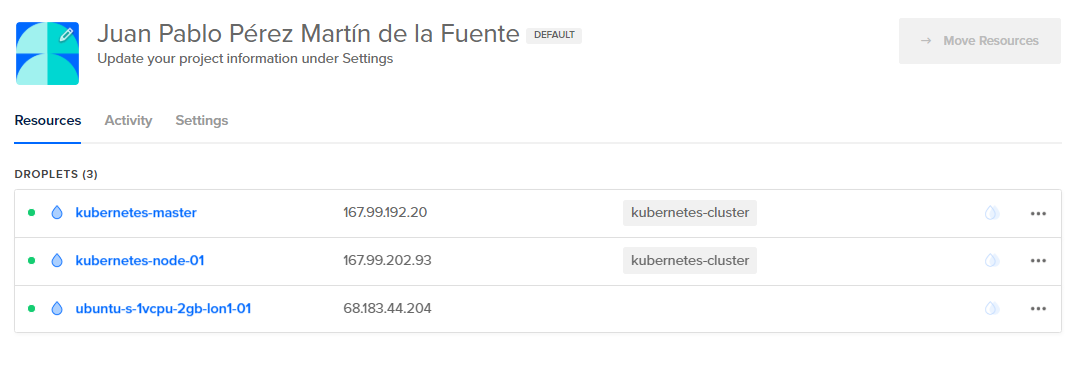

- We need to create the AWS cluster again, but this time we are going to use `--node-size=t2.medium` because Istio needs a lot of memory to start.

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops create cluster --name=kubernetes.peelmicro.com --state=s3://kubernetes.peelmicro.com --zones=eu-central-1a --node-count=2 --node-size=t2.medium --master-size=t2.micro --dns-zone=kubernetes.peelmicro.com

I0406 05:00:44.737226 26361 create_cluster.go:480] Inferred --cloud=aws from zone "eu-central-1a"

I0406 05:00:44.889229 26361 subnets.go:184] Assigned CIDR 172.20.32.0/19 to subnet eu-central-1a

I0406 05:00:45.245895 26361 create_cluster.go:1351] Using SSH public key: /root/.ssh/id_rsa.pub

Previewing changes that will be made:

I0406 05:00:47.048995 26361 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0406 05:00:47.422697 26361 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0406 05:00:47.618628 26361 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0406 05:00:47.764220 26361 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0406 05:00:47.798288 26361 executor.go:103] Tasks: 73 done / 73 total; 0 can run

Will create resources:

AutoscalingGroup/master-eu-central-1a.masters.kubernetes.peelmicro.com

MinSize 1

MaxSize 1

Subnets [name:eu-central-1a.kubernetes.peelmicro.com]

Tags {Name: master-eu-central-1a.masters.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: master-eu-central-1a, k8s.io/role/master: 1}

Granularity 1Minute

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

LaunchConfiguration name:master-eu-central-1a.masters.kubernetes.peelmicro.com

AutoscalingGroup/nodes.kubernetes.peelmicro.com

MinSize 2

MaxSize 2

Subnets [name:eu-central-1a.kubernetes.peelmicro.com]

Tags {k8s.io/role/node: 1, Name: nodes.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: nodes}

Granularity 1Minute

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

LaunchConfiguration name:nodes.kubernetes.peelmicro.com

DHCPOptions/kubernetes.peelmicro.com

DomainName eu-central-1.compute.internal

DomainNameServers AmazonProvidedDNS

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

EBSVolume/a.etcd-events.kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

VolumeType gp2

SizeGB 20

Encrypted false

Tags {Name: a.etcd-events.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, k8s.io/etcd/events: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

EBSVolume/a.etcd-main.kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

VolumeType gp2

SizeGB 20

Encrypted false

Tags {k8s.io/etcd/main: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: a.etcd-main.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

IAMInstanceProfile/masters.kubernetes.peelmicro.com

Shared false

IAMInstanceProfile/nodes.kubernetes.peelmicro.com

Shared false

IAMInstanceProfileRole/masters.kubernetes.peelmicro.com

InstanceProfile name:masters.kubernetes.peelmicro.com id:masters.kubernetes.peelmicro.com

Role name:masters.kubernetes.peelmicro.com

IAMInstanceProfileRole/nodes.kubernetes.peelmicro.com

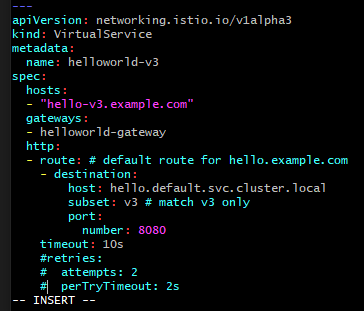

InstanceProfile name:nodes.kubernetes.peelmicro.com id:nodes.kubernetes.peelmicro.com

Role name:nodes.kubernetes.peelmicro.com

IAMRole/masters.kubernetes.peelmicro.com

ExportWithID masters

IAMRole/nodes.kubernetes.peelmicro.com

ExportWithID nodes

IAMRolePolicy/masters.kubernetes.peelmicro.com

Role name:masters.kubernetes.peelmicro.com

IAMRolePolicy/nodes.kubernetes.peelmicro.com

Role name:nodes.kubernetes.peelmicro.com

InternetGateway/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

Shared false

Tags {KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned, Name: kubernetes.peelmicro.com}

Keypair/apiserver-aggregator

Signer name:apiserver-aggregator-ca id:cn=apiserver-aggregator-ca

Subject cn=aggregator

Type client

Format v1alpha2

Keypair/apiserver-aggregator-ca

Subject cn=apiserver-aggregator-ca

Type ca

Format v1alpha2

Keypair/apiserver-proxy-client

Signer name:ca id:cn=kubernetes

Subject cn=apiserver-proxy-client

Type client

Format v1alpha2

Keypair/ca

Subject cn=kubernetes

Type ca

Format v1alpha2

Keypair/kops

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kops

Type client

Format v1alpha2

Keypair/kube-controller-manager

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-controller-manager

Type client

Format v1alpha2

Keypair/kube-proxy

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-proxy

Type client

Format v1alpha2

Keypair/kube-scheduler

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-scheduler

Type client

Format v1alpha2

Keypair/kubecfg

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kubecfg

Type client

Format v1alpha2

Keypair/kubelet

Signer name:ca id:cn=kubernetes

Subject o=system:nodes,cn=kubelet

Type client

Format v1alpha2

Keypair/kubelet-api

Signer name:ca id:cn=kubernetes

Subject cn=kubelet-api

Type client

Format v1alpha2

Keypair/master

AlternateNames [100.64.0.1, 127.0.0.1, api.internal.kubernetes.peelmicro.com, api.kubernetes.peelmicro.com, kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local]

Signer name:ca id:cn=kubernetes

Subject cn=kubernetes-master

Type server

Format v1alpha2

LaunchConfiguration/master-eu-central-1a.masters.kubernetes.peelmicro.com

ImageID kope.io/k8s-1.10-debian-jessie-amd64-hvm-ebs-2018-08-17

InstanceType t2.micro

SSHKey name:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e id:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

SecurityGroups [name:masters.kubernetes.peelmicro.com]

AssociatePublicIP true

IAMInstanceProfile name:masters.kubernetes.peelmicro.com id:masters.kubernetes.peelmicro.com

RootVolumeSize 64

RootVolumeType gp2

SpotPrice

LaunchConfiguration/nodes.kubernetes.peelmicro.com

ImageID kope.io/k8s-1.10-debian-jessie-amd64-hvm-ebs-2018-08-17

InstanceType t2.medium

SSHKey name:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e id:kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

SecurityGroups [name:nodes.kubernetes.peelmicro.com]

AssociatePublicIP true

IAMInstanceProfile name:nodes.kubernetes.peelmicro.com id:nodes.kubernetes.peelmicro.com

RootVolumeSize 128

RootVolumeType gp2

SpotPrice

ManagedFile/kubernetes.peelmicro.com-addons-bootstrap

Location addons/bootstrap-channel.yaml

ManagedFile/kubernetes.peelmicro.com-addons-core.addons.k8s.io

Location addons/core.addons.k8s.io/v1.4.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-dns-controller.addons.k8s.io-k8s-1.6

Location addons/dns-controller.addons.k8s.io/k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-dns-controller.addons.k8s.io-pre-k8s-1.6

Location addons/dns-controller.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-kube-dns.addons.k8s.io-k8s-1.6

Location addons/kube-dns.addons.k8s.io/k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-kube-dns.addons.k8s.io-pre-k8s-1.6

Location addons/kube-dns.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/kubernetes.peelmicro.com-addons-limit-range.addons.k8s.io

Location addons/limit-range.addons.k8s.io/v1.5.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-rbac.addons.k8s.io-k8s-1.8

Location addons/rbac.addons.k8s.io/k8s-1.8.yaml

ManagedFile/kubernetes.peelmicro.com-addons-storage-aws.addons.k8s.io-v1.6.0

Location addons/storage-aws.addons.k8s.io/v1.6.0.yaml

ManagedFile/kubernetes.peelmicro.com-addons-storage-aws.addons.k8s.io-v1.7.0

Location addons/storage-aws.addons.k8s.io/v1.7.0.yaml

Route/0.0.0.0/0

RouteTable name:kubernetes.peelmicro.com

CIDR 0.0.0.0/0

InternetGateway name:kubernetes.peelmicro.com

RouteTable/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

Shared false

Tags {kubernetes.io/cluster/kubernetes.peelmicro.com: owned, kubernetes.io/kops/role: public, Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com}

RouteTableAssociation/eu-central-1a.kubernetes.peelmicro.com

RouteTable name:kubernetes.peelmicro.com

Subnet name:eu-central-1a.kubernetes.peelmicro.com

SSHKey/kubernetes.kubernetes.peelmicro.com-ca:41:39:64:b1:ea:14:36:e6:ee:49:10:74:b6:e2:7e

KeyFingerprint 9a:fa:b7:ad:4e:62:1b:16:a4:6b:a5:8f:8f:86:59:f6

Secret/admin

Secret/kube

Secret/kube-proxy

Secret/kubelet

Secret/system:controller_manager

Secret/system:dns

Secret/system:logging

Secret/system:monitoring

Secret/system:scheduler

SecurityGroup/masters.kubernetes.peelmicro.com

Description Security group for masters

VPC name:kubernetes.peelmicro.com

RemoveExtraRules [port=22, port=443, port=2380, port=2381, port=4001, port=4002, port=4789, port=179]

Tags {Name: masters.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

SecurityGroup/nodes.kubernetes.peelmicro.com

Description Security group for nodes

VPC name:kubernetes.peelmicro.com

RemoveExtraRules [port=22]

Tags {Name: nodes.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

SecurityGroupRule/all-master-to-master

SecurityGroup name:masters.kubernetes.peelmicro.com

SourceGroup name:masters.kubernetes.peelmicro.com

SecurityGroupRule/all-master-to-node

SecurityGroup name:nodes.kubernetes.peelmicro.com

SourceGroup name:masters.kubernetes.peelmicro.com

SecurityGroupRule/all-node-to-node

SecurityGroup name:nodes.kubernetes.peelmicro.com

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/https-external-to-master-0.0.0.0/0

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 443

ToPort 443

SecurityGroupRule/master-egress

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-egress

SecurityGroup name:nodes.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-to-master-tcp-1-2379

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 1

ToPort 2379

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-tcp-2382-4000

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 2382

ToPort 4000

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-tcp-4003-65535

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol tcp

FromPort 4003

ToPort 65535

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/node-to-master-udp-1-65535

SecurityGroup name:masters.kubernetes.peelmicro.com

Protocol udp

FromPort 1

ToPort 65535

SourceGroup name:nodes.kubernetes.peelmicro.com

SecurityGroupRule/ssh-external-to-master-0.0.0.0/0

SecurityGroup name:masters.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

SecurityGroupRule/ssh-external-to-node-0.0.0.0/0

SecurityGroup name:nodes.kubernetes.peelmicro.com

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

Subnet/eu-central-1a.kubernetes.peelmicro.com

ShortName eu-central-1a

VPC name:kubernetes.peelmicro.com

AvailabilityZone eu-central-1a

CIDR 172.20.32.0/19

Shared false

Tags {kubernetes.io/role/elb: 1, SubnetType: Public, Name: eu-central-1a.kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

VPC/kubernetes.peelmicro.com

CIDR 172.20.0.0/16

EnableDNSHostnames true

EnableDNSSupport true

Shared false

Tags {Name: kubernetes.peelmicro.com, KubernetesCluster: kubernetes.peelmicro.com, kubernetes.io/cluster/kubernetes.peelmicro.com: owned}

VPCDHCPOptionsAssociation/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

DHCPOptions name:kubernetes.peelmicro.com

Must specify --yes to apply changes

Cluster configuration has been created.

Suggestions:

* list clusters with: kops get cluster

* edit this cluster with: kops edit cluster kubernetes.peelmicro.com

* edit your node instance group: kops edit ig --name=kubernetes.peelmicro.com nodes

* edit your master instance group: kops edit ig --name=kubernetes.peelmicro.com master-eu-central-1a

Finally configure your cluster with: kops update cluster kubernetes.peelmicro.com --yes
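The flags passed to `kops create cluster` above can be kept in variables, which makes the node size easy to change later. The following is a minimal sketch, assuming the same cluster name, zone, and S3 state store as in these notes. The function name `build_create_cmd` is hypothetical, and the script only prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch only: assemble the kops command used in these notes from variables.
CLUSTER_NAME="kubernetes.peelmicro.com"
STATE_STORE="s3://${CLUSTER_NAME}"
ZONE="eu-central-1a"
NODE_COUNT="2"
NODE_SIZE="t2.medium"   # larger node size because Istio needs a lot of memory
MASTER_SIZE="t2.micro"

# Print the full command line; nothing is executed against AWS.
build_create_cmd() {
  printf 'kops create cluster --name=%s --state=%s --zones=%s --node-count=%s --node-size=%s --master-size=%s --dns-zone=%s\n' \
    "$CLUSTER_NAME" "$STATE_STORE" "$ZONE" "$NODE_COUNT" "$NODE_SIZE" "$MASTER_SIZE" "$CLUSTER_NAME"
}

build_create_cmd
```

kops can also read the state store from the `KOPS_STATE_STORE` environment variable, which avoids repeating `--state` on every command.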

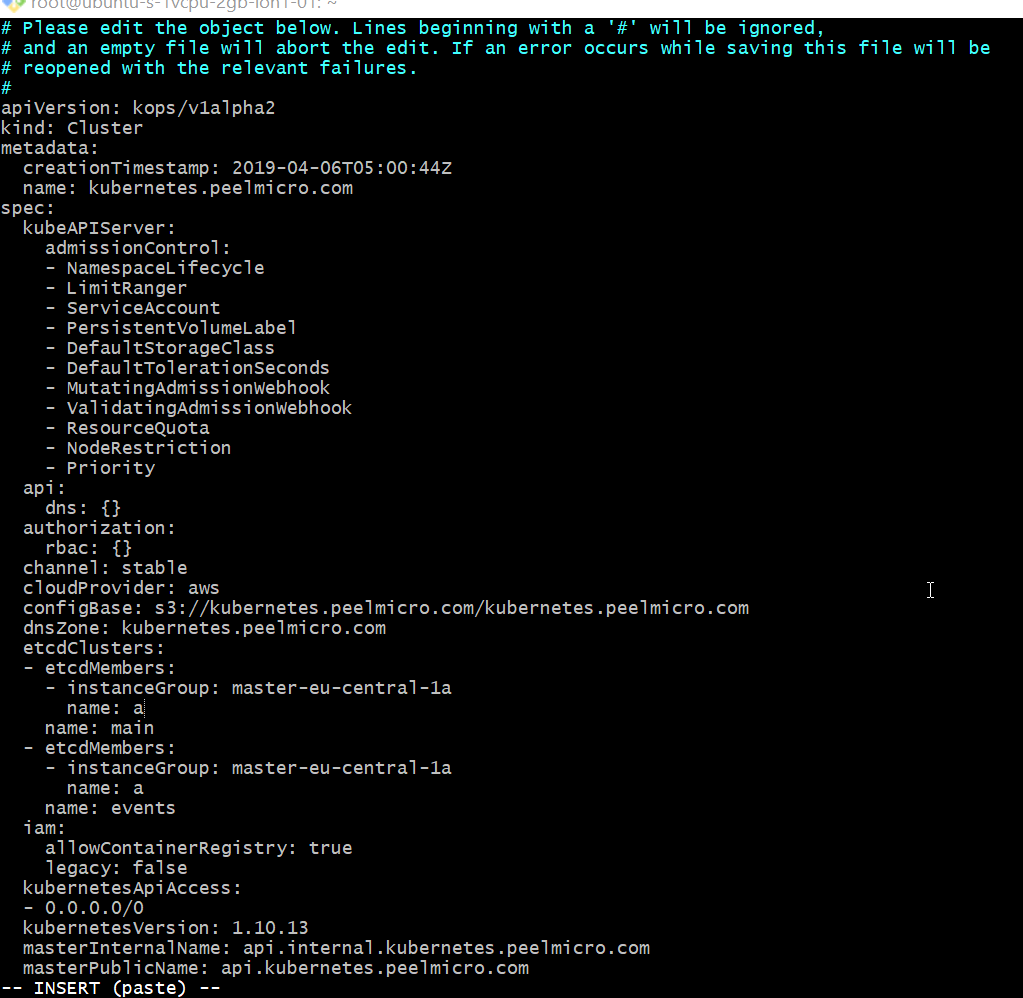

- Before applying the configuration, we have to edit the cluster and add the following:

kubeAPIServer:

admissionControl:

- NamespaceLifecycle

- LimitRanger

- ServiceAccount

- PersistentVolumeLabel

- DefaultStorageClass

- DefaultTolerationSeconds

- MutatingAdmissionWebhook

- ValidatingAdmissionWebhook

- ResourceQuota

- NodeRestriction

- Priority

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops edit cluster kubernetes.peelmicro.com --state=s3://kubernetes.peelmicro.com
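Indentation matters in YAML, so the sketch below writes the same admission controller list with its nesting restored to a scratch file. The contents can then be pasted into the editor that `kops edit cluster` opens. It assumes the fragment belongs under the `spec:` key of the cluster manifest; the scratch file created with `mktemp` is only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: write the admission controller list, with indentation, to a scratch file.
# The scratch file is an arbitrary choice for illustration; kops does not read it.
FRAGMENT_FILE="$(mktemp)"

cat > "$FRAGMENT_FILE" <<'EOF'
spec:
  kubeAPIServer:
    admissionControl:
    - NamespaceLifecycle
    - LimitRanger
    - ServiceAccount
    - PersistentVolumeLabel
    - DefaultStorageClass
    - DefaultTolerationSeconds
    - MutatingAdmissionWebhook
    - ValidatingAdmissionWebhook
    - ResourceQuota
    - NodeRestriction
    - Priority
EOF

# Show the fragment so it can be copied into the editor.
cat "$FRAGMENT_FILE"
```

The two webhook entries, `MutatingAdmissionWebhook` and `ValidatingAdmissionWebhook`, are the admission controllers that call admission webhooks.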

- We then apply the configuration and create the cluster by using:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops update cluster kubernetes.peelmicro.com --yes --state=s3://kubernetes.peelmicro.com

I0406 05:10:00.102613 26433 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0406 05:10:01.144577 26433 vfs_castore.go:735] Issuing new certificate: "ca"

I0406 05:10:01.154530 26433 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator-ca"

I0406 05:10:01.558879 26433 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0406 05:10:03.082048 26433 vfs_castore.go:735] Issuing new certificate: "kubecfg"

I0406 05:10:03.342839 26433 vfs_castore.go:735] Issuing new certificate: "master"

I0406 05:10:03.540417 26433 vfs_castore.go:735] Issuing new certificate: "kubelet"

I0406 05:10:03.555470 26433 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator"

I0406 05:10:03.578749 26433 vfs_castore.go:735] Issuing new certificate: "apiserver-proxy-client"

I0406 05:10:03.745253 26433 vfs_castore.go:735] Issuing new certificate: "kube-scheduler"

I0406 05:10:03.749764 26433 vfs_castore.go:735] Issuing new certificate: "kops"

I0406 05:10:03.898014 26433 vfs_castore.go:735] Issuing new certificate: "kube-controller-manager"

I0406 05:10:04.011317 26433 vfs_castore.go:735] Issuing new certificate: "kube-proxy"

I0406 05:10:04.512692 26433 vfs_castore.go:735] Issuing new certificate: "kubelet-api"

I0406 05:10:04.720408 26433 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0406 05:10:05.001087 26433 launchconfiguration.go:380] waiting for IAM instance profile "nodes.kubernetes.peelmicro.com" to be ready

I0406 05:10:05.017806 26433 launchconfiguration.go:380] waiting for IAM instance profile "masters.kubernetes.peelmicro.com" to be ready

I0406 05:10:15.403451 26433 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0406 05:10:15.923006 26433 executor.go:103] Tasks: 73 done / 73 total; 0 can run

I0406 05:10:15.923178 26433 dns.go:153] Pre-creating DNS records

I0406 05:10:18.348414 26433 update_cluster.go:290] Exporting kubecfg for cluster

kops has set your kubectl context to kubernetes.peelmicro.com

Cluster is starting. It should be ready in a few minutes.

Suggestions:

* validate cluster: kops validate cluster

* list nodes: kubectl get nodes --show-labels

* ssh to the master: ssh -i ~/.ssh/id_rsa admin@api.kubernetes.peelmicro.com

* the admin user is specific to Debian. If not using Debian please use the appropriate user based on your OS.

* read about installing addons at: https://github.com/kubernetes/kops/blob/master/docs/addons.md.

- After a few minutes, we can check that everything is OK by executing:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops validate cluster --state=s3://kubernetes.peelmicro.com

Using cluster from kubectl context: kubernetes.peelmicro.com

Validating cluster kubernetes.peelmicro.com

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-eu-central-1a Master t2.micro 1 1 eu-central-1a

nodes Node t2.medium 2 2 eu-central-1a

NODE STATUS

NAME ROLE READY

ip-172-20-43-50.eu-central-1.compute.internal node True

ip-172-20-58-123.eu-central-1.compute.internal node True

ip-172-20-60-85.eu-central-1.compute.internal master True

Your cluster kubernetes.peelmicro.com is ready

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
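The NODE STATUS table above can also be checked by a script instead of by eye. The following is a minimal sketch, assuming the output keeps the three-column layout shown above (name, role, ready). The function name `not_ready_count` is hypothetical, and the sample text is copied from the output in these notes.

```shell
#!/usr/bin/env bash
# Sketch: count nodes that are not ready in `kops validate cluster` output.
not_ready_count() {
  # Rows in the NODE STATUS table look like: <name> <node|master> <True|False>.
  # Instance group rows use "Node"/"Master" in column 2, so they are skipped.
  awk '$2 == "node" || $2 == "master" { if ($3 != "True") n++ } END { print n + 0 }'
}

sample_output='NODE STATUS
NAME ROLE READY
ip-172-20-43-50.eu-central-1.compute.internal node True
ip-172-20-58-123.eu-central-1.compute.internal node True
ip-172-20-60-85.eu-central-1.compute.internal master True'

# Prints 0 for the sample, because every node reports READY True.
printf '%s\n' "$sample_output" | not_ready_count
```

Against a live cluster the same function could be fed from the real command, for example `kops validate cluster --state=s3://kubernetes.peelmicro.com | not_ready_count`.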

- We can install Istio by executing:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# wget https://github.com/istio/istio/releases/download/1.0.2/istio-1.0.2-linux.tar.gz

--2019-04-06 05:22:19-- https://github.com/istio/istio/releases/download/1.0.2/istio-1.0.2-linux.tar.gz

Resolving github.com (github.com)... 140.82.118.4, 140.82.118.3

Connecting to github.com (github.com)|140.82.118.4|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github-production-release-asset-2e65be.s3.amazonaws.com/74175805/2ff05080-b1dc-11e8-8efe-74ef7e0233a0?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20190406%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20190406T052219Z&X-Amz-Expires=300&X-Amz-Signature=9ff23decd341e473a378cf5627c65cd9997ef2fa2b12ab458af03960bf41a688&X-Amz-SignedHeaders=host&actor_id=0&response-content-disposition=attachment%3B%20filename%3Distio-1.0.2-linux.tar.gz&response-content-type=application%2Foctet-stream [following]

--2019-04-06 05:22:19-- https://github-production-release-asset-2e65be.s3.amazonaws.com/74175805/2ff05080-b1dc-11e8-8efe-74ef7e0233a0?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20190406%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20190406T052219Z&X-Amz-Expires=300&X-Amz-Signature=9ff23decd341e473a378cf5627c65cd9997ef2fa2b12ab458af03960bf41a688&X-Amz-SignedHeaders=host&actor_id=0&response-content-disposition=attachment%3B%20filename%3Distio-1.0.2-linux.tar.gz&response-content-type=application%2Foctet-stream

Resolving github-production-release-asset-2e65be.s3.amazonaws.com (github-production-release-asset-2e65be.s3.amazonaws.com)... 52.216.0.32

Connecting to github-production-release-asset-2e65be.s3.amazonaws.com (github-production-release-asset-2e65be.s3.amazonaws.com)|52.216.0.32|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 14862866 (14M) [application/octet-stream]

Saving to: ‘istio-1.0.2-linux.tar.gz’

istio-1.0.2-linux.tar.gz 100%[========================================================================================>] 14.17M 16.4MB/s in 0.9s

2019-04-06 05:22:20 (16.4 MB/s) - ‘istio-1.0.2-linux.tar.gz’ saved [14862866/14862866]
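The download URL used above contains the Istio version twice, so it can be derived from a single variable. This is a small sketch, assuming the URL pattern of the one release shown in these notes; other releases may be published under different file names. The helper names `istio_archive` and `istio_url` are hypothetical, and the script only prints the command.

```shell
#!/usr/bin/env bash
# Sketch: derive the archive name and download URL from one version variable.
ISTIO_VERSION="1.0.2"

# Archive name as it appears in these notes, e.g. istio-1.0.2-linux.tar.gz.
istio_archive() {
  printf 'istio-%s-linux.tar.gz\n' "$1"
}

# Release URL, following the pattern of the URL used in these notes.
istio_url() {
  printf 'https://github.com/istio/istio/releases/download/%s/%s\n' "$1" "$(istio_archive "$1")"
}

# Print the download command instead of running it.
echo "wget $(istio_url "$ISTIO_VERSION")"
```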

root@ubuntu-s-1vcpu-2gb-lon1-01:~# tar -xzvf istio-1.0.2-linux.tar.gz

cd istio-1.0.2

istio-1.0.2/

istio-1.0.2/samples/

istio-1.0.2/samples/websockets/

istio-1.0.2/samples/websockets/app.yaml

istio-1.0.2/samples/websockets/README.md

istio-1.0.2/samples/websockets/route.yaml

istio-1.0.2/samples/sleep/

istio-1.0.2/samples/sleep/sleep.yaml

istio-1.0.2/samples/sleep/README.md

istio-1.0.2/samples/httpbin/

istio-1.0.2/samples/httpbin/README.md

istio-1.0.2/samples/httpbin/httpbin.yaml

istio-1.0.2/samples/httpbin/destinationpolicies/

istio-1.0.2/samples/httpbin/destinationpolicies/httpbin-circuit-breaker.yaml

istio-1.0.2/samples/httpbin/routerules/

istio-1.0.2/samples/httpbin/routerules/httpbin-v1.yaml

istio-1.0.2/samples/httpbin/sample-client/

istio-1.0.2/samples/httpbin/sample-client/fortio-deploy.yaml

istio-1.0.2/samples/https/

istio-1.0.2/samples/https/default.conf

istio-1.0.2/samples/https/nginx-app.yaml

istio-1.0.2/samples/CONFIG-MIGRATION.md

istio-1.0.2/samples/certs/

istio-1.0.2/samples/certs/root-cert.pem

istio-1.0.2/samples/certs/ca-cert.pem

istio-1.0.2/samples/certs/cert-chain.pem

istio-1.0.2/samples/certs/ca-key.pem

istio-1.0.2/samples/README.md

istio-1.0.2/samples/rawvm/

istio-1.0.2/samples/rawvm/README.md

istio-1.0.2/samples/helloworld/

istio-1.0.2/samples/helloworld/helloworld.yaml

istio-1.0.2/samples/helloworld/README.md

istio-1.0.2/samples/kubernetes-blog/

istio-1.0.2/samples/kubernetes-blog/bookinfo-reviews-v2.yaml

istio-1.0.2/samples/kubernetes-blog/bookinfo-v1.yaml

istio-1.0.2/samples/kubernetes-blog/bookinfo-ratings.yaml

istio-1.0.2/samples/bookinfo/

istio-1.0.2/samples/bookinfo/policy/

istio-1.0.2/samples/bookinfo/policy/mixer-rule-additional-telemetry.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-kubernetesenv-telemetry.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-productpage-ratelimit.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-ratings-denial.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-ratings-ratelimit.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-deny-serviceaccount.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-ingress-denial.yaml

istio-1.0.2/samples/bookinfo/policy/mixer-rule-deny-label.yaml

istio-1.0.2/samples/bookinfo/networking/

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-test-v2.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-ratings-mysql-vm.yaml

istio-1.0.2/samples/bookinfo/networking/certmanager-gateway.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-ratings-test-abort.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-50-v3.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-ratings-db.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-jason-v2-v3.yaml

istio-1.0.2/samples/bookinfo/networking/destination-rule-reviews.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-details-v2.yaml

istio-1.0.2/samples/bookinfo/networking/ROUTING_RULE_MIGRATION.md

istio-1.0.2/samples/bookinfo/networking/virtual-service-ratings-test-delay.yaml

istio-1.0.2/samples/bookinfo/networking/bookinfo-gateway.yaml

istio-1.0.2/samples/bookinfo/networking/destination-rule-all.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-all-v1.yaml

istio-1.0.2/samples/bookinfo/networking/destination-rule-all-mtls.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-v2-v3.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-80-20.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-ratings-mysql.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-90-10.yaml

istio-1.0.2/samples/bookinfo/networking/egress-rule-google-apis.yaml

istio-1.0.2/samples/bookinfo/networking/virtual-service-reviews-v3.yaml

istio-1.0.2/samples/bookinfo/swagger.yaml

istio-1.0.2/samples/bookinfo/platform/

istio-1.0.2/samples/bookinfo/platform/consul/

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-reviews-test-v2.yaml

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-ratings-test-abort.yaml

istio-1.0.2/samples/bookinfo/platform/consul/bookinfo.yaml

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-reviews-50-v3.yaml

istio-1.0.2/samples/bookinfo/platform/consul/bookinfo.sidecars.yaml

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-ratings-test-delay.yaml

istio-1.0.2/samples/bookinfo/platform/consul/README.md

istio-1.0.2/samples/bookinfo/platform/consul/destination-rule-all.yaml

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-all-v1.yaml

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-reviews-v2-v3.yaml

istio-1.0.2/samples/bookinfo/platform/consul/virtual-service-reviews-v3.yaml

istio-1.0.2/samples/bookinfo/platform/consul/cleanup.sh

istio-1.0.2/samples/bookinfo/platform/kube/

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-mysql.yaml

istio-1.0.2/samples/bookinfo/platform/kube/istio-rbac-enable.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-db.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-details-v2.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-certificate.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-add-serviceaccount.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-ratings-v2-mysql-vm.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-reviews-v2.yaml

istio-1.0.2/samples/bookinfo/platform/kube/rbac/

istio-1.0.2/samples/bookinfo/platform/kube/rbac/details-reviews-policy.yaml

istio-1.0.2/samples/bookinfo/platform/kube/rbac/productpage-policy.yaml

istio-1.0.2/samples/bookinfo/platform/kube/rbac/rbac-config-ON.yaml

istio-1.0.2/samples/bookinfo/platform/kube/rbac/namespace-policy.yaml

istio-1.0.2/samples/bookinfo/platform/kube/rbac/ratings-policy.yaml

istio-1.0.2/samples/bookinfo/platform/kube/istio-rbac-namespace.yaml

istio-1.0.2/samples/bookinfo/platform/kube/README.md

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-ratings-v2.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-ingress.yaml

istio-1.0.2/samples/bookinfo/platform/kube/istio-rbac-ratings.yaml

istio-1.0.2/samples/bookinfo/platform/kube/istio-rbac-details-reviews.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-details.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-ratings-v2-mysql.yaml

istio-1.0.2/samples/bookinfo/platform/kube/istio-rbac-productpage.yaml

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-ratings.yaml

istio-1.0.2/samples/bookinfo/platform/kube/cleanup.sh

istio-1.0.2/samples/bookinfo/platform/kube/bookinfo-ratings-discovery.yaml

istio-1.0.2/samples/bookinfo/README.md

istio-1.0.2/samples/health-check/

istio-1.0.2/samples/health-check/liveness-http.yaml

istio-1.0.2/samples/health-check/liveness-command.yaml

istio-1.0.2/bin/

istio-1.0.2/bin/istioctl

istio-1.0.2/LICENSE

istio-1.0.2/tools/

istio-1.0.2/tools/license/

istio-1.0.2/tools/license/get_dep_licenses.go

istio-1.0.2/tools/license/README.md

istio-1.0.2/tools/convert_perf_results.py

istio-1.0.2/tools/hyperistio/

istio-1.0.2/tools/hyperistio/hyperistio.go

istio-1.0.2/tools/hyperistio/README.md

istio-1.0.2/tools/hyperistio/index.html

istio-1.0.2/tools/hyperistio/hyperistio_test.go

istio-1.0.2/tools/deb/

istio-1.0.2/tools/deb/istio.mk

istio-1.0.2/tools/deb/Dockerfile

istio-1.0.2/tools/deb/deb_test.sh

istio-1.0.2/tools/deb/istio-iptables.sh

istio-1.0.2/tools/deb/istio.service

istio-1.0.2/tools/deb/istio-node-agent-start.sh

istio-1.0.2/tools/deb/postinst.sh

istio-1.0.2/tools/deb/istio-start.sh

istio-1.0.2/tools/deb/sidecar.env

istio-1.0.2/tools/deb/istio-auth-node-agent.service

istio-1.0.2/tools/deb/envoy_bootstrap_v2.json

istio-1.0.2/tools/deb/istio-ca.sh

istio-1.0.2/tools/perf_istio_rules.yaml

istio-1.0.2/tools/setup_run

istio-1.0.2/tools/perf_k8svcs.yaml

istio-1.0.2/tools/setup_perf_cluster.sh

istio-1.0.2/tools/README.md

istio-1.0.2/tools/update_all

istio-1.0.2/tools/cache_buster.yaml

istio-1.0.2/tools/perf_setup.svg

istio-1.0.2/tools/vagrant/

istio-1.0.2/tools/vagrant/Vagrantfile

istio-1.0.2/tools/vagrant/provision-vagrant.sh

istio-1.0.2/tools/istio-docker.mk

istio-1.0.2/tools/githubContrib/

istio-1.0.2/tools/githubContrib/Contributions.txt

istio-1.0.2/tools/rules.yml

istio-1.0.2/tools/run_canonical_perf_tests.sh

istio-1.0.2/tools/dump_kubernetes.sh

istio-1.0.2/README.md

istio-1.0.2/istio.VERSION

istio-1.0.2/install/

istio-1.0.2/install/kubernetes/

istio-1.0.2/install/kubernetes/ansible/

istio-1.0.2/install/kubernetes/ansible/main.yml

istio-1.0.2/install/kubernetes/ansible/README.md

istio-1.0.2/install/kubernetes/ansible/ansible.cfg

istio-1.0.2/install/kubernetes/ansible/istio/

istio-1.0.2/install/kubernetes/ansible/istio/defaults/

istio-1.0.2/install/kubernetes/ansible/istio/defaults/main.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/

istio-1.0.2/install/kubernetes/ansible/istio/tasks/add_to_path.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/delete_resources.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/main.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/simple_sample_cmd.j2

istio-1.0.2/install/kubernetes/ansible/istio/tasks/change_scc.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/create_namespace_free_definition_file.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/install_on_cluster.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/install_addons.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/bookinfo_cmd.j2

istio-1.0.2/install/kubernetes/ansible/istio/tasks/set_istio_distro_vars.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/install_sample.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/assert_oc_admin.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/set_appropriate_cmd_path.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/safely_create_namespace.yml

istio-1.0.2/install/kubernetes/ansible/istio/tasks/install_samples.yml

istio-1.0.2/install/kubernetes/ansible/istio/vars/

istio-1.0.2/install/kubernetes/ansible/istio/vars/main.yml

istio-1.0.2/install/kubernetes/ansible/istio/meta/

istio-1.0.2/install/kubernetes/ansible/istio/meta/main.yml

istio-1.0.2/install/kubernetes/istio-citadel-standalone.yaml

istio-1.0.2/install/kubernetes/istio-citadel-with-health-check.yaml

istio-1.0.2/install/kubernetes/helm/

istio-1.0.2/install/kubernetes/helm/helm-service-account.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/mutatingwebhook.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/sidecarInjectorWebhook/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/enable-mesh-mtls.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/templates/cleanup-secrets.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/charts/security/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/_affinity.tpl

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/endpoints.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/sidecar-injector-configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/values.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/README.md

istio-1.0.2/install/kubernetes/helm/istio-remote/requirements.yaml

istio-1.0.2/install/kubernetes/helm/istio-remote/Chart.yaml

istio-1.0.2/install/kubernetes/helm/README.md

istio-1.0.2/install/kubernetes/helm/istio/

istio-1.0.2/install/kubernetes/helm/istio/values-istio-one-namespace-auth.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/validatingwehookconfiguration.yaml.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/galley/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/clusterrolebindings.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/autoscale.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/gateways/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/autoscale.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/ingress/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/meshexpansion.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/gateway.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/autoscale.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/pilot/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/mutatingwebhook.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/sidecarInjectorWebhook/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/clusterrolebindings.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/prometheus/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/ingress.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/ingress-jaeger.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/templates/service-jaeger.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/tracing/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/statsdtoprom.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/config.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/autoscale.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/mixer/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/ingress.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/secrets.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/kiali/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/templates/ingress.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/servicegraph/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/crds.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/issuer.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/templates/rbac.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/certmanager/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/telemetry-gateway/

istio-1.0.2/install/kubernetes/helm/istio/charts/telemetry-gateway/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/telemetry-gateway/templates/gateway.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/telemetry-gateway/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/serviceaccount.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/meshexpansion.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/clusterrolebinding.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/create-custom-resources-job.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/clusterrole.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/enable-mesh-mtls.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/templates/cleanup-secrets.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/security/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/deployment.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/create-custom-resources-job.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/pvc.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/grafana-ports-mtls.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/secret.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/templates/service.yaml

istio-1.0.2/install/kubernetes/helm/istio/charts/grafana/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/templates/

istio-1.0.2/install/kubernetes/helm/istio/templates/_helpers.tpl

istio-1.0.2/install/kubernetes/helm/istio/templates/crds.yaml

istio-1.0.2/install/kubernetes/helm/istio/templates/configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/templates/_affinity.tpl

istio-1.0.2/install/kubernetes/helm/istio/templates/install-custom-resources.sh.tpl

istio-1.0.2/install/kubernetes/helm/istio/templates/sidecar-injector-configmap.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-galley.yaml

istio-1.0.2/install/kubernetes/helm/istio/values.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-demo-auth.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-demo.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-auth.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-one-namespace.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-auth-galley.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio.yaml

istio-1.0.2/install/kubernetes/helm/istio/README.md

istio-1.0.2/install/kubernetes/helm/istio/requirements.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-gateways.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-auth-multicluster.yaml

istio-1.0.2/install/kubernetes/helm/istio/Chart.yaml

istio-1.0.2/install/kubernetes/helm/istio/values-istio-multicluster.yaml

istio-1.0.2/install/kubernetes/README.md

istio-1.0.2/install/kubernetes/addons/

istio-1.0.2/install/kubernetes/istio-demo.yaml

istio-1.0.2/install/kubernetes/istio-demo-auth.yaml

istio-1.0.2/install/kubernetes/mesh-expansion.yaml

istio-1.0.2/install/kubernetes/istio-citadel-plugin-certs.yaml

istio-1.0.2/install/kubernetes/namespace.yaml

istio-1.0.2/install/tools/

istio-1.0.2/install/tools/setupIstioVM.sh

istio-1.0.2/install/tools/setupMeshEx.sh

istio-1.0.2/install/consul/

istio-1.0.2/install/consul/README.md

istio-1.0.2/install/consul/kubeconfig

istio-1.0.2/install/consul/istio.yaml

istio-1.0.2/install/README.md

istio-1.0.2/install/gcp/

istio-1.0.2/install/gcp/deployment_manager/

istio-1.0.2/install/gcp/deployment_manager/istio-cluster.yaml

istio-1.0.2/install/gcp/deployment_manager/README.md

istio-1.0.2/install/gcp/deployment_manager/istio-cluster.jinja.schema

istio-1.0.2/install/gcp/deployment_manager/istio-cluster.jinja.display

istio-1.0.2/install/gcp/deployment_manager/istio-cluster.jinja

istio-1.0.2/install/gcp/README.md

- Update the $PATH

root@ubuntu-s-1vcpu-2gb-lon1-01:~# echo 'export PATH="$PATH:/root/istio-1.0.2/bin"' >> ~/.profile

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

- If we don't want to log out and log in again, we can export the PATH in the current shell:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# export PATH="$PATH:/root/istio-1.0.2/bin"

root@ubuntu-s-1vcpu-2gb-lon1-01:~# istioctl

Istio configuration command line utility.

Create, list, modify, and delete configuration resources in the Istio

system.

Available routing and traffic management configuration types:

[virtualservice gateway destinationrule serviceentry httpapispec httpapispecbinding quotaspec quotaspecbinding servicerole servicerolebinding policy]

See https://istio.io/docs/reference/ for an overview of Istio routing.

Usage:

istioctl [command]

Available Commands:

authn Interact with Istio authentication policies

context-create Create a kubeconfig file suitable for use with istioctl in a non kubernetes environment

create Create policies and rules

delete Delete policies or rules

deregister De-registers a service instance

experimental Experimental commands that may be modified or deprecated

gen-deploy Generates the configuration for Istio's control plane.

get Retrieve policies and rules

help Help about any command

kube-inject Inject Envoy sidecar into Kubernetes pod resources

proxy-config Retrieve information about proxy configuration from Envoy [kube only]

proxy-status Retrieves the synchronization status of each Envoy in the mesh [kube only]

register Registers a service instance (e.g. VM) joining the mesh

replace Replace existing policies and rules

version Prints out build version information

Flags:

--context string The name of the kubeconfig context to use

-h, --help help for istioctl

-i, --istioNamespace string Istio system namespace (default "istio-system")

-c, --kubeconfig string Kubernetes configuration file

--log_as_json Whether to format output as JSON or in plain console-friendly format

--log_caller string Comma-separated list of scopes for which to include caller information, scopes can be any of [ads, default, model, rbac]

--log_output_level string Comma-separated minimum per-scope logging level of messages to output, in the form of <scope>:<level>,<scope>:<level>,... where scope can be one of [ads, default, model, rbac] and level can be one of [debug, info, warn, error, none] (default "default:info")

--log_rotate string The path for the optional rotating log file

--log_rotate_max_age int The maximum age in days of a log file beyond which the file is rotated (0 indicates no limit) (default 30)

--log_rotate_max_backups int The maximum number of log file backups to keep before older files are deleted (0 indicates no limit) (default 1000)

--log_rotate_max_size int The maximum size in megabytes of a log file beyond which the file is rotated (default 104857600)

--log_stacktrace_level string Comma-separated minimum per-scope logging level at which stack traces are captured, in the form of <scope>:<level>,<scope:level>,... where scope can be one of [ads, default, model, rbac] and level can be one of [debug, info, warn, error, none] (default "default:none")

--log_target stringArray The set of paths where to output the log. This can be any path as well as the special values stdout and stderr (default [stdout])

-n, --namespace string Config namespace

-p, --platform string Istio host platform (default "kube")

Use "istioctl [command] --help" for more information about a command.
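The export above appends the directory every time it is run, so repeating it leaves duplicate entries in the PATH. A minimal sketch of an idempotent alternative follows; the helper name `add_to_path` is hypothetical and not part of the course material, and the directory is the one used in the commands above.

```shell
# Hypothetical helper (not from the course): append a directory to PATH
# only if it is not already listed there.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already present, nothing to do
    *) PATH="$PATH:$1" ;;     # append at the end, like the export above
  esac
  export PATH
}

# Same directory as in the commands above.
add_to_path /root/istio-1.0.2/bin

# Report where istioctl resolves from, or say that it was not found.
command -v istioctl || echo "istioctl not found on PATH"
```

Calling `add_to_path` twice with the same directory leaves the PATH unchanged the second time, so the function should be safe to keep in `~/.profile`.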

- We are going to apply the CRDs (Custom Resource Definitions) by executing:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl apply -f ~/istio-1.0.2/install/kubernetes/helm/istio/templates/crds.yaml

customresourcedefinition.apiextensions.k8s.io/virtualservices.networking.istio.io created

customresourcedefinition.apiextensions.k8s.io/destinationrules.networking.istio.io created

customresourcedefinition.apiextensions.k8s.io/serviceentries.networking.istio.io created

customresourcedefinition.apiextensions.k8s.io/gateways.networking.istio.io created

customresourcedefinition.apiextensions.k8s.io/envoyfilters.networking.istio.io created

customresourcedefinition.apiextensions.k8s.io/policies.authentication.istio.io created

customresourcedefinition.apiextensions.k8s.io/meshpolicies.authentication.istio.io created

customresourcedefinition.apiextensions.k8s.io/httpapispecbindings.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/httpapispecs.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/quotaspecbindings.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/quotaspecs.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/rules.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/attributemanifests.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/bypasses.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/circonuses.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/deniers.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/fluentds.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/kubernetesenvs.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/listcheckers.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/memquotas.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/noops.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/opas.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/prometheuses.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/rbacs.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/redisquotas.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/servicecontrols.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/signalfxs.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/solarwindses.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/stackdrivers.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/statsds.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/stdios.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/apikeys.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/authorizations.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/checknothings.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/kuberneteses.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/listentries.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/logentries.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/edges.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/metrics.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/quotas.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/reportnothings.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/servicecontrolreports.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/tracespans.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/rbacconfigs.rbac.istio.io created

customresourcedefinition.apiextensions.k8s.io/serviceroles.rbac.istio.io created

customresourcedefinition.apiextensions.k8s.io/servicerolebindings.rbac.istio.io created

customresourcedefinition.apiextensions.k8s.io/adapters.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/instances.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/templates.config.istio.io created

customresourcedefinition.apiextensions.k8s.io/handlers.config.istio.io created

- Ensure the CRDs have been created

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get crds

NAME CREATED AT

adapters.config.istio.io 2019-04-06T05:41:16Z

apikeys.config.istio.io 2019-04-06T05:41:16Z

attributemanifests.config.istio.io 2019-04-06T05:41:15Z

authorizations.config.istio.io 2019-04-06T05:41:16Z

bypasses.config.istio.io 2019-04-06T05:41:15Z

checknothings.config.istio.io 2019-04-06T05:41:16Z

circonuses.config.istio.io 2019-04-06T05:41:15Z

deniers.config.istio.io 2019-04-06T05:41:15Z

destinationrules.networking.istio.io 2019-04-06T05:41:15Z

edges.config.istio.io 2019-04-06T05:41:16Z

envoyfilters.networking.istio.io 2019-04-06T05:41:15Z

fluentds.config.istio.io 2019-04-06T05:41:15Z

gateways.networking.istio.io 2019-04-06T05:41:15Z

handlers.config.istio.io 2019-04-06T05:41:16Z

httpapispecbindings.config.istio.io 2019-04-06T05:41:15Z

httpapispecs.config.istio.io 2019-04-06T05:41:15Z

instances.config.istio.io 2019-04-06T05:41:16Z

kubernetesenvs.config.istio.io 2019-04-06T05:41:15Z

kuberneteses.config.istio.io 2019-04-06T05:41:16Z

listcheckers.config.istio.io 2019-04-06T05:41:15Z

listentries.config.istio.io 2019-04-06T05:41:16Z

logentries.config.istio.io 2019-04-06T05:41:16Z

memquotas.config.istio.io 2019-04-06T05:41:15Z

meshpolicies.authentication.istio.io 2019-04-06T05:41:15Z

metrics.config.istio.io 2019-04-06T05:41:16Z

noops.config.istio.io 2019-04-06T05:41:15Z

opas.config.istio.io 2019-04-06T05:41:16Z

policies.authentication.istio.io 2019-04-06T05:41:15Z

prometheuses.config.istio.io 2019-04-06T05:41:16Z

quotas.config.istio.io 2019-04-06T05:41:16Z

quotaspecbindings.config.istio.io 2019-04-06T05:41:15Z

quotaspecs.config.istio.io 2019-04-06T05:41:15Z

rbacconfigs.rbac.istio.io 2019-04-06T05:41:16Z

rbacs.config.istio.io 2019-04-06T05:41:16Z

redisquotas.config.istio.io 2019-04-06T05:41:16Z

reportnothings.config.istio.io 2019-04-06T05:41:16Z

rules.config.istio.io 2019-04-06T05:41:15Z

servicecontrolreports.config.istio.io 2019-04-06T05:41:16Z

servicecontrols.config.istio.io 2019-04-06T05:41:16Z

serviceentries.networking.istio.io 2019-04-06T05:41:15Z

servicerolebindings.rbac.istio.io 2019-04-06T05:41:16Z

serviceroles.rbac.istio.io 2019-04-06T05:41:16Z

signalfxs.config.istio.io 2019-04-06T05:41:16Z

solarwindses.config.istio.io 2019-04-06T05:41:16Z

stackdrivers.config.istio.io 2019-04-06T05:41:16Z

statsds.config.istio.io 2019-04-06T05:41:16Z

stdios.config.istio.io 2019-04-06T05:41:16Z

templates.config.istio.io 2019-04-06T05:41:16Z

tracespans.config.istio.io 2019-04-06T05:41:16Z

virtualservices.networking.istio.io 2019-04-06T05:41:15Z

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
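Scanning a long listing by eye is error-prone, so it can help to count the Istio CRDs instead. The sketch below assumes the two-column `kubectl get crds` output shown above; the function name `count_istio_crds` is hypothetical, and the sample lines are copied from that listing so the block runs without a cluster.

```shell
# Hypothetical helper (not from the course): count the lines on stdin
# whose first column ends in ".istio.io". The header line is skipped
# because its first column is "NAME".
count_istio_crds() {
  awk '$1 ~ /\.istio\.io$/ { n++ } END { print n + 0 }'
}

# Sample run with two lines copied from the listing above; prints 2.
printf '%s\n' \
  'NAME CREATED AT' \
  'adapters.config.istio.io 2019-04-06T05:41:16Z' \
  'gateways.networking.istio.io 2019-04-06T05:41:15Z' | count_istio_crds

# Against a live cluster (assumes kubectl is configured for it):
#   kubectl get crds | count_istio_crds
```

The result can be compared with the number of "created" lines printed by the `kubectl apply` step to confirm that nothing is missing.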

- We are going to install Istio in the cluster with no mutual TLS authentication by executing:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl apply -f ~/istio-1.0.2/install/kubernetes/istio-demo.yaml

namespace/istio-system created

configmap/istio-galley-configuration created

configmap/istio-grafana-custom-resources created

configmap/istio-statsd-prom-bridge created

configmap/prometheus created

configmap/istio-security-custom-resources created

configmap/istio created

configmap/istio-sidecar-injector created

serviceaccount/istio-galley-service-account created

serviceaccount/istio-egressgateway-service-account created

serviceaccount/istio-ingressgateway-service-account created

serviceaccount/istio-grafana-post-install-account created

clusterrole.rbac.authorization.k8s.io/istio-grafana-post-install-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-grafana-post-install-role-binding-istio-system created

job.batch/istio-grafana-post-install created

serviceaccount/istio-mixer-service-account created

serviceaccount/istio-pilot-service-account created

serviceaccount/prometheus created

serviceaccount/istio-cleanup-secrets-service-account created

clusterrole.rbac.authorization.k8s.io/istio-cleanup-secrets-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-cleanup-secrets-istio-system created

job.batch/istio-cleanup-secrets created

serviceaccount/istio-citadel-service-account created

serviceaccount/istio-sidecar-injector-service-account created

customresourcedefinition.apiextensions.k8s.io/virtualservices.networking.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/destinationrules.networking.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/serviceentries.networking.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/gateways.networking.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/envoyfilters.networking.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/httpapispecbindings.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/httpapispecs.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/quotaspecbindings.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/quotaspecs.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/rules.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/attributemanifests.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/bypasses.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/circonuses.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/deniers.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/fluentds.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/kubernetesenvs.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/listcheckers.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/memquotas.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/noops.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/opas.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/prometheuses.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/rbacs.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/redisquotas.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/servicecontrols.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/signalfxs.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/solarwindses.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/stackdrivers.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/statsds.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/stdios.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/apikeys.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/authorizations.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/checknothings.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/kuberneteses.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/listentries.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/logentries.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/edges.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/metrics.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/quotas.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/reportnothings.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/servicecontrolreports.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/tracespans.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/rbacconfigs.rbac.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/serviceroles.rbac.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/servicerolebindings.rbac.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/adapters.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/instances.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/templates.config.istio.io unchanged

customresourcedefinition.apiextensions.k8s.io/handlers.config.istio.io unchanged

clusterrole.rbac.authorization.k8s.io/istio-galley-istio-system created

clusterrole.rbac.authorization.k8s.io/istio-egressgateway-istio-system created

clusterrole.rbac.authorization.k8s.io/istio-ingressgateway-istio-system created

clusterrole.rbac.authorization.k8s.io/istio-mixer-istio-system created

clusterrole.rbac.authorization.k8s.io/istio-pilot-istio-system created

clusterrole.rbac.authorization.k8s.io/prometheus-istio-system created

clusterrole.rbac.authorization.k8s.io/istio-citadel-istio-system created

clusterrole.rbac.authorization.k8s.io/istio-sidecar-injector-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-galley-admin-role-binding-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-egressgateway-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-ingressgateway-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-mixer-admin-role-binding-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-pilot-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-citadel-istio-system created

clusterrolebinding.rbac.authorization.k8s.io/istio-sidecar-injector-admin-role-binding-istio-system created

service/istio-galley created

service/istio-egressgateway created

service/istio-ingressgateway created

service/grafana created

service/istio-policy created

service/istio-telemetry created

service/istio-statsd-prom-bridge created

deployment.extensions/istio-statsd-prom-bridge created

service/istio-pilot created

service/prometheus created

service/istio-citadel created

service/servicegraph created

service/istio-sidecar-injector created

deployment.extensions/istio-galley created

deployment.extensions/istio-egressgateway created

deployment.extensions/istio-ingressgateway created

deployment.extensions/grafana created

deployment.extensions/istio-policy created

deployment.extensions/istio-telemetry created

deployment.extensions/istio-pilot created

deployment.extensions/prometheus created

deployment.extensions/istio-citadel created

deployment.extensions/servicegraph created

deployment.extensions/istio-sidecar-injector created

deployment.extensions/istio-tracing created

gateway.networking.istio.io/istio-autogenerated-k8s-ingress created

horizontalpodautoscaler.autoscaling/istio-egressgateway created

horizontalpodautoscaler.autoscaling/istio-ingressgateway created

horizontalpodautoscaler.autoscaling/istio-policy created

horizontalpodautoscaler.autoscaling/istio-telemetry created

horizontalpodautoscaler.autoscaling/istio-pilot created

service/jaeger-query created

service/jaeger-collector created

service/jaeger-agent created

service/zipkin created

service/tracing created

mutatingwebhookconfiguration.admissionregistration.k8s.io/istio-sidecar-injector created

attributemanifest.config.istio.io/istioproxy created

attributemanifest.config.istio.io/kubernetes created

stdio.config.istio.io/handler created

logentry.config.istio.io/accesslog created

logentry.config.istio.io/tcpaccesslog created

rule.config.istio.io/stdio created

rule.config.istio.io/stdiotcp created

metric.config.istio.io/requestcount created

metric.config.istio.io/requestduration created

metric.config.istio.io/requestsize created

metric.config.istio.io/responsesize created

metric.config.istio.io/tcpbytesent created

metric.config.istio.io/tcpbytereceived created

prometheus.config.istio.io/handler created

rule.config.istio.io/promhttp created

rule.config.istio.io/promtcp created

kubernetesenv.config.istio.io/handler created

rule.config.istio.io/kubeattrgenrulerule created

rule.config.istio.io/tcpkubeattrgenrulerule created

kubernetes.config.istio.io/attributes created

destinationrule.networking.istio.io/istio-policy created

destinationrule.networking.istio.io/istio-telemetry created

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

- We can check that everything is running by executing:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kubectl get pod -n istio-system

NAME READY STATUS RESTARTS AGE

grafana-6cbdcfb45-kglcz 1/1 Running 0 1m

istio-citadel-6b6fdfdd6f-hfd2q 1/1 Running 0 1m

istio-cleanup-secrets-dnnph 0/1 Completed 0 1m

istio-egressgateway-56bdd5fcfb-rt92n 1/1 Running 0 1m

istio-galley-96464ff6-wdf6j 1/1 Running 0 1m

istio-grafana-post-install-zf8qg 0/1 Completed 0 1m

istio-ingressgateway-7f4dd7d699-gg6x5 1/1 Running 0 1m

istio-pilot-6f8d49d4c4-c8gtd 2/2 Running 0 1m

istio-policy-67f4d49564-bgh55 2/2 Running 0 1m

istio-sidecar-injector-69c4bc7974-pjpxd 1/1 Running 0 1m

istio-statsd-prom-bridge-7f44bb5ddb-4xmhb 1/1 Running 0 1m

istio-telemetry-76869cd64f-8wjjz 2/2 Running 0 1m

istio-tracing-ff94688bb-fp548 1/1 Running 0 1m

prometheus-84bd4b9796-bwtdz 1/1 Running 0 1m

servicegraph-c6456d6f5-wskrk 1/1 Running 0 1m

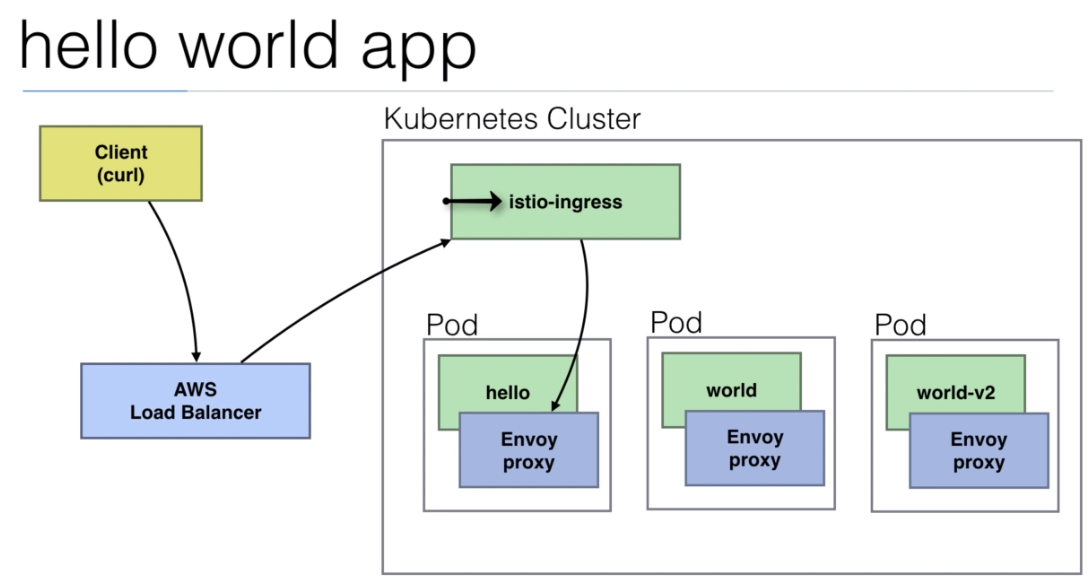

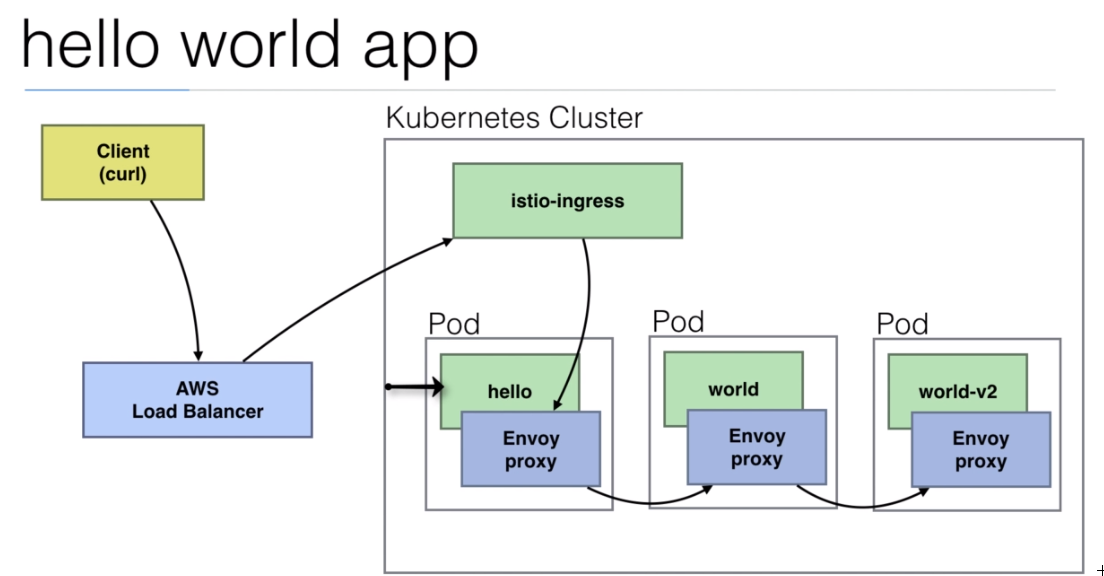

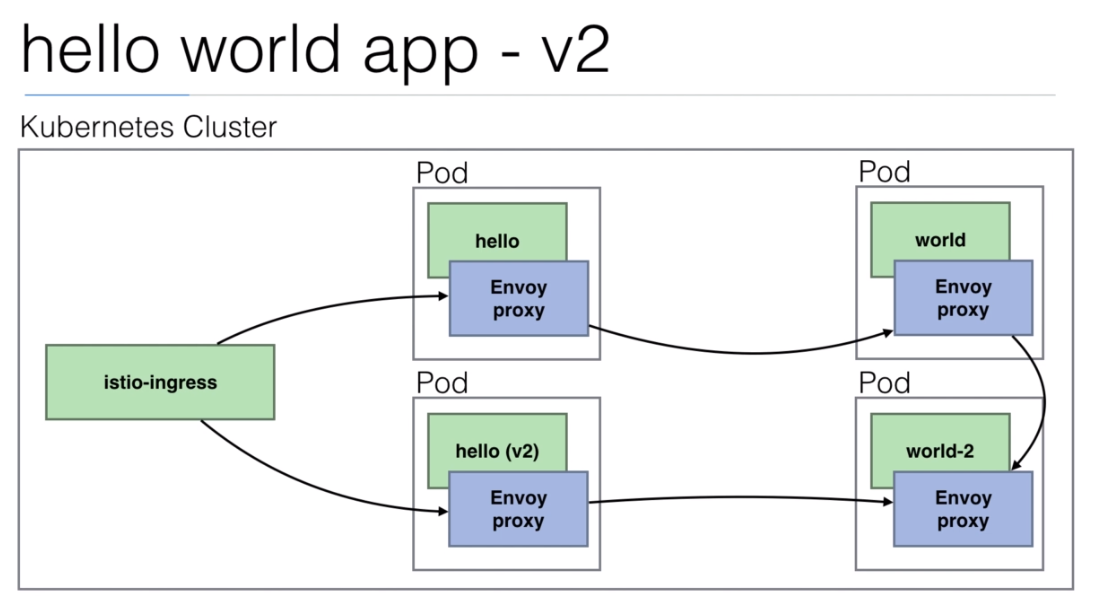

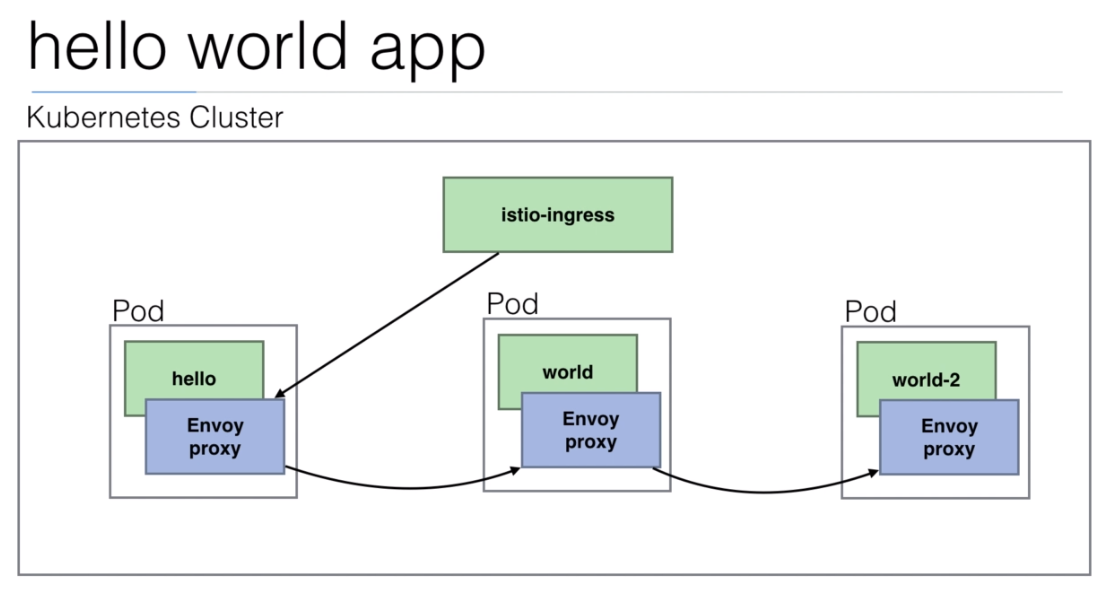

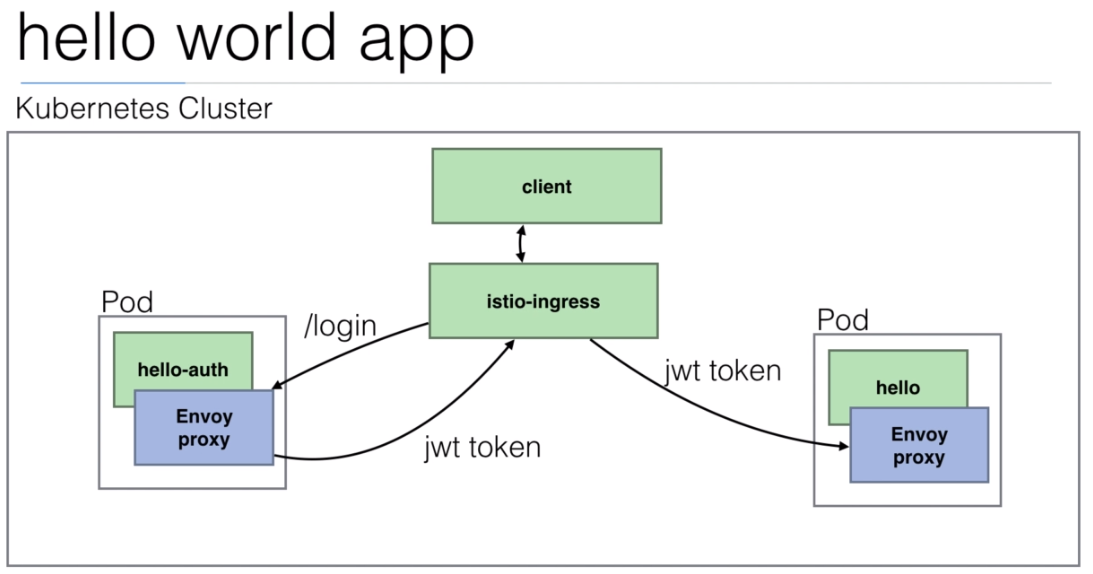

108. Demo: An Istio enabled app

We are going to use the istio/helloworld.yaml document to create 3 deployments with their services. The image used is built from the https://github.com/peelmicro/http-echo repository. It contains a Go app that echoes a message and, after the message is shown, can call another app.
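Before reading the full listing, the echo-and-call-next behaviour can be tried in isolation. The following is a simplified sketch, not the repository's code: it passes the message and the next host as function parameters instead of reading the TEXT and NEXT environment variables, and it runs two in-process test servers in place of Kubernetes services. The names `echoHandler` and `callChain` and the messages "hello" and "world" are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// echoHandler writes text and, when next is non-empty, appends the response
// body returned by http://<next>/.
func echoHandler(text, next string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if next == "" {
			fmt.Fprint(w, text)
			return
		}
		resp, err := http.Get("http://" + next + "/")
		if err != nil {
			http.Error(w, "could not reach "+next, http.StatusBadGateway)
			return
		}
		defer resp.Body.Close()
		body, err := io.ReadAll(resp.Body)
		if err != nil {
			http.Error(w, "could not read response from "+next, http.StatusBadGateway)
			return
		}
		fmt.Fprintf(w, "%s %s", text, body)
	}
}

// callChain starts two local servers, points the first at the second, and
// returns what a client sees when it calls the first one.
func callChain(first, second string) (string, error) {
	last := httptest.NewServer(echoHandler(second, ""))
	defer last.Close()

	// The handler expects host:port, so strip the scheme from the test URL.
	nextHost := strings.TrimPrefix(last.URL, "http://")
	head := httptest.NewServer(echoHandler(first, nextHost))
	defer head.Close()

	resp, err := http.Get(head.URL)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	out, err := callChain("hello", "world")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out) // prints "hello world"
}
```

Each service contributes its own text and delegates the rest of the reply to the next one, which is what makes the chain convenient for observing routing, retries, and tracing between services.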

main.go

package main

import (

"github.com/dgrijalva/jwt-go"

"github.com/lestrrat-go/jwx/jwk"

"crypto/rsa"

"encoding/json"

"fmt"

"io/ioutil"

"log"

"net/http"

"os"

"strconv"

"strings"

"time"

)

type Login struct {

Login string `json:"login" binding:"required"`

}

type Jwks struct {

Keys []JwksKeys `json:"keys"`

}

type JwksKeys struct {

E string `json:"e"`

Kid string `json:"kid"`

Kty string `json:"kty"`

N string `json:"n"`

}

var (

publicKey *rsa.PublicKey

signKey *rsa.PrivateKey

)

func main() {

http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {

latency := os.Getenv("LATENCY")

if latency != "" {

i, err := strconv.ParseInt(latency, 10, 64)

if err != nil {

fmt.Fprintf(w, "Env LATENCY needs to be a number")

return

}

time.Sleep(time.Duration(i) * time.Second)

}

text := os.Getenv("TEXT")

if text == "" {

fmt.Fprintf(w, "set env TEXT to display something")

return

}

next := os.Getenv("NEXT")

if next == "" {

fmt.Fprintf(w, "%s", text)

} else {

// initialize client

client := &http.Client{}

req, _ := http.NewRequest("GET", "http://"+next+"/", nil)

// copy the incoming headers named in otHeaders onto the outgoing request

for k := range r.Header {

for _, otHeader := range otHeaders {

if strings.EqualFold(otHeader, k) {

req.Header.Set(k, r.Header.Get(k))

}

}

}

// do request

resp, err := client.Do(req)

if err != nil {

fmt.Fprintf(w, "Couldn't connect to http://%s/", next)

fmt.Printf("Error: %s", err)

return

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

fmt.Fprintf(w, "Couldn't read response from http://%s/", next)

return

}

fmt.Fprintf(w, "%s %s\n", text, body)

}

})

// load keys for JWT

initKeys()

// handle login

http.HandleFunc("/login", func(w http.ResponseWriter, r *http.Request) {

// read body

decoder := json.NewDecoder(r.Body)

var l Login

err := decoder.Decode(&l)

if err != nil {

fmt.Fprintf(w, "No login supplied")

return

}

// generate jwt token

token := jwt.NewWithClaims(jwt.GetSigningMethod("RS256"), jwt.MapClaims{

"login": l.Login,

"groups": "users",

"iss": "http-echo@http-echo.kubernetes.newtech.academy",

"sub": "http-echo@http-echo.kubernetes.newtech.academy",

"exp": time.Now().Add(time.Hour * 72).Unix(),

"iat": time.Now().Unix(),

})

token.Header["kid"] = "mykey"

tokenString, err := token.SignedString(signKey)

if err != nil {

fmt.Fprintf(w, "Could not sign jwt token")

return

}

fmt.Fprintf(w, "JWT token: %s \n", tokenString)

})

// jwks.json

http.HandleFunc("/.well-known/jwks.json", func(w http.ResponseWriter, r *http.Request) {

key, err := jwk.New(publicKey)

if err != nil {

log.Printf("failed to create JWK: %s", err)

return

}

key.Set("kid", "mykey")

jsonbuf, err := json.MarshalIndent(key, "", " ")

if err != nil {

log.Printf("failed to generate JSON: %s", err)

return

}

var k JwksKeys

if err := json.Unmarshal(jsonbuf, &k); err != nil {

log.Printf("failed to unmarshal JSON: %s", err)

return

}

j := &Jwks{Keys: []JwksKeys{k}}

jsonbuf2, err := json.Marshal(j)

if err != nil {

log.Printf("failed to generate JSON: %s", err)

return

}

fmt.Fprintf(w, "%s", jsonbuf2)

})

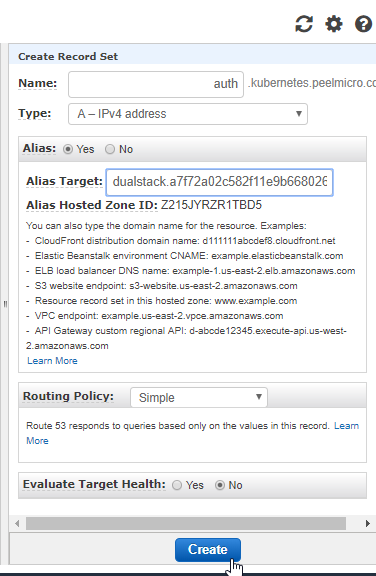

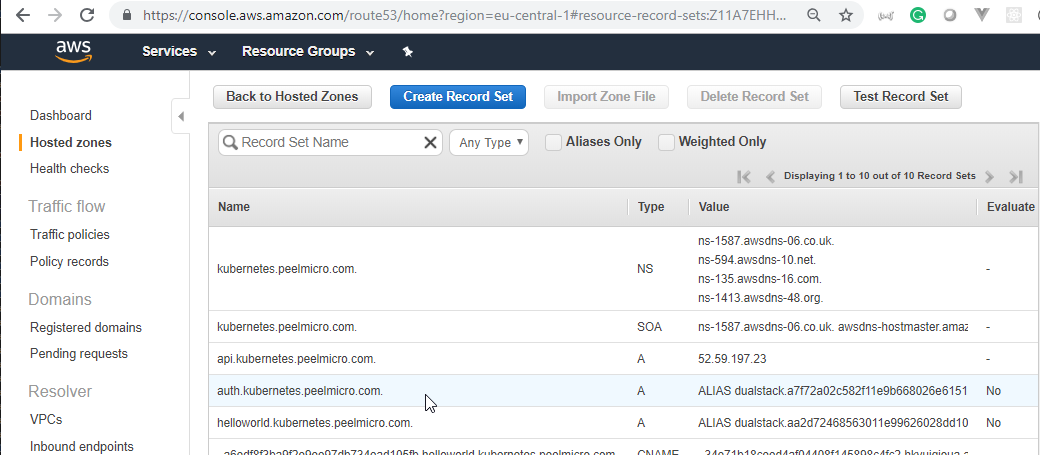

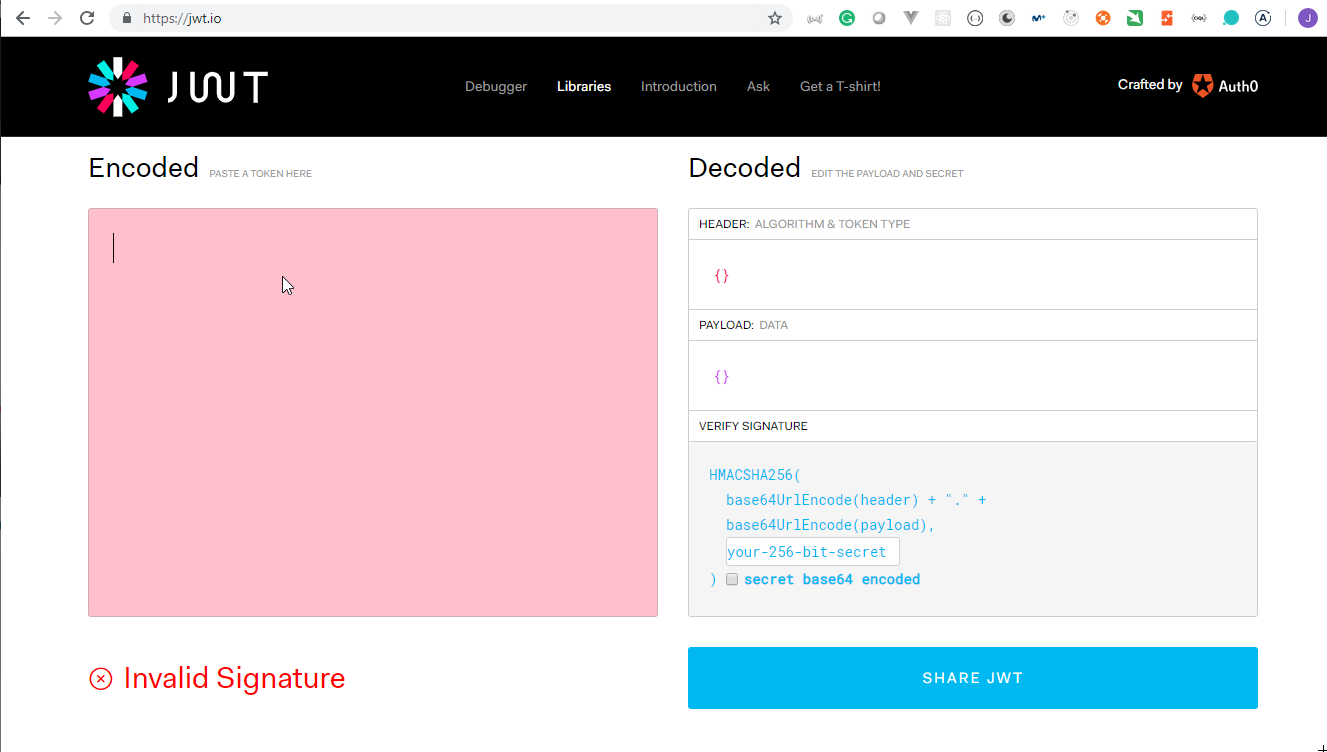

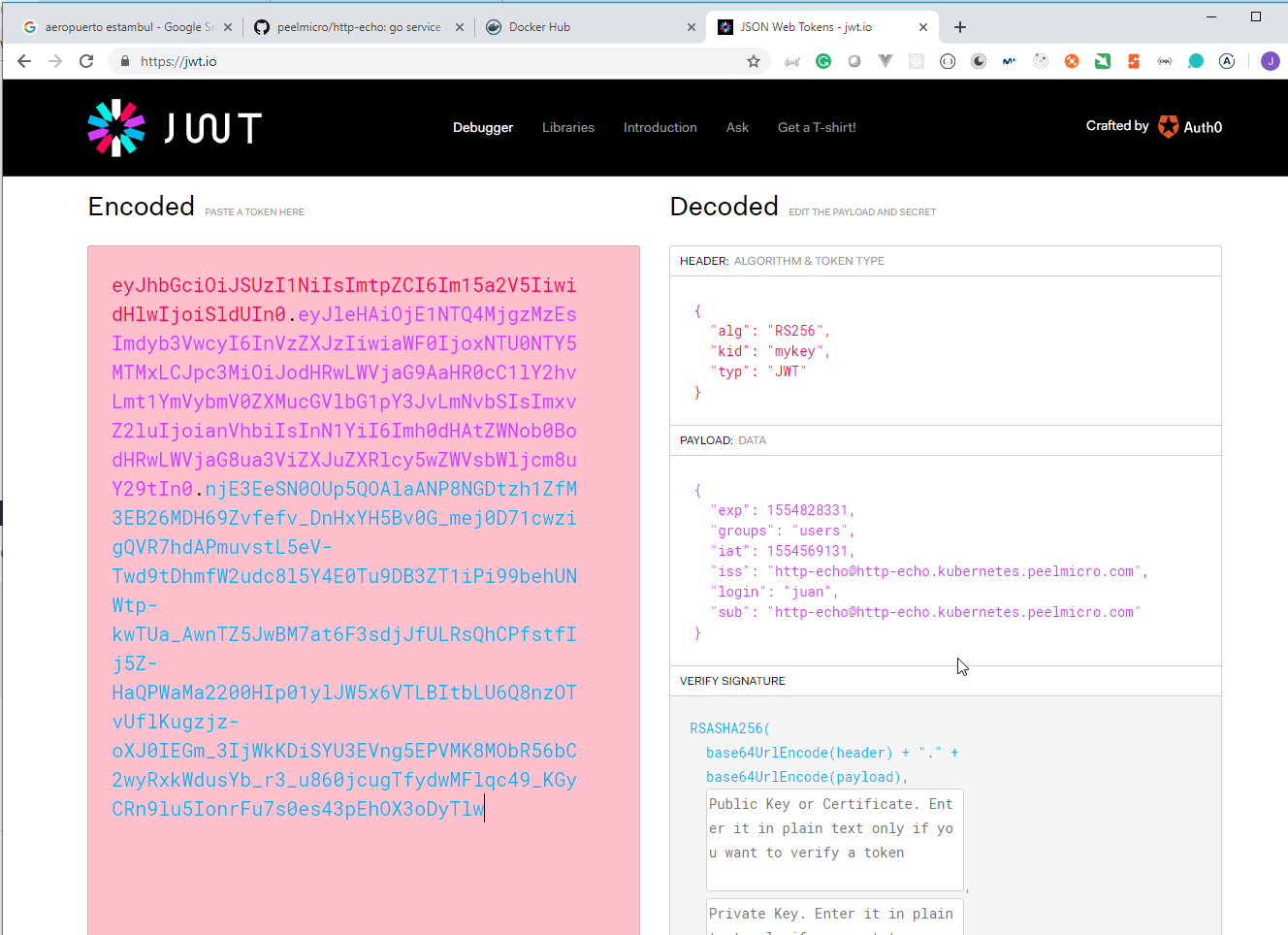

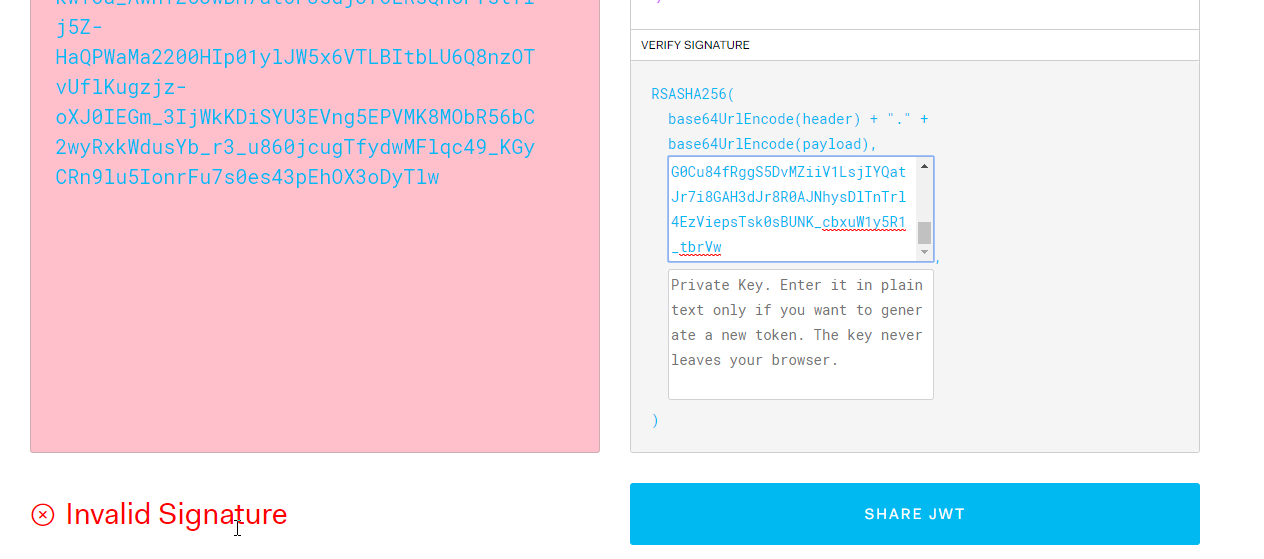

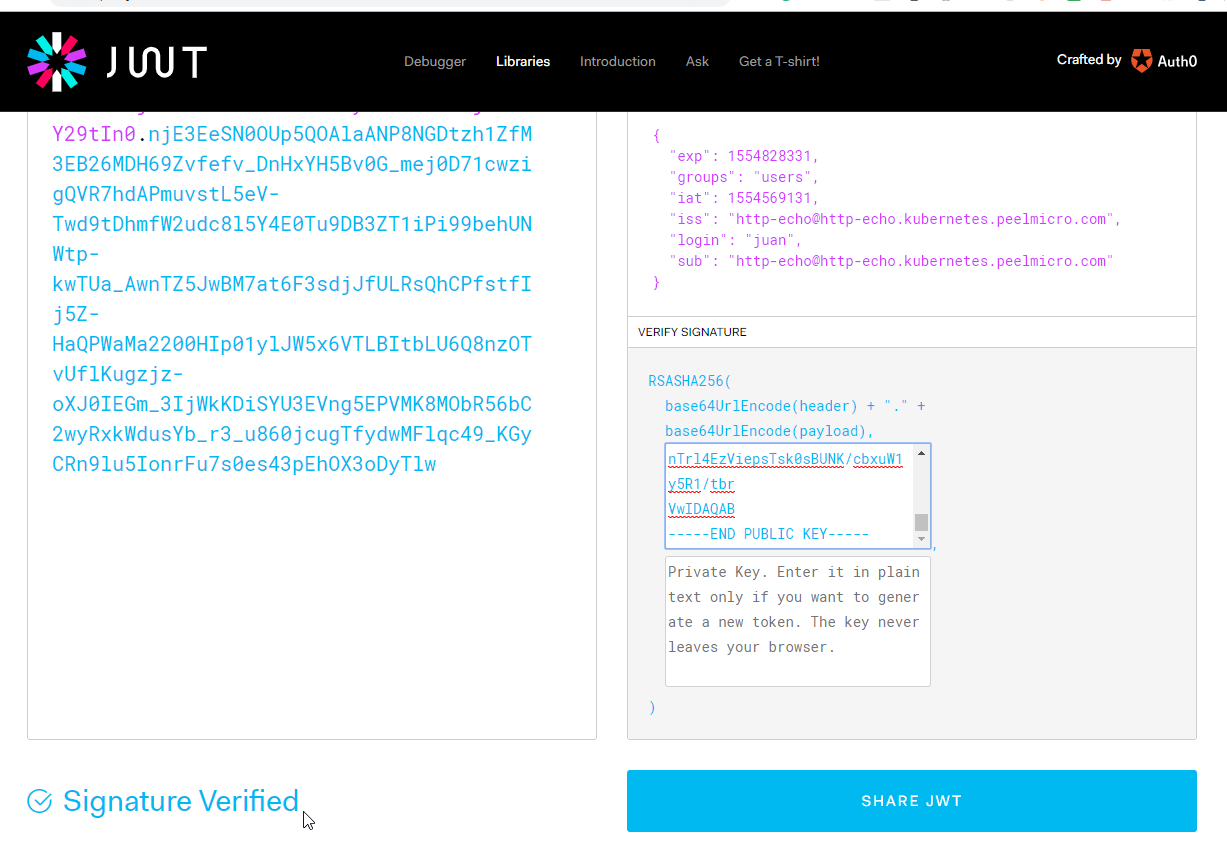

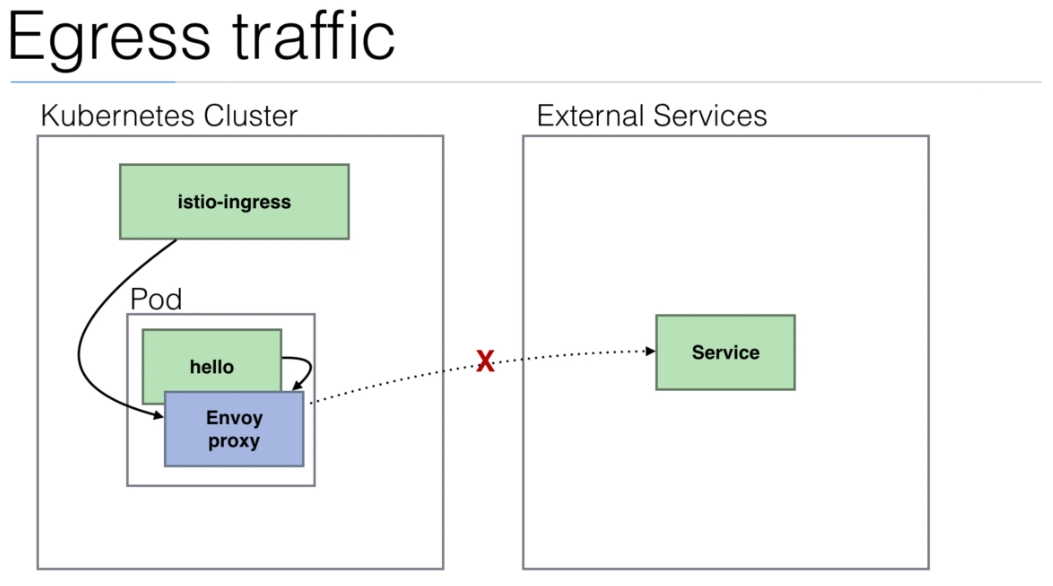

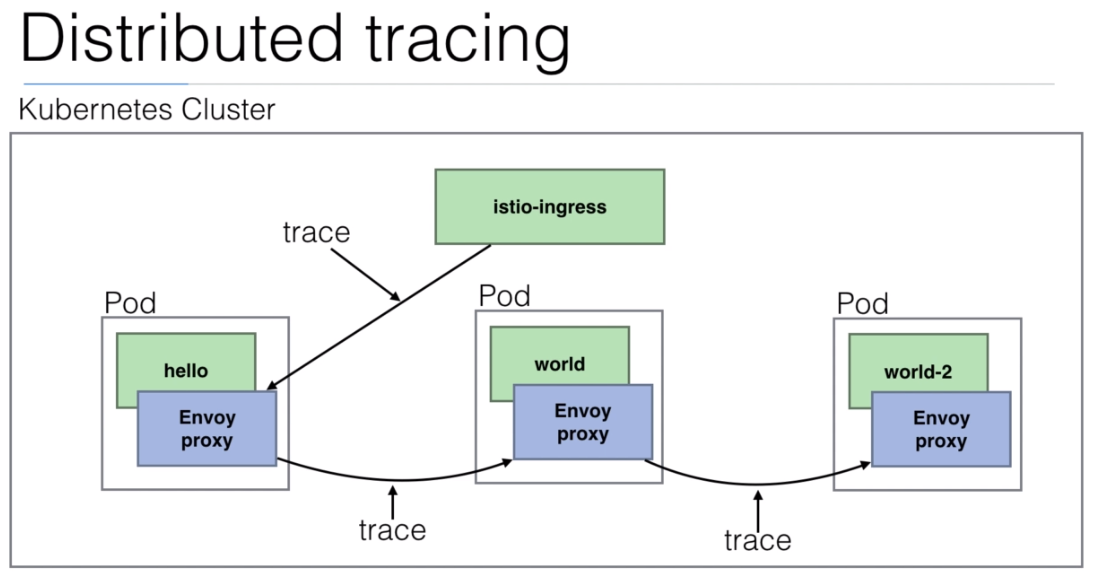

// start server