Learn DevOps: The Complete Kubernetes Course (Part 2)

GitHub Repositories

- learn-devops-the-complete-kubernetes-course.

- on-prem-or-cloud-agnostic-kubernetes.

- kubernetes-course.

- http-echo.

The Udemy course Learn DevOps: The Complete Kubernetes Course teaches how Kubernetes runs and manages your containerized applications, and how to build, deploy, use, and maintain Kubernetes.

Other parts:

- Learn DevOps: The Complete Kubernetes Course (Part 1)

- Learn DevOps: The Complete Kubernetes Course (Part 3)

- Learn DevOps: The Complete Kubernetes Course (Part 4)

Table of contents

- What I've learned

- Section: 3. Advanced Topics

- 48. Service Discovery

- 49. Demo: Service Discovery

- 50. ConfigMap

- 51. Demo: ConfigMap

- 52. Ingress Controller

- 53. Demo: Ingress Controller

- 54. External DNS

- 55. Demo: External DNS

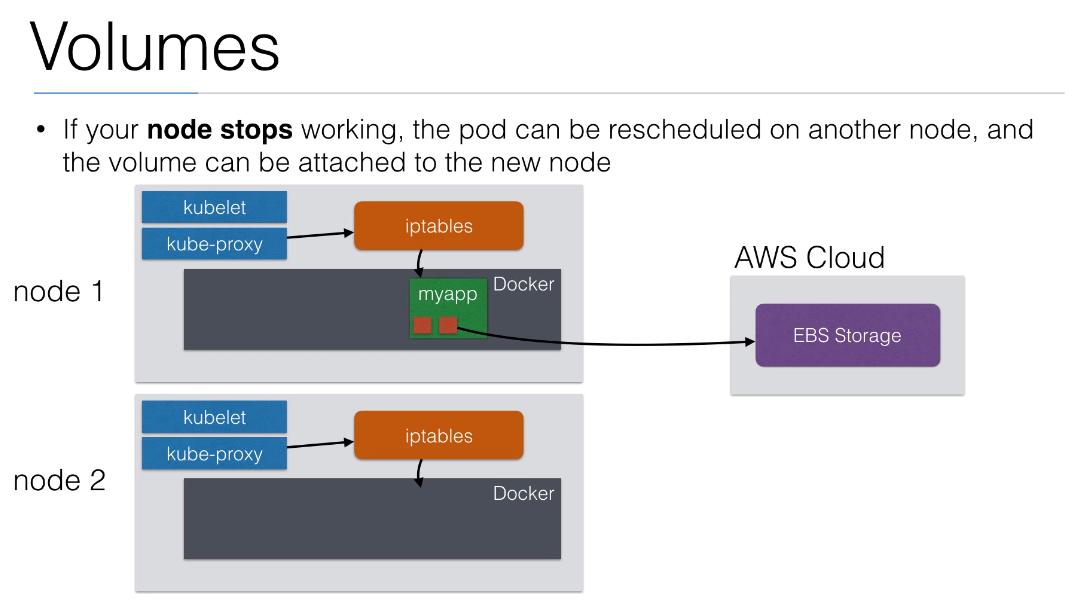

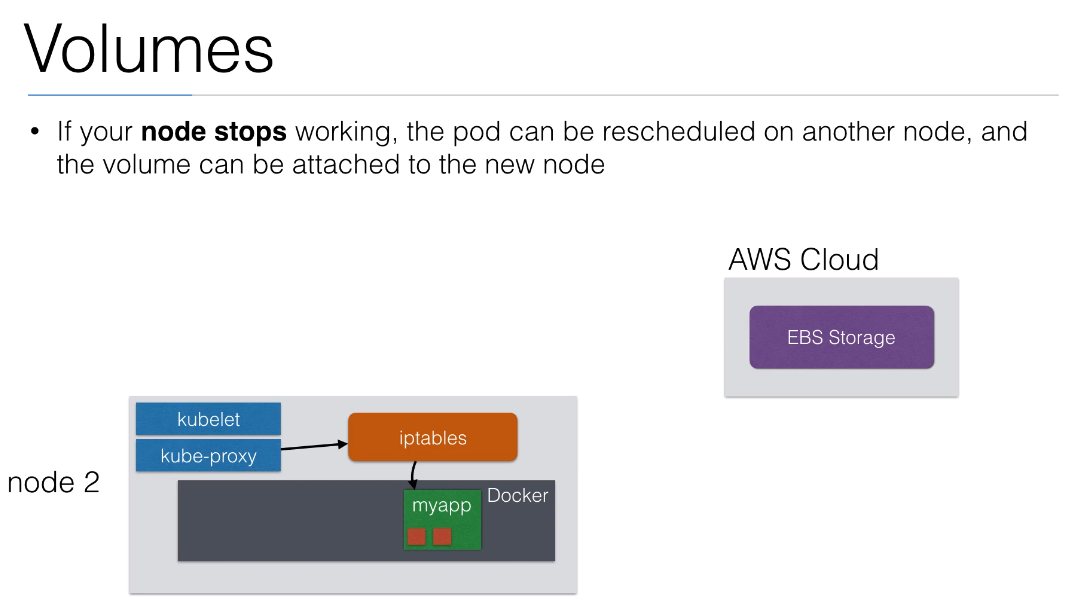

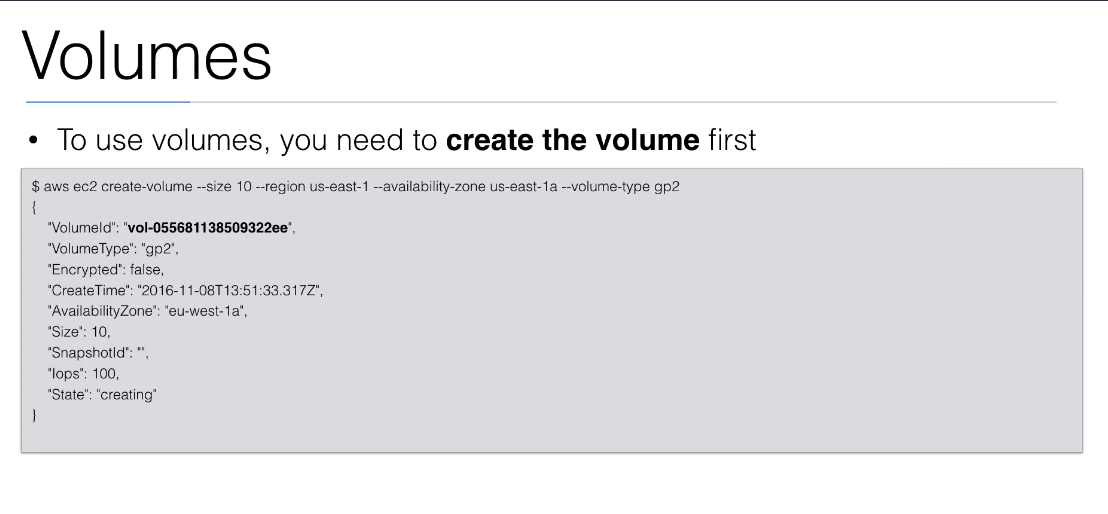

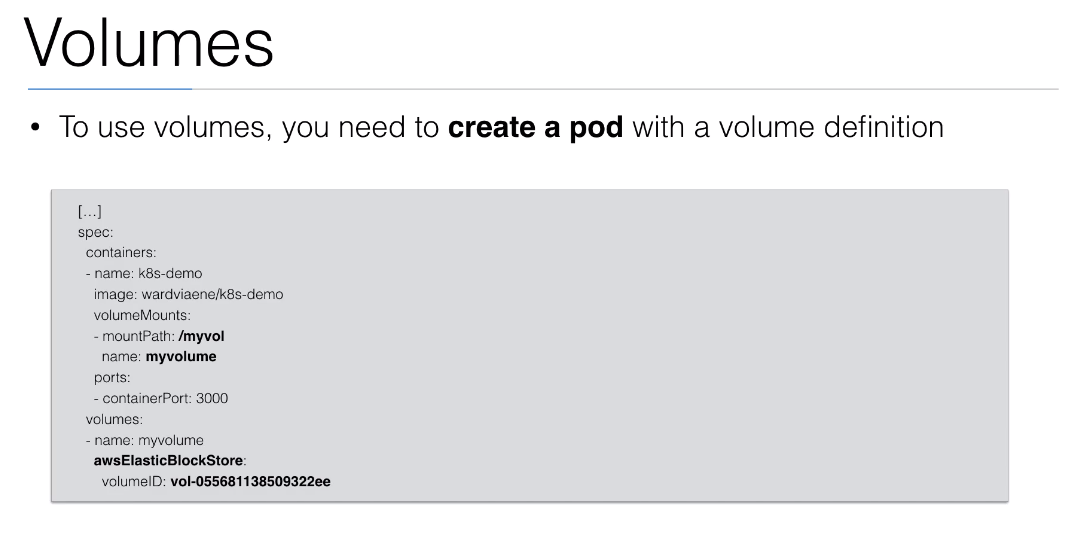

- 56. Volumes

- 57. Demo: Volumes

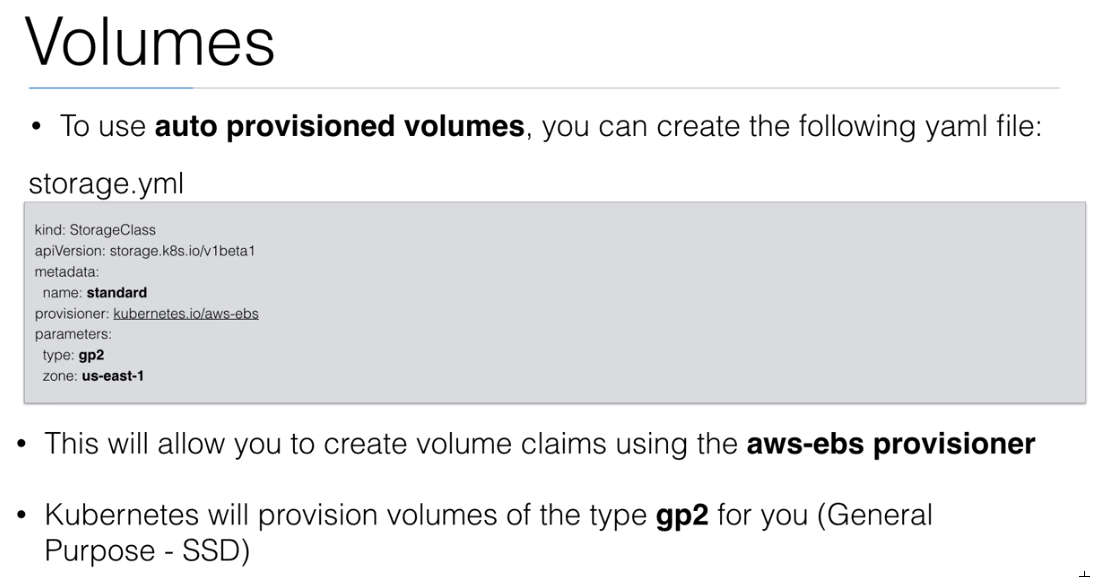

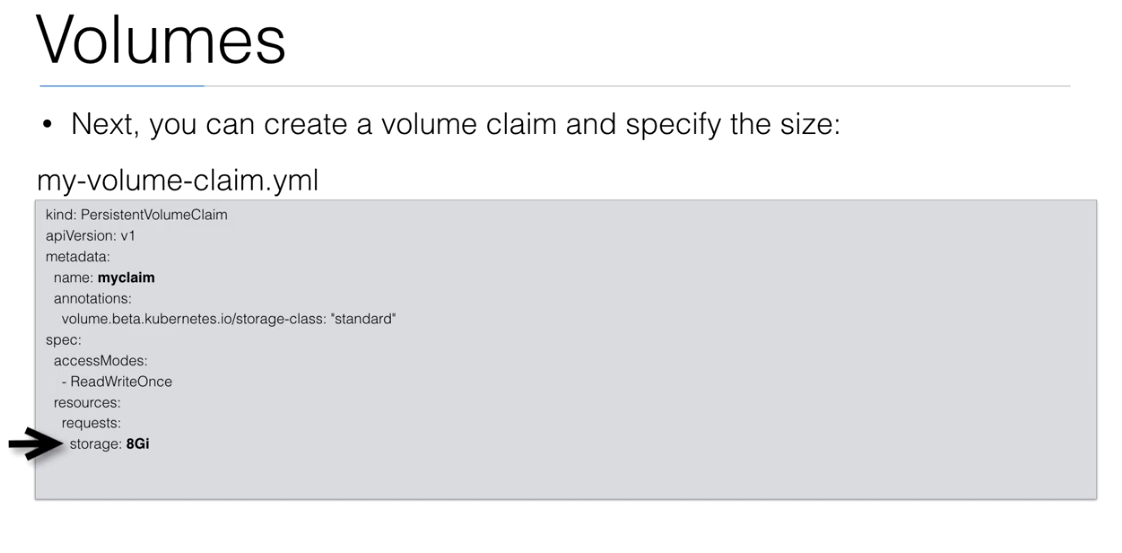

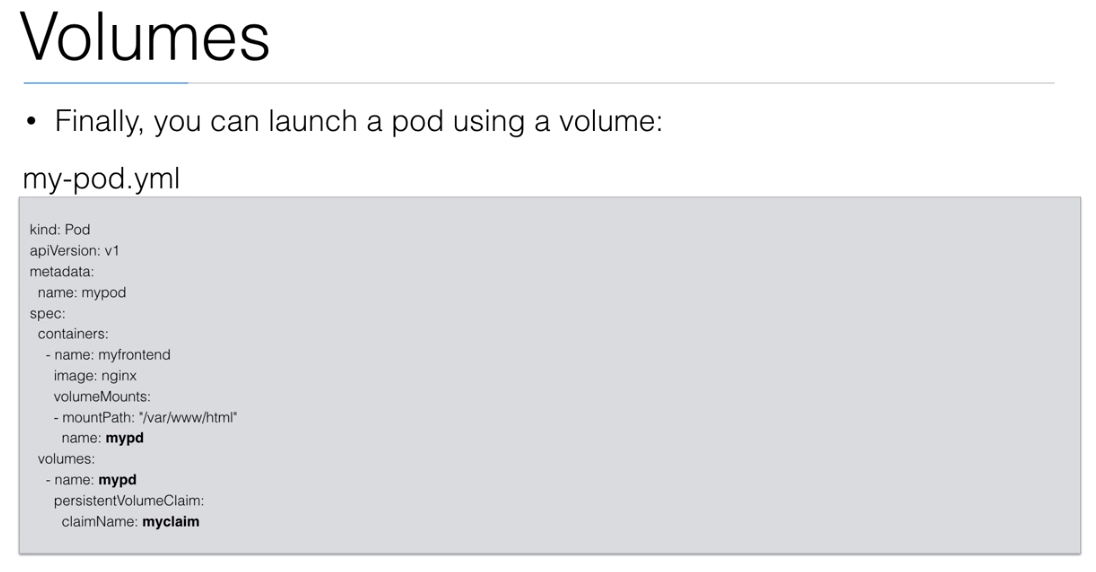

- 58. Volumes Autoprovisioning

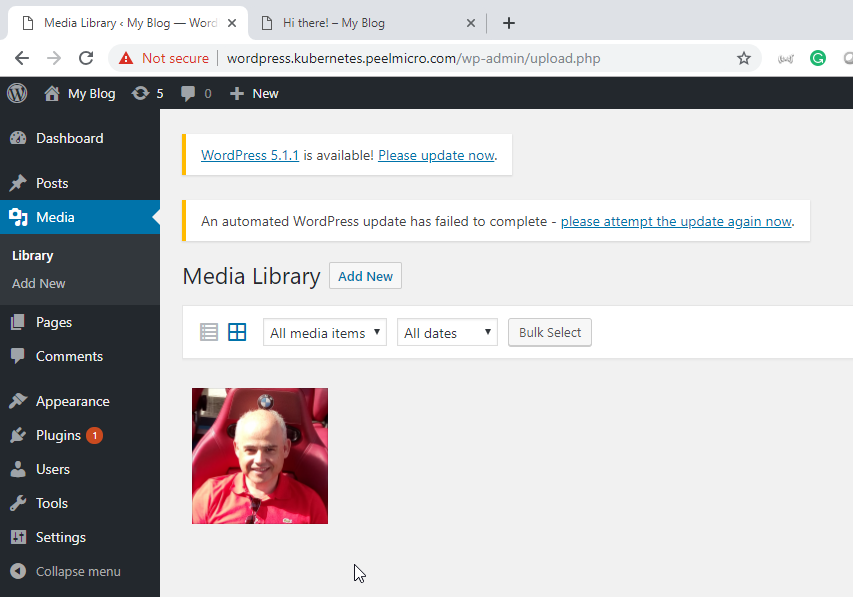

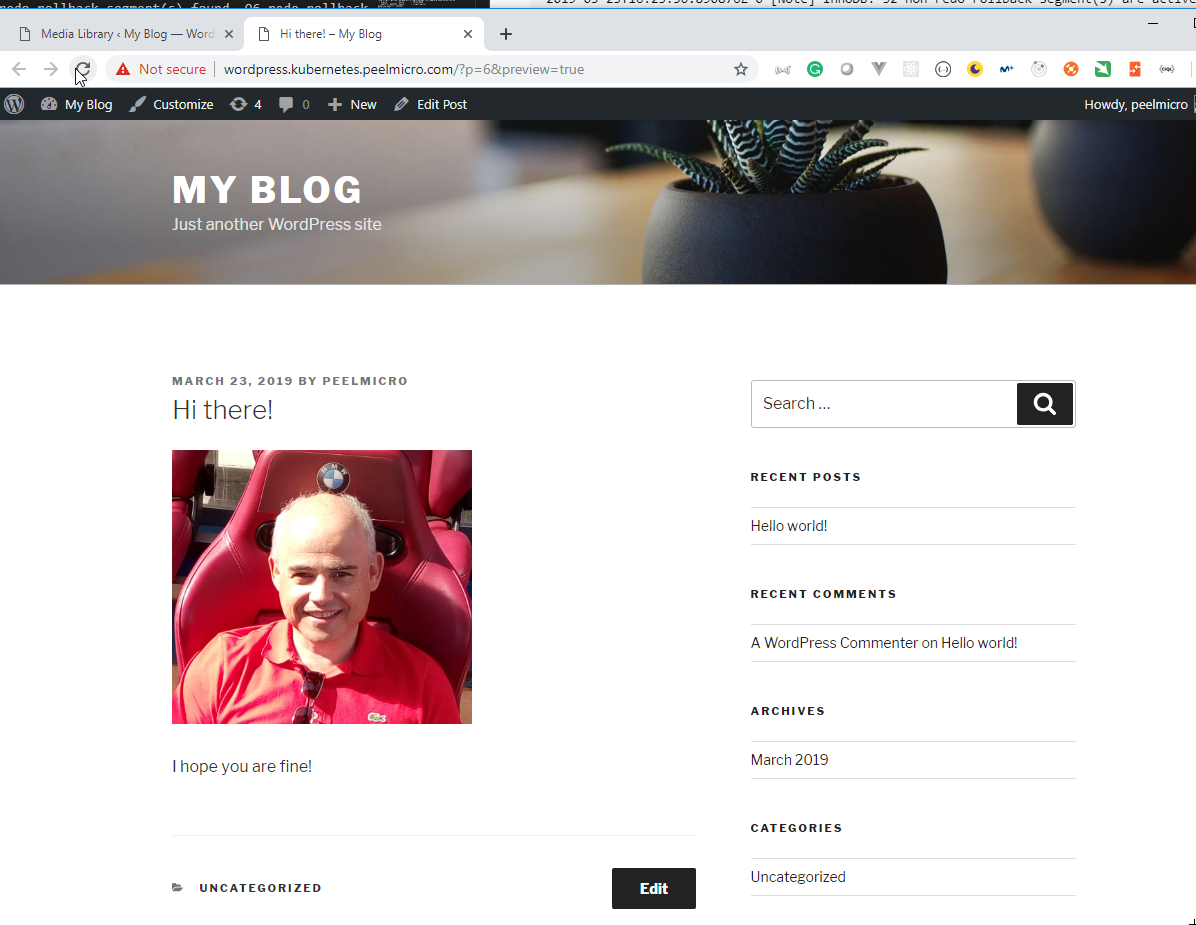

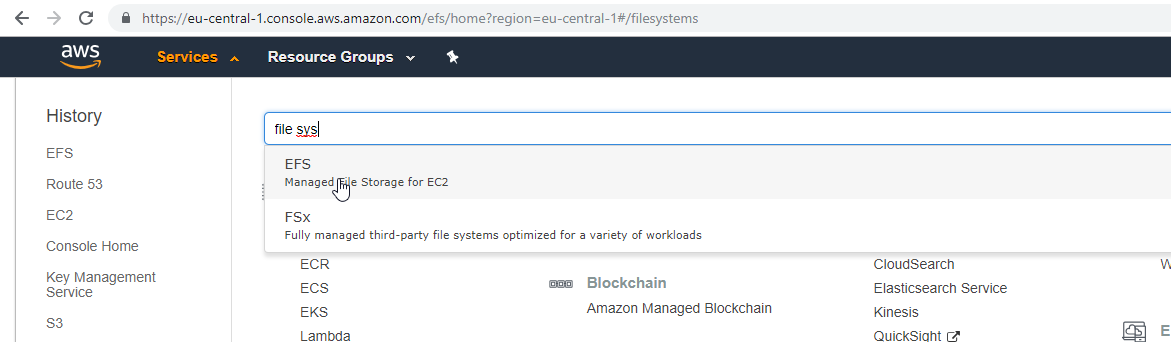

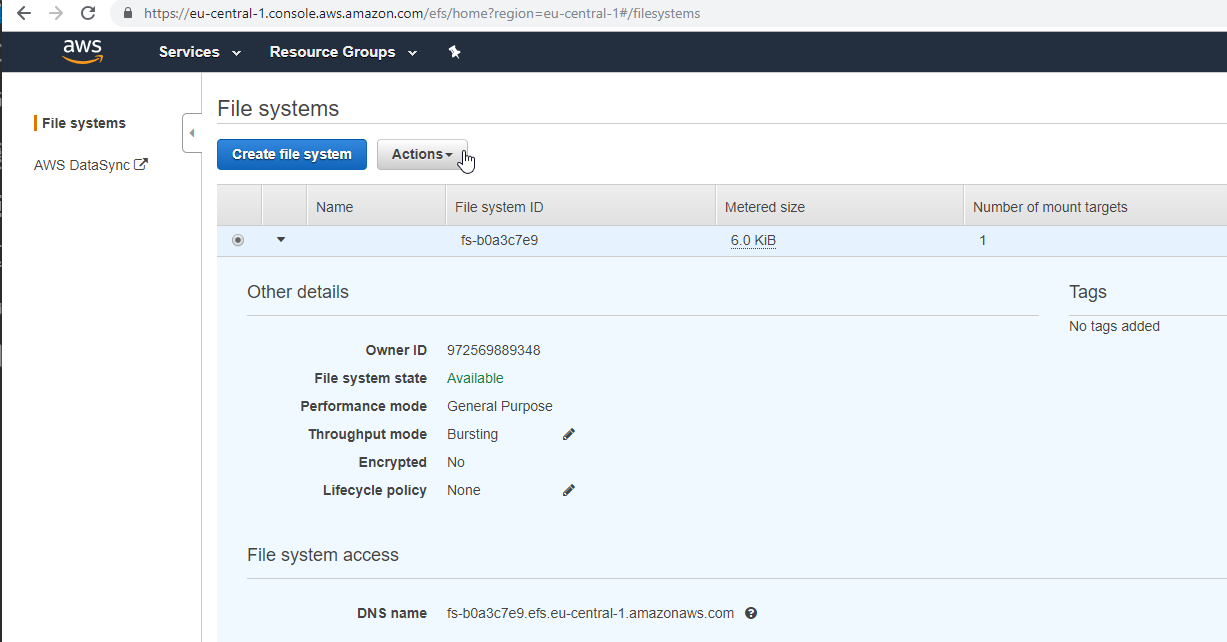

- 59. Demo: Wordpress With Volumes

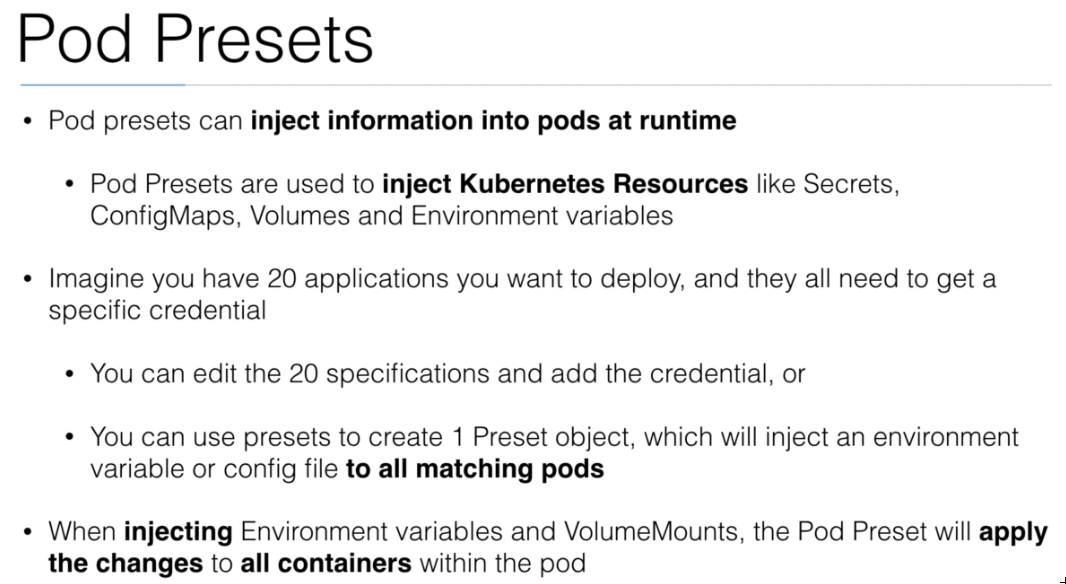

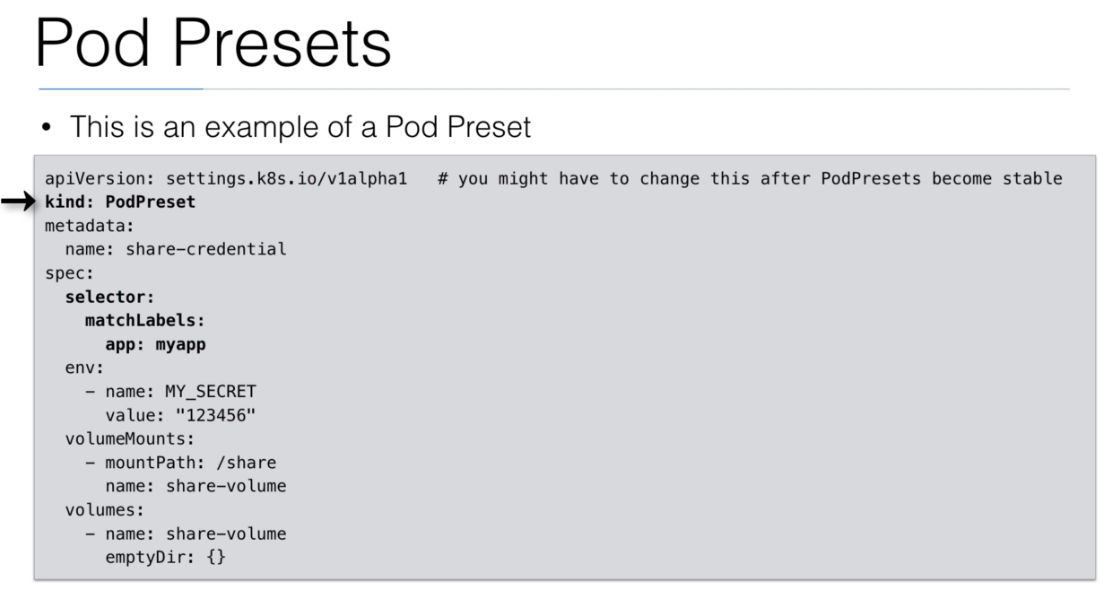

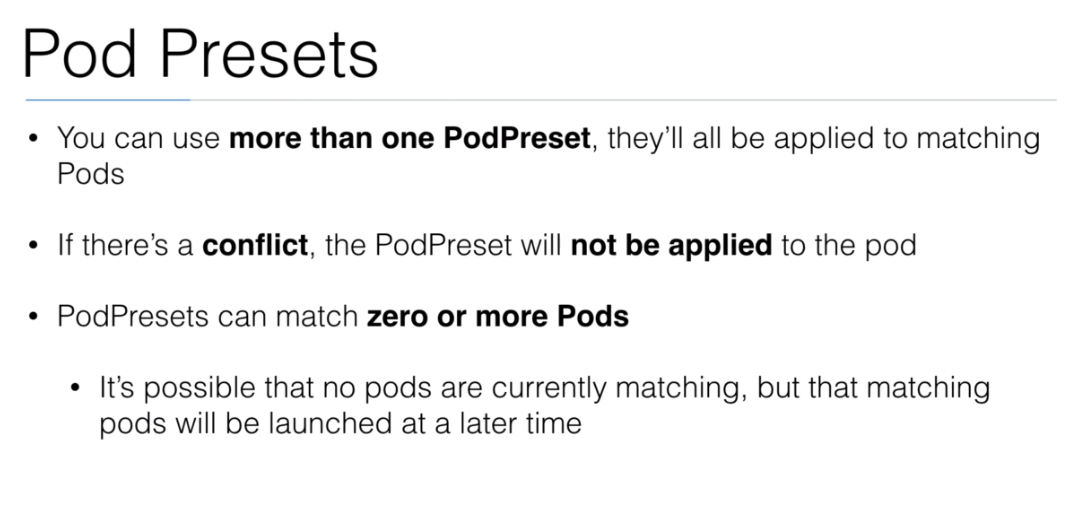

- 60. Pod Presets

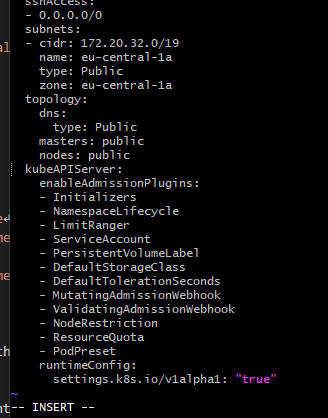

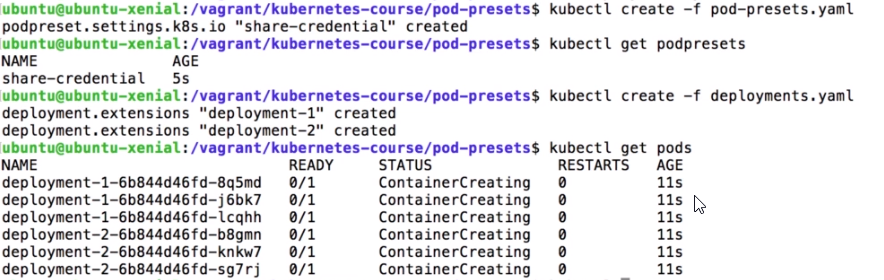

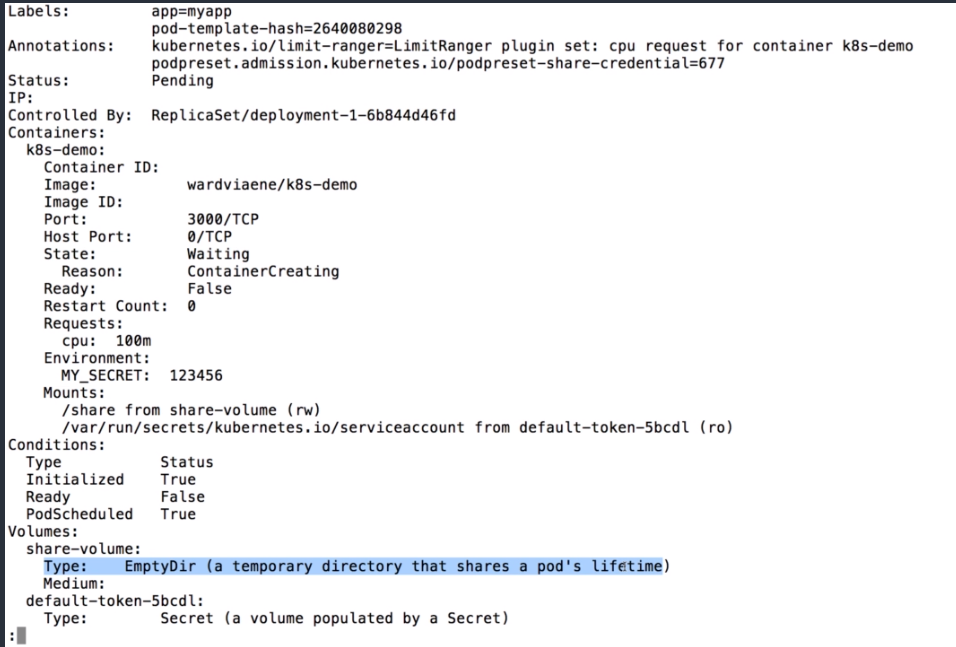

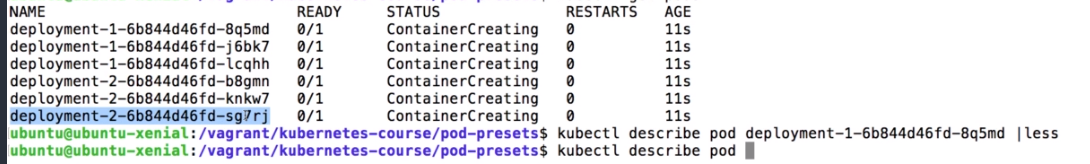

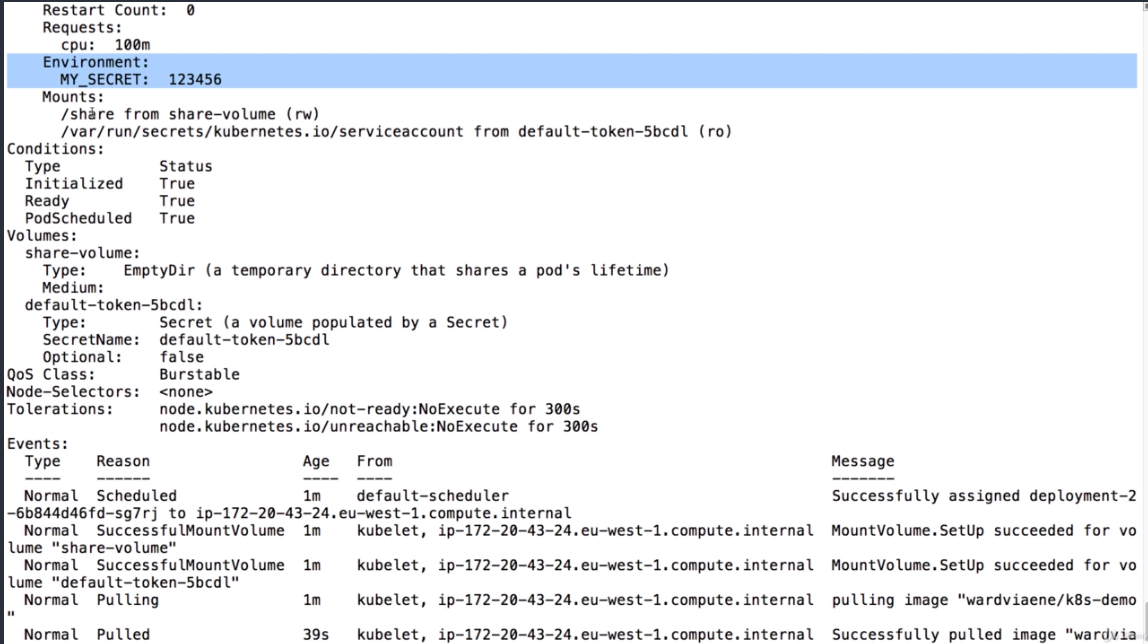

- 61. Demo: Pod Presets

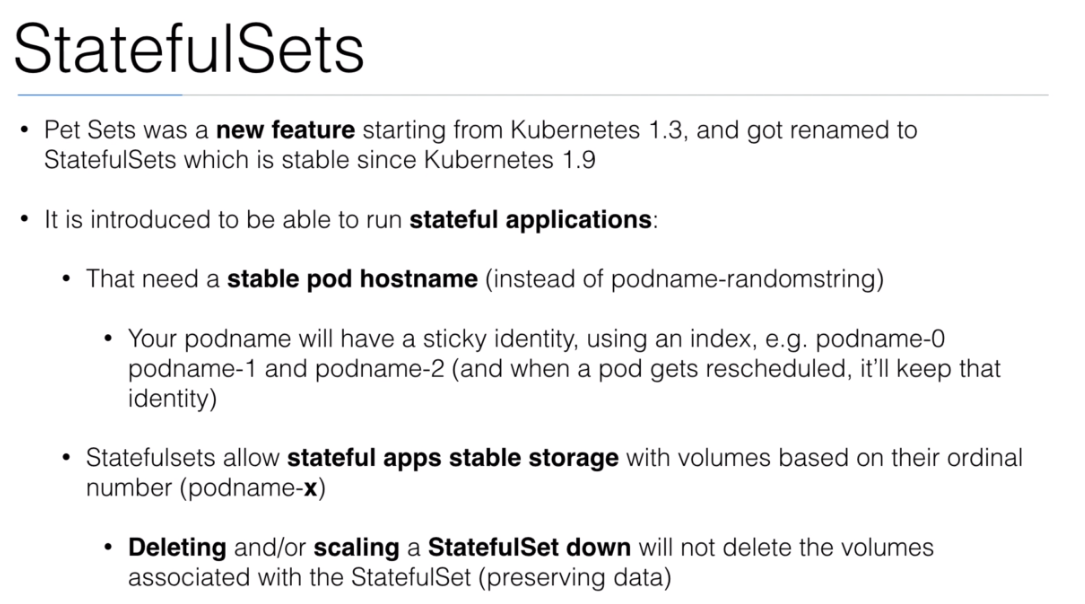

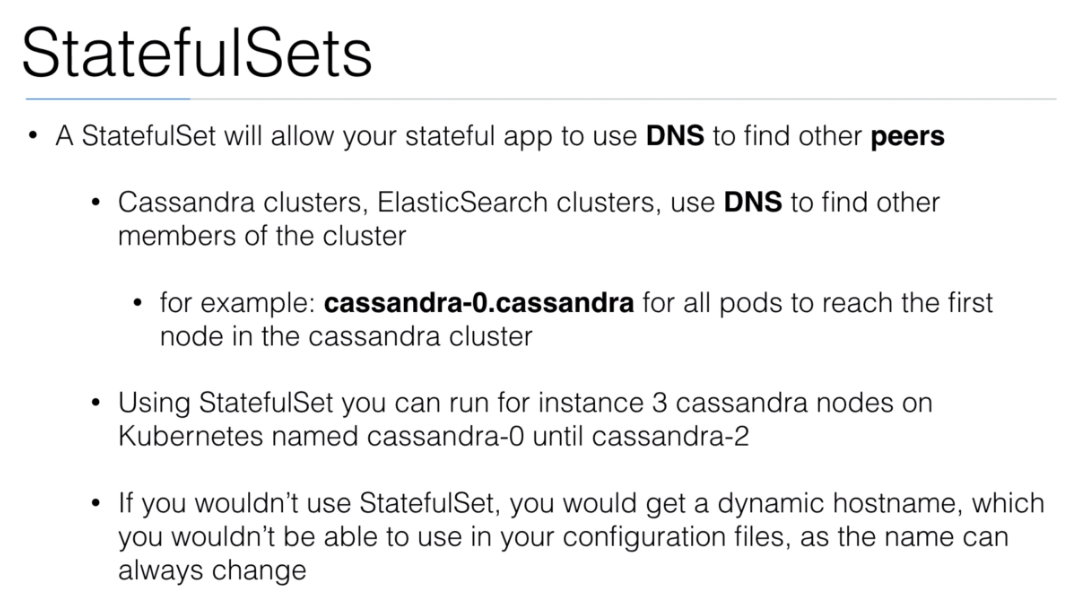

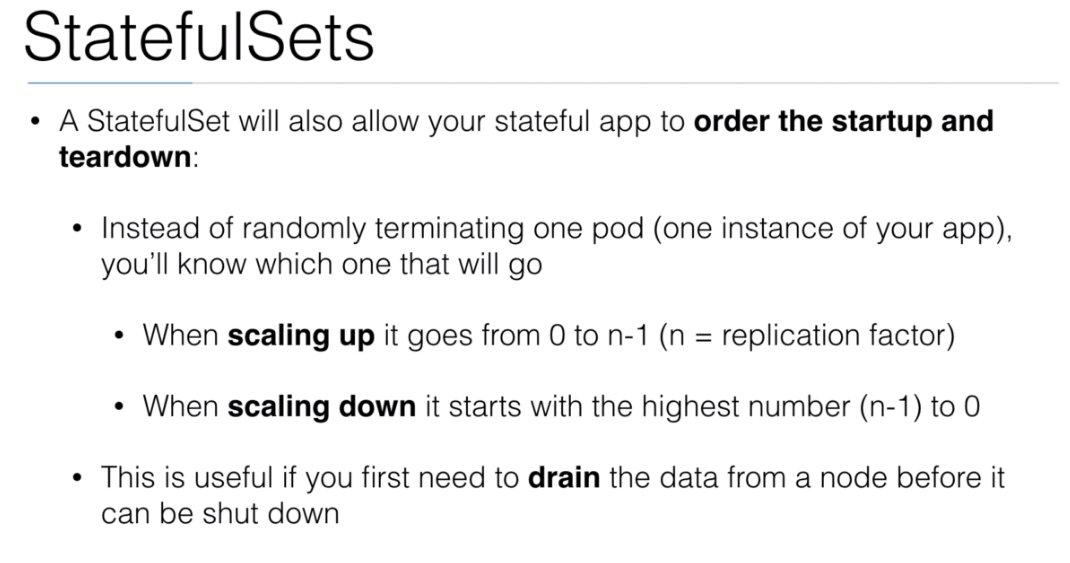

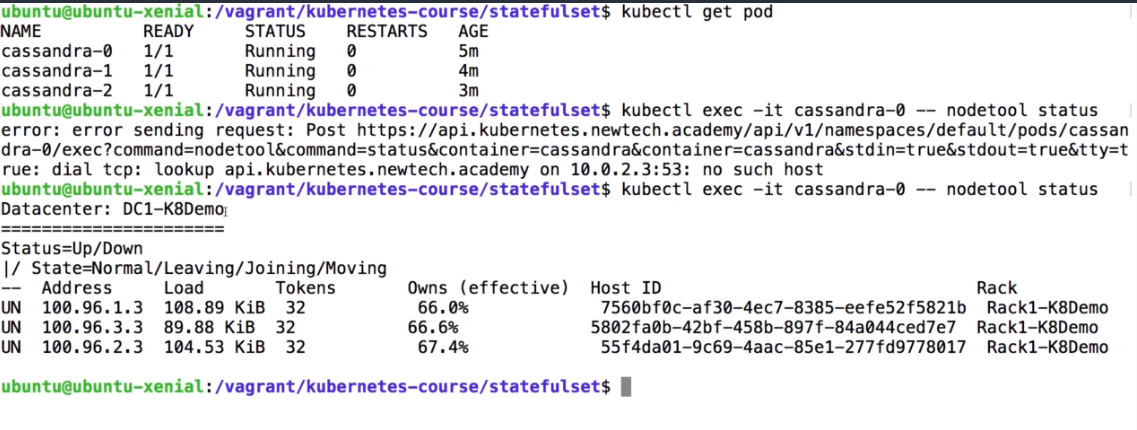

- 62. StatefulSets

- 63. Demo: StatefulSets

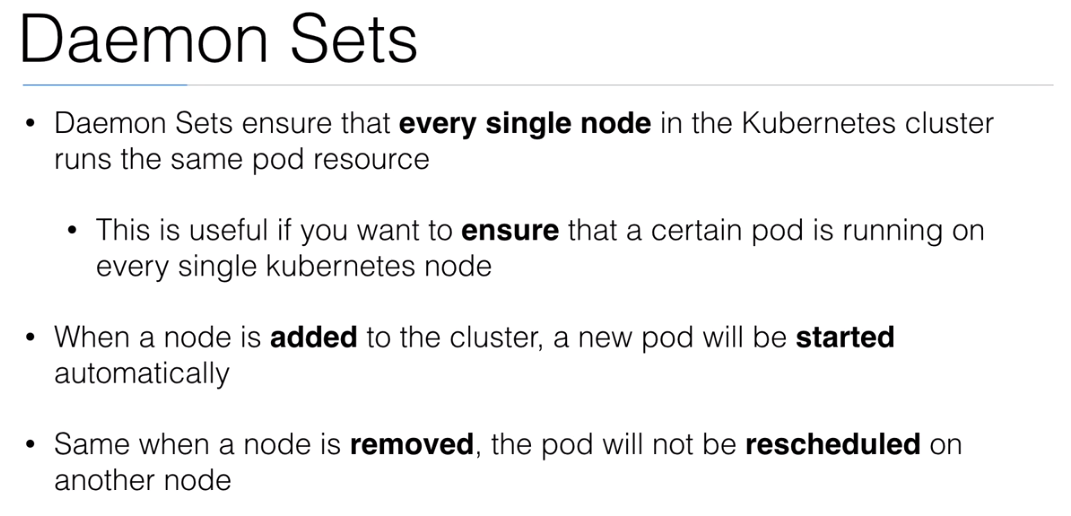

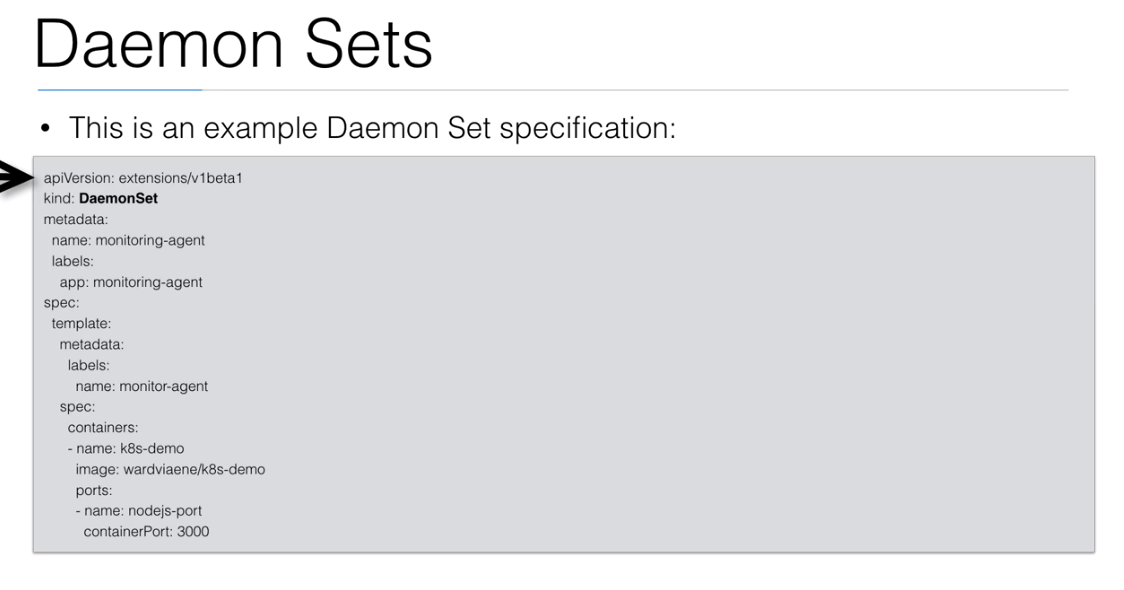

- 64. Daemon Sets

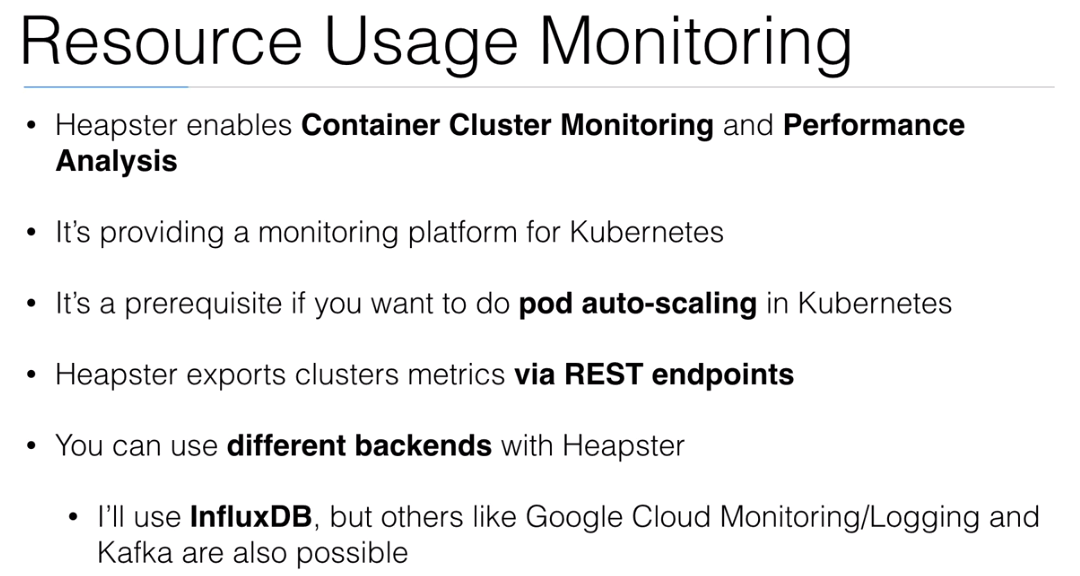

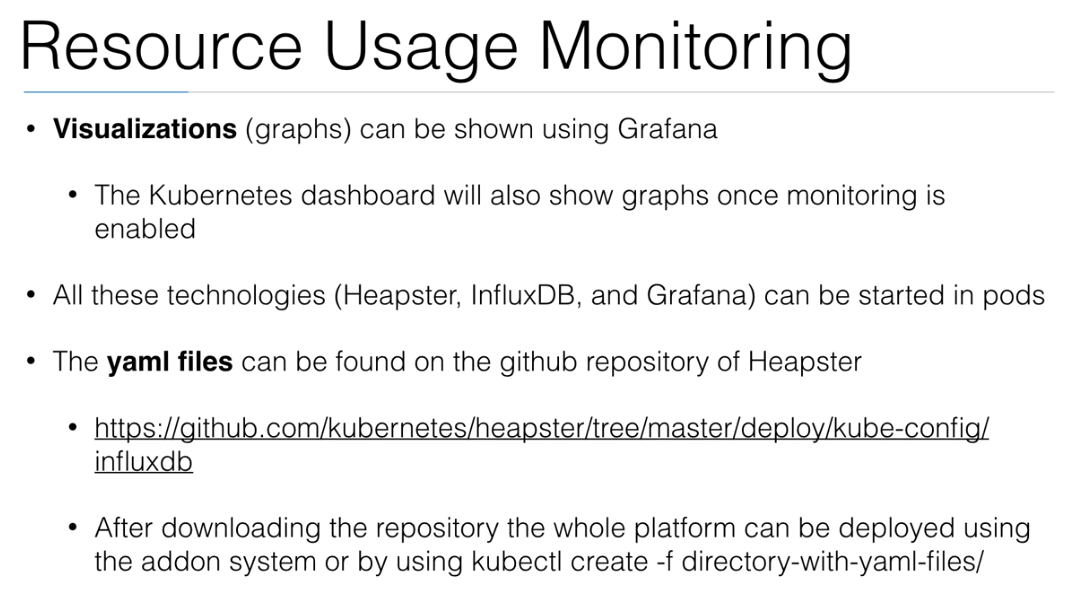

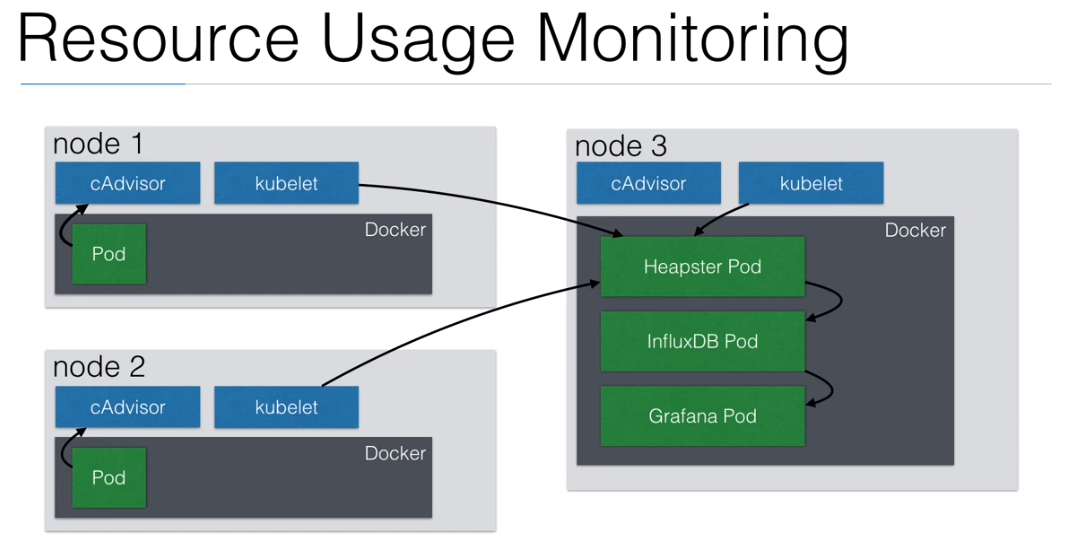

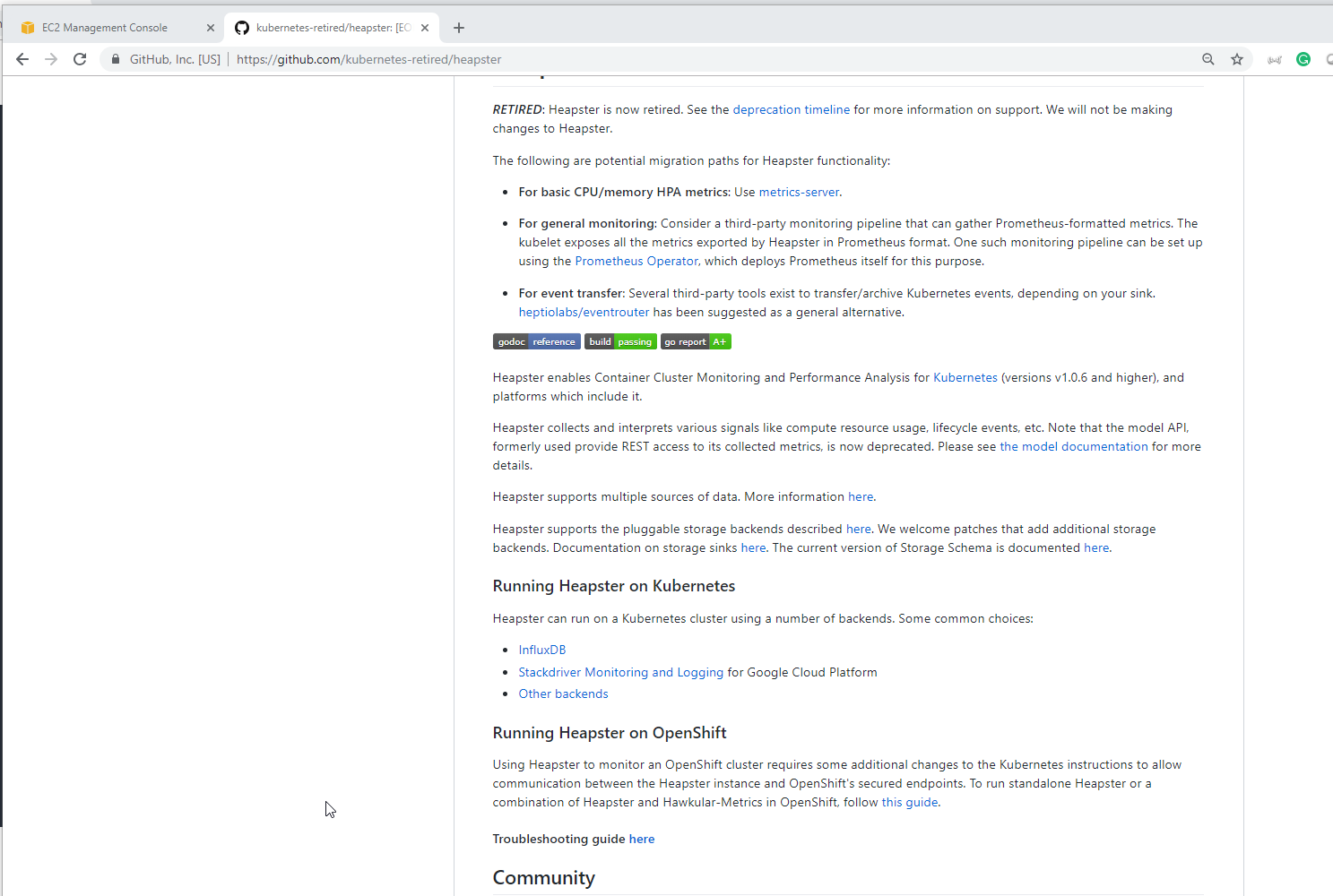

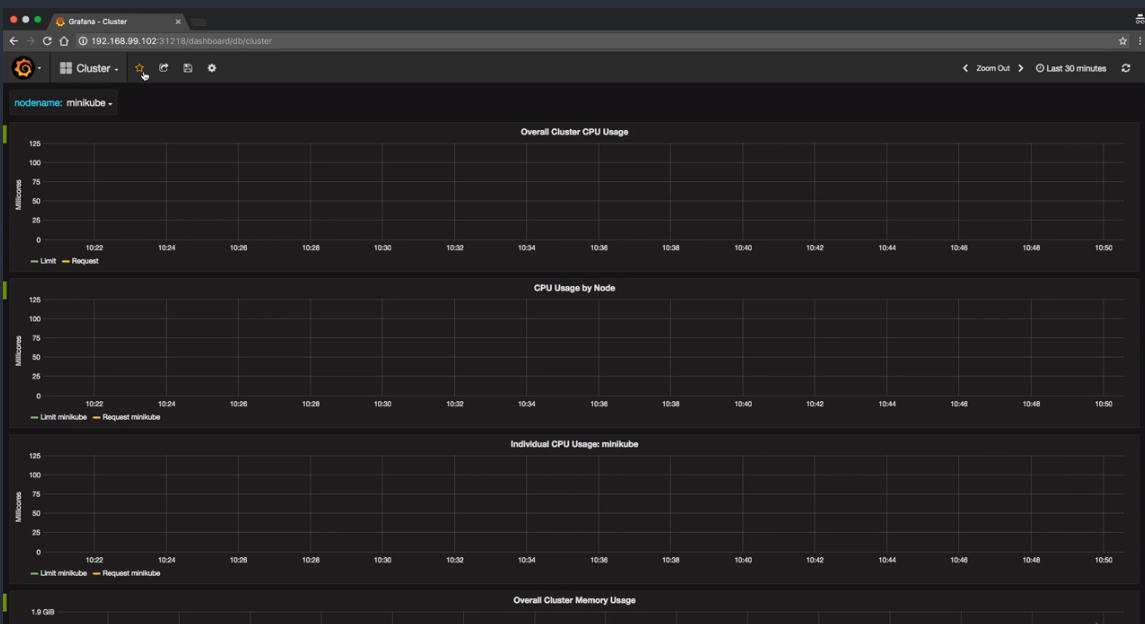

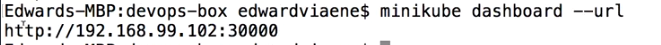

- 65. Resource Usage Monitoring

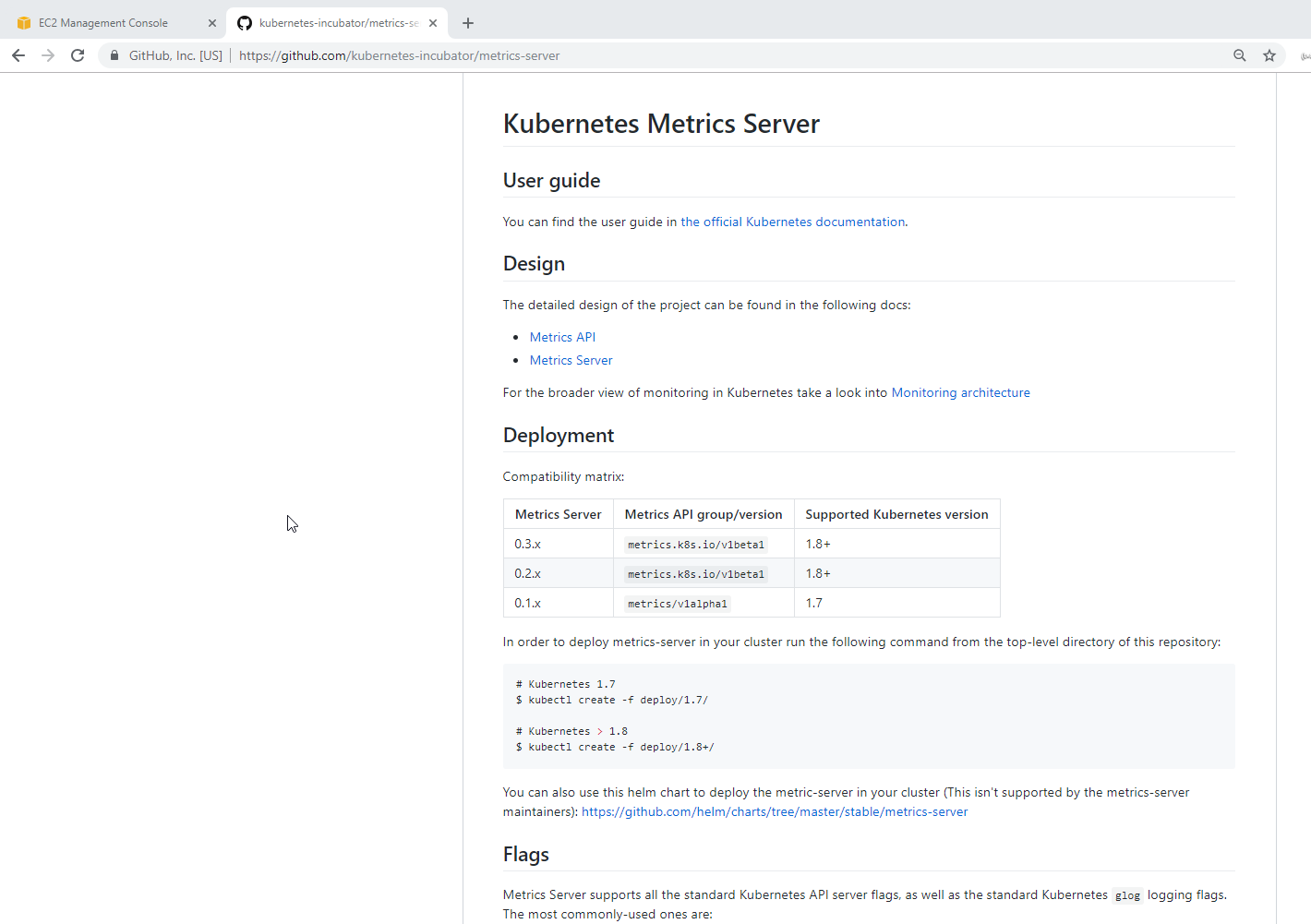

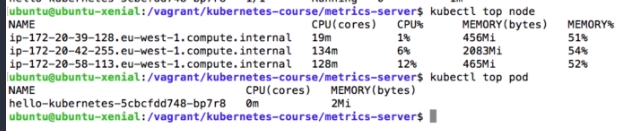

- 66. Demo: Resource Monitoring using Metrics Server

- 67. Demo: Resource Usage Monitoring

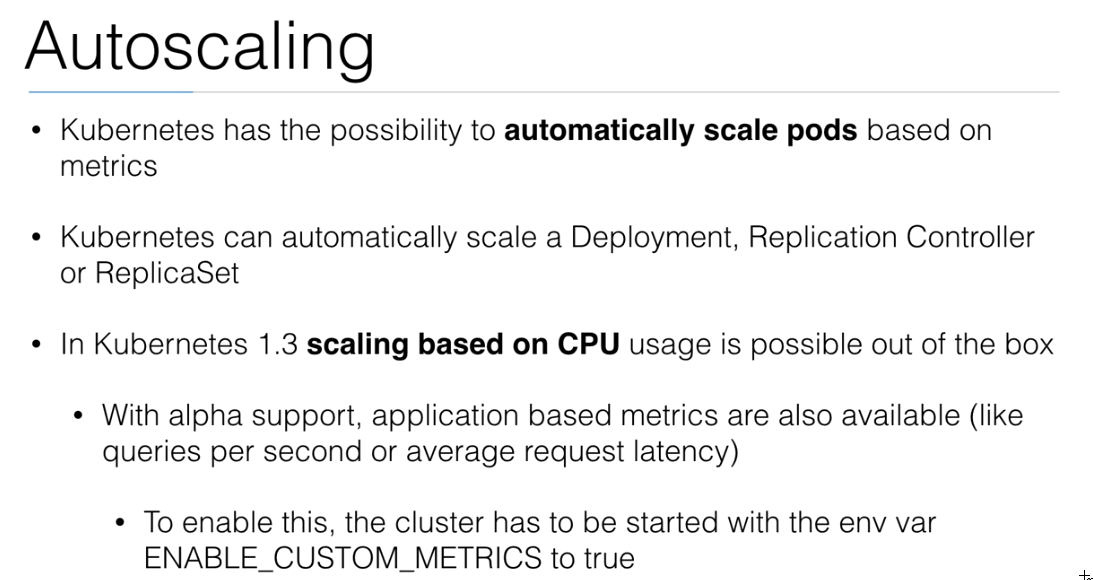

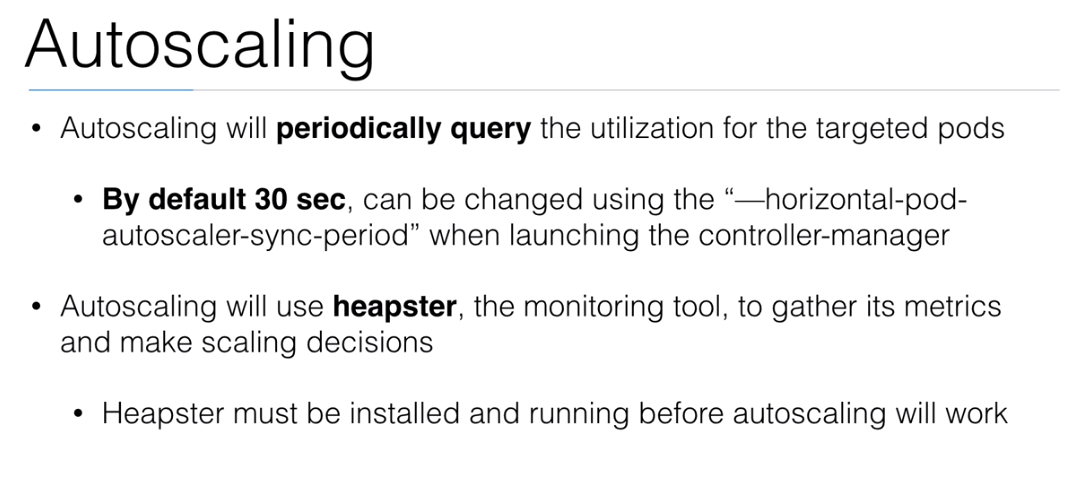

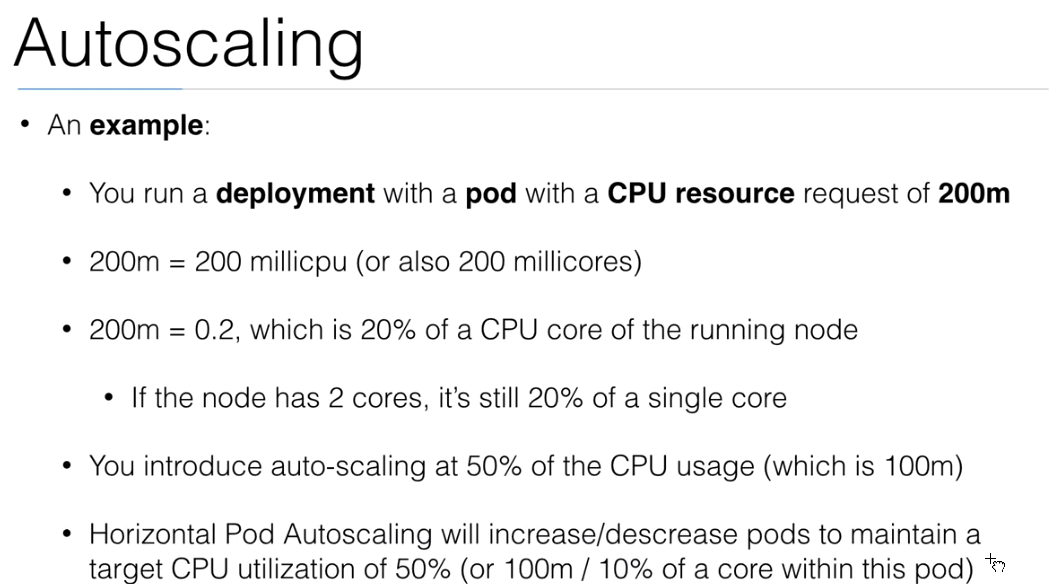

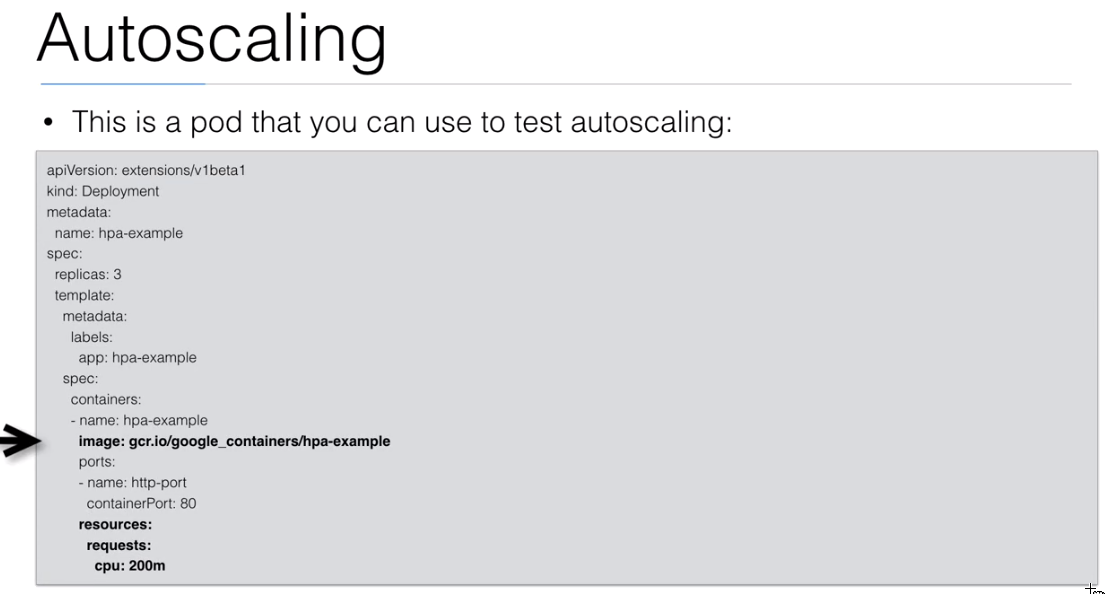

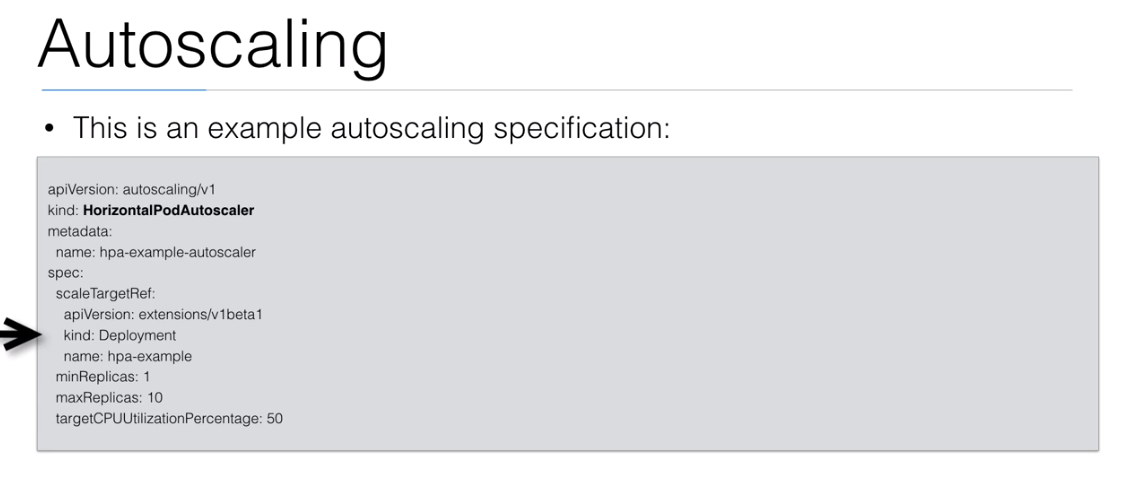

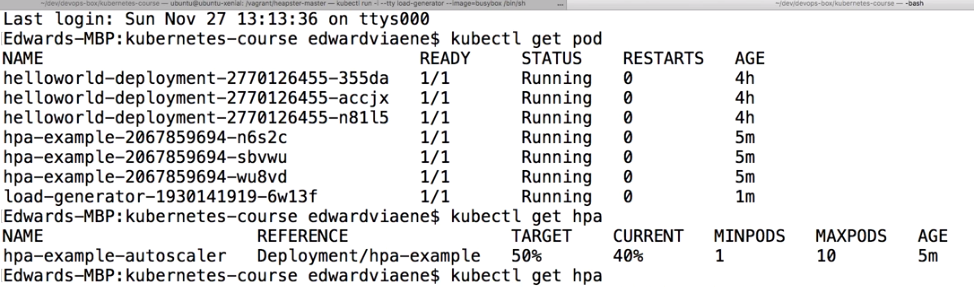

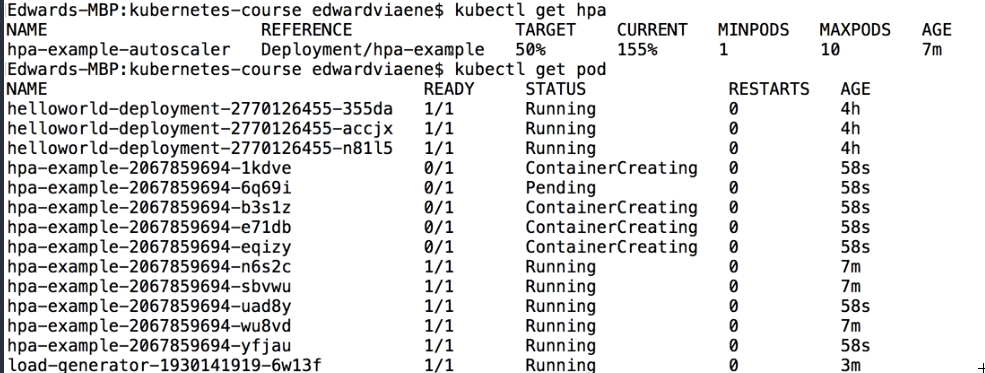

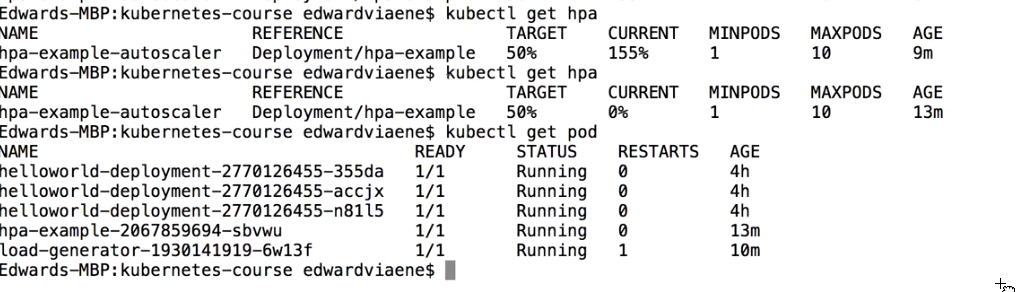

- 68. Autoscaling

- 69. Demo: Autoscaling

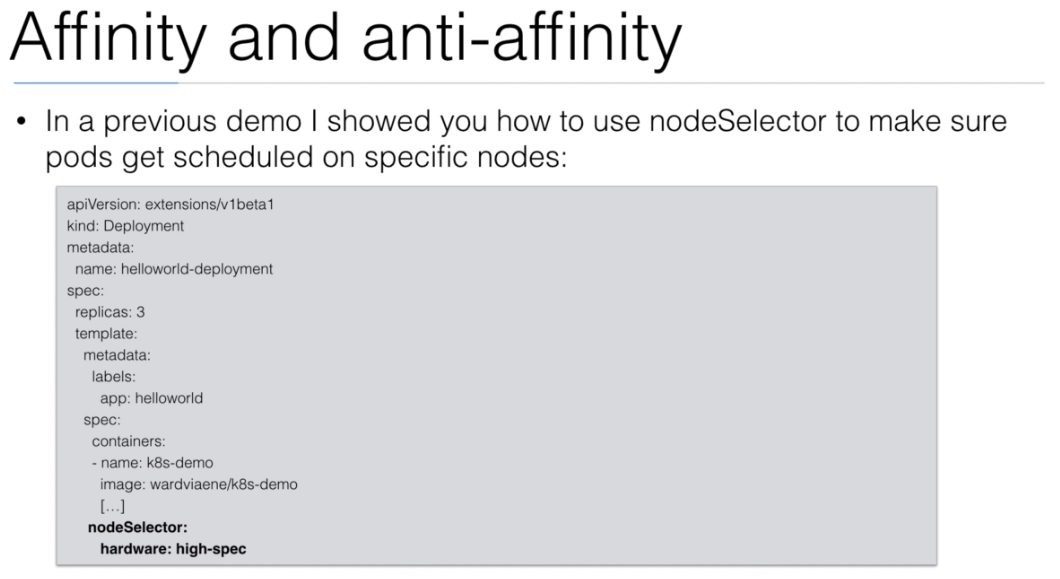

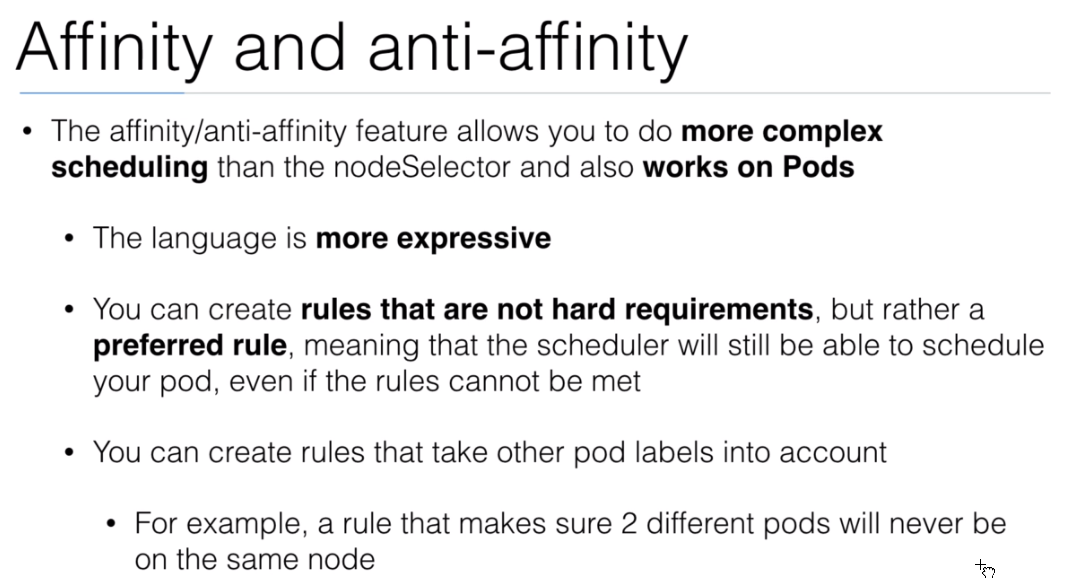

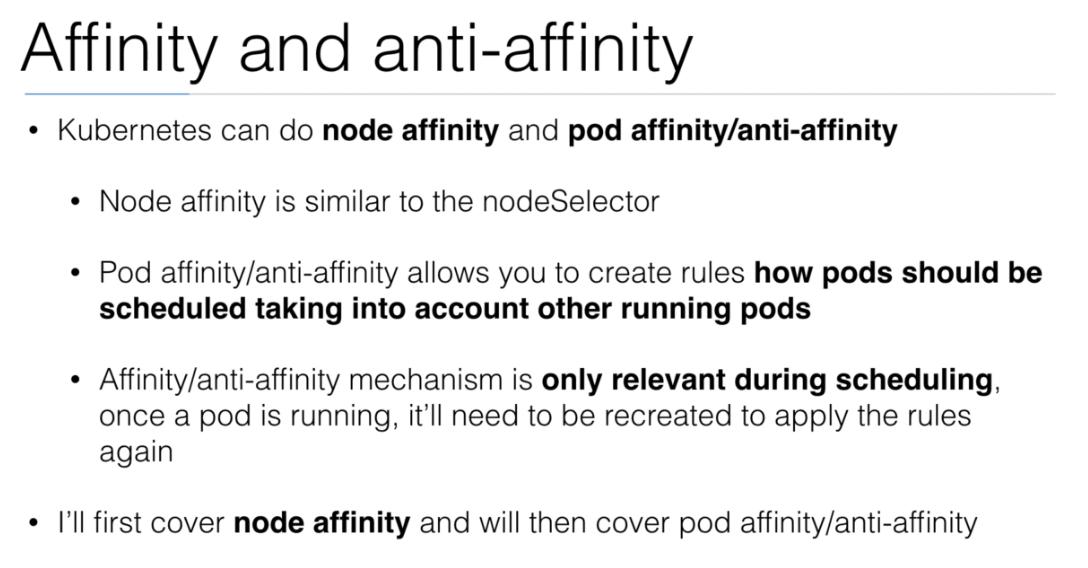

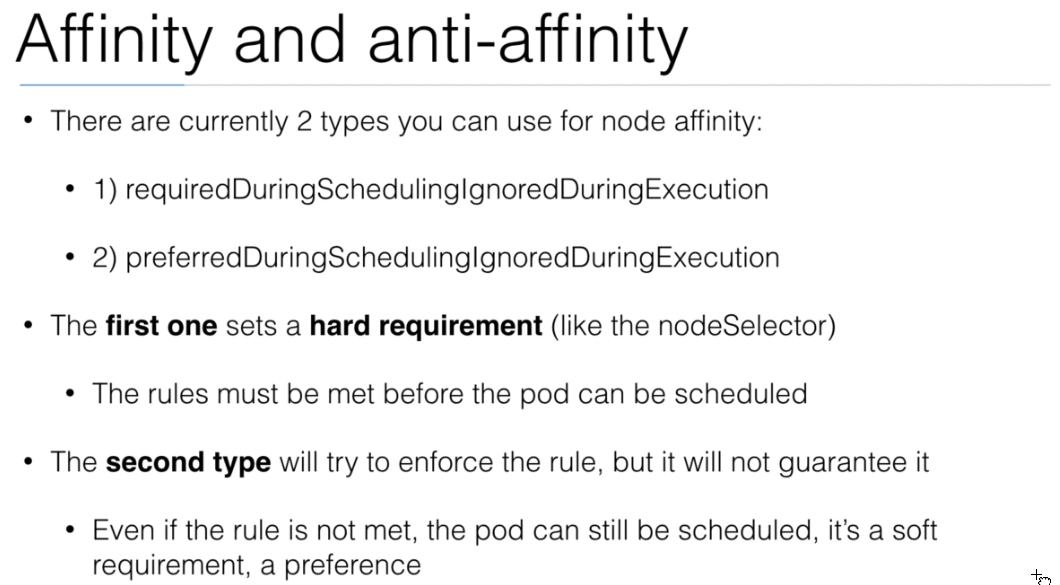

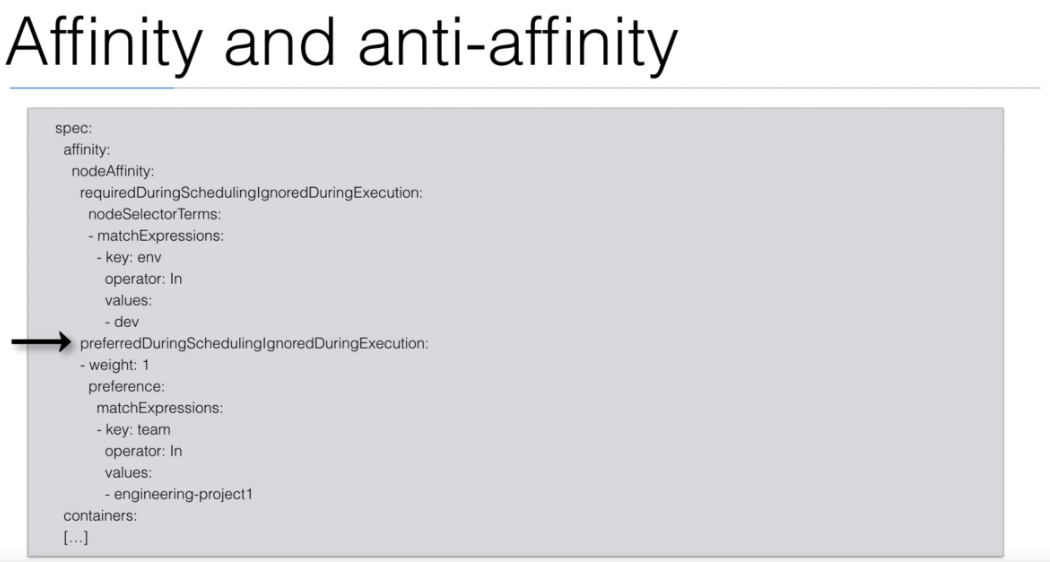

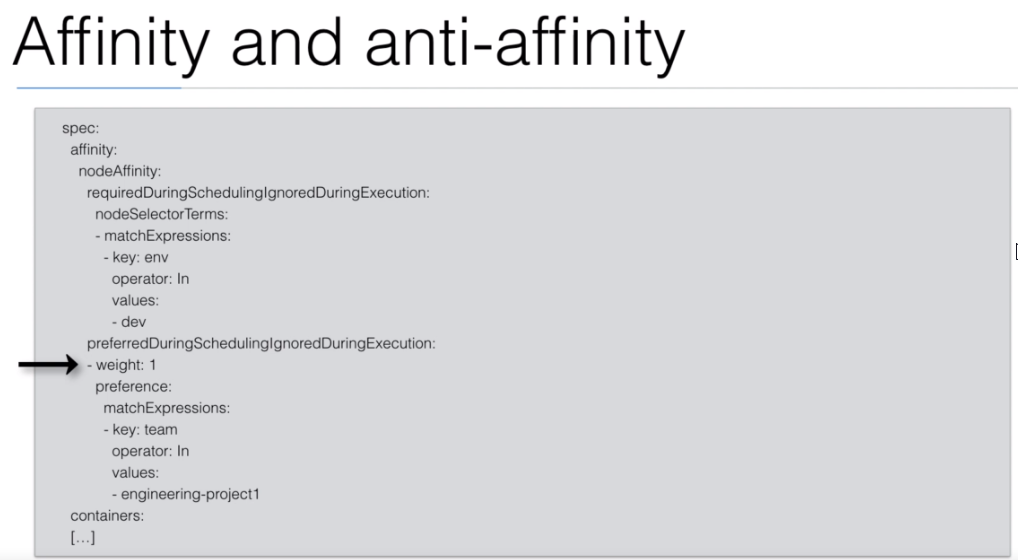

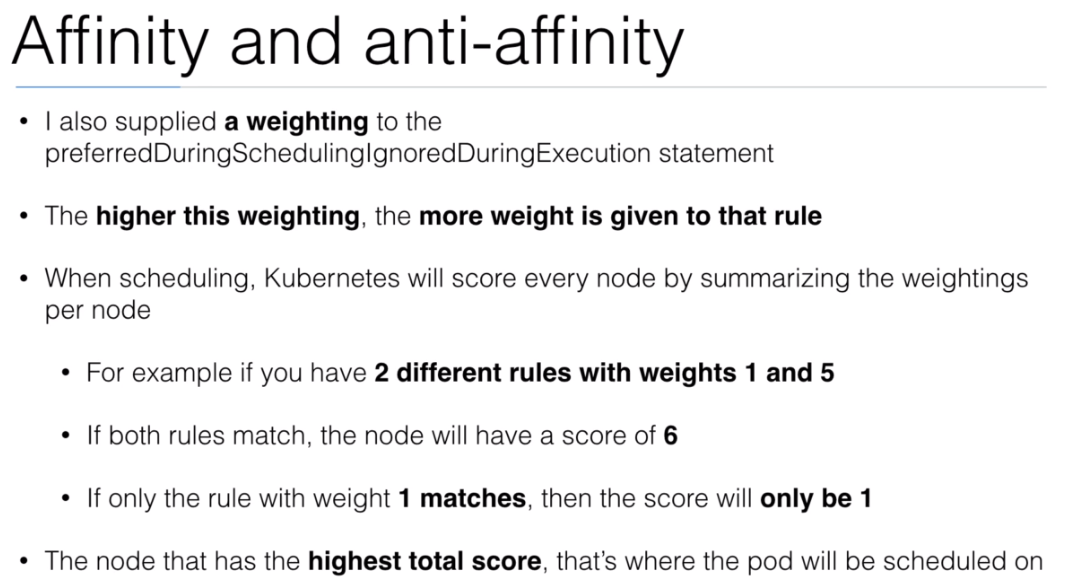

- 70. Affinity / Anti-Affinity

- 71. Demo: Affinity / Anti-Affinity

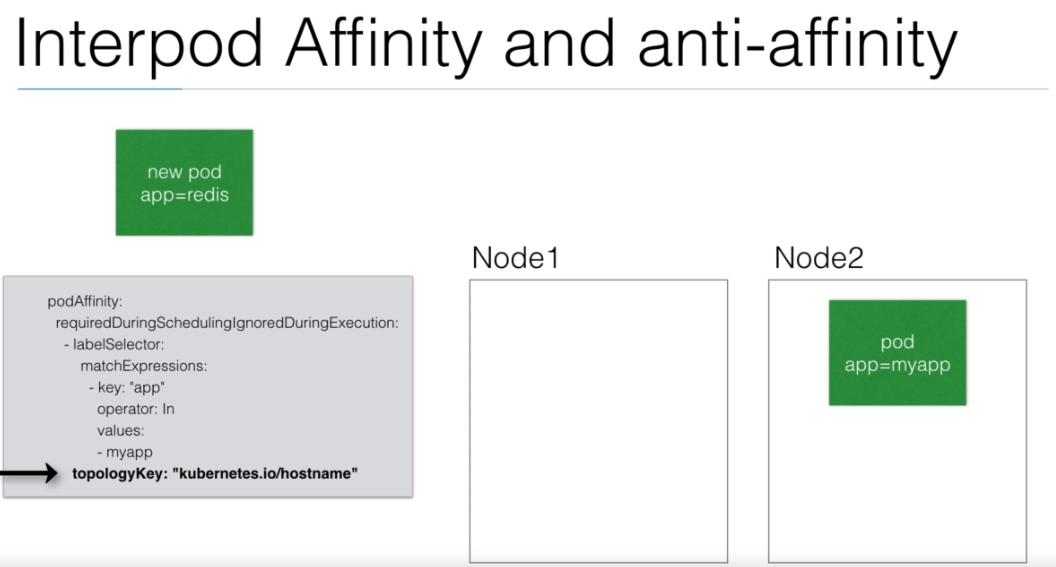

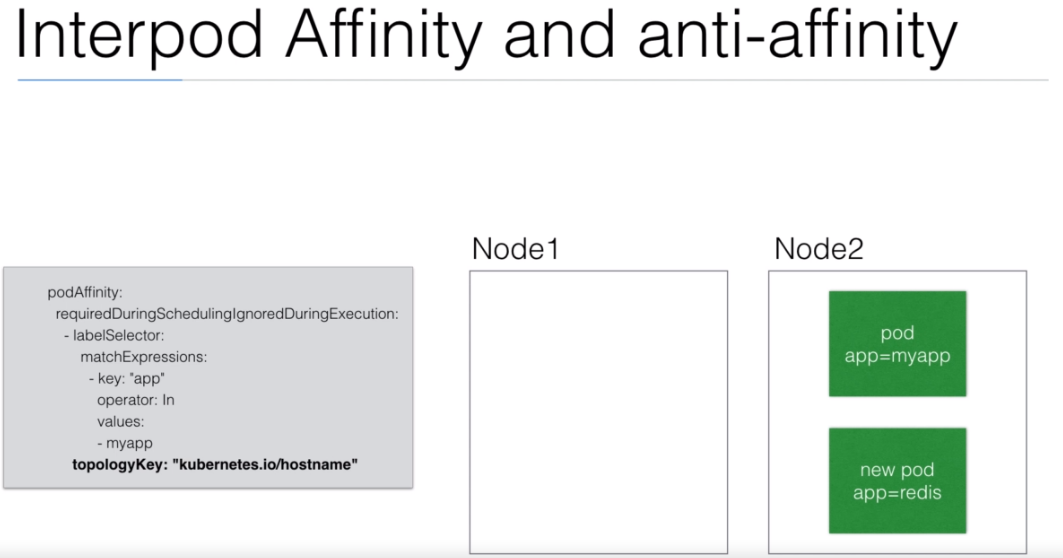

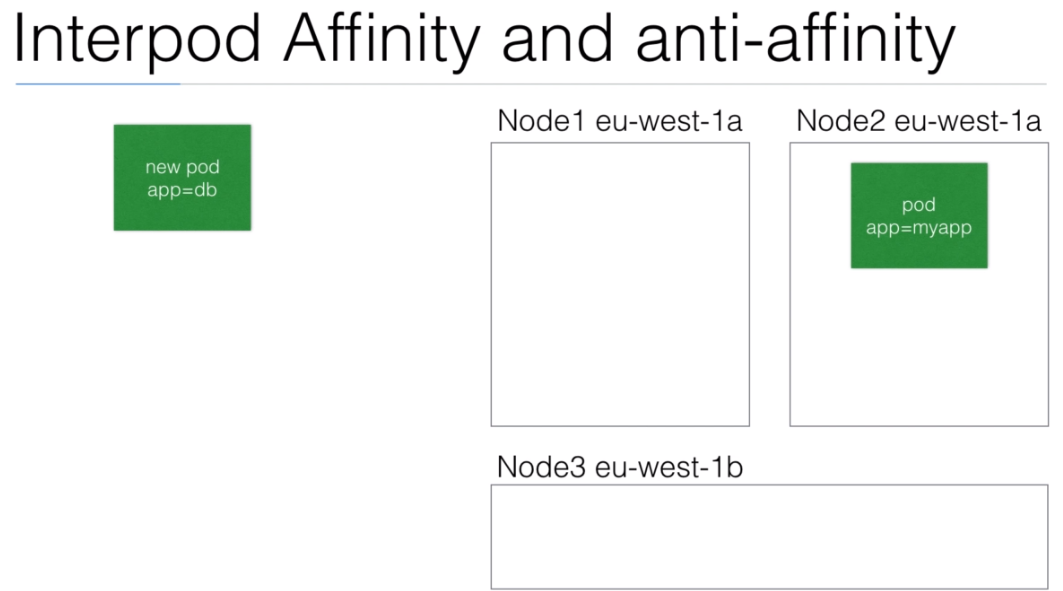

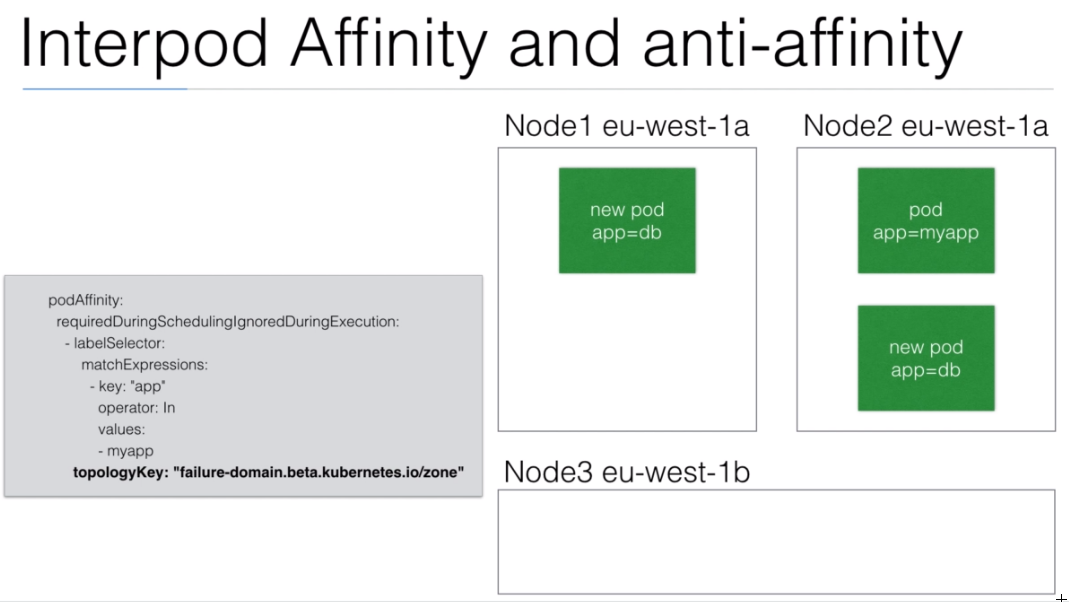

- 72. Interpod Affinity and Anti-affinity

- 73. Demo: Interpod Affinity

- 74. Demo: Interpod Anti-Affinity

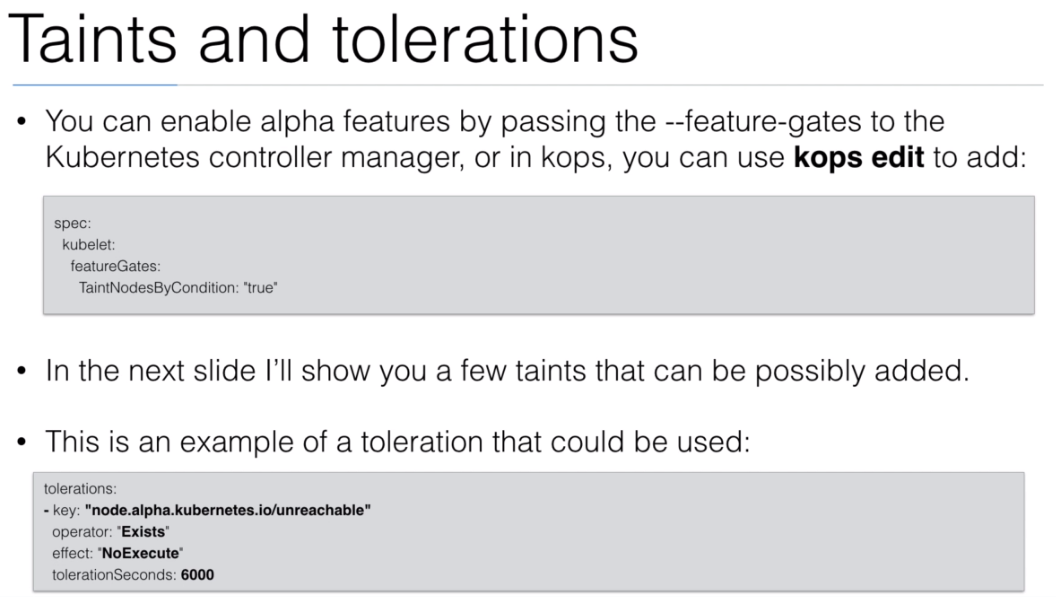

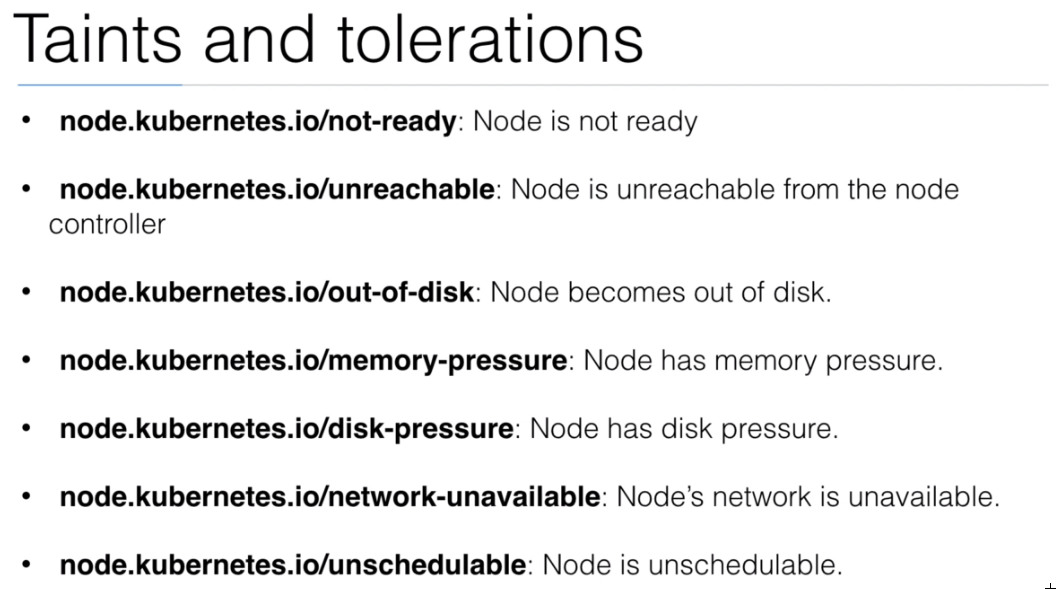

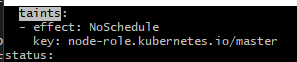

- 75. Taints and Tolerations

- 76. Demo: Taints and Tolerations

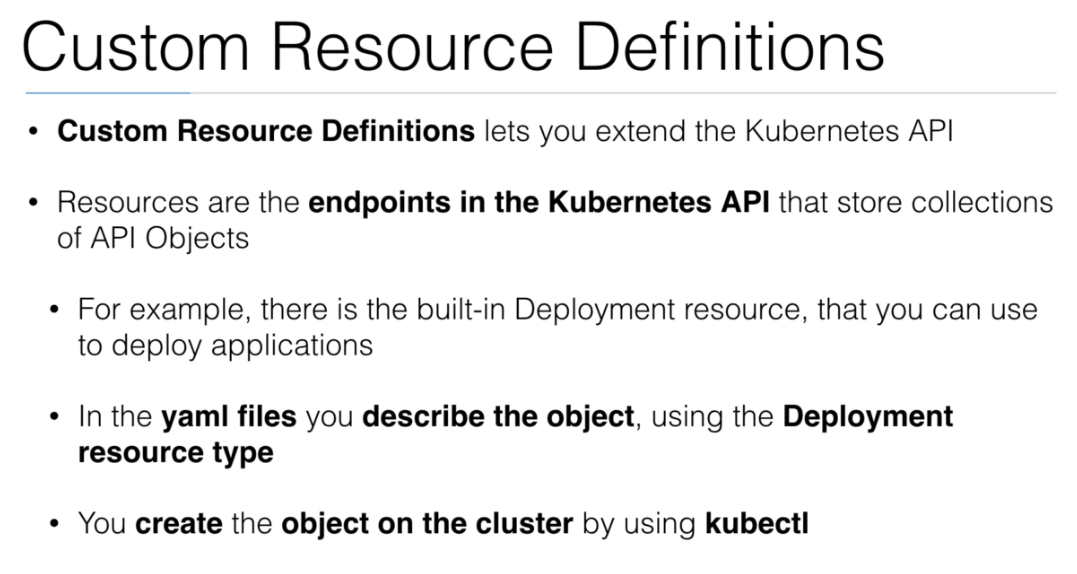

- 77. Custom Resource Definitions (CRDs)

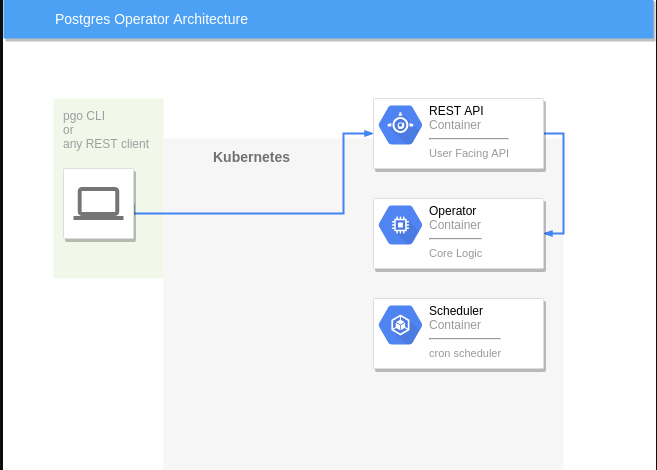

- 78. Operators

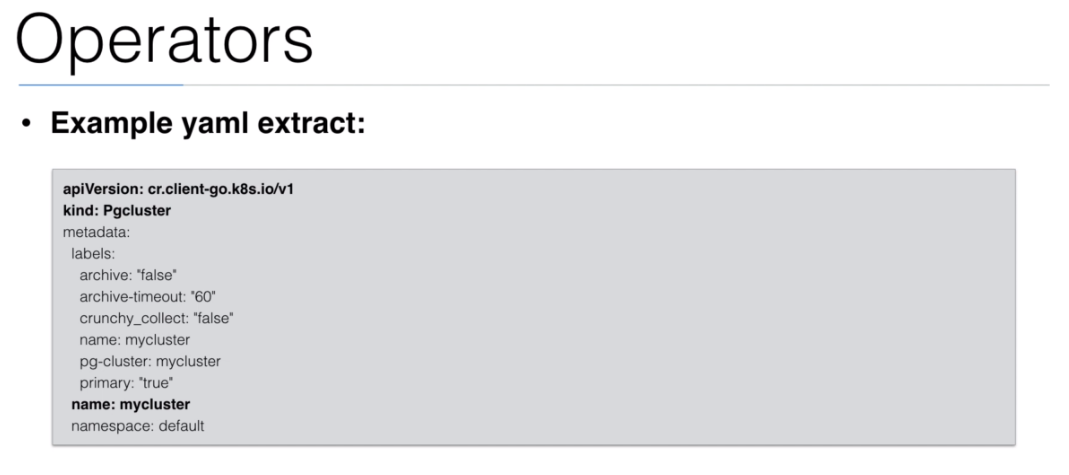

- 79. Demo: postgresql-operator

What I've learned

- Install and configure Kubernetes (on your laptop/desktop or production grade cluster on AWS)

- Use Docker Client (with Kubernetes), kubeadm, kops, or minikube to set up your cluster

- Be able to run stateless and stateful applications on Kubernetes

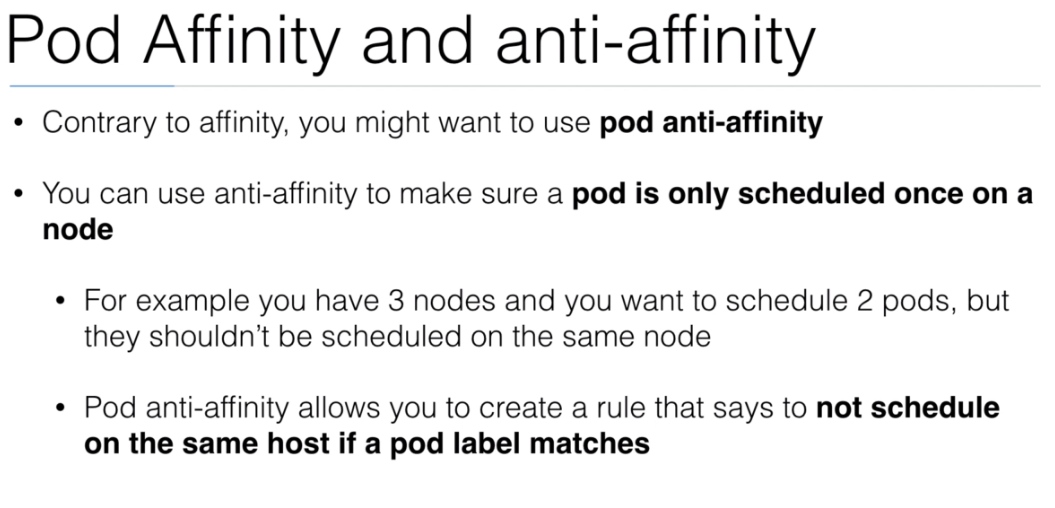

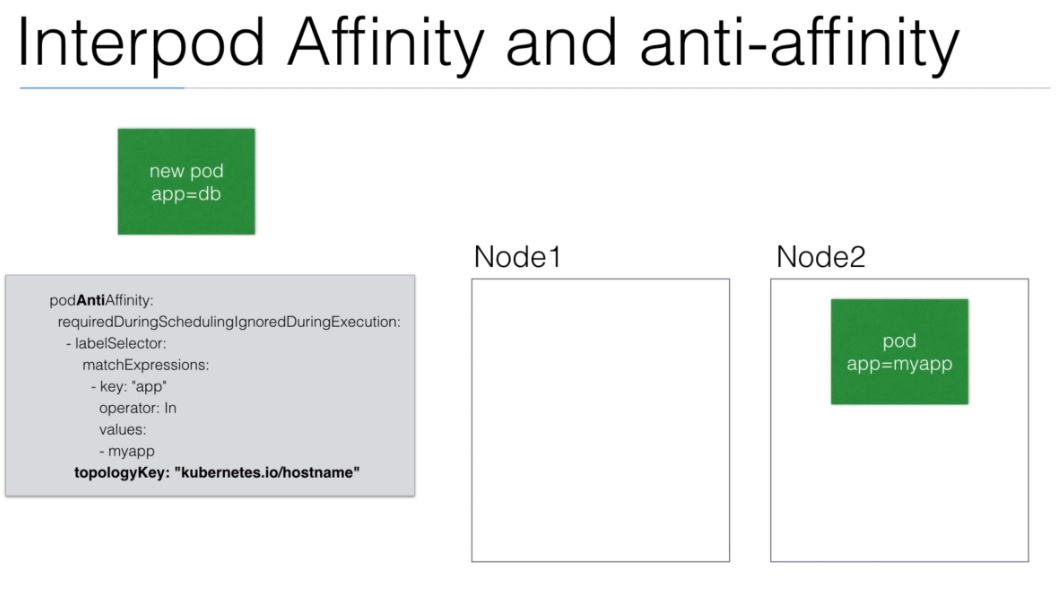

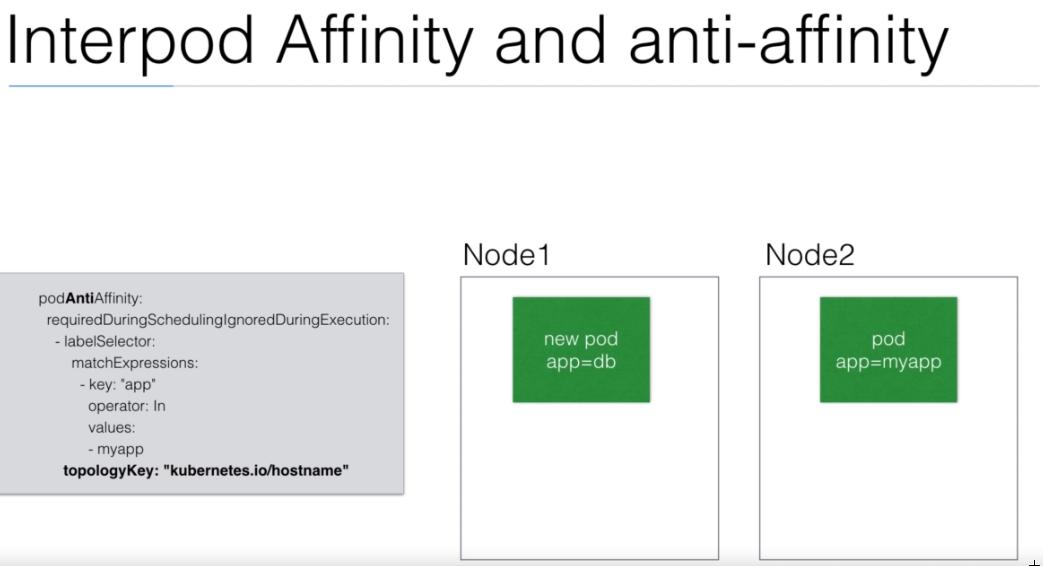

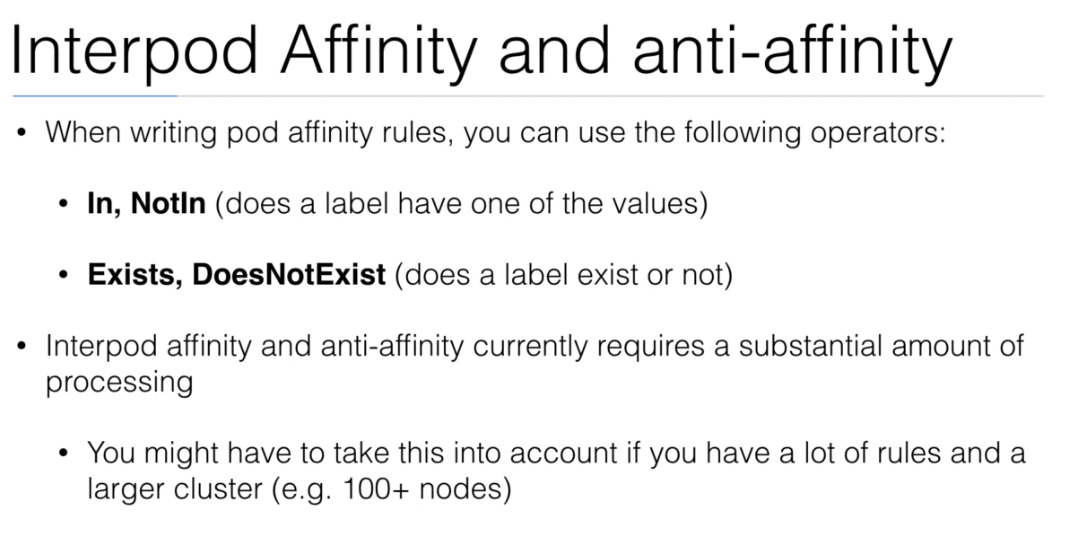

- Use Healthchecks, Secrets, ConfigMaps, placement strategies using Node/Pod affinity / anti-affinity

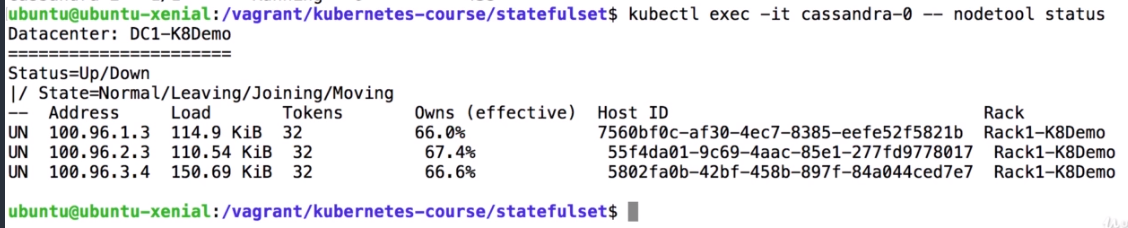

- Use StatefulSets to deploy a Cassandra cluster on Kubernetes

- Add users, set quotas/limits, do node maintenance, set up monitoring

- Use Volumes to provide persistence to your containers

- Be able to scale your apps using metrics

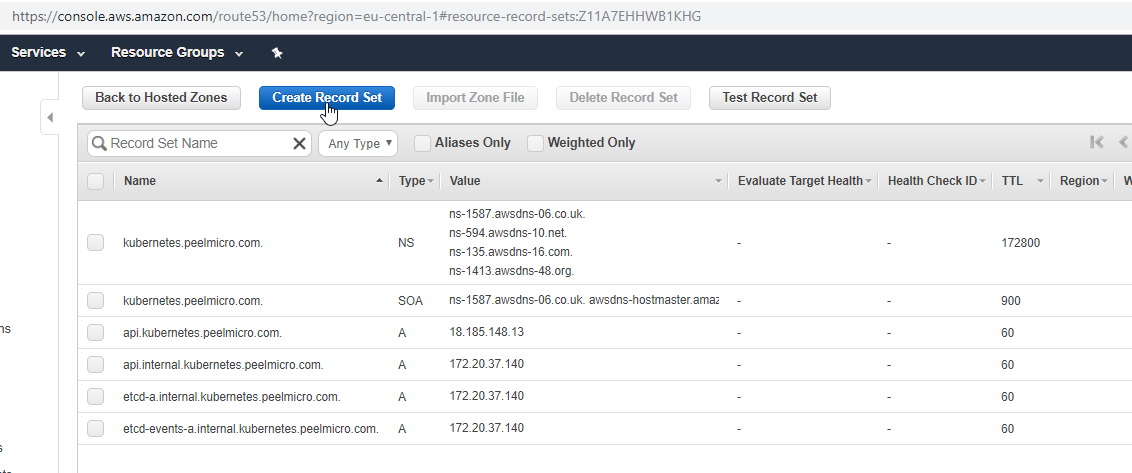

- Package applications with Helm and write your own Helm charts for your applications

- Automatically build and deploy your own Helm Charts using Jenkins

- Install and use kubeless to run functions (Serverless) on Kubernetes

- Install and use Istio to deploy a service mesh on Kubernetes

- Understand deployment concepts in Kubernetes using Helm and Helmfile

41. Demo: Pod Lifecycle

- We are going to execute the `pod-lifecycle/lifecycle.yaml` deployment.

pod-lifecycle/lifecycle.yaml

kind: Deployment

apiVersion: apps/v1beta1

metadata:

name: lifecycle

spec:

replicas: 1

template:

metadata:

labels:

app: lifecycle

spec:

initContainers:

- name: init

image: busybox

command: ["sh", "-c", "sleep 10"]

containers:

- name: lifecycle-container

image: busybox

command:

[

"sh",

"-c",

'echo $(date +%s): Running >> /timing && echo "The app is running!" && /bin/sleep 120',

]

readinessProbe:

exec:

command:

["sh", "-c", "echo $(date +%s): readinessProbe >> /timing"]

initialDelaySeconds: 35

livenessProbe:

exec:

command:

["sh", "-c", "echo $(date +%s): livenessProbe >> /timing"]

initialDelaySeconds: 35

timeoutSeconds: 30

lifecycle:

postStart:

exec:

command:

[

"sh",

"-c",

"echo $(date +%s): postStart >> /timing && sleep 10 && echo $(date +%s): end postStart >> /timing",

]

preStop:

exec:

command:

[

"sh",

"-c",

"echo $(date +%s): preStop >> /timing && sleep 10",

]

- On one shell execute

kubernetes-course$ watch -n1 kubectl get pods

NAME READY STATUS RESTARTS AGE

lifecycle-79655dfc59-d5njq 0/1 Init:0/1 0 6s

Every 1.0s: kubectl get pods Mon Mar 18 18:32:21 2019

NAME READY STATUS RESTARTS AGE

lifecycle-79655dfc59-d5njq 0/1 Running 0 45s

Every 1.0s: kubectl get pods Mon Mar 18 18:33:08 2019

NAME READY STATUS RESTARTS AGE

lifecycle-79655dfc59-d5njq 1/1 Running 0 91s

Every 1.0s: kubectl get pods Mon Mar 18 18:36:15 2019

NAME READY STATUS RESTARTS AGE

lifecycle-79655dfc59-d5njq 0/1 CrashLoopBackOff 1 4m38s

Every 1.0s: kubectl get pods Mon Mar 18 18:36:38 2019

NAME READY STATUS RESTARTS AGE

lifecycle-79655dfc59-d5njq 0/1 Running 2 5m1s

Every 1.0s: kubectl get pods Mon Mar 18 18:37:22 2019

NAME READY STATUS RESTARTS AGE

lifecycle-79655dfc59-d5njq 1/1 Running 2 5m45s

- On the other shell create the deployment

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f pod-lifecycle/lifecycle.yaml

deployment.apps/lifecycle created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl exec -t lifecycle-79655dfc59-d5njq -- cat /timing

1552934034: Running

1552934034: postStart

1552934044: end postStart

1552934069: livenessProbe

1552934078: readinessProbe

1552934079: livenessProbe

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$
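The timestamps in `/timing` are easier to read as offsets from the container start. The following is a minimal Python sketch, not part of the course repository, that parses the output shown above; the helper name `parse_timing` is made up for this illustration.

```python
from __future__ import annotations

# Lines copied from the `cat /timing` output above.
TIMING_LOG = """\
1552934034: Running
1552934034: postStart
1552934044: end postStart
1552934069: livenessProbe
1552934078: readinessProbe
1552934079: livenessProbe
"""


def parse_timing(log: str) -> list[tuple[int, str]]:
    """Return (seconds since the first entry, event name) for each log line."""
    entries = []
    for line in log.splitlines():
        if not line.strip():
            continue  # skip blank lines
        stamp, event = line.split(":", 1)
        entries.append((int(stamp), event.strip()))
    start = entries[0][0]
    return [(stamp - start, event) for stamp, event in entries]


if __name__ == "__main__":
    for offset, event in parse_timing(TIMING_LOG):
        print(f"+{offset:>3}s  {event}")
```

In this run the `postStart` hook ends at +10s, which matches its `sleep 10`, and the first `livenessProbe` entry appears at +35s, which matches `initialDelaySeconds: 35`.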

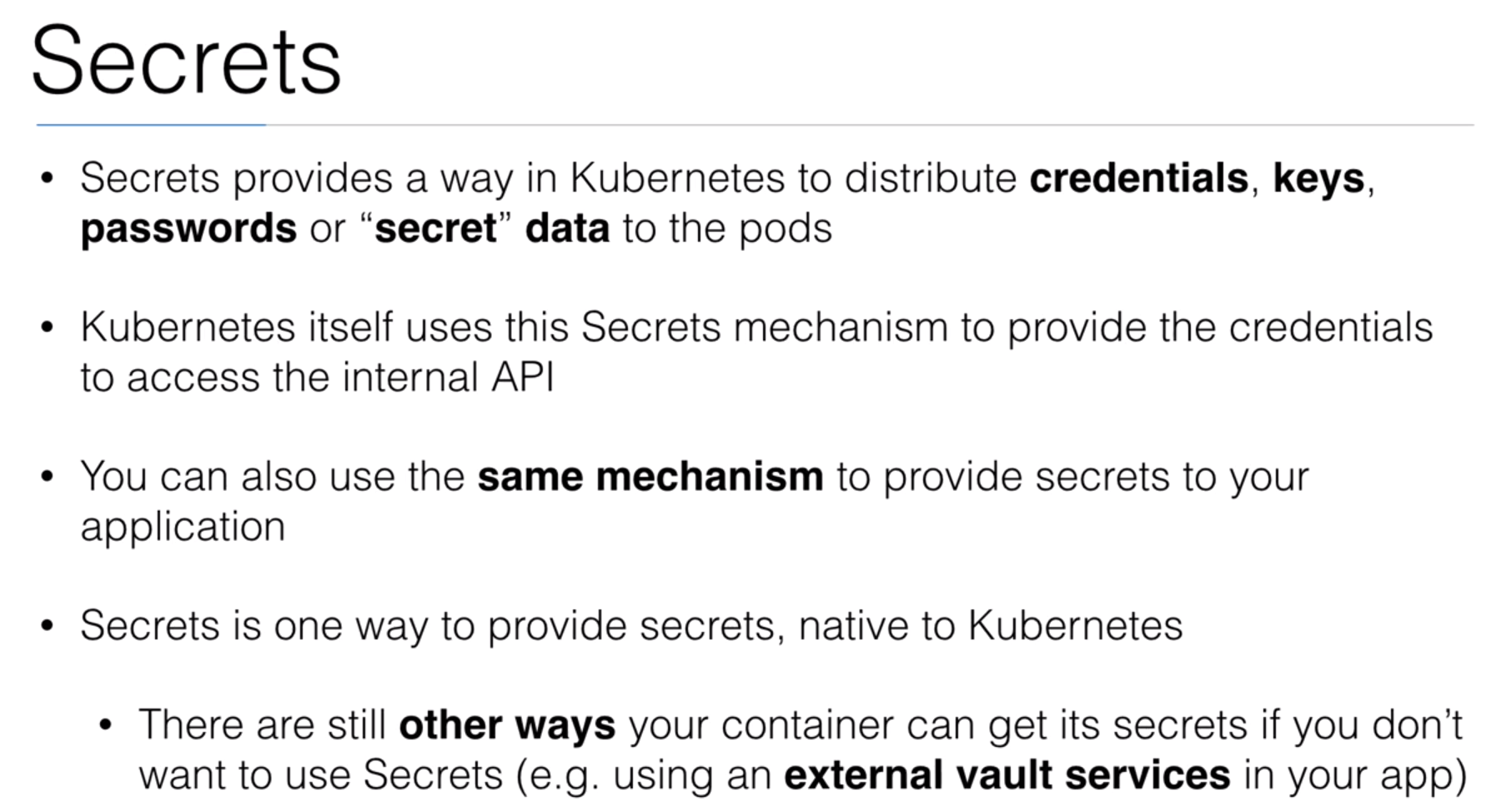

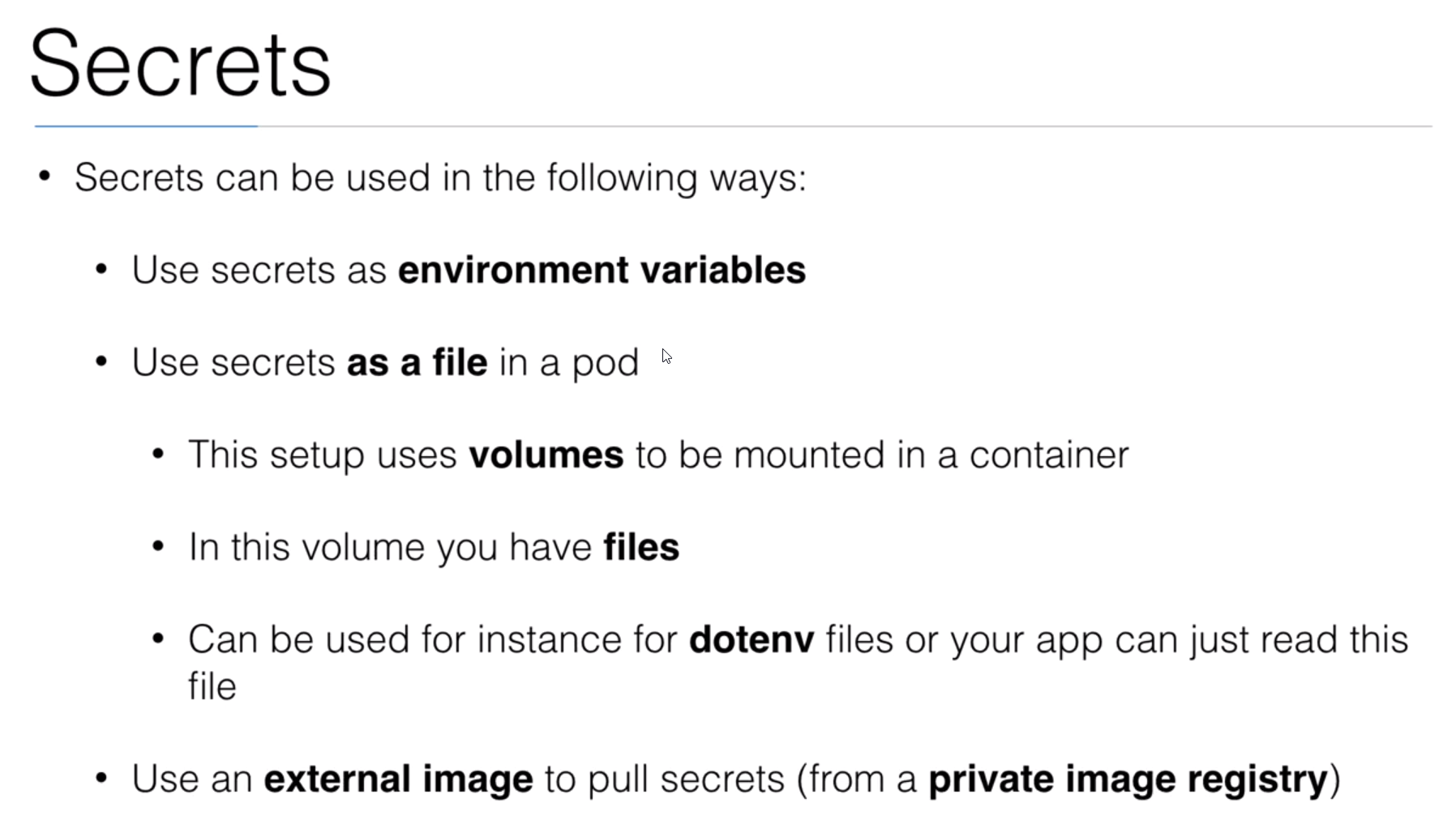

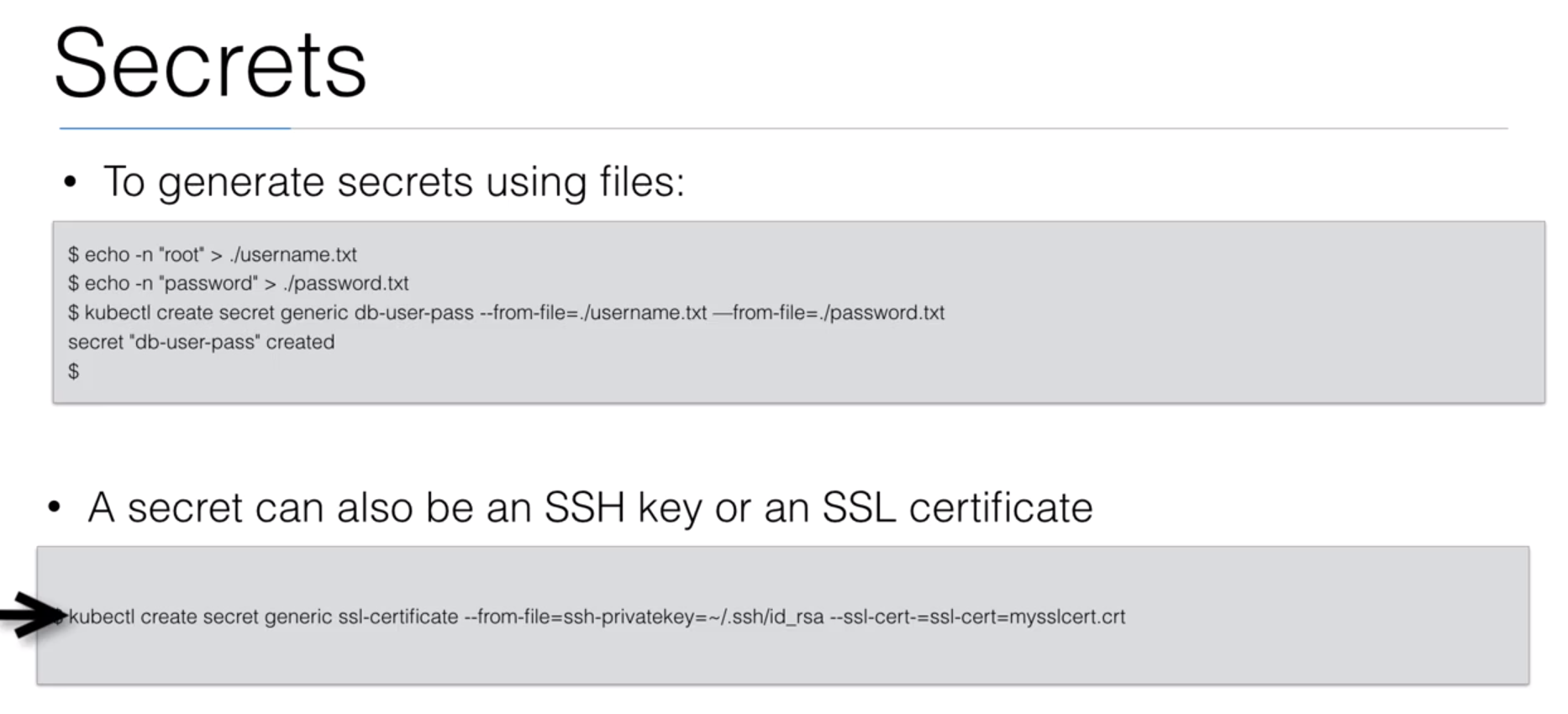

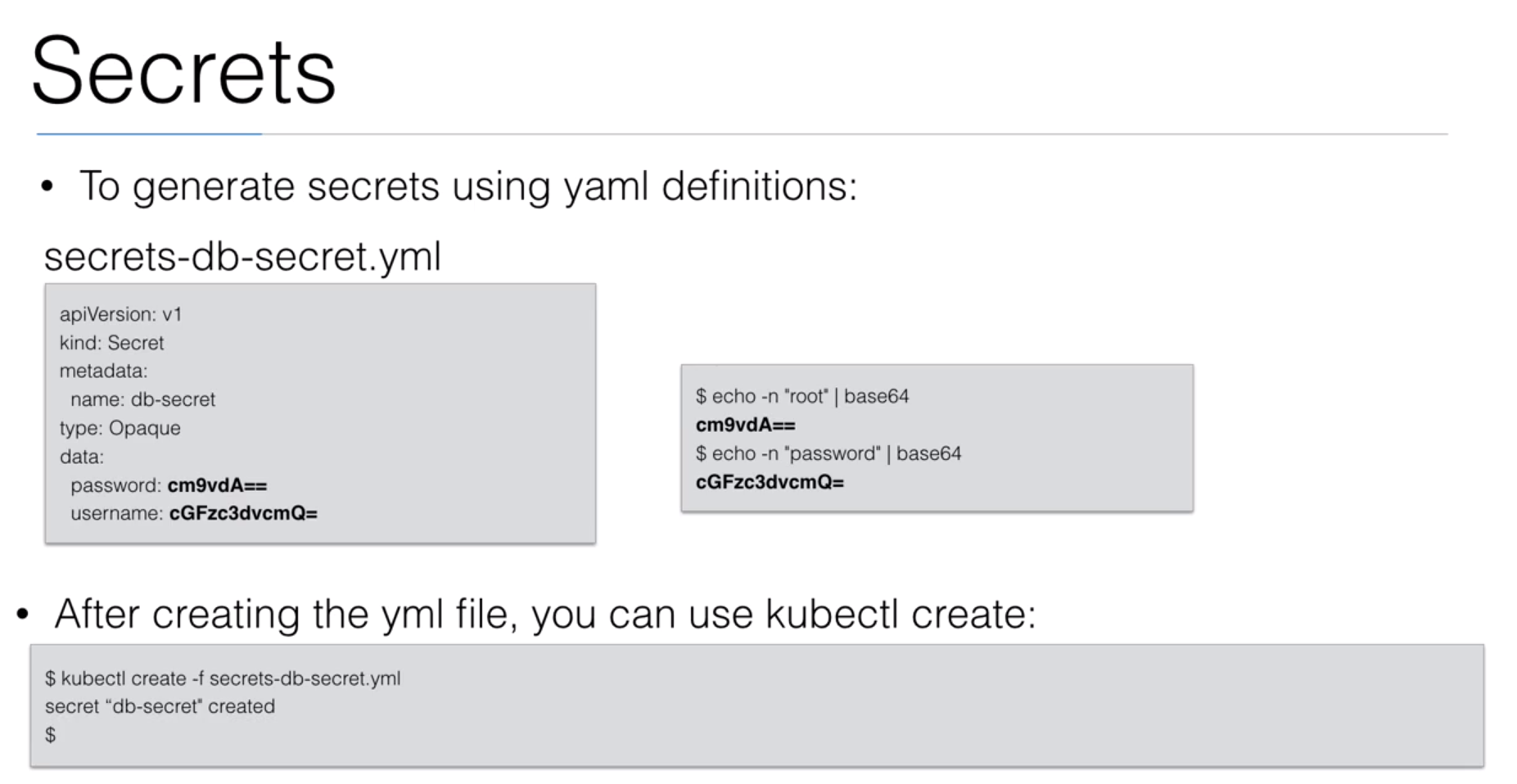

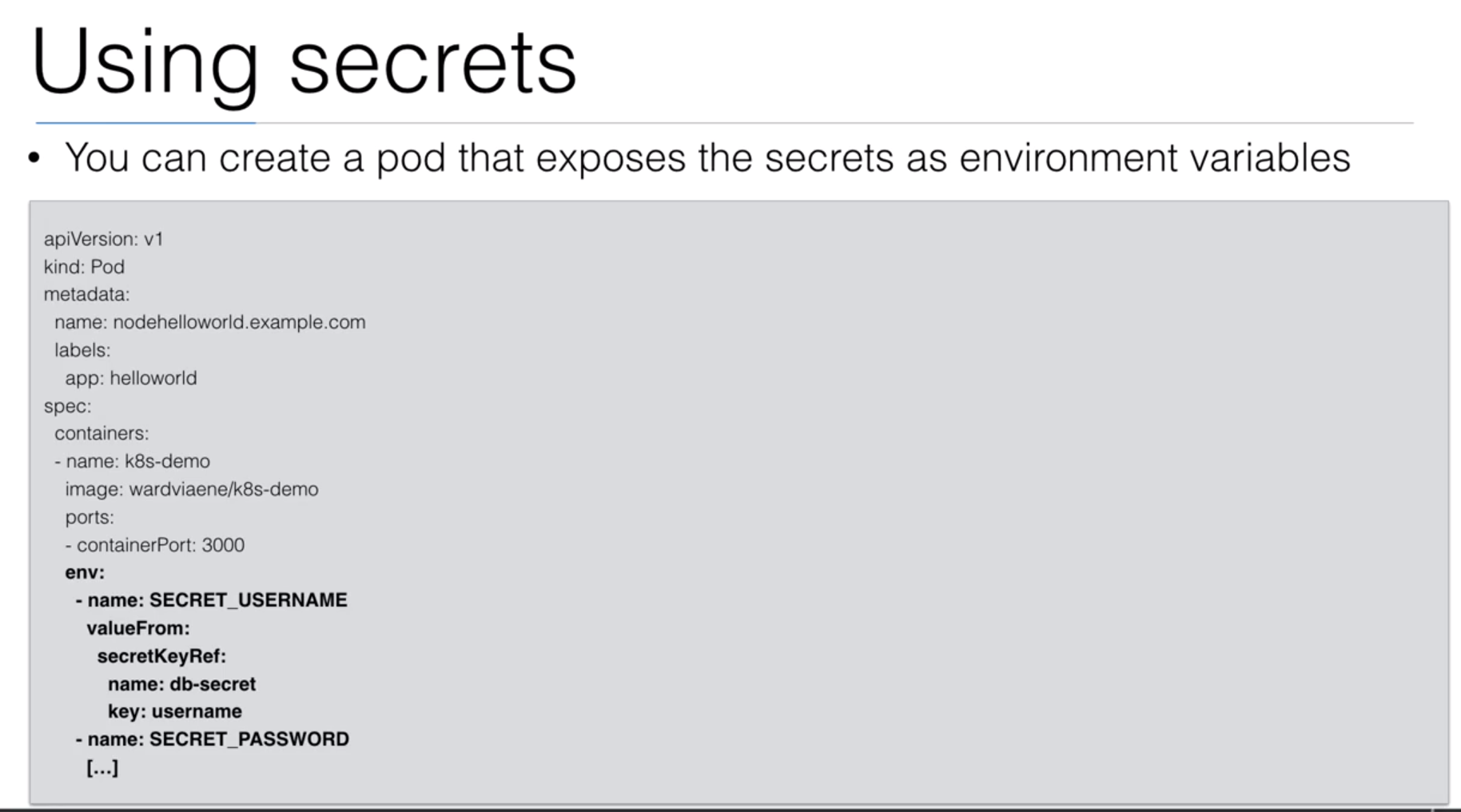

42. Secrets

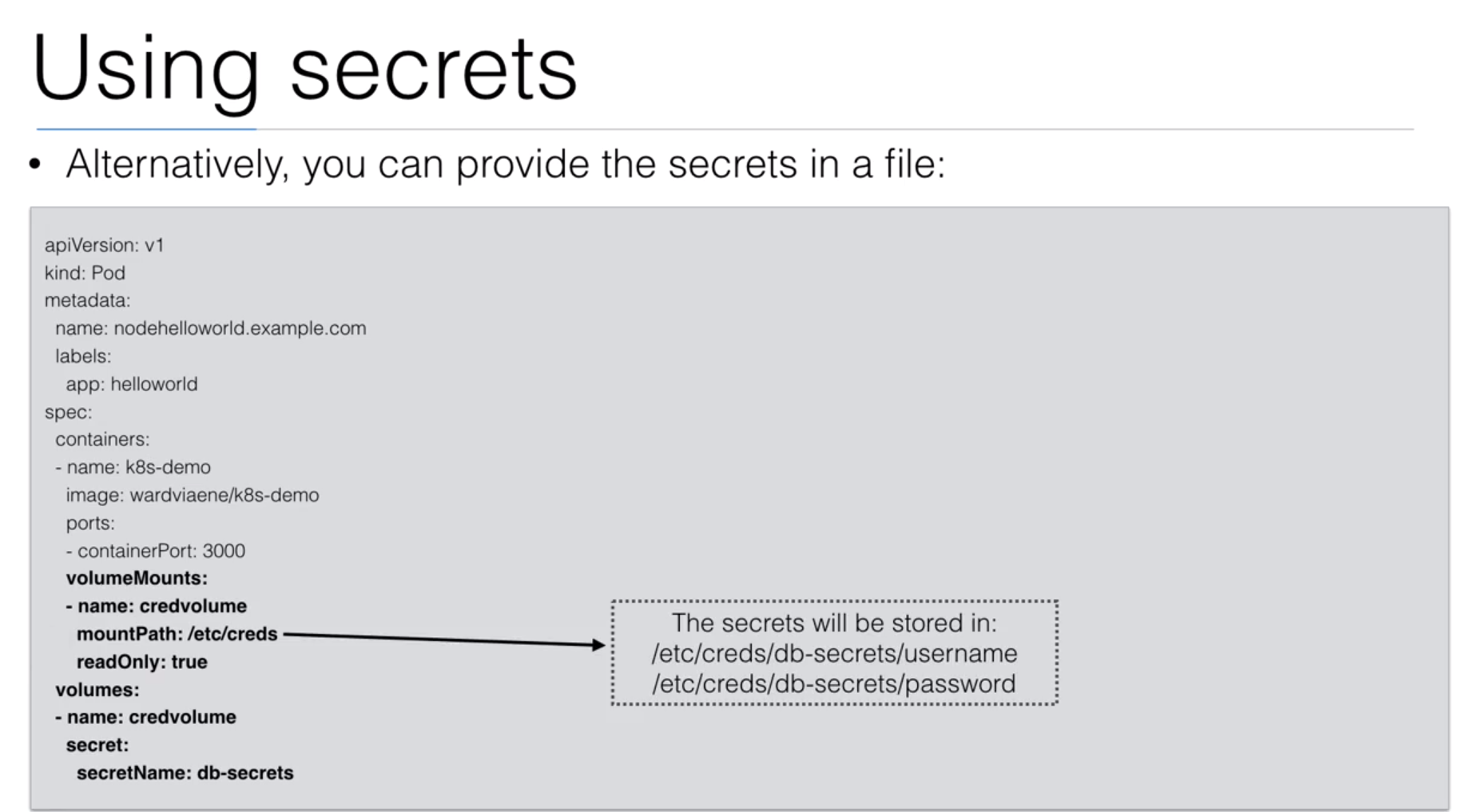

43. Demo: Credentials using Volumes

- We are going to use the `deployment/helloworld-secrets.yml` document to create the secret.

deployment/helloworld-secrets.yml

apiVersion: v1

kind: Secret

metadata:

name: db-secrets

type: Opaque

data:

username: cm9vdA==

password: cGFzc3dvcmQ=

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f deployment/helloworld-secrets.yml

secret/db-secrets created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get deployment

No resources found.

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$
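The values under `data:` in a Secret manifest are base64-encoded, which is an encoding and not encryption. As a small Python sketch (the helper names are illustrative, not from the course), the two values from `deployment/helloworld-secrets.yml` can be decoded like this:

```python
import base64

# Values copied from deployment/helloworld-secrets.yml above.
SECRET_DATA = {
    "username": "cm9vdA==",
    "password": "cGFzc3dvcmQ=",
}


def decode_secret_data(data):
    """Decode every base64 value of a Secret's `data` mapping to text."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


def encode_secret_value(plain_text):
    """Encode a plain-text value the way a Secret's `data` field expects it."""
    return base64.b64encode(plain_text.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    print(decode_secret_data(SECRET_DATA))
    print(encode_secret_value("root"))
```

Anyone who can read the manifest can recover the plain text, so files like this are usually kept out of public repositories.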

- We are also going to use the `deployment/helloworld-secrets-volumes.yml` document to create the deployment that mounts the secret as a volume.

deployment/helloworld-secrets-volumes.yml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: helloworld-deployment

spec:

replicas: 3

template:

metadata:

labels:

app: helloworld

spec:

containers:

- name: docker-nodejs-demo

image: peelmicro/docker-nodejs-demo

ports:

- name: nodejs-port

containerPort: 3000

volumeMounts:

- name: cred-volume

mountPath: /etc/creds

readOnly: true

volumes:

- name: cred-volume

secret:

secretName: db-secrets

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f deployment/helloworld-secrets-volumes.yml

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/helloworld-deployment-6555768dbf-cvrg4 1/1 Running 0 11s

pod/helloworld-deployment-6555768dbf-mnr2z 1/1 Running 0 11s

pod/helloworld-deployment-6555768dbf-qvlmf 1/1 Running 0 11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/helloworld-deployment 3/3 3 3 11s

NAME DESIRED CURRENT READY AGE

replicaset.apps/helloworld-deployment-6555768dbf 3 3 3 11s

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl describe pod/helloworld-deployment-6555768dbf-cvrg4

Name: helloworld-deployment-6555768dbf-cvrg4

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: kubernetes-node-01/167.99.202.93

Start Time: Mon, 18 Mar 2019 19:00:53 +0000

Labels: app=helloworld

pod-template-hash=6555768dbf

Annotations: cni.projectcalico.org/podIP: 10.244.1.53/32

Status: Running

IP: 10.244.1.53

Controlled By: ReplicaSet/helloworld-deployment-6555768dbf

Containers:

docker-nodejs-demo:

Container ID: docker://3e9df0a8410e5b41fbb2808cb77c6269e4e0c7656593dd35980daf749785d85a

Image: peelmicro/docker-nodejs-demo

Image ID: docker-pullable://peelmicro/docker-nodejs-demo@sha256:a10d360875d2ae10a9eb1fdd88aee16ddf7b8a5b08b4a903037109ee3c063e7f

Port: 3000/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 18 Mar 2019 19:00:57 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/etc/creds from cred-volume (ro)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-rtvbw (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

cred-volume:

Type: Secret (a volume populated by a Secret)

SecretName: db-secrets

Optional: false

default-token-rtvbw:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-rtvbw

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 111s default-scheduler Successfully assigned default/helloworld-deployment-6555768dbf-cvrg4 to kubernetes-node-01

Normal Pulling 110s kubelet, kubernetes-node-01 pulling image "peelmicro/docker-nodejs-demo"

Normal Pulled 107s kubelet, kubernetes-node-01 Successfully pulled image "peelmicro/docker-nodejs-demo"

Normal Created 107s kubelet, kubernetes-node-01 Created container

Normal Started 107s kubelet, kubernetes-node-01 Started container

- We can access the volume inside the pod

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl exec helloworld-deployment-6555768dbf-cvrg4 -i -t -- /bin/bash

root@helloworld-deployment-6555768dbf-cvrg4:/app# cat /etc/creds/username

rootroot@helloworld-deployment-6555768dbf-cvrg4:/app# cat /etc/creds/password

passwordroot@helloworld-deployment-6555768dbf-cvrg4:/app# exit

exit

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$
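A secret mounted as a volume shows up as one file per key, as the `cat /etc/creds/username` and `cat /etc/creds/password` commands above suggest. The sketch below shows how an application might read such a directory; it is written in Python for illustration (the demo image itself is a Node.js app), `read_mounted_secret` is a hypothetical helper, and a temporary directory stands in for `/etc/creds`.

```python
import tempfile
from pathlib import Path


def read_mounted_secret(mount_path):
    """Return {key: value} for every regular file found under mount_path."""
    credentials = {}
    for entry in sorted(Path(mount_path).iterdir()):
        # Skip hidden bookkeeping entries that may exist next to the key files.
        if entry.name.startswith(".") or not entry.is_file():
            continue
        credentials[entry.name] = entry.read_text()
    return credentials


def demo():
    """Simulate the mounted volume with a temporary directory."""
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "username").write_text("root")
        Path(tmp, "password").write_text("password")
        return read_mounted_secret(tmp)


if __name__ == "__main__":
    print(demo())
```

Inside the pod the call would be `read_mounted_secret("/etc/creds")`, assuming the mount path from the deployment above.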

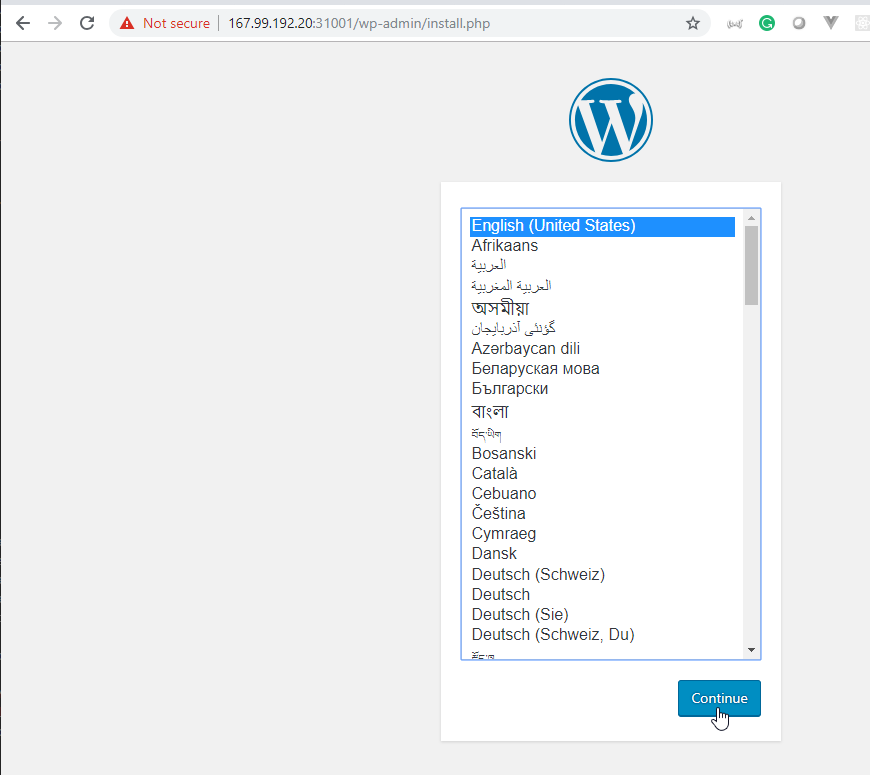

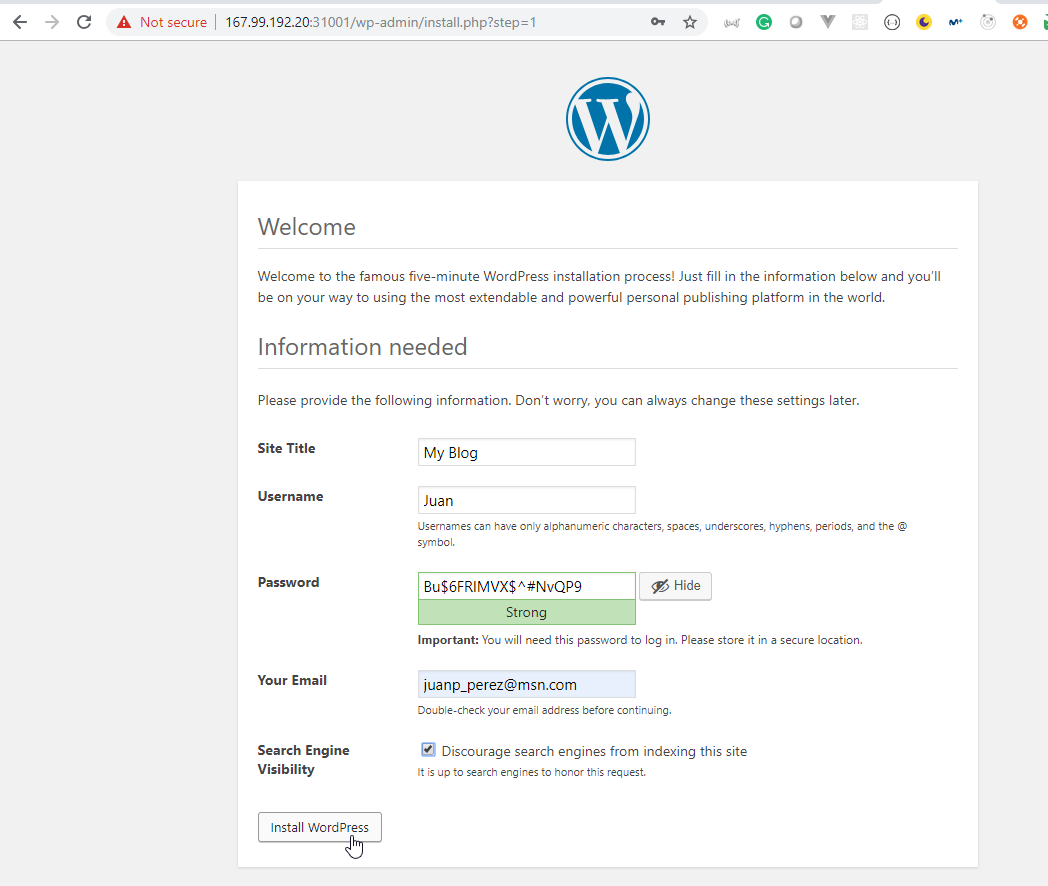

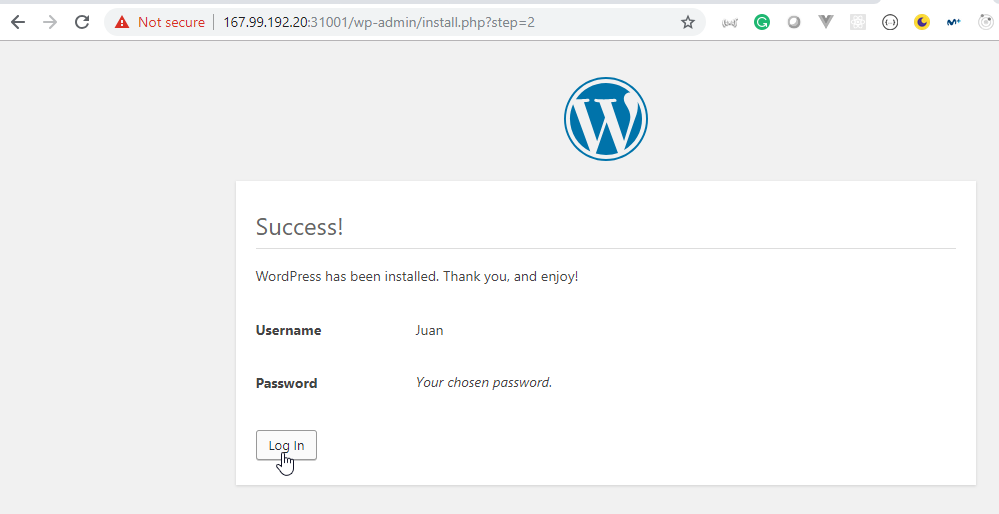

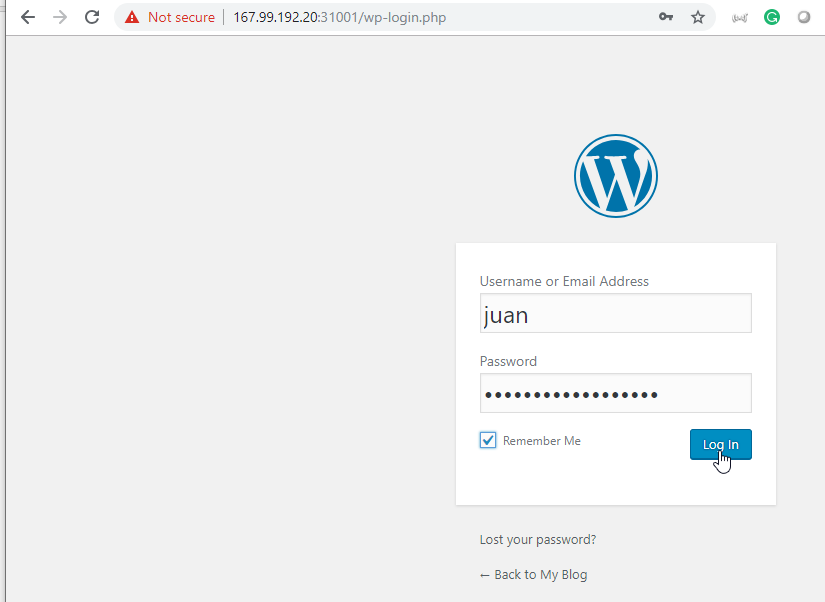

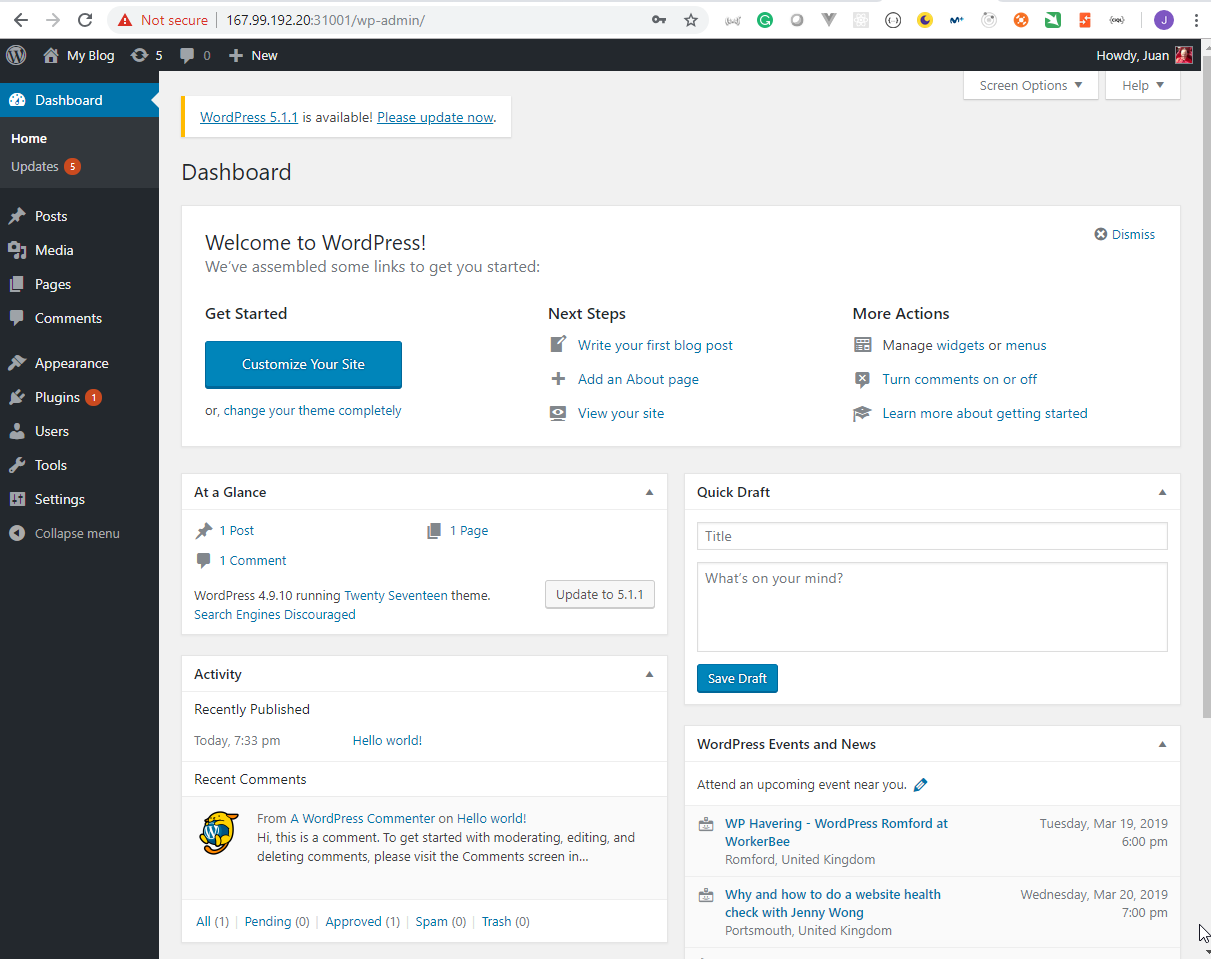

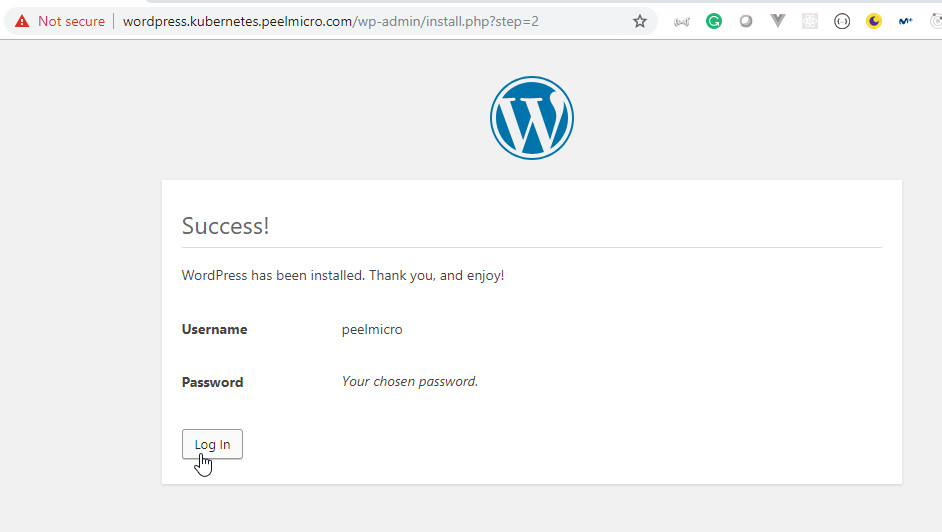

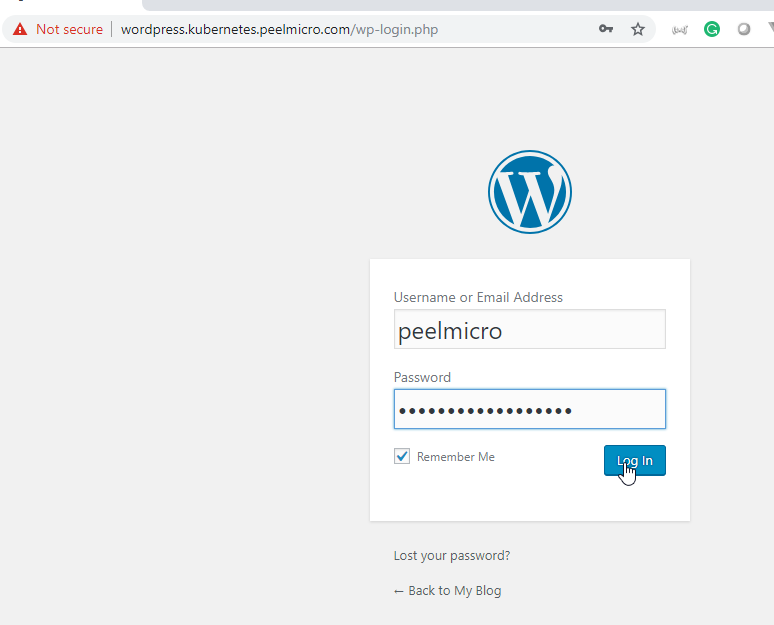

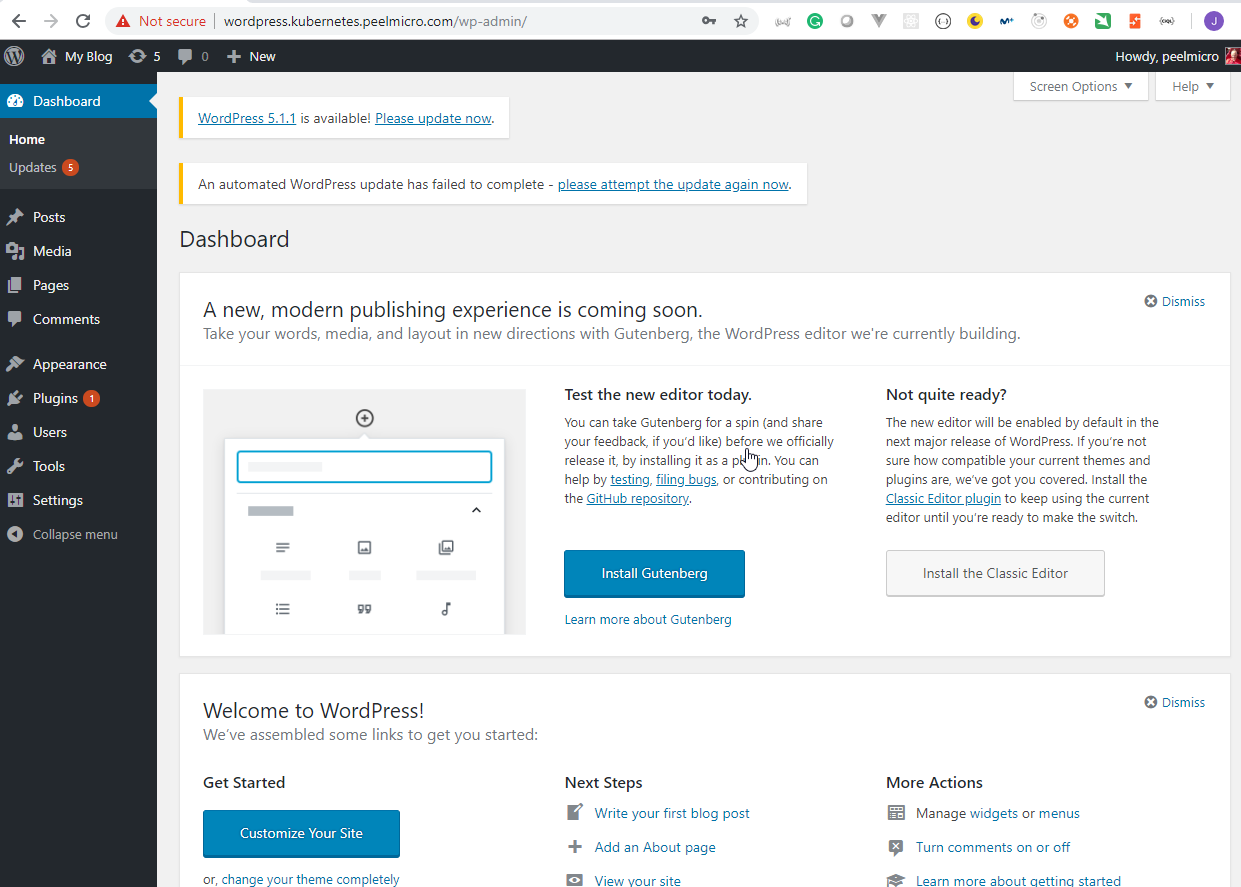

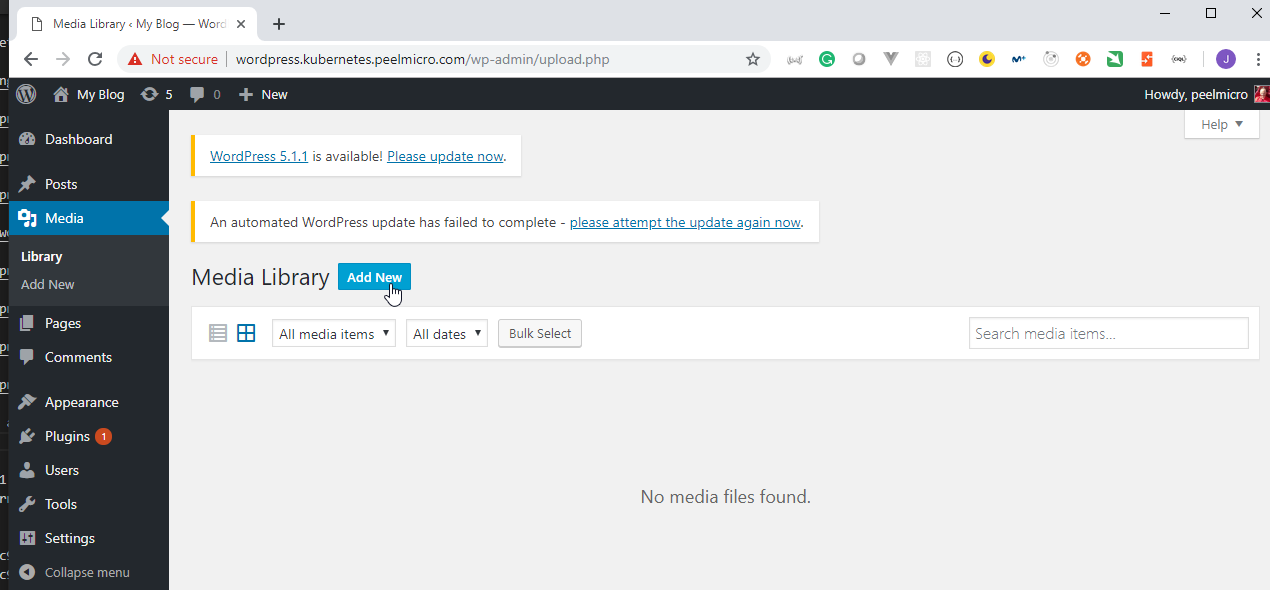

44. Demo: Running Wordpress on Kubernetes

- We are going to use the `wordpress/wordpress-secrets.yml` document to create the secret with the password.

wordpress/wordpress-secrets.yml

apiVersion: v1

kind: Secret

metadata:

name: wordpress-secrets

type: Opaque

data:

db-password: cGFzc3dvcmQ=

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f wordpress/wordpress-secrets.yml

secret/wordpress-secrets created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the `wordpress/wordpress-single-deployment-no-volumes.yml` document to create the deployment.

wordpress/wordpress-single-deployment-no-volumes.yml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: wordpress-deployment

spec:

replicas: 1

template:

metadata:

labels:

app: wordpress

spec:

containers:

- name: wordpress

image: wordpress:4-php7.0

ports:

- name: http-port

containerPort: 80

env:

- name: WORDPRESS_DB_PASSWORD

valueFrom:

secretKeyRef:

name: wordpress-secrets

key: db-password

- name: WORDPRESS_DB_HOST

value: 127.0.0.1

- name: mysql

image: mysql:5.7

ports:

- name: mysql-port

containerPort: 3306

env:

- name: MYSQL_ROOT_PASSWORD

valueFrom:

secretKeyRef:

name: wordpress-secrets

key: db-password

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f wordpress/wordpress-single-deployment-no-volumes.yml

deployment.extensions/wordpress-deployment created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/wordpress-deployment-6958b7b48f-rpvzz 0/2 ContainerCreating 0 4s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/wordpress-deployment 0/1 1 0 4s

NAME DESIRED CURRENT READY AGE

replicaset.apps/wordpress-deployment-6958b7b48f 1 1 0 4s

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/wordpress-deployment-6958b7b48f-rpvzz 2/2 Running 0 49s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/wordpress-deployment 1/1 1 1 49s

NAME DESIRED CURRENT READY AGE

replicaset.apps/wordpress-deployment-6958b7b48f 1 1 1 49s

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl describe pod/wordpress-deployment-6958b7b48f-rpvzz

Name: wordpress-deployment-6958b7b48f-rpvzz

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: kubernetes-node-01/167.99.202.93

Start Time: Mon, 18 Mar 2019 19:21:07 +0000

Labels: app=wordpress

pod-template-hash=6958b7b48f

Annotations: cni.projectcalico.org/podIP: 10.244.1.54/32

Status: Running

IP: 10.244.1.54

Controlled By: ReplicaSet/wordpress-deployment-6958b7b48f

Containers:

wordpress:

Container ID: docker://cc9f8cb9b4d9b846e567e09b6b51d7534e6376e3c35e5812bd65eb2246d56279

Image: wordpress:4-php7.0

Image ID: docker-pullable://wordpress@sha256:c24c534fa99c17f7b0923d6ffa791b0f7fb43a821de5bc09564e46ae09d9966a

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 18 Mar 2019 19:21:21 +0000

Ready: True

Restart Count: 0

Environment:

WORDPRESS_DB_PASSWORD: <set to the key 'db-password' in secret 'wordpress-secrets'> Optional: false

WORDPRESS_DB_HOST: 127.0.0.1

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-rtvbw (ro)

mysql:

Container ID: docker://05cbc1c93f22c75956d0a5cd0fd80a61726f6878c21ee8525515d8b201e02a0d

Image: mysql:5.7

Image ID: docker-pullable://mysql@sha256:de482b2b0fdbe5bb142462c07c5650a74e0daa31e501bc52448a2be10f384e6d

Port: 3306/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 18 Mar 2019 19:21:33 +0000

Ready: True

Restart Count: 0

Environment:

MYSQL_ROOT_PASSWORD: <set to the key 'db-password' in secret 'wordpress-secrets'> Optional: false

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-rtvbw (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-rtvbw:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-rtvbw

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 114s default-scheduler Successfully assigned default/wordpress-deployment-6958b7b48f-rpvzz to kubernetes-node-01

Normal Pulling 114s kubelet, kubernetes-node-01 pulling image "wordpress:4-php7.0"

Normal Pulled 101s kubelet, kubernetes-node-01 Successfully pulled image "wordpress:4-php7.0"

Normal Created 101s kubelet, kubernetes-node-01 Created container

Normal Started 101s kubelet, kubernetes-node-01 Started container

Normal Pulling 101s kubelet, kubernetes-node-01 pulling image "mysql:5.7"

Normal Pulled 90s kubelet, kubernetes-node-01 Successfully pulled image "mysql:5.7"

Normal Created 90s kubelet, kubernetes-node-01 Created container

Normal Started 89s kubelet, kubernetes-node-01 Started container

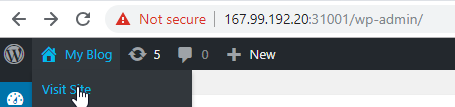

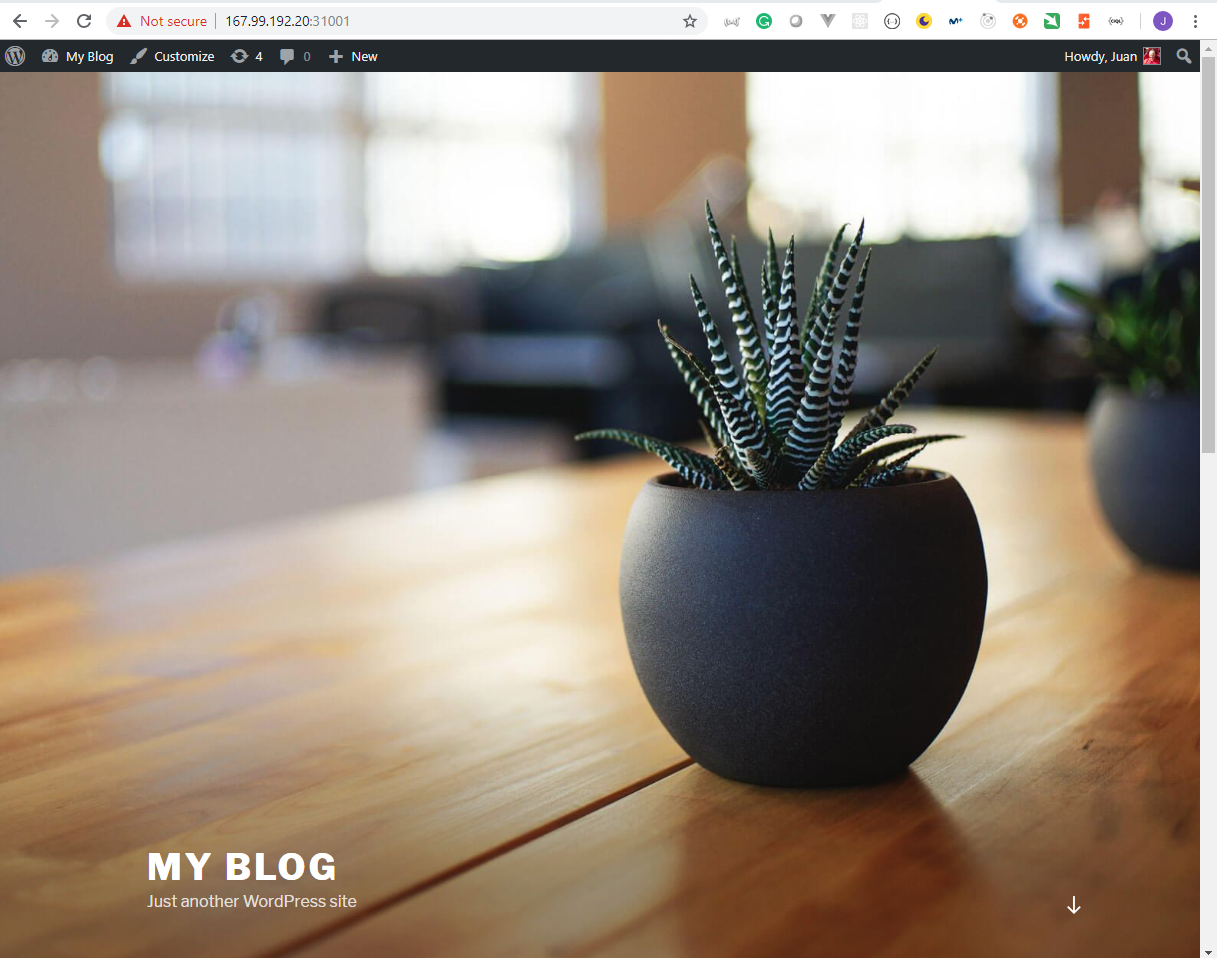

- We are going to use the `wordpress/wordpress-service.yml` document to create the service to access WordPress.

wordpress/wordpress-service.yml

apiVersion: v1

kind: Service

metadata:

name: wordpress-service

spec:

ports:

- port: 31001

nodePort: 31001

targetPort: http-port

protocol: TCP

selector:

app: wordpress

type: NodePort

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f wordpress/wordpress-service.yml

service/wordpress-service created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/wordpress-deployment-6958b7b48f-rpvzz 2/2 Running 0 6m26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

service/wordpress-service NodePort 10.110.18.178 <none> 31001:31001/TCP 17s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/wordpress-deployment 1/1 1 1 6m26s

NAME DESIRED CURRENT READY AGE

replicaset.apps/wordpress-deployment-6958b7b48f 1 1 1 6m26s

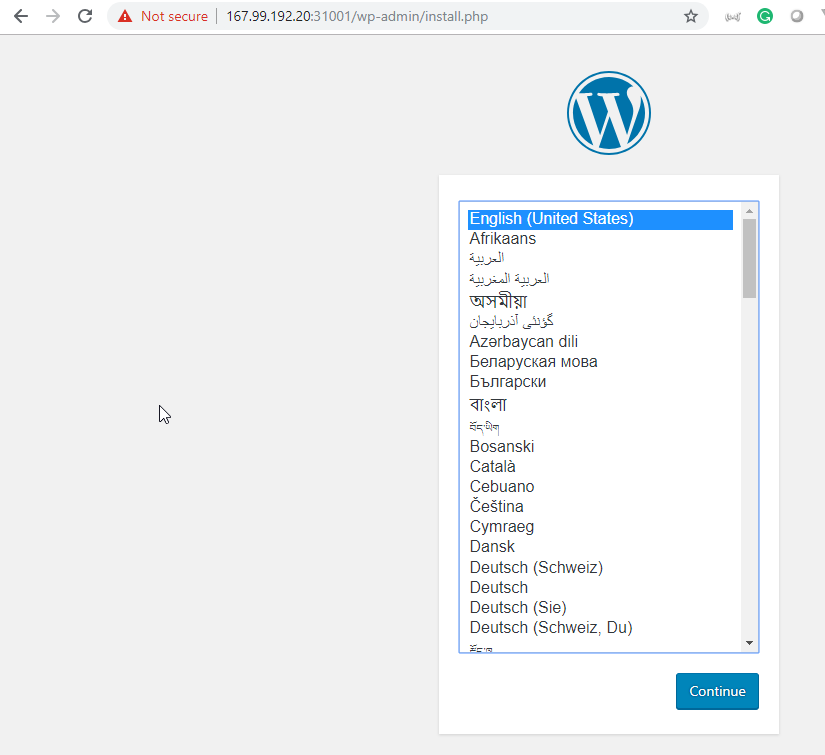
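With a `NodePort` service, the application is reachable on a node's IP address at the `nodePort`. The Python sketch below builds that URL and checks the port against the default NodePort range (30000-32767); the helper `node_port_url` is hypothetical, and the node IP is the one shown for `kubernetes-node-01` in the `kubectl describe` output above.

```python
# Default NodePort range of a Kubernetes cluster (the range is configurable).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def node_port_url(node_ip, node_port, scheme="http"):
    """Build the URL for a NodePort service exposed on the given node."""
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(
            f"nodePort {node_port} is outside the default range 30000-32767"
        )
    return f"{scheme}://{node_ip}:{node_port}"


if __name__ == "__main__":
    # nodePort 31001 comes from wordpress/wordpress-service.yml.
    print(node_port_url("167.99.202.93", 31001))
```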

- The information is not persisted. If the containers are killed everything is lost.

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get pods

NAME READY STATUS RESTARTS AGE

wordpress-deployment-6958b7b48f-rpvzz 2/2 Running 0 18m

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl delete pod wordpress-deployment-6958b7b48f-rpvzz

pod "wordpress-deployment-6958b7b48f-rpvzz" deleted

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- The pod is recreated:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/wordpress-deployment-6958b7b48f-92vsl 2/2 Running 0 54s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19d

service/wordpress-service NodePort 10.110.18.178 <none> 31001:31001/TCP 13m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/wordpress-deployment 1/1 1 1 20m

NAME DESIRED CURRENT READY AGE

replicaset.apps/wordpress-deployment-6958b7b48f 1 1 1 20m

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- WordPress starts again from scratch

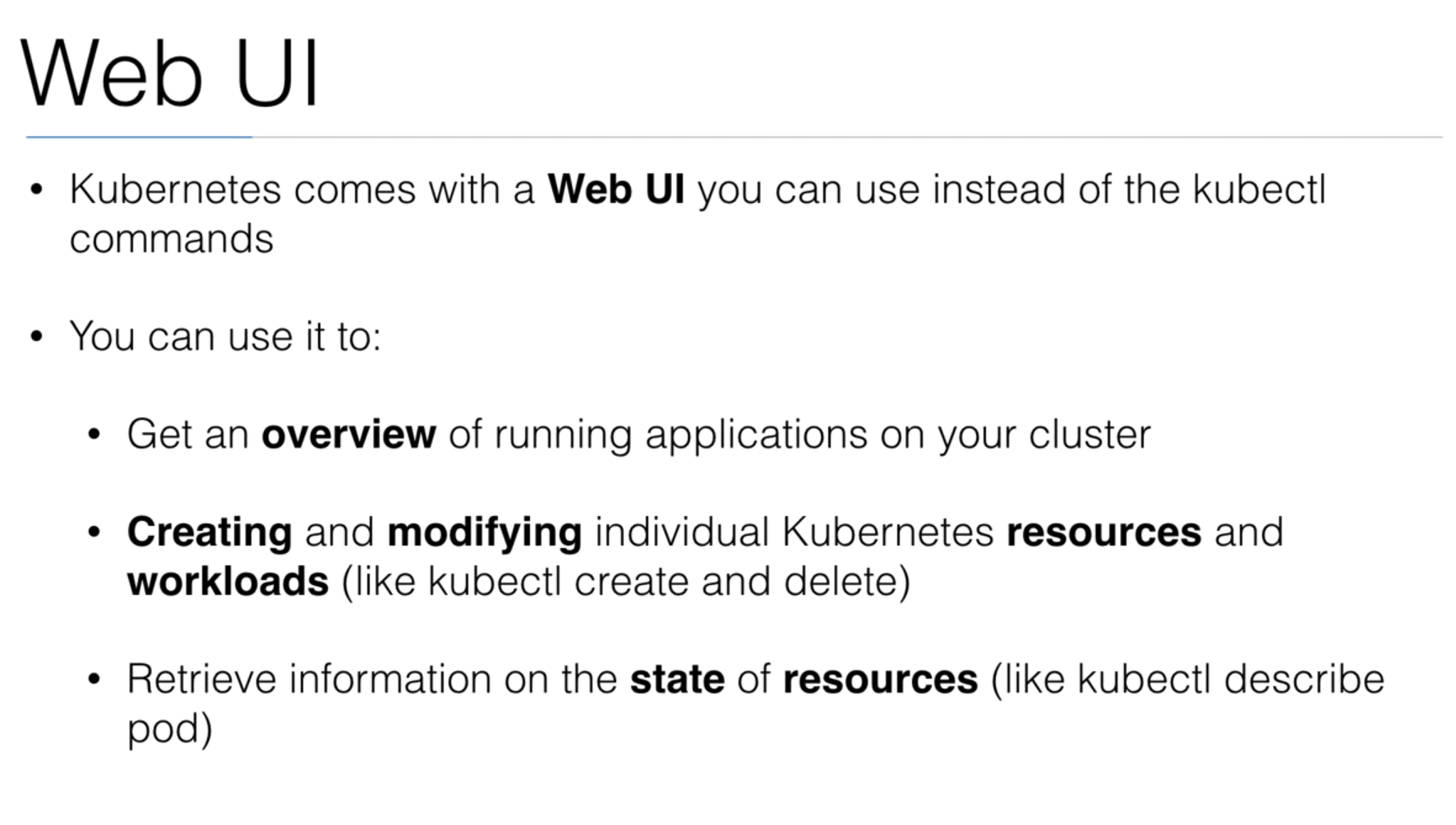

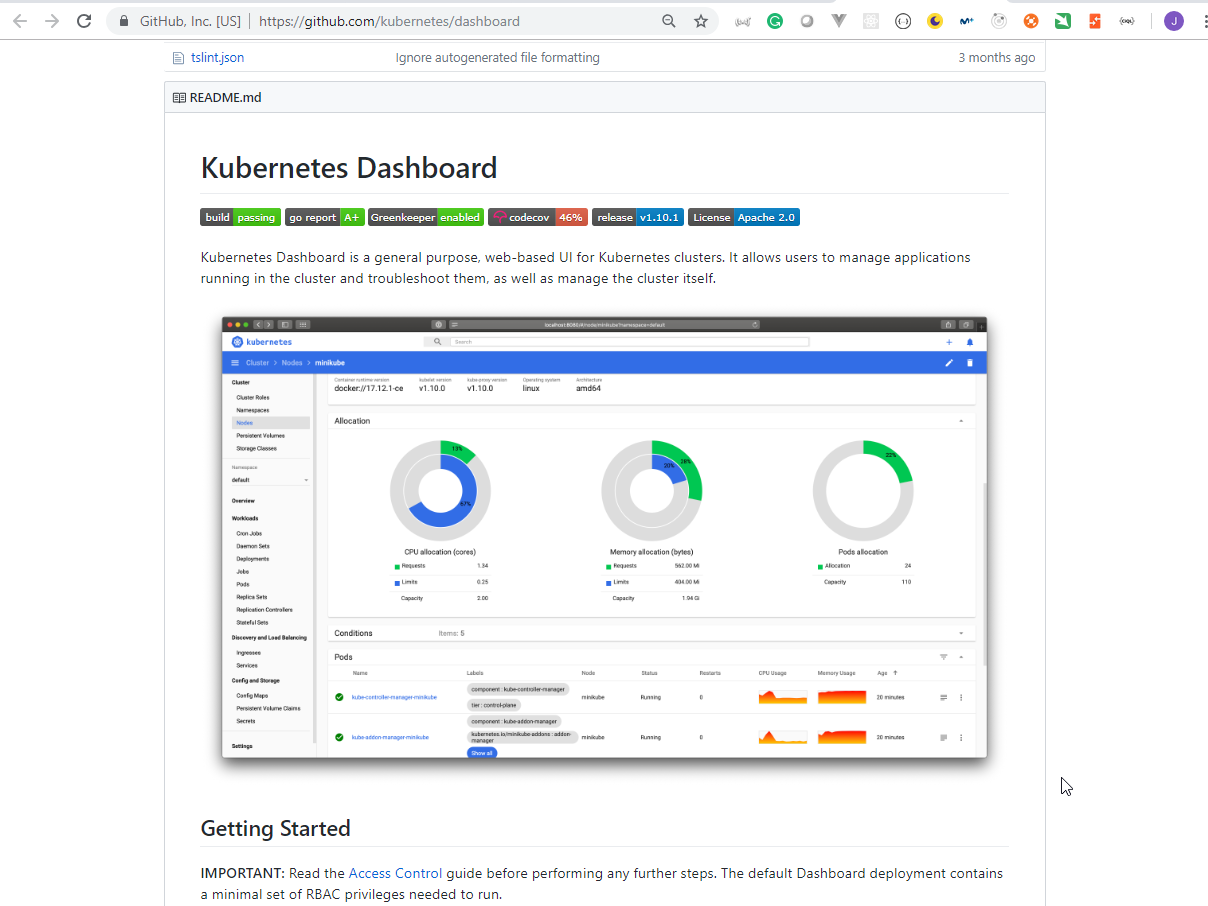

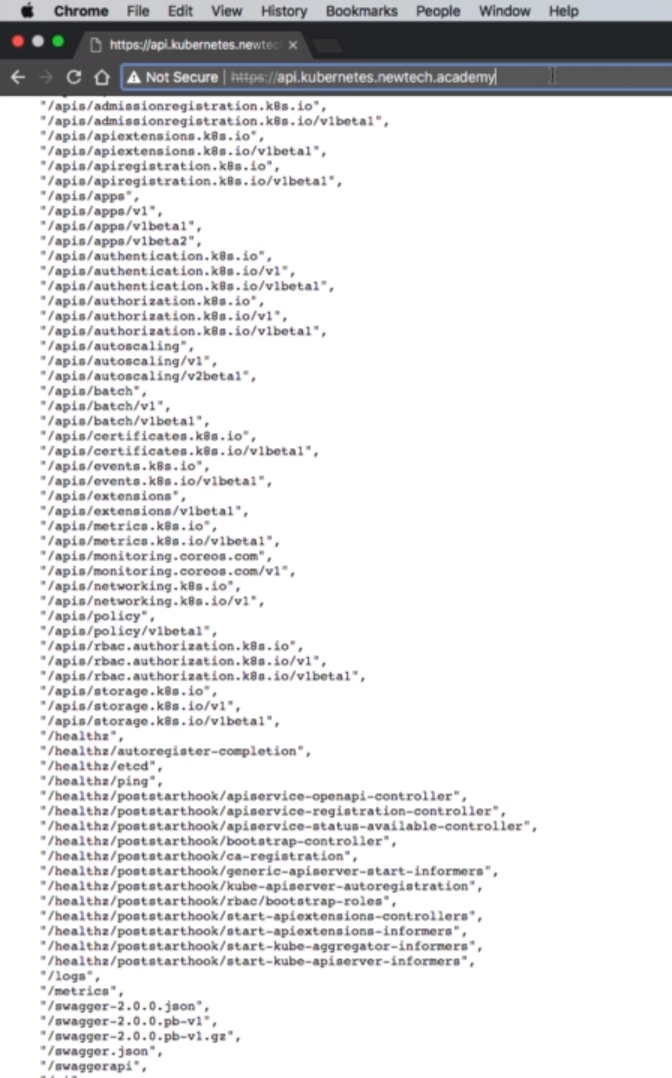

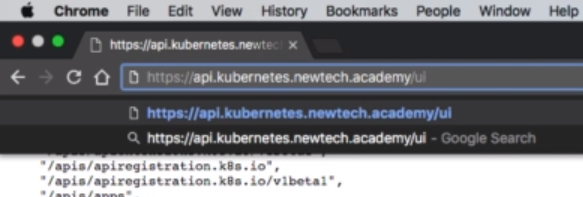

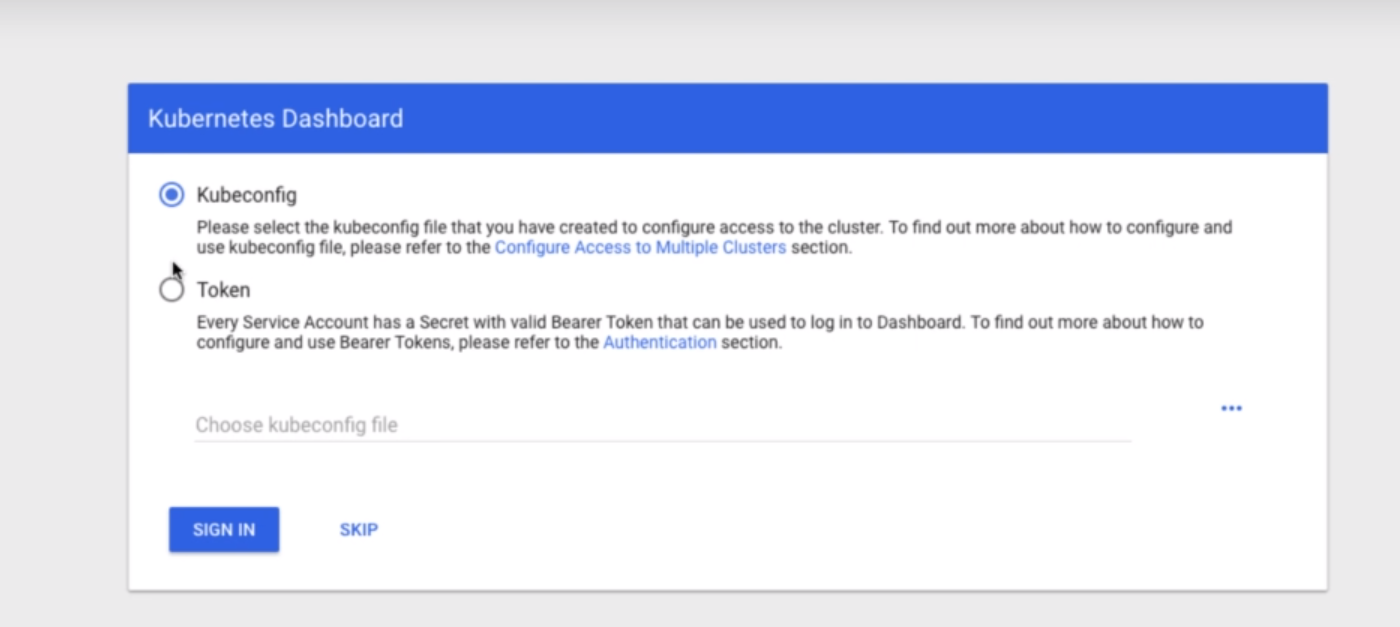
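The pod replacement seen in this demo can be pictured as a loop that compares the desired replica count with what is running. The following Python toy model is only a sketch of that idea under simplified assumptions, not how the Kubernetes controllers are implemented; `reconcile` and `pod_names` are invented names.

```python
from itertools import count


def reconcile(desired_replicas, running_pods, new_names):
    """Return the pod list after one reconcile pass.

    Missing pods are created with fresh names; surplus pods are dropped.
    """
    pods = list(running_pods)[:desired_replicas]
    while len(pods) < desired_replicas:
        pods.append(next(new_names))
    return pods


def pod_names(prefix):
    """Yield hypothetical pod names: prefix-0, prefix-1, ..."""
    for n in count():
        yield f"{prefix}-{n}"


if __name__ == "__main__":
    names = pod_names("wordpress-deployment")
    pods = reconcile(1, [], names)      # initial creation
    print("running:", pods)
    pods.remove(pods[0])                # simulates `kubectl delete pod`
    pods = reconcile(1, pods, names)    # the gap is filled with a new pod
    print("after delete:", pods)
```

The replacement pod has a new name and a fresh container filesystem, which is why the WordPress data from the deleted pod is gone.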

45. WebUI

- We can get more information on Kubernetes Dashboard

46. Demo: Web UI in Kops

- We can read the `dashboard/README.md` document to see how to set it up.

dashboard/README.md

# Setting up the dashboard

## Start dashboard

Create dashboard:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

## Create user

Create sample user (if using RBAC - on by default on new installs with kops / kubeadm):

kubectl create -f sample-user.yaml

## Get login token:

kubectl -n kube-system get secret | grep admin-user

kubectl -n kube-system describe secret admin-user-token-<id displayed by previous command>

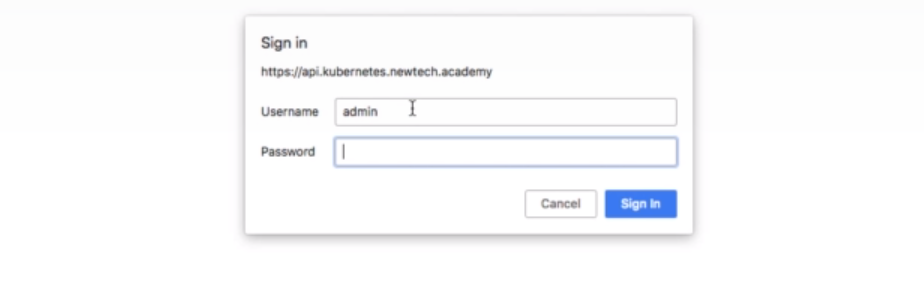

## Login to dashboard

Go to http://api.yourdomain.com:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/#!/login

Login: admin

Password: the password that is listed in ~/.kube/config (open file in editor and look for "password: ...")

Choose the token login option and enter the login token from the previous step

Create dashboard:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the `dashboard/sample-user.yaml` Service Account to create the admin user.

dashboard/sample-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kube-system

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f dashboard/sample-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

Get login token:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl -n kube-system get secret | grep admin-user

admin-user-token-8zffr kubernetes.io/service-account-token 3 103s

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl -n kube-system describe secret admin-user-token-8zffr

Name: admin-user-token-8zffr

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 82341687-4a07-11e9-abeb-babbda5ce12f

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTh6ZmZyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI4MjM0MTY4Ny00YTA3LTExZTktYWJlYi1iYWJiZGE1Y2UxMmYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.slMXCeJFSBO3zsyVFIuIbX7j4iL-EqyEaxeJUugluQI7YuL63o3DEAlzkzkUy3tAokiU-t5sxsc05MIgX5jcnOjy00FOywCXDj5BDmDEdYvYKJ71vfyQ28vIszN1EcJc8rg7H_HrgvIe4Y4IwgAyx2SzNVpeEj6ofF64Yqyq9A7oCy7GKVg4BJX6TXunjh2fKDdFCHJfULMzBsxdkSF6egdDY8D-kKi48QBqS30DgKQb5jmX2zMA1C3ocC_iEVbCodgC6aE4N-nDN8eR9ghgDq86WmbOq0OHUSiWR6UnhRhd-a9tDMtuXRiIgC7V_dyWMQgSbJlxA4ZvJ3jQ9I_hRA

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

We are going to use this token to log in instead of using a certificate.

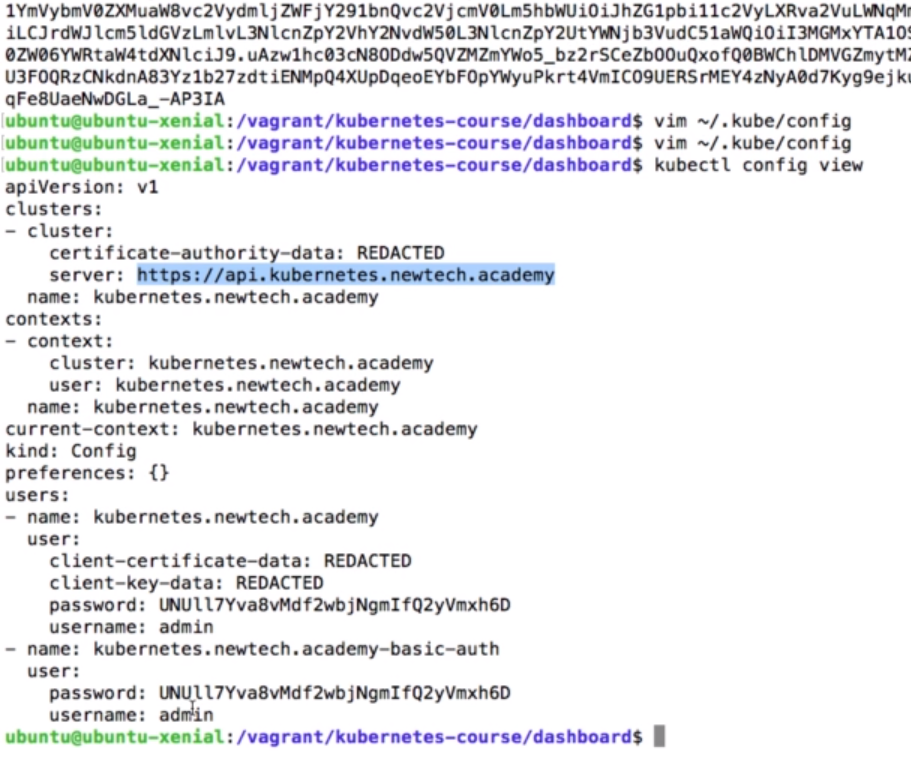
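A service account token like the one printed by `kubectl describe secret` is a JSON Web Token: three base64url segments separated by dots, with the claims in the middle segment. The Python sketch below decodes that middle segment without verifying the signature. To avoid handling a real credential, it builds an unsigned sample token; the sample claim and all helper names are assumptions made for this illustration.

```python
import base64
import json


def decode_jwt_payload(token):
    """Return the claims of a JWT as a dict (the signature is NOT verified)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped padding
    return json.loads(base64.urlsafe_b64decode(payload))


def _b64url(data):
    """base64url-encode bytes without padding, as JWT segments are."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_unsigned_sample_token(claims):
    """Build a sample token for demonstration only; it is not a valid login token."""
    header = _b64url(json.dumps({"alg": "none"}).encode("utf-8"))
    body = _b64url(json.dumps(claims).encode("utf-8"))
    return f"{header}.{body}.signature-placeholder"


if __name__ == "__main__":
    sample = make_unsigned_sample_token(
        {"sub": "system:serviceaccount:kube-system:admin-user"}
    )
    print(decode_jwt_payload(sample))
```

Because the claims are readable by anyone who holds the token, and the token grants `cluster-admin` rights in this setup, it should be treated like a password.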

In order to get the password we can use:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl config view

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://167.99.192.20:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

That only works on an AWS server; on this cluster the kubeconfig contains client certificate data instead of a password.

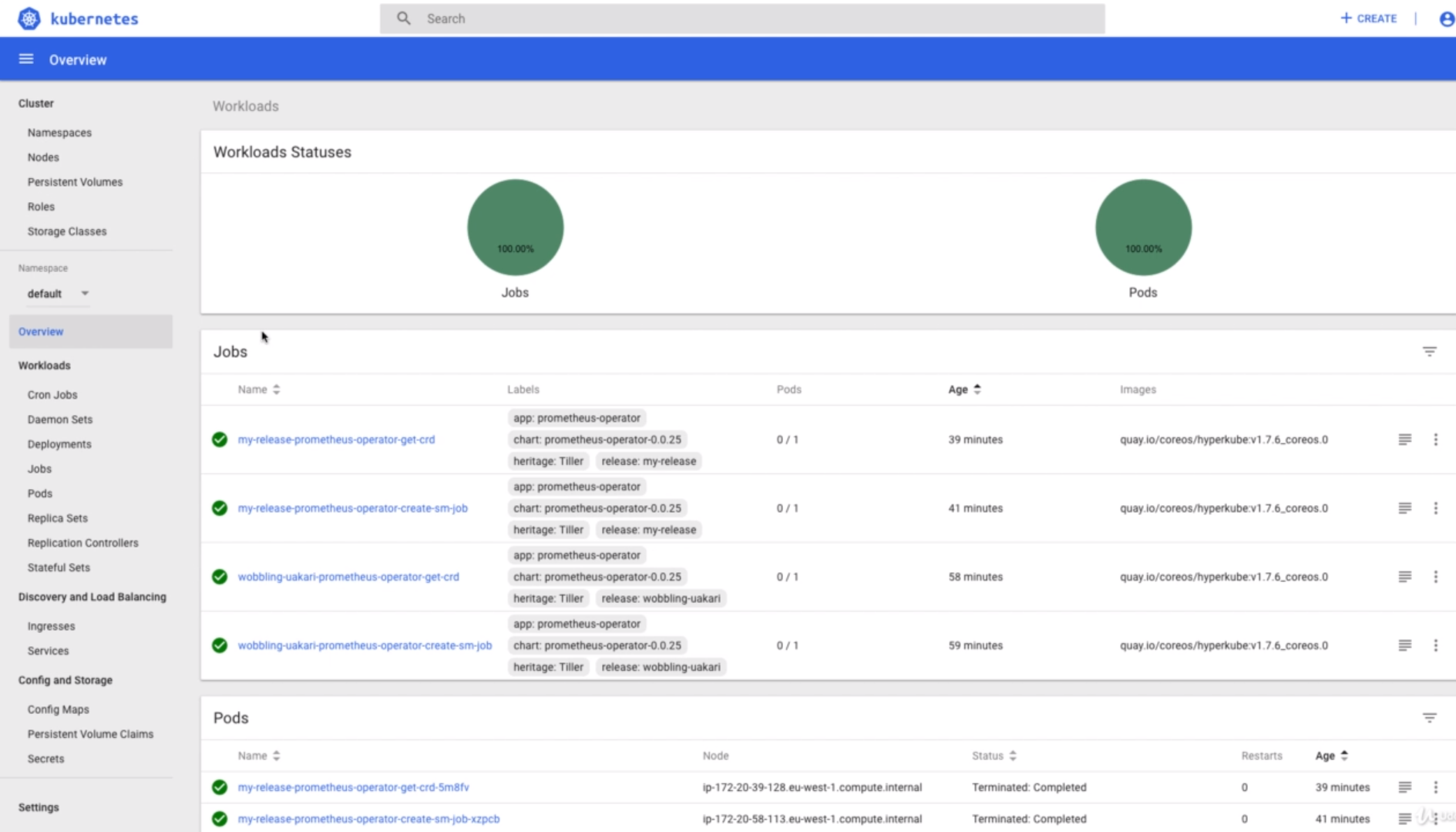

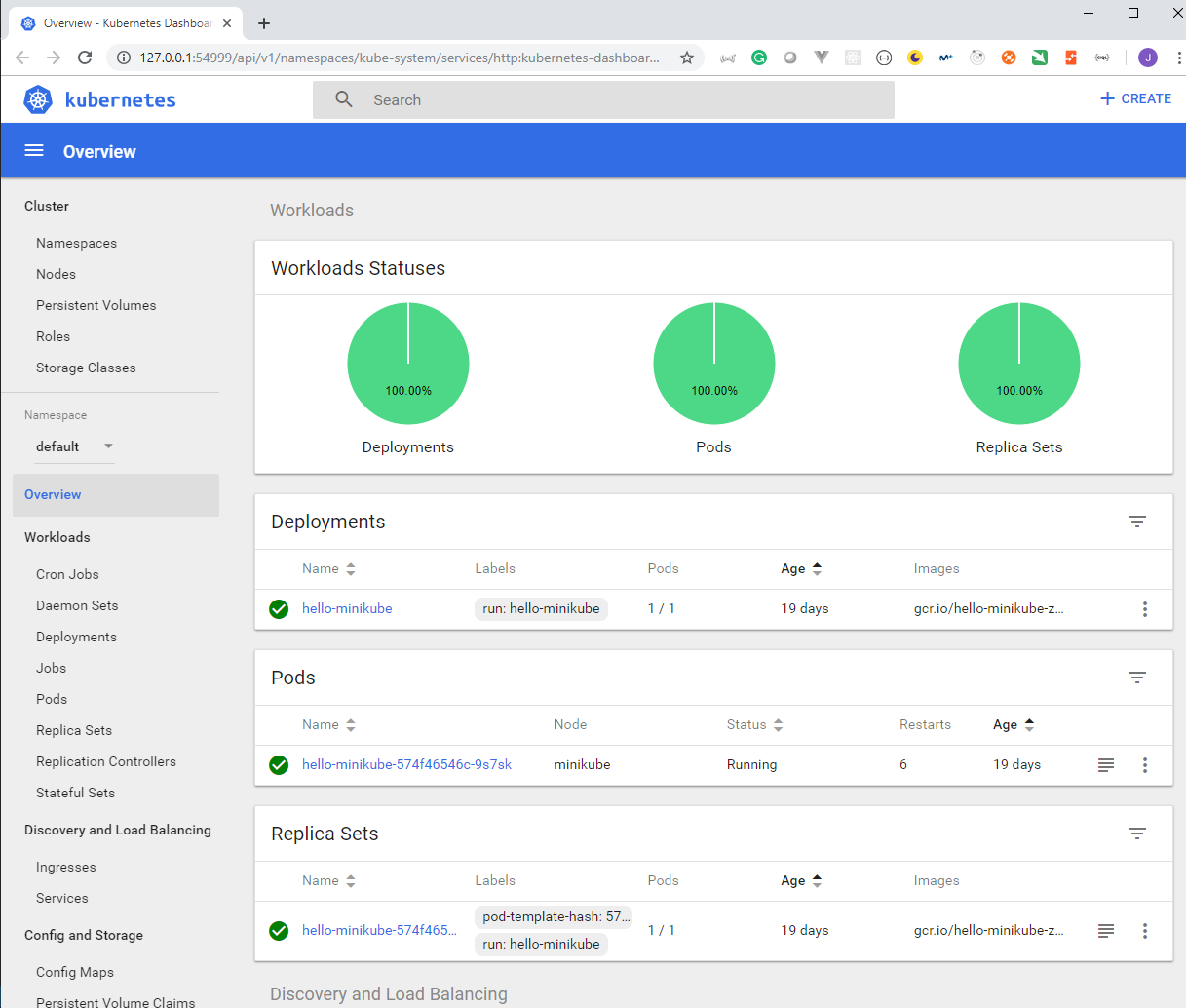

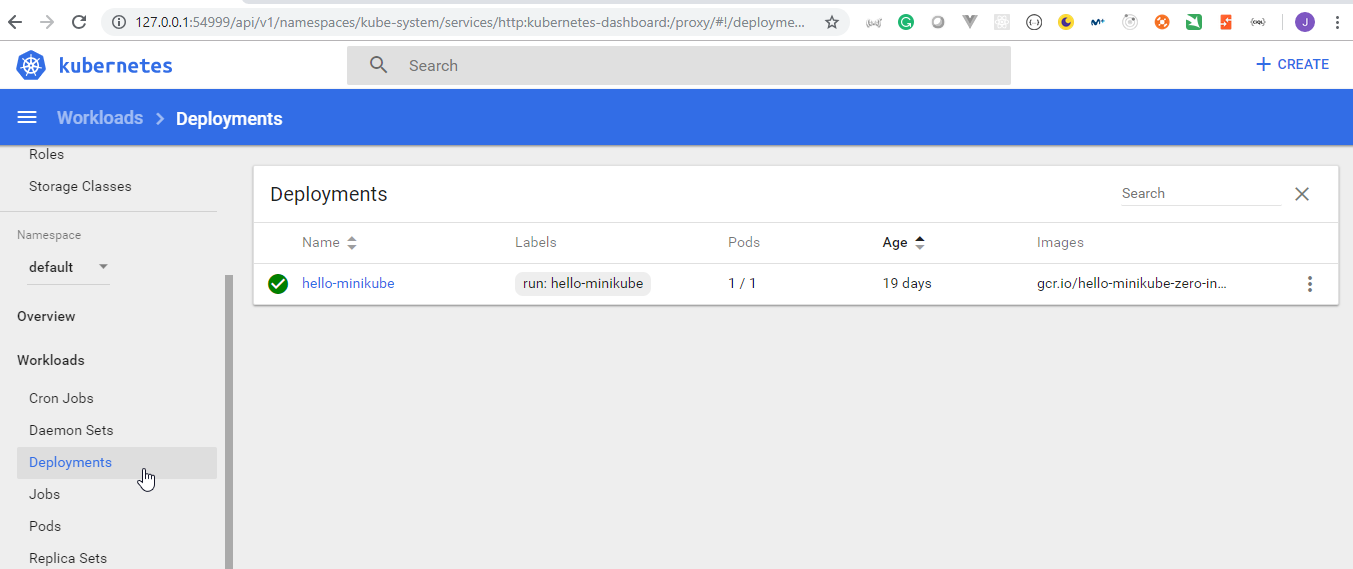

47. Demo: WebUI

- Start minikube if it is not running

PS C:\Windows\system32> minikube start

There is a newer version of minikube available (v0.35.0). Download it here:

https://github.com/kubernetes/minikube/releases/tag/v0.35.0

To disable this notification, run the following:

minikube config set WantUpdateNotification false

o minikube v0.34.1 on windows (amd64)

! Ignoring --vm-driver=virtualbox, as the existing "minikube" VM was created using the hyperv driver.

! To switch drivers, you may create a new VM using `minikube start -p <name> --vm-driver=virtualbox`

! Alternatively, you may delete the existing VM using `minikube delete -p minikube`

: Re-using the currently running hyperv VM for "minikube" ...

: Waiting for SSH access ...

- "minikube" IP address is 192.168.1.137

- Configuring Docker as the container runtime ...

- Preparing Kubernetes environment ...

- Pulling images required by Kubernetes v1.13.3 ...

: Relaunching Kubernetes v1.13.3 using kubeadm ...

! Error restarting cluster: running cmd: sudo kubeadm init phase certs all --config /var/lib/kubeadm.yaml: command failed: sudo kubeadm init phase certs all --config /var/lib/kubeadm.yaml

stdout: [certs] Using certificateDir folder "/var/lib/minikube/certs/"

[certs] Using existing ca certificate authority

stderr: error execution phase certs/apiserver: failed to write certificate "apiserver": failure loading apiserver certificate: failed to load certificate: the certificate is not valid yet

: Process exited with status 1

* Sorry that minikube crashed. If this was unexpected, we would love to hear from you:

- https://github.com/kubernetes/minikube/issues/new

PS C:\Windows\system32>

PS C:\Windows\system32> minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.1.137

PS C:\Windows\system32> kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready master 21d v1.13.3

- Ask for the URL of the dashboard

PS C:\Windows\system32> minikube dashboard --url

- Enabling dashboard ...

- Verifying dashboard health ...

- Launching proxy ...

- Verifying proxy health ...

http://127.0.0.1:54999/api/v1/namespaces/kube-system/services/http:kubernetes-dashboard:/proxy/

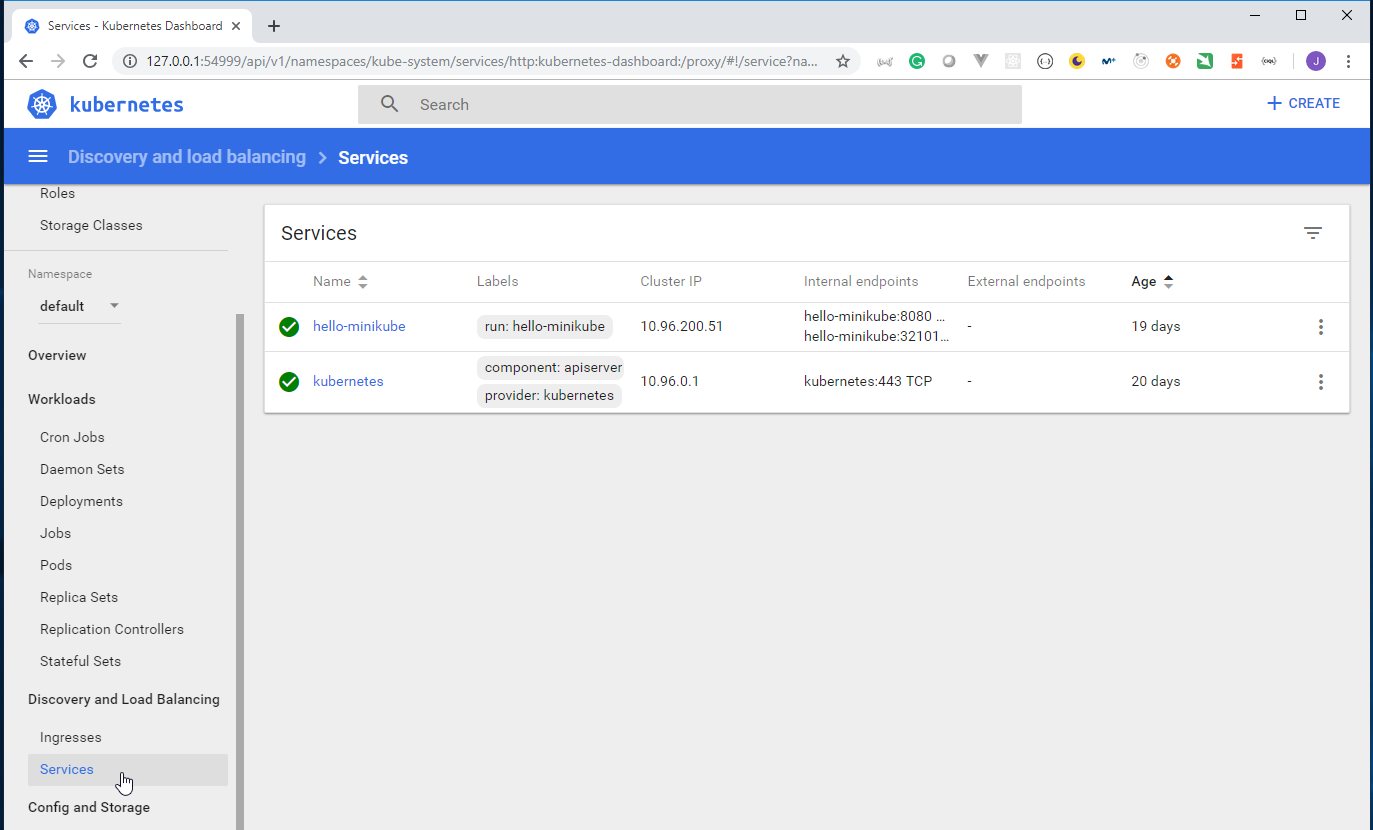

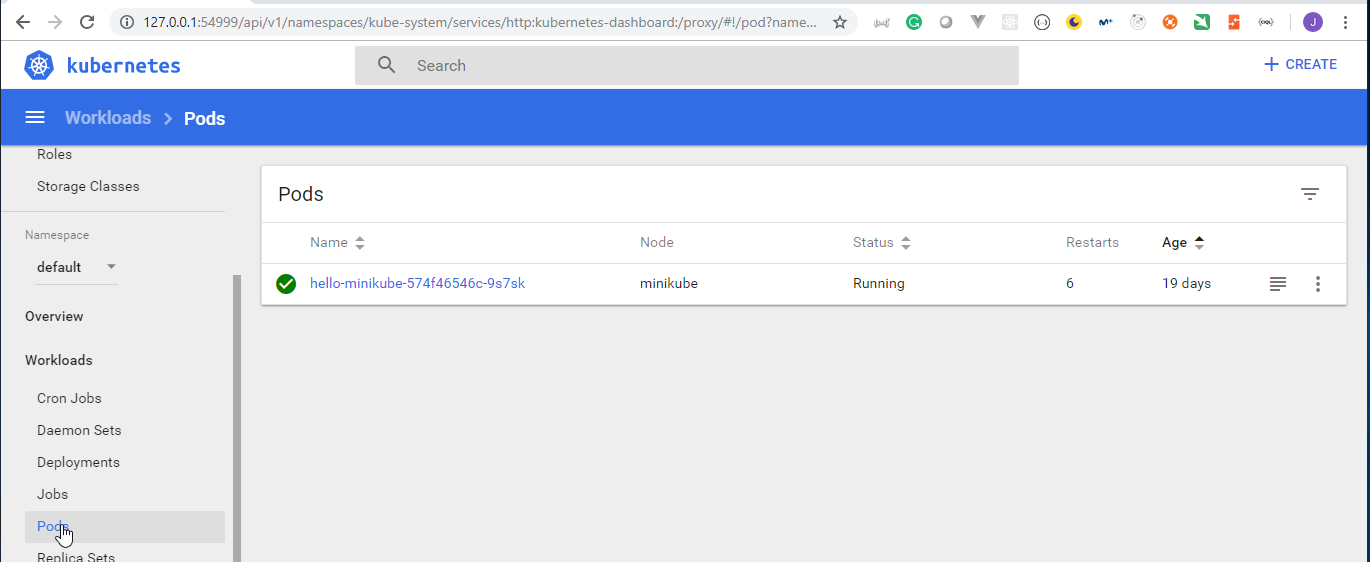

PS C:\Windows\system32> kubectl get all

NAME READY STATUS RESTARTS AGE

pod/hello-minikube-574f46546c-9s7sk 1/1 Running 6 21d

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/hello-minikube NodePort 10.96.200.51 <none> 8080:32101/TCP 21d

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

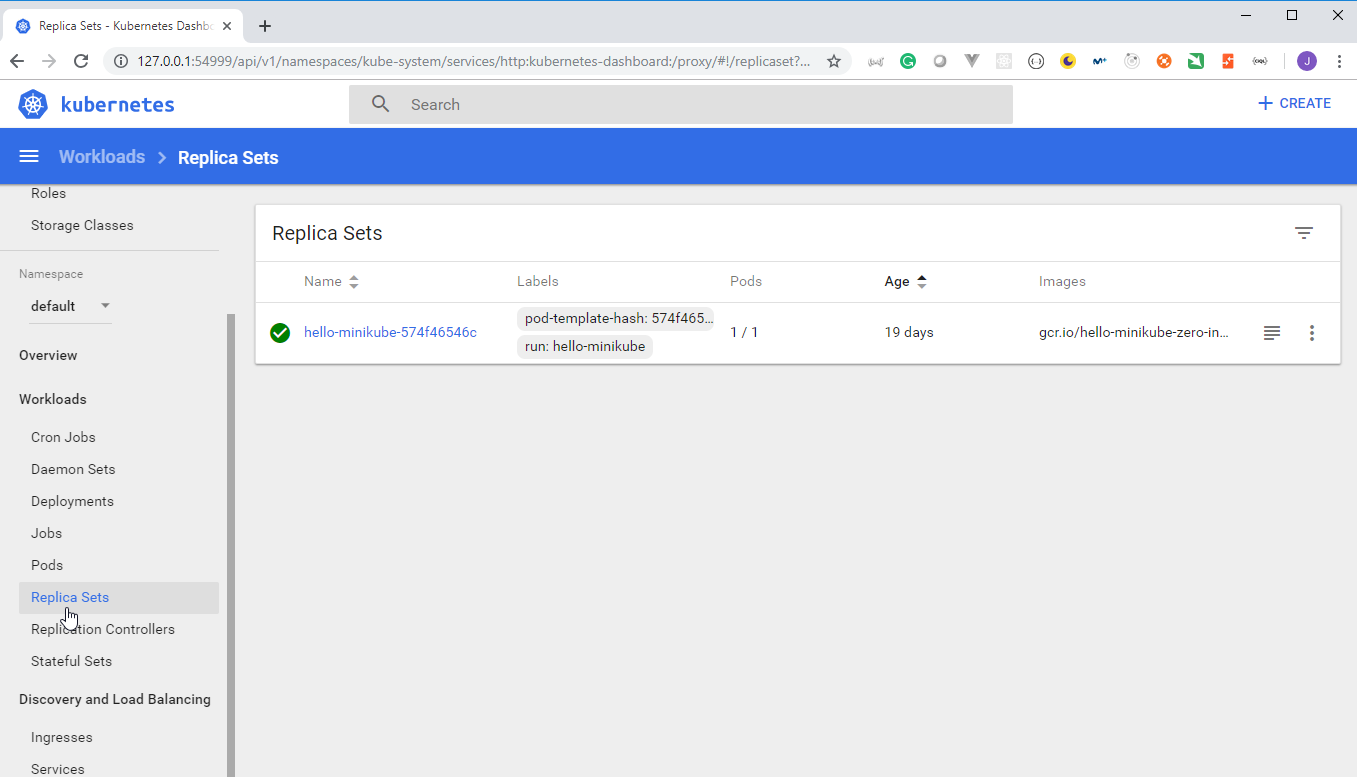

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/hello-minikube 1 1 1 1 21d

NAME DESIRED CURRENT READY AGE

replicaset.apps/hello-minikube-574f46546c 1 1 1 21d

Section: 3. Advanced Topics

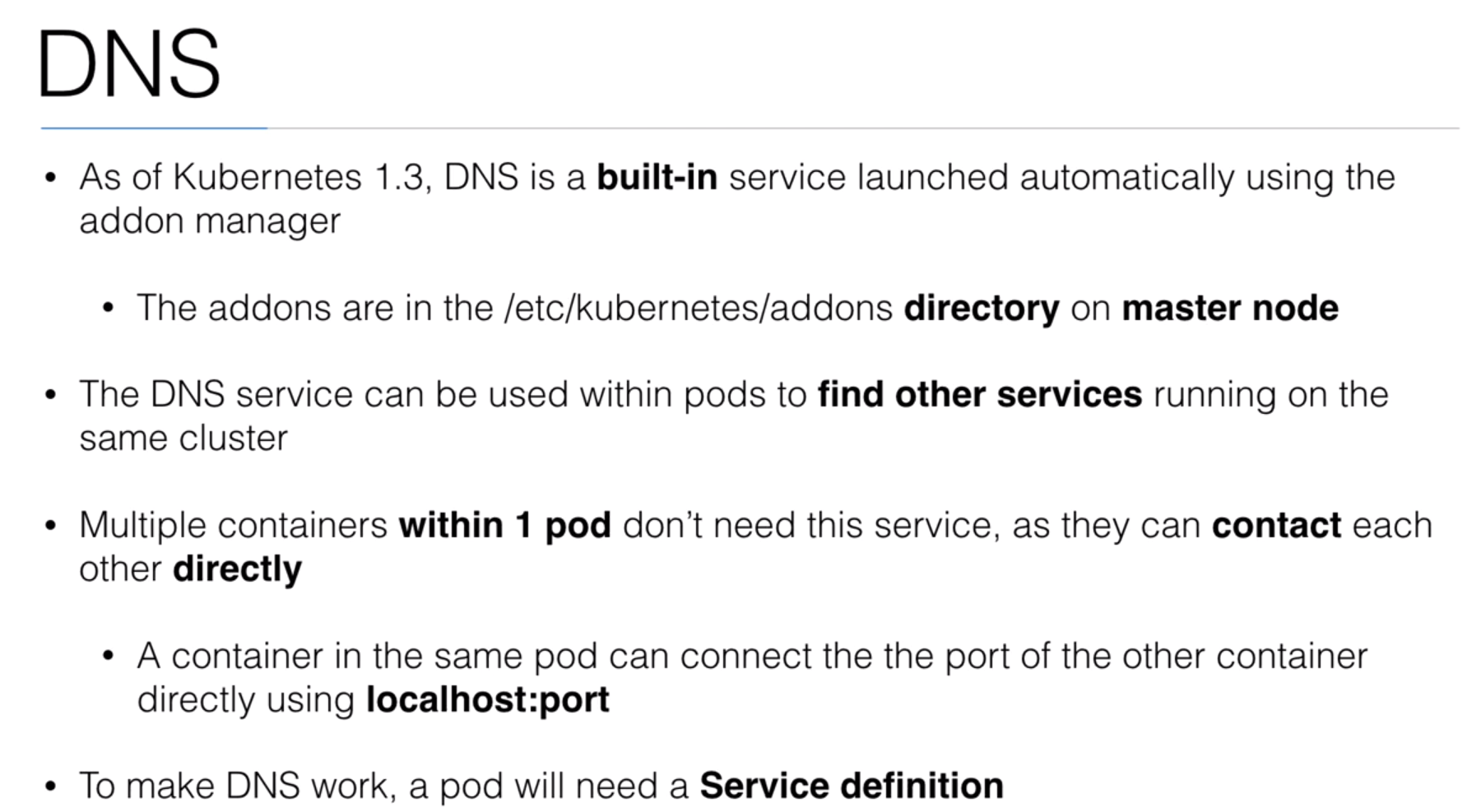

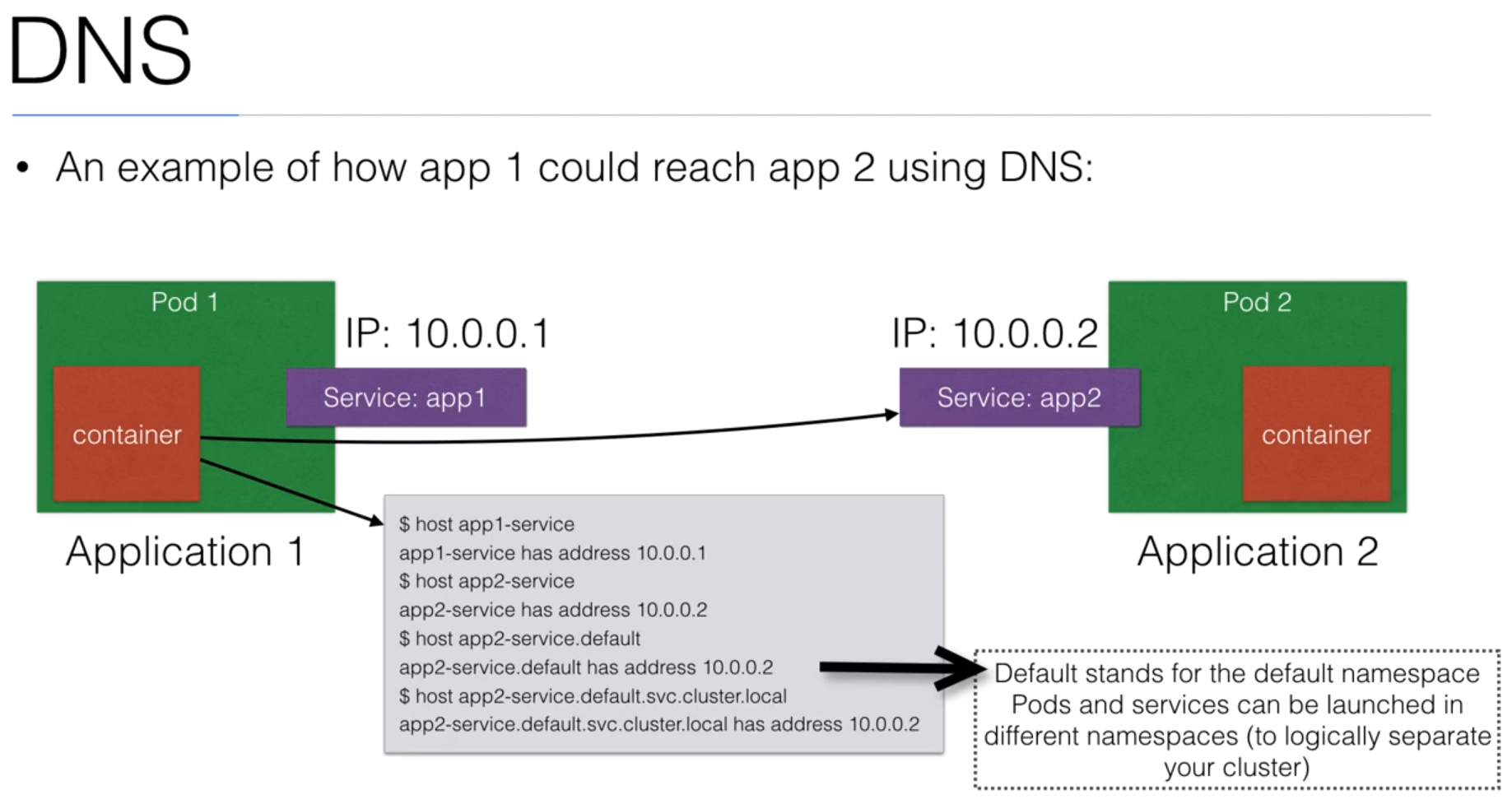

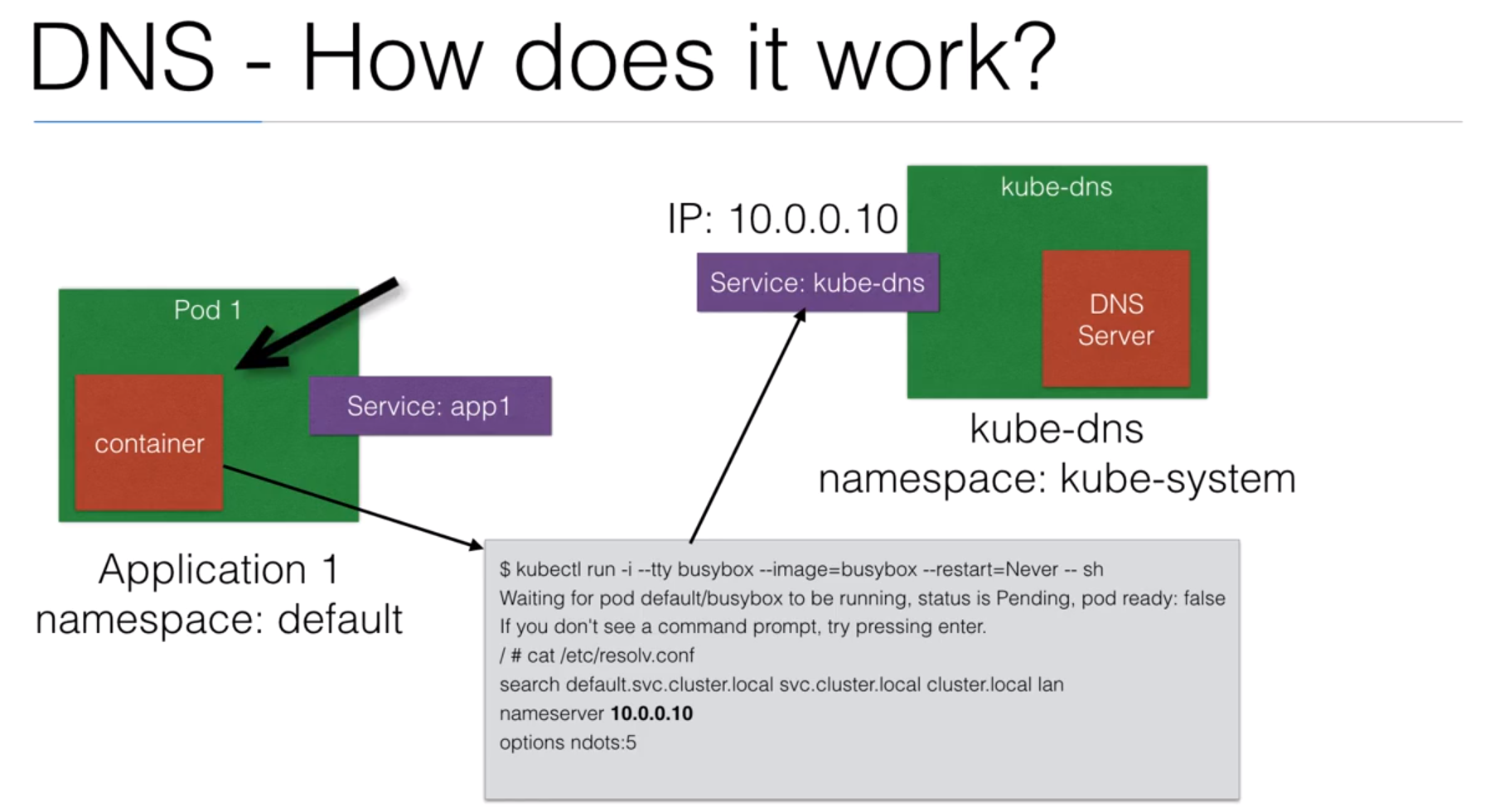

48. Service Discovery

49. Demo: Service Discovery

- We are going to use the `service-discovery/secrets.yml` document to define the secrets.

service-discovery/secrets.yml

apiVersion: v1

kind: Secret

metadata:

name: helloworld-secrets

type: Opaque

data:

username: aGVsbG93b3JsZA==

password: cGFzc3dvcmQ=

rootPassword: cm9vdHBhc3N3b3Jk

database: aGVsbG93b3JsZA==

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f service-discovery/secrets.yml

secret/helloworld-secrets created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the `service-discovery/database.yml` document to create the `mysql` database pod.

service-discovery/database.yml

apiVersion: v1

kind: Pod

metadata:

name: database

labels:

app: database

spec:

containers:

- name: mysql

image: mysql:5.7

ports:

- name: mysql-port

containerPort: 3306

env:

- name: MYSQL_ROOT_PASSWORD

valueFrom:

secretKeyRef:

name: helloworld-secrets

key: rootPassword

- name: MYSQL_USER

valueFrom:

secretKeyRef:

name: helloworld-secrets

key: username

- name: MYSQL_PASSWORD

valueFrom:

secretKeyRef:

name: helloworld-secrets

key: password

- name: MYSQL_DATABASE

valueFrom:

secretKeyRef:

name: helloworld-secrets

key: database

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f service-discovery/database.yml

pod/database created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the `service-discovery/database-service.yml` document to create the `service` to access the `mysql` database.

service-discovery/database-service.yml

apiVersion: v1

kind: Service

metadata:

name: database-service

spec:

ports:

- port: 3306

protocol: TCP

selector:

app: database

type: NodePort

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f service-discovery/database-service.yml

service/database-service created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the `service-discovery/helloworld-db.yml` document to create the `deployment` of the `helloworld-db` app.

service-discovery/helloworld-db.yml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: helloworld-deployment

spec:

replicas: 3

template:

metadata:

labels:

app: helloworld-db

spec:

containers:

- name: docker-nodejs-demo

image: peelmicro/docker-nodejs-demo

command: ["node", "index-db.js"]

ports:

- name: nodejs-port

containerPort: 3000

env:

- name: MYSQL_HOST

value: database-service

- name: MYSQL_USER

value: root

- name: MYSQL_PASSWORD

valueFrom:

secretKeyRef:

name: helloworld-secrets

key: rootPassword

- name: MYSQL_DATABASE

valueFrom:

secretKeyRef:

name: helloworld-secrets

key: database
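The deployment reaches MySQL through `MYSQL_HOST: database-service`, which works because cluster DNS resolves service names. The Python sketch below lists the DNS names under which a service is normally reachable; it assumes the `default` namespace (as shown in the `kubectl describe` output earlier) and the common default cluster domain `cluster.local`, which this walkthrough does not confirm. `service_dns_names` is a hypothetical helper.

```python
def service_dns_names(service, namespace="default", cluster_domain="cluster.local"):
    """Return the DNS names for a service, from shortest to fully qualified.

    The short name only resolves from pods in the same namespace.
    """
    return [
        service,
        f"{service}.{namespace}",
        f"{service}.{namespace}.svc",
        f"{service}.{namespace}.svc.{cluster_domain}",
    ]


if __name__ == "__main__":
    for name in service_dns_names("database-service"):
        print(name)
```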

- It is going to execute the `index-db.js` script when it starts and get its settings from environment variables, some of which are filled from secrets.

index-db.js

var express = require("express");

var app = express();

var mysql = require("mysql");

var con = mysql.createConnection({

host: process.env.MYSQL_HOST,

user: process.env.MYSQL_USER,

password: process.env.MYSQL_PASSWORD,

database: process.env.MYSQL_DATABASE

});

// mysql code

con.connect(function(err) {

if (err) {

console.log("Error connecting to db: ", err);

return;

}

console.log("Connection to db established");

con.query(

"CREATE TABLE IF NOT EXISTS visits (id INT NOT NULL PRIMARY KEY AUTO_INCREMENT, ts BIGINT)",

function(err) {

if (err) throw err;

}

);

});

// Request handling

app.get("/", function(req, res) {

// insert a row for this visit and report its id

con.query("INSERT INTO visits (ts) values (?)", Date.now(), function(

err,

dbRes

) {

if (err) throw err;

res.send("Hello World! You are visitor number " + dbRes.insertId);

});

});

// server

var server = app.listen(3000, function() {

var host = server.address().address;

var port = server.address().port;

console.log("Example app listening at http://%s:%s", host, port);

});
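The counter logic of index-db.js can be sketched in Python as follows. This is only an illustration: it assumes SQLite (from the standard library) as a stand-in for MySQL so that it runs without a server, and the names `open_db` and `record_visit` are hypothetical, not part of the course code.

```python
import sqlite3
import time


def open_db(path=":memory:"):
    """Open the database and create the visits table if it is missing."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS visits "
        "(id INTEGER PRIMARY KEY AUTOINCREMENT, ts INTEGER)"
    )
    return conn


def record_visit(conn):
    """Insert one row per visit and return its auto-incremented id."""
    ts = int(time.time() * 1000)  # milliseconds, like Date.now() in the JS code
    cursor = conn.execute("INSERT INTO visits (ts) VALUES (?)", (ts,))
    conn.commit()
    return cursor.lastrowid


conn = open_db()
first = record_visit(conn)
second = record_visit(conn)
print("You are visitor number", second)
```

Because the id is generated by the database, every replica of the app that talks to the same database sees one shared counter.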

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f service-discovery/helloworld-db.yml

deployment.extensions/helloworld-deployment created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the service-discovery/helloworld-db-service.yml document to create the service that gives access to the helloworld-db app.

service-discovery/helloworld-db-service.yml

apiVersion: v1
kind: Service
metadata:
  name: helloworld-db-service
spec:
  ports:
    - port: 3000
      protocol: TCP
  selector:
    app: helloworld-db
  type: NodePort

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f service-discovery/helloworld-db-service.yml

service/helloworld-db-service created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- Ensure everything is running

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/database 1/1 Running 0 10h

pod/helloworld-deployment-86bff7779d-8vszz 1/1 Running 0 40s

pod/helloworld-deployment-86bff7779d-cd4qc 1/1 Running 0 40s

pod/helloworld-deployment-86bff7779d-tsphf 1/1 Running 0 40s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/database-service NodePort 10.97.3.159 <none> 3306:32664/TCP 10h

service/helloworld-db-service NodePort 10.102.216.111 <none> 3000:30032/TCP 22s

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/helloworld-deployment 3/3 3 3 40s

NAME DESIRED CURRENT READY AGE

replicaset.apps/helloworld-deployment-86bff7779d 3 3 3 40s

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- Ensure the connection with the database has been established

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl logs pod/helloworld-deployment-86bff7779d-8vszz

Example app listening at http://:::3000

Connection to db established

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

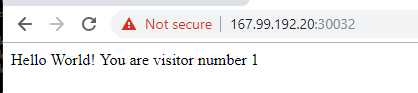

- Ensure that every time we hit the web app the counter is increased

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl http://167.99.192.20:30032/

Hello World! You are visitor number 2ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl http://10.102.216.111:3000

Hello World! You are visitor number 3ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl http://167.99.192.20:30032/

Hello World! You are visitor number 4ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl http://10.102.216.111:3000

Hello World! You are visitor number 5ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$
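The two kinds of URL used above (cluster IP with the service port, node IP with the node port) can be derived from the PORT(S) column of `kubectl get all`. The following is a small sketch; `parse_port_column` and `access_urls` are hypothetical helper names, and 167.99.192.20 is taken to be the node's external IP, as in the curl commands above.

```python
def parse_port_column(value):
    """Split a PORT(S) entry such as '3000:30032/TCP' into its parts."""
    ports, _, protocol = value.partition("/")
    service_port, _, node_port = ports.partition(":")
    return {
        "port": int(service_port),
        "node_port": int(node_port) if node_port else None,
        "protocol": protocol or "TCP",
    }


def access_urls(cluster_ip, node_ip, port_column):
    """Return the in-cluster URL and, for NodePort services, the node URL."""
    info = parse_port_column(port_column)
    urls = [f"http://{cluster_ip}:{info['port']}"]
    if info["node_port"] is not None:
        urls.append(f"http://{node_ip}:{info['node_port']}")
    return urls


print(access_urls("10.102.216.111", "167.99.192.20", "3000:30032/TCP"))
```

A ClusterIP service such as service/kubernetes (443/TCP) has no node port, so only the in-cluster URL is returned for it.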

- We can query the database using the following command: (password=rootpassword)

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl exec database -i -t -- mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 11

Server version: 5.7.25 MySQL Community Server (GPL)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| helloworld |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> use helloworld;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+----------------------+

| Tables_in_helloworld |

+----------------------+

| visits |

+----------------------+

1 row in set (0.00 sec)

mysql> select * from visits;

+----+---------------+

| id | ts |

+----+---------------+

| 1 | 1553104232682 |

| 2 | 1553104450359 |

| 3 | 1553104516517 |

| 4 | 1553104580779 |

| 5 | 1553104584555 |

+----+---------------+

5 rows in set (0.00 sec)

mysql> \q

Bye

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We can test service discovery from inside the cluster by starting a temporary busybox pod and using its shell:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl run -i --tty busybox --image=busybox --restart=Never -- sh

If you don't see a command prompt, try pressing enter.

/ # nslookup helloworld-db-server

Server: 10.96.0.10

Address: 10.96.0.10:53

** server can't find helloworld-db-server.default.svc.cluster.local: NXDOMAIN

*** Can't find helloworld-db-server.svc.cluster.local: No answer

*** Can't find helloworld-db-server.cluster.local: No answer

*** Can't find helloworld-db-server.default.svc.cluster.local: No answer

*** Can't find helloworld-db-server.svc.cluster.local: No answer

*** Can't find helloworld-db-server.cluster.local: No answer

/ # nslookup database-service

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: database-service.default.svc.cluster.local

Address: 10.97.3.159

*** Can't find database-service.svc.cluster.local: No answer

*** Can't find database-service.cluster.local: No answer

*** Can't find database-service.default.svc.cluster.local: No answer

*** Can't find database-service.svc.cluster.local: No answer

*** Can't find database-service.cluster.local: No answer

/ # nslookup database-db-service

Server: 10.96.0.10

Address: 10.96.0.10:53

** server can't find database-db-service.default.svc.cluster.local: NXDOMAIN

*** Can't find database-db-service.svc.cluster.local: No answer

*** Can't find database-db-service.cluster.local: No answer

*** Can't find database-db-service.default.svc.cluster.local: No answer

*** Can't find database-db-service.svc.cluster.local: No answer

*** Can't find database-db-service.cluster.local: No answer

/ # telnet helloworld-db-service 3000

GET /

HTTP/1.1 200 OK

X-Powered-By: Express

Content-Type: text/html; charset=utf-8

Content-Length: 37

ETag: W/"25-rn5uqEDMXsmWIlIUH7iA+ZOGwsg"

Date: Wed, 20 Mar 2019 18:39:37 GMT

Connection: close

Hello World! You are visitor number 6Connection closed by foreign host

/ # pod default/busybox terminated (Error)
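The nslookup output above shows the short name being tried against the suffixes default.svc.cluster.local, svc.cluster.local and cluster.local. The sketch below mimics that expansion in a simplified way; real resolvers also apply the ndots option and may try the bare name, which is ignored here. The function names and the `records` table are illustrative assumptions, filled with the cluster IPs shown earlier.

```python
def candidate_names(name, namespace="default", cluster_domain="cluster.local"):
    """Expand a short service name with the search suffixes seen in the pod."""
    if name.endswith("."):
        return [name]  # already fully qualified, no expansion
    search = [
        f"{namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{suffix}" for suffix in search]


def resolve(name, records, namespace="default"):
    """Return (fqdn, address) for the first candidate found, else None."""
    for fqdn in candidate_names(name, namespace):
        if fqdn in records:
            return fqdn, records[fqdn]
    return None


# Hypothetical record table built from the services created in this demo.
records = {
    "database-service.default.svc.cluster.local": "10.97.3.159",
    "helloworld-db-service.default.svc.cluster.local": "10.102.216.111",
}

print(resolve("database-service", records))
print(resolve("helloworld-db-server", records))
```

This also explains the NXDOMAIN answers in the session: helloworld-db-server and database-db-service are not names of services that were created, whereas database-service and helloworld-db-service are.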

- Delete the busybox pod just created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl delete pod busybox

pod "busybox" deleted

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl delete deployment.apps/helloworld-deployment

deployment.apps "helloworld-deployment" deleted

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/database 1/1 Running 0 23h

pod/helloworld-deployment-86bff7779d-8vszz 1/1 Terminating 1 13h

pod/helloworld-deployment-86bff7779d-cd4qc 1/1 Terminating 1 13h

pod/helloworld-deployment-86bff7779d-tsphf 1/1 Terminating 1 13h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/database-service NodePort 10.97.3.159 <none> 3306:32664/TCP 23h

service/helloworld-db-service NodePort 10.102.216.111 <none> 3000:30032/TCP 13h

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl delete service/helloworld-db-service

service "helloworld-db-service" deleted

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl delete service/database-service

service "database-service" deleted

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/database 1/1 Running 0 23h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl delete pod/database

pod "database" deleted

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

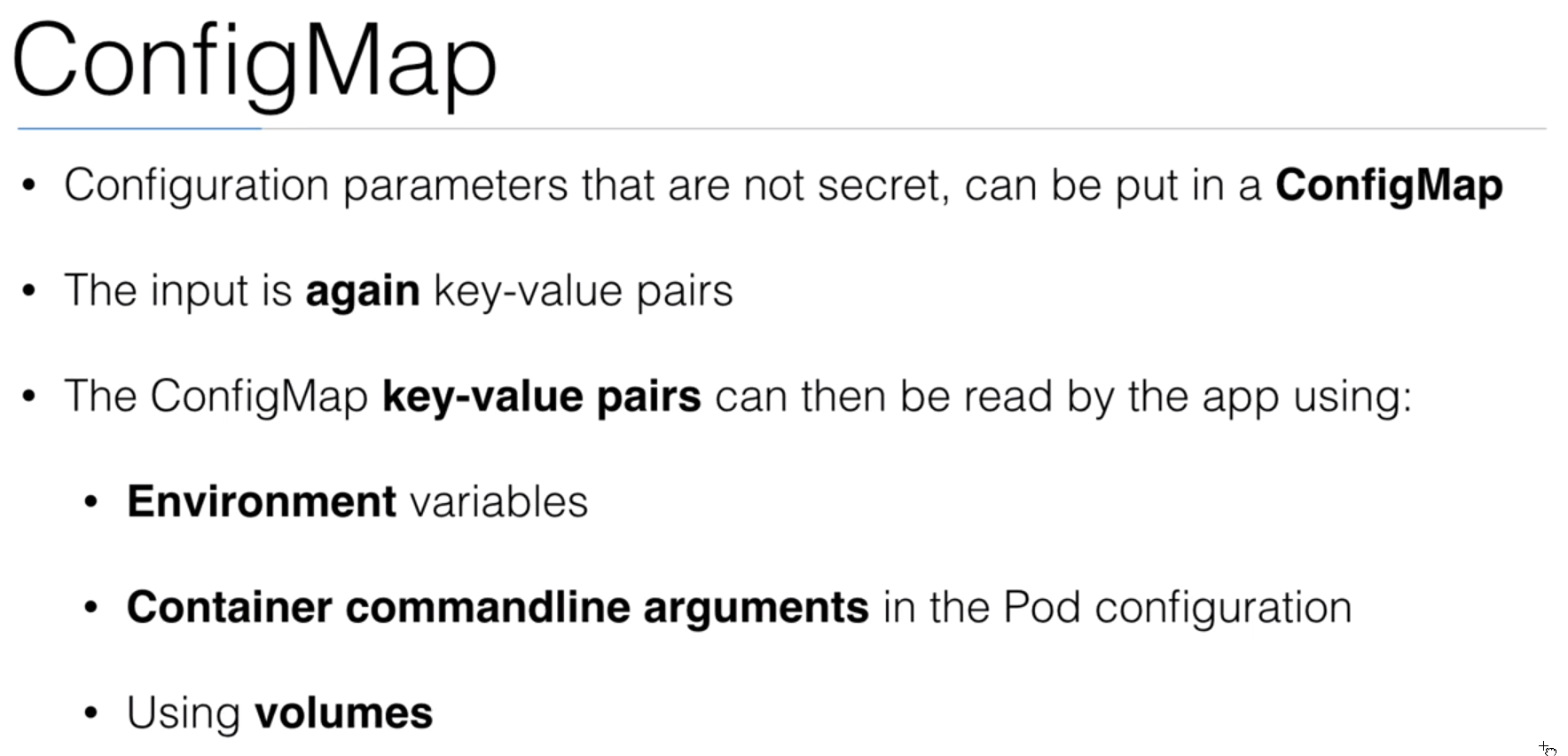

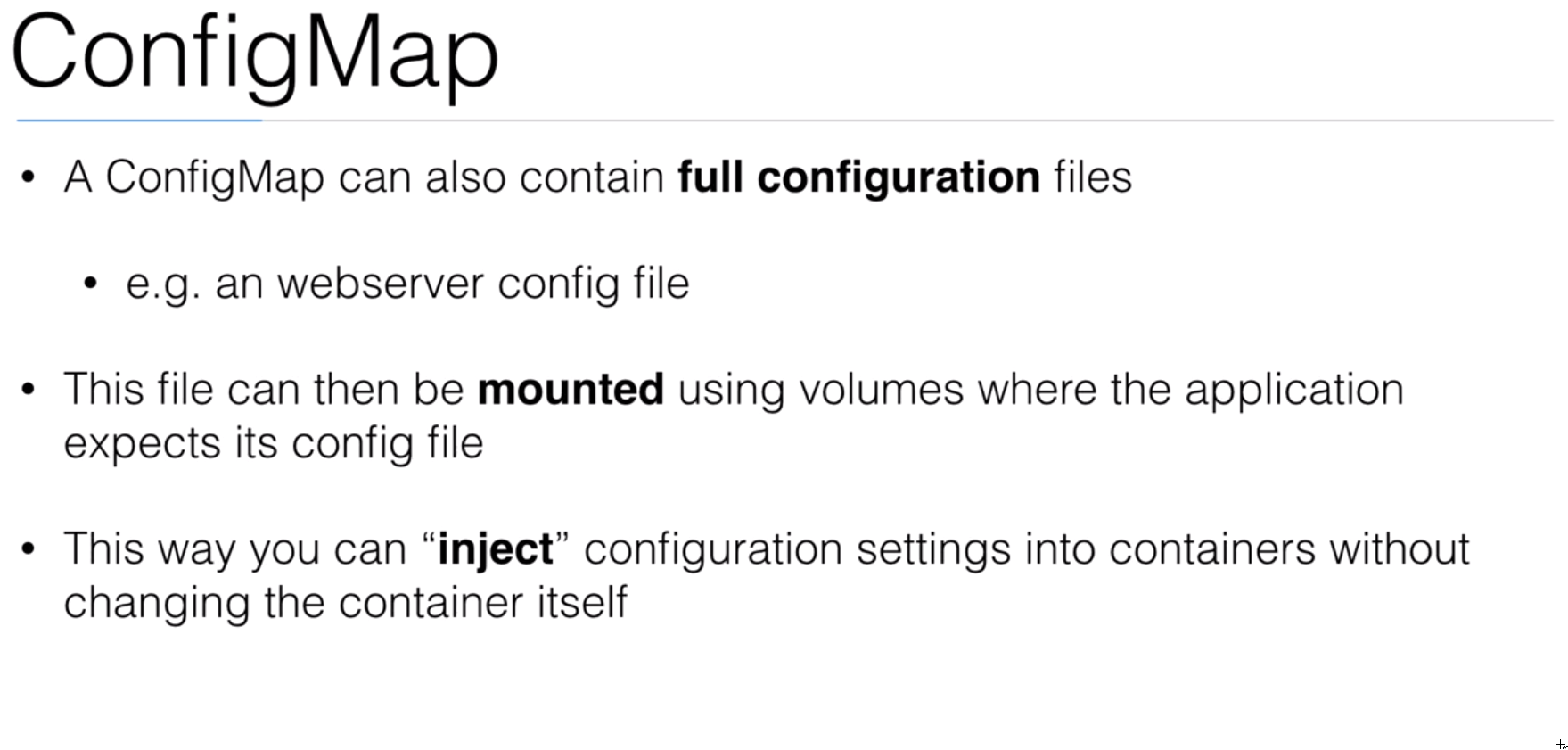

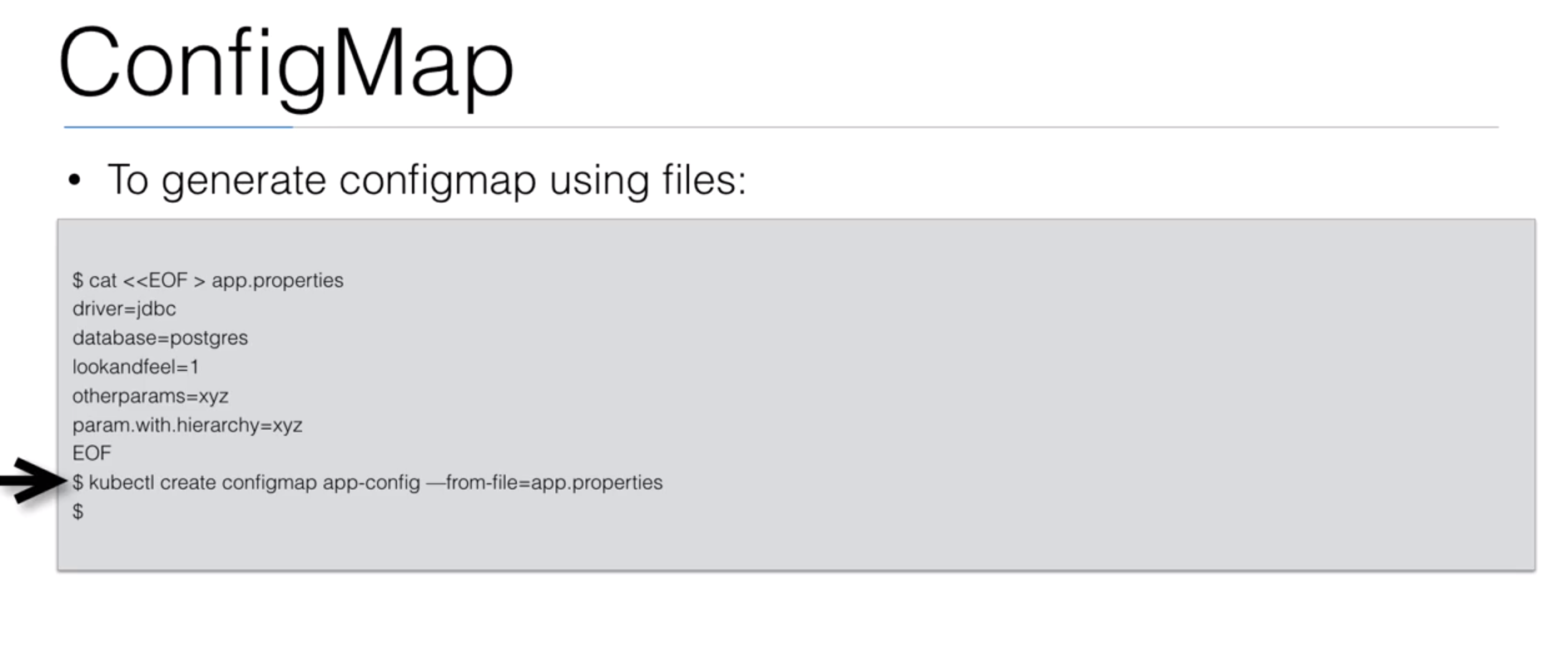

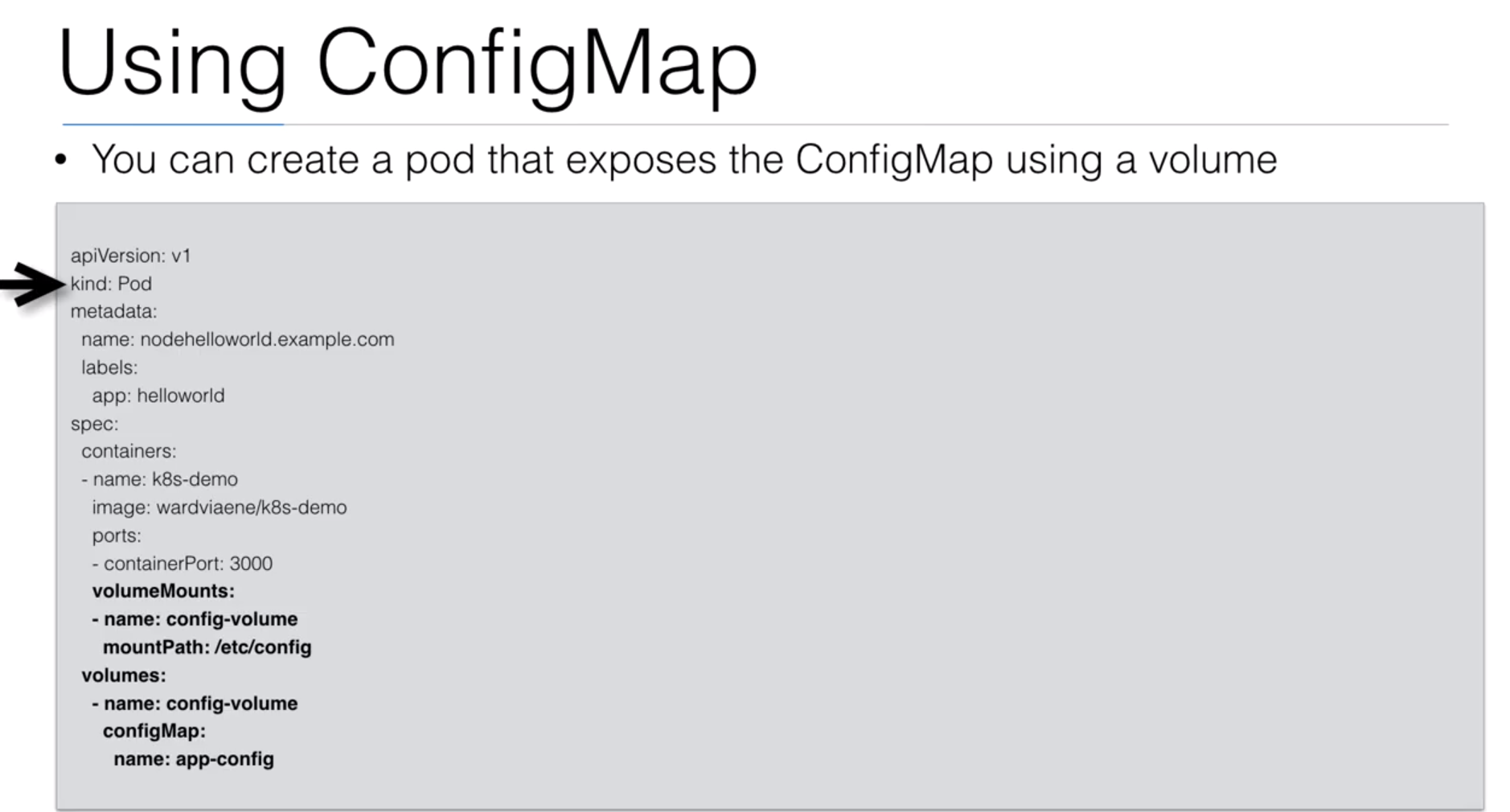

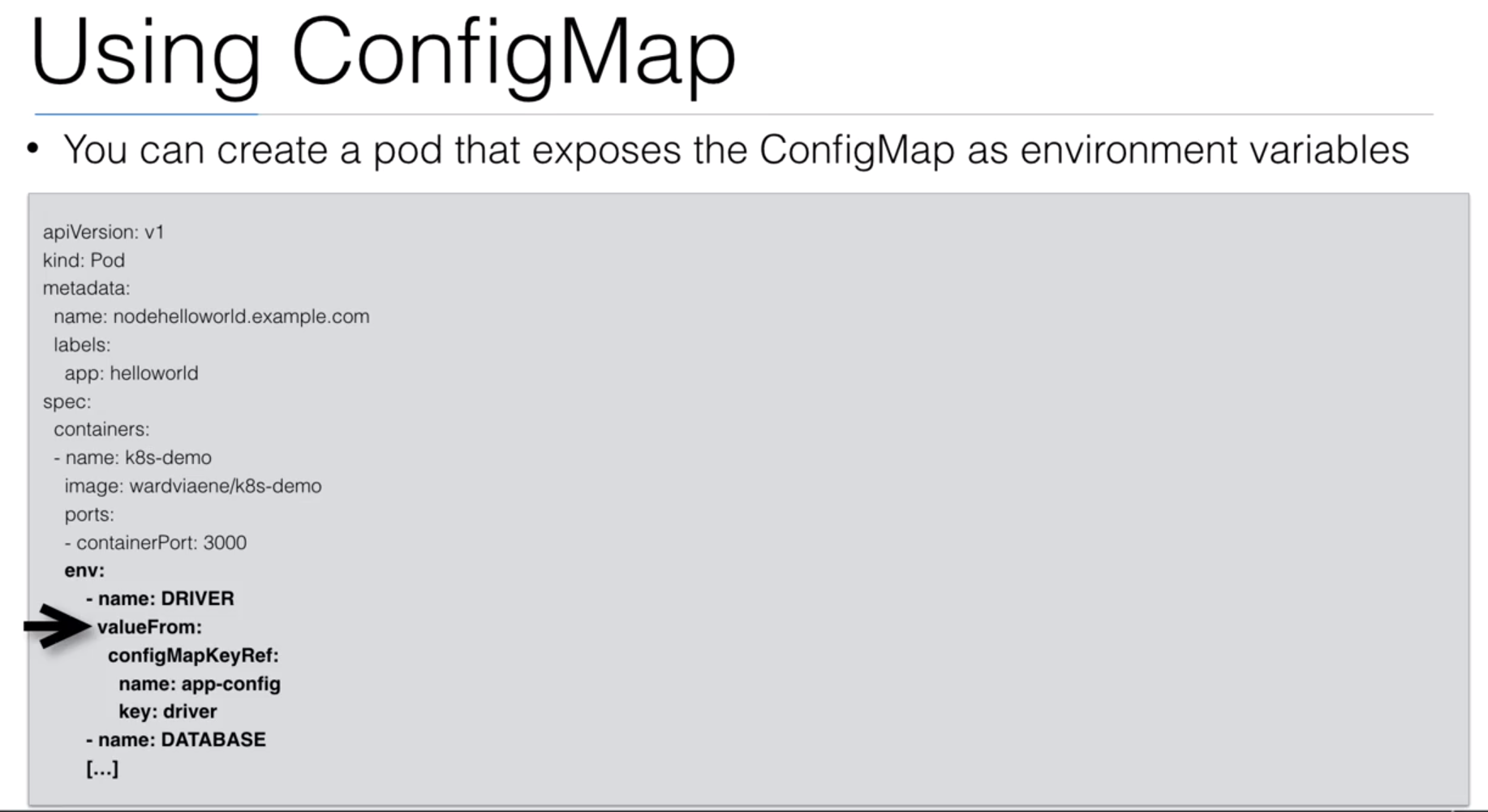

50. ConfigMap

51. Demo: ConfigMap

- We are going to use the configmap/reverseproxy.conf document to configure an nginx service.

configmap/reverseproxy.conf

server {

listen 80;

server_name localhost;

location / {

proxy_bind 127.0.0.1;

proxy_pass http://127.0.0.1:3000;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

- We can create a configmap by using the following command:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create configmap nginx-config --from-file=configmap/reverseproxy.conf

configmap/nginx-config created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get configmap

NAME DATA AGE

nginx-config 1 26s

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We can see the content of the configmap by using the following command:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get configmap nginx-config -o yaml

apiVersion: v1

data:

reverseproxy.conf: |

server {

listen 80;

server_name localhost;

location / {

proxy_bind 127.0.0.1;

proxy_pass http://127.0.0.1:3000;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

kind: ConfigMap

metadata:

creationTimestamp: "2019-03-21T05:29:00Z"

name: nginx-config

namespace: default

resourceVersion: "2412112"

selfLink: /api/v1/namespaces/default/configmaps/nginx-config

uid: 3b4d0dbf-4b9a-11e9-abeb-babbda5ce12f

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$
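As the YAML output shows, `--from-file` stores the file under a key named after the file, with the file content as the value. A rough Python sketch of that mapping follows; `configmap_from_files` is a hypothetical helper, and the short nginx snippet written to the temporary file is only placeholder content.

```python
import os
import tempfile


def configmap_from_files(name, paths):
    """Build a ConfigMap-like dict: one data key per file, keyed by basename."""
    data = {}
    for path in paths:
        with open(path, encoding="utf-8") as handle:
            data[os.path.basename(path)] = handle.read()
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": data,
    }


with tempfile.TemporaryDirectory() as workdir:
    conf_path = os.path.join(workdir, "reverseproxy.conf")
    with open(conf_path, "w", encoding="utf-8") as handle:
        handle.write("server {\n  listen 80;\n}\n")  # placeholder content
    example = configmap_from_files("nginx-config", [conf_path])

print(list(example["data"]))
```

The single key matches the DATA count of 1 reported by `kubectl get configmap`.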

- We are going to use the configmap/nginx.yml document to create a Pod with the usual example container and an nginx container that has access to the configmap we created.

configmap/nginx.yml

apiVersion: v1
kind: Pod
metadata:
  name: helloworld-nginx
  labels:
    app: helloworld-nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.11
      ports:
        - containerPort: 80
      volumeMounts:
        - name: config-volume
          mountPath: /etc/nginx/conf.d
    - name: docker-nodejs-demo
      image: peelmicro/docker-nodejs-demo
      ports:
        - containerPort: 3000
  volumes:
    - name: config-volume
      configMap:
        name: nginx-config
        items:
          - key: reverseproxy.conf
            path: reverseproxy.conf

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f configmap/nginx.yml

pod/helloworld-nginx created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/helloworld-nginx 0/2 ContainerCreating 0 6s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the configmap/nginx-service.yml document to create the Service to access the Pod.

configmap/nginx-service.yml

apiVersion: v1
kind: Service
metadata:
  name: helloworld-nginx-service
spec:
  ports:
    - port: 80
      protocol: TCP
  selector:
    app: helloworld-nginx
  type: NodePort

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f configmap/nginx-service.yml

service/helloworld-nginx-service created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/helloworld-nginx 2/2 Running 0 106s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/helloworld-nginx-service NodePort 10.104.62.200 <none> 80:32032/TCP 3s

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl http://10.104.62.200:80 -vvvv

* Rebuilt URL to: http://10.104.62.200:80/

* Trying 10.104.62.200...

* Connected to 10.104.62.200 (10.104.62.200) port 80 (#0)

> GET / HTTP/1.1

> Host: 10.104.62.200

> User-Agent: curl/7.47.0

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.11.13

< Date: Thu, 21 Mar 2019 05:41:30 GMT

< Content-Type: text/html; charset=utf-8

< Content-Length: 12

< Connection: keep-alive

< X-Powered-By: Express

< ETag: W/"c-Lve95gjOVATpfV8EL5X4nxwjKHE"

<

* Connection #0 to host 10.104.62.200 left intact

Hello World!ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl http://167.99.192.20:32032 -vvvv

* Rebuilt URL to: http://167.99.192.20:32032/

* Trying 167.99.192.20...

* Connected to 167.99.192.20 (167.99.192.20) port 32032 (#0)

> GET / HTTP/1.1

> Host: 167.99.192.20:32032

> User-Agent: curl/7.47.0

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.11.13

< Date: Thu, 21 Mar 2019 05:42:44 GMT

< Content-Type: text/html; charset=utf-8

< Content-Length: 12

< Connection: keep-alive

< X-Powered-By: Express

< ETag: W/"c-Lve95gjOVATpfV8EL5X4nxwjKHE"

<

* Connection #0 to host 167.99.192.20 left intact

Hello World!ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We can access the nginx container inside the pod by using:

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl exec -i -t helloworld-nginx -c nginx -- bash

root@helloworld-nginx:/# ps x

PID TTY STAT TIME COMMAND

1 ? Ss 0:00 nginx: master process nginx -g daemon off;

8 ? Ss 0:00 bash

13 ? R+ 0:00 ps x

root@helloworld-nginx:/# cat /etc/nginx/conf.d/reverseproxy.conf

server {

listen 80;

server_name localhost;

location / {

proxy_bind 127.0.0.1;

proxy_pass http://127.0.0.1:3000;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

root@helloworld-nginx:/#
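The file seen inside the container comes from the volume definition in configmap/nginx.yml: each entry under items maps a ConfigMap key to a file path below the mountPath. A simplified sketch of that projection is shown here; `project_configmap` is a hypothetical name, and the fallback of exposing every key when no items are listed is stated as an assumption of this sketch.

```python
import posixpath


def project_configmap(data, mount_path, items=None):
    """Return {file path: content} for a ConfigMap mounted as a volume."""
    if items is None:
        # Assumption of this sketch: without items, every key becomes a file.
        items = [{"key": key, "path": key} for key in data]
    files = {}
    for item in items:
        key = item["key"]
        if key not in data:
            raise KeyError(f"ConfigMap has no key {key!r}")
        files[posixpath.join(mount_path, item["path"])] = data[key]
    return files


files = project_configmap(
    {"reverseproxy.conf": "server { listen 80; }"},
    "/etc/nginx/conf.d",
    items=[{"key": "reverseproxy.conf", "path": "reverseproxy.conf"}],
)
print(list(files))
```

Since nginx reads its server definitions from /etc/nginx/conf.d, mounting the volume there is enough for the reverse proxy configuration to be picked up.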

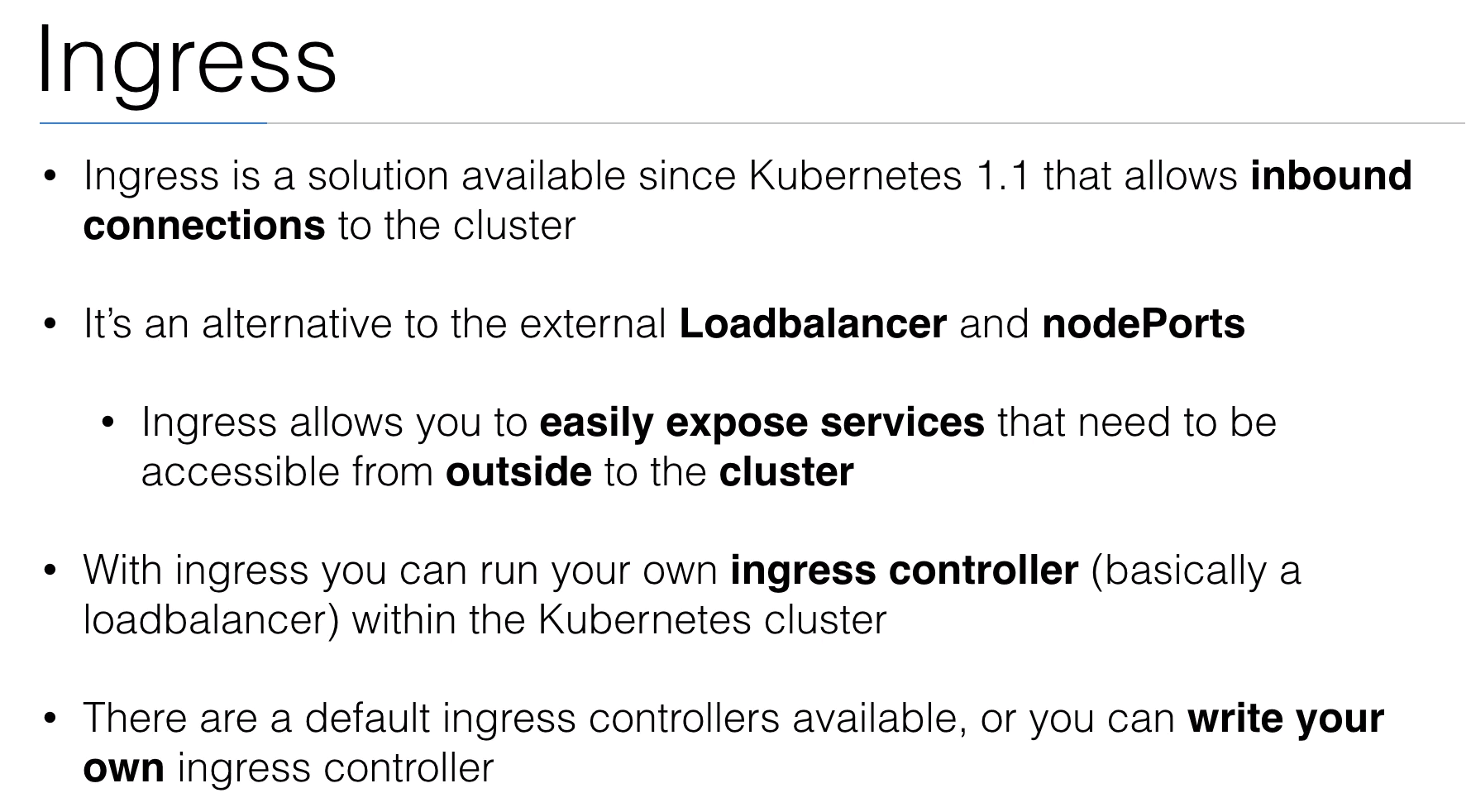

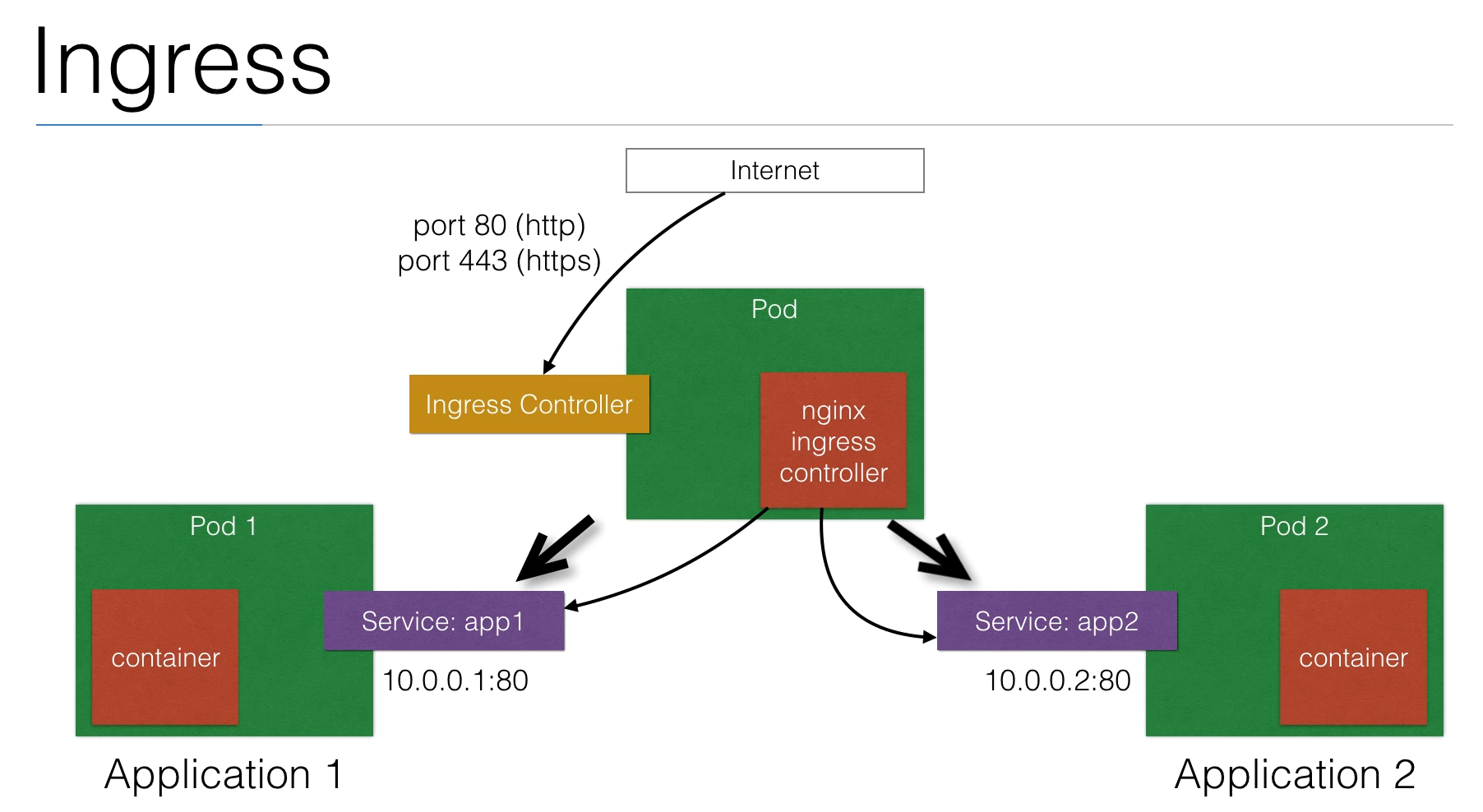

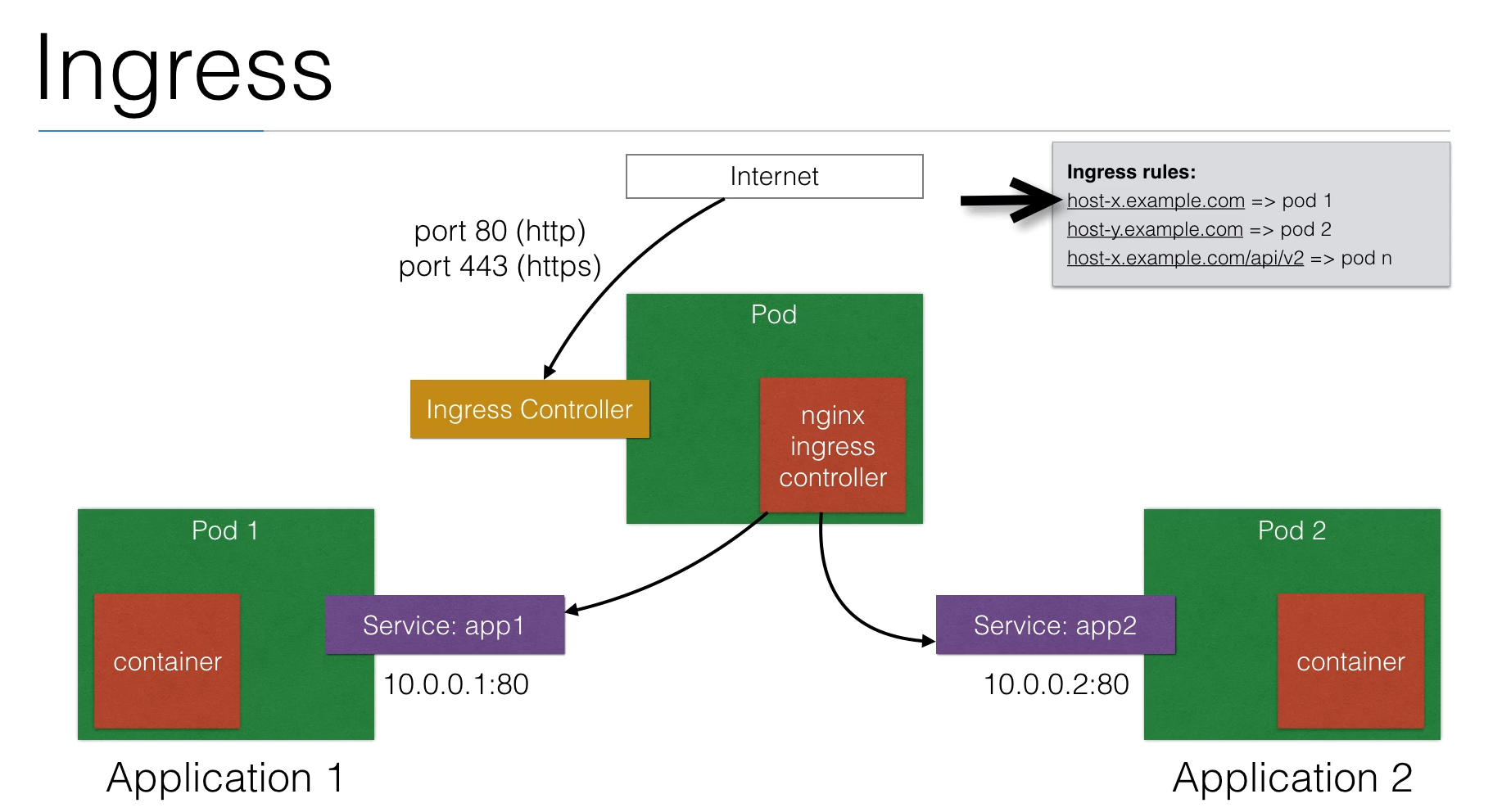

52. Ingress Controller

53. Demo: Ingress Controller

- We are going to use the ingress/nginx-ingress-controller.yml document to create the ingress controller. A controller is mandatory in order to use Ingress. The file could be updated to the latest version.

ingress/nginx-ingress-controller.yml

# updated this file with the latest ingress-controller from https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

apiVersion: extensions/v1beta1

kind: DaemonSet

metadata:

name: nginx-ingress-controller

spec:

selector:

matchLabels:

app: ingress-nginx

template:

metadata:

labels:

app: ingress-nginx

spec:

serviceAccountName: nginx-ingress-serviceaccount

containers:

- name: nginx-ingress-controller

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.17.1

args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/echoheaders-default

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

# www-data -> 33

runAsUser: 33

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

ports:

- name: http

containerPort: 80

hostPort: 80

- name: https

containerPort: 443

hostPort: 443

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

---

kind: ConfigMap

apiVersion: v1

metadata:

name: nginx-configuration

labels:

app: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: tcp-services

---

kind: ConfigMap

apiVersion: v1

metadata:

name: udp-services

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nginx-ingress-serviceaccount

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: nginx-ingress-clusterrole

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- "extensions"

resources:

- ingresses/status

verbs:

- update

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: nginx-ingress-role

rules:

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

resourceNames:

# Defaults to "<election-id>-<ingress-class>"

# Here: "<ingress-controller-leader>-<nginx>"

# This has to be adapted if you change either parameter

# when launching the nginx-ingress-controller.

- "ingress-controller-leader-nginx"

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- ""

resources:

- endpoints

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: nginx-ingress-role-nisa-binding

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: nginx-ingress-role

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: nginx-ingress-clusterrole-nisa-binding

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: nginx-ingress-clusterrole

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: default

---

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f ingress/nginx-ingress-controller.yml

daemonset.extensions/nginx-ingress-controller created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

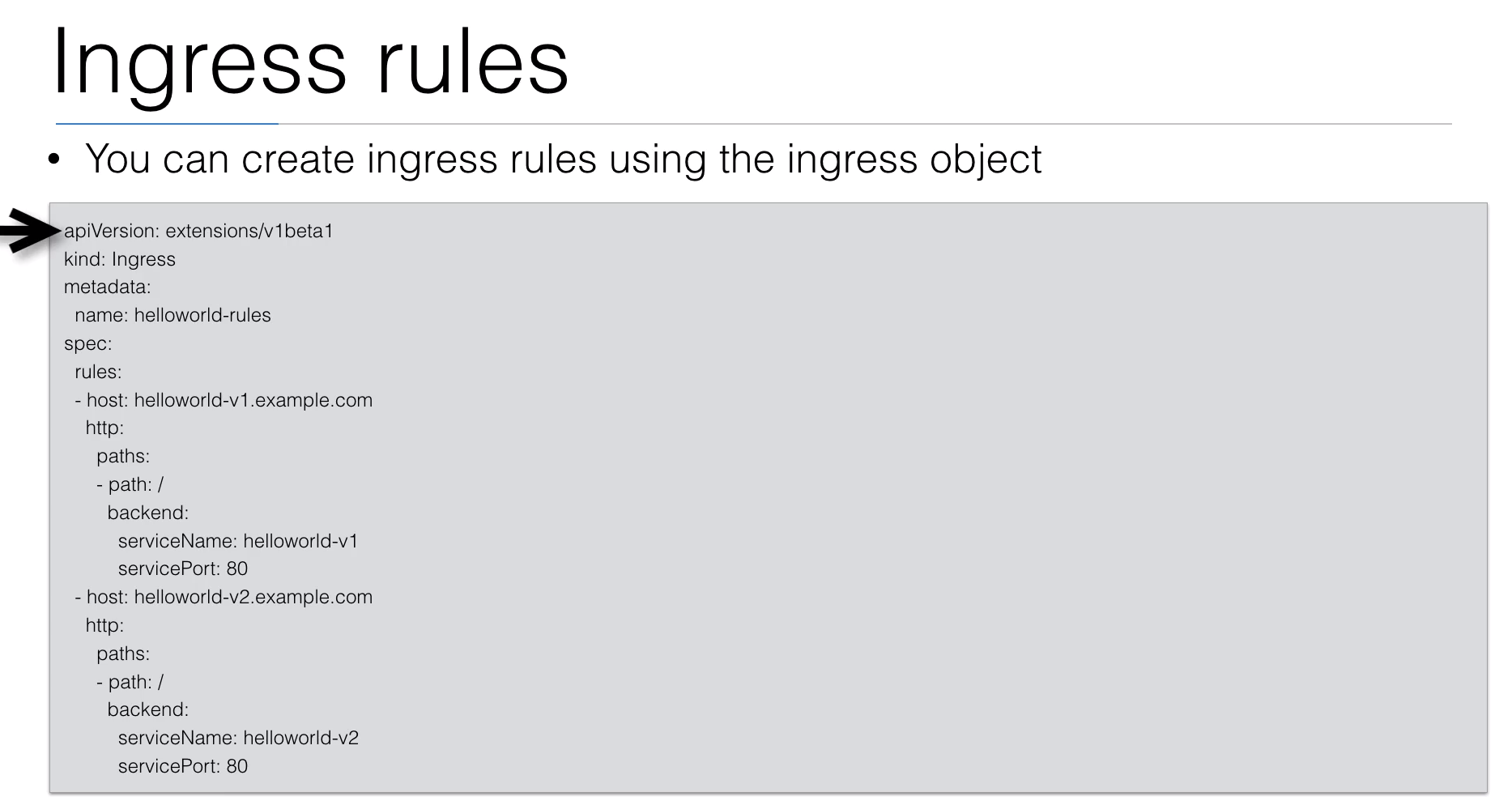

- We are going to use the ingress/ingress.yml document to create the Ingress rules. The host names are fictitious; requests that match no rule are sent by the ingress controller to its default backend: --default-backend-service=$(POD_NAMESPACE)/echoheaders-default

ingress/ingress.yml

# An Ingress with 2 hosts and 3 endpoints
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: helloworld-rules
spec:
  rules:
    - host: helloworld-v1.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: helloworld-v1
              servicePort: 80
    - host: helloworld-v2.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: helloworld-v2
              servicePort: 80

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f ingress/ingress.yml

ingress.extensions/helloworld-rules created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$
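The host-based routing described by these rules can be pictured with a short sketch. It is a simplification of what the controller does (only exact host matches and the `/` path are modeled), `pick_backend` is a hypothetical name, and port 80 for the default backend is taken from the echoheaders-default service definition.

```python
# Host rules from ingress/ingress.yml: host -> (service name, service port).
RULES = {
    "helloworld-v1.example.com": ("helloworld-v1", 80),
    "helloworld-v2.example.com": ("helloworld-v2", 80),
}

# Backend passed to the controller with --default-backend-service.
DEFAULT_BACKEND = ("echoheaders-default", 80)


def pick_backend(host_header, rules=RULES, default=DEFAULT_BACKEND):
    """Choose a backend from the Host header, falling back to the default."""
    host = host_header.split(":", 1)[0].strip().lower()
    return rules.get(host, default)


print(pick_backend("helloworld-v1.example.com"))
print(pick_backend("unknown.example.com"))
```

A request sent with `-H 'Host: helloworld-v2.example.com'` would select helloworld-v2 in this model, while any other host falls through to echoheaders-default.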

- We are going to use the ingress/helloworld-v1.yml document to create the Deployment and the Service of Version 1.

ingress/helloworld-v1.yml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: helloworld-v1-deployment

spec:

replicas: 1

template:

metadata:

labels:

app: helloworld-v1

spec:

containers:

- name: k8s-demo

image: wardviaene/k8s-demo:latest

ports:

- name: nodejs-port

containerPort: 3000

---

apiVersion: v1

kind: Service

metadata:

name: helloworld-v1

spec:

type: NodePort

ports:

- port: 80

nodePort: 30303

targetPort: 3000

protocol: TCP

name: http

selector:

app: helloworld-v1

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f ingress/helloworld-v1.yml

deployment.extensions/helloworld-v1-deployment created

service/helloworld-v1 created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the ingress/helloworld-v2.yml document to create the Deployment and the Service of Version 2.

ingress/helloworld-v2.yml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: helloworld-v2-deployment

spec:

replicas: 1

template:

metadata:

labels:

app: helloworld-v2

spec:

containers:

- name: k8s-demo

image: wardviaene/k8s-demo:2

ports:

- name: nodejs-port

containerPort: 3000

---

apiVersion: v1

kind: Service

metadata:

name: helloworld-v2

spec:

type: NodePort

ports:

- port: 80

nodePort: 30304

targetPort: 3000

protocol: TCP

name: http

selector:

app: helloworld-v2

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f ingress/helloworld-v2.yml

deployment.extensions/helloworld-v2-deployment created

service/helloworld-v2 created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to use the ingress/echoservice.yml document to create the ReplicationController for the echoserver image from Google and the related Service.

ingress/echoservice.yml

apiVersion: v1

kind: ReplicationController

metadata:

name: echoheaders

spec:

replicas: 1

template:

metadata:

labels:

app: echoheaders

spec:

containers:

- name: echoheaders

image: gcr.io/google_containers/echoserver:1.0

ports:

- containerPort: 8080

---

apiVersion: v1

kind: Service

metadata:

name: echoheaders-default

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30302

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaders

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl create -f ingress/echoservice.yml

replicationcontroller/echoheaders created

service/echoheaders-default created

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/echoheaders-t5p5q 1/1 Running 0 7m48s

pod/helloworld-v1-deployment-68b5f886b-bh7rt 1/1 Running 0 11m

pod/helloworld-v2-deployment-65dfc98468-xt9nz 1/1 Running 0 10m

pod/nginx-ingress-controller-hrmmz 1/1 Running 7 14m

NAME DESIRED CURRENT READY AGE

replicationcontroller/echoheaders 1 1 1 7m48s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/echoheaders-default NodePort 10.111.53.208 <none> 80:30302/TCP 7m48s

service/helloworld-v1 NodePort 10.109.130.196 <none> 80:30303/TCP 11m

service/helloworld-v2 NodePort 10.99.132.183 <none> 80:30304/TCP 10m

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/nginx-ingress-controller 1 1 1 1 1 <none> 14m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/helloworld-v1-deployment 1/1 1 1 11m

deployment.apps/helloworld-v2-deployment 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/helloworld-v1-deployment-68b5f886b 1 1 1 11m

replicaset.apps/helloworld-v2-deployment-65dfc98468 1 1 1 10m

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

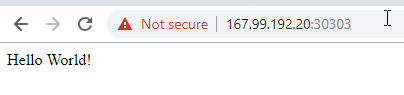

- As we are not running minikube, we don't have just one URL to access the cluster, so we don't need the -H 'Host: helloworld-v1.example.com' header.

- We are going to check if the service/helloworld-v1 service is working properly by accessing the local URL and the external URL with port 30303

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl 10.109.130.196

Hello World!ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl 167.99.192.20:30303

Hello World!ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

- We are going to check if the service/helloworld-v2 service is working properly by accessing the local URL and the external URL with port 30304

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl 10.99.132.183

Hello World v2!ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl 167.99.192.20:30304

Hello World v2!ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$

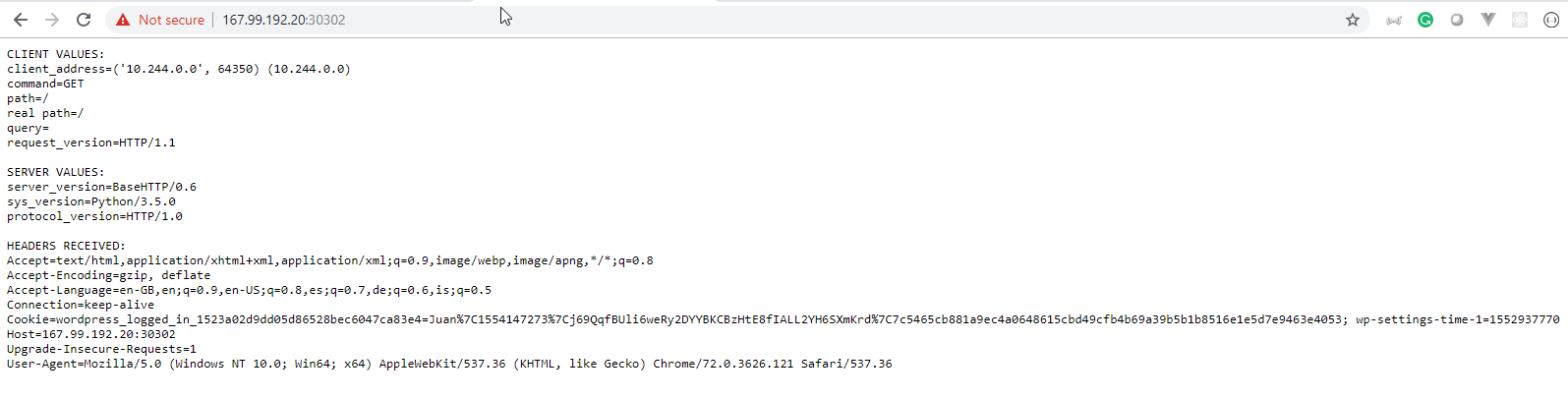

- We are going to check if the service/echoheaders-default service is working properly by accessing the local URL and the external URL with port 30302

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl 10.111.53.208

CLIENT VALUES:

client_address=('10.244.0.0', 54830) (10.244.0.0)

command=GET

path=/

real path=/

query=

request_version=HTTP/1.1

SERVER VALUES:

server_version=BaseHTTP/0.6

sys_version=Python/3.5.0

protocol_version=HTTP/1.0

HEADERS RECEIVED:

Accept=*/*

Host=10.111.53.208

User-Agent=curl/7.47.0

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$ curl 167.99.192.20:30302

CLIENT VALUES:

client_address=('10.244.0.0', 49362) (10.244.0.0)

command=GET

path=/

real path=/

query=

request_version=HTTP/1.1

SERVER VALUES:

server_version=BaseHTTP/0.6

sys_version=Python/3.5.0

protocol_version=HTTP/1.0

HEADERS RECEIVED:

Accept=*/*

Host=167.99.192.20:30302

User-Agent=curl/7.47.0

ubuntu@kubernetes-master:~/training/learn-devops-the-complete-kubernetes-course/kubernetes-course$


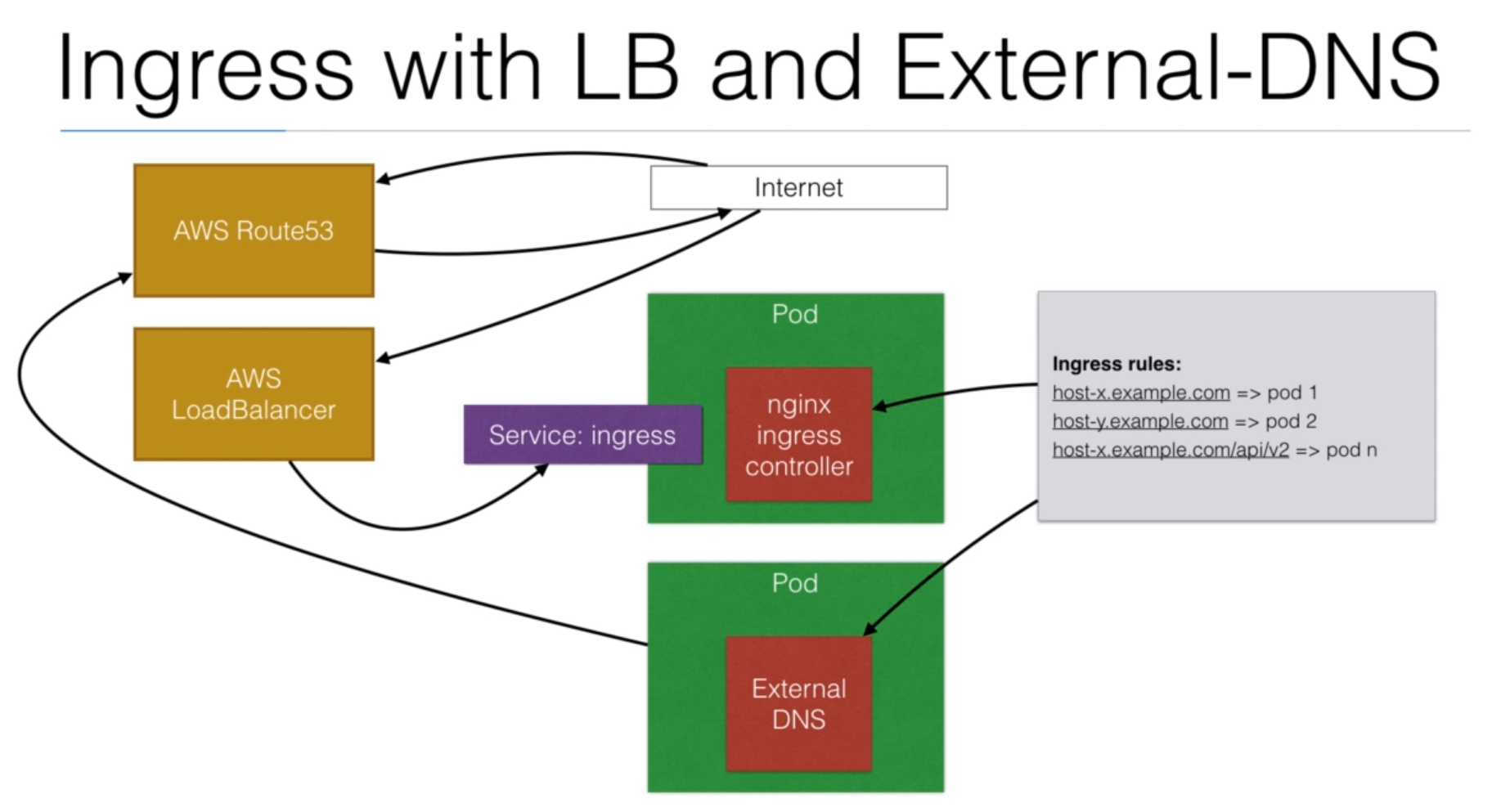

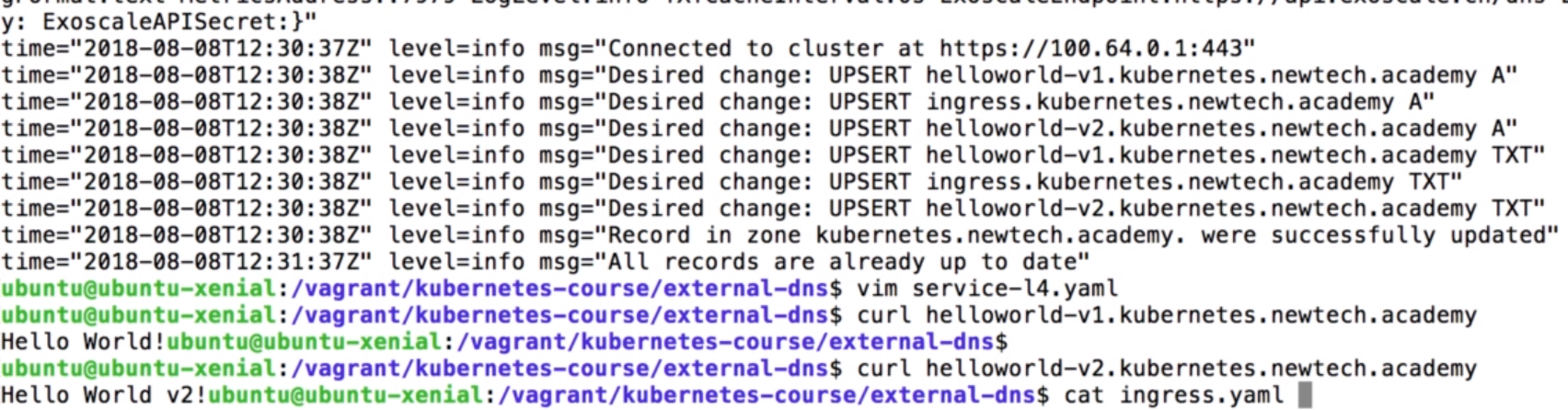

54. External DNS

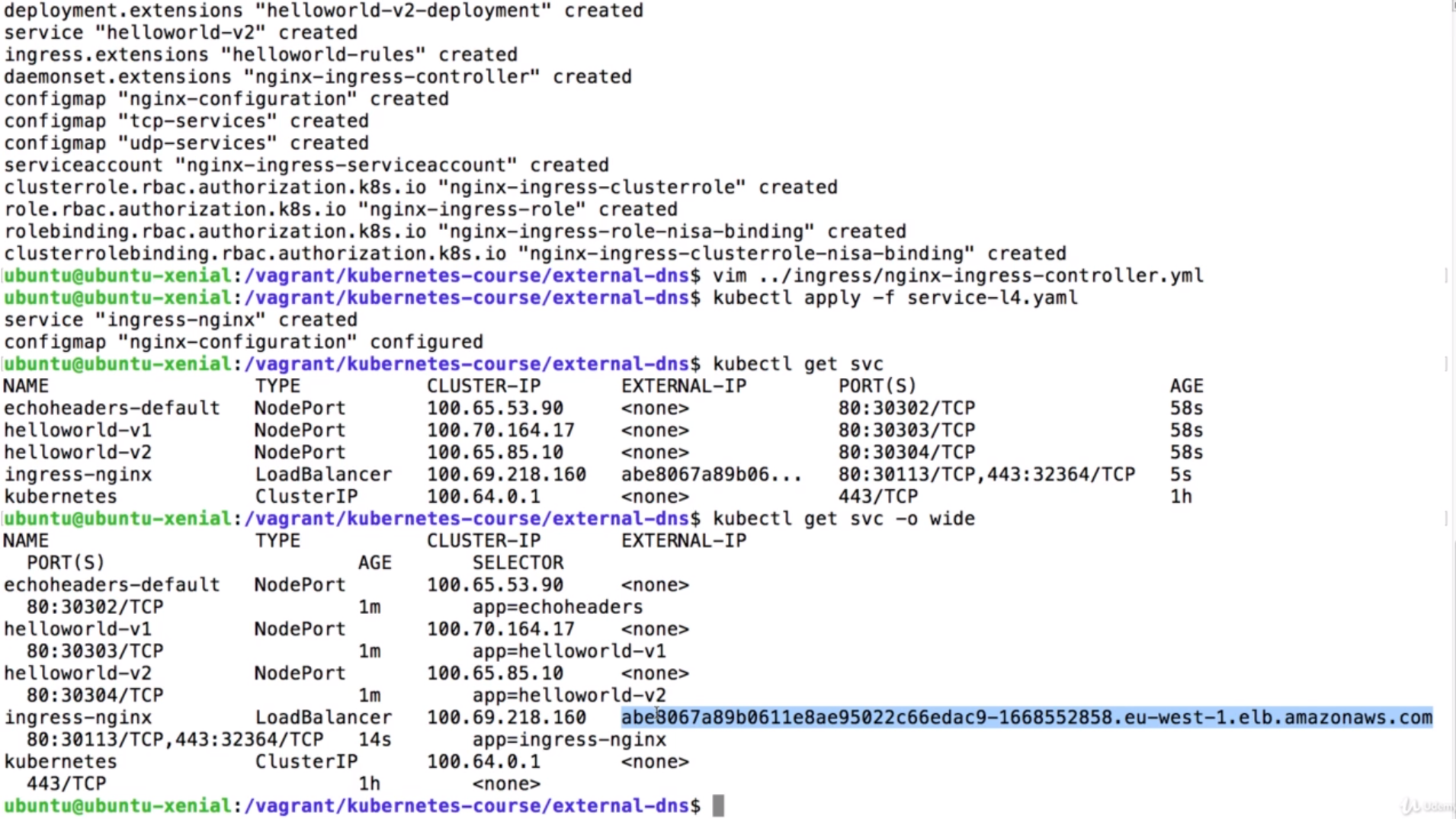

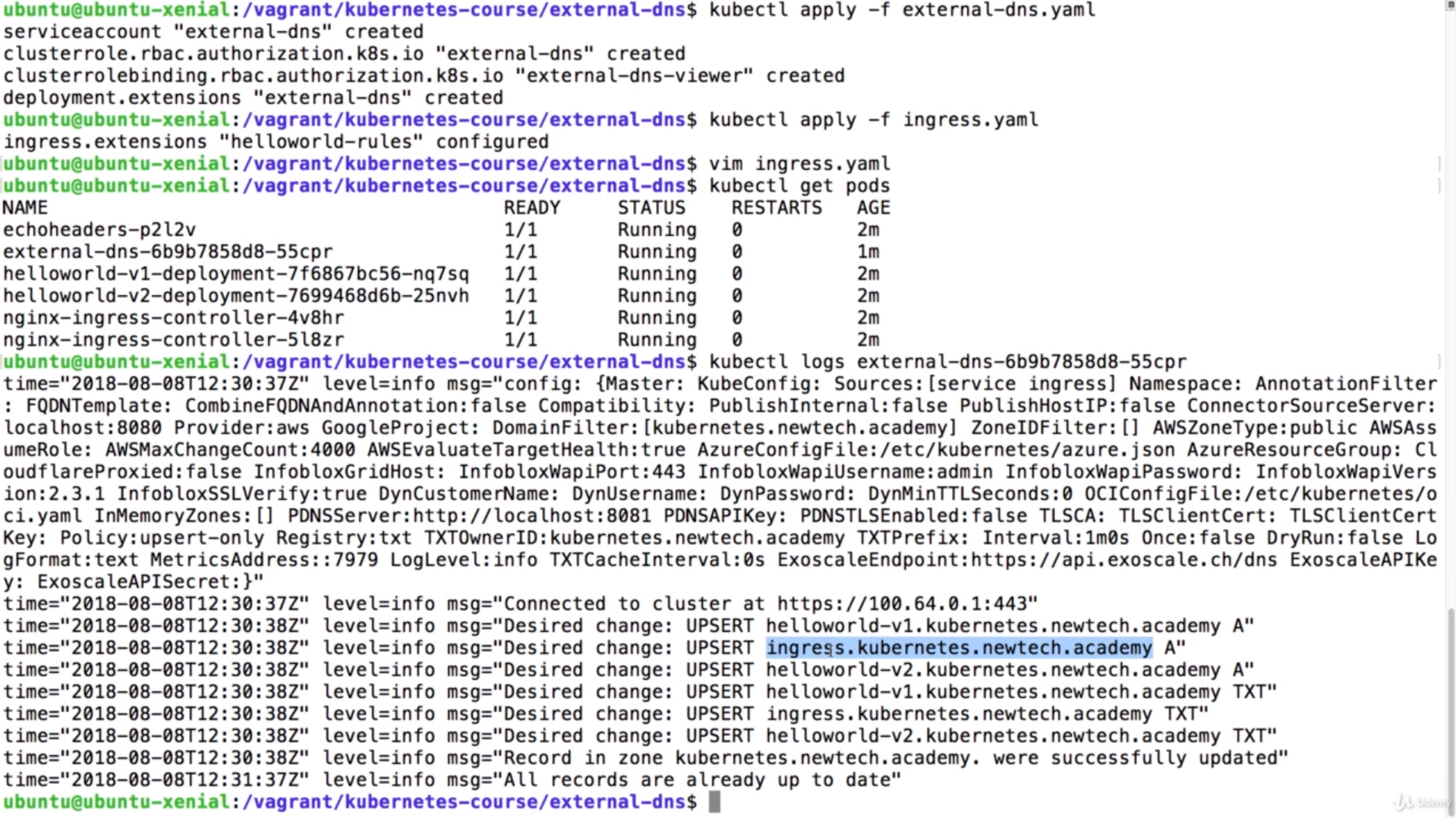

55. Demo: External DNS

- We can check the external-dns/README.md document to see what we need to do.

external-dns/README.md

# External DNS

Project page: https://github.com/kubernetes-incubator/external-dns

## Create IAM Policy

./put-node-policy.sh

## start ingress

kubectl apply -f ../ingress/

## Create LoadBalancer for Ingress

kubectl apply -f service-l4.yaml

## Create external DNS and ingress rules

kubectl apply -f external-dns.yaml

kubectl apply -f ingress.yaml

- We can use the external-dns/put-node-policy.sh script to add permissions to the nodes' IAM role, enabling any pod to use these AWS privileges

external-dns/put-node-policy.sh

#!/bin/bash

#

# This script adds permissions to the nodes IAM role, enabling any pod to use these AWS privileges

# Usage of kube2iam is recommended, but not yet implemented by default in kops

#

DEFAULT_REGION="eu-west-1"

AWS_REGION="${AWS_REGION:-${DEFAULT_REGION}}"

NODE_ROLE="nodes.kubernetes.newtech.academy"

export AWS_REGION

aws iam put-role-policy --role-name ${NODE_ROLE} --policy-name external-dns-policy --policy-document '{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"route53:ChangeResourceRecordSets"

],

"Resource": [

"arn:aws:route53:::hostedzone/*"

]

},

{

"Effect": "Allow",

"Action": [

"route53:ListHostedZones",

"route53:ListResourceRecordSets"

],

"Resource": [

"*"

]

}

]

}'

- We can create an AWS LoadBalancer for Ingress by applying the external-dns/service-l4.yaml document.

external-dns/service-l4.yaml

kind: Service

apiVersion: v1

metadata:

name: ingress-nginx

labels:

app: ingress-nginx

annotations:

# Enable PROXY protocol

service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"

# Increase the ELB idle timeout to avoid issues with WebSockets or Server-Sent Events.

service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "3600"

# external-dns

external-dns.alpha.kubernetes.io/hostname: ingress.kubernetes.newtech.academy

spec:

type: LoadBalancer

selector:

app: ingress-nginx

ports:

- name: http

port: 80

targetPort: http

- name: https

port: 443

targetPort: https

---

kind: ConfigMap

apiVersion: v1

metadata:

name: nginx-configuration

labels:

app: ingress-nginx

data:

use-proxy-protocol: "true"

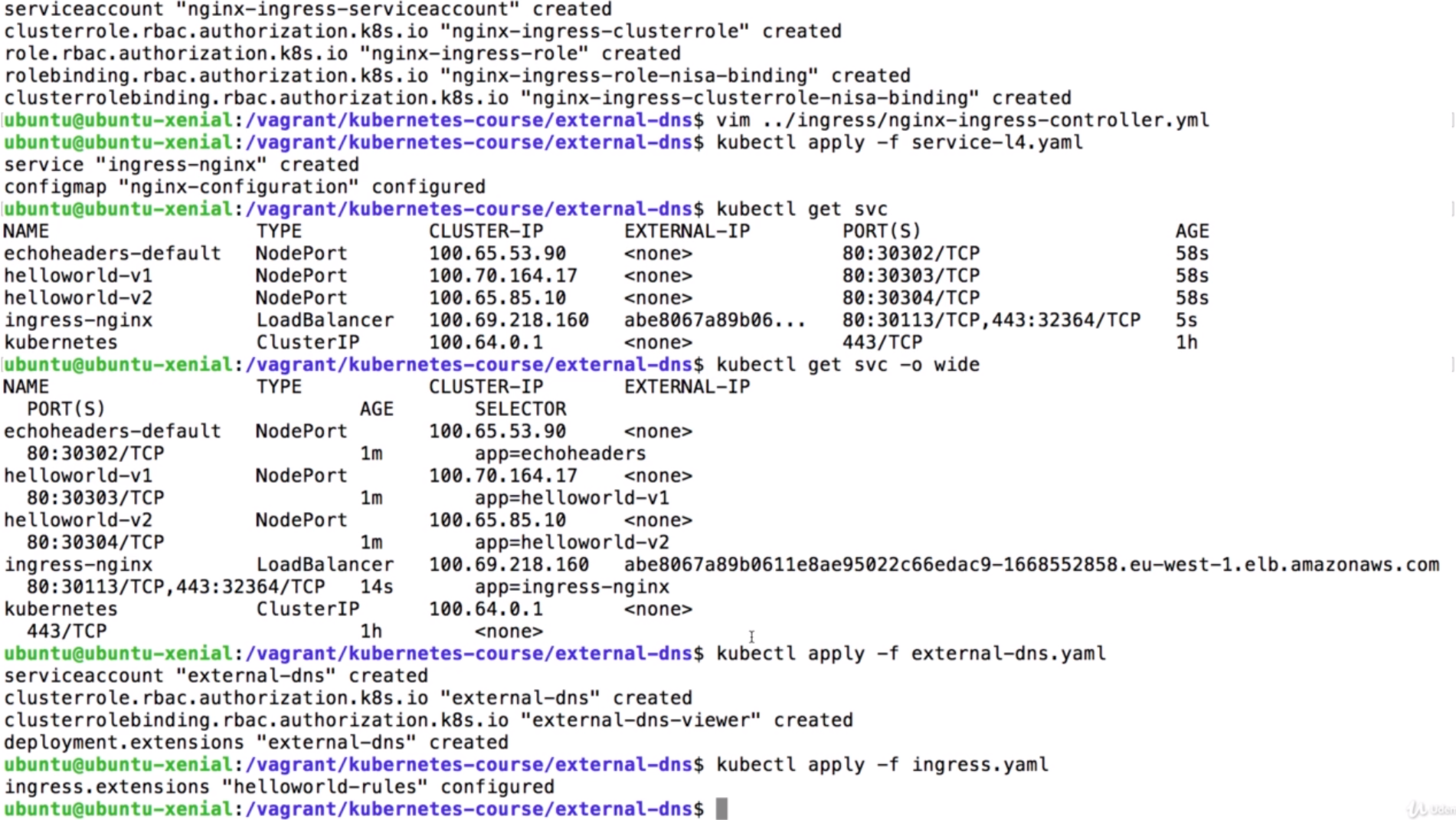

- We can use the external-dns/external-dns.yaml document to deploy ExternalDNS, which creates the DNS records

external-dns/external-dns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: external-dns
rules:
  - apiGroups: [""]
    resources: ["services"]
    verbs: ["get", "watch", "list"]
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "watch", "list"]
  - apiGroups: ["extensions"]
    resources: ["ingresses"]
    verbs: ["get", "watch", "list"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
  - kind: ServiceAccount
    name: external-dns
    namespace: default
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: external-dns
spec:
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns
      containers:
        - name: external-dns
          image: registry.opensource.zalan.do/teapot/external-dns:latest
          args:
            - --source=service
            - --source=ingress
            - --domain-filter=kubernetes.newtech.academy # will make ExternalDNS see only the hosted zones matching provided domain, omit to process all available hosted zones
            - --provider=aws
            - --policy=upsert-only # would prevent ExternalDNS from deleting any records, omit to enable full synchronization
            - --aws-zone-type=public # only look at public hosted zones (valid values are public, private or no value for both)
            - --registry=txt
            - --txt-owner-id=kubernetes.newtech.academy
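The `--domain-filter` argument above restricts ExternalDNS to names under the given domain. As a rough illustration of that idea, the function below checks whether a name equals the filter or is a subdomain of it. This is a simplified model written for these notes (the name `matches_domain_filter` is hypothetical and it ignores case-insensitivity), not ExternalDNS's actual implementation.

```shell
# Simplified model of a domain filter: a name matches when it equals the
# filter or ends with ".<filter>". Trailing dots are ignored.
# $1 = DNS name, $2 = domain filter
matches_domain_filter() {
  local name="${1%.}" filter="${2%.}"
  case "$name" in
    "$filter" | *."$filter") return 0 ;;
    *) return 1 ;;
  esac
}

# Example:
# matches_domain_filter helloworld-v1.kubernetes.newtech.academy kubernetes.newtech.academy \
#   && echo "would be managed"
```

Under this model a host such as `www.example.com` would be skipped, which is why the Ingress hosts have to live under the filtered domain.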

- We can use the external-dns/ingress.yaml document to create the Ingress rules; ExternalDNS then creates a DNS record for each host

external-dns/ingress.yaml

# An Ingress with 2 hosts and 3 endpoints
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: helloworld-rules
spec:
  rules:
    - host: helloworld-v1.kubernetes.newtech.academy
      http:
        paths:
          - path: /
            backend:
              serviceName: helloworld-v1
              servicePort: 80
    - host: helloworld-v2.kubernetes.newtech.academy
      http:
        paths:
          - path: /
            backend:
              serviceName: helloworld-v2
              servicePort: 80
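After the Ingress is applied, the new records can take a while to show up in DNS. The sketch below lists which of the given hosts do not resolve yet, using `dig +short`; `unresolved_hosts` is a hypothetical helper name, and it assumes `dig` is installed on the machine running the check.

```shell
# Hypothetical helper: print every host that does not resolve yet.
# Prints nothing when all hosts resolve.
unresolved_hosts() {
  local host
  for host in "$@"; do
    if [ -z "$(dig +short "$host")" ]; then
      echo "$host"
    fi
  done
}

# Example (not run here):
# unresolved_hosts helloworld-v1.kubernetes.newtech.academy \
#                  helloworld-v2.kubernetes.newtech.academy
```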

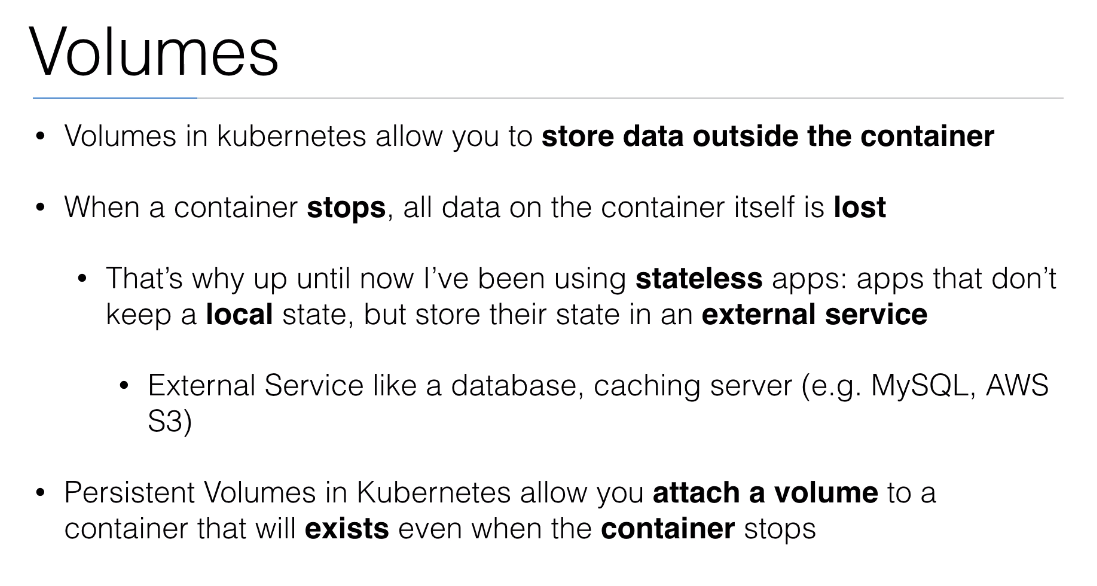

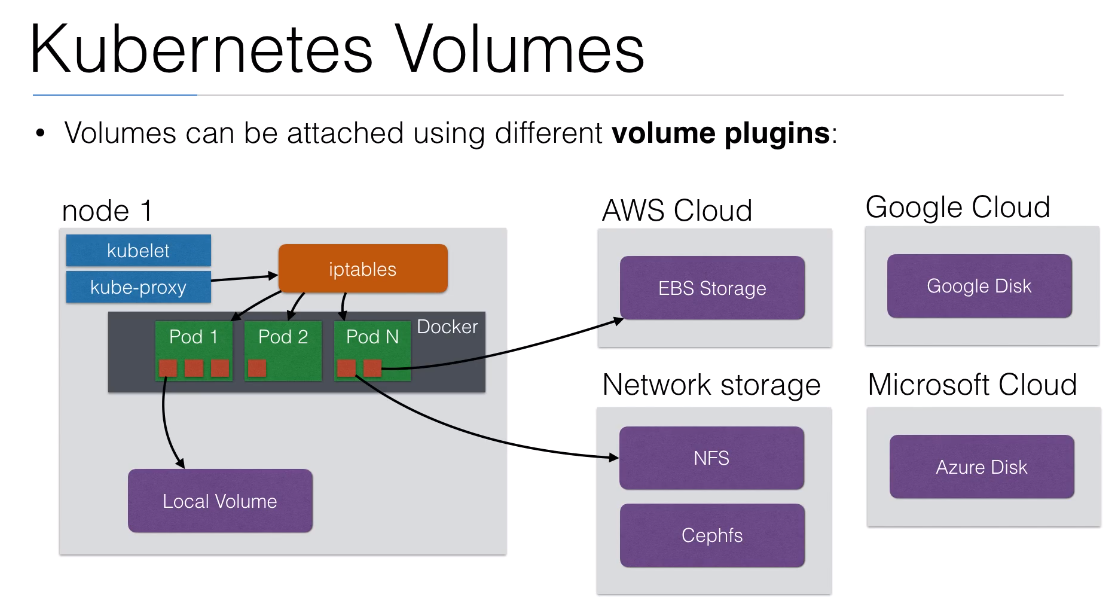

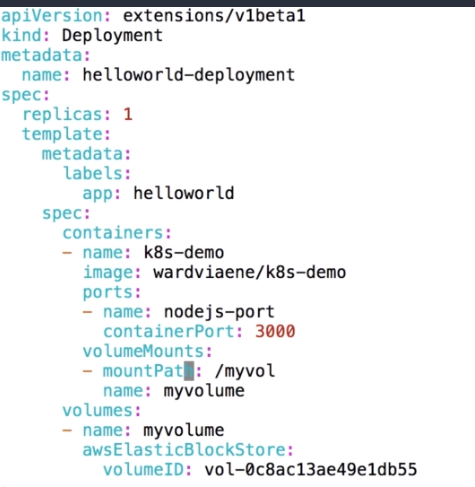

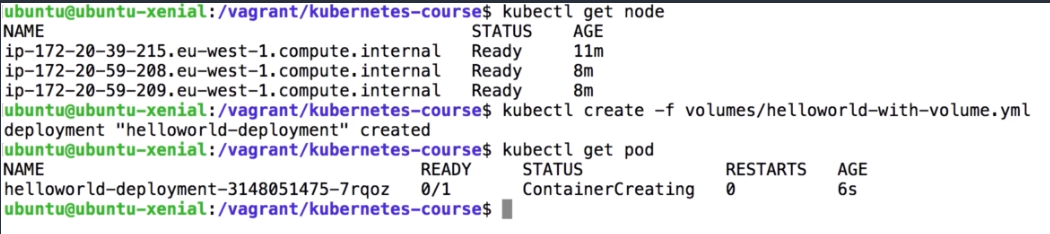

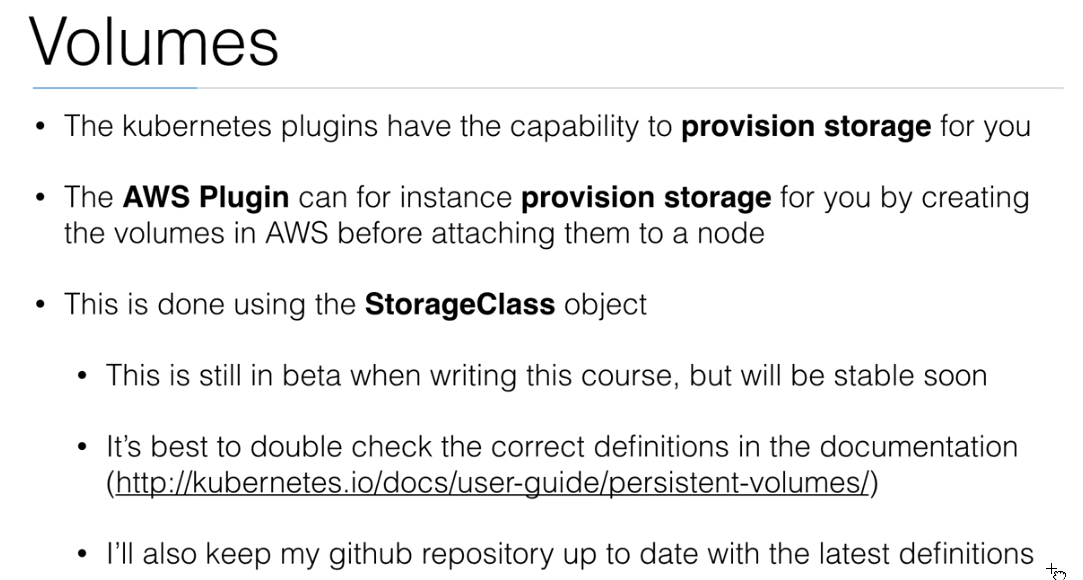

56. Volumes

57. Demo: Volumes

- We need to create the AWS cluster again:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops create cluster --name=kubernetes.peelmicro.com --state=s3://kubernetes.peelmicro.com --zones=eu-central-1a --node-count=2 --node-size=t2.micro --master-size=t2.micro --dns-zone=kubernetes.peelmicro.com

I0323 07:02:35.765163 18613 create_cluster.go:480] Inferred --cloud=aws from zone "eu-central-1a"

I0323 07:02:35.880913 18613 subnets.go:184] Assigned CIDR 172.20.32.0/19 to subnet eu-central-1a

I0323 07:02:36.261184 18613 create_cluster.go:1351] Using SSH public key: /root/.ssh/id_rsa.pub

Previewing changes that will be made:

I0323 07:02:38.332294 18613 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0323 07:02:38.753930 18613 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0323 07:02:38.950696 18613 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0323 07:02:39.062305 18613 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0323 07:02:39.093184 18613 executor.go:103] Tasks: 73 done / 73 total; 0 can run

Will create resources:

AutoscalingGroup/master-eu-central-1a.masters.kubernetes.peelmicro.com

.

.

.

VPCDHCPOptionsAssociation/kubernetes.peelmicro.com

VPC name:kubernetes.peelmicro.com

DHCPOptions name:kubernetes.peelmicro.com

Must specify --yes to apply changes

Cluster configuration has been created.

Suggestions:

* list clusters with: kops get cluster

* edit this cluster with: kops edit cluster kubernetes.peelmicro.com

* edit your node instance group: kops edit ig --name=kubernetes.peelmicro.com nodes

* edit your master instance group: kops edit ig --name=kubernetes.peelmicro.com master-eu-central-1a

Finally configure your cluster with: kops update cluster kubernetes.peelmicro.com --yes

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

- Now we have to deploy the cluster

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops update cluster kubernetes.peelmicro.com --yes --state=s3://kubernetes.peelmicro.com

I0323 07:05:47.508941 18732 executor.go:103] Tasks: 0 done / 73 total; 31 can run

I0323 07:05:48.181015 18732 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator-ca"

I0323 07:05:48.344802 18732 vfs_castore.go:735] Issuing new certificate: "ca"

I0323 07:05:48.533220 18732 executor.go:103] Tasks: 31 done / 73 total; 24 can run

I0323 07:05:50.773076 18732 vfs_castore.go:735] Issuing new certificate: "apiserver-proxy-client"

I0323 07:05:50.841660 18732 vfs_castore.go:735] Issuing new certificate: "kube-proxy"

I0323 07:05:51.276018 18732 vfs_castore.go:735] Issuing new certificate: "kubecfg"

I0323 07:05:52.115757 18732 vfs_castore.go:735] Issuing new certificate: "kubelet-api"

I0323 07:05:52.323344 18732 vfs_castore.go:735] Issuing new certificate: "apiserver-aggregator"

I0323 07:05:52.339421 18732 vfs_castore.go:735] Issuing new certificate: "kops"

I0323 07:05:52.346598 18732 vfs_castore.go:735] Issuing new certificate: "master"

I0323 07:05:52.533204 18732 vfs_castore.go:735] Issuing new certificate: "kube-scheduler"

I0323 07:05:52.821499 18732 vfs_castore.go:735] Issuing new certificate: "kubelet"

I0323 07:05:53.726052 18732 vfs_castore.go:735] Issuing new certificate: "kube-controller-manager"

I0323 07:05:53.954350 18732 executor.go:103] Tasks: 55 done / 73 total; 16 can run

I0323 07:05:54.222571 18732 launchconfiguration.go:380] waiting for IAM instance profile "masters.kubernetes.peelmicro.com" to be ready

I0323 07:05:54.232091 18732 launchconfiguration.go:380] waiting for IAM instance profile "nodes.kubernetes.peelmicro.com" to be ready

I0323 07:06:04.655725 18732 executor.go:103] Tasks: 71 done / 73 total; 2 can run

I0323 07:06:05.190794 18732 executor.go:103] Tasks: 73 done / 73 total; 0 can run

I0323 07:06:05.190993 18732 dns.go:153] Pre-creating DNS records

I0323 07:06:07.117673 18732 update_cluster.go:290] Exporting kubecfg for cluster

kops has set your kubectl context to kubernetes.peelmicro.com

Cluster is starting. It should be ready in a few minutes.

Suggestions:

* validate cluster: kops validate cluster

* list nodes: kubectl get nodes --show-labels

* ssh to the master: ssh -i ~/.ssh/id_rsa admin@api.kubernetes.peelmicro.com

* the admin user is specific to Debian. If not using Debian please use the appropriate user based on your OS.

* read about installing addons at: https://github.com/kubernetes/kops/blob/master/docs/addons.md.

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

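- Finally, we have to ensure the cluster is valid. The first run fails because the API DNS record still points to the kops placeholder address (203.0.113.123); a second run a few minutes later succeeds.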

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops validate cluster --state=s3://kubernetes.peelmicro.com

Using cluster from kubectl context: kubernetes.peelmicro.com

Validating cluster kubernetes.peelmicro.com

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-eu-central-1a Master t2.micro 1 1 eu-central-1a

nodes Node t2.micro 2 2 eu-central-1a

NODE STATUS

NAME ROLE READY

VALIDATION ERRORS

KIND NAME MESSAGE

dns apiserver Validation Failed

The dns-controller Kubernetes deployment has not updated the Kubernetes cluster's API DNS entry to the correct IP address. The API DNS IP address is the placeholder address that kops creates: 203.0.113.123. Please wait about 5-10 minutes for a master to start, dns-controller to launch, and DNS to propagate. The protokube container and dns-controller deployment logs may contain more diagnostic information. Etcd and the API DNS entries must be updated for a kops Kubernetes cluster to start.

Validation Failed

root@ubuntu-s-1vcpu-2gb-lon1-01:~#

root@ubuntu-s-1vcpu-2gb-lon1-01:~# kops validate cluster --state=s3://kubernetes.peelmicro.com

Using cluster from kubectl context: kubernetes.peelmicro.com

Validating cluster kubernetes.peelmicro.com

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-eu-central-1a Master t2.micro 1 1

nodes Node t2.micro 2 2 eu-central-1a

NODE STATUS

NAME ROLE READY

ip-172-20-37-140.eu-central-1.compute.internal master True

ip-172-20-47-24.eu-central-1.compute.internal node True

ip-172-20-62-170.eu-central-1.compute.internal node True

Your cluster kubernetes.peelmicro.com is ready

root@ubuntu-s-1vcpu-2gb-lon1-01:~#
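Because the first validation usually fails while DNS is still propagating, re-running the command by hand can be replaced by a small polling loop. This is a minimal sketch under the assumption that the command exits with a non-zero status while validation fails; `retry_until_ok` is a hypothetical helper, not a kops feature.

```shell
# Hypothetical helper: run a command until it succeeds or attempts run out.
# Usage: retry_until_ok <max_attempts> <delay_seconds> <command> [args...]
retry_until_ok() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  while [ "$attempt" -le "$max_attempts" ]; do
    if "$@"; then
      echo "succeeded on attempt ${attempt}"
      return 0
    fi
    echo "attempt ${attempt}/${max_attempts} failed" >&2
    # Only wait when another attempt will follow.
    if [ "$attempt" -lt "$max_attempts" ]; then
      sleep "$delay"
    fi
    attempt=$((attempt + 1))
  done
  return 1
}

# Example (not run here): check once a minute, for up to 15 attempts.
# retry_until_ok 15 60 kops validate cluster --state=s3://kubernetes.peelmicro.com
```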

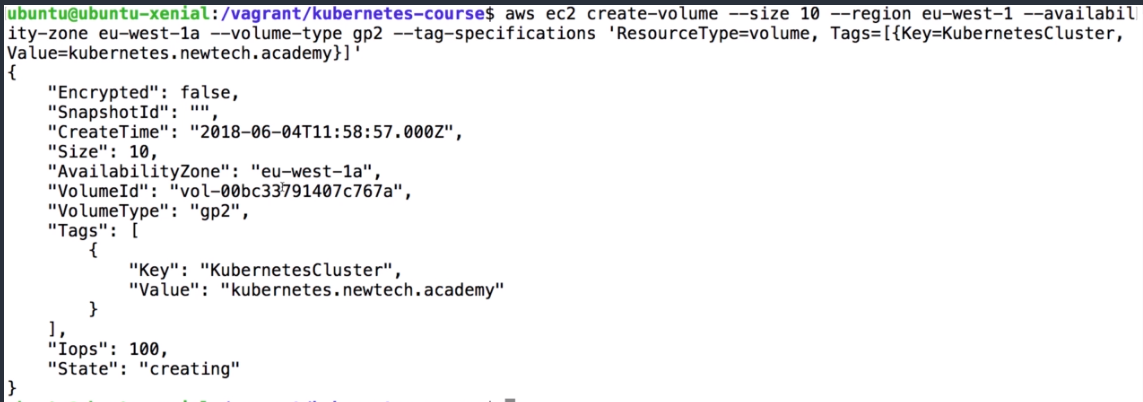

- We can create the volume by executing:

root@ubuntu-s-1vcpu-2gb-lon1-01:~# aws ec2 create-volume --size 10 --region eu-central-1 --availability-zone eu-central-1a --volume-type gp2 --tag-specifications 'ResourceType=volume,Tags=[{Key=KubernetesCluster,Value=kubernetes.peelmicro.com}]'

{

"AvailabilityZone": "eu-central-1a",

"CreateTime": "2019-03-23T07:31:07.000Z",

"Encrypted": false,

"Size": 10,

"SnapshotId": "",

"State": "creating",

"VolumeId": "vol-0af16d75075776394",

"Iops": 100,

"Tags": [

{

"Key": "KubernetesCluster",

"Value": "kubernetes.peelmicro.com"

}

],

"VolumeType": "gp2"

}
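The volume is reported in the `creating` state, and its `VolumeId` is needed later when the volume is referenced from a pod definition. The sketch below captures the ID with `--query` and waits until the volume is available; `create_tagged_volume` is a hypothetical wrapper, and the `gp2` type and `KubernetesCluster` tag simply mirror the command above.

```shell
# Hypothetical wrapper: create a tagged EBS volume, wait until it is
# available, and print its volume ID.
# $1 = cluster name, $2 = region, $3 = availability zone, $4 = size in GiB
create_tagged_volume() {
  local cluster="$1" region="$2" zone="$3" size_gib="$4"
  local volume_id
  volume_id="$(aws ec2 create-volume \
    --size "$size_gib" \
    --region "$region" \
    --availability-zone "$zone" \
    --volume-type gp2 \
    --tag-specifications "ResourceType=volume,Tags=[{Key=KubernetesCluster,Value=${cluster}}]" \
    --query VolumeId --output text)" || return 1
  # Block until the volume leaves the "creating" state.
  aws ec2 wait volume-available --region "$region" --volume-ids "$volume_id" || return 1
  echo "$volume_id"
}

# Example (not run here):
# create_tagged_volume kubernetes.peelmicro.com eu-central-1 eu-central-1a 10
```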

- Clone the repository

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course# git clone https://github.com/peelmicro/learn-devops-the-complete-kubernetes-course

Cloning into 'learn-devops-the-complete-kubernetes-course'...

remote: Enumerating objects: 168, done.

remote: Counting objects: 100% (168/168), done.

remote: Compressing objects: 100% (119/119), done.

remote: Total 168 (delta 44), reused 168 (delta 44), pack-reused 0

Receiving objects: 100% (168/168), 41.33 KiB | 470.00 KiB/s, done.

Resolving deltas: 100% (44/44), done.

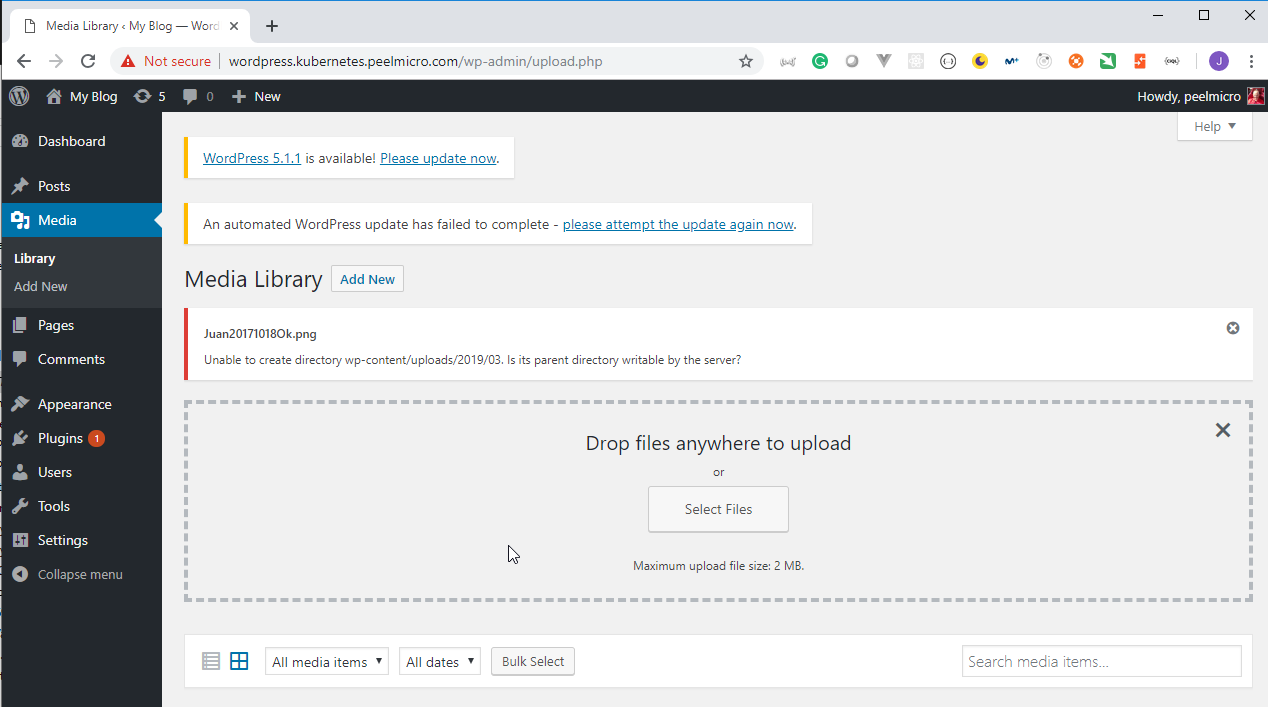

root@ubuntu-s-1vcpu-2gb-lon1-01:~/training/learn-devops-the-complete-kubernetes-course# ls