MongoDB - The Complete Developer's Guide

Github Repositories

The Udemy course "MongoDB - The Complete Developer's Guide" teaches MongoDB development for web and mobile apps: CRUD operations, indexes, the aggregation framework, and more.

Table of contents

- What I've learned

- Introduction

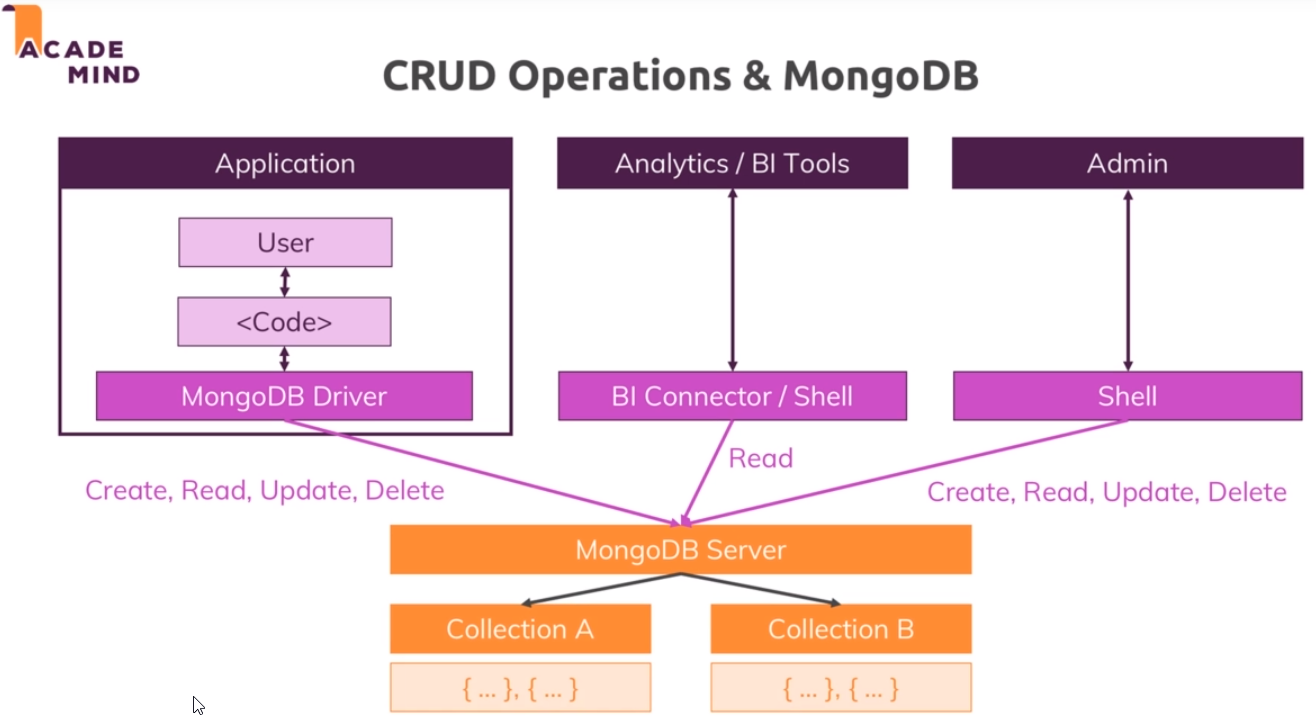

- Working with the Database (CRUD Operations)

- Schemas & Relations: How to Structure Documents

- Exploring The Shell & The Server

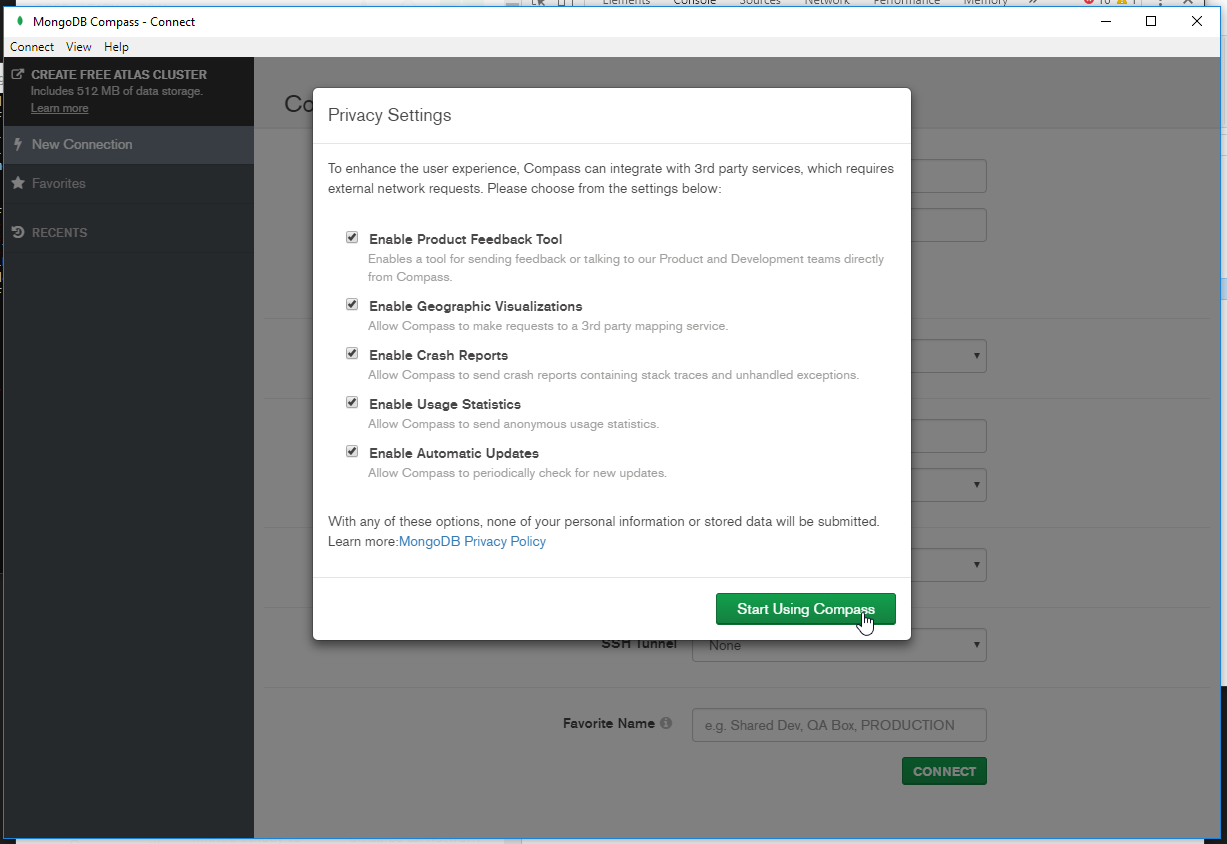

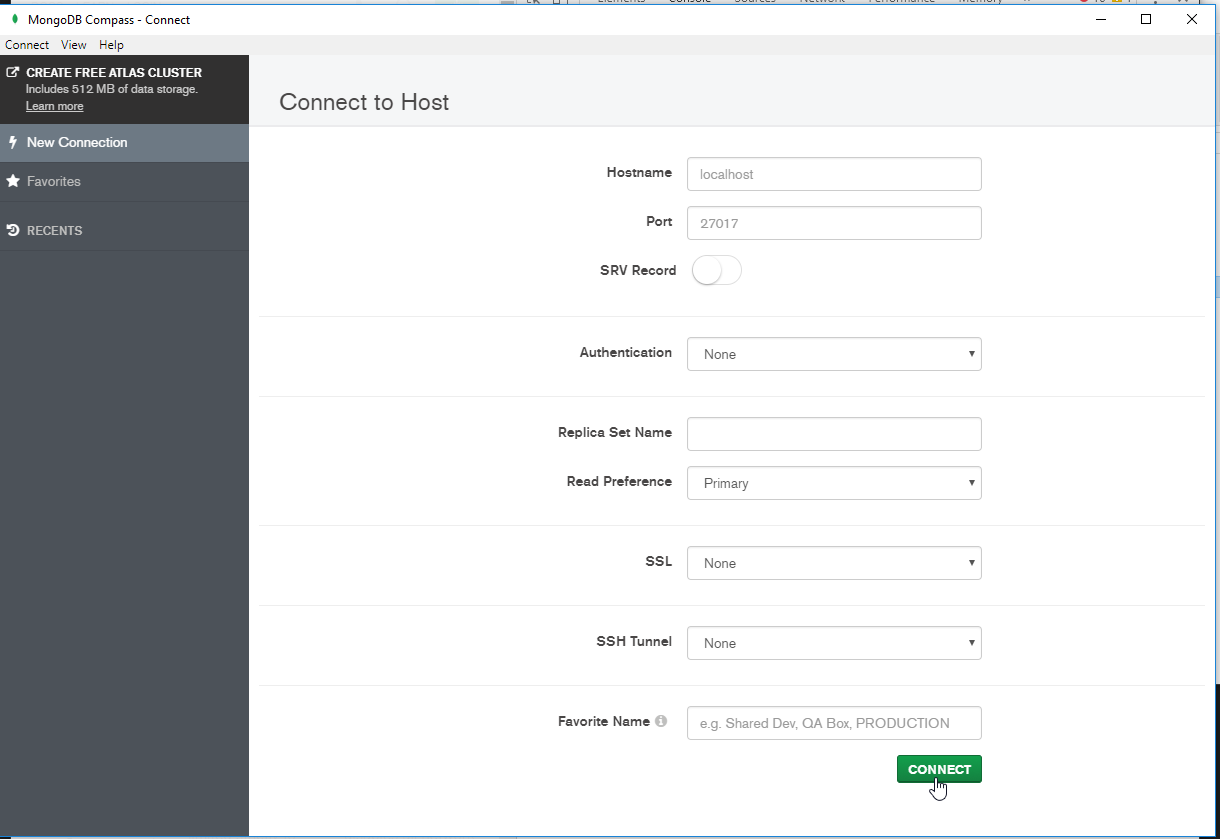

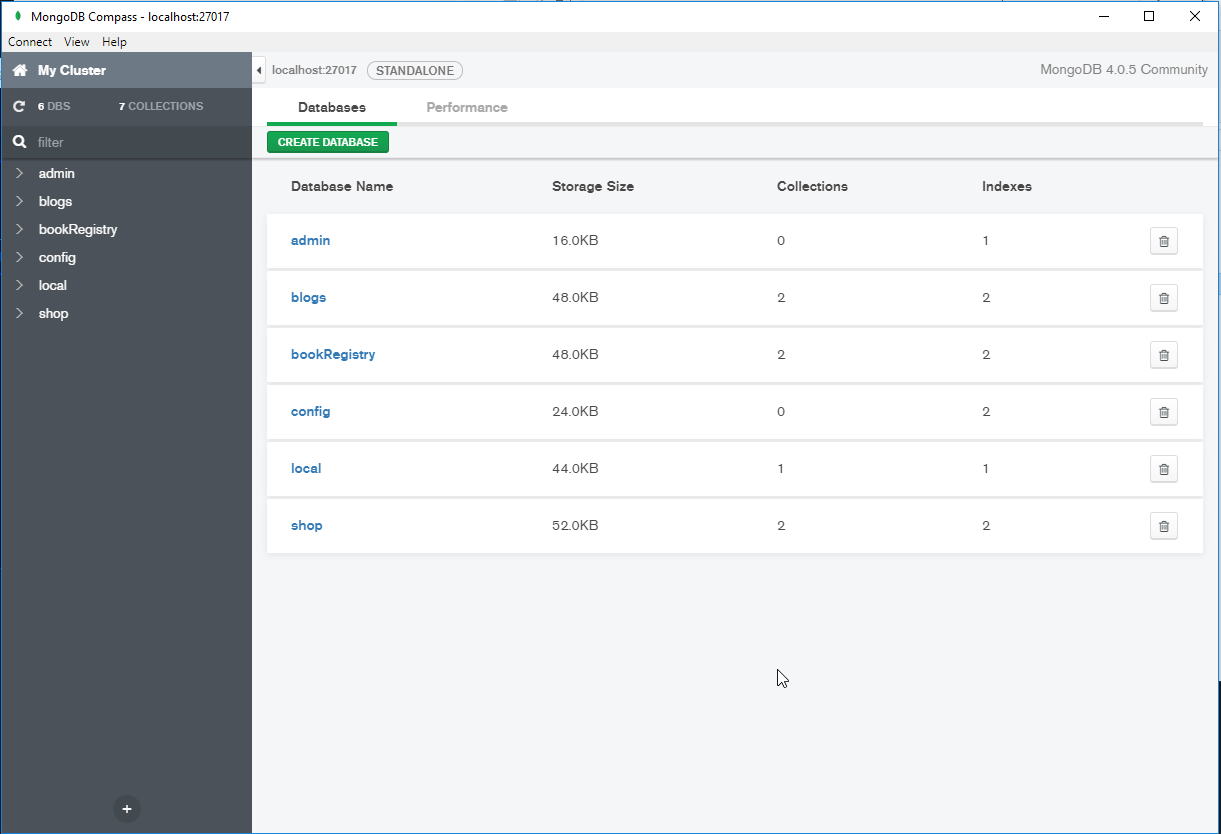

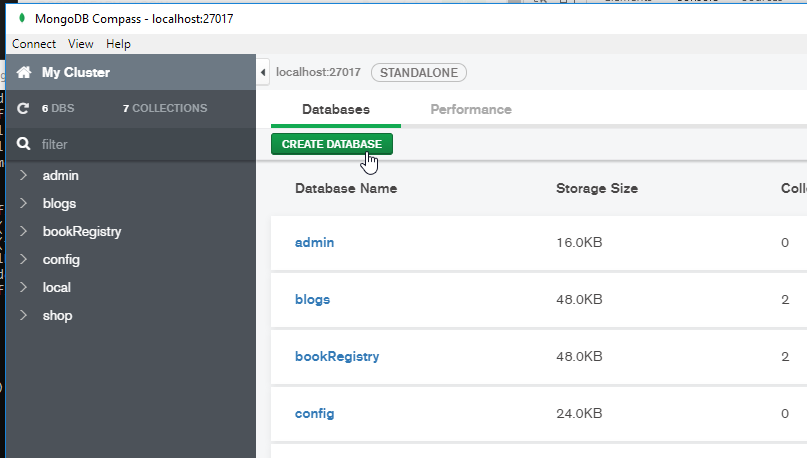

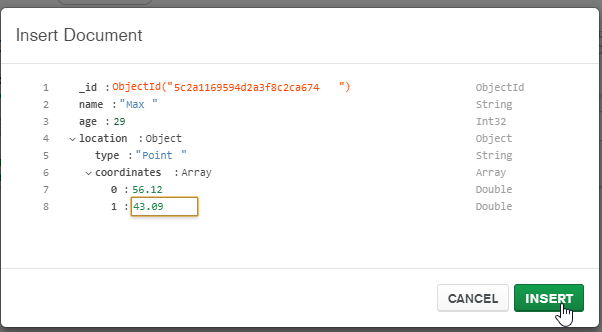

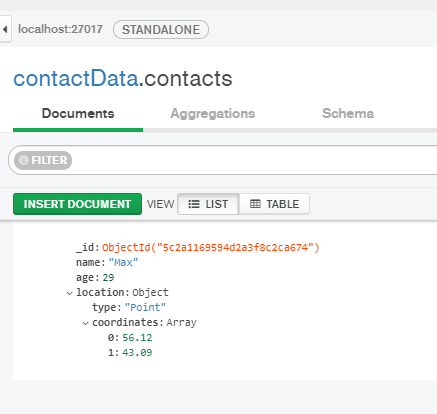

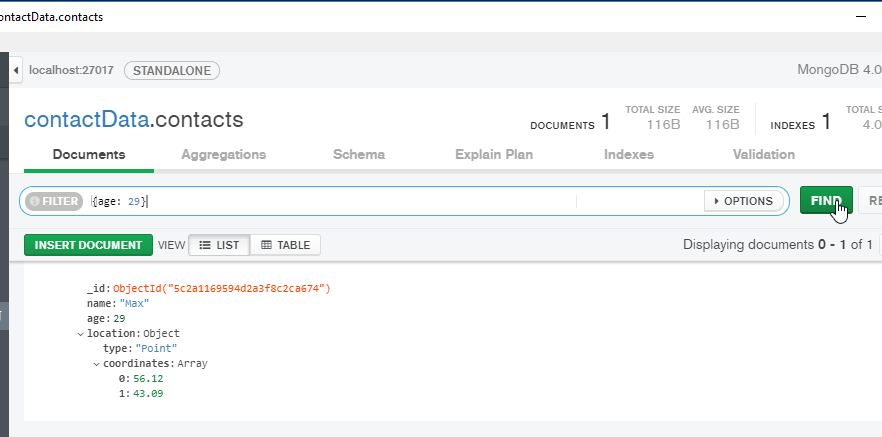

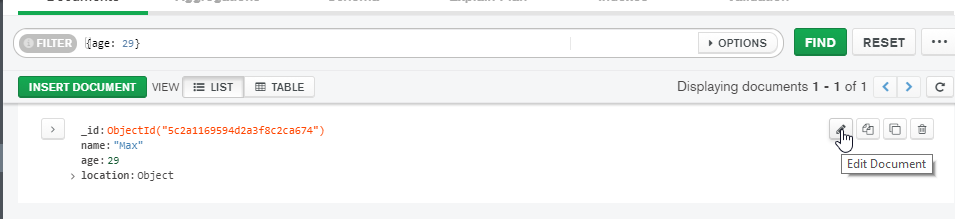

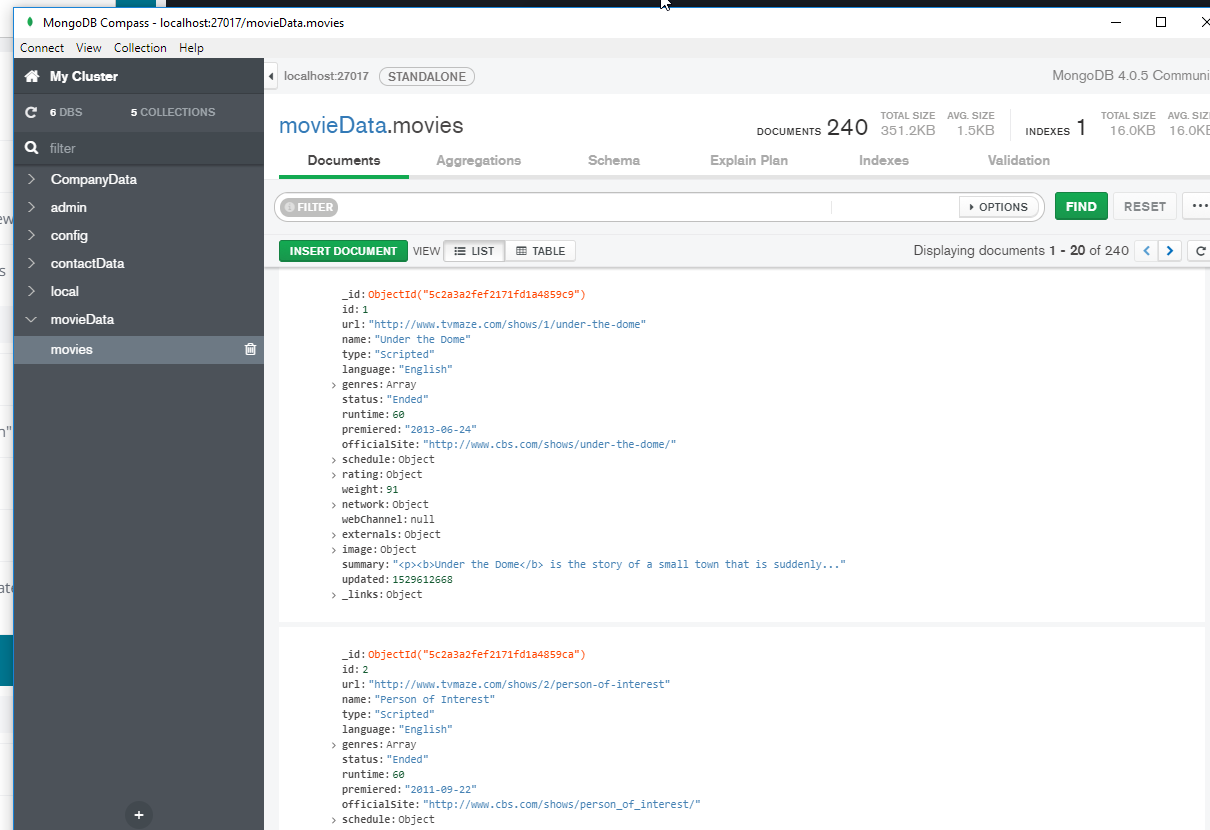

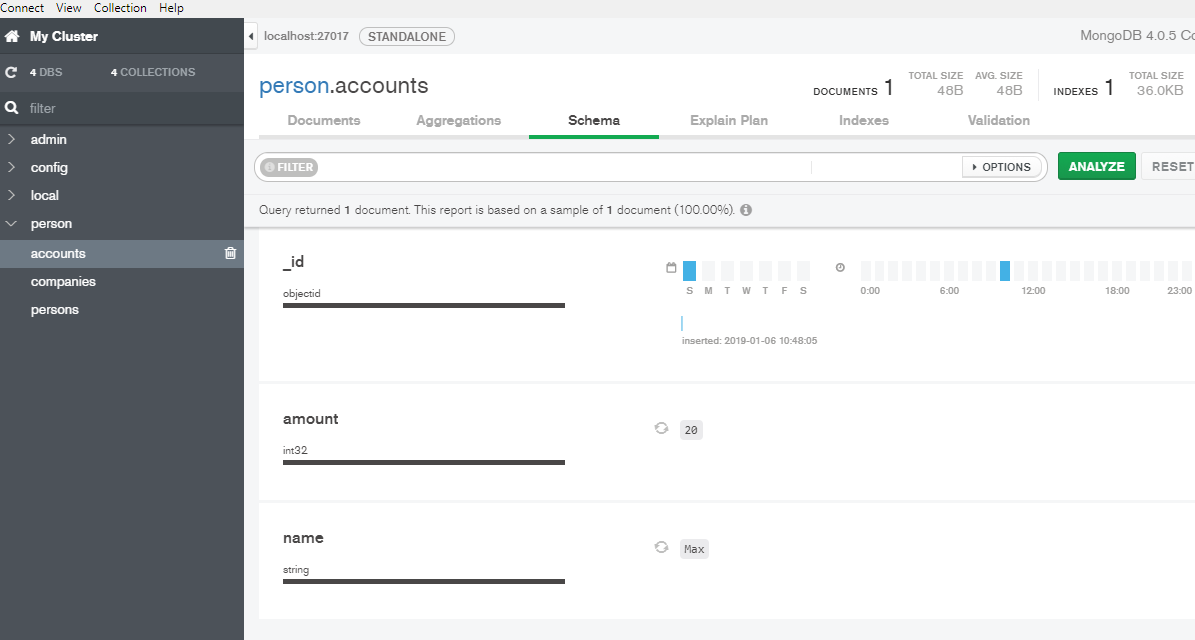

- MongoDB Compass

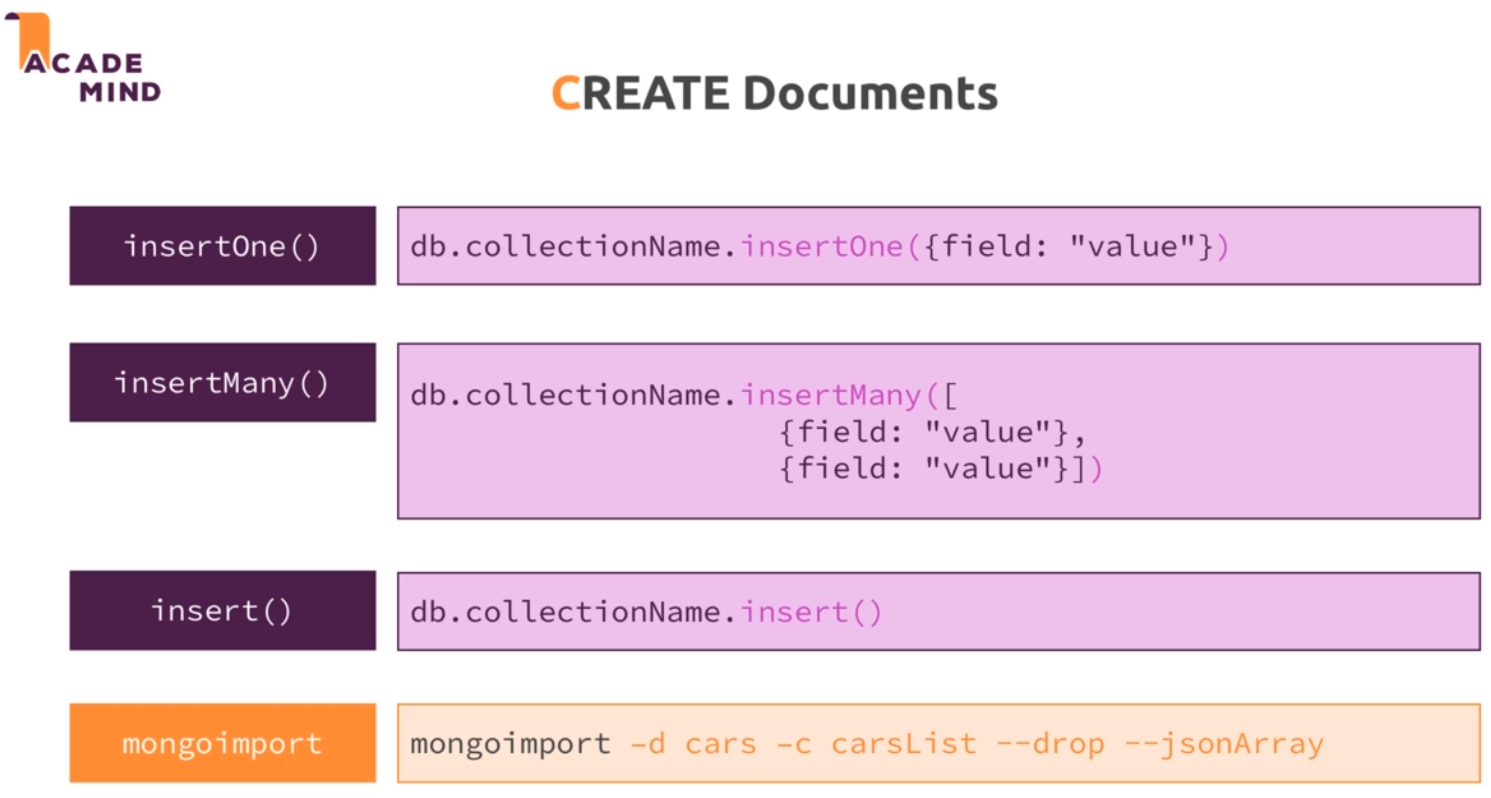

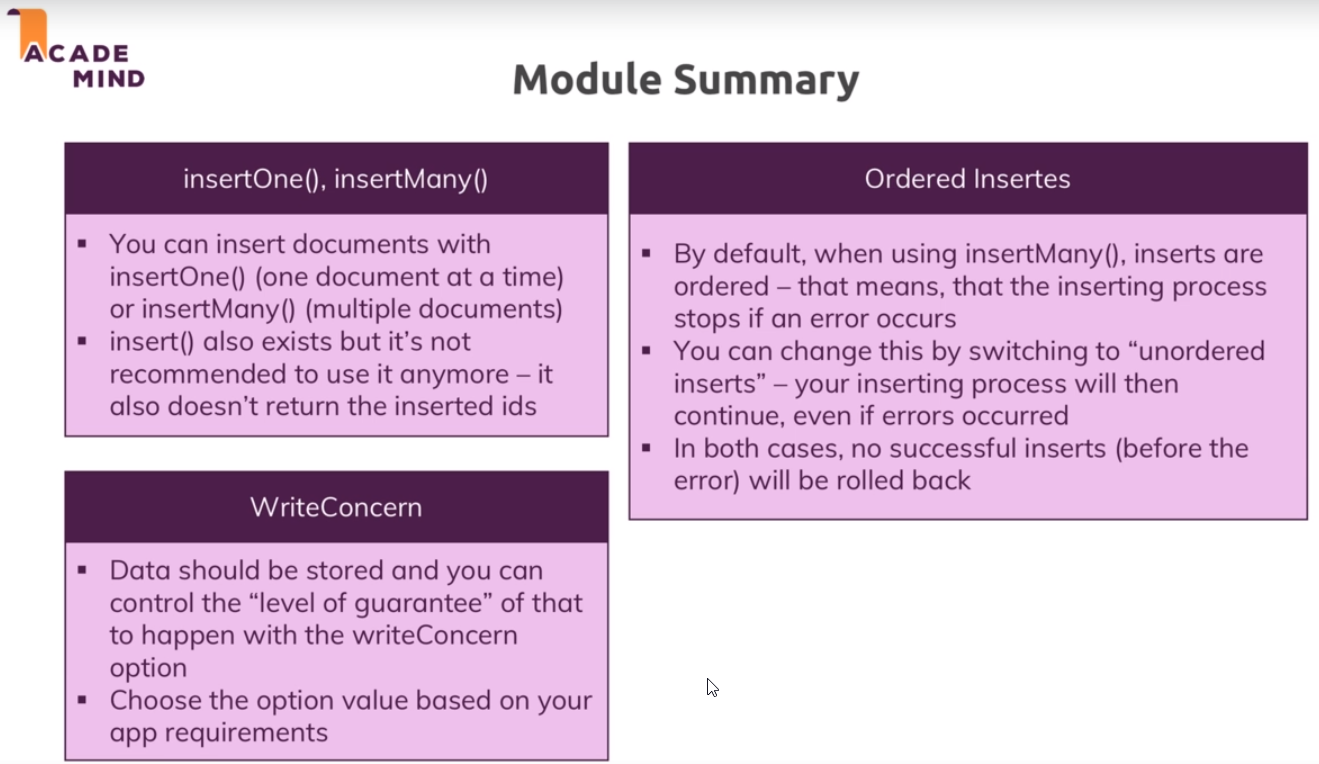

- Diving Into Create Operations

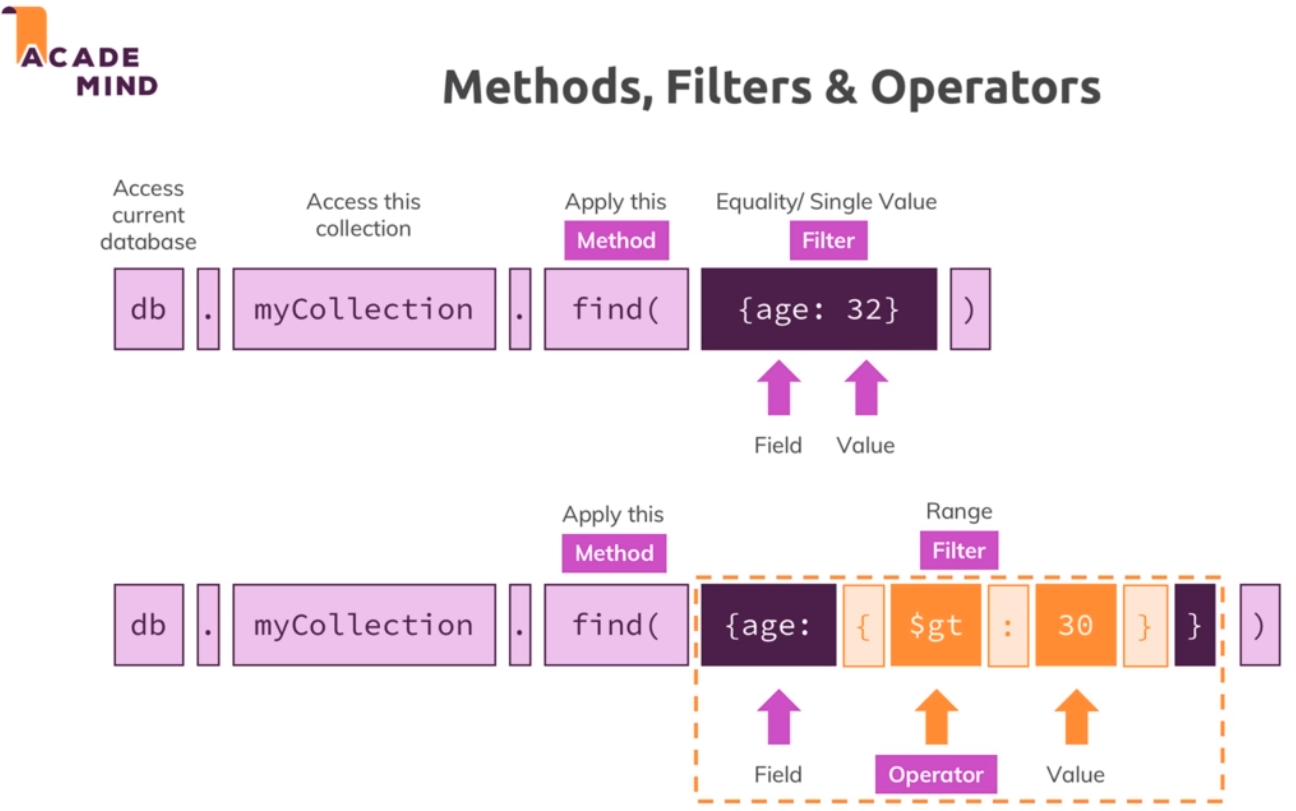

- Read Operations - A closer look

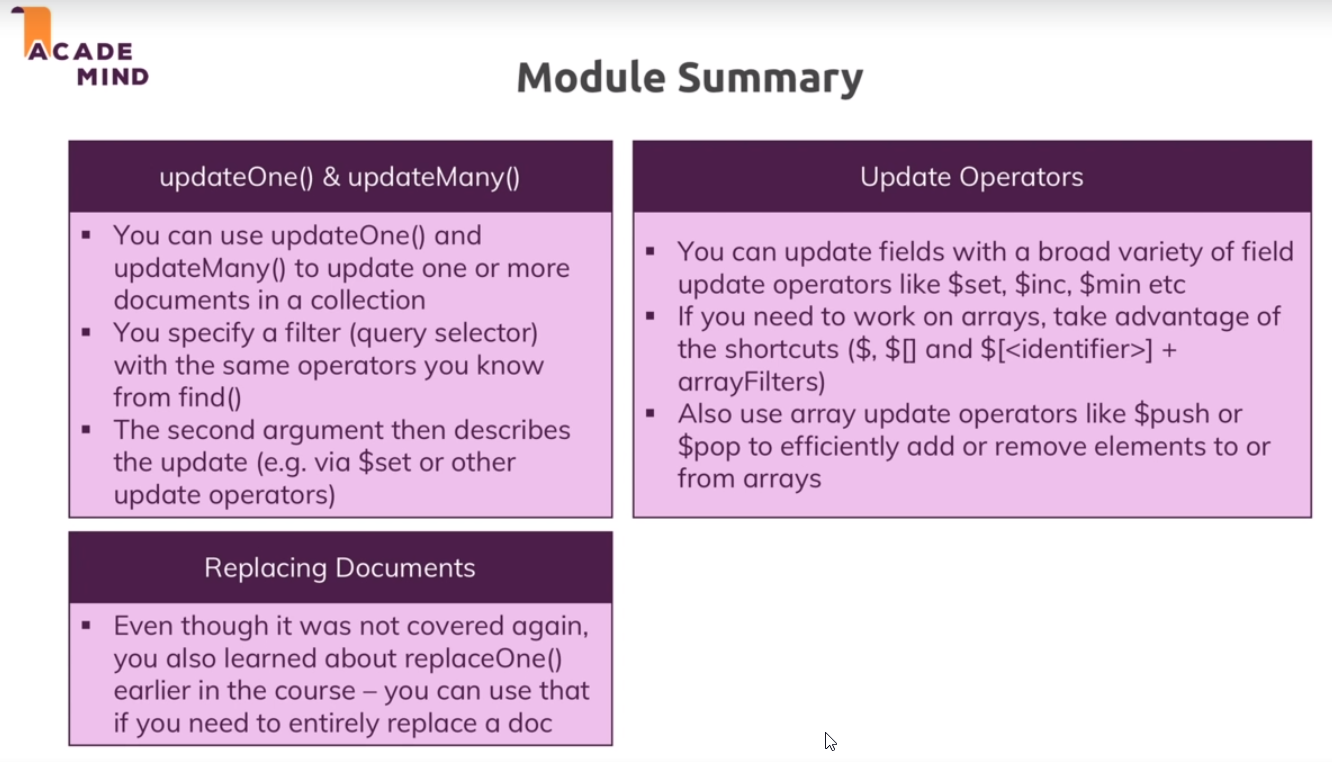

- Update operations

- Understanding Delete Operations

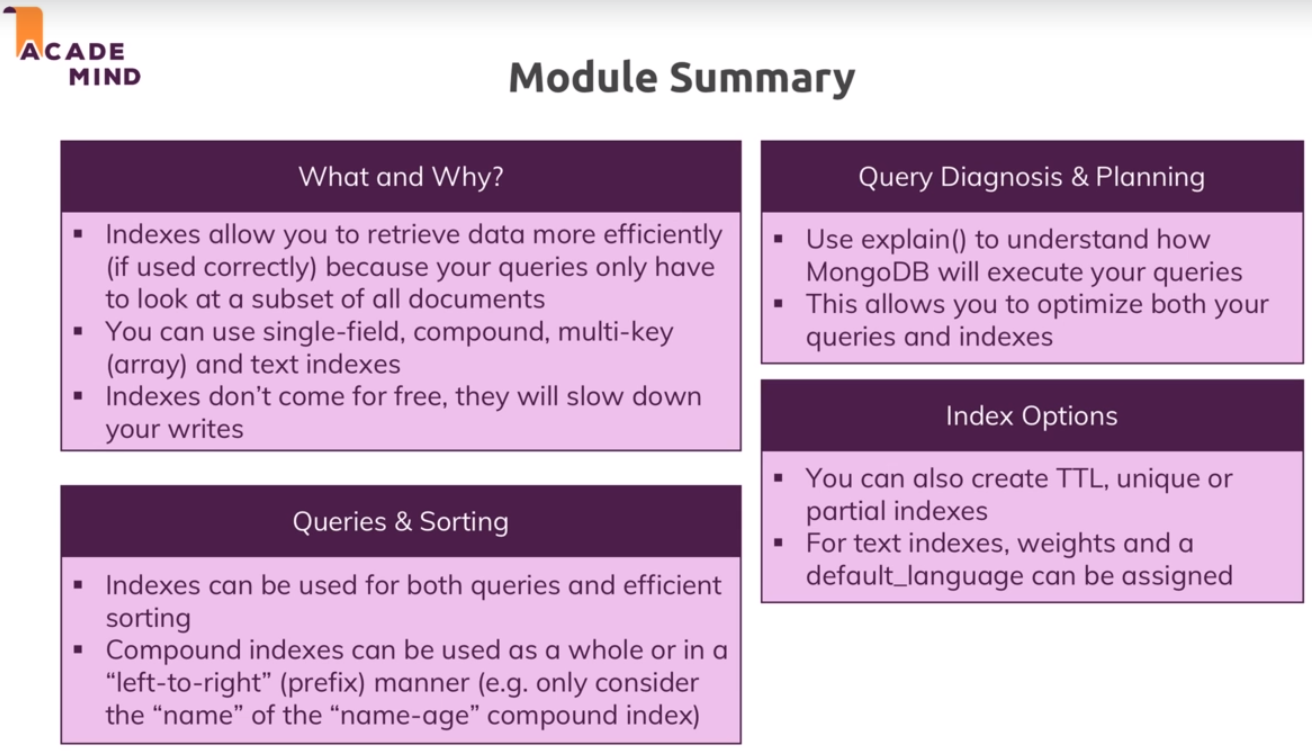

- Working with Indexes

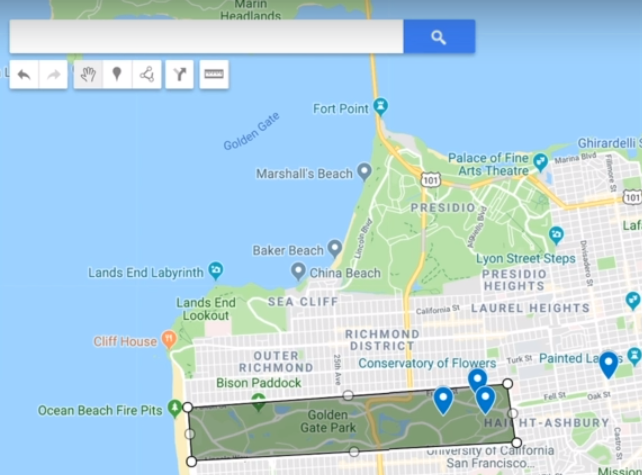

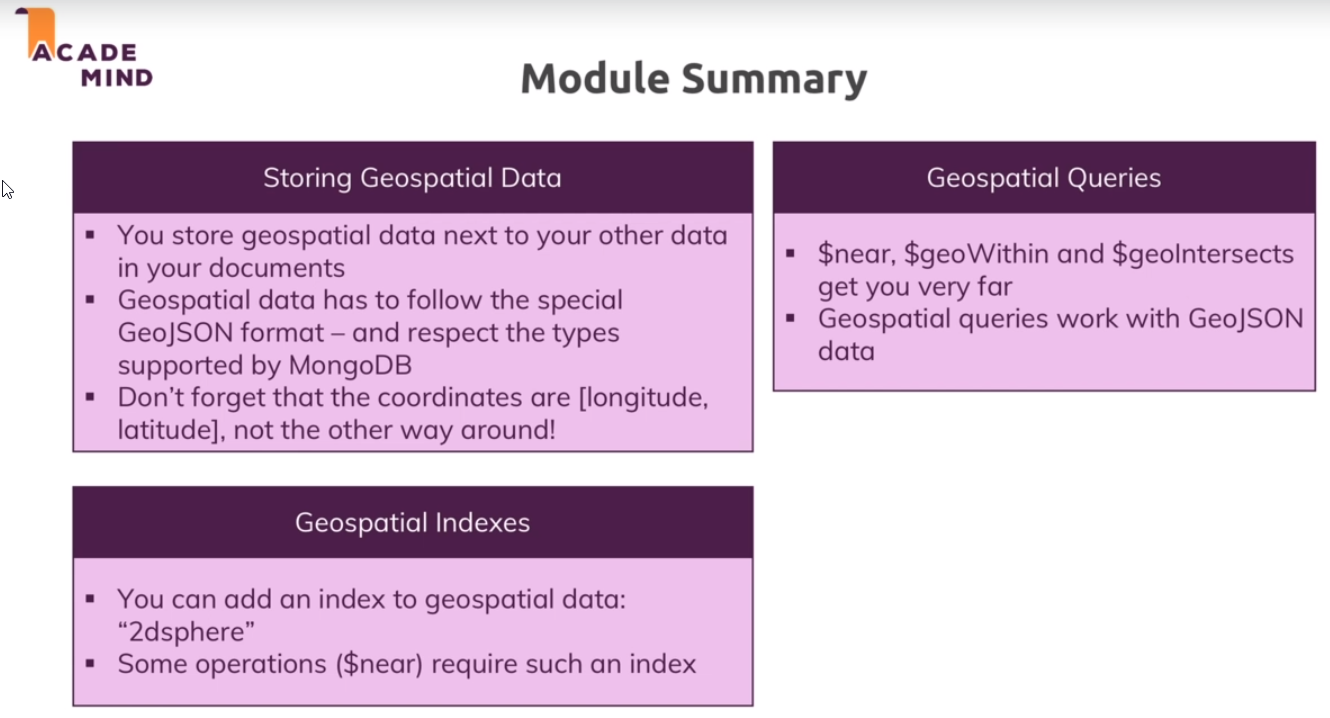

- Working with Geospatial Data

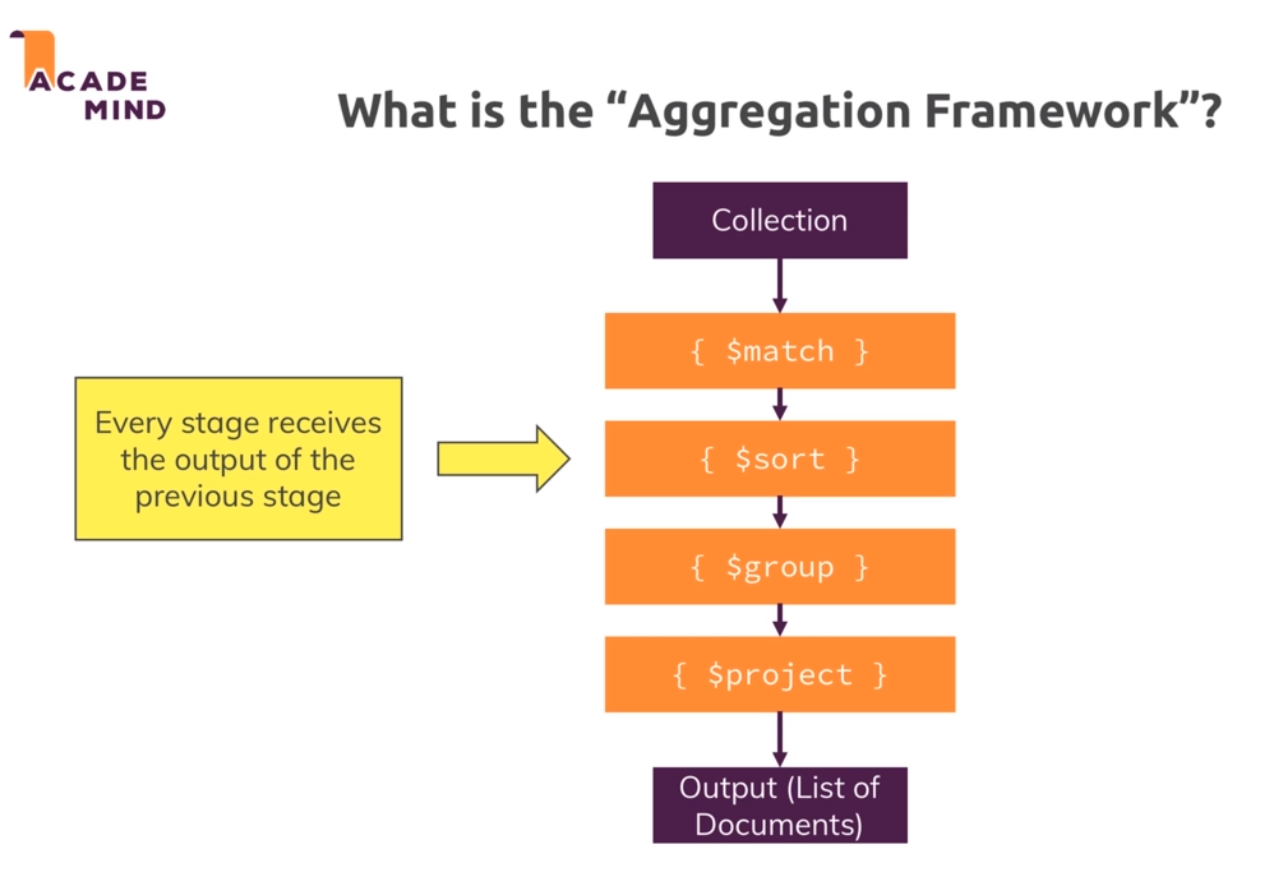

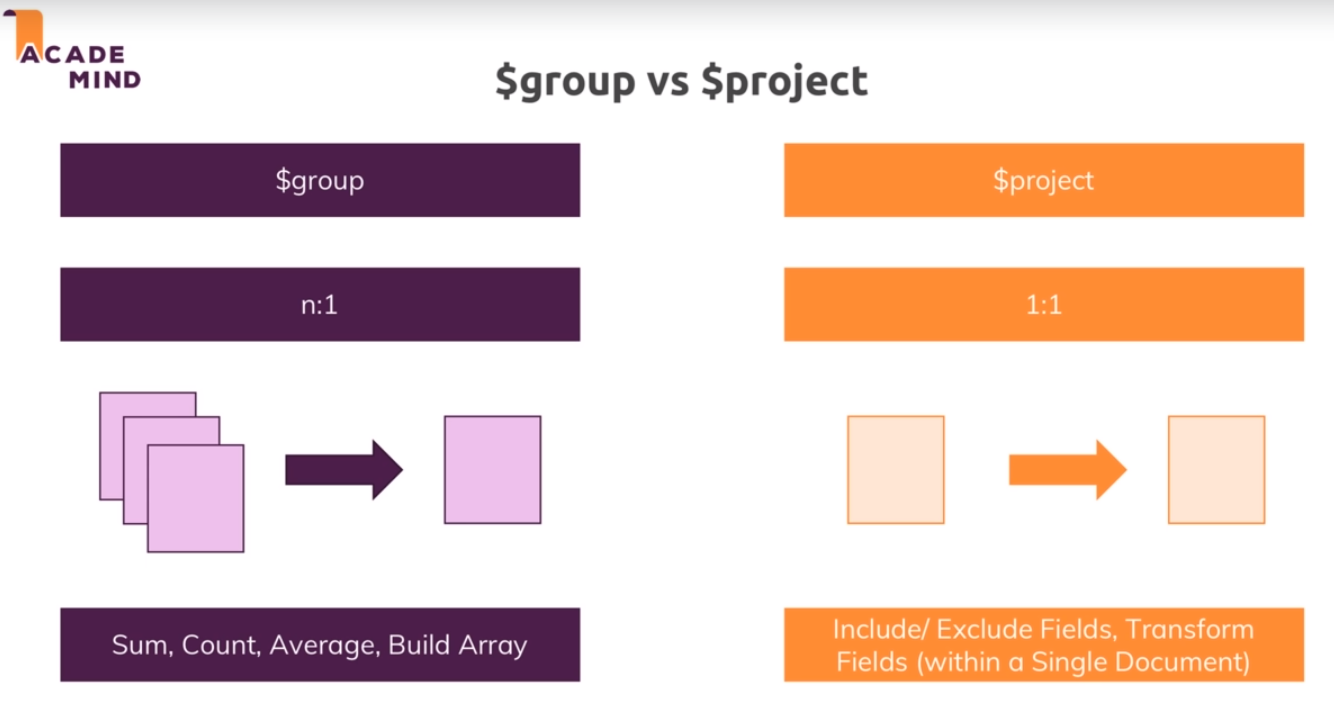

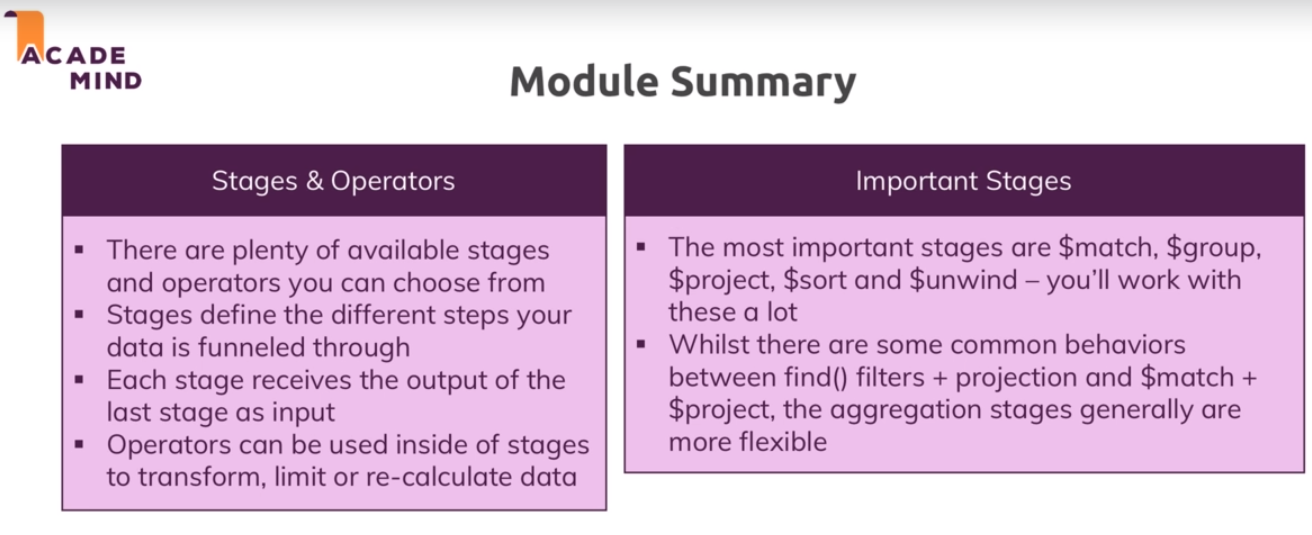

- Understanding the Aggregation Framework

- Working with Numeric Data

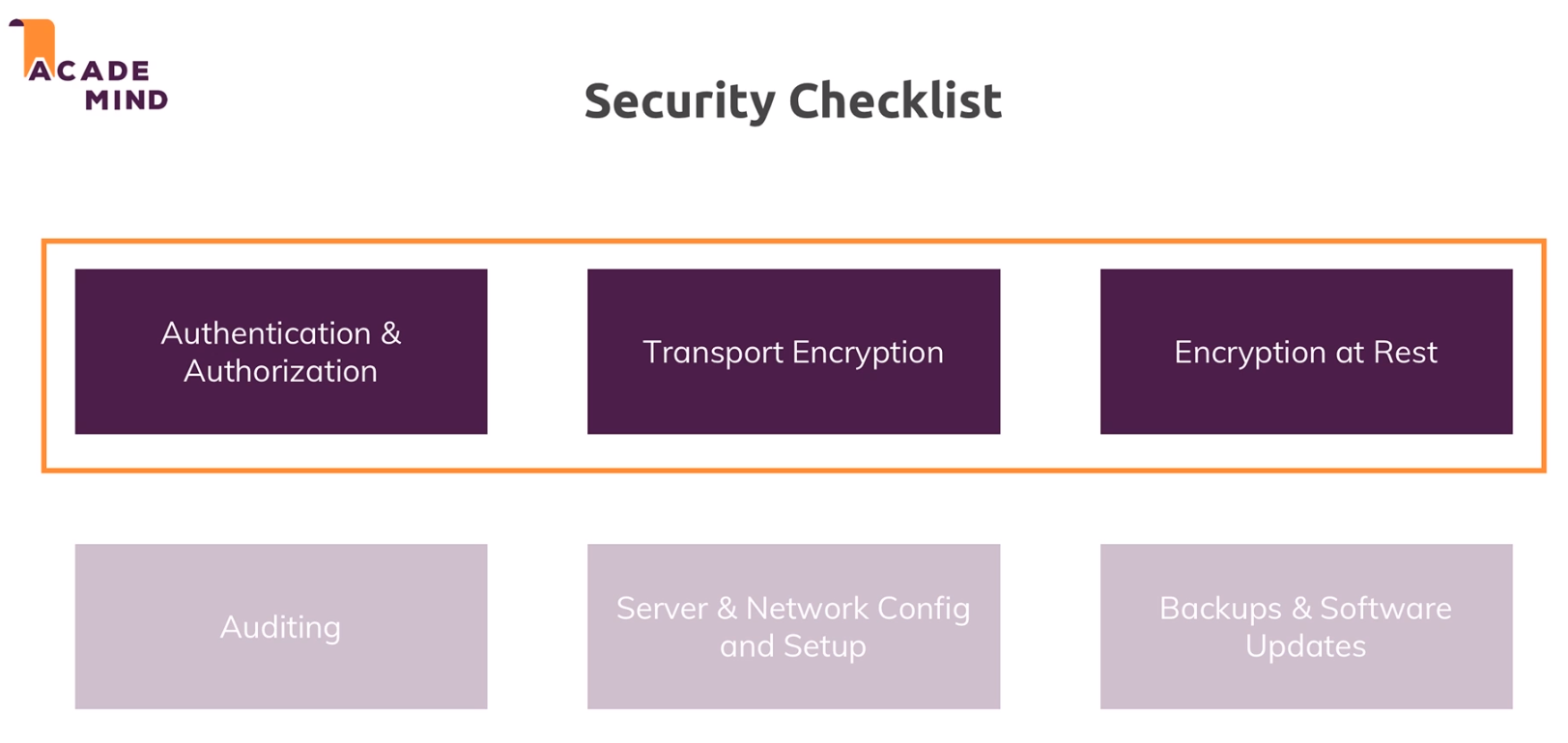

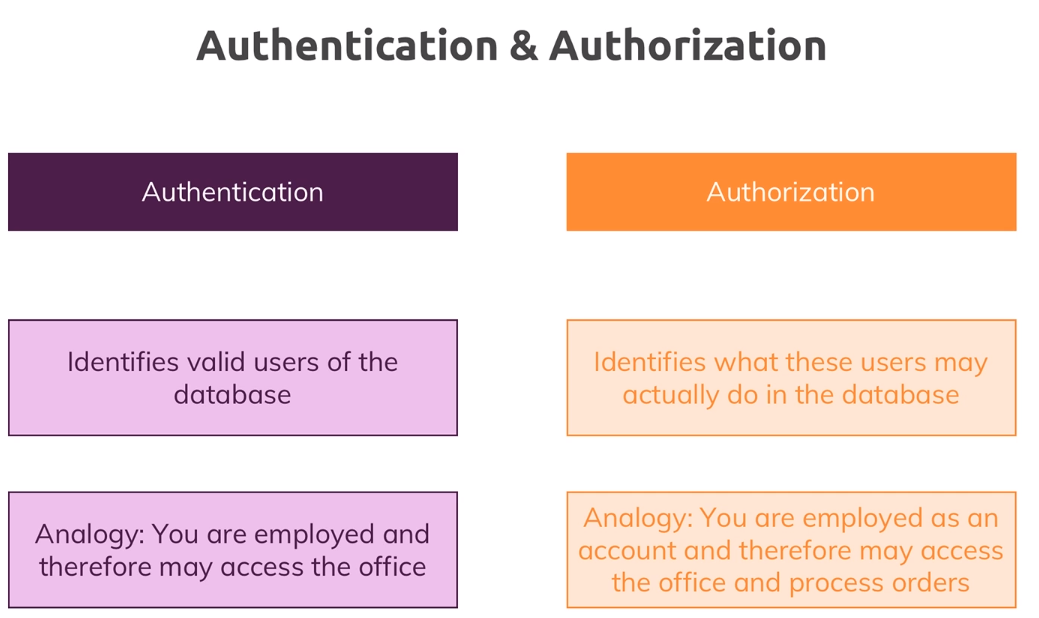

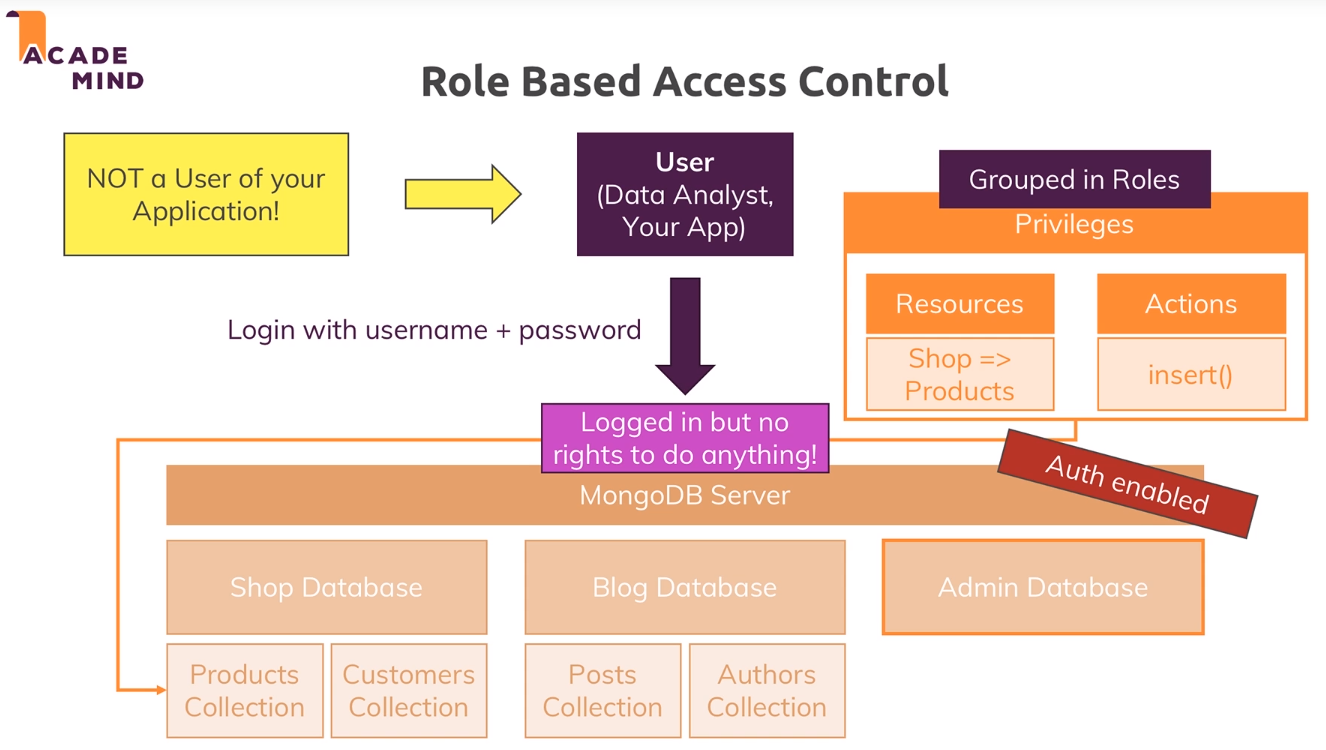

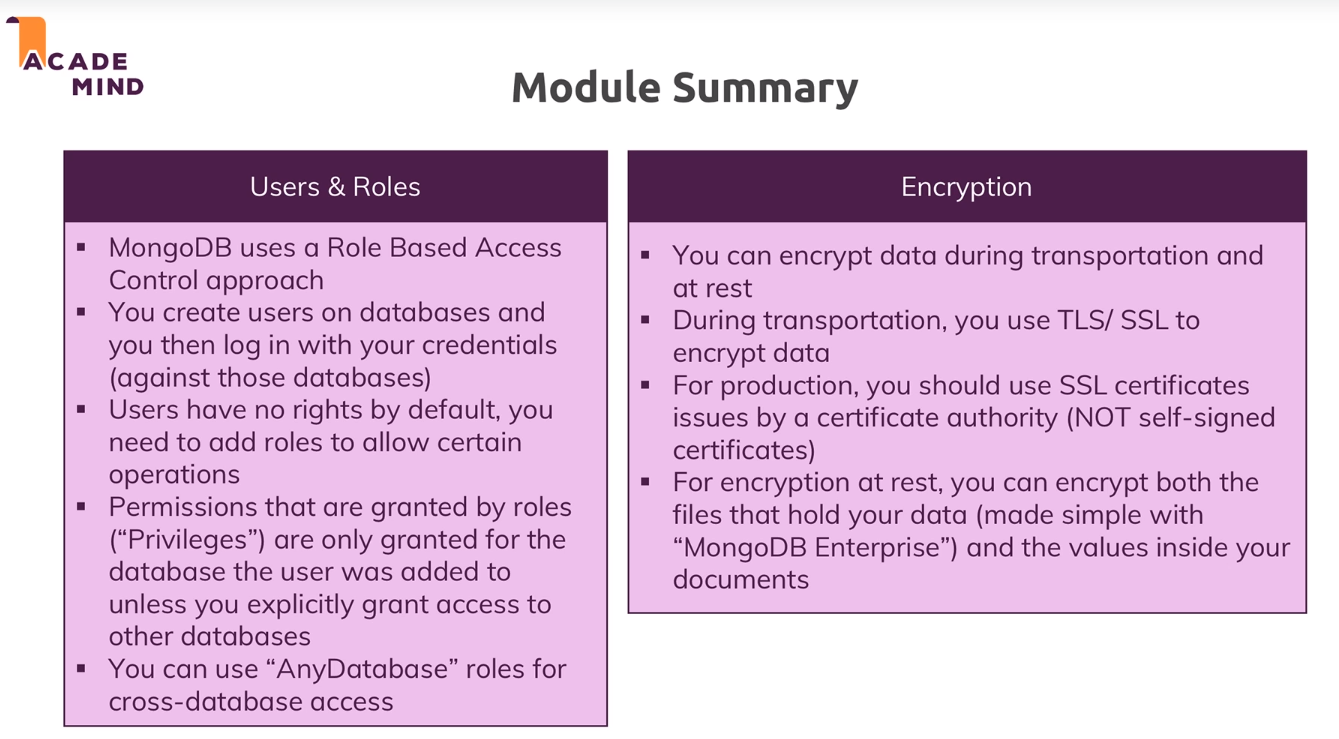

- MongoDB & Security

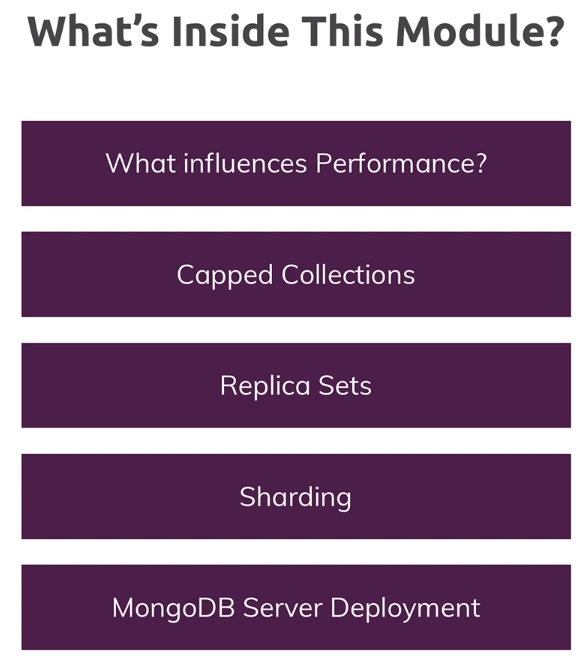

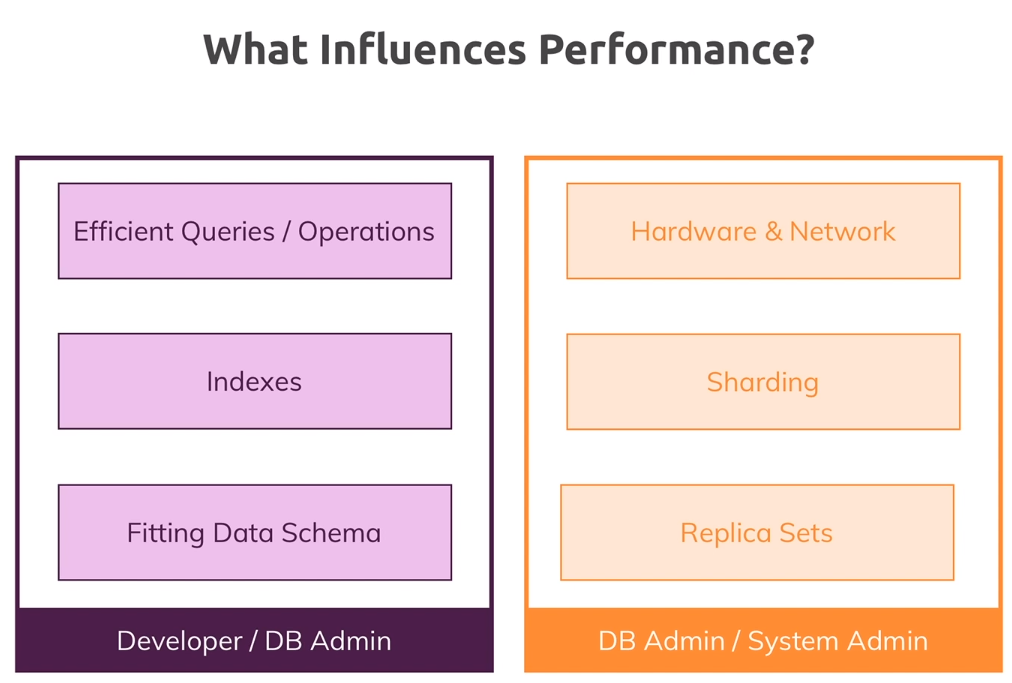

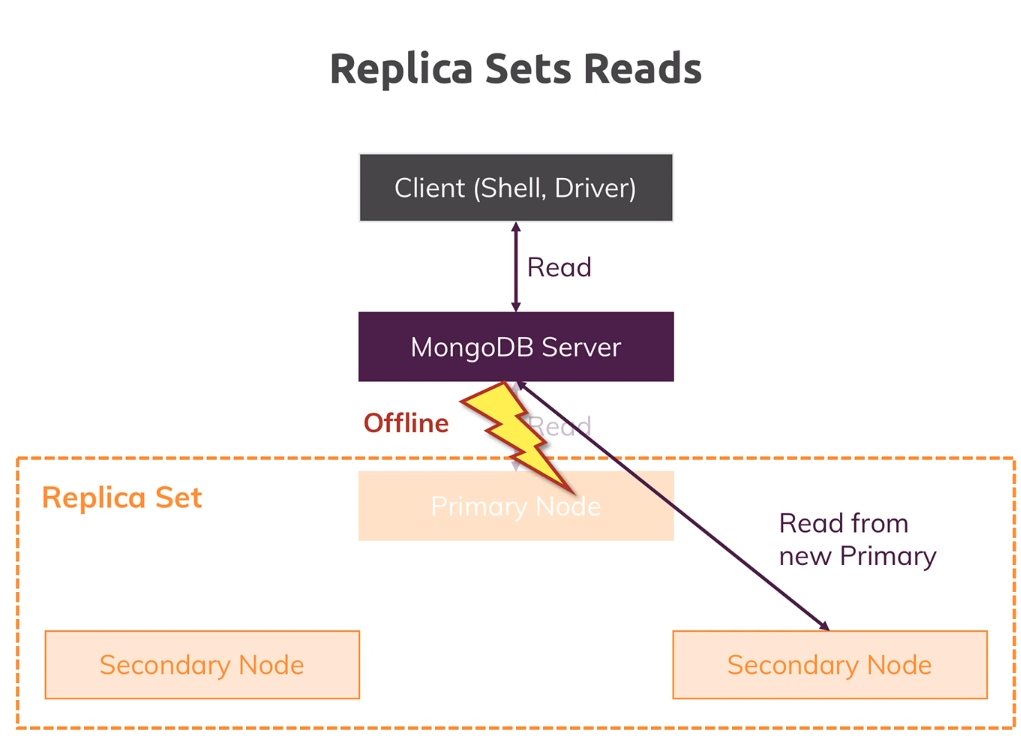

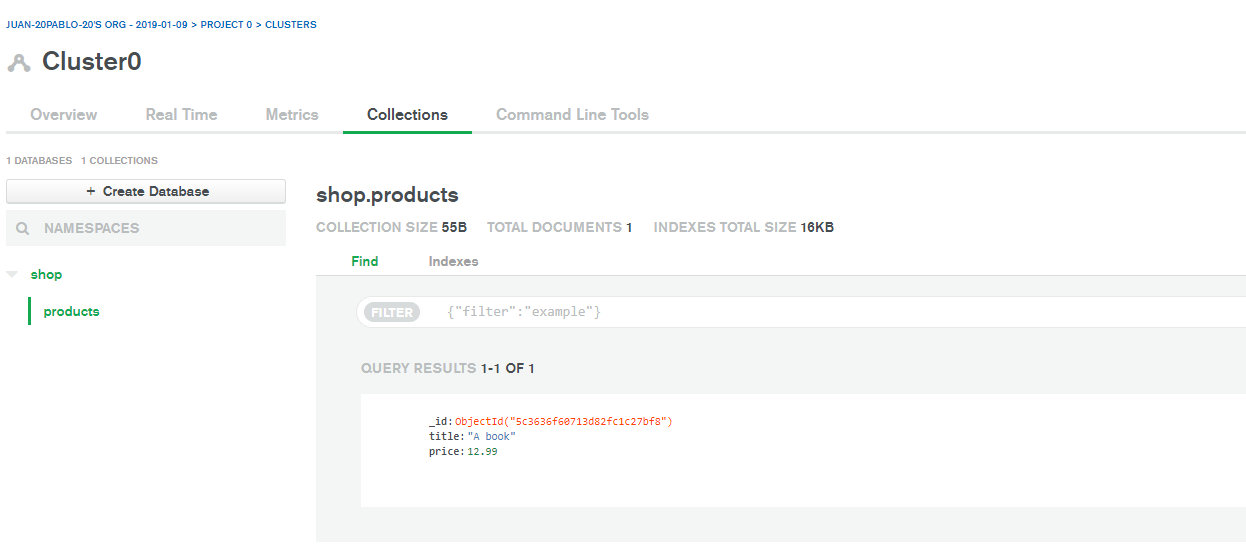

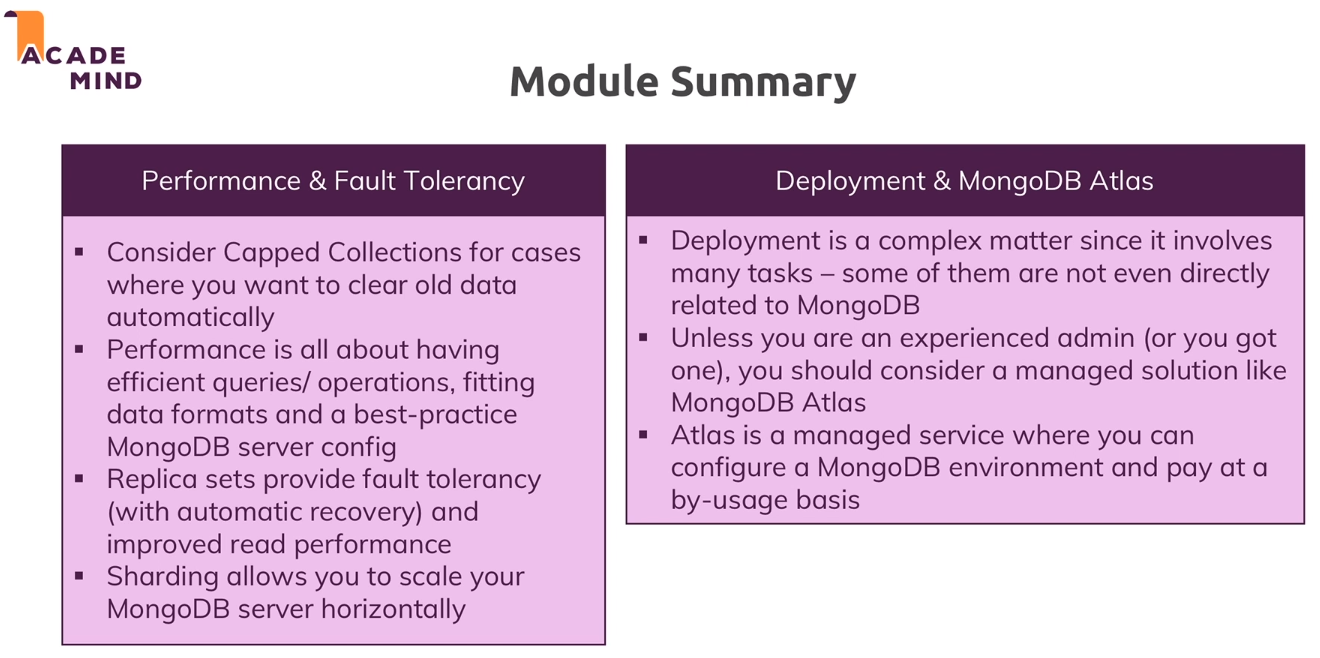

- Performance, Fault Tolerancy & Deployment

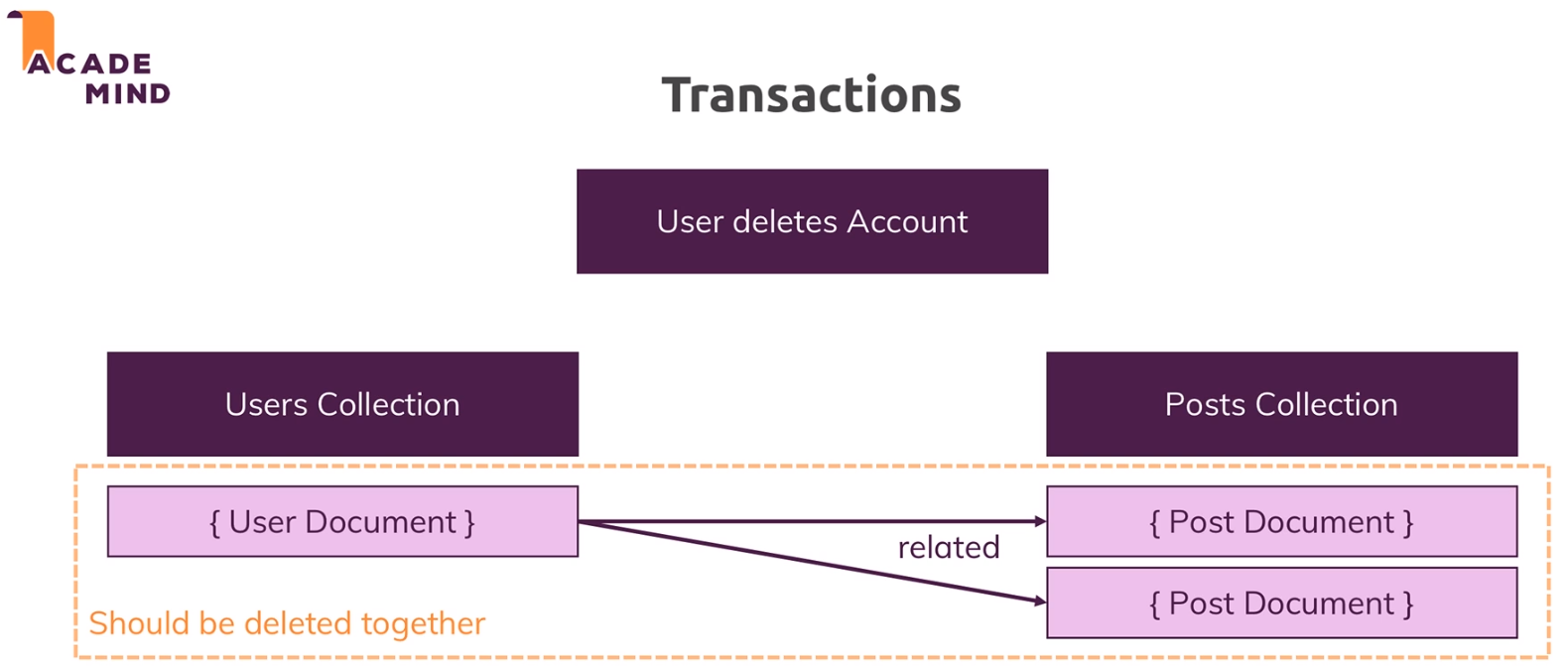

- Transactions

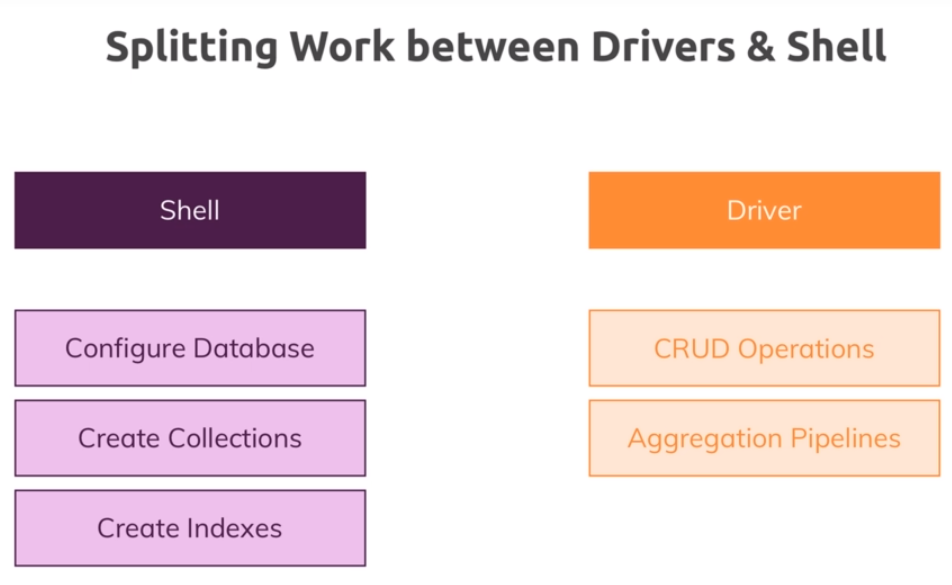

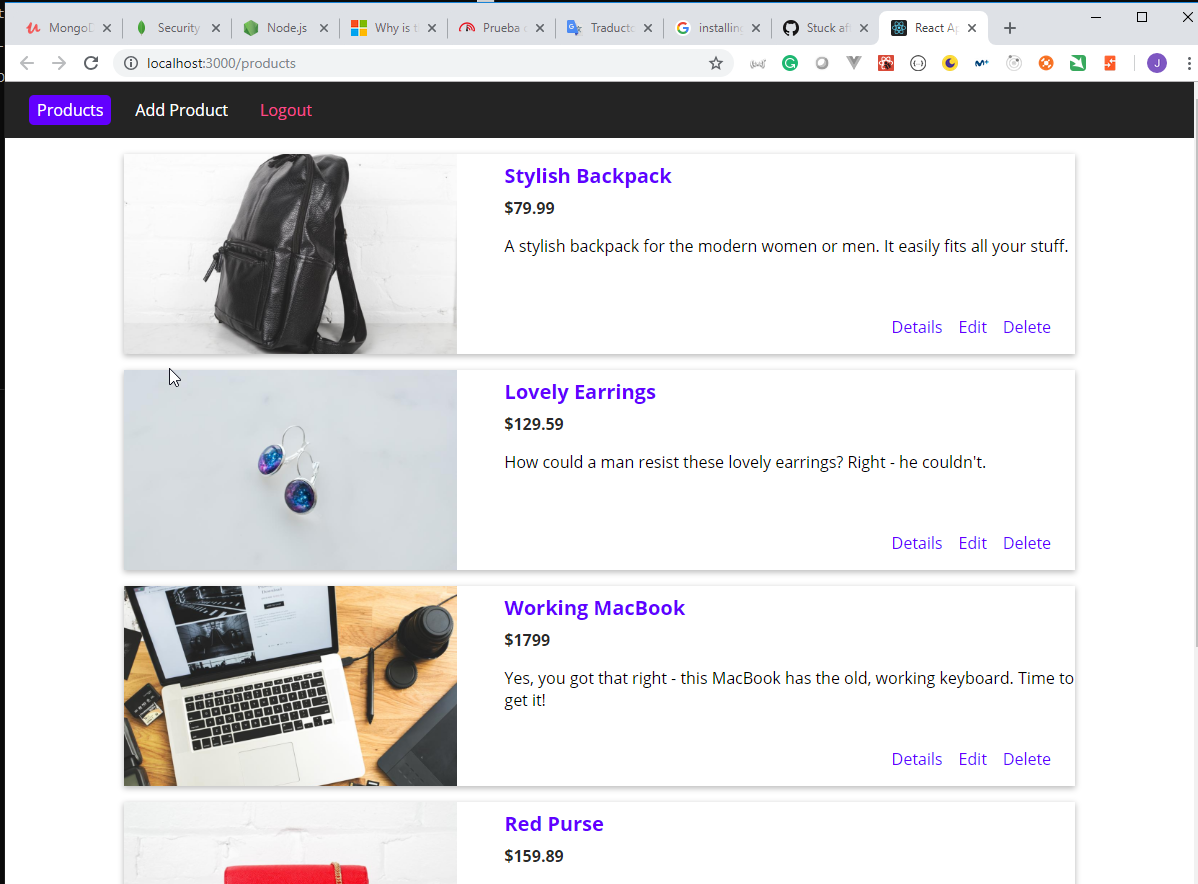

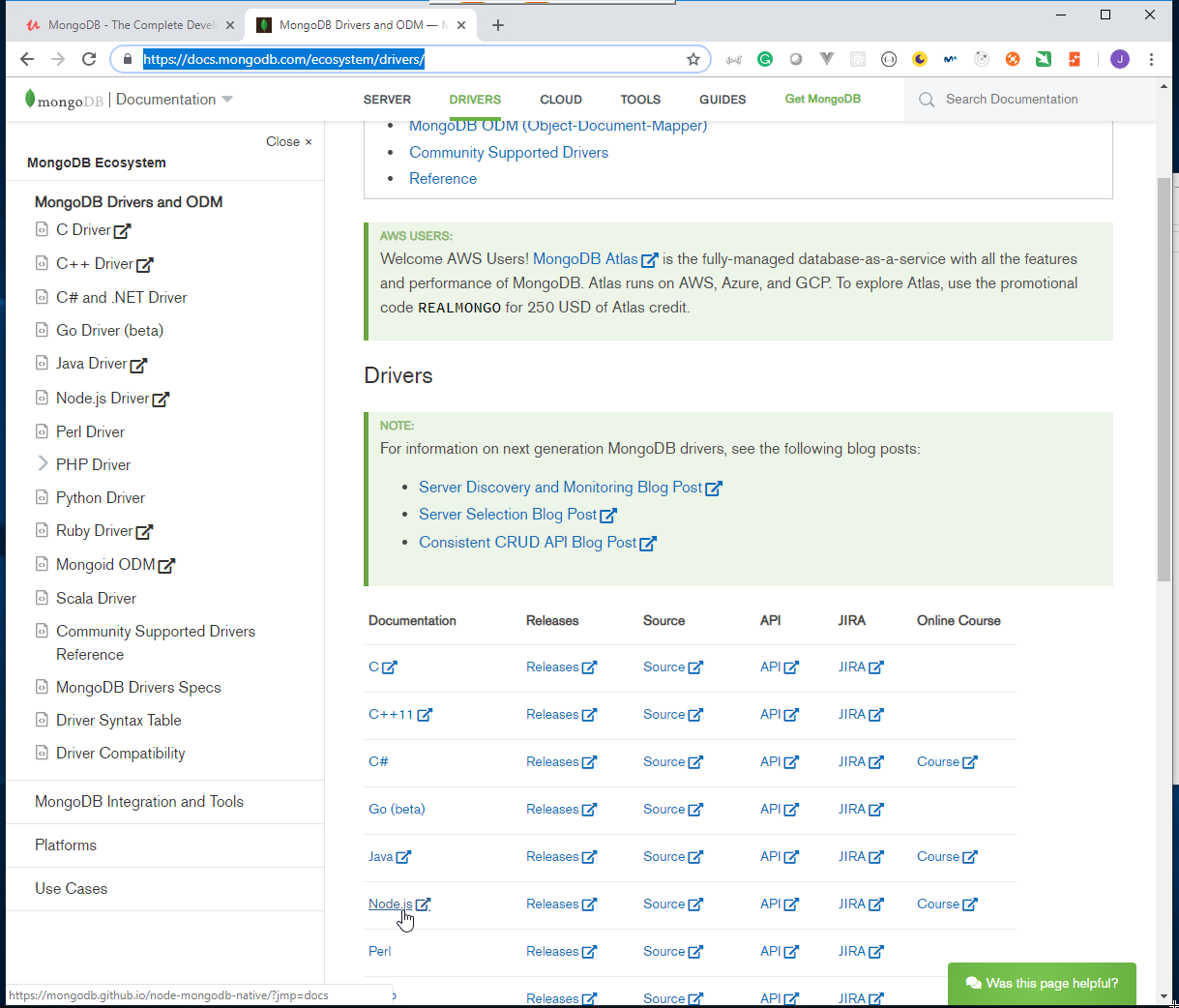

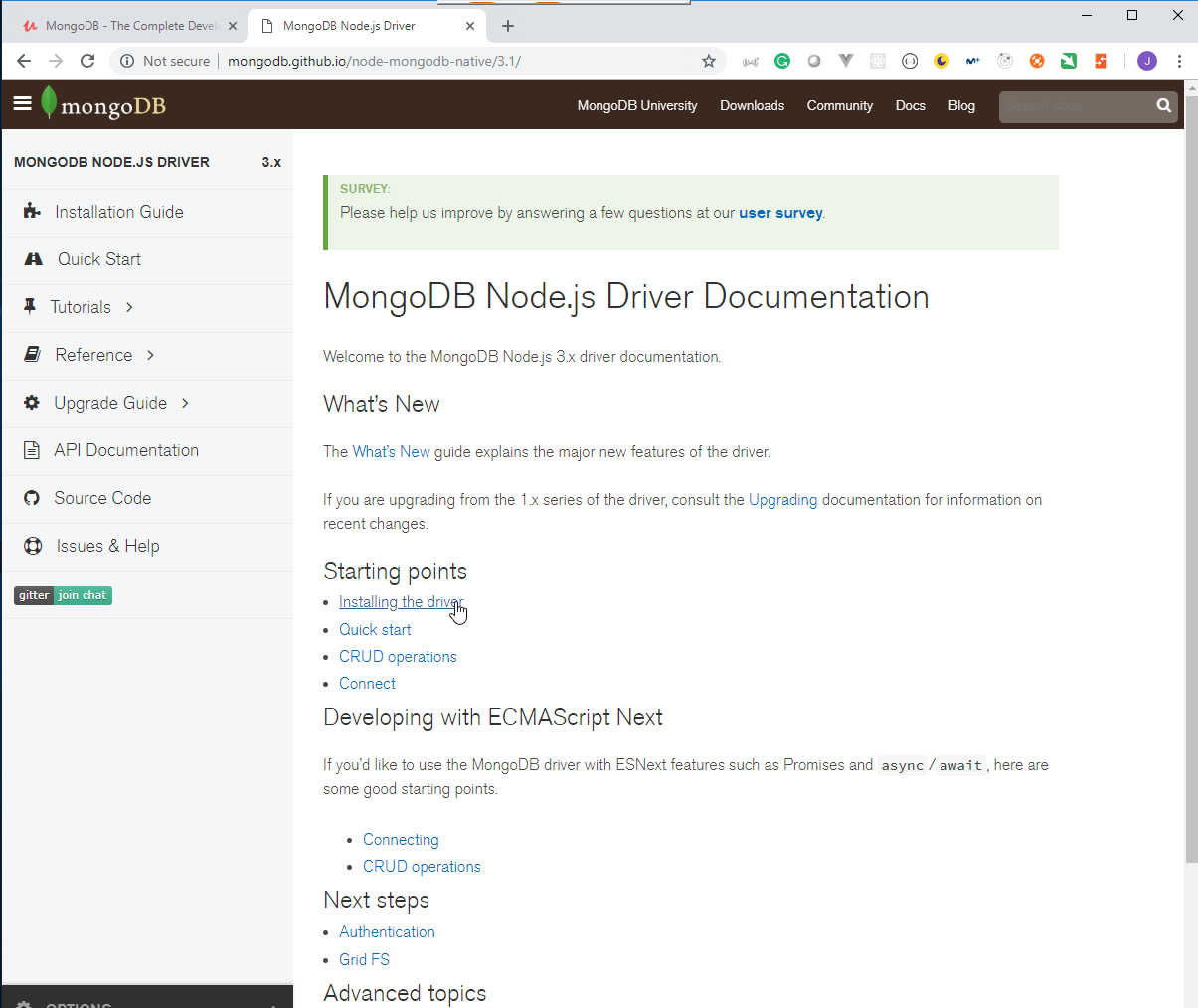

- From Shell to Driver

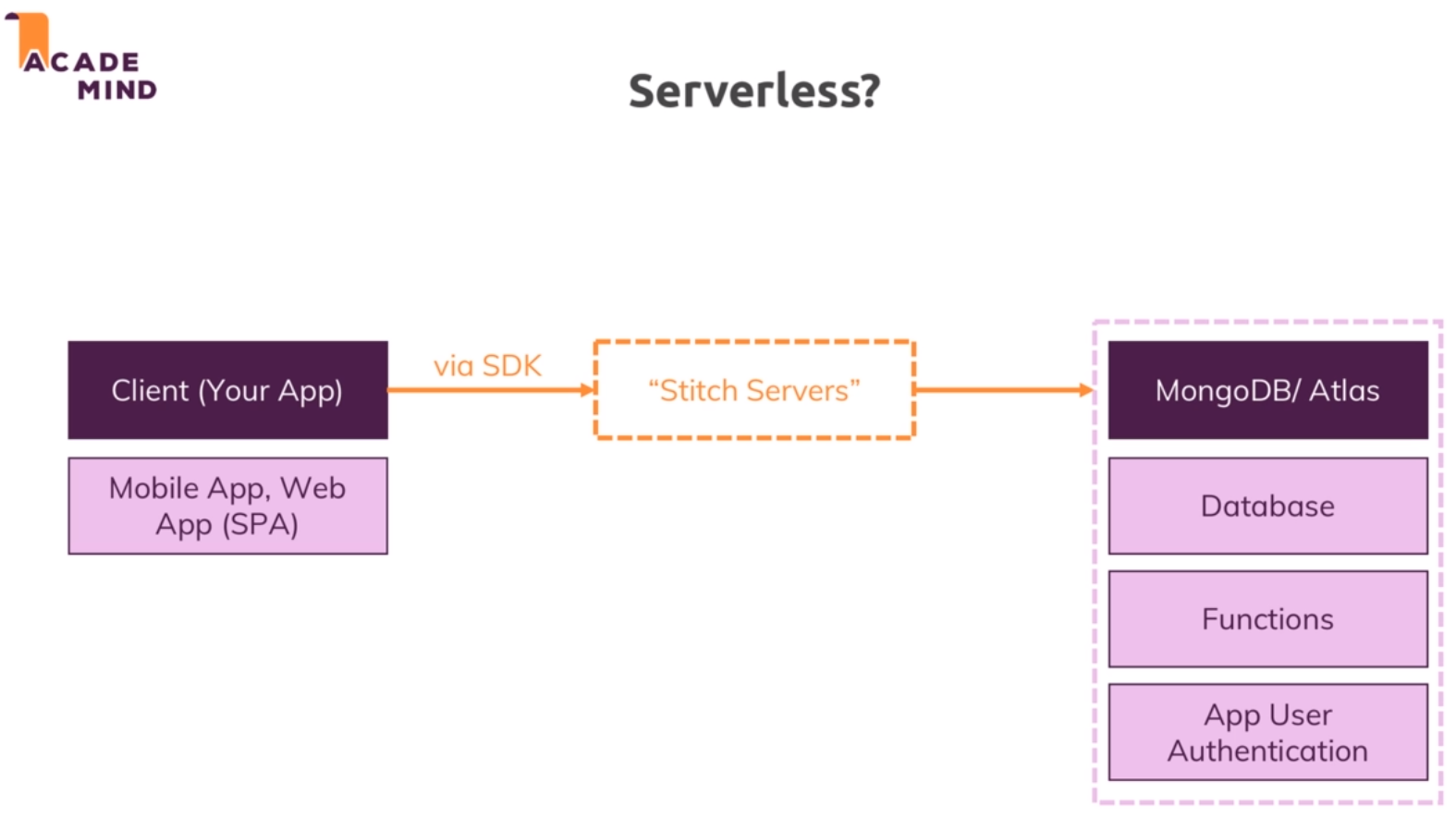

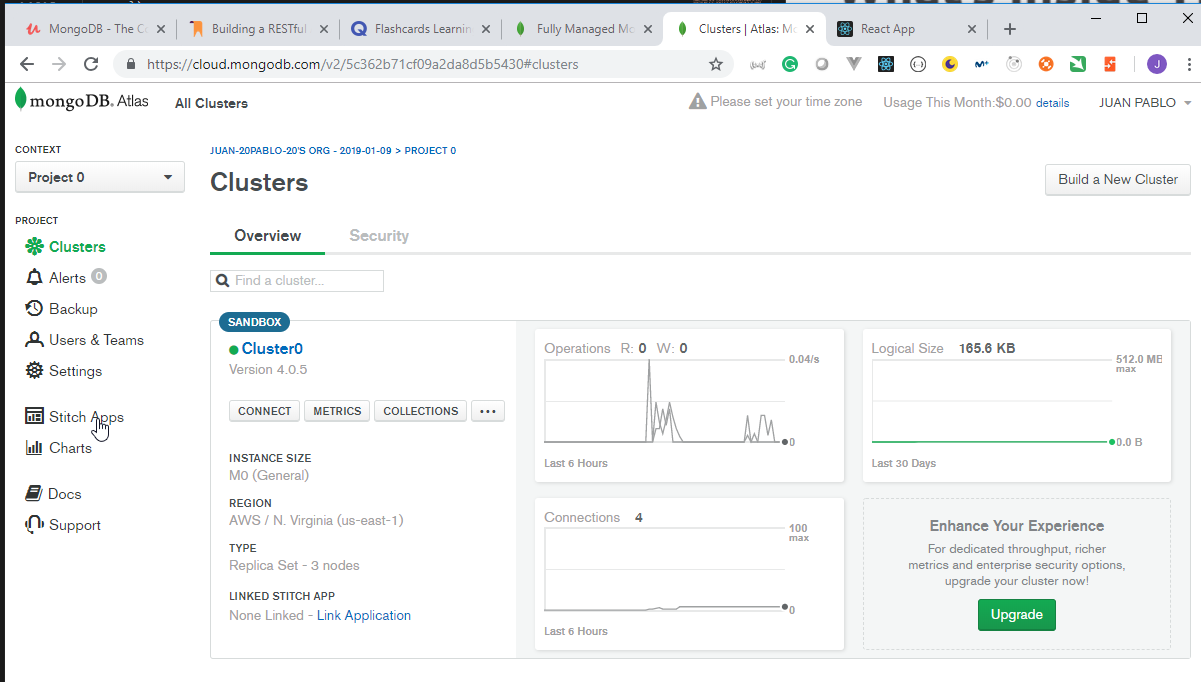

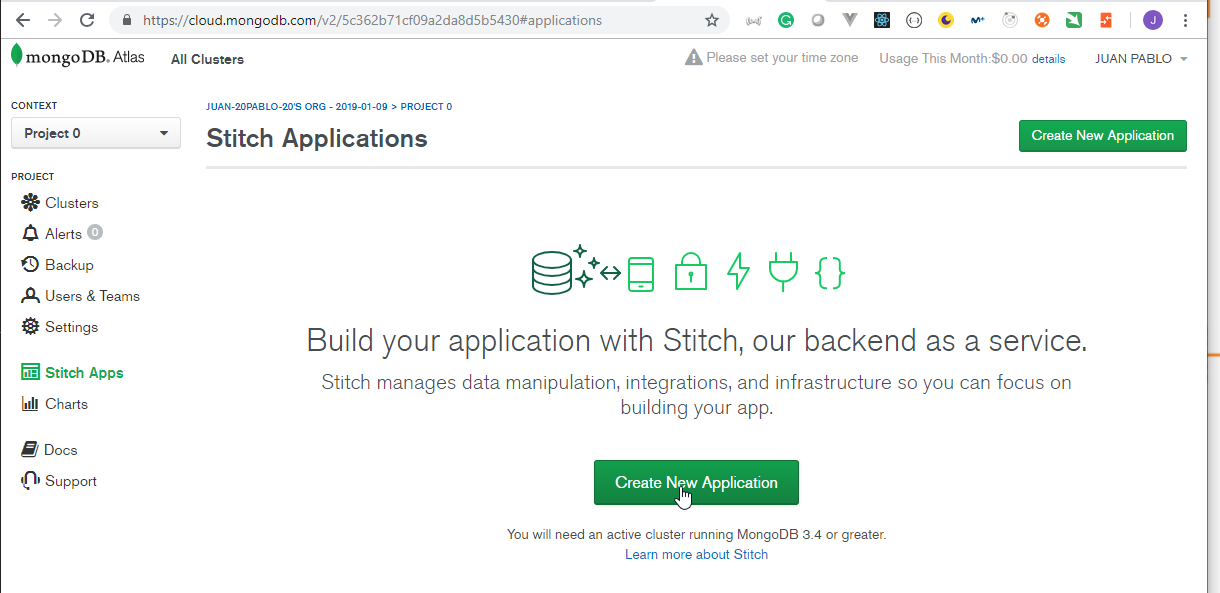

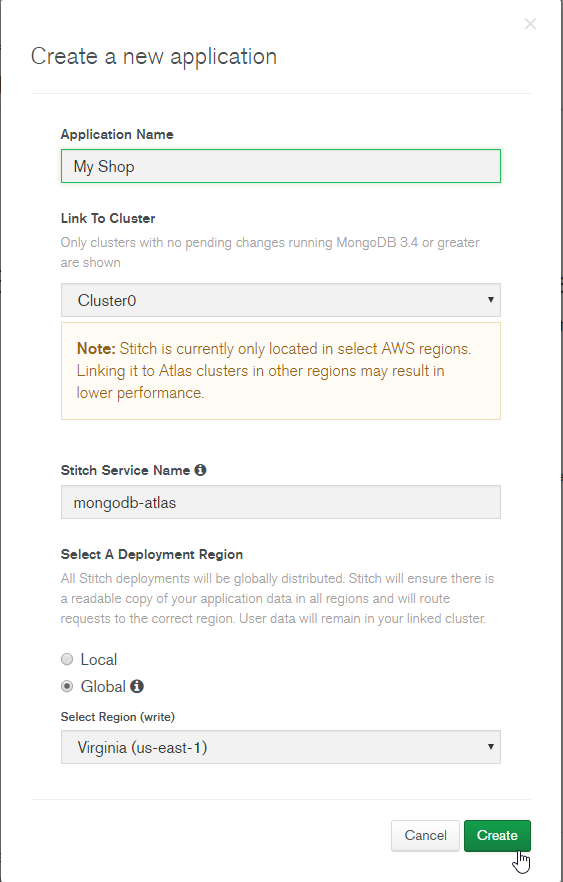

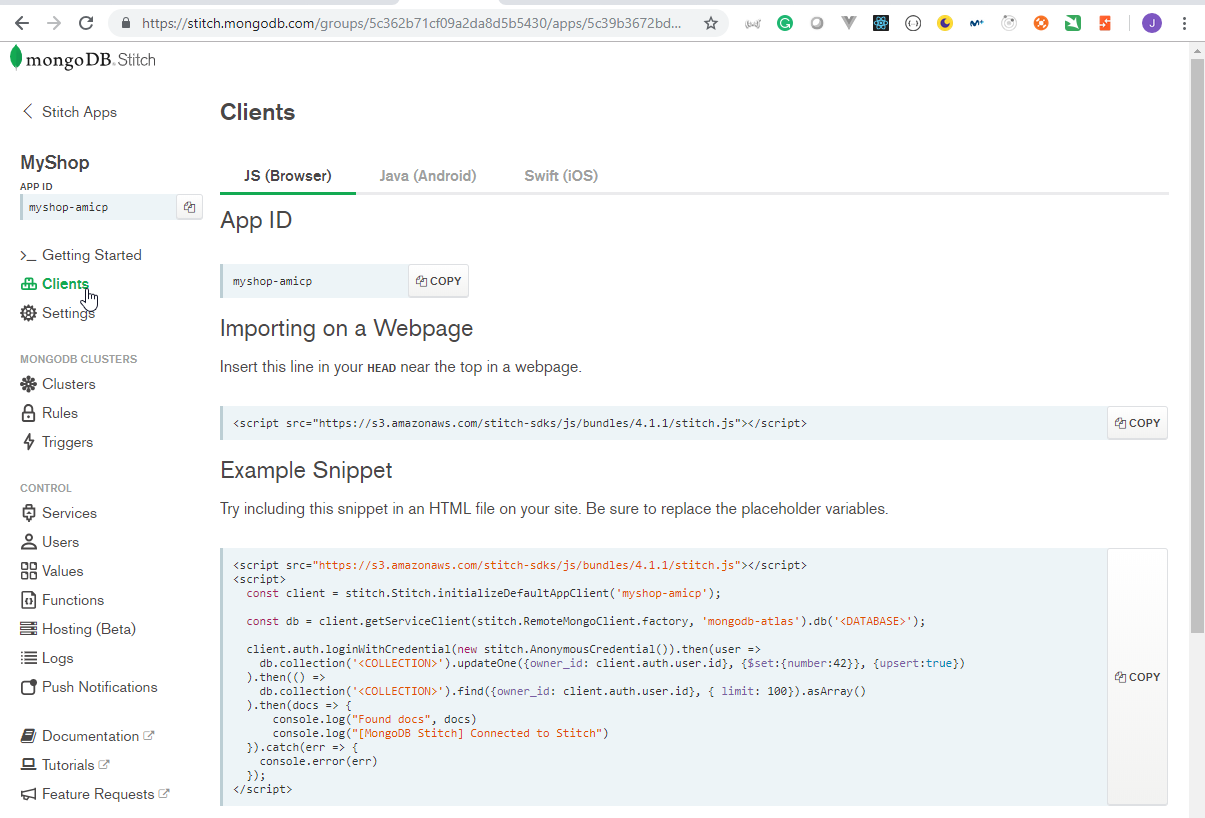

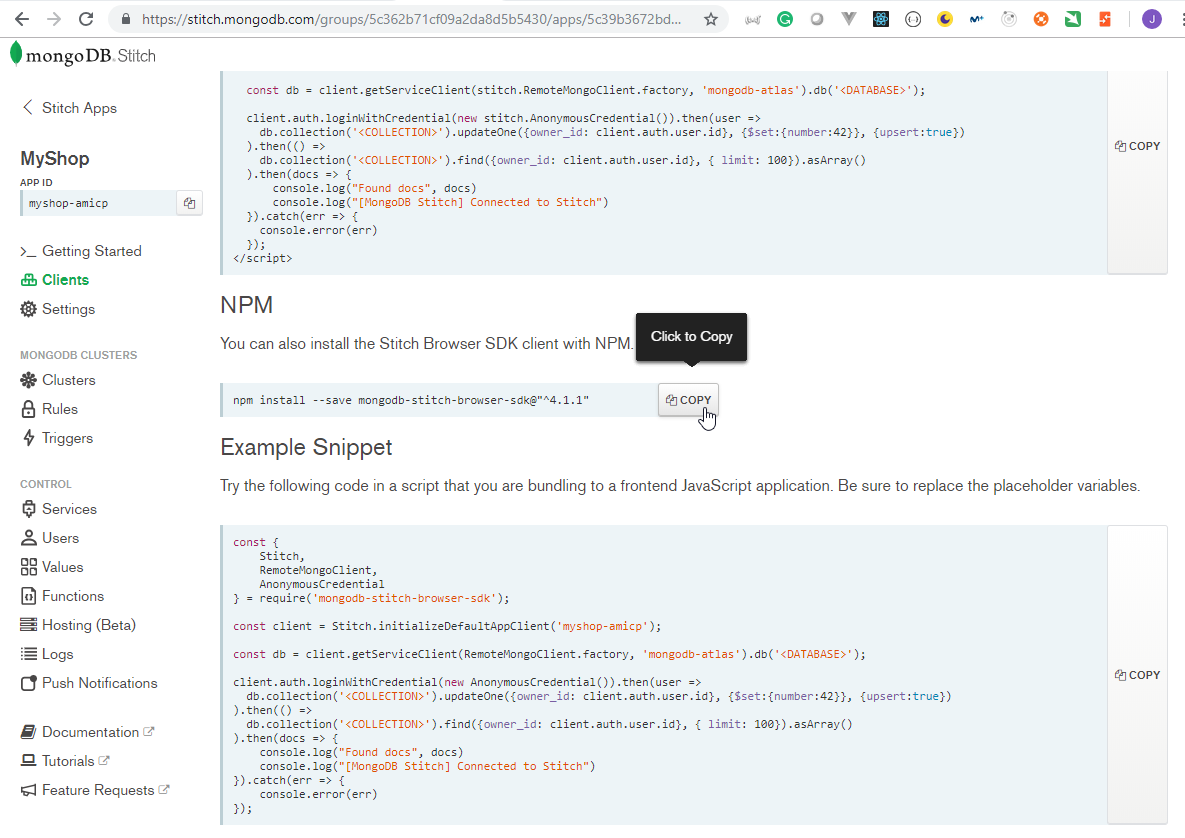

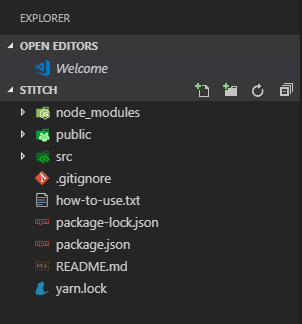

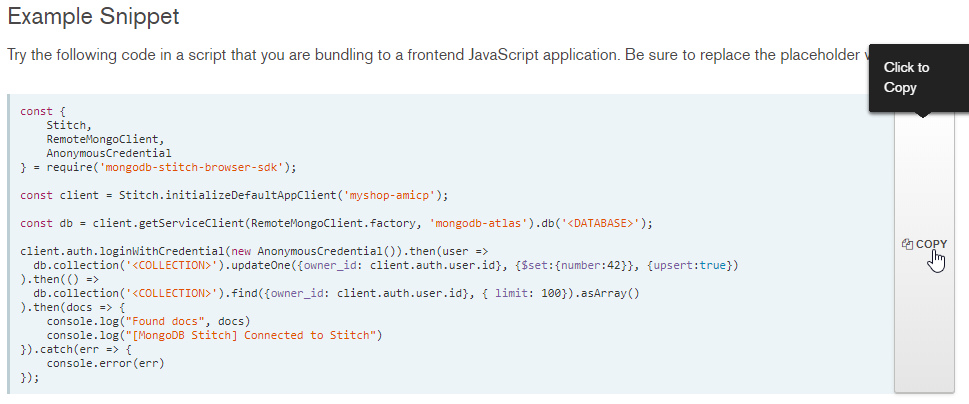

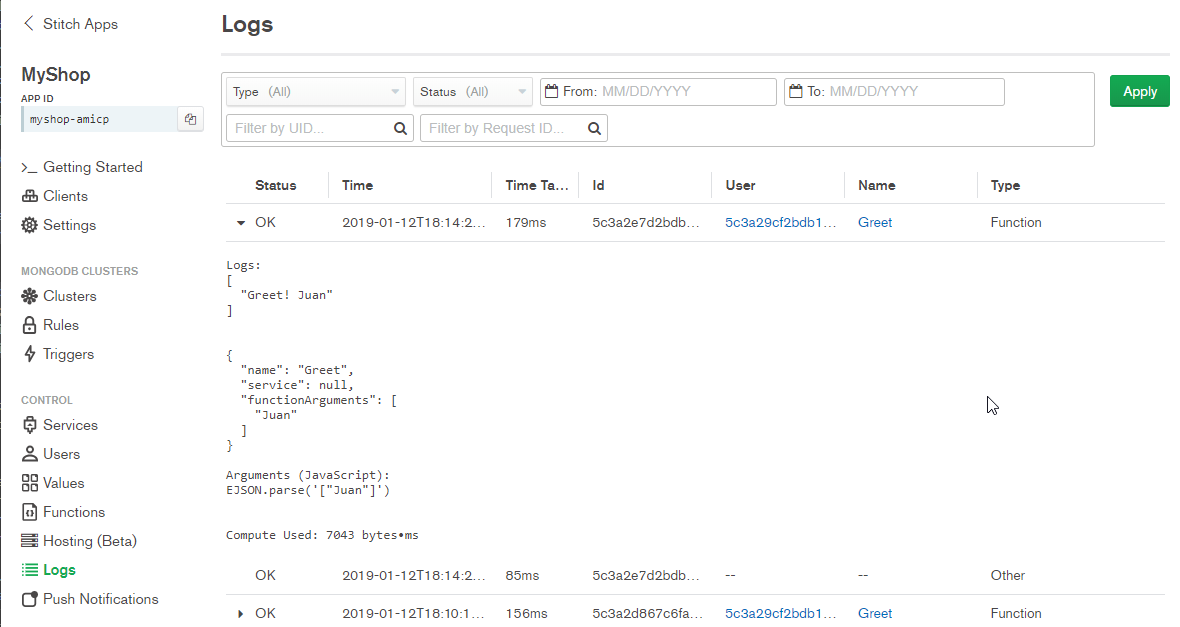

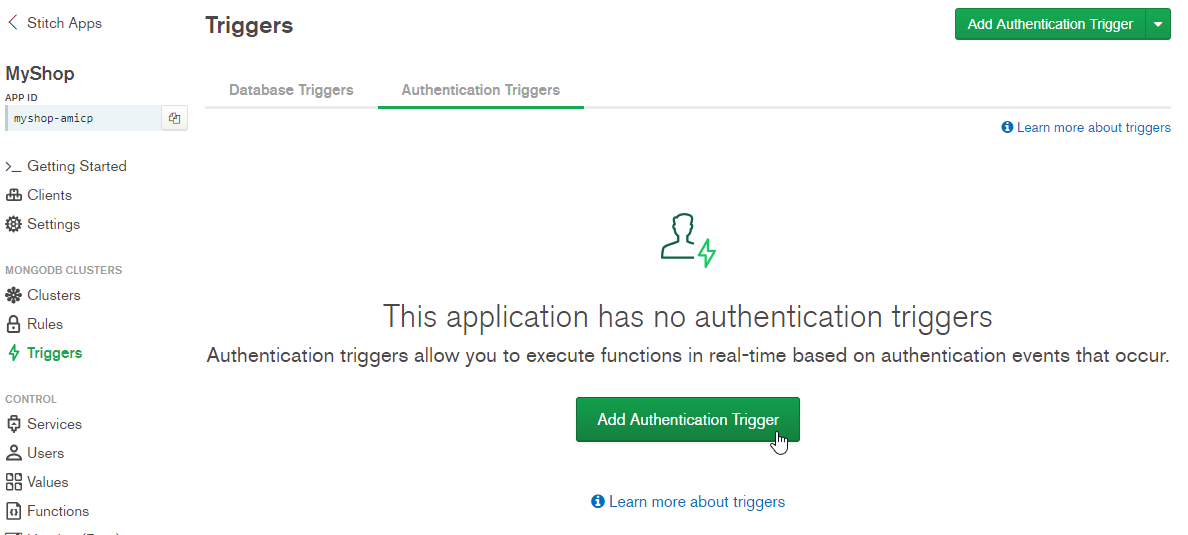

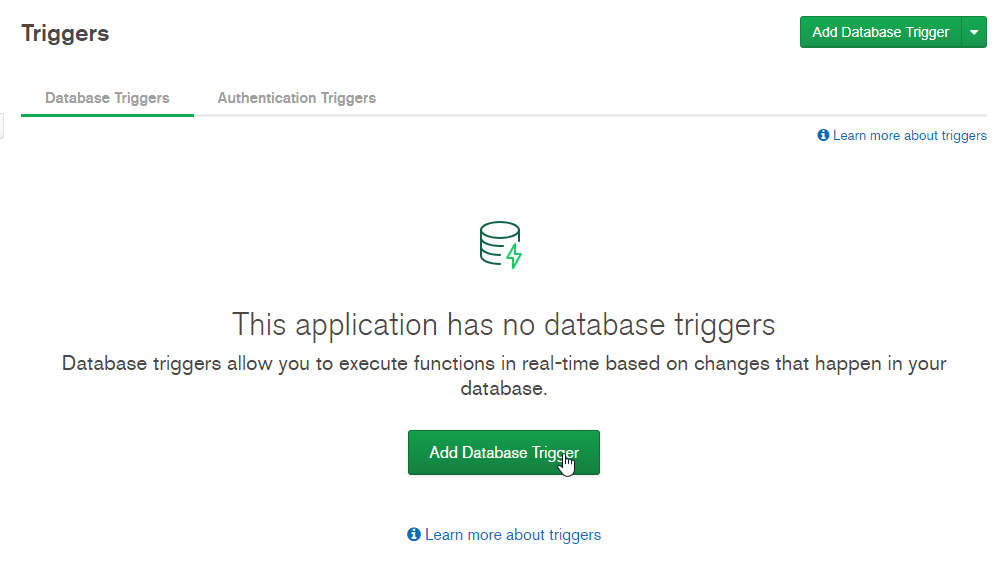

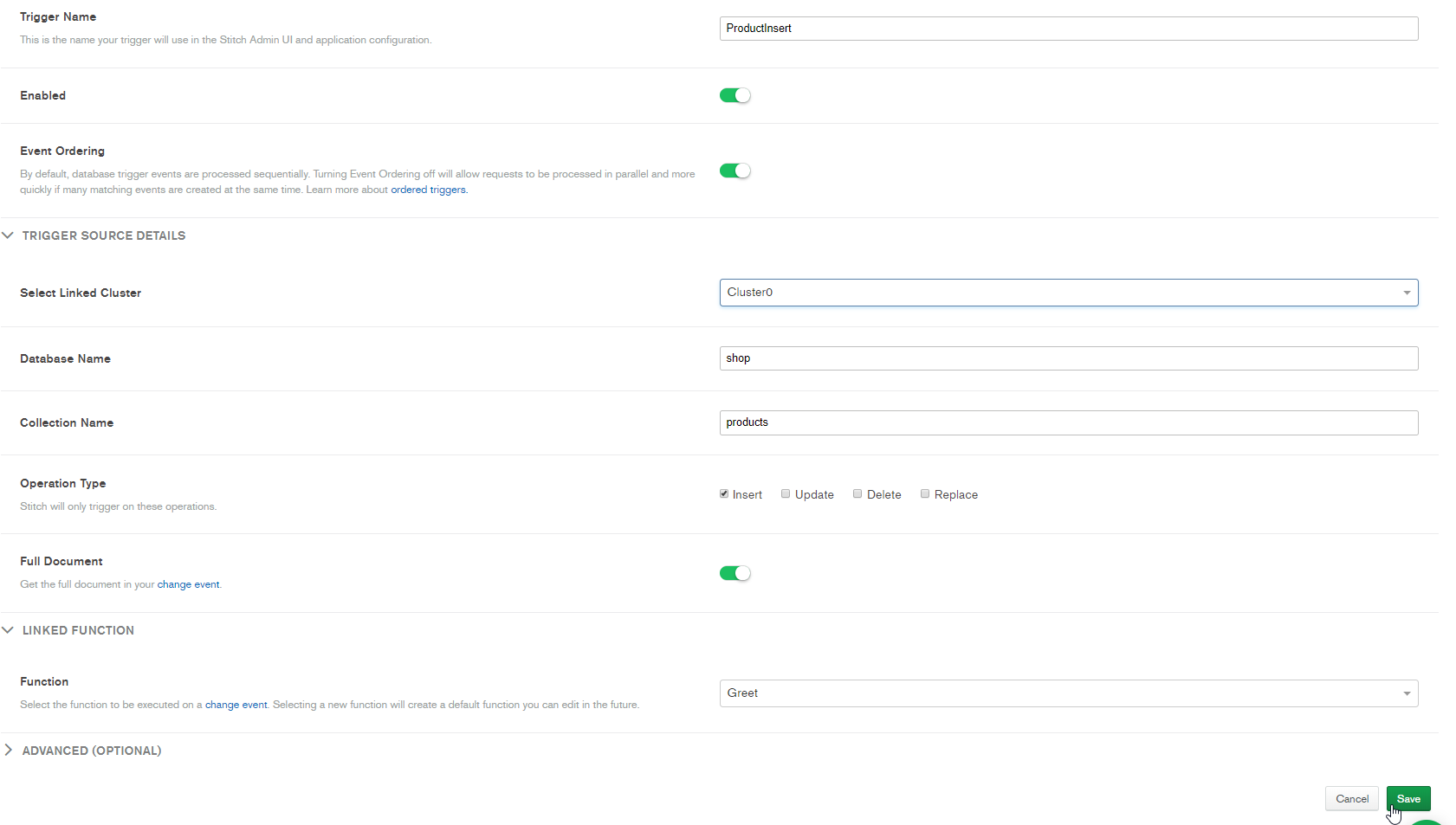

- Introducing Stitch

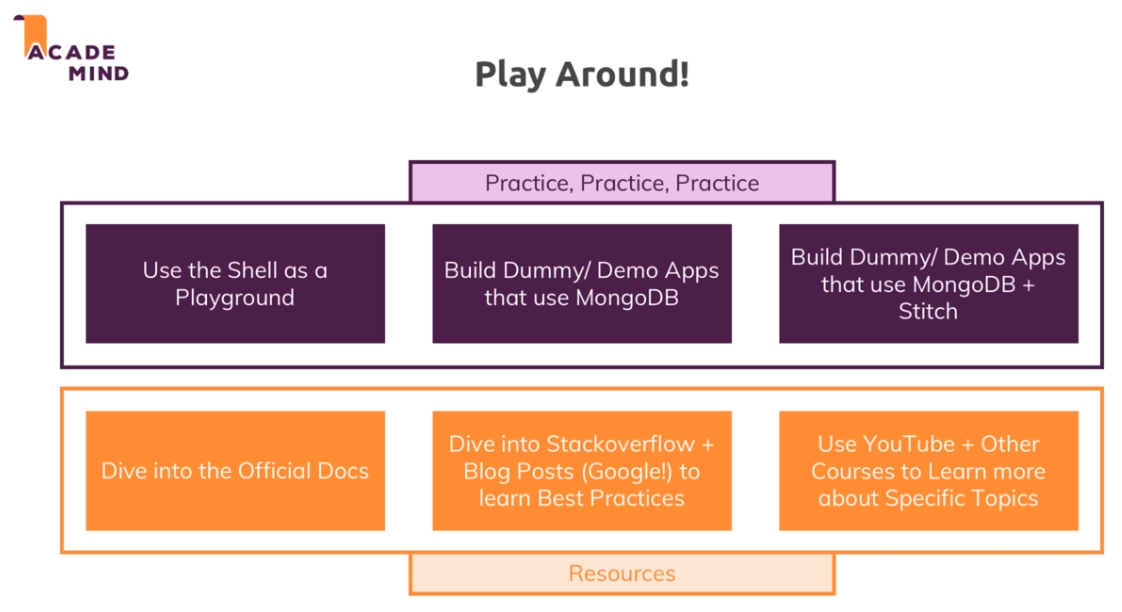

- Course Roundup

What I've learned

- Use MongoDB to its full potential in future projects

- Write efficient and well-performing queries to fetch data in the format you need it

- Use all features MongoDB offers you to work with data efficiently

Introduction

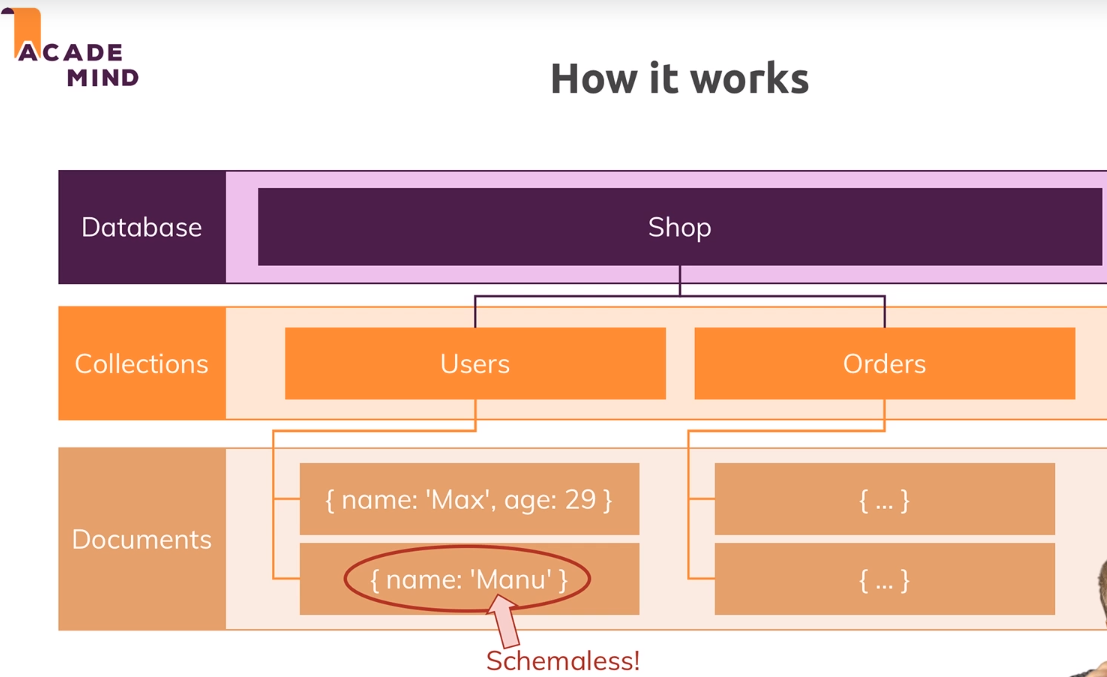

- How it works

- It is a

NoSQLsolution - We can have different

databases - Each

databasehascollections - Each

collectionhasschemalessdocuments

The

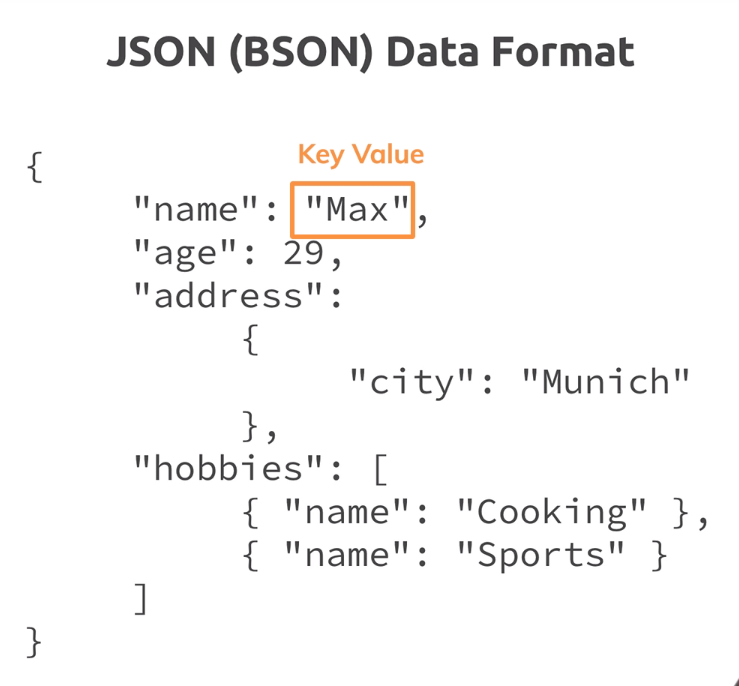

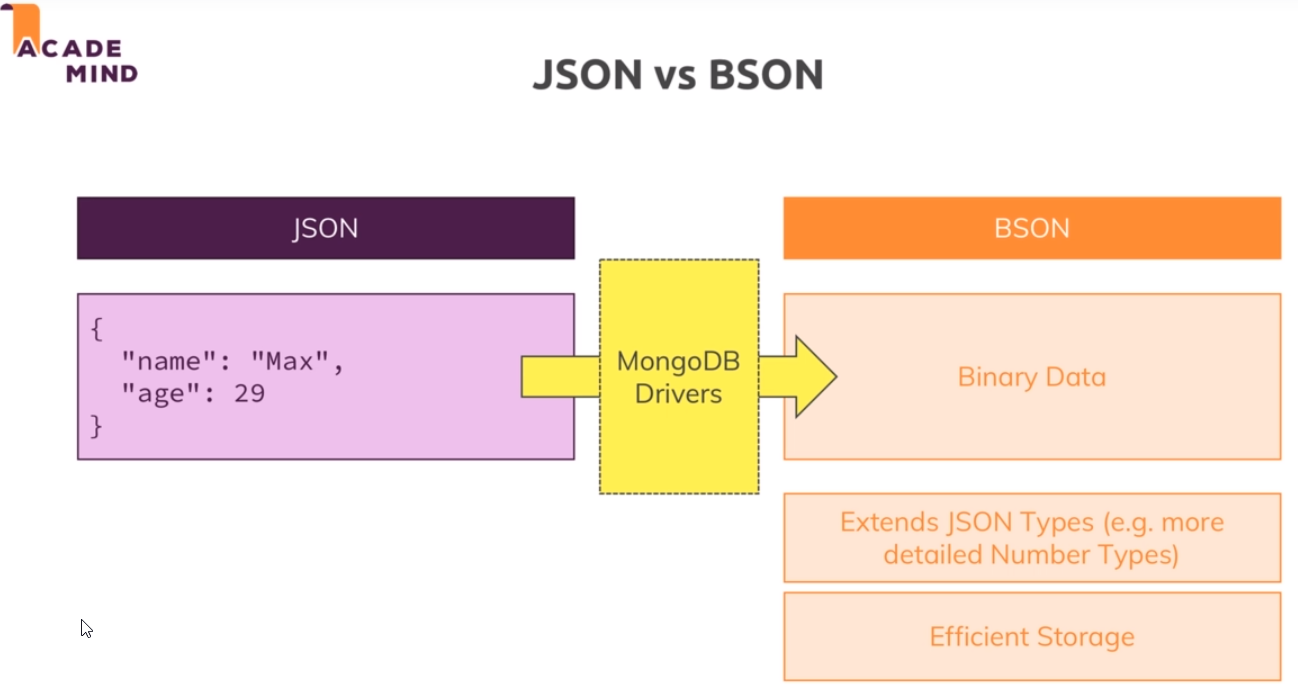

documentshave aJson Data Format. In fact, it is aBSONformat.They can have

embeddeddata

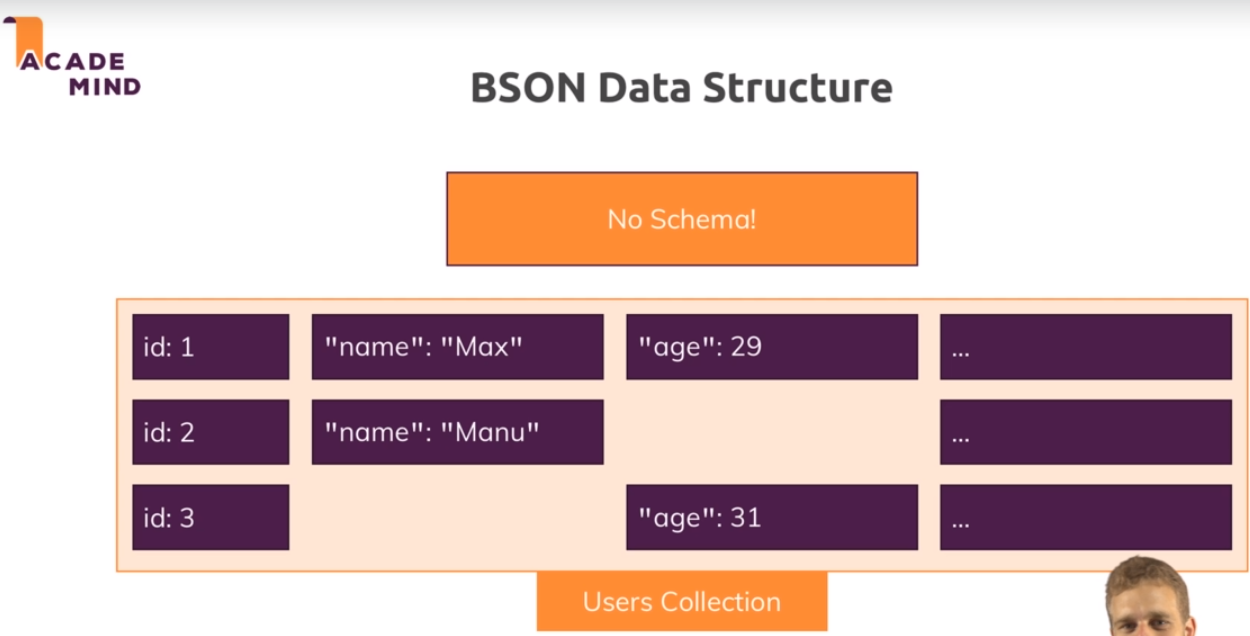

- Each element can have a different structure

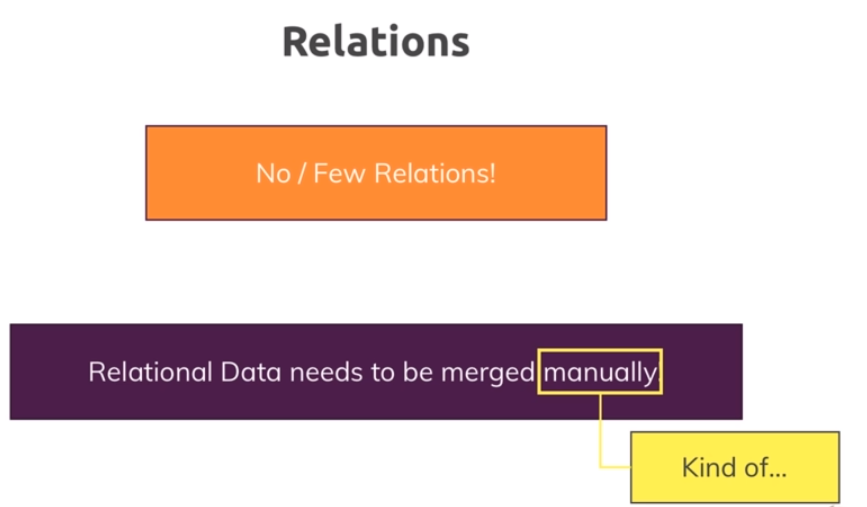

- There are no relations, or only a few of them

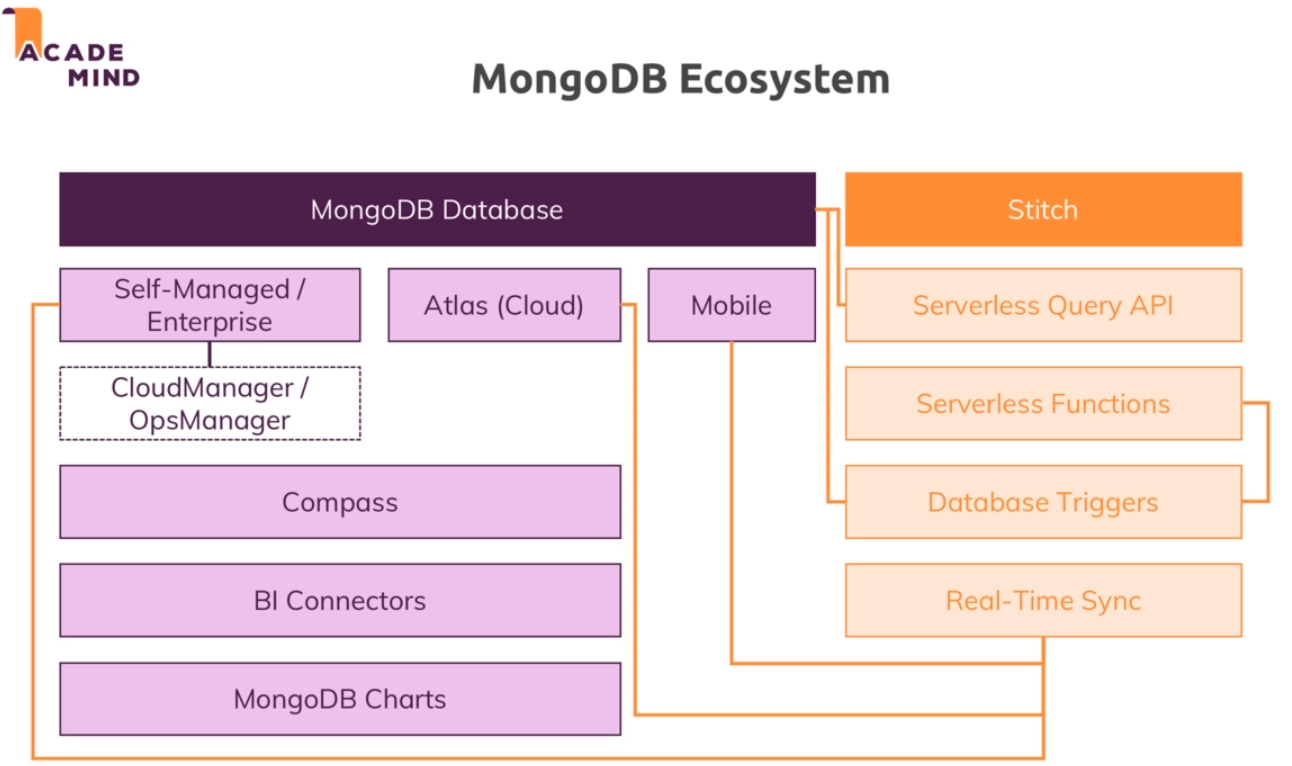

- MongoDB Ecosystem

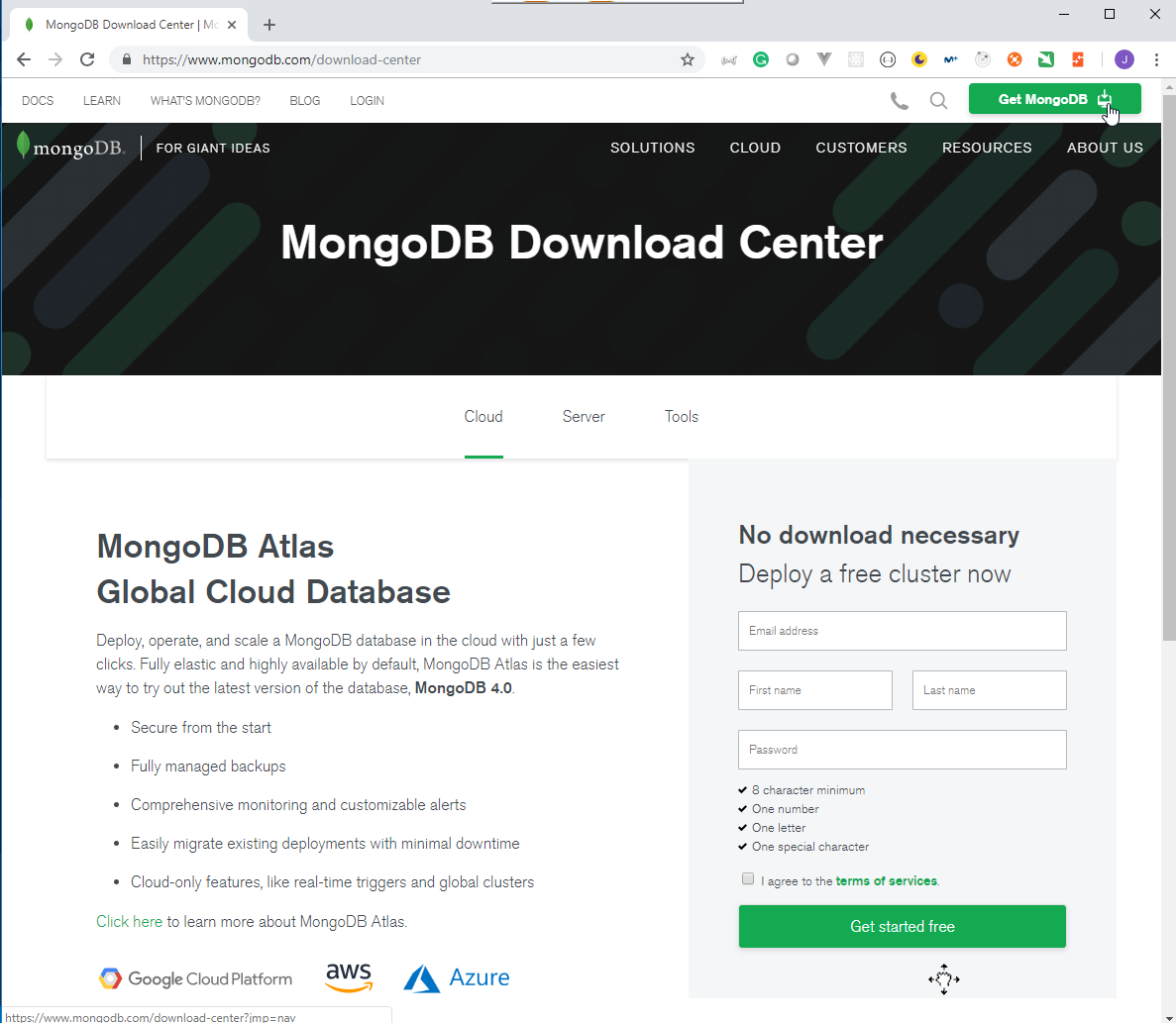

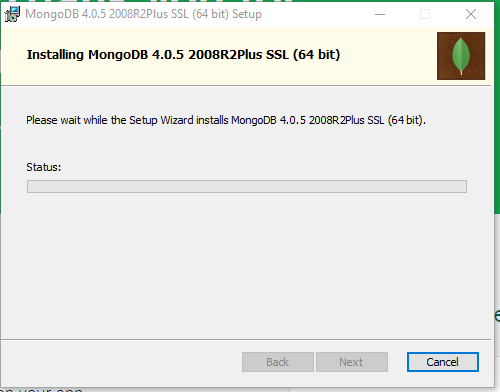

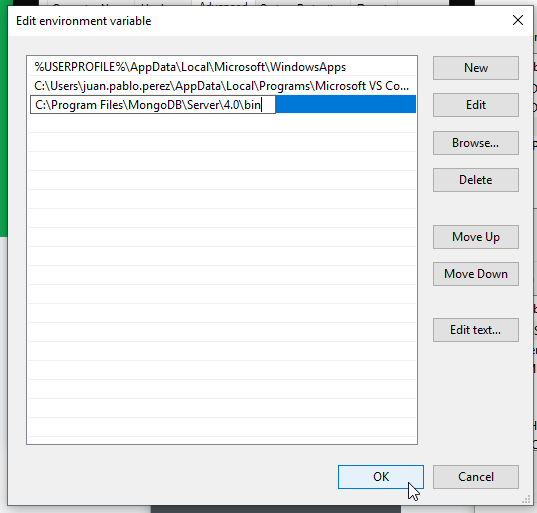

- Install MongoDB

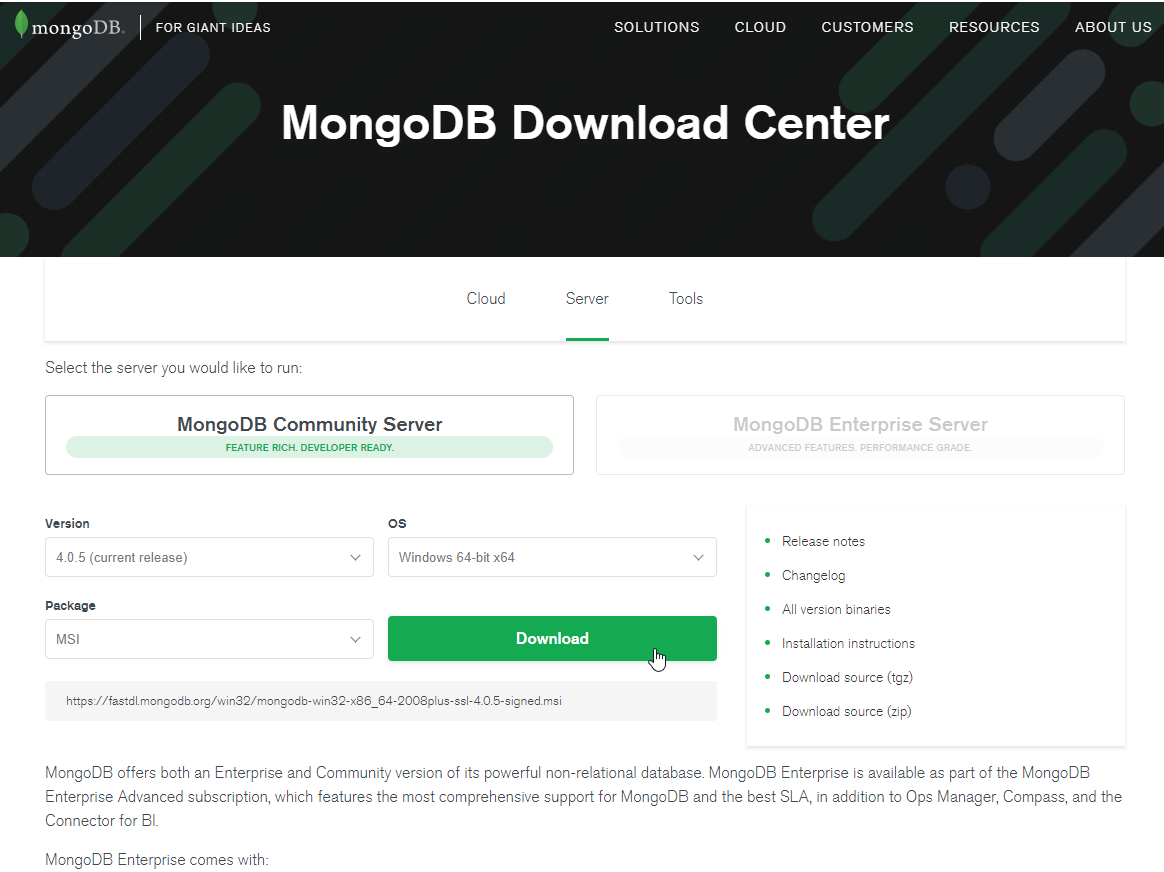

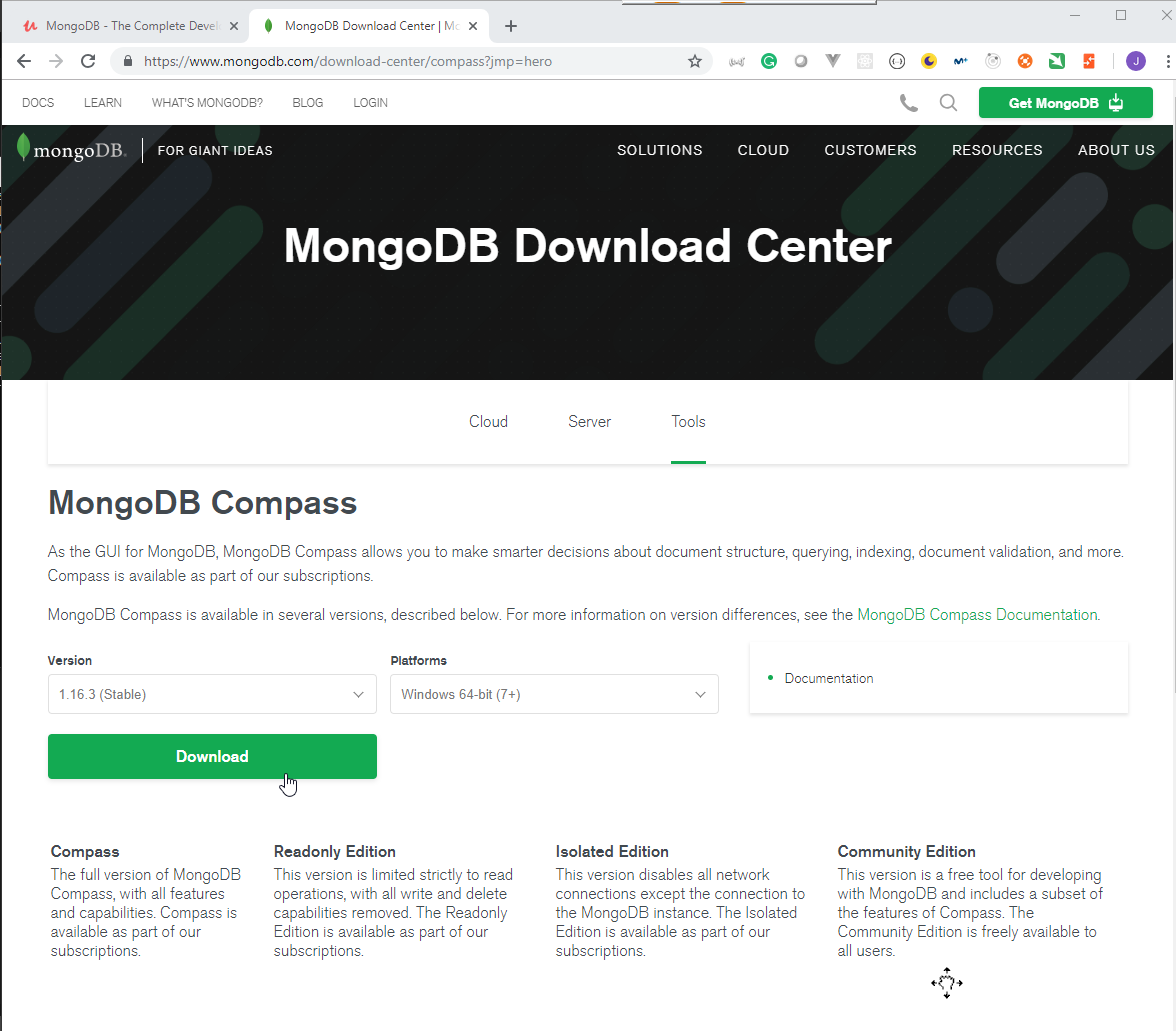

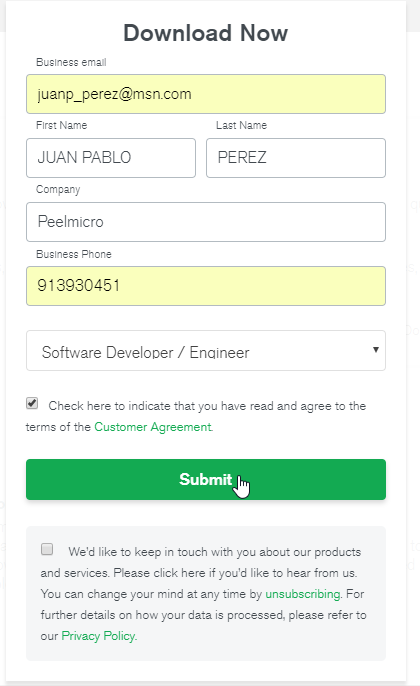

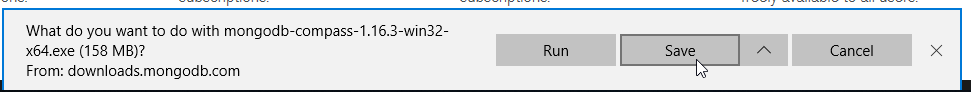

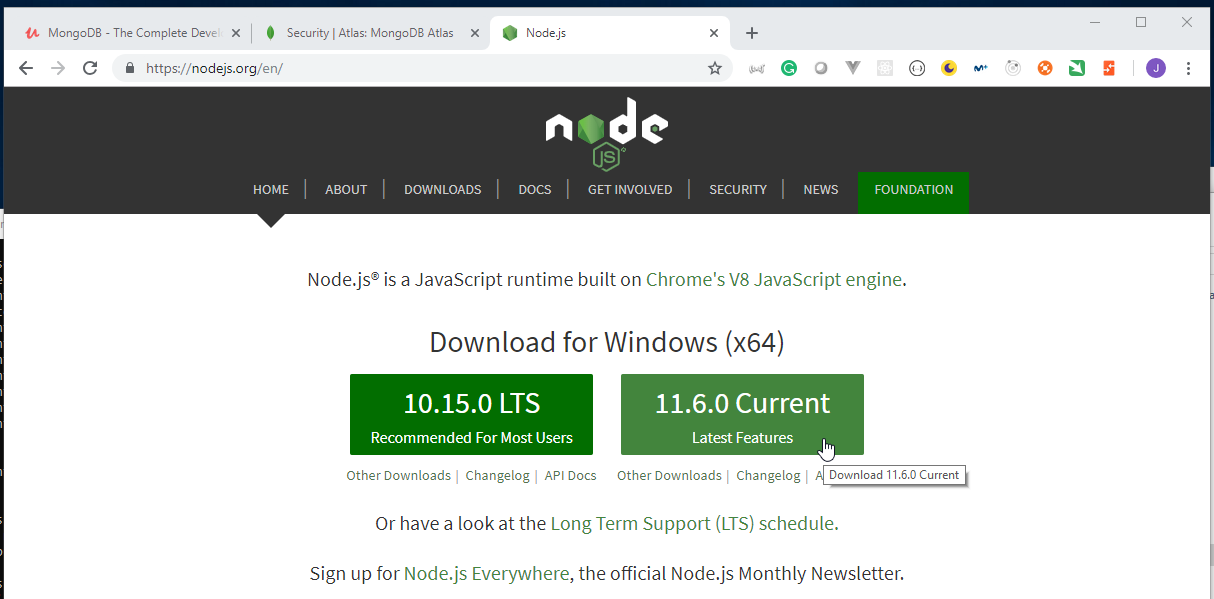

- browse to MongoDB Download Center

- Click on

Get MongoDB

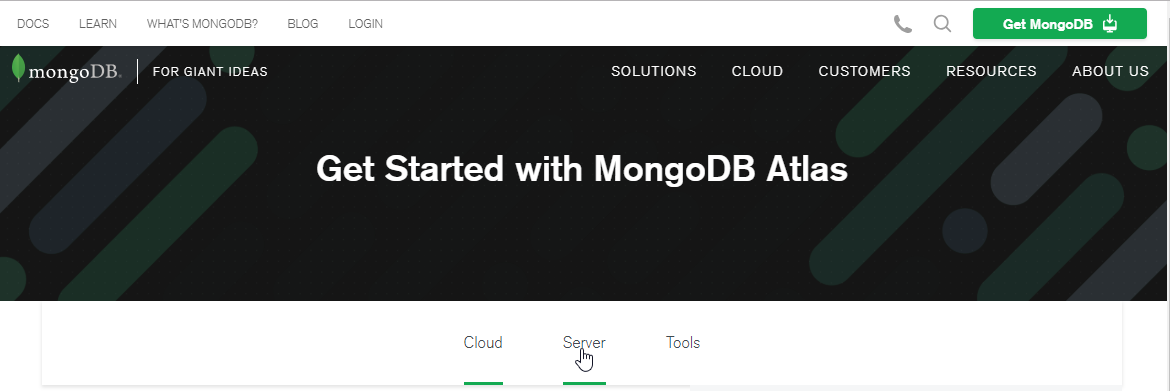

- Click on

Server

- Click on

Download

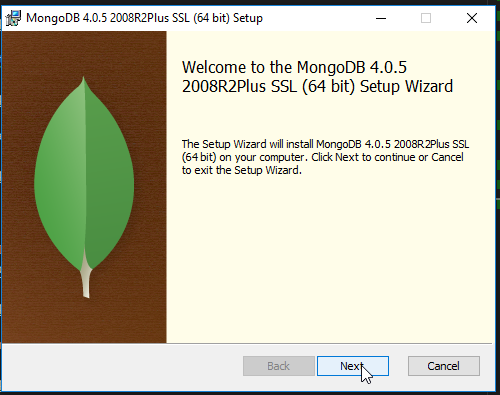

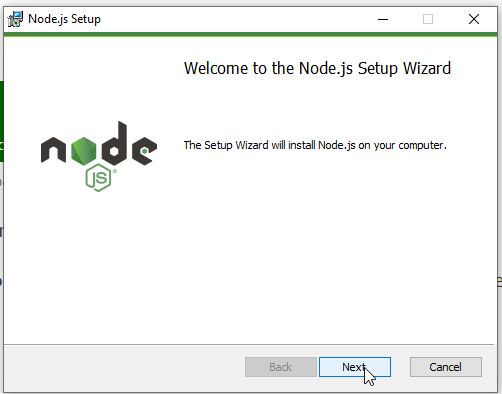

- Click on

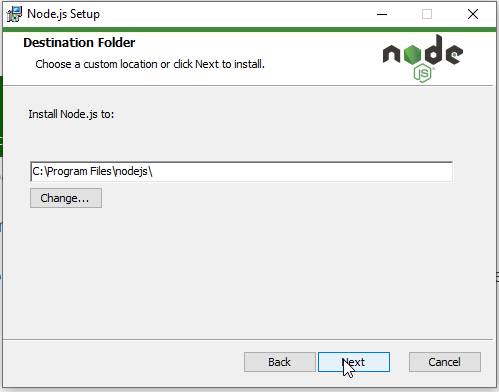

Next

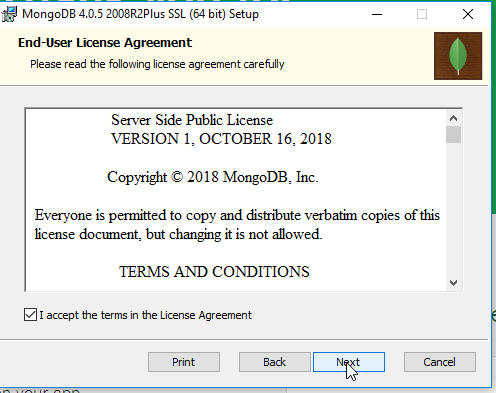

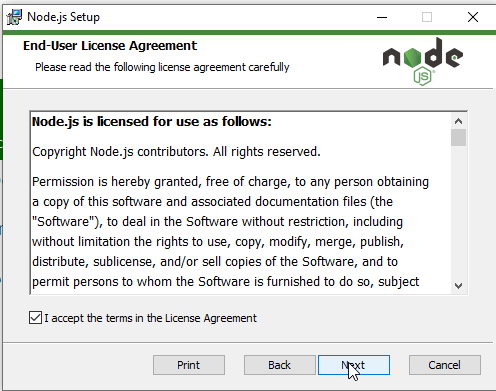

- Click on

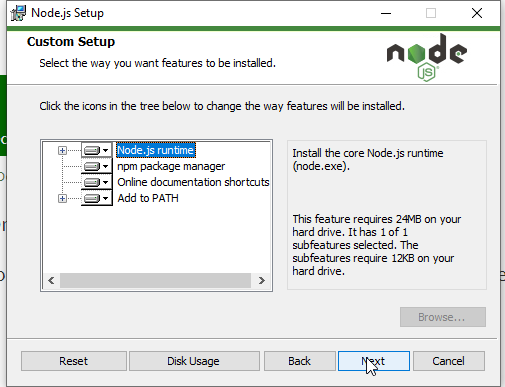

Next

- Click on

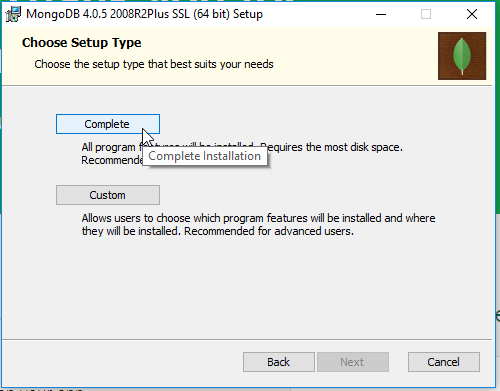

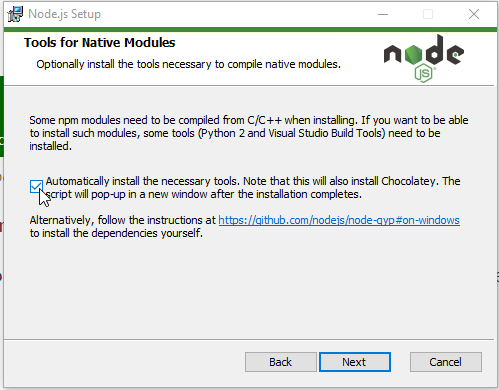

Complete

- Click on

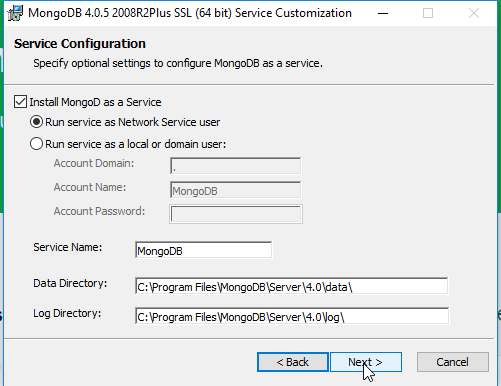

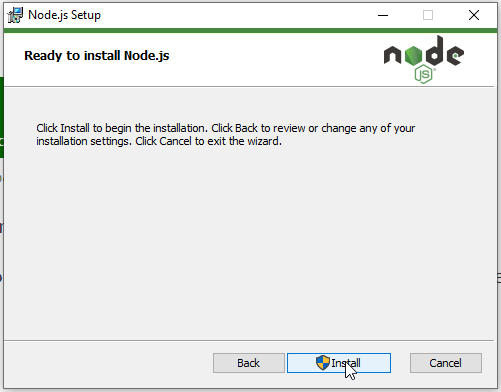

Next

- Click on

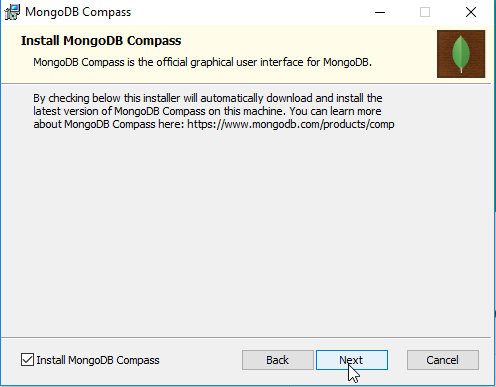

Next

- Click on

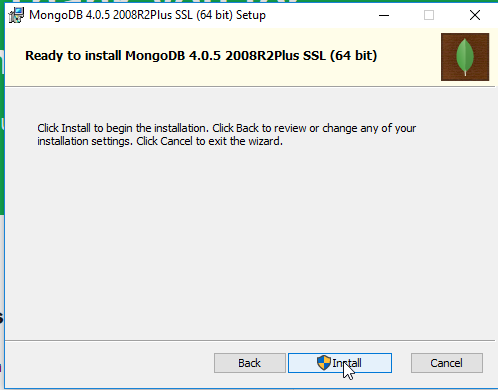

Install

- Click on

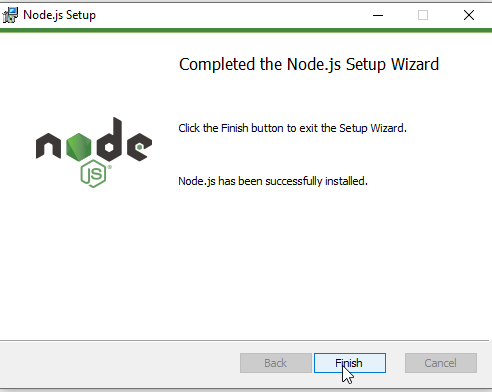

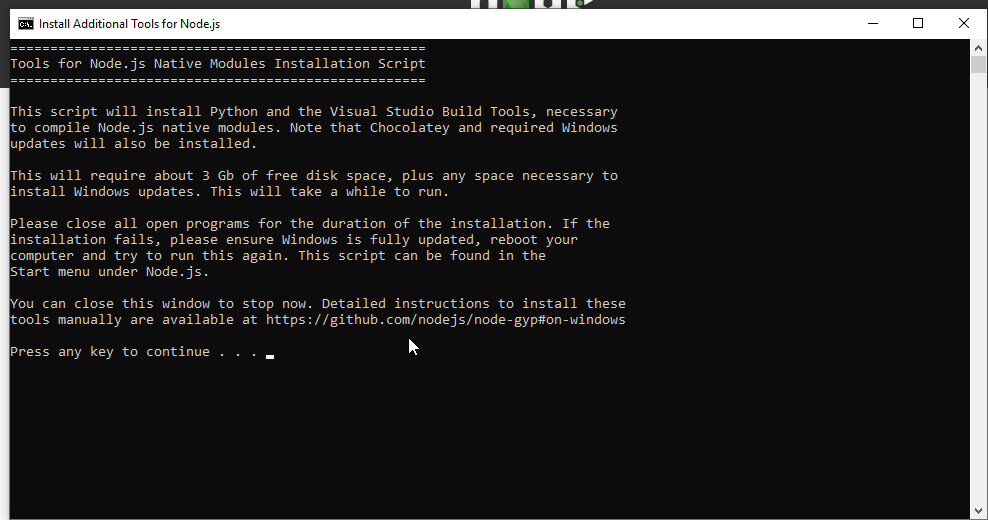

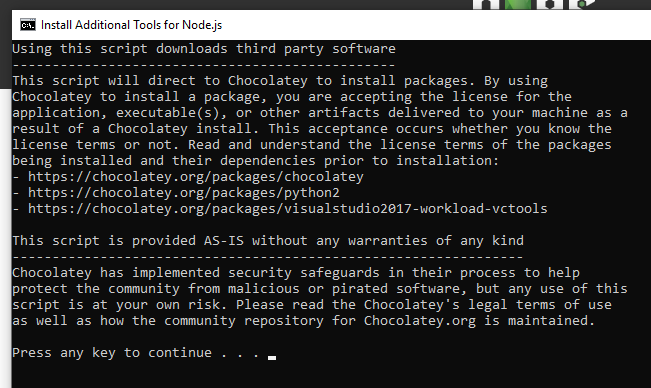

Finish

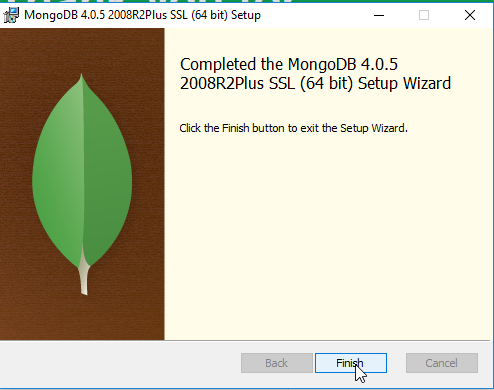

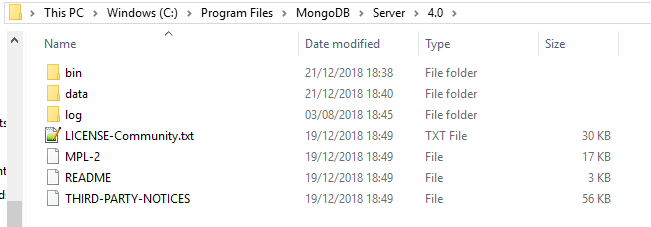

- Update `$PATH`

- Check if it works by typing `mongo` at the `command prompt`

Microsoft Windows [Version 10.0.17134.285]

(c) 2018 Microsoft Corporation. All rights reserved.

C:\WINDOWS\system32>mongo

MongoDB shell version v4.0.5

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("c9289df3-43a2-45a0-b5c5-4e28a9d1371a") }

MongoDB server version: 4.0.5

Server has startup warnings:

2018-12-21T18:38:38.705+0000 I CONTROL [initandlisten]

2018-12-21T18:38:38.705+0000 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2018-12-21T18:38:38.705+0000 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2018-12-21T18:38:38.705+0000 I CONTROL [initandlisten]

---

Enable MongoDB's free cloud-based monitoring service, which will then receive and display

metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you

and anyone you share the URL with. MongoDB may use this information to make product

improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()

To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

>

- To stop `MongoDB` we can run `net stop MongoDB`, because it is running as a service

C:\WINDOWS\system32>net stop MongoDB

The MongoDB Server service is stopping.

The MongoDB Server service was stopped successfully.

- To start `MongoDB` we can run `mongod`

C:\WINDOWS\system32>mongod

2018-12-21T10:59:52.717-0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

2018-12-21T10:59:52.722-0800 I CONTROL [initandlisten] MongoDB starting : pid=19744 port=27017 dbpath=C:\data\db\ 64-bit host=RIMDUB-0232

2018-12-21T10:59:52.722-0800 I CONTROL [initandlisten] targetMinOS: Windows 7/Windows Server 2008 R2

2018-12-21T10:59:52.723-0800 I CONTROL [initandlisten] db version v4.0.5

2018-12-21T10:59:52.723-0800 I CONTROL [initandlisten] git version: 3739429dd92b92d1b0ab120911a23d50bf03c412

2018-12-21T10:59:52.724-0800 I CONTROL [initandlisten] allocator: tcmalloc

2018-12-21T10:59:52.724-0800 I CONTROL [initandlisten] modules: none

2018-12-21T10:59:52.724-0800 I CONTROL [initandlisten] build environment:

2018-12-21T10:59:52.725-0800 I CONTROL [initandlisten] distmod: 2008plus-ssl

2018-12-21T10:59:52.725-0800 I CONTROL [initandlisten] distarch: x86_64

2018-12-21T10:59:52.726-0800 I CONTROL [initandlisten] target_arch: x86_64

2018-12-21T10:59:52.726-0800 I CONTROL [initandlisten] options: {}

2018-12-21T10:59:52.732-0800 I STORAGE [initandlisten] Detected data files in C:\data\db\ created by the 'wiredTiger' storage engine, so setting the active storage engine to 'wiredTiger'.

2018-12-21T10:59:52.734-0800 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=7506M,session_max=20000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000),statistics_log=(wait=0),verbose=(recovery_progress),

2018-12-21T10:59:52.990-0800 I STORAGE [initandlisten] WiredTiger message [1545418792:990055][19744:140724605041744], txn-recover: Main recovery loop: starting at 1/22912 to 2/256

2018-12-21T10:59:53.167-0800 I STORAGE [initandlisten] WiredTiger message [1545418793:167055][19744:140724605041744], txn-recover: Recovering log 1 through 2

2018-12-21T10:59:53.270-0800 I STORAGE [initandlisten] WiredTiger message [1545418793:270053][19744:140724605041744], txn-recover: Recovering log 2 through 2

2018-12-21T10:59:53.352-0800 I STORAGE [initandlisten] WiredTiger message [1545418793:352063][19744:140724605041744], txn-recover: Set global recovery timestamp: 0

2018-12-21T10:59:53.371-0800 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)

2018-12-21T10:59:53.399-0800 I CONTROL [initandlisten]

2018-12-21T10:59:53.399-0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2018-12-21T10:59:53.400-0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2018-12-21T10:59:53.401-0800 I CONTROL [initandlisten]

2018-12-21T10:59:53.401-0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2018-12-21T10:59:53.401-0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2018-12-21T10:59:53.401-0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2018-12-21T10:59:53.402-0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2018-12-21T10:59:53.402-0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2018-12-21T10:59:53.403-0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2018-12-21T10:59:53.403-0800 I CONTROL [initandlisten]

2018-12-21T18:59:54.739+0000 W FTDC [initandlisten] Failed to initialize Performance Counters for FTDC: WindowsPdhError: PdhExpandCounterPathW failed with 'The specified object was not found on the computer.' for counter '\Memory\Available Bytes'

2018-12-21T18:59:54.740+0000 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory 'C:/data/db/diagnostic.data'

2018-12-21T18:59:54.744+0000 I NETWORK [initandlisten] waiting for connections on port 27017

- To start MongoDB with the data directory of the default installation, we have to add the `--dbpath "C:\Program Files\MongoDB\Server\4.0\data"` parameter

C:\WINDOWS\system32>mongod --dbpath "C:\Program Files\MongoDB\Server\4.0\data"

2018-12-21T11:03:44.233-0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

2018-12-21T11:03:44.240-0800 I CONTROL [initandlisten] MongoDB starting : pid=18604 port=27017 dbpath=C:\Program Files\MongoDB\Server\4.0\data 64-bit host=RIMDUB-0232

2018-12-21T11:03:44.240-0800 I CONTROL [initandlisten] targetMinOS: Windows 7/Windows Server 2008 R2

2018-12-21T11:03:44.243-0800 I CONTROL [initandlisten] db version v4.0.5

2018-12-21T11:03:44.243-0800 I CONTROL [initandlisten] git version: 3739429dd92b92d1b0ab120911a23d50bf03c412

2018-12-21T11:03:44.244-0800 I CONTROL [initandlisten] allocator: tcmalloc

2018-12-21T11:03:44.247-0800 I CONTROL [initandlisten] modules: none

2018-12-21T11:03:44.254-0800 I CONTROL [initandlisten] build environment:

2018-12-21T11:03:44.256-0800 I CONTROL [initandlisten] distmod: 2008plus-ssl

2018-12-21T11:03:44.258-0800 I CONTROL [initandlisten] distarch: x86_64

2018-12-21T11:03:44.258-0800 I CONTROL [initandlisten] target_arch: x86_64

2018-12-21T11:03:44.268-0800 I CONTROL [initandlisten] options: { storage: { dbPath: "C:\Program Files\MongoDB\Server\4.0\data" } }

2018-12-21T11:03:44.279-0800 I STORAGE [initandlisten] Detected data files in C:\Program Files\MongoDB\Server\4.0\data created by the 'wiredTiger' storage engine, so setting the active storage engine to 'wiredTiger'.

2018-12-21T11:03:44.286-0800 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=7506M,session_max=20000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000),statistics_log=(wait=0),verbose=(recovery_progress),

2018-12-21T11:03:44.588-0800 I STORAGE [initandlisten] WiredTiger message [1545419024:587443][18604:140724605041744], txn-recover: Main recovery loop: starting at 32/43520 to 33/256

2018-12-21T11:03:44.804-0800 I STORAGE [initandlisten] WiredTiger message [1545419024:804447][18604:140724605041744], txn-recover: Recovering log 32 through 33

2018-12-21T11:03:44.910-0800 I STORAGE [initandlisten] WiredTiger message [1545419024:909443][18604:140724605041744], txn-recover: Recovering log 33 through 33

2018-12-21T11:03:45.001-0800 I STORAGE [initandlisten] WiredTiger message [1545419025:455][18604:140724605041744], txn-recover: Set global recovery timestamp: 0

2018-12-21T11:03:45.024-0800 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)

2018-12-21T11:03:45.073-0800 I CONTROL [initandlisten]

2018-12-21T11:03:45.074-0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2018-12-21T11:03:45.075-0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2018-12-21T11:03:45.083-0800 I CONTROL [initandlisten]

2018-12-21T11:03:45.083-0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2018-12-21T11:03:45.085-0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2018-12-21T11:03:45.097-0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2018-12-21T11:03:45.099-0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2018-12-21T11:03:45.107-0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2018-12-21T11:03:45.109-0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2018-12-21T11:03:45.118-0800 I CONTROL [initandlisten]

2018-12-21T19:03:47.252+0000 W FTDC [initandlisten] Failed to initialize Performance Counters for FTDC: WindowsPdhError: PdhExpandCounterPathW failed with 'The specified object was not found on the computer.' for counter '\Memory\Available Bytes'

2018-12-21T19:03:47.252+0000 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory 'C:/Program Files/MongoDB/Server/4.0/data/diagnostic.data'

2018-12-21T19:03:47.265+0000 I NETWORK [initandlisten] waiting for connections on port 27017

- Query MongoDB

- Check the current databases

> show dbs

TasksAppMongo 0.000GB

admin 0.000GB

blog 0.000GB

config 0.000GB

local 0.000GB

- We can use a `database` even if it doesn't exist yet

> use shop

switched to db shop

- We can create a new `collection`

> db.products.insertOne({name: "Book", price: 12.99})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c1d3b5499e4cbc46ce07f10")

}

- We can query the data just created

> db.products.find()

{ "_id" : ObjectId("5c1d3b5499e4cbc46ce07f10"), "name" : "Book", "price" : 12.99 }

> db.products.find().pretty()

{

"_id" : ObjectId("5c1d3b5499e4cbc46ce07f10"),

"name" : "Book",

"price" : 12.99

}

> db.products.insertOne({name: "Notebook", price: 17.99, description: "Large Notebook with 500 pages"})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c1d3ca099e4cbc46ce07f11")

}

> db.products.find().pretty()

{

"_id" : ObjectId("5c1d3b5499e4cbc46ce07f10"),

"name" : "Book",

"price" : 12.99

}

{

"_id" : ObjectId("5c1d3ca099e4cbc46ce07f11"),

"name" : "Notebook",

"price" : 17.99,

"description" : "Large Notebook with 500 pages"

}

> db.products.insertOne({name: "Another Computer", price: 1299.49, description: "A high quality computer", details: {cpu: "Intel i7 8770", memory: 32}})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c1d3dcd99e4cbc46ce07f13")

}

> db.products.find().pretty()

{

"_id" : ObjectId("5c1d3b5499e4cbc46ce07f10"),

"name" : "Book",

"price" : 12.99

}

{

"_id" : ObjectId("5c1d3ca099e4cbc46ce07f11"),

"name" : "Notebook",

"price" : 17.99,

"description" : "Large Notebook with 500 pages"

}

{

"_id" : ObjectId("5c1d3d3c99e4cbc46ce07f12"),

"name" : "Computer",

"price" : 1299.49,

"description" : "A high quality computer"

}

{

"_id" : ObjectId("5c1d3dcd99e4cbc46ce07f13"),

"name" : "Another Computer",

"price" : 1299.49,

"description" : "A high quality computer",

"details" : {

"cpu" : "Intel i7 8770",

"memory" : 32

}

}
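The listing above shows documents with different shapes living in the same collection, one of them with an embedded `details` document. As a rough illustration only, the sketch below models those documents as plain JavaScript objects and reads an embedded field through a dotted path. The `products` array and the `getPath` helper are hypothetical stand-ins for this example, not MongoDB APIs.

```javascript
// Hypothetical in-memory stand-in for the `products` collection (not a MongoDB API).
const products = [
  { name: "Book", price: 12.99 },
  { name: "Notebook", price: 17.99, description: "Large Notebook with 500 pages" },
  {
    name: "Another Computer",
    price: 1299.49,
    description: "A high quality computer",
    details: { cpu: "Intel i7 8770", memory: 32 }, // embedded document
  },
];

// Hypothetical helper: read a nested field through a dotted path such as "details.cpu".
// Returns undefined when any step of the path is missing.
function getPath(doc, path) {
  return path.split(".").reduce(
    (value, key) =>
      value !== null && typeof value === "object" ? value[key] : undefined,
    doc
  );
}

// Documents without a `details` field simply yield undefined: no shared schema is required.
const cpus = products.map((doc) => getPath(doc, "details.cpu"));
console.log(cpus);
```

Only the third document carries `details.cpu`; the other two are still valid members of the same collection.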

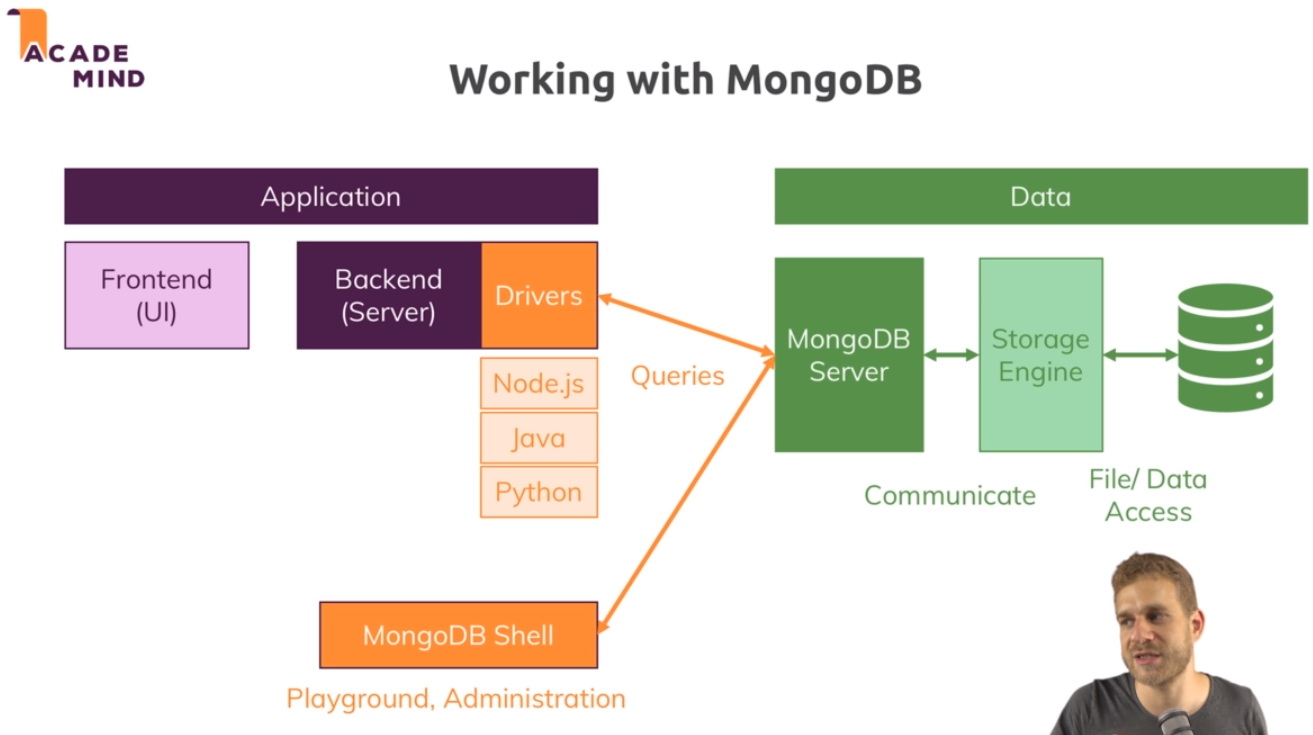

- Working with MongoDB

Working with the Database (CRUD Operations)

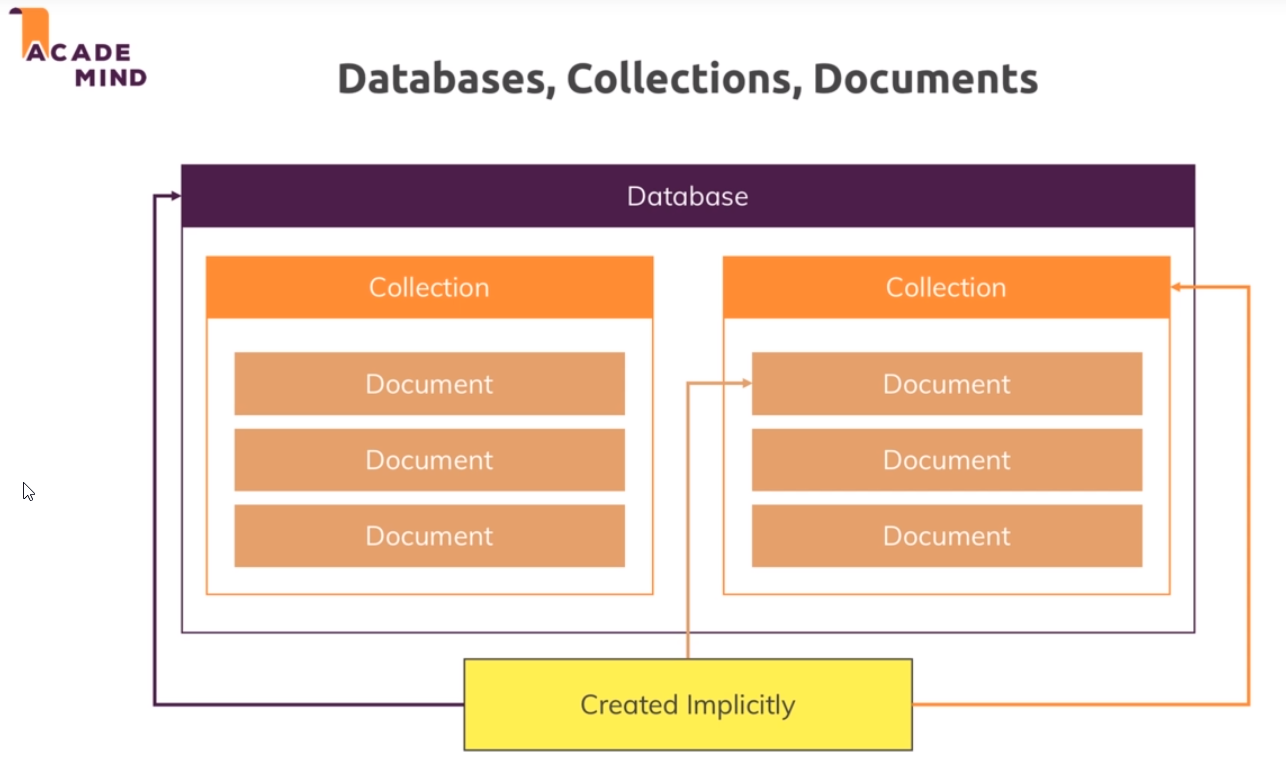

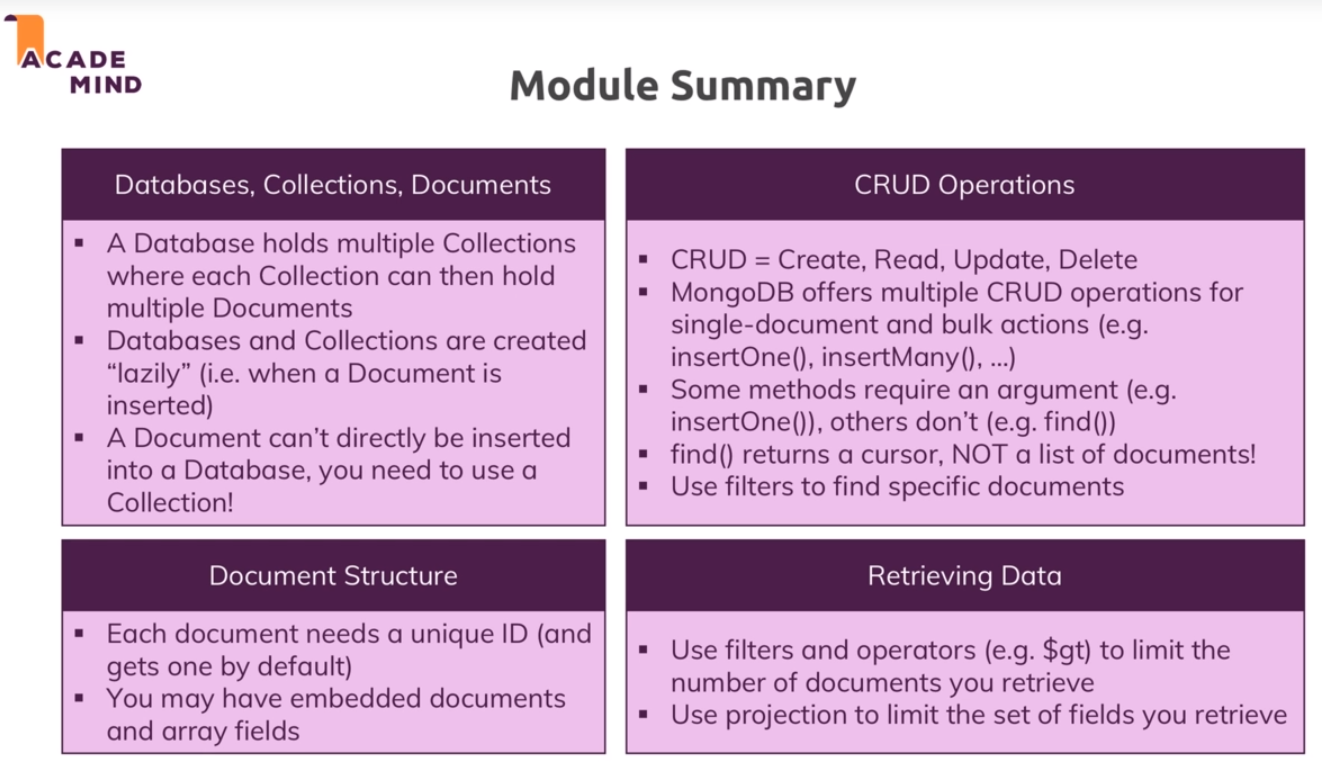

Databases, Collections & Documents

- `show dbs` shows all the available databases

> show dbs

TasksAppMongo 0.000GB

admin 0.000GB

blog 0.000GB

config 0.000GB

local 0.000GB

shop 0.000GB

- Switch to a database using the `use` command.

- If the database doesn't exist, it is created `on the fly` the first time we add any data to it

> use flights

switched to db flights

> show dbs

TasksAppMongo 0.000GB

admin 0.000GB

blog 0.000GB

config 0.000GB

local 0.000GB

shop 0.000GB

- Insert a new `document` with the `db.collectionName.insertOne({json data})` function

> db.flightData.insertOne({

... "departureAirport": "MUC",

... "arrivalAirport": "SFO",

... "aircraft": "Airbus A380",

... "distance": 12000,

... "intercontinental": true

... })

{

"acknowledged" : true,

"insertedId" : ObjectId("5c1ddb9799e4cbc46ce07f14")

}

| Tag | Description | Example |
|---|---|---|
| db | Current database | flights in this case |
| collectionName | Name of the collection we want to interact with. If it doesn't exist, it is created on the fly | flightData in this case |
| insertOne | Inserts a new document | |
| json data | Data we want to insert into the document, as key-value pairs of the form "name": "value", where name is the key and value is the value stored under that key | { "departureAirport": "MUC", "arrivalAirport": "SFO", "aircraft": "Airbus A380", "distance": 12000, "intercontinental": true } |
| value types | string: always in quotation marks; number: can have decimal points; boolean: true or false | string: "departureAirport": "MUC"; number: "distance": 12000; boolean: "intercontinental": true |
| returned information | acknowledged: true if the operation executed successfully; insertedId: ObjectId(id), the id assigned to that document | { "acknowledged" : true, "insertedId" : ObjectId("5c1ddb9799e4cbc46ce07f14") } |

- Get the documents in a collection using `find`, adding `pretty` if we want the output formatted in a `pretty` way

> db.flightData.find()

{ "_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"), "departureAirport" : "MUC", "arrivalAirport" : "SFO", "aircraft" : "Airbus A380", "distance" : 12000, "intercontinental" : true }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

- JSON vs BSON

- Behind the scenes, MongoDB uses BSON

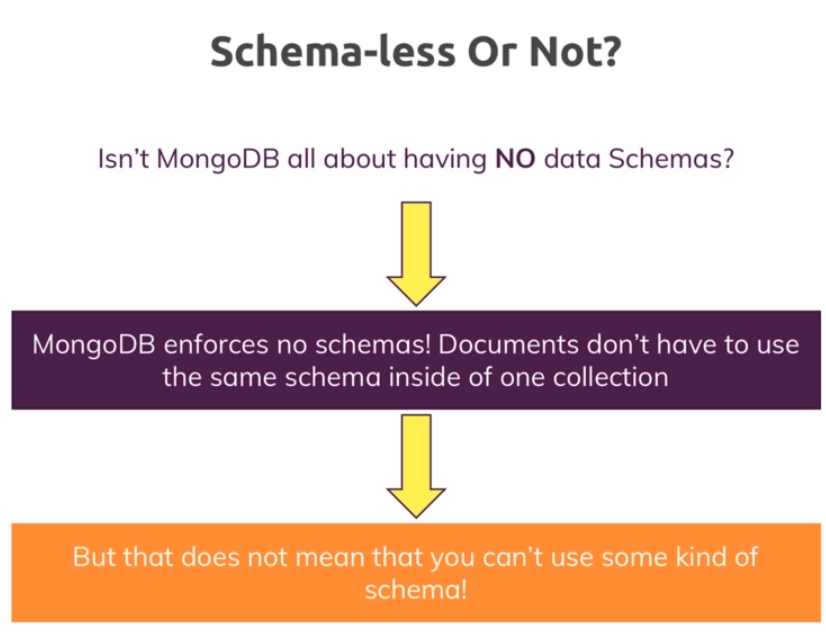

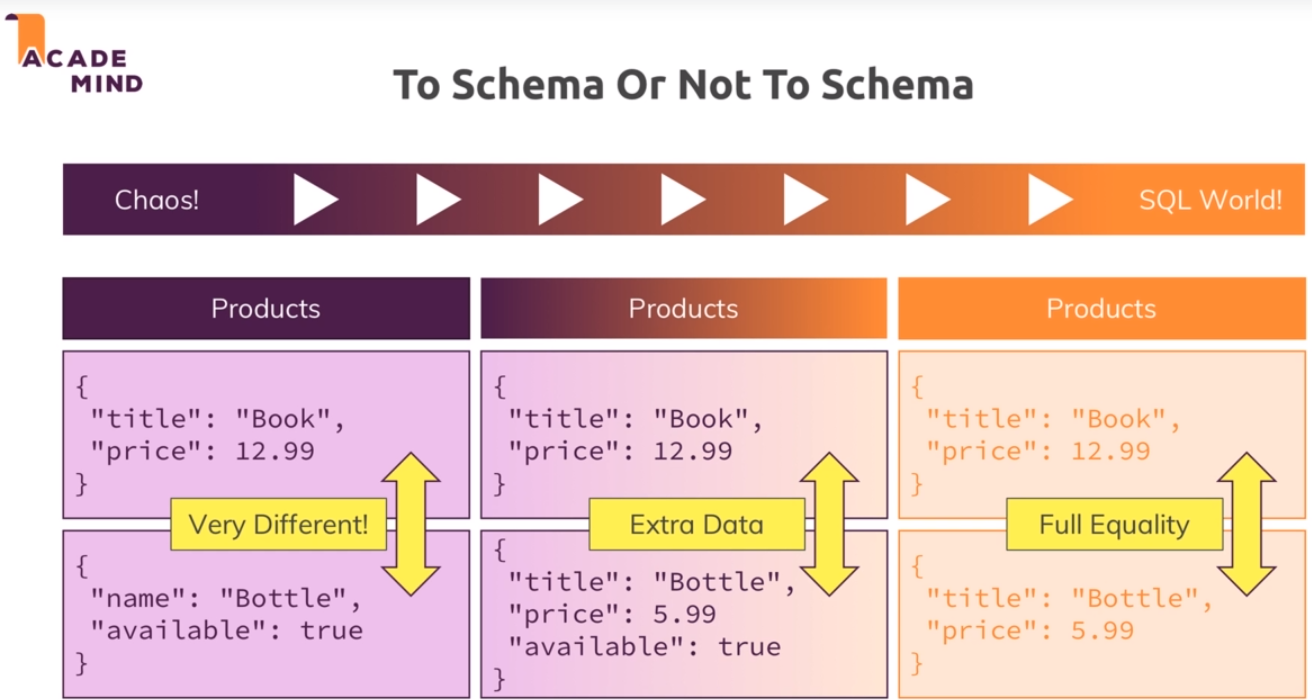

- Schemaless. Each document can have a `different` schema.

> db.flightData.insertOne({departureAirport: "TXL",arrivalAirport: "LHR"})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c1e0bb799e4cbc46ce07f15")

}

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : ObjectId("5c1e0bb799e4cbc46ce07f15"),

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

- The id can be assigned when creating the document by adding it under the `_id` key.

> db.flightData.insertOne({departureAirport: "TXL",arrivalAirport: "LHR", _id: "txl_lhr-1"})

{ "acknowledged" : true, "insertedId" : "txl_lhr-1" }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : ObjectId("5c1e0bb799e4cbc46ce07f15"),

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

- We cannot insert the same `id` again

> db.flightData.insertOne({departureAirport: "TXL",arrivalAirport: "LHR", _id: "txl_lhr-1"})

2018-12-22T10:06:51.925+0000 E QUERY [js] WriteError: E11000 duplicate key error collection: flights.flightData index: _id_ dup key: { : "txl_lhr-1" } :

WriteError({

"index" : 0,

"code" : 11000,

"errmsg" : "E11000 duplicate key error collection: flights.flightData index: _id_ dup key: { : \"txl_lhr-1\" }",

"op" : {

"departureAirport" : "TXL",

"arrivalAirport" : "LHR",

"_id" : "txl_lhr-1"

}

})

WriteError@src/mongo/shell/bulk_api.js:461:48

Bulk/mergeBatchResults@src/mongo/shell/bulk_api.js:841:49

Bulk/executeBatch@src/mongo/shell/bulk_api.js:906:13

Bulk/this.execute@src/mongo/shell/bulk_api.js:1150:21

DBCollection.prototype.insertOne@src/mongo/shell/crud_api.js:252:9

@(shell):1:1
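The duplicate key error above comes from the rule that `_id` must be unique within a collection. The sketch below imitates only that rule with an in-memory `Map`. `TinyCollection` is a hypothetical class made up for this illustration, and its generated ids are simple strings rather than real `ObjectId` values.

```javascript
// Hypothetical in-memory collection keyed by _id. It illustrates the uniqueness
// rule only and is not how MongoDB is implemented.
class TinyCollection {
  constructor() {
    this.docs = new Map();
    this.counter = 0;
  }

  insertOne(doc) {
    // Use the caller's _id when given; otherwise generate a placeholder id.
    // (Real MongoDB generates an ObjectId here.)
    const _id = doc._id !== undefined ? doc._id : `auto-${++this.counter}`;
    if (this.docs.has(_id)) {
      const error = new Error(
        `E11000 duplicate key error: _id ${JSON.stringify(_id)}`
      );
      error.code = 11000; // same numeric code as in the shell output above
      throw error;
    }
    this.docs.set(_id, { ...doc, _id });
    return { acknowledged: true, insertedId: _id };
  }
}

const flightData = new TinyCollection();
flightData.insertOne({ departureAirport: "TXL", arrivalAirport: "LHR", _id: "txl_lhr-1" });
try {
  // Inserting the same _id a second time is rejected.
  flightData.insertOne({ departureAirport: "TXL", arrivalAirport: "LHR", _id: "txl_lhr-1" });
} catch (error) {
  console.log(error.code, error.message);
}
```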

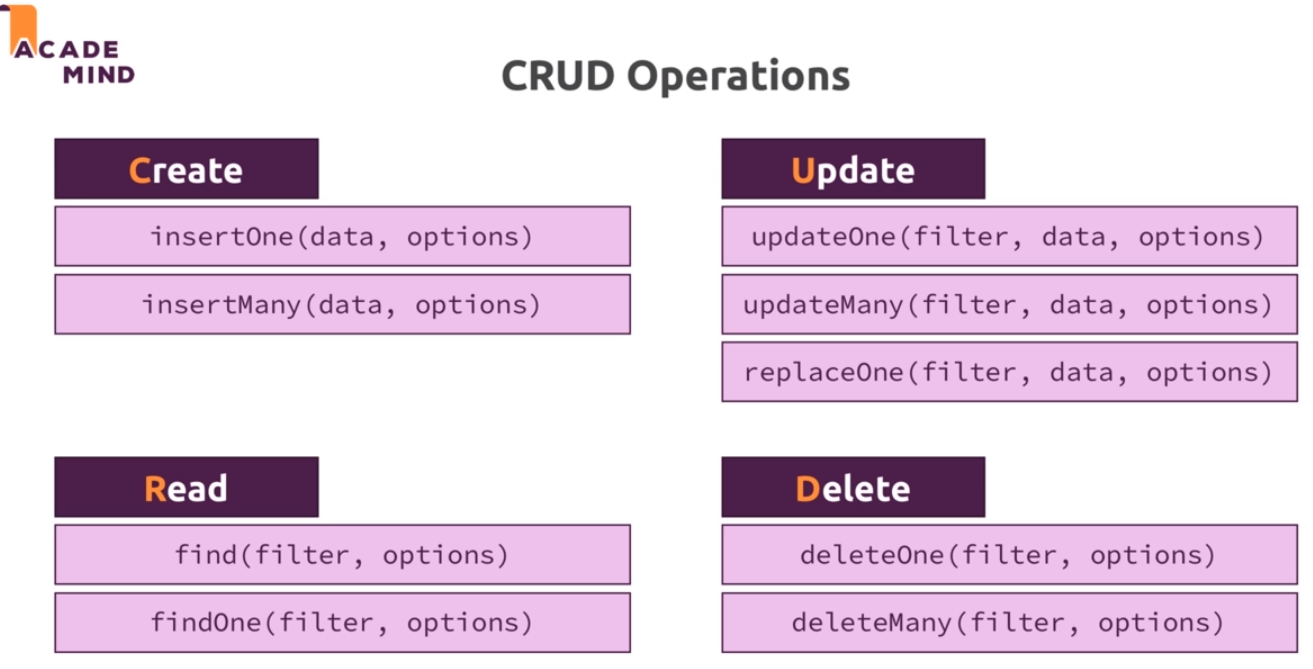

- CRUD Operations

- Delete one document using `deleteOne`

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : ObjectId("5c1e0bb799e4cbc46ce07f15"),

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

> db.flightData.deleteOne({departureAirport: "TXL"})

{ "acknowledged" : true, "deletedCount" : 1 }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

> db.flightData.deleteOne({_id: "txl_lhr-1"})

{ "acknowledged" : true, "deletedCount" : 1 }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

- Update one document using `updateOne`

> db.flightData.insertOne({departureAirport: "TXL",arrivalAirport: "LHR", _id: "txl_lhr-1"})

{ "acknowledged" : true, "insertedId" : "txl_lhr-1" }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

> db.flightData.update({distance: 12000}, {marker: 'delete'})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

> db.flightData.find().pretty()

{ "_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"), "marker" : "delete" }

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

> db.flightData.updateOne({"marker": "delete"}, {$set: {"departureAirport": "MUC","arrivalAirport": "SFO", "aircraft": "Airbus A380", distance: 12000, "intercontinental": true }})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"marker" : "delete",

"aircraft" : "Airbus A380",

"arrivalAirport" : "SFO",

"departureAirport" : "MUC",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR"

}

> db.flightData.updateOne({distance: 12000}, {marketing: 'direct'})

2018-12-22T11:07:02.332+0000 E QUERY [js] Error: the update operation document must contain atomic operators :

DBCollection.prototype.updateOne@src/mongo/shell/crud_api.js:542:1

@(shell):1:1
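The transcript above contrasts two behaviours: `update` without an operator replaced the whole document (keeping only `_id`), while `updateOne` with `$set` merged the given fields into the existing document and rejected a plain document. As a minimal sketch of that difference, assuming plain objects in place of stored documents, the two hypothetical helpers below (`replaceDocument` and `applySet`, names invented here) mimic each behaviour.

```javascript
// Hypothetical helper: replacement semantics. Everything except _id is discarded.
function replaceDocument(doc, replacement) {
  return { _id: doc._id, ...replacement };
}

// Hypothetical helper: $set semantics. Listed fields are added or overwritten,
// all other fields are kept. Only $set is handled in this sketch.
function applySet(doc, update) {
  if (!update.$set) {
    throw new Error("the update operation document must contain atomic operators");
  }
  return { ...doc, ...update.$set };
}

const original = {
  _id: "5c1ddb9799e4cbc46ce07f14",
  departureAirport: "MUC",
  arrivalAirport: "SFO",
  distance: 12000,
};

const replaced = replaceDocument(original, { marker: "delete" });
console.log(replaced); // only _id and marker survive

const merged = applySet(replaced, { $set: { departureAirport: "MUC", distance: 12000 } });
console.log(merged); // marker is kept, the listed fields are added back
```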

- Use `updateMany` to apply the same update to all the matching documents

> db.flightData.updateMany({},{$set: {marker: "toDelete"}})

{ "acknowledged" : true, "matchedCount" : 2, "modifiedCount" : 2 }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1ddb9799e4cbc46ce07f14"),

"marker" : "toDelete",

"aircraft" : "Airbus A380",

"arrivalAirport" : "SFO",

"departureAirport" : "MUC",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : "txl_lhr-1",

"departureAirport" : "TXL",

"arrivalAirport" : "LHR",

"marker" : "toDelete"

}

- Delete many documents using `deleteMany`. We need to pass a `criteria`.

> db.flightData.deleteMany()

2018-12-22T10:45:38.561+0000 E QUERY [js] Error: find() requires query criteria :

Bulk/this.find@src/mongo/shell/bulk_api.js:781:1

DBCollection.prototype.deleteMany@src/mongo/shell/crud_api.js:408:20

@(shell):1:1

- We can use `{}` (all) as the criteria to delete all documents.

- We can use another criteria to delete only the documents that match it.

> db.flightData.deleteMany( {marker: "toDelete"})

{ "acknowledged" : true, "deletedCount" : 2 }

> db.flightData.find().pretty()

>
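The `deleteMany` calls above behave differently depending on the criteria: no argument is an error, `{}` matches everything, and a field criteria removes only the matching documents. A small in-memory sketch of that logic follows. The `deleteMany` function here is a hypothetical stand-in that supports equality matching only, and the `remaining` field in its result is an addition for this example that the real shell does not return.

```javascript
// Hypothetical stand-in for deleteMany over an array of plain objects.
function deleteMany(docs, filter) {
  if (filter === undefined) {
    // Mirrors the shell error shown above when no criteria is passed.
    throw new Error("find() requires query criteria");
  }
  // Equality matching only; an empty filter {} matches every document.
  const isMatch = (doc) =>
    Object.entries(filter).every(([field, expected]) => doc[field] === expected);
  const remaining = docs.filter((doc) => !isMatch(doc));
  return {
    acknowledged: true,
    deletedCount: docs.length - remaining.length,
    remaining, // not part of the real result; returned here to inspect the outcome
  };
}

const flights = [
  { _id: 1, departureAirport: "MUC", marker: "toDelete" },
  { _id: "txl_lhr-1", departureAirport: "TXL", marker: "toDelete" },
  { _id: 3, departureAirport: "LHR" },
];

console.log(deleteMany(flights, { marker: "toDelete" }).deletedCount);
console.log(deleteMany(flights, {}).deletedCount);
```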

- Use `insertMany` to insert many documents in one go.

> db.flightData.insertMany([

... {

... "departureAirport": "MUC",

... "arrivalAirport": "SFO",

... "aircraft": "Airbus A380",

... "distance": 12000,

... "intercontinental": true

... },

... {

... "departureAirport": "LHR",

... "arrivalAirport": "TXL",

... "aircraft": "Airbus A320",

... "distance": 950,

... "intercontinental": false

... }

... ]

... )

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("5c1e1cf599e4cbc46ce07f16"),

ObjectId("5c1e1cf599e4cbc46ce07f17")

]

}

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f17"),

"departureAirport" : "LHR",

"arrivalAirport" : "TXL",

"aircraft" : "Airbus A320",

"distance" : 950,

"intercontinental" : false

}

- Search for specific documents using `find` and `findOne` with search criteria

> db.flightData.find({"intercontinental" : true}).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

> db.flightData.find({distance : 12000}).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

> db.flightData.find({distance : {$gt:1000}}).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

- `pretty` is not supported with `findOne`, because `findOne` returns a single document rather than a cursor

> db.flightData.findOne({distance : {$gt:900}}).pretty()

2018-12-23T06:13:01.752+0000 E QUERY [js] TypeError: db.flightData.findOne(...).pretty is not a function :

@(shell):1:1

> db.flightData.findOne({distance : {$gt:900}})

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}
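The queries above use two kinds of criteria: plain equality (`{distance: 12000}`) and an operator expression (`{distance: {$gt: 1000}}`). As an illustration of how such a filter can be evaluated against a document, here is a minimal sketch in plain JavaScript. The `matches`, `find` and `findOne` functions are hypothetical helpers for this example; they handle only equality and `$gt`, and they return arrays or objects rather than a cursor.

```javascript
// Hypothetical helper: does a document satisfy a filter?
// Supports plain equality and the $gt operator only.
function matches(doc, filter) {
  return Object.entries(filter).every(([field, condition]) => {
    const value = doc[field];
    if (condition !== null && typeof condition === "object") {
      return Object.entries(condition).every(([operator, operand]) => {
        if (operator === "$gt") return value > operand;
        throw new Error(`unsupported operator in this sketch: ${operator}`);
      });
    }
    return value === condition;
  });
}

// find returns every match; findOne returns the first match or null.
const find = (docs, filter = {}) => docs.filter((doc) => matches(doc, filter));
const findOne = (docs, filter = {}) => {
  const hit = docs.find((doc) => matches(doc, filter));
  return hit === undefined ? null : hit;
};

const flightDocs = [
  { departureAirport: "MUC", arrivalAirport: "SFO", distance: 12000, intercontinental: true },
  { departureAirport: "LHR", arrivalAirport: "TXL", distance: 950, intercontinental: false },
];

console.log(find(flightDocs, { distance: { $gt: 1000 } }));
console.log(findOne(flightDocs, { distance: { $gt: 900 } }));
```

With a threshold of 900 both documents qualify, so `findOne` returns whichever comes first, which matches the shell transcript above.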

- Use `update`, `updateMany` and `updateOne` to update documents.

> db.flightData.updateOne({"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16")}, {$set: {delayed: true}})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.flightData.find({distance : 12000}).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true,

"delayed" : true

}

> db.flightData.updateMany({"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16")}, {$set: {delayed: true}})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 0 })

> db.flightData.updateMany({"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16")}, {$set: {delayed: false}})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

> db.flightData.find({distance : 12000}).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true,

"delayed" : false

}

- `update` does not need `$set`, but without it `update` `replaces` the whole content of the document.

> db.flightData.update({"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16")}, {delayed: true})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

> db.flightData.find({distance : 12000}).pretty()

- It is better to use `replaceOne` if we want to replace the whole document.

> db.flightData.replaceOne({"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16")}, {

... "departureAirport": "MUC",

... "arrivalAirport": "SFO",

... "aircraft": "Airbus A380",

... "distance": 12000,

... "intercontinental": true

... })

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.flightData.find({distance : 12000}).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true

}

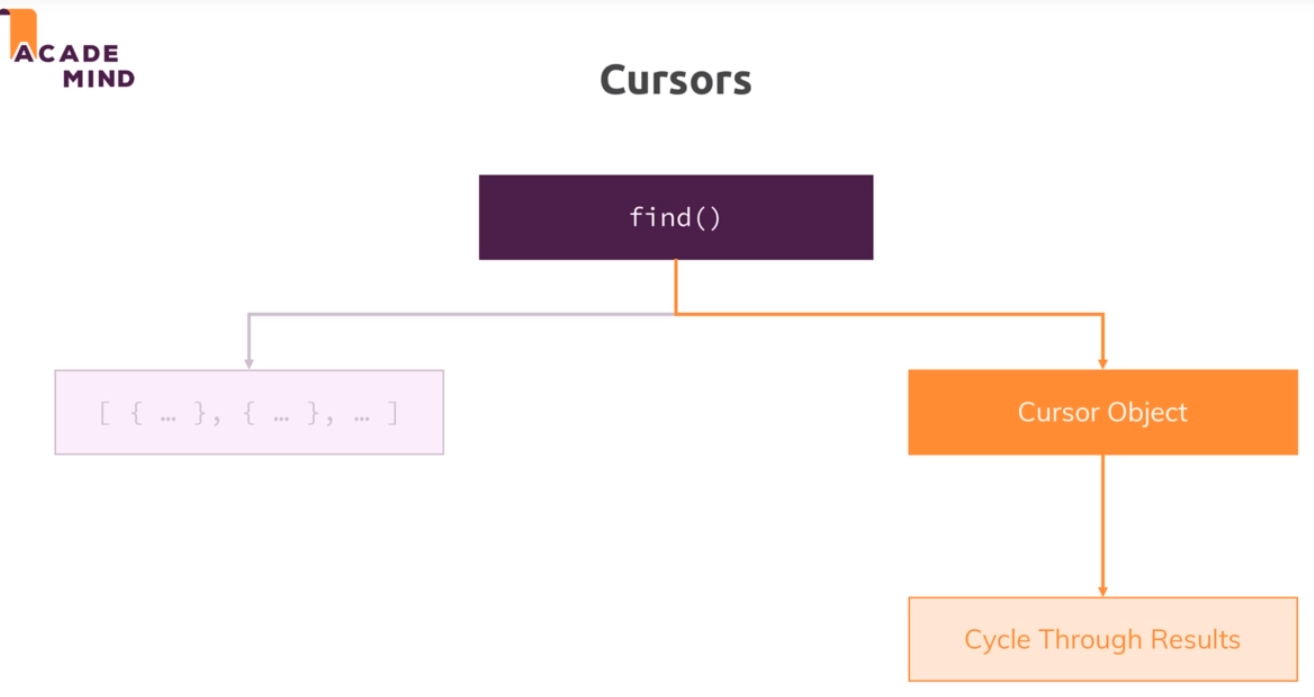

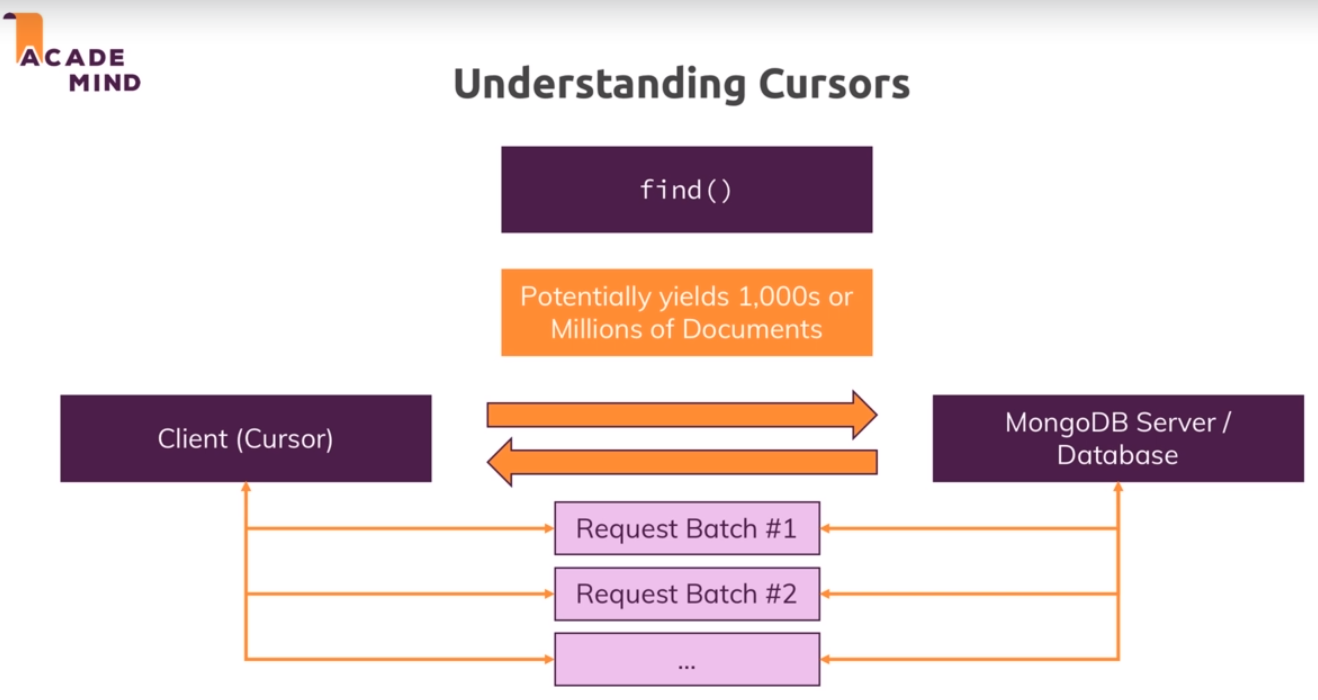

- `find` and the `cursor` object.

> db.passengers.insertMany([

... {

... "name": "Max Schwarzmueller",

... "age": 29

... },

... {

... "name": "Manu Lorenz",

... "age": 30

... },

... {

... "name": "Chris Hayton",

... "age": 35

... },

... {

... "name": "Sandeep Kumar",

... "age": 28

... },

... {

... "name": "Maria Jones",

... "age": 30

... },

... {

... "name": "Alexandra Maier",

... "age": 27

... },

... {

... "name": "Dr. Phil Evans",

... "age": 47

... },

... {

... "name": "Sandra Brugge",

... "age": 33

... },

... {

... "name": "Elisabeth Mayr",

... "age": 29

... },

... {

... "name": "Frank Cube",

... "age": 41

... },

... {

... "name": "Karandeep Alun",

... "age": 48

... },

... {

... "name": "Michaela Drayer",

... "age": 39

... },

... {

... "name": "Bernd Hoftstadt",

... "age": 22

... },

... {

... "name": "Scott Tolib",

... "age": 44

... },

... {

... "name": "Freddy Melver",

... "age": 41

... },

... {

... "name": "Alexis Bohed",

... "age": 35

... },

... {

... "name": "Melanie Palace",

... "age": 27

... },

... {

... "name": "Armin Glutch",

... "age": 35

... },

... {

... "name": "Klaus Arber",

... "age": 53

... },

... {

... "name": "Albert Twostone",

... "age": 68

... },

... {

... "name": "Gordon Black",

... "age": 38

... }

... ]

... )

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("5c1f2bcb99e4cbc46ce07f18"),

ObjectId("5c1f2bcb99e4cbc46ce07f19"),

ObjectId("5c1f2bcb99e4cbc46ce07f1a"),

ObjectId("5c1f2bcb99e4cbc46ce07f1b"),

ObjectId("5c1f2bcb99e4cbc46ce07f1c"),

ObjectId("5c1f2bcb99e4cbc46ce07f1d"),

ObjectId("5c1f2bcb99e4cbc46ce07f1e"),

ObjectId("5c1f2bcb99e4cbc46ce07f1f"),

ObjectId("5c1f2bcb99e4cbc46ce07f20"),

ObjectId("5c1f2bcb99e4cbc46ce07f21"),

ObjectId("5c1f2bcb99e4cbc46ce07f22"),

ObjectId("5c1f2bcb99e4cbc46ce07f23"),

ObjectId("5c1f2bcb99e4cbc46ce07f24"),

ObjectId("5c1f2bcb99e4cbc46ce07f25"),

ObjectId("5c1f2bcb99e4cbc46ce07f26"),

ObjectId("5c1f2bcb99e4cbc46ce07f27"),

ObjectId("5c1f2bcb99e4cbc46ce07f28"),

ObjectId("5c1f2bcb99e4cbc46ce07f29"),

ObjectId("5c1f2bcb99e4cbc46ce07f2a"),

ObjectId("5c1f2bcb99e4cbc46ce07f2b"),

ObjectId("5c1f2bcb99e4cbc46ce07f2c")

]

}

> db.passengers.find().pretty()

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f18"),

"name" : "Max Schwarzmueller",

"age" : 29

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f19"),

"name" : "Manu Lorenz",

"age" : 30

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1a"),

"name" : "Chris Hayton",

"age" : 35

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1b"),

"name" : "Sandeep Kumar",

"age" : 28

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1c"),

"name" : "Maria Jones",

"age" : 30

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1d"),

"name" : "Alexandra Maier",

"age" : 27

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1e"),

"name" : "Dr. Phil Evans",

"age" : 47

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1f"),

"name" : "Sandra Brugge",

"age" : 33

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f20"),

"name" : "Elisabeth Mayr",

"age" : 29

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f21"),

"name" : "Frank Cube",

"age" : 41

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f22"),

"name" : "Karandeep Alun",

"age" : 48

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f23"),

"name" : "Michaela Drayer",

"age" : 39

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f24"),

"name" : "Bernd Hoftstadt",

"age" : 22

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f25"),

"name" : "Scott Tolib",

"age" : 44

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f26"),

"name" : "Freddy Melver",

"age" : 41

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f27"),

"name" : "Alexis Bohed",

"age" : 35

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f28"),

"name" : "Melanie Palace",

"age" : 27

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f29"),

"name" : "Armin Glutch",

"age" : 35

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2a"),

"name" : "Klaus Arber",

"age" : 53

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2b"),

"name" : "Albert Twostone",

"age" : 68

}

Type "it" for more

- We have to type `it` to see the rest.

> it

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2c"),

"name" : "Gordon Black",

"age" : 38

}

`find` returns a cursor object that allows us to step through the results.

- We can add `toArray` to get all the data.

> db.passengers.find().toArray()

[

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f18"),

"name" : "Max Schwarzmueller",

"age" : 29

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f19"),

"name" : "Manu Lorenz",

"age" : 30

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1a"),

"name" : "Chris Hayton",

"age" : 35

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1b"),

"name" : "Sandeep Kumar",

"age" : 28

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1c"),

"name" : "Maria Jones",

"age" : 30

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1d"),

"name" : "Alexandra Maier",

"age" : 27

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1e"),

"name" : "Dr. Phil Evans",

"age" : 47

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1f"),

"name" : "Sandra Brugge",

"age" : 33

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f20"),

"name" : "Elisabeth Mayr",

"age" : 29

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f21"),

"name" : "Frank Cube",

"age" : 41

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f22"),

"name" : "Karandeep Alun",

"age" : 48

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f23"),

"name" : "Michaela Drayer",

"age" : 39

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f24"),

"name" : "Bernd Hoftstadt",

"age" : 22

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f25"),

"name" : "Scott Tolib",

"age" : 44

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f26"),

"name" : "Freddy Melver",

"age" : 41

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f27"),

"name" : "Alexis Bohed",

"age" : 35

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f28"),

"name" : "Melanie Palace",

"age" : 27

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f29"),

"name" : "Armin Glutch",

"age" : 35

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2a"),

"name" : "Klaus Arber",

"age" : 53

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2b"),

"name" : "Albert Twostone",

"age" : 68

},

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2c"),

"name" : "Gordon Black",

"age" : 38

}

]

- We can use `forEach` to do something for each document found. The code we pass in is JavaScript, because the MongoDB shell is a JavaScript interpreter.

> db.passengers.find().forEach((passenger) => {printjson(passenger)})

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f18"),

"name" : "Max Schwarzmueller",

"age" : 29

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f19"),

"name" : "Manu Lorenz",

"age" : 30

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1a"),

"name" : "Chris Hayton",

"age" : 35

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1b"),

"name" : "Sandeep Kumar",

"age" : 28

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1c"),

"name" : "Maria Jones",

"age" : 30

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1d"),

"name" : "Alexandra Maier",

"age" : 27

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1e"),

"name" : "Dr. Phil Evans",

"age" : 47

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1f"),

"name" : "Sandra Brugge",

"age" : 33

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f20"),

"name" : "Elisabeth Mayr",

"age" : 29

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f21"),

"name" : "Frank Cube",

"age" : 41

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f22"),

"name" : "Karandeep Alun",

"age" : 48

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f23"),

"name" : "Michaela Drayer",

"age" : 39

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f24"),

"name" : "Bernd Hoftstadt",

"age" : 22

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f25"),

"name" : "Scott Tolib",

"age" : 44

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f26"),

"name" : "Freddy Melver",

"age" : 41

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f27"),

"name" : "Alexis Bohed",

"age" : 35

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f28"),

"name" : "Melanie Palace",

"age" : 27

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f29"),

"name" : "Armin Glutch",

"age" : 35

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2a"),

"name" : "Klaus Arber",

"age" : 53

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2b"),

"name" : "Albert Twostone",

"age" : 68

}

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2c"),

"name" : "Gordon Black",

"age" : 38

}
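The cursor behavior shown above (20 documents per batch, `it` for the next batch, `toArray`, `forEach`) can be imitated in plain JavaScript. This is only a minimal sketch and not how the shell is really implemented: the `SketchCursor` class, its default batch size of 20 and the generated passenger names are assumptions made for illustration.

```javascript
// Hypothetical stand-in for a shell cursor; NOT the real MongoDB implementation.
class SketchCursor {
  constructor(docs, batchSize = 20) {
    this.docs = docs;           // all matching documents (kept in memory in this sketch)
    this.batchSize = batchSize; // how many documents one batch contains
    this.position = 0;          // index of the next unread document
  }

  // Roughly what happens on the first display and on every "it".
  nextBatch() {
    const batch = this.docs.slice(this.position, this.position + this.batchSize);
    this.position += batch.length;
    return batch;
  }

  hasNext() {
    return this.position < this.docs.length;
  }

  // Like toArray(): exhaust the cursor and return everything that is left.
  toArray() {
    const rest = this.docs.slice(this.position);
    this.position = this.docs.length;
    return rest;
  }

  // Like forEach(): run a callback for every remaining document.
  forEach(callback) {
    this.toArray().forEach(callback);
  }
}

// 21 made-up passengers, the same count as in the transcript above.
const passengers = Array.from({ length: 21 }, (_, i) => ({ name: `Passenger ${i + 1}` }));

const cursor = new SketchCursor(passengers);
const firstBatch = cursor.nextBatch();  // the 20 documents shown first
const secondBatch = cursor.nextBatch(); // the single document shown after "it"
console.log(firstBatch.length, secondBatch.length, cursor.hasNext()); // prints: 20 1 false
```

The point of the batching is that the shell does not have to load every document at once; `toArray` gives that up on purpose and reads everything.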

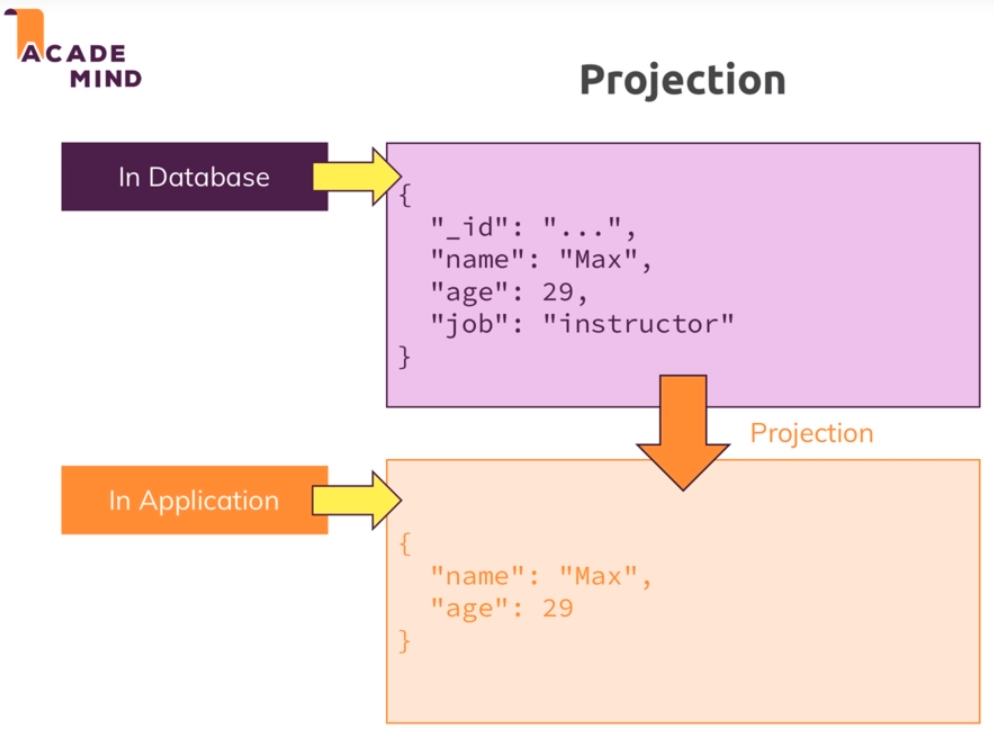

Projection

Projection lets us choose which fields of the matching documents are returned, so we only fetch the data that we need.

> db.passengers.find({}, {name: 1})

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f18"), "name" : "Max Schwarzmueller" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f19"), "name" : "Manu Lorenz" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1a"), "name" : "Chris Hayton" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1b"), "name" : "Sandeep Kumar" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1c"), "name" : "Maria Jones" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1d"), "name" : "Alexandra Maier" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1e"), "name" : "Dr. Phil Evans" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f1f"), "name" : "Sandra Brugge" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f20"), "name" : "Elisabeth Mayr" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f21"), "name" : "Frank Cube" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f22"), "name" : "Karandeep Alun" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f23"), "name" : "Michaela Drayer" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f24"), "name" : "Bernd Hoftstadt" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f25"), "name" : "Scott Tolib" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f26"), "name" : "Freddy Melver" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f27"), "name" : "Alexis Bohed" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f28"), "name" : "Melanie Palace" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f29"), "name" : "Armin Glutch" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2a"), "name" : "Klaus Arber" }

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2b"), "name" : "Albert Twostone" }

Type "it" for more

> it

{ "_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2c"), "name" : "Gordon Black" }

`_id` is always included by default. We need to explicitly exclude it.


> db.passengers.find({}, {_id: 0, name: 1})

{ "name" : "Max Schwarzmueller" }

{ "name" : "Manu Lorenz" }

{ "name" : "Chris Hayton" }

{ "name" : "Sandeep Kumar" }

{ "name" : "Maria Jones" }

{ "name" : "Alexandra Maier" }

{ "name" : "Dr. Phil Evans" }

{ "name" : "Sandra Brugge" }

{ "name" : "Elisabeth Mayr" }

{ "name" : "Frank Cube" }

{ "name" : "Karandeep Alun" }

{ "name" : "Michaela Drayer" }

{ "name" : "Bernd Hoftstadt" }

{ "name" : "Scott Tolib" }

{ "name" : "Freddy Melver" }

{ "name" : "Alexis Bohed" }

{ "name" : "Melanie Palace" }

{ "name" : "Armin Glutch" }

{ "name" : "Klaus Arber" }

{ "name" : "Albert Twostone" }

Type "it" for more

> it

{ "name" : "Gordon Black" }
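The two projections above differ only in how `_id` is handled. As a rough sketch in plain JavaScript (the `project` helper is hypothetical and only covers top-level fields with inclusion-style projections, and a string stands in for the ObjectId):

```javascript
// Hypothetical helper: applies an inclusion projection such as {name: 1}
// or {_id: 0, name: 1} to a single document. Top-level fields only.
function project(doc, projection) {
  const result = {};
  // _id is returned unless the projection explicitly sets it to 0.
  if (projection._id !== 0 && "_id" in doc) {
    result._id = doc._id;
  }
  for (const [field, flag] of Object.entries(projection)) {
    if (field !== "_id" && flag === 1 && field in doc) {
      result[field] = doc[field];
    }
  }
  return result;
}

// A plain string stands in for the ObjectId in this sketch.
const passenger = { _id: "5c1f2bcb99e4cbc46ce07f2c", name: "Gordon Black", age: 38 };

const withId = project(passenger, { name: 1 });
const withoutId = project(passenger, { _id: 0, name: 1 });
console.log(withId);    // _id and name
console.log(withoutId); // name only
```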

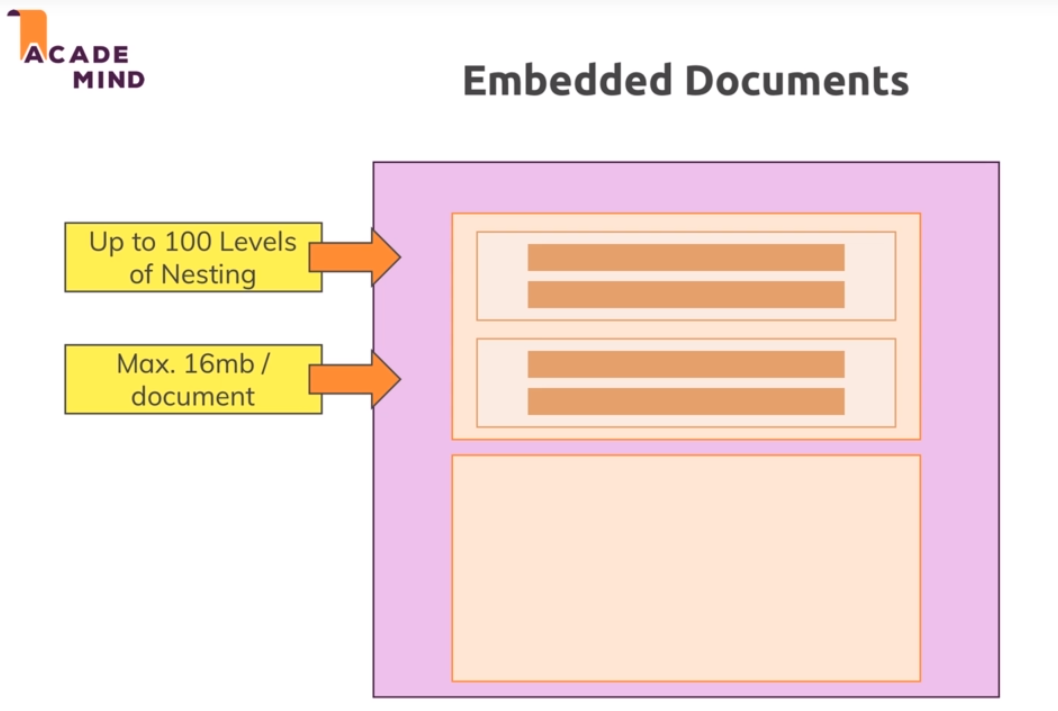

Embedded Documents

- We can nest documents inside a document, up to 100 levels of nesting.

- Each document can be at most 16 MB in size.
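To get a feeling for what a "level of nesting" means, the following sketch counts how deeply objects and arrays are nested in a plain JavaScript object. The `nestingDepth` helper is hypothetical, the sample document only borrows its shape from the `flightData` examples in this section, and the way the server itself counts levels may differ in detail.

```javascript
// Hypothetical helper: counts how deeply objects/arrays are nested in a value.
function nestingDepth(value) {
  if (value === null || typeof value !== "object") {
    return 0; // scalars add no nesting level
  }
  const children = Array.isArray(value) ? value : Object.values(value);
  let deepest = 0;
  for (const child of children) {
    deepest = Math.max(deepest, nestingDepth(child));
  }
  return 1 + deepest;
}

const flight = {
  departureAirport: "MUC",
  status: {
    description: "on-time",
    details: { responsible: "Juan Pablo Perez" },
  },
};

const depth = nestingDepth(flight);
console.log(depth); // prints: 3 (flight -> status -> details)
```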

> db.flightData.updateMany({}, {$set: {status: {description: "on-time", updated: "1 hour ago"}}})

{ "acknowledged" : true, "matchedCount" : 2, "modifiedCount" : 2 }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago"

}

}

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f17"),

"departureAirport" : "LHR",

"arrivalAirport" : "TXL",

"aircraft" : "Airbus A320",

"distance" : 950,

"intercontinental" : false,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago"

}

}

> db.flightData.updateMany({}, {$set: {status: {description: "on-time", updated: "1 hour ago", details: {responsible:"Juan Pablo Perez"}}}})

{ "acknowledged" : true, "matchedCount" : 2, "modifiedCount" : 2 }

> db.flightData.find().pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago",

"details" : {

"responsible" : "Juan Pablo Perez"

}

}

}

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f17"),

"departureAirport" : "LHR",

"arrivalAirport" : "TXL",

"aircraft" : "Airbus A320",

"distance" : 950,

"intercontinental" : false,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago",

"details" : {

"responsible" : "Juan Pablo Perez"

}

}

}

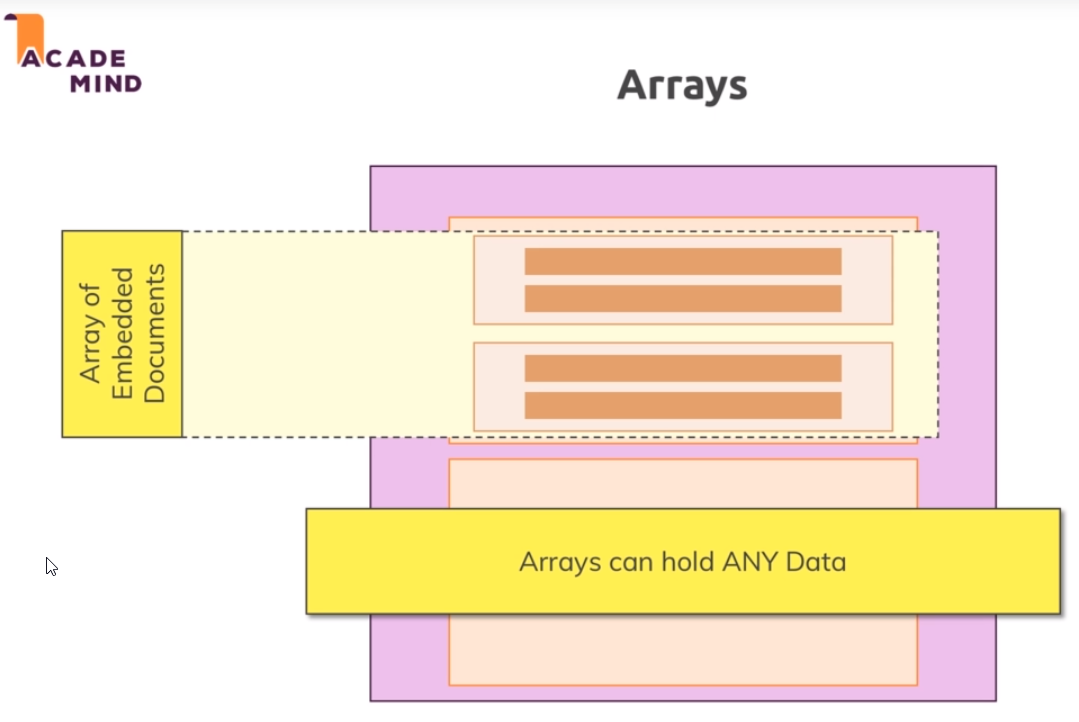

- We can store arrays in a document, including arrays of embedded documents.

> db.passengers.updateOne({name: "Albert Twostone"}, {$set: {hobbies: ["sports", "cooking"]}})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.passengers.find({name: "Albert Twostone"}).pretty()

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2b"),

"name" : "Albert Twostone",

"age" : 68,

"hobbies" : [

"sports",

"cooking"

]

}

- Querying embedded documents

> db.passengers.findOne({name: "Albert Twostone"}).hobbies

[ "sports", "cooking" ]

> db.passengers.find({hobbies: "sports"}).pretty()

{

"_id" : ObjectId("5c1f2bcb99e4cbc46ce07f2b"),

"name" : "Albert Twostone",

"age" : 68,

"hobbies" : [

"sports",

"cooking"

]

}

> db.flightData.find({"status.description": "on-time" }).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago",

"details" : {

"responsible" : "Juan Pablo Perez"

}

}

}

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f17"),

"departureAirport" : "LHR",

"arrivalAirport" : "TXL",

"aircraft" : "Airbus A320",

"distance" : 950,

"intercontinental" : false,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago",

"details" : {

"responsible" : "Juan Pablo Perez"

}

}

}

> db.flightData.find({"status.details.responsible": "Juan Pablo Perez" }).pretty()

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f16"),

"departureAirport" : "MUC",

"arrivalAirport" : "SFO",

"aircraft" : "Airbus A380",

"distance" : 12000,

"intercontinental" : true,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago",

"details" : {

"responsible" : "Juan Pablo Perez"

}

}

}

{

"_id" : ObjectId("5c1e1cf599e4cbc46ce07f17"),

"departureAirport" : "LHR",

"arrivalAirport" : "TXL",

"aircraft" : "Airbus A320",

"distance" : 950,

"intercontinental" : false,

"status" : {

"description" : "on-time",

"updated" : "1 hour ago",

"details" : {

"responsible" : "Juan Pablo Perez"

}

}

}
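The queries above rely on two behaviors: a dotted path such as `"status.details.responsible"` walks into embedded documents, and an equality filter on an array field matches when the array contains the value. A simplified sketch in plain JavaScript follows; `getPath` and `matchesEquality` are hypothetical helpers, and the real query engine handles more cases than this.

```javascript
// Hypothetical helper: follows a dotted path such as "status.details.responsible".
function getPath(doc, path) {
  return path.split(".").reduce(
    (current, key) =>
      current !== null && typeof current === "object" ? current[key] : undefined,
    doc
  );
}

// Hypothetical helper: equality match that also accepts arrays containing the
// value, which is why {hobbies: "sports"} matches ["sports", "cooking"].
function matchesEquality(doc, path, expected) {
  const actual = getPath(doc, path);
  return Array.isArray(actual) ? actual.includes(expected) : actual === expected;
}

const flightDoc = {
  aircraft: "Airbus A380",
  status: { description: "on-time", details: { responsible: "Juan Pablo Perez" } },
};
const passengerDoc = { name: "Albert Twostone", age: 68, hobbies: ["sports", "cooking"] };

console.log(matchesEquality(flightDoc, "status.details.responsible", "Juan Pablo Perez")); // true
console.log(matchesEquality(passengerDoc, "hobbies", "sports"));                            // true
console.log(matchesEquality(passengerDoc, "hobbies", "reading"));                           // false
```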

- Assignment

- New database

> use hospital

switched to db hospital

- Insert documents

> db.patient.insertMany([

... {

... "firstName": "Mickey",

... "lastName": "Mouse",

... "age": 35,

... "history": [

... {"disease": "cold", "treatment": "painkillers 3 times a day for 3 days"}

... ]

... },

... {

... "firstName": "Minnie",

... "lastName": "Mouse",

... "age": 31,

... "history": [

... {"disease": "bronchitis", "treatment": "antibiotic 3 times a day for 7 days"}

... ]

... },

... {

... "firstName": "Donald",

... "lastName": "Duck",

... "age": 42,

... "history": [

... {"disease": "high blood pressure", "treatment": "take it easy"}

... ]

... }

... ])

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("5c1f6bc899e4cbc46ce07f2d"),

ObjectId("5c1f6bc899e4cbc46ce07f2e"),

ObjectId("5c1f6bc899e4cbc46ce07f2f")

]

}

> db.patient.find().pretty()

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2d"),

"firstName" : "Mickey",

"lastName" : "Mouse",

"age" : 35,

"history" : [

{

"disease" : "cold",

"treatment" : "painkillers 3 times a day for 3 days"

}

]

}

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2e"),

"firstName" : "Minnie",

"lastName" : "Mouse",

"age" : 31,

"history" : [

{

"disease" : "bronchitis",

"treatment" : "antibiotic 3 times a day for 7 days"

}

]

}

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2f"),

"firstName" : "Donald",

"lastName" : "Duck",

"age" : 42,

"history" : [

{

"disease" : "high blood pressure",

"treatment" : "take it easy"

}

]

}

- Update one document

> db.patient.updateOne({"firstName" : "Minnie"}, {$set: {"age": 29, "history": [{"disease": "bronchitis", "treatment": "antibiotic 3 times a day for 9 days"}, {"filmography": ["Plane Crazy","Steamboat Willie","Mickey's Good","..."]}]}})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.patient.find({"firstName" : "Minnie"}).pretty()

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2e"),

"firstName" : "Minnie",

"lastName" : "Mouse",

"age" : 29,

"history" : [

{

"disease" : "bronchitis",

"treatment" : "antibiotic 3 times a day for 9 days"

},

{

"filmography" : [

"Plane Crazy",

"Steamboat Willie",

"Mickey's Good",

"..."

]

}

]

}

- Find documents where `age` is greater than 34

> db.patient.find({ age: {$gt: 34}}).pretty()

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2d"),

"firstName" : "Mickey",

"lastName" : "Mouse",

"age" : 35,

"history" : [

{

"disease" : "cold",

"treatment" : "painkillers 3 times a day for 3 days"

}

]

}

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2f"),

"firstName" : "Donald",

"lastName" : "Duck",

"age" : 42,

"history" : [

{

"disease" : "high blood pressure",

"treatment" : "take it easy"

}

]

}

- Delete one patient

{ "acknowledged" : true, "deletedCount" : 1 }

> db.patient.find().pretty()

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2e"),

"firstName" : "Minnie",

"lastName" : "Mouse",

"age" : 29,

"history" : [

{

"disease" : "bronchitis",

"treatment" : "antibiotic 3 times a day for 9 days"

},

{

"filmography" : [

"Plane Crazy",

"Steamboat Willie",

"Mickey's Good",

"..."

]

}

]

}

{

"_id" : ObjectId("5c1f6bc899e4cbc46ce07f2f"),

"firstName" : "Donald",

"lastName" : "Duck",

"age" : 42,

"history" : [

{

"disease" : "high blood pressure",

"treatment" : "take it easy"

}

]

}

- Summary

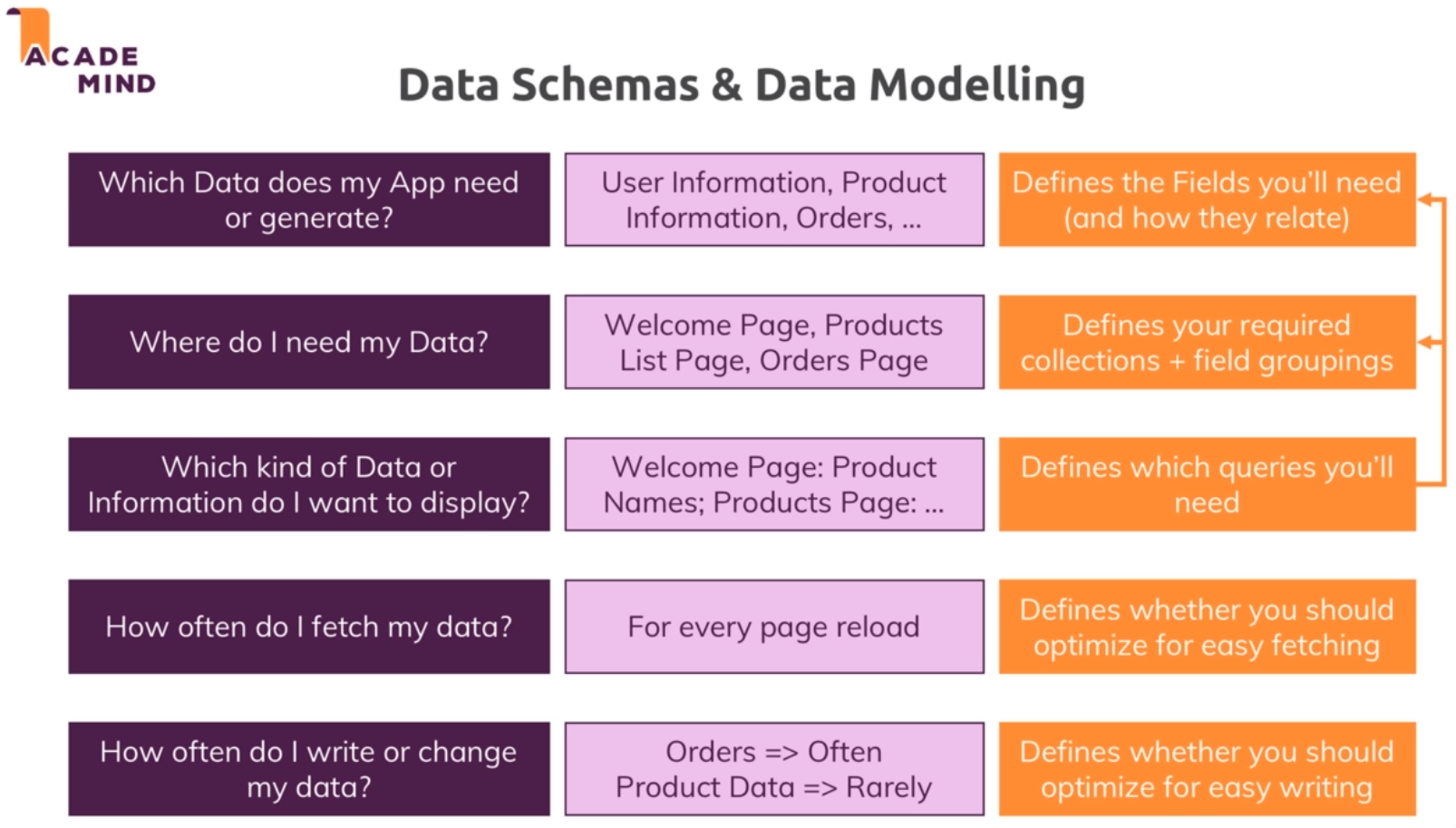

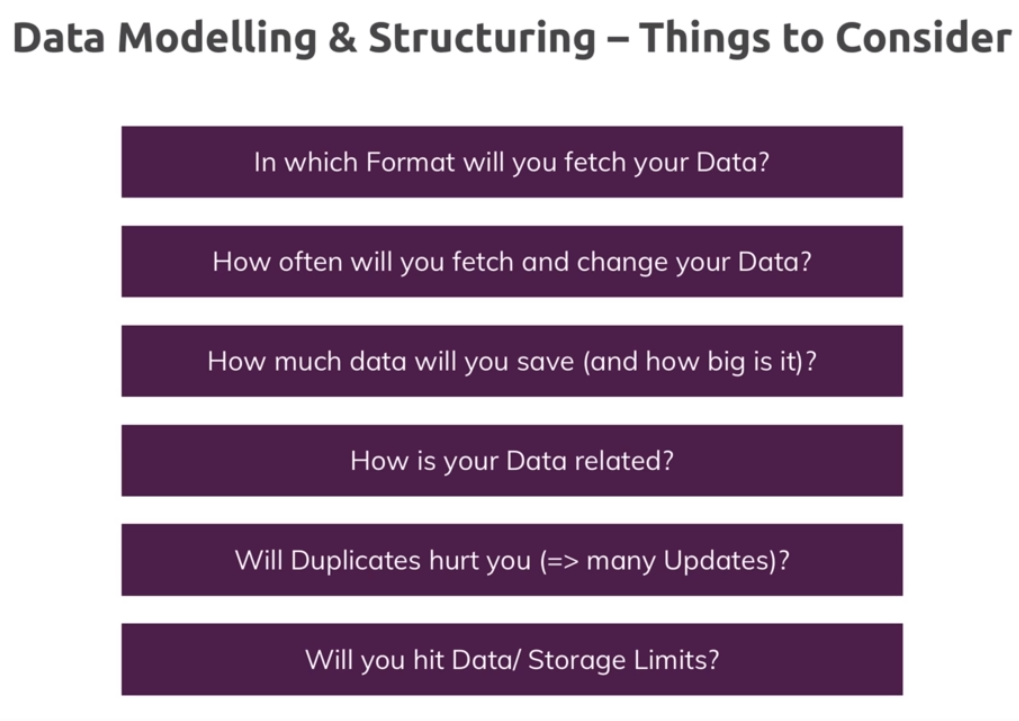

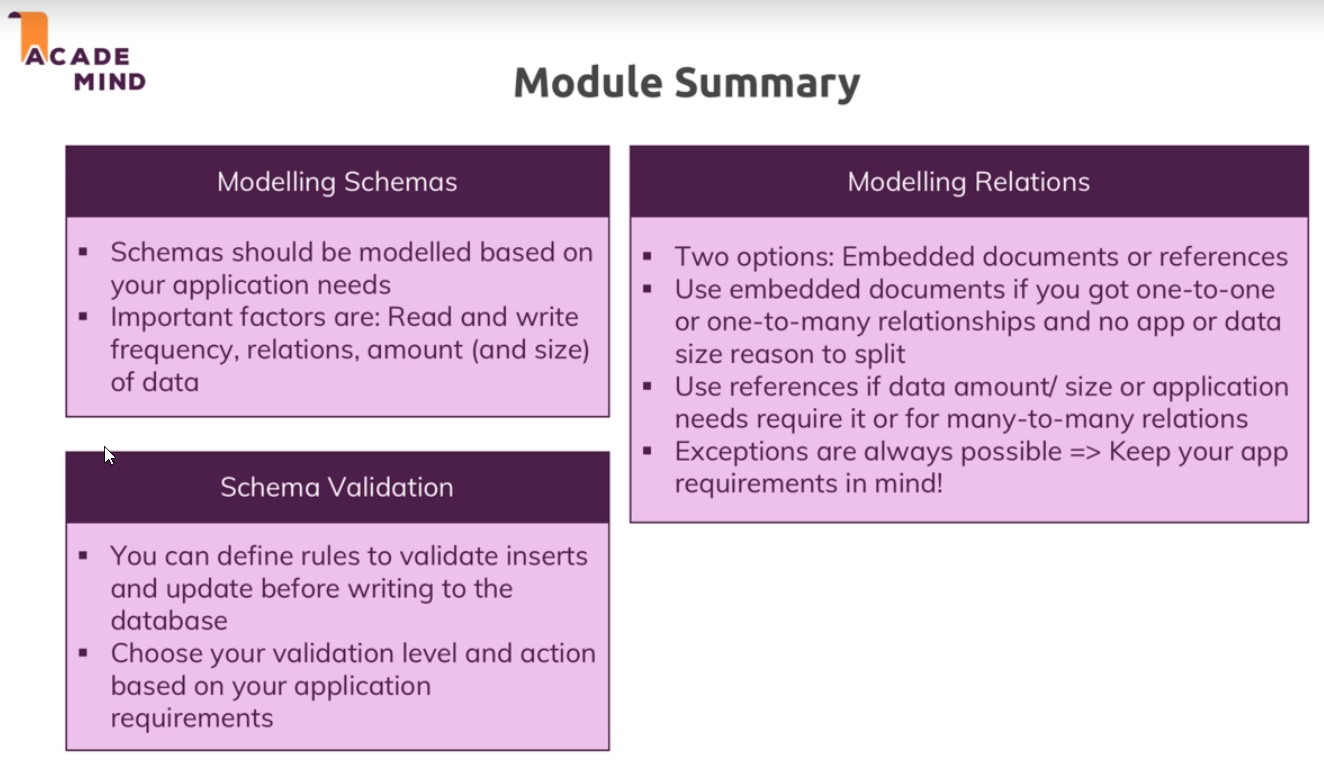

Schemas & Relations: How to Structure Documents

- Resetting your database.

- We can simply load the database we want to get rid of (`use databaseName`) and then execute `db.dropDatabase()`.

> show dbs

TasksAppMongo 0.000GB

admin 0.000GB

blog 0.000GB

config 0.000GB

flights 0.000GB

hospital 0.000GB

local 0.000GB

shop 0.000GB

> use shop

switched to db shop

> db.dropDatabse()

2018-12-31T06:20:58.509+0000 E QUERY [js] TypeError: db.dropDatabse is not a function :

@(shell):1:1

> db.dropDatabase()

{ "dropped" : "shop", "ok" : 1 }

- We can get rid of a single collection in a database via `db.myCollection.drop()`.

> use hospital

switched to db hospital

> db.getCollectionNames()

[ "patient" ]

> db.patient.drop()

true

> db.getCollectionNames()

[ ]


- Why Do We Use Schemas?

- We can insert documents without a schema.

> db.products.insertOne({name: "Book", price: 12.99})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29b884ba280a45572d8b88")

}

> db.products.insertOne({title: "T-Shirt", seller: {name: "Juan", age: 52}})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29b88cba280a45572d8b89")

}

> db.products.find().pretty()

{

"_id" : ObjectId("5c29b884ba280a45572d8b88"),

"name" : "Book",

"price" : 12.99

}

{

"_id" : ObjectId("5c29b88cba280a45572d8b89"),

"title" : "T-Shirt",

"seller" : {

"name" : "Juan",

"age" : 52

}

}

> db.products.insertOne({name: "Book", price: 12.99, details: null})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29bb3cba280a45572d8b90")

}

> db.products.insertOne({name: "T-Shirt", price: 20.99, details: null})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29bb48ba280a45572d8b91")

}

> db.products.insertOne({name: "Computer", price: 1299, details: {cpu: "Intel i7 8770"}})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29bb4cba280a45572d8b92")

}

> db.products.find().pretty()

{

"_id" : ObjectId("5c29bb3cba280a45572d8b90"),

"name" : "Book",

"price" : 12.99,

"details" : null

}

{

"_id" : ObjectId("5c29bb48ba280a45572d8b91"),

"name" : "T-Shirt",

"price" : 20.99,

"details" : null

}

{

"_id" : ObjectId("5c29bb4cba280a45572d8b92"),

"name" : "Computer",

"price" : 1299,

"details" : {

"cpu" : "Intel i7 8770"

}

}

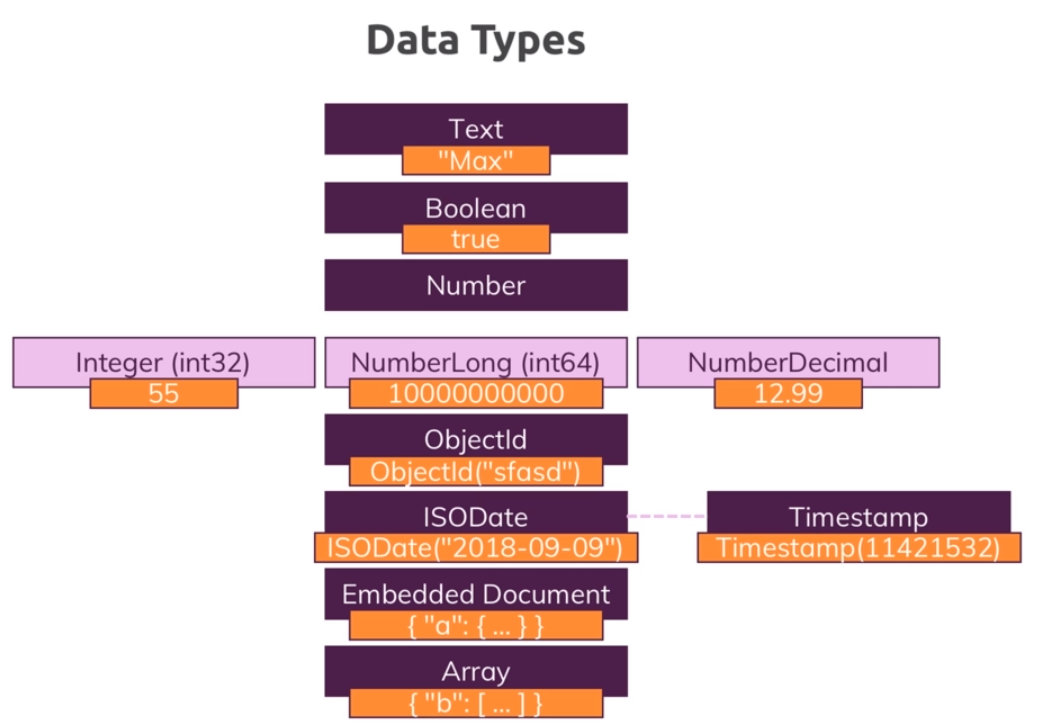

- Data Types.

- Insert a document with different data types.

> use companyData

switched to db companyData

> db.companies.insertOne({name: "Fresh Apples Inc", isStartup: true, employees: 33, funding: 12345678901234567890, details: {ceo: "Mark Super"}, tags: ["super","perfect"], foundingDate: new Date(), insertedAt: new Timestamp()})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29bf05ba280a45572d8b93")

}

> db.companies.findOne()

{

"_id" : ObjectId("5c29bf05ba280a45572d8b93"),

"name" : "Fresh Apples Inc",

"isStartup" : true,

"employees" : 33,

"funding" : 12345678901234567000,

"details" : {

"ceo" : "Mark Super"

},

"tags" : [

"super",

"perfect"

],

"foundingDate" : ISODate("2018-12-31T07:02:29.167Z"),

"insertedAt" : Timestamp(1546239749, 1)

}

- The long number `12345678901234567890` inserted as the `funding` value comes back as `12345678901234567000`, because the shell treats it as a 64-bit double and the last digits exceed its precision.
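The loss of digits is a property of JavaScript numbers, so it can be reproduced without a database. A minimal sketch (the variable names are only illustrative):

```javascript
// The literal is parsed as a 64-bit double, just like in the shell.
const funding = 12345678901234567890;

console.log(String(funding));               // the trailing digits are lost
console.log(Number.isSafeInteger(funding)); // false: larger than Number.MAX_SAFE_INTEGER
console.log(Number.MAX_SAFE_INTEGER);       // 9007199254740991

// Built from a string, a BigInt keeps every digit.
const exactFunding = BigInt("12345678901234567890");
console.log(exactFunding.toString());

// The value is also larger than the int64 maximum (2^63 - 1).
console.log(exactFunding > 9223372036854775807n); // true
```

Because the value is even larger than the int64 maximum, storing it exactly would need a string or a decimal type rather than a long integer.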

> db.numbers.insertOne({a: 1})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29bfe9ba280a45572d8b94")

}

> db.stats()

{

"db" : "companyData",

"collections" : 2,

"views" : 0,

"objects" : 2,

"avgObjSize" : 122.5,

"dataSize" : 245,

"storageSize" : 20480,

"numExtents" : 0,

"indexes" : 2,

"indexSize" : 20480,

"fsUsedSize" : 268846039040,

"fsTotalSize" : 494586032128,

"ok" : 1

}

- With `drop` the collection is completely removed.

> db.companies.drop()

true

> db.stats()

{

"db" : "companyData",

"collections" : 1,

"views" : 0,

"objects" : 1,

"avgObjSize" : 33,

"dataSize" : 33,

"storageSize" : 16384,

"numExtents" : 0,

"indexes" : 1,

"indexSize" : 16384,

"fsUsedSize" : 268846575616,

"fsTotalSize" : 494586032128,

"ok" : 1

}

- With `deleteMany` the documents are deleted, but the collection is still there.

> db.numbers.deleteMany({})

{ "acknowledged" : true, "deletedCount" : 1 }

> db.stats()

{

"db" : "companyData",

"collections" : 1,

"views" : 0,

"objects" : 0,

"avgObjSize" : 0,

"dataSize" : 0,

"storageSize" : 16384,

"numExtents" : 0,

"indexes" : 1,

"indexSize" : 16384,

"fsUsedSize" : 268846960640,

"fsTotalSize" : 494586032128,

"ok" : 1

}

> db.getCollectionNames()

[ "numbers" ]
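The difference between `drop` and `deleteMany({})` seen in the `db.stats()` output above can be pictured with a small in-memory model. This is only a sketch: the `Map`-based "database" and the `dropCollection` / `deleteAll` helpers are hypothetical and ignore indexes and storage.

```javascript
// Hypothetical in-memory "database": collection name -> array of documents.
const database = new Map([
  ["companies", [{ name: "Fresh Apples Inc" }]],
  ["numbers", [{ a: 1 }]],
]);

// Like drop(): removes the collection itself; returns true only if it existed.
function dropCollection(db, name) {
  return db.delete(name);
}

// Like deleteMany({}): removes all documents but keeps the (now empty) collection.
function deleteAll(db, name) {
  if (!db.has(name)) {
    return { acknowledged: true, deletedCount: 0 };
  }
  const deletedCount = db.get(name).length;
  db.set(name, []);
  return { acknowledged: true, deletedCount };
}

const dropped = dropCollection(database, "companies");
const deleted = deleteAll(database, "numbers");
const remaining = [...database.keys()];
console.log(dropped, deleted.deletedCount, remaining); // true 1 [ 'numbers' ]
```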

- Internally the numbers are stored depending on their type.

> db.numbers.insertOne({a: NumberInt(1)})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29c160ba280a45572d8b95")

}

> db.stats()

{

"db" : "companyData",

"collections" : 1,

"views" : 0,

"objects" : 1,

"avgObjSize" : 29,

"dataSize" : 29,

"storageSize" : 20480,

"numExtents" : 0,

"indexes" : 1,

"indexSize" : 20480,

"fsUsedSize" : 268848025600,

"fsTotalSize" : 494586032128,

"ok" : 1

}

- We can check the type of a value using `typeof`.

> typeof db.numbers.findOne().a

number

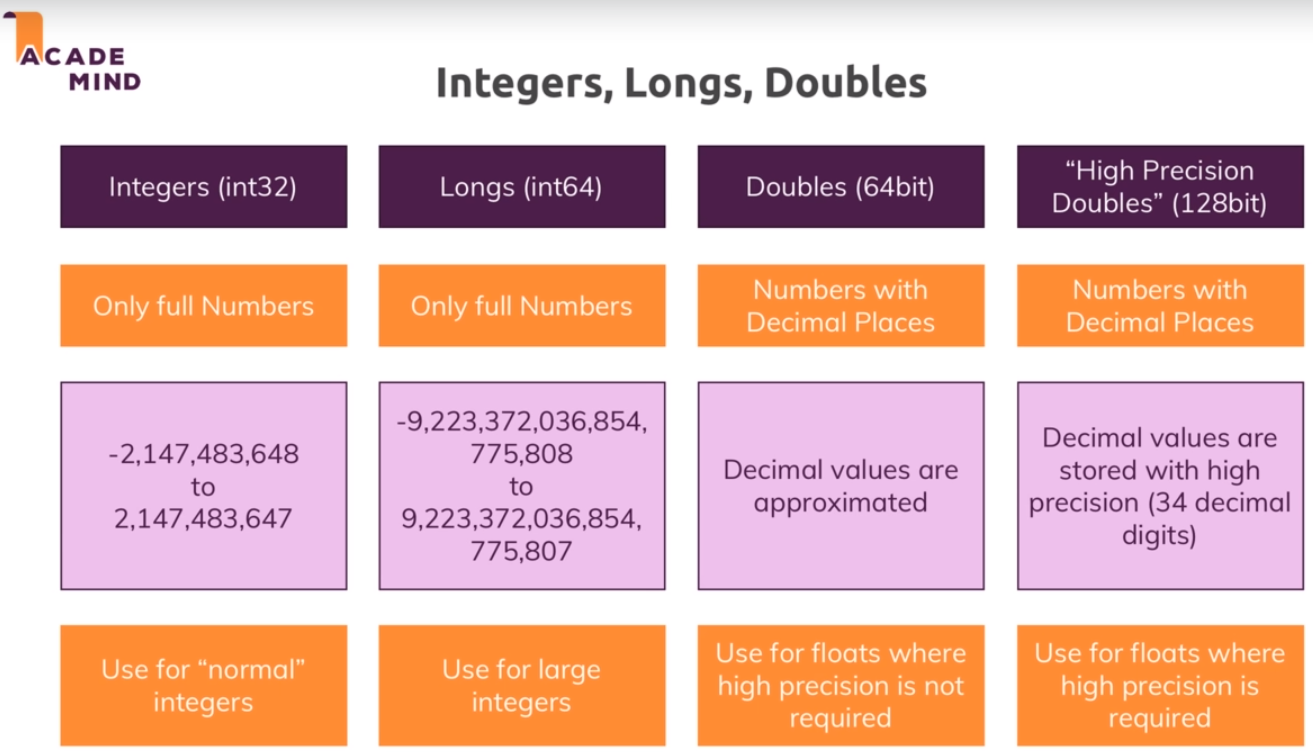

Important data type limits are:

- Normal integers (int32) can hold a maximum value of ±2,147,483,647.

- Long integers (int64) can hold a maximum value of ±9,223,372,036,854,775,807.

- Text can be as long as you want; the limit is the 16 MB restriction for the overall document.

It's also important to understand the difference between int32 (`NumberInt`), int64 (`NumberLong`) and a normal number as you can enter it in the shell. The same goes for a normal double and `NumberDecimal`.

- `NumberInt` creates an int32 value => `NumberInt(55)`

- `NumberLong` creates an int64 value => `NumberLong(7489729384792)`

- If you just use a number (e.g. `insertOne({a: 1})`), it is added as a normal double into the database. The reason is that the shell is based on JavaScript, which only knows float/double values and doesn't distinguish between integers and floats.

- `NumberDecimal` creates a high-precision decimal value => `NumberDecimal("12.99")`. This can be helpful for cases where you need (many) exact decimal places for calculations.
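The limits above can be checked in plain JavaScript before deciding which numeric type to use. This is a sketch with hypothetical helper names (`fitsInt32`, `fitsInt64`); it does not call any MongoDB API and only mirrors the ranges listed above.

```javascript
const INT32_MAX = 2147483647;            // 2^31 - 1
const INT32_MIN = -2147483648;           // -2^31
const INT64_MAX = 9223372036854775807n;  // 2^63 - 1 (BigInt literal)
const INT64_MIN = -9223372036854775808n; // -2^63

// Hypothetical check: would this number fit into an int32?
function fitsInt32(n) {
  return Number.isInteger(n) && n >= INT32_MIN && n <= INT32_MAX;
}

// Hypothetical check: would this BigInt fit into an int64?
function fitsInt64(big) {
  return big >= INT64_MIN && big <= INT64_MAX;
}

console.log(fitsInt32(55));                              // true
console.log(fitsInt32(2147483648));                      // false: one above the int32 maximum
console.log(fitsInt64(7489729384792n));                  // true
console.log(fitsInt64(BigInt("12345678901234567890")));  // false

// Why exact decimals matter: binary doubles cannot represent 0.1 or 0.2 exactly.
console.log(0.1 + 0.2 === 0.3); // false
```

The last line is the usual motivation for a decimal type when working with prices such as 12.99.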

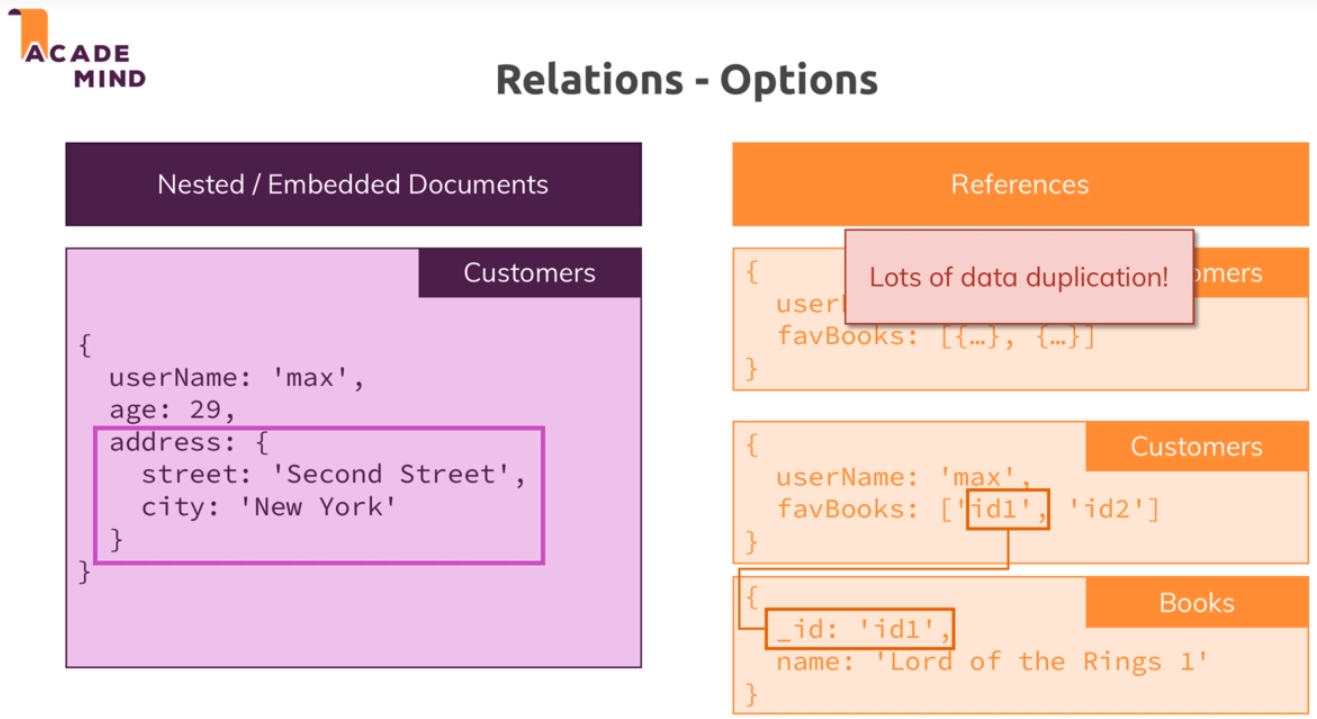

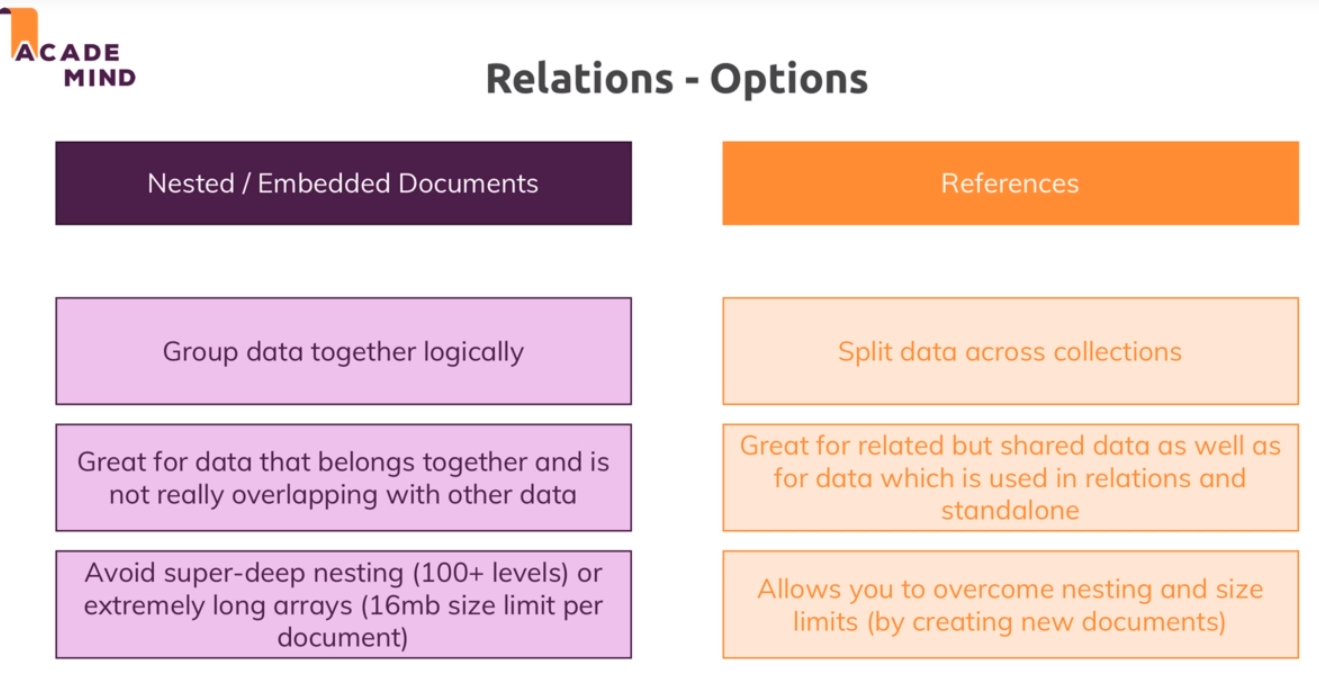

- Relations

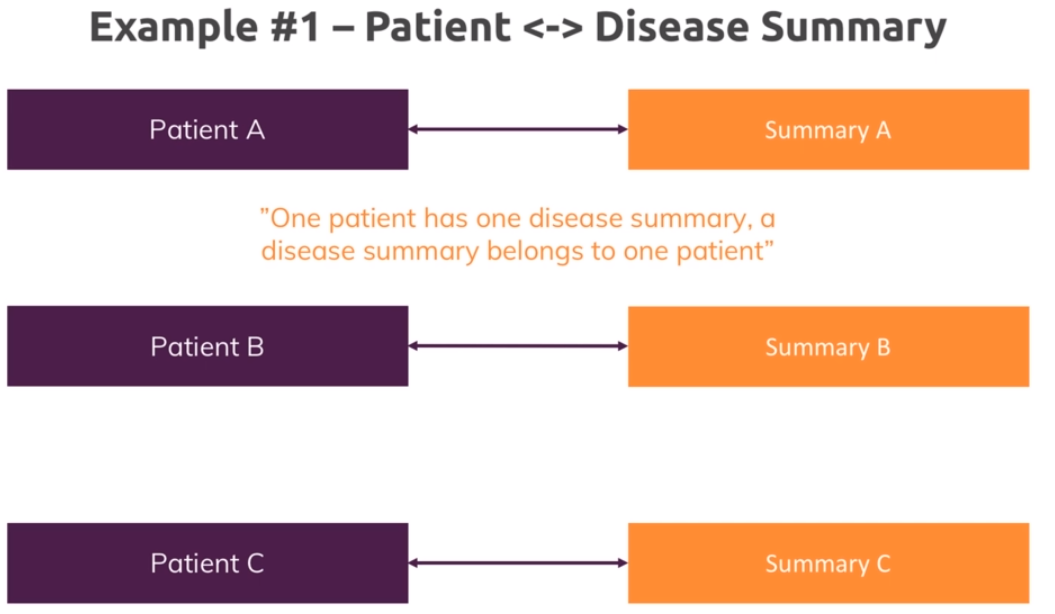

One to One - Embedded

- Querying using variables

> db.patients.insertOne({ name: "Max", age: 29, diseaseSummary: "summary-max-1"})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29c746ba280a45572d8b97")

}

> db.patients.findOne()

{

"_id" : ObjectId("5c29c746ba280a45572d8b97"),

"name" : "Max",

"age" : 29,

"diseaseSummary" : "summary-max-1"

}

> db.diseaseSummaries.insertOne({_id: "summary-max-1", diseases: ["cold","broken leg"]})

{ "acknowledged" : true, "insertedId" : "summary-max-1" }

> db.diseaseSummaries.findOne()

{ "_id" : "summary-max-1", "diseases" : [ "cold", "broken leg" ] }

> db.patients.findOne()

{

"_id" : ObjectId("5c29c746ba280a45572d8b97"),

"name" : "Max",

"age" : 29,

"diseaseSummary" : "summary-max-1"

}

> db.patients.findOne().diseaseSummary

summary-max-1

> var dsid = db.patients.findOne().diseaseSummary

> dsid

summary-max-1

> db.diseaseSummaries.findOne(_id: dsid})

2018-12-31T07:43:28.707+0000 E QUERY [js] SyntaxError: missing ) after argument list @(shell):1:31

> db.diseaseSummaries.findOne({_id: dsid})

{ "_id" : "summary-max-1", "diseases" : [ "cold", "broken leg" ] }

- It is easier if we embed the information directly in the patient document.

> db.patients.deleteMany({})

{ "acknowledged" : true, "deletedCount" : 1 }

> db.patients.insertOne({ name: "Max", age: 29, diseases: ["cold","broken leg"]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29c9a6ba280a45572d8b98")

}

> db.patients.findOne()

{

"_id" : ObjectId("5c29c9a6ba280a45572d8b98"),

"name" : "Max",

"age" : 29,

"diseases" : [

"cold",

"broken leg"

]

}
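The two layouts above can be compared side by side. In this sketch plain arrays stand in for the `patients` and `diseaseSummaries` collections (an assumption for illustration); the referenced layout needs two reads, the embedded one needs only one.

```javascript
// Referenced layout: two "collections", joined by hand in two steps.
const patients = [{ name: "Max", age: 29, diseaseSummary: "summary-max-1" }];
const diseaseSummaries = [{ _id: "summary-max-1", diseases: ["cold", "broken leg"] }];

const dsid = patients[0].diseaseSummary;                      // step 1: read the reference
const summary = diseaseSummaries.find((s) => s._id === dsid); // step 2: second lookup

// Embedded layout: everything is in one document, so one read is enough.
const embeddedPatient = { name: "Max", age: 29, diseases: ["cold", "broken leg"] };

console.log(summary.diseases);         // [ 'cold', 'broken leg' ]
console.log(embeddedPatient.diseases); // [ 'cold', 'broken leg' ]
```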

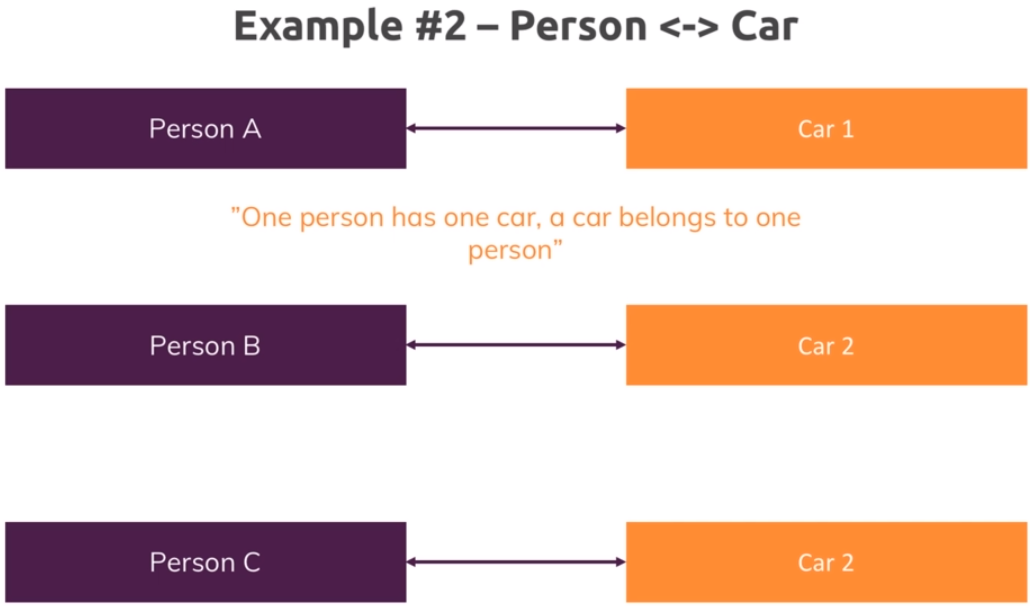

One to One - Using References

> use carData

switched to db carData

> db.persons.insertOne({name: "Max", car: {model: "BMW", price: 40000}})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29caceba280a45572d8b9a")

}

> db.persons.findOne()

{

"_id" : ObjectId("5c29caceba280a45572d8b9a"),

"name" : "Max",

"car" : {

"model" : "BMW",

"price" : 40000

}

}

> db.persons.deleteMany({})

{ "acknowledged" : true, "deletedCount" : 1 }

> db.persons.insertOne({name: "Max", age: 39, salary: 3000})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29cb78ba280a45572d8b9b")

}

> db.cars.insertOne({model: "BMW", price: 4000, owner: ObjectId("5c29cb78ba280a45572d8b9b")})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29cbc5ba280a45572d8b9c")

}

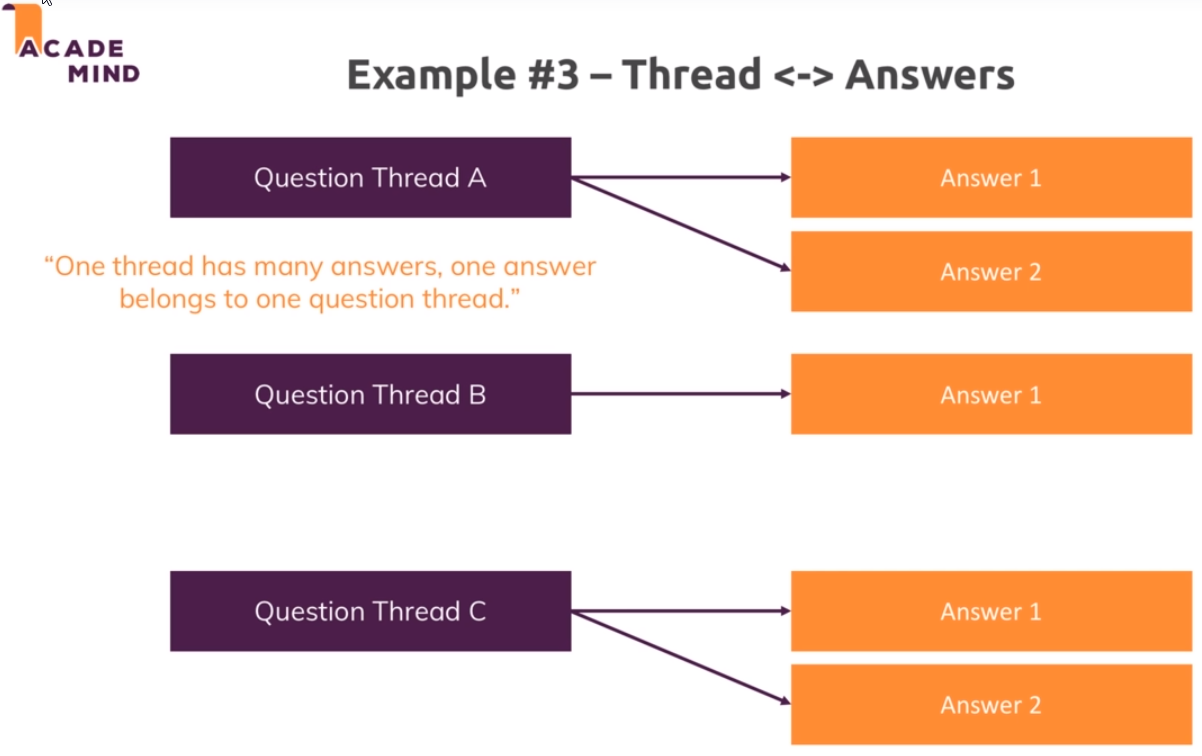

One to Many - Embedded

> use support

switched to db support

> db.questionThreads.insertOne({creator: "Max", question: "How does that all work?", answers: ["q1a1","q1a2"]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29ccecba280a45572d8b9d")

}

> db.questionThreads.findOne()

{

"_id" : ObjectId("5c29ccecba280a45572d8b9d"),

"creator" : "Max",

"question" : "How does that all work?",

"answers" : [

"q1a1",

"q1a2"

]

}

> db.answers.insertMany([{_id: "q1a1", text: "It works like that"}, {_id: "q1a2", text: "Thanks!"}])

{ "acknowledged" : true, "insertedIds" : [ "q1a1", "q1a2" ] }

> db.answers.find()

{ "_id" : "q1a1", "text" : "It works like that" }

{ "_id" : "q1a2", "text" : "Thanks!" }

> db.answers.deleteMany({})

{ "acknowledged" : true, "deletedCount" : 2 }

> db.questionThreads.deleteMany({})

{ "acknowledged" : true, "deletedCount" : 1 }

> db.questionThreads.insertOne({creator: "Max", question: "How does that all work?", answers: [{text: "It works like that"},{text: "Thanks!"}]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29ce4bba280a45572d8b9e")

}

> db.questionThreads.findOne()

{

"_id" : ObjectId("5c29ce4bba280a45572d8b9e"),

"creator" : "Max",

"question" : "How does that all work?",

"answers" : [

{

"text" : "It works like that"

},

{

"text" : "Thanks!"

}

]

}

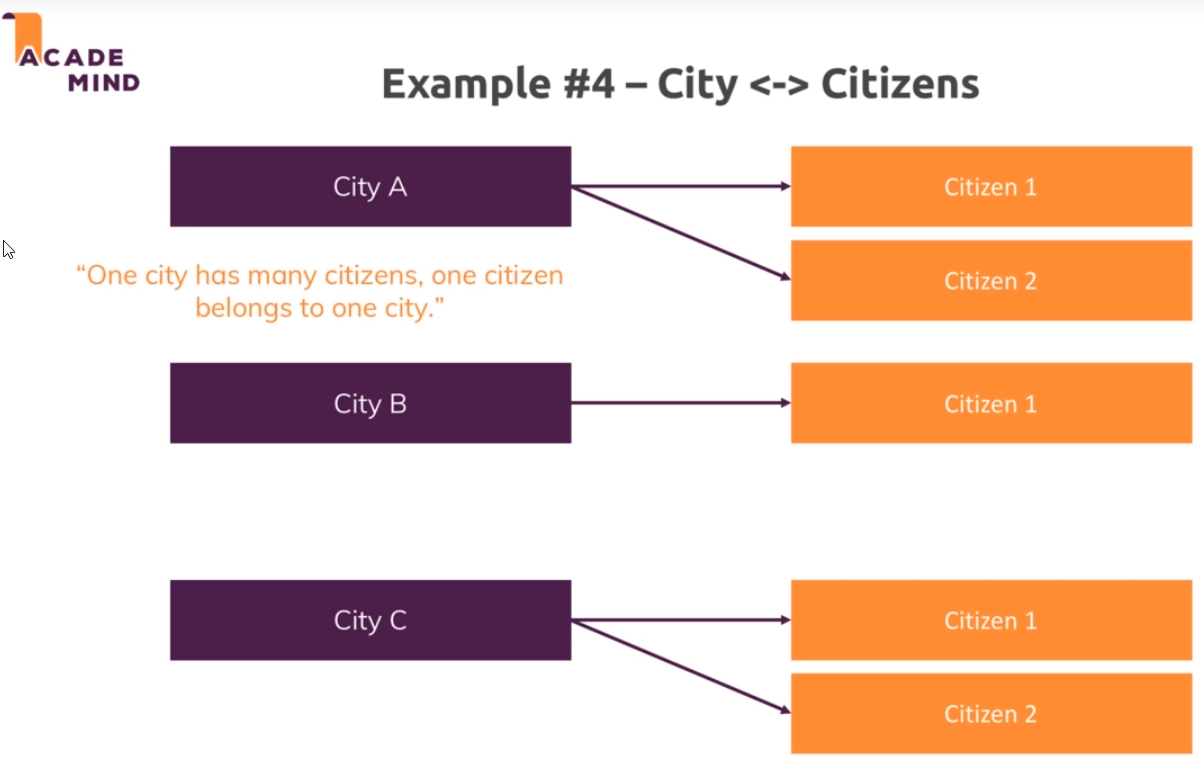

One to Many - Using References

> db.cities.insertOne({name: "New York City", coordinates: {lat: 21, lng: 55}})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29cf77ba280a45572d8b9f")

}

> db.cities.findOne()

{

"_id" : ObjectId("5c29cf77ba280a45572d8b9f"),

"name" : "New York City",

"coordinates" : {

"lat" : 21,

"lng" : 55

}

}

> db.citizens.insertMany([{ name: "Max", cityId: ObjectId("5c29cf77ba280a45572d8b9f")}, {name: "Manuel", cityid: ObjectId("5c29cf77ba280a45572d8b9f")}])

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("5c29d016ba280a45572d8ba0"),

ObjectId("5c29d016ba280a45572d8ba1")

]

}

> db.citizens.find({}).pretty()

{

"_id" : ObjectId("5c29d016ba280a45572d8ba0"),

"name" : "Max",

"cityId" : ObjectId("5c29cf77ba280a45572d8b9f")

}

{

"_id" : ObjectId("5c29d016ba280a45572d8ba1"),

"name" : "Manuel",

"cityid" : ObjectId("5c29cf77ba280a45572d8b9f")

}
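Note that the second citizen above was inserted with the key `cityid` (lowercase `i`) instead of `cityId`. Field names are case-sensitive, so a filter on `cityId` would only find the first citizen. The sketch below shows the effect with plain JavaScript objects; a string stands in for the ObjectId.

```javascript
// A plain string stands in for the city's ObjectId in this sketch.
const cityId = "5c29cf77ba280a45572d8b9f";

const citizens = [
  { name: "Max", cityId: cityId },
  { name: "Manuel", cityid: cityId }, // key spelled differently, as in the transcript
];

// Equivalent in spirit to filtering the citizens by cityId.
const found = citizens.filter((c) => c.cityId === cityId).map((c) => c.name);
console.log(found); // [ 'Max' ]
```

Since nothing enforces a schema here, such a typo is stored without any error; schema validation is one way to catch it.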

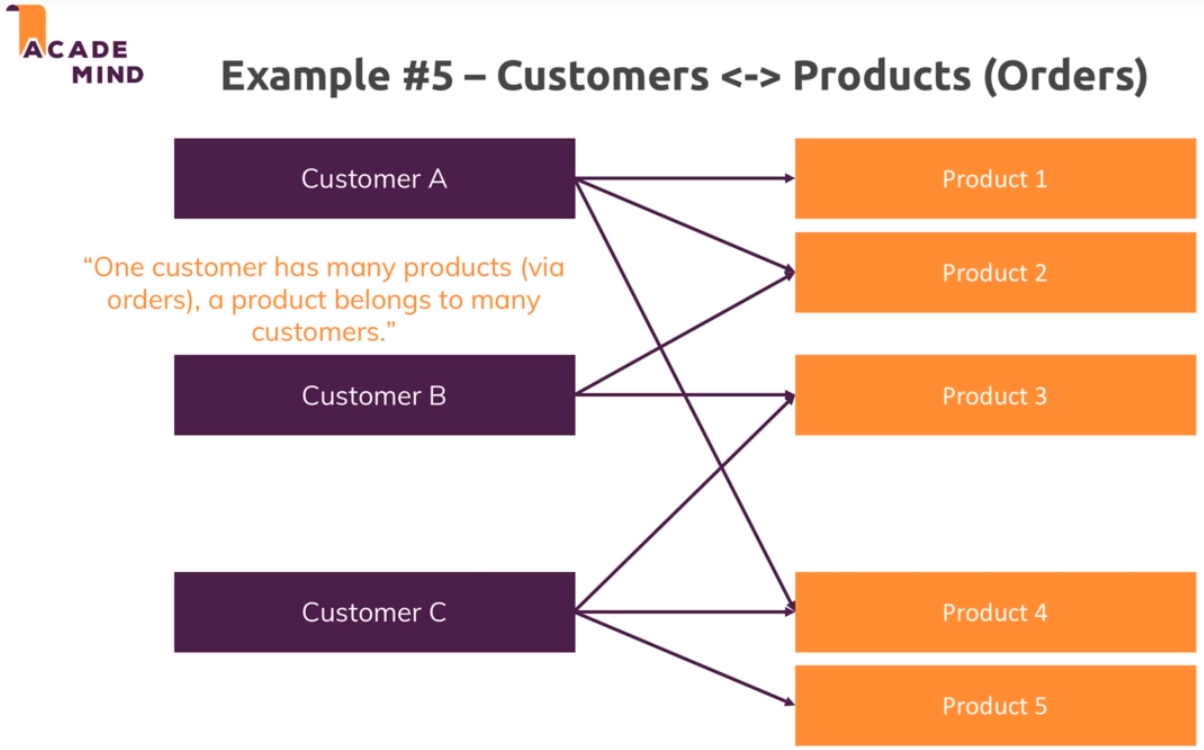

Many to Many - Embedded

> use shop

switched to db shop

> db.products.insertOne({title: "Book", price: 12.99})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29d25bba280a45572d8ba2")

}

> db.customers.insertOne({name: "Max", age: 29})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29d289ba280a45572d8ba3")

}

> db.orders.insertOne({productId: ObjectId("5c29d25bba280a45572d8ba2"), customerId: ObjectId("5c29d289ba280a45572d8ba3")})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29d2bfba280a45572d8ba4")

}

> db.Orders.drop()

false

> db.orders.drop()

true

> db.products.find()

{ "_id" : ObjectId("5c29d25bba280a45572d8ba2"), "title" : "Book", "price" : 12.99 }

> db.customers.find()

{ "_id" : ObjectId("5c29d289ba280a45572d8ba3"), "name" : "Max", "age" : 29 }

> db.customers.updateOne({}, {$set: {orders: [{productId: ObjectId("5c29d25bba280a45572d8ba2"), quantity: 2}]}})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.customers.find().pretty()

{

"_id" : ObjectId("5c29d289ba280a45572d8ba3"),

"name" : "Max",

"age" : 29,

"orders" : [

{

"productId" : ObjectId("5c29d25bba280a45572d8ba2"),

"quantity" : 2

}

]

}

> db.customers.updateOne({}, {$set: {orders: [{title: "Book", price: 12.99, quantity: 2}]}})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.customers.find().pretty()

{

"_id" : ObjectId("5c29d289ba280a45572d8ba3"),

"name" : "Max",

"age" : 29,

"orders" : [

{

"title" : "Book",

"price" : 12.99,

"quantity" : 2

}

]

}
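In the last update above, the product data is copied into the order instead of being referenced. A consequence, sketched here in plain JavaScript with made-up follow-up values, is that the order keeps a snapshot: a later change to the product does not change the embedded copy.

```javascript
const product = { title: "Book", price: 12.99 };
const customer = { name: "Max", age: 29, orders: [] };

// Copy the product fields into the order (a snapshot), as in the second updateOne above.
customer.orders.push({ ...product, quantity: 2 });

// Hypothetical later price change in the products collection.
product.price = 14.99;

console.log(customer.orders[0].price); // 12.99: the order still has the old price
console.log(product.price);            // 14.99
```

Whether that is wanted depends on the use case: for orders a snapshot is often fine, while for data that must always be current a reference avoids updating many copies.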

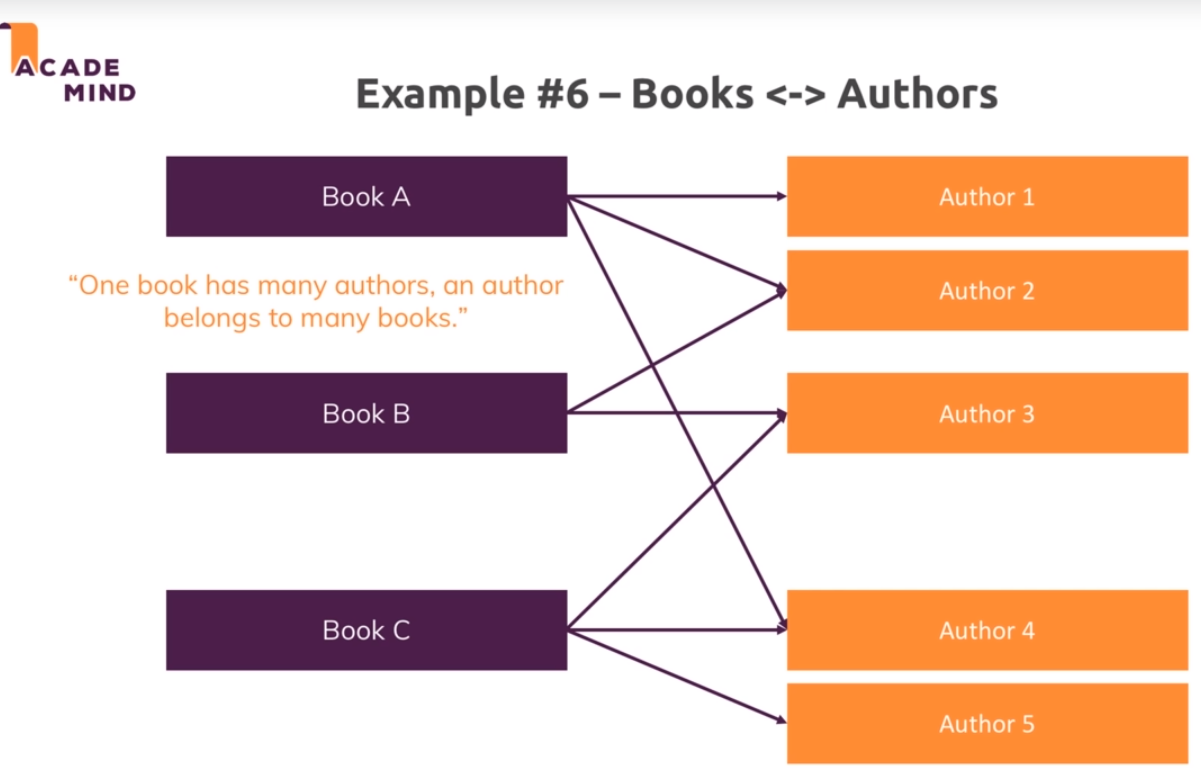

Many to Many - Using References

> use bookRegistry

switched to db bookRegistry

> db.books.insertOne({name: "My favorite Book", authors: [{name: "Max", age: 29}, {name: "Manuel", age: 30}]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29d629ba280a45572d8ba5")

}

> db.books.find().pretty()

{

"_id" : ObjectId("5c29d629ba280a45572d8ba5"),

"name" : "My favorite Book",

"authors" : [

{

"name" : "Max",

"age" : 29

},

{

"name" : "Manuel",

"age" : 30

}

]

}

> db.authors.insertMany([{name: "Max", age: 29, address: {street: "Main"}},{name: "Manuel", age: 30, address: {street: "Second"}}])

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("5c29d6d3ba280a45572d8ba6"),

ObjectId("5c29d6d3ba280a45572d8ba7")

]

}

> db.authors.find().pretty()

{

"_id" : ObjectId("5c29d6d3ba280a45572d8ba6"),

"name" : "Max",

"age" : 29,

"address" : {

"street" : "Main"

}

}

{

"_id" : ObjectId("5c29d6d3ba280a45572d8ba7"),

"name" : "Manuel",

"age" : 30,

"address" : {

"street" : "Second"

}

}

> db.books.updateOne({}, {$set: {authors: [ObjectId("5c29d6d3ba280a45572d8ba6"),ObjectId("5c29d6d3ba280a45572d8ba7")]}})

{ "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 }

> db.books.find().pretty()

{

"_id" : ObjectId("5c29d629ba280a45572d8ba5"),

"name" : "My favorite Book",

"authors" : [

ObjectId("5c29d6d3ba280a45572d8ba6"),

ObjectId("5c29d6d3ba280a45572d8ba7")

]

}

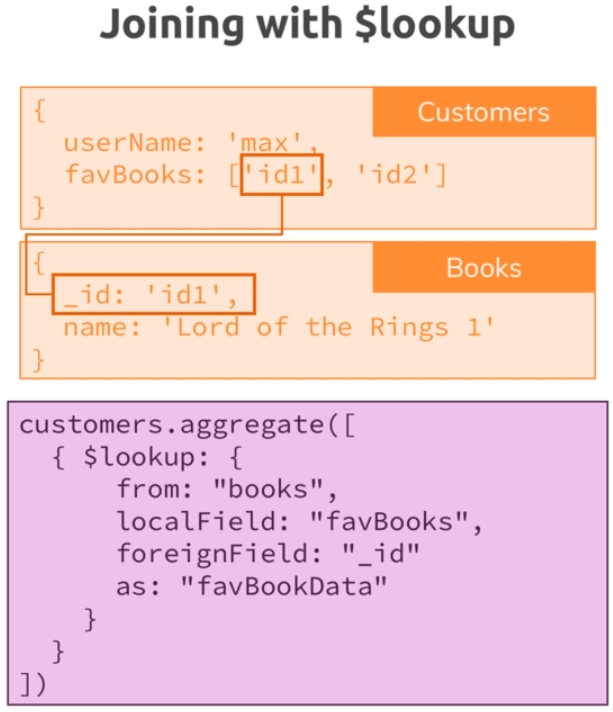

- Using `$lookup` for Merging Reference Relations

> db.books.aggregate([{$lookup: {from: "authors", localField: "authors", foreignField: "_id", as: "creators"}}]).pretty()

{

"_id" : ObjectId("5c29d629ba280a45572d8ba5"),

"name" : "My favorite Book",

"authors" : [

ObjectId("5c29d6d3ba280a45572d8ba6"),

ObjectId("5c29d6d3ba280a45572d8ba7")

],

"creators" : [

{

"_id" : ObjectId("5c29d6d3ba280a45572d8ba6"),

"name" : "Max",

"age" : 29,

"address" : {

"street" : "Main"

}

},

{

"_id" : ObjectId("5c29d6d3ba280a45572d8ba7"),

"name" : "Manuel",

"age" : 30,

"address" : {

"street" : "Second"

}

}

]

}
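What the `$lookup` stage above does can be imitated in plain JavaScript. This is a simplified sketch, not the server's implementation: the `lookup` helper is hypothetical, `from` is an array here instead of a collection name, and strings stand in for ObjectIds.

```javascript
// Hypothetical plain-JavaScript imitation of the $lookup stage used above.
function lookup(localDocs, { from, localField, foreignField, as }) {
  return localDocs.map((doc) => {
    const localValue = doc[localField];
    // The local field may hold one reference or an array of references.
    const wanted = Array.isArray(localValue) ? localValue : [localValue];
    const matches = from.filter((foreign) => wanted.includes(foreign[foreignField]));
    return { ...doc, [as]: matches };
  });
}

const authors = [
  { _id: "5c29d6d3ba280a45572d8ba6", name: "Max", age: 29 },
  { _id: "5c29d6d3ba280a45572d8ba7", name: "Manuel", age: 30 },
];
const books = [
  {
    name: "My favorite Book",
    authors: ["5c29d6d3ba280a45572d8ba6", "5c29d6d3ba280a45572d8ba7"],
  },
];

const merged = lookup(books, {
  from: authors,
  localField: "authors",
  foreignField: "_id",
  as: "creators",
});
console.log(merged[0].creators.map((c) => c.name)); // [ 'Max', 'Manuel' ]
```

As in the real output, the original `authors` array of references is kept and the merged documents are added under the new `creators` key.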

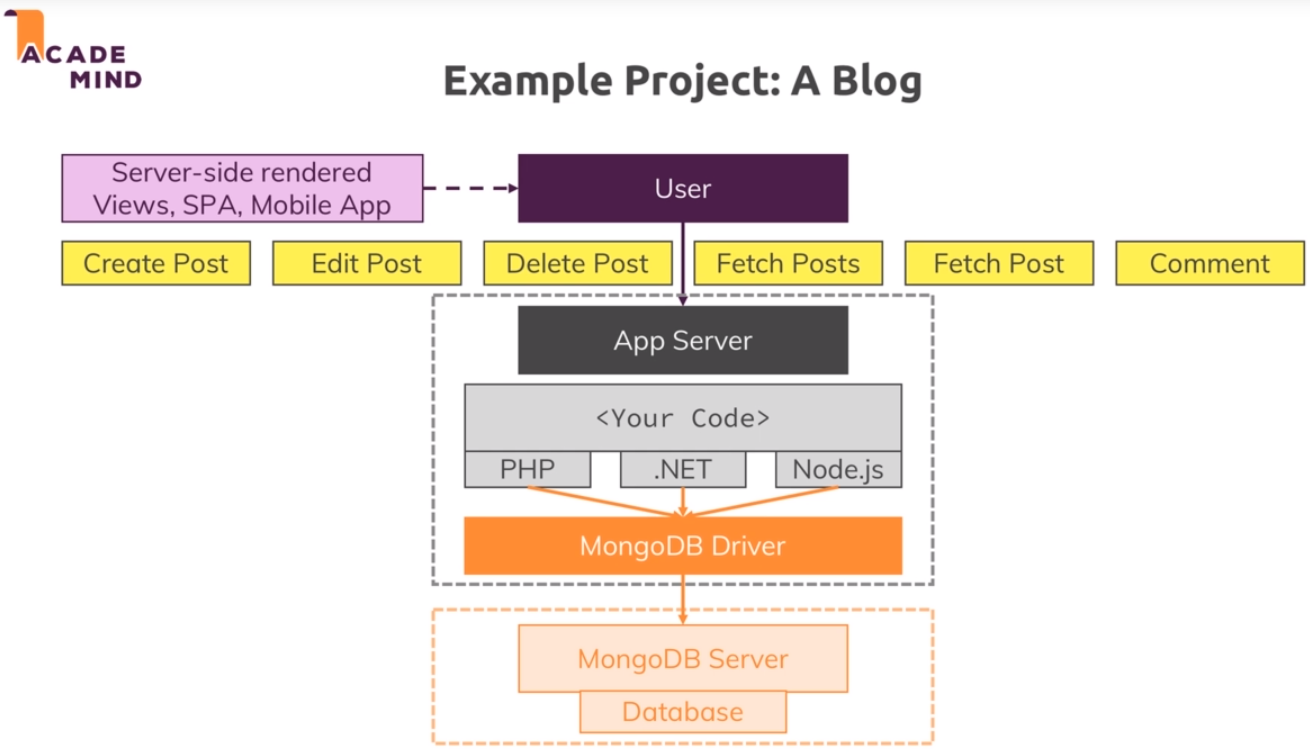

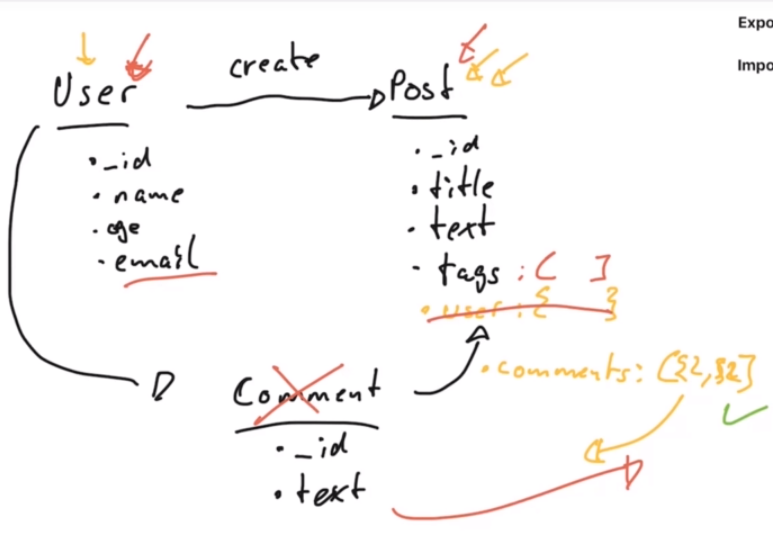

- Example project

> db.users.insertMany([{name: "Max", age: 29, email: "max@test.com"},{name: "Manuel", age: 30, email: "manuel@test.com"}])

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("5c29dffaba280a45572d8ba8"),

ObjectId("5c29dffaba280a45572d8ba9")

]

}

> db.users.find().pretty()

{

"_id" : ObjectId("5c29dffaba280a45572d8ba8"),

"name" : "Max",

"age" : 29,

"email" : "max@test.com"

}

{

"_id" : ObjectId("5c29dffaba280a45572d8ba9"),

"name" : "Manuel",

"age" : 30,

"email" : "manuel@test.com"

}

> db.posts.insertOne({title: "My first Post!", text: "This is the first one", tags: ["new","tech"], creator: ObjectId("5c29dffaba280a45572d8ba9"), comments: [{text: "I like this posts!", author: ObjectId("5c29dffaba280a45572d8ba8")}]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29e107ba280a45572d8baa")

}

> db.posts.findOne()

{

"_id" : ObjectId("5c29e107ba280a45572d8baa"),

"title" : "My first Post!",

"text" : "This is the first one",

"tags" : [

"new",

"tech"

],

"creator" : ObjectId("5c29dffaba280a45572d8ba9"),

"comments" : [

{

"text" : "I like this posts!",

"author" : ObjectId("5c29dffaba280a45572d8ba8")

}

]

}

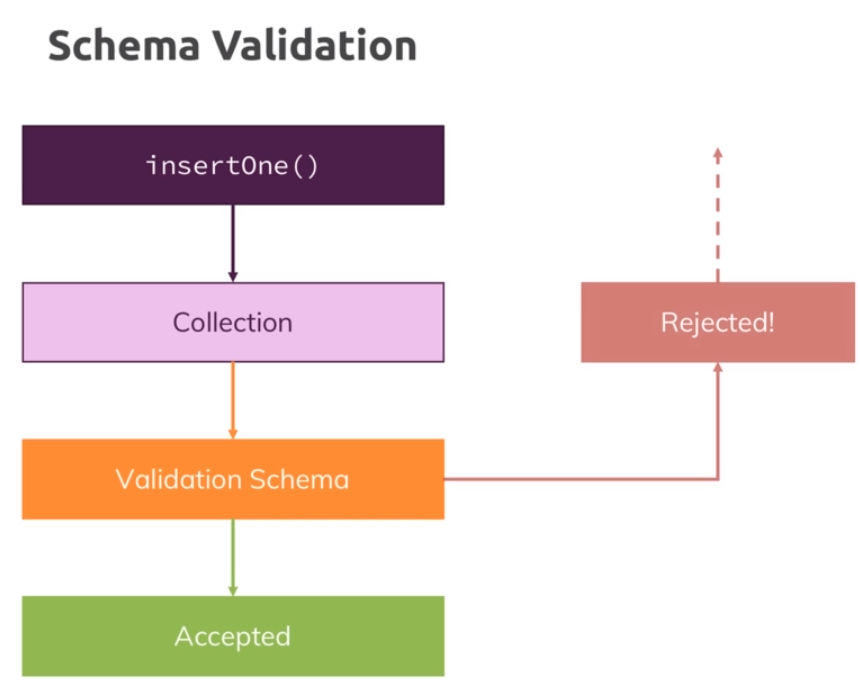

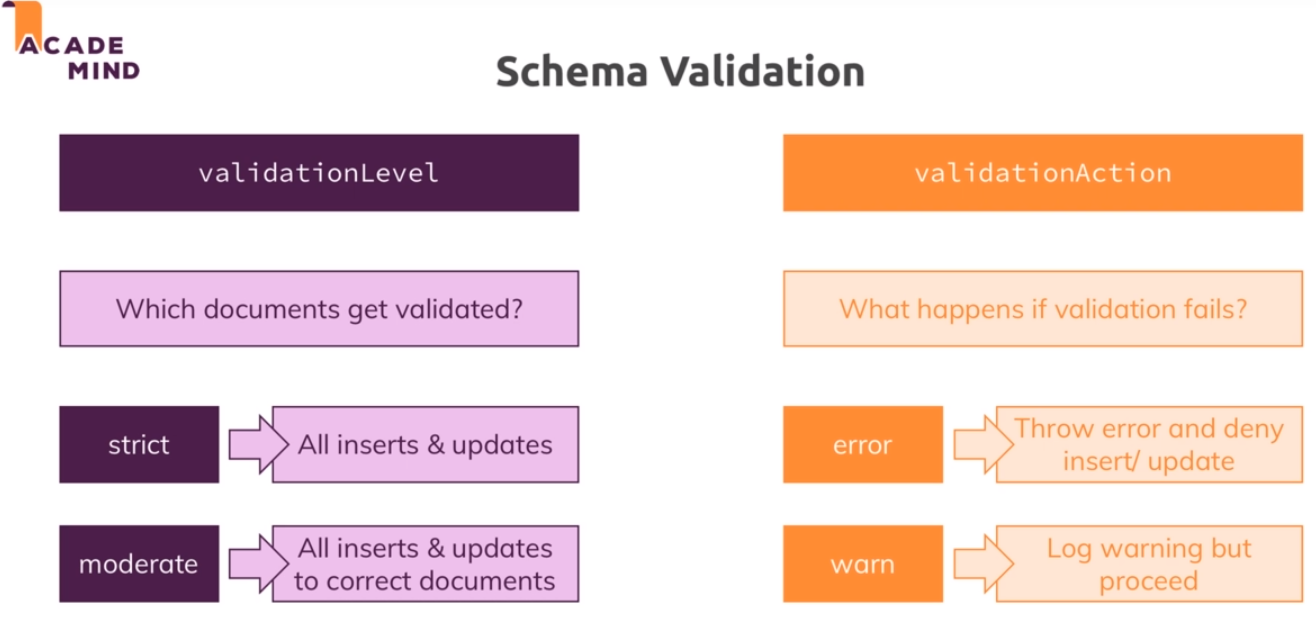

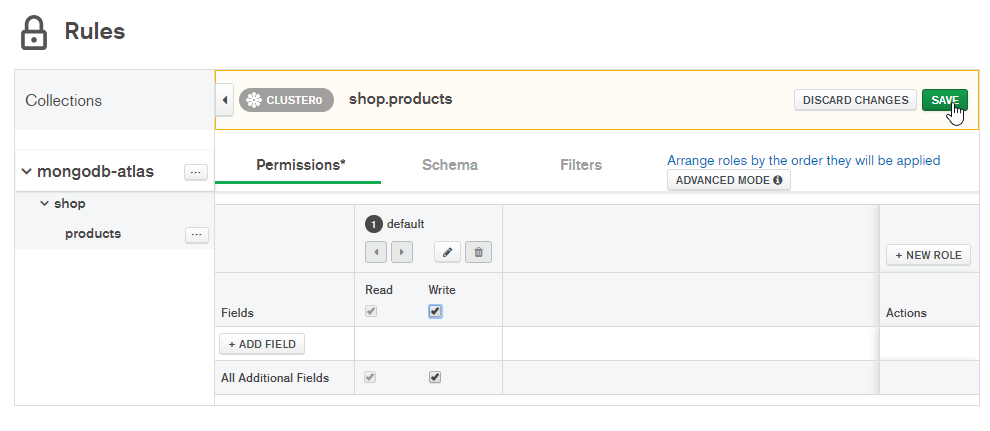

- Schema validation

> db.posts.drop()

true

> db.posts.findOne()

null

> db.createCollection('posts', {

... validator: {

... $jsonSchema: {

... bsonType: 'object',

... required: ['title', 'text', 'creator', 'comments'],

... properties: {

... title: {

... bsonType: 'string',

... description: 'must be a string and is required'

... },

... text: {

... bsonType: 'string',

... description: 'must be a string and is required'

... },

... creator: {

... bsonType: 'objectId',

... description: 'must be an objectid and is required'

... },

... comments: {

... bsonType: 'array',

... description: 'must be an array and is required',

... items: {

... bsonType: 'object',

... required: ['text', 'author'],

... properties: {

... text: {

... bsonType: 'string',

... description: 'must be a string and is required'

... },

... author: {

... bsonType: 'objectId',

... description: 'must be an objectid and is required'

... }

... }

... }

... }

... }

... }

... }

... });

{ "ok" : 1 }

> db.posts.insertOne({title: "My first Post!", text: "This is the first one", tags: ["new","tech"], creator: ObjectId("5c29dffaba280a45572d8ba9"), comments: [{text: "I like this posts!", author: ObjectId("5c29dffaba280a45572d8ba8")}]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29eb24ba280a45572d8bab")

}

> db.posts.findOne()

{

"_id" : ObjectId("5c29eb24ba280a45572d8bab"),

"title" : "My first Post!",

"text" : "This is the first one",

"tags" : [

"new",

"tech"

],

"creator" : ObjectId("5c29dffaba280a45572d8ba9"),

"comments" : [

{

"text" : "I like this posts!",

"author" : ObjectId("5c29dffaba280a45572d8ba8")

}

]

}

> db.posts.insertOne({title: "My first Post!", text: "This is the first one", tags: ["new","tech"], creator: ObjectId("5c29dffaba280a45572d8ba9"), comments: [{text: "I like this posts!", author: 12}]})

2018-12-31T10:11:21.828+0000 E QUERY [js] WriteError: Document failed validation :

WriteError({

"index" : 0,

"code" : 121,

"errmsg" : "Document failed validation",

"op" : {

"_id" : ObjectId("5c29eb49ba280a45572d8bac"),

"title" : "My first Post!",

"text" : "This is the first one",

"tags" : [

"new",

"tech"

],

"creator" : ObjectId("5c29dffaba280a45572d8ba9"),

"comments" : [

{

"text" : "I like this posts!",

"author" : 12

}

]

}

})

WriteError@src/mongo/shell/bulk_api.js:461:48

Bulk/mergeBatchResults@src/mongo/shell/bulk_api.js:841:49

Bulk/executeBatch@src/mongo/shell/bulk_api.js:906:13

Bulk/this.execute@src/mongo/shell/bulk_api.js:1150:21

DBCollection.prototype.insertOne@src/mongo/shell/crud_api.js:252:9

@(shell):1:1
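
To make it clearer why the second insert fails, here is a minimal plain-JavaScript sketch of the rules that the `$jsonSchema` above expresses. It is only an illustration and not how MongoDB implements validation: `FakeObjectId` and `validatePost` are hypothetical names introduced here so the sketch can run without a database.

```javascript
// Hypothetical stand-in for the shell's ObjectId, so the sketch runs without MongoDB.
class FakeObjectId {
  constructor(hex) {
    this.hex = hex;
  }
}

// Returns a list of rule violations; an empty list means the document passes.
function validatePost(doc) {
  const errors = [];
  const isString = (v) => typeof v === 'string';
  const isObjectId = (v) => v instanceof FakeObjectId;

  // Top-level rules: required fields and their expected types.
  if (!isString(doc.title)) errors.push('title must be a string and is required');
  if (!isString(doc.text)) errors.push('text must be a string and is required');
  if (!isObjectId(doc.creator)) errors.push('creator must be an objectid and is required');
  if (!Array.isArray(doc.comments)) {
    errors.push('comments must be an array and is required');
    return errors;
  }

  // Item rules: every comment must be an object with a string text and an objectId author.
  doc.comments.forEach((comment, i) => {
    if (comment === null || typeof comment !== 'object' || Array.isArray(comment)) {
      errors.push(`comments[${i}] must be an object`);
      return;
    }
    if (!isString(comment.text)) {
      errors.push(`comments[${i}].text must be a string and is required`);
    }
    if (!isObjectId(comment.author)) {
      errors.push(`comments[${i}].author must be an objectid and is required`);
    }
  });
  return errors;
}

// Fields not listed in the schema (such as tags) are allowed and simply ignored here.
const validPost = {
  title: 'My first Post!',
  text: 'This is the first one',
  tags: ['new', 'tech'],
  creator: new FakeObjectId('5c29dffaba280a45572d8ba9'),
  comments: [{ text: 'I like this posts!', author: new FakeObjectId('5c29dffaba280a45572d8ba8') }]
};

// Same document, but the comment author is a number, as in the failing insert.
const invalidPost = { ...validPost, comments: [{ text: 'I like this posts!', author: 12 }] };

console.log(validatePost(validPost));   // []
console.log(validatePost(invalidPost)); // [ 'comments[0].author must be an objectid and is required' ]
```

The server itself only reports "Document failed validation" with code 121 in the transcript above, so spelling the rules out like this can help when tracking down which field caused the rejection.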

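- We use `db.runCommand` with `collMod` to update the validation of an existing collection. With `validationAction: 'warn'`, the insert that was rejected before now succeeds and the violation is only logged.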

> db.runCommand({

... collMod: 'posts',

... validator: {

... $jsonSchema: {

... bsonType: 'object',

... required: ['title', 'text', 'creator', 'comments'],

... properties: {

... title: {

... bsonType: 'string',

... description: 'must be a string and is required'

... },

... text: {

... bsonType: 'string',

... description: 'must be a string and is required'

... },

... creator: {

... bsonType: 'objectId',

... description: 'must be an objectid and is required'

... },

... comments: {

... bsonType: 'array',

... description: 'must be an array and is required',

... items: {

... bsonType: 'object',

... required: ['text', 'author'],

... properties: {

... text: {

... bsonType: 'string',

... description: 'must be a string and is required'

... },

... author: {

... bsonType: 'objectId',

... description: 'must be an objectid and is required'

... }

... }

... }

... }

... }

... }

... },

... validationAction: 'warn'

... });

{ "ok" : 1 }

> db.posts.insertOne({title: "My first Post!", text: "This is the first one", tags: ["new","tech"], creator: ObjectId("5c29dffaba280a45572d8ba9"), comments: [{text: "I like this posts!", author: 12}]})

{

"acknowledged" : true,

"insertedId" : ObjectId("5c29ecb5ba280a45572d8bad")

}
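
Since `collMod` takes the whole validator again, one option is to keep the schema in a variable and build the command from it. The following is a rough sketch under that assumption: `buildCollMod` is a hypothetical helper (not part of the shell), and `postSchema` is abbreviated here, so running it against a real database would replace the validator with this shorter schema.

```javascript
// Abbreviated schema for illustration only; reuse the full $jsonSchema from above in practice.
const postSchema = {
  bsonType: 'object',
  required: ['title', 'text', 'creator', 'comments'],
  properties: {
    title: { bsonType: 'string', description: 'must be a string and is required' }
  }
};

// Hypothetical helper: builds the command document passed to db.runCommand.
// collMod is listed first because the command name must be the first key.
function buildCollMod(collection, schema, validationAction) {
  return {
    collMod: collection,
    validator: { $jsonSchema: schema },
    validationAction: validationAction
  };
}

// 'warn' lets invalid writes through and logs them; 'error' rejects them again.
const warnCommand = buildCollMod('posts', postSchema, 'warn');
const errorCommand = buildCollMod('posts', postSchema, 'error');

// `db` only exists inside the mongo shell; elsewhere the command is just printed.
if (typeof db !== 'undefined') {
  printjson(db.runCommand(warnCommand));
} else {
  console.log(JSON.stringify(warnCommand, null, 2));
}
```

Passing `errorCommand` to `db.runCommand` in the same way would switch the collection back to rejecting invalid documents.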

Exploring The Shell & The Server

Check the mongo Shell

Execute `mongod --help` to see all the command line options.

C:\WINDOWS\system32>mongod --help

Options:

General options:

-v [ --verbose ] [=arg(=v)] be more verbose (include multiple times

for more verbosity e.g. -vvvvv)

--quiet quieter output

--port arg specify port number - 27017 by default

--logpath arg log file to send write to instead of

stdout - has to be a file, not

directory

--logappend append to logpath instead of

over-writing

--logRotate arg set the log rotation behavior

(rename|reopen)

--timeStampFormat arg Desired format for timestamps in log

messages. One of ctime, iso8601-utc or

iso8601-local

--setParameter arg Set a configurable parameter

-h [ --help ] show this usage information

--version show version information

-f [ --config ] arg configuration file specifying

additional options

--bind_ip arg comma separated list of ip addresses to

listen on - localhost by default

--bind_ip_all bind to all ip addresses

--ipv6 enable IPv6 support (disabled by

default)

--listenBacklog arg (=2147483647) set socket listen backlog size

--maxConns arg max number of simultaneous connections

- 1000000 by default

--pidfilepath arg full path to pidfile (if not set, no

pidfile is created)

--timeZoneInfo arg full path to time zone info directory,

e.g. /usr/share/zoneinfo

--keyFile arg private key for cluster authentication

--noauth run without security

--transitionToAuth For rolling access control upgrade.

Attempt to authenticate over outgoing

connections and proceed regardless of

success. Accept incoming connections

with or without authentication.

--clusterAuthMode arg Authentication mode used for cluster

authentication. Alternatives are

(keyFile|sendKeyFile|sendX509|x509)

--slowms arg (=100) value of slow for profile and console

log

--slowOpSampleRate arg (=1) fraction of slow ops to include in the

profile and console log

--networkMessageCompressors [=arg(=disabled)] (=snappy)

Comma-separated list of compressors to

use for network messages

--auth run with security

--clusterIpSourceWhitelist arg Network CIDR specification of permitted

origin for `__system` access.

--profile arg 0=off 1=slow, 2=all

--cpu periodically show cpu and iowait

utilization

--sysinfo print some diagnostic system

information

--noIndexBuildRetry don't retry any index builds that were

interrupted by shutdown

--noscripting disable scripting engine

--notablescan do not allow table scans

Windows Service Control Manager options:

--install install Windows service

--remove remove Windows service

--reinstall reinstall Windows service (equivalent

to --remove followed by --install)

--serviceName arg Windows service name

--serviceDisplayName arg Windows service display name

--serviceDescription arg Windows service description

--serviceUser arg account for service execution

--servicePassword arg password used to authenticate

serviceUser

Replication options:

--oplogSize arg size to use (in MB) for replication op

log. default is 5% of disk space (i.e.

large is good)

--master Master/slave replication no longer

supported

--slave Master/slave replication no longer

supported

Replica set options:

--replSet arg arg is <setname>[/<optionalseedhostlist

>]

--replIndexPrefetch arg specify index prefetching behavior (if

secondary) [none|_id_only|all]

--enableMajorityReadConcern [=arg(=1)] (=1)

enables majority readConcern

Sharding options:

--configsvr declare this is a config db of a

cluster; default port 27019; default

dir /data/configdb

--shardsvr declare this is a shard db of a

cluster; default port 27018

SSL options:

--sslOnNormalPorts use ssl on configured ports

--sslMode arg set the SSL operation mode

(disabled|allowSSL|preferSSL|requireSSL